Guest Post by Bob Tisdale

Why is that a big deal? 70% of NOAA’s land+ocean surface temperature dataset is sea surface temperature data.

NOAA recently published the first of the papers describing the latest update of their ERSST dataset. The new version is called, logically, ERSST.v4. The paper is Huang et al. (2014) Extended Reconstructed Sea Surface Temperature version 4 (ERSST.v4), Part I. Upgrades and Intercomparisons. The paper is paywalled, which is quite remarkable for a scientific paper documenting a U.S. government-funded dataset.

The abstract reads (my boldface):

The monthly Extended Reconstructed Sea Surface Temperature (ERSST) dataset, available on global 2°×2° grids, has been revised herein to version 4 (v4) from v3b. Major revisions include: updated and substantially more complete input data from the International Comprehensive Ocean-Atmosphere Data Set (ICOADS) Release 2.5; revised Empirical Orthogonal Teleconnections (EOTs) and EOT acceptance criterion; updated sea surface temperature (SST) quality control procedures; revised SST anomaly (SSTA) evaluation methods; updated bias adjustments of ship SSTs using Hadley Nighttime Marine Air Temperature version 2 (HadNMAT2); and buoy SST bias adjustment not previously made in v3b.

Tests show that the impacts of the revisions to ship SST bias adjustment in ERSST.v4 are dominant among all revisions and updates. The effect is to make SST 0.1°C-0.2°C cooler north of 30°S but 0.1°C-0.2°C warmer south of 30°S in ERSST.v4 than in ERSST.v3b before 1940. In comparison with the UK Met Office SST product (HadSST3), the ship SST bias adjustment in ERSST.v4 is 0.1°C-0.2°C cooler in the tropics but 0.1°C-0.2°C warmer in the mid-latitude oceans both before 1940 and from 1945 to 1970. Comparisons highlight differences in long-term SST trends and SSTA variations at decadal timescales among ERSST.v4, ERSST.v3b, HadSST3, and Centennial Observation-Based Estimates of SST version 2 (COBE-SST2), which is largely associated with the difference of bias adjustments in these SST products. The tests also show that, when compared with v3b, SSTAs in ERSST.v4 can substantially better represent the El Niño/La Niña behavior when observations are sparse before 1940. Comparisons indicate that SSTs in ERSST.v4 are as close to satellite-based observations as other similar SST analyses.

Curiously, NOAA is using satellite-based sea surface temperature data as a reference for their ship inlet-, bucket- and buoy-based dataset.

As of now, there is very little preliminary data available in an easy-to-use format. More on that later.

WHAT CHANGES MIGHT WE EXPECT?

Regarding comparisons to satellite-based data, the only dataset similar to ERSST.v4 that’s available from the KNMI Climate Explorer is ERSST.v3b—inasmuch as they do not use satellite data and they infill grids with missing data. (HADISST infills missing data but uses satellite-based data starting in 1982, and, while the HADSST3 dataset excludes satellite data, it is not infilled.)

As a reference, Figure 1 compares the current ERSST.v3b global data to the Reynolds OI.v2 satellite-enhanced data. They have remarkably similar warming rates. It will be interesting to see how closely the new ERSST.v4 matches.

Figure 1

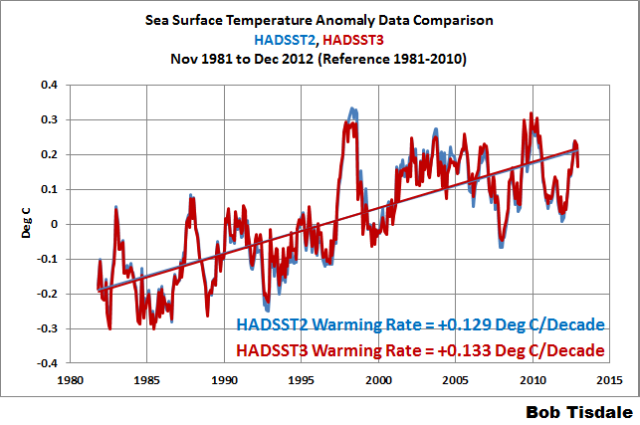

Regarding the buoy-bias adjustments, we have as a reference the upgrade of the Hadley Centre’s datasets from HADSST2 to HADSST3. Keep in mind that HADSST data are not infilled. That is, if a 5-deg latitude by 5-deg longitude grid doesn’t have data in a given month, the Hadley Centre inserts no data in that grid…it’s a blank. And that’s a problem in the mid-to-high latitudes of the Southern Hemisphere, where observations even today are relatively sparse. With that in mind, for HADSST data, the new buoy-bias adjustments added a very small increase to the warming rate from the early 1980s to the end of 2012 (when KNMI stopped updating the HADSST2 data at the Climate Explorer). See Figure 2. Will the same hold true with the new ERSST.v4 data?

Figure 2

AS A REFERENCE

We mentioned Hadley Centre’s satellite-enhanced sea surface temperature dataset HADISST above. For those interested, the global HADISST data are compared to the Hadley Centre’s primary dataset, HADSST3, in Figure 3. That’s quite a remarkable difference in warming rates, considering that HADISST is used quite often in long-term and short-term climate studies. No wonder the Hadley Centre is upgrading HADISST. It makes the HADSST3 data look like it has a bias toward warming. (Note: HADSST3 is running a number of months late at the KNMI Climate Explorer, which is why it ends in July 2014 in Figure 3.)

Figure 3

For those who want an early peek at a small part of the ERSST.v4 data, data for sea surface temperature-based ENSO and PDO indices are available here. That’s the first result provided by Google in a search for ERSST.v4.

As soon as the new ERSST.v4 data are added to the KNMI Climate Explorer, I’ll provide a few comparisons.

PS: Did Richard Reynolds retire? I was surprised not to see his name as one of the co-authors of Huang et al. (2014). He’s been involved with NOAA’s sea surface temperature datasets for decades. There are a few climate scientists I would enjoy chatting with, as soon as they retire. Richard Reynolds of the NOAA is one.

Far be it from me to be cynical, but it will probably show that the SST increase is “worse than we thought.”

Not at all. The average indicates 1.1 degree Celsius per century using about 35 years of observations.

All this means to me is that we are still recovering from the LIA (Little Ice Age). That is what it meant to Henrik Svensmark and Nigel Calder too.

See: Svensmark and Calder, The Chilling Stars, Icon Books UK, 2007. (Canada: Penguin Books)

Peter Suter and colleagues reported finds in the Schnidejoch Pass. Glacier retreat in the hot 2003 summer exposed remains from several distinct periods: from ~ 2800-2500 BC; from 2000-1750 BC; ~150 BC-250 AD; and the MWP up to the 14th/15th Century.

See: http://climateaudit.org/2005/11/18/archaeological-finds-in-retreating-swiss-glacier/

I’m sure they’re just trying to get the numbers to be as accurate as possible. I think I’ll put another log on the fire and make a cup of hot cocoa.

Are their reasons for making the change valid?

Are the methods they have chosen to revise the dataset correct?

I don’t know enough to answer either question, but I suspect that we may have to see how they implement the revision to tell if their methods improve the dataset or not.

There’s nothing inherently wrong with trying to improve their dataset. Indeed it is quite justifiably part of their role.

The two questions you ask are exactly the right ones. In addition I would also ask “Have they documented the changes and justifications clearly and in a readily available form?”

But the eventual change in slope is not the issue.

It is how they did the update that must be scrutinised (and I am sure will be).

Just as important is the question: are they keeping the old data, or will it ‘get eaten by the dog’?

Going to November increases the slope to 0.0131315 per year or 0.131/decade from 0.129/decade.

http://www.woodfortrees.org/plot/hadsst3gl/from:1981.8/plot/hadsst3gl/from:1981.8/trend
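For readers who want to check a per-decade figure like this by hand, here is a minimal sketch of an ordinary least-squares trend. The series below is synthetic (a pure straight line built from the slope quoted above), not HadSST3 data, and the function names are made up for this illustration.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def per_decade(slope_per_year):
    """Convert a trend in degrees per year to degrees per decade."""
    return slope_per_year * 10.0


# Synthetic monthly series (NOT real data): a straight line with the quoted slope.
years = [1981.8 + m / 12.0 for m in range(398)]
anoms = [0.0131315 * (t - 1981.8) for t in years]

slope = ols_slope(years, anoms)
print(f"{slope:.7f} per year = {per_decade(slope):.3f} per decade")
```

With real monthly anomalies in place of the synthetic line, the same two functions would give the per-year and per-decade trend.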

Cooler past, warmer present, increased gradient, why am I not surprised.

“Cooler past, warmer present” – why is it getting warmer now?

Meanwhile, the official NOAA dataset was adjusted in the past month to make 2010 much cooler (it no longer is record-high but ties with 2005). The result is that with November’s just released global value (+0.65), 2014 is already a “record hot year” a whole month in advance – it would take a negative number in December to not have a record.

Somehow none of the datasets instill a great sense of fear of runaway warming.

Has anyone calculated how much outgassing of CO2 this temperature increase would produce? Does it relate to the increase in atmospheric CO2?

How can the ocean be outgassing and at the same time become more acidic???

Less alkaline, obviously, as it is not acidic and probably never has been.

In the same way you cannot say a pitcher of water taken from the fridge is more boiling as it warms at room temperature, you cannot say oceans are becoming more acidic. That is nonsense and remember nonsense does as nonsense says.

The point was not the argument about acidic/alkaline, but how the oceans can be both absorbing CO2 and outgassing it at the same time. I’m confused and sceptical.

No – not even close.

@ Lutz: December 15, 2014 at 7:24 pm

“Does it relate to the increase in atmospheric CO2?”

————–

You tell me.

Compare the high/low spikes on this monthly CO2 graph to Bob T’s Figure 3 graph above.

http://www.esrl.noaa.gov/gmd/webdata/ccgg/trends/co2_data_mlo_anngr.png

About 1997 there was a spike in temperature, followed by a spike in CO2 in 1999. With CO2 significantly higher in 2010, 2010 had the same high in temperatures as 1997.

Is that what you meant, that there is a general correlating trend but there is no consistent evidence of causation?

@ Alx: December 16, 2014 at 7:34 am

“Is that what you meant, that there is a general correlating trend but there is no consistent evidence of causation?”

————–

The causation is the fact that the ocean waters have been slowly warming up since the end of the Little Ice Age.

And the “steady and consistent” 56 years of factual evidence of that fact is the Keeling Curve Graph. Which also depicts the seasonal (equinox) temperature change of the ocean waters in the Southern Hemisphere, to wit:

http://i1019.photobucket.com/albums/af315/SamC_40/keelingcurve.gif

My point was that if the water temperature increases it cannot hold dissolved gases in the same concentration, so they must be released into the atmosphere. Also only sunlight can warm the ocean – most certainly a little more CO2 in the atmosphere has no effect on ocean temperature. Have you tried heating a bath full of cold water using a hair dryer?

I knew what your point was ….. but I wanted you to answer your own question instead of me responding with a “Yes” to it.

So they will be cooling the distant past for the majority of the world’s oceans? I’m not impressed. GISS has been doing the same thing for decades! Hopefully recent trends will not be affected, only the overall trend.

Thanks for the lesson in SST datasets. So far I’ve used HadSST3 and see a high correspondence to other datasets in visual plots at least, so I agree with the statement in the abstract “Comparisons indicate that SSTs in ERSST.v4 are as close to satellite-based observations as other similar SST analyses”.

The new HadSST3 is here, updated through November: http://www.metoffice.gov.uk/hadobs/hadsst3/data/HadSST.3.1.1.0/diagnostics/HadSST.3.1.1.0_monthly_globe_ts.txt – get your copy today before they run out.

Doesn’t increasing the temperature in the mid-latitudes and reducing them North and South of 30 degrees actually increase the average global temperature, as there is more surface area between the 30 degree marks?
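The area part of this question can be checked directly. On a sphere, the fraction of the surface between two latitudes is (sin φ_north − sin φ_south)/2. The sketch below assumes a perfect sphere and ignores the land/ocean split, so it is only a rough guide for SST; `area_fraction` is a name made up for this illustration.

```python
import math


def area_fraction(lat_south_deg, lat_north_deg):
    """Fraction of a sphere's surface lying between two latitudes (degrees)."""
    s = math.sin(math.radians(lat_south_deg))
    n = math.sin(math.radians(lat_north_deg))
    return (n - s) / 2.0


print(f"30S to 30N:   {area_fraction(-30, 30):.2f}")
print(f"south of 30S: {area_fraction(-90, -30):.2f}")
print(f"north of 30S: {area_fraction(-30, 90):.2f}")
```

On those assumptions, the band between 30°S and 30°N holds half the surface rather than more than half, and the region north of 30°S, which is the split the abstract uses when comparing v4 with v3b, holds three quarters.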

Is it just me, or does anyone else have a hard time with the x and y axes on these graphs? The trend lines always look ominous when in fact they’re nothing of the sort. The alarmists always condense the years vs temp to make their case look better. As Willis has done, these trends extrapolate/convert into hundredths of degrees; the graphs should reflect the minimal statistical change, if any.

Gonzo,

Correctomundo. By using a normal x-axis, there is nothing scary.

Using scales of tenth- and hundredth-degrees is like looking at an ant through a microscope. It’s very scary! But it’s only an ant.

Using scales like that is just another way the alarmist crowd lies to everyone.

Disagree. You have to be able to show the data clearly. There is no ‘normal’ x-axis. Divide the scale by ten and you can’t eyeball any trend. Rebase it to zero Kelvin and you’ve got a straight line. Is that what you want? Don’t look for elephants in the window box. I’m sure Bob would agree.

Mothcatcher, you are right, you do not need a magnifying lens to view an elephant, and a magnifying lens is very helpful in studying ants.

The problem is these graphs are communicated and presented to the public as giant ants that are currently destroying civilization (aka extreme weather) and will eventually overrun and destroy us all.

Context is everything, alarmist propaganda has little to no context.

I was recently asked to range the y-axis of a graph of global surface temperatures to the scale of a home thermostat.

https://bobtisdale.wordpress.com/2014/12/11/meteorological-annual-mean-dec-nov-global-sea-surface-temperatures-set-a-record-high-in-2014-by-a-whopping/#comment-22861

He or she requested 10 to 30 deg C. What other ranges would you like?

So 0.9 K/century to 1.3 K/century sounds like Earth is headed for a climate-biosphere optimum in the coming centuries. No more of this LIA catastrophe of 1450-1850 AD stuff. Even that makes a lot of dubious assumptions, such as steady TSI, etc.

Two lots of bias adjustments and one lot of teleconnections! Science isn’t what it used to be.

I’ve read several articles recently from the AP that claim “new monthly records for 2014” using NOAA’s land and sea surface temperature record with the added claim “since 1880, when accurate temperature records began”. I’m only a Climate-Change-Denier Level 2, but even I know that the satellite data only goes back some 35 years and for that data some smallish margin of error could be claimed. For example, “October 2014 was the hottest month since 1979.” could be claimed based on a continuous method and means of sampling, and any “adjustment” is applied to all data. That kind of claim just raises my eyebrows, BUT “1880”? That makes me laugh; their margin of error has to be larger than the total increase they’re claiming “since 1880”. They’re adjusting their own “estimates”, splicing sat. data onto what, “bucket and thermometer” data collection? And claiming a level of precision for this “data set” that was simply unavailable before the satellite era. How does NOAA justify this? Or do they?

Yet more “Mann made” global warming. Just a little adjustment here and there and they can get the CAGW going a little longer. I do hope that Inhofe is going to really investigate the propriety of all these one way adjustments.

Defunding the fr@ud is the only way to end it and it looks like it’s about to start soon:

http://www.inhofe.senate.gov/newsroom/press-releases/inhofe-statement-on-presidents-3-billion-climate-change-pledge

…. and this:

http://www.foxnews.com/politics/2014/12/15/fight-looms-over-3-billion-obama-administration-payment-to-un-linked-climate/

mairon62,

Satellite data is the most accurate there is. Look here, and tell me that 2014 is “the hottest evah!”

NOAA is fibbing. They ‘adjust’ the data to show what they want it to say.

Not to be picky, but you have to change that end time to 2015.

(A wasted posting effort by a banned sockpuppet. Comment DELETED. -mod)

Well, the land data is known to be contaminated with UHI effects and the compensating adjustments are very strange.

Also the land data is not evenly distributed – there are far fewer stations in the middle of oceans and deserts than next to airports, for instance.

And satellites don’t need their homogenisation methods to be altered every now and again – without necessarily an explanation as to why.

So why would anyone doubt that the satellite data is the most accurate?

Not that the satellite data is very accurate just that the satellite data is the most accurate.

[More wasted effort by a banned sockpuppet. Comment DELETED. -mod]

#1 – It does not measure temperature, but does rely on physics to calculate it.

#2 – So where is the thermometer at Longitude 88, Latitude 23?

#3 – Have you thought about this or just repeat talking points?

icouldnthelpit

Because it is regularly checked against empirical data taken/measured by weather balloons, so as to ensure that its measurements are properly calibrated.

So unless the thermometers being used in the weather balloons are inaccurate and/or incapable of properly measuring temperature at altitude, the satellite data is the most accurate temperature data available to us.

Whether temperature at altitude is as relevant as temperature taken a metre or so above the ground is a different argument, but accuracy of data certainly favours the satellite data set over the bastardised land thermometer data set.

[A long, wasted posting effort by a banned sockpuppet. Comment DELETED. -mod]

“Because it is regularly checked against empirical data taken/measured by weather balloons, so as to ensure that its measurements are properly calibrated.

So unless the thermometers being used in the weather balloons are inaccurate and/or incapable of properly measuring temperature at altitude, the satellite data is the most accurate temperature data available to us.

Whether temperature at altitude is as relevant as temperature taken a metre or so above the ground is a different argument, but accuracy of data certainly favours the satellite data set over the bastardised land thermometer data set.”

WRONG.

1. We are talking about SST here not TLT.

2. The satellite record has undergone many adjustments and continues to be adjusted.

3. The radiosonde database is ALSO homogenized and adjusted and still contains errors.

4. There is no continuous calibration of the sensors against balloons. “Corrections” are applied to final products based on modelling.

http://www.remss.com/measurements/upper-air-temperature/validation

Thermometers on the ground are not impacted by cloud cover. Satellite measurements are. Tradeoff appears to be coverage versus accuracy.

1. Satellites don’t measure temperature.

2. Satellites estimate temperature based on:

a) the voltage in a sensor

b) a physics model.

c) an assumed idealized weighting function.

d) multiple adjustments to multiple sensors that have changed over time

The physics behind satellite data is called Radiative Transfer. Essentially you get a brightness at the sensor. This signal had to propagate THROUGH the atmosphere. So, to figure out the temperature at the SURFACE from the brightness at the SENSOR, you have to solve the transmission equation. You have to “back out” the temperature at the surface, using assumptions and a physics MODEL. This physics estimates the effects of various gases on the transmission of radiation from the surface. To put it simply, the satellite is not measuring the kinetic energy of molecules at the surface. It measures the radiation and then figures out what the temperature must be.

Therefore, IF you believe the output data of a satellite, IF you think that “temperature” is accurate, then you must trust the physics that turns a brightness at the sensor into temperature at the surface.

So, here is the question. Do you or do you not believe the physics that turns a brightness into a temperature?

Lastly, the folks who work with satellite data know that it’s less accurate than ground observations.

That’s why every validation of SST depends upon in situ observations.

Saying the satellites are the most accurate presupposes you have an ideal to compare them to.

Here is an example of SST validation for a few platforms.

Note the large errors.

Why are there errors?

1. The satellite MODELS that produce the adjusted data make assumptions about water vapor and aerosols in the atmosphere.

2. All models are wrong.

Note the comparisons at the end with respect to Reynolds and buoys and in situ calibration missions:

http://mcst.gsfc.nasa.gov/sites/mcst.gsfc/files/meetings_files/mcst_2006_11_01_minnett.pdf

1. Thermistors don’t measure temperature.

2. Thermistors estimate temperature based on:

a) the voltage (actually impedance) in a sensor

b) a physics model.

c) an assumed idealized weighting function.

d) multiple adjustments to multiple sensors that have changed over time

FYI,

Theo Barker, MSEE

You like Satellite data?

How about RSS? Monckton uses that.

Good enough for Monckton? He claims RSS is the most accurate.

How does RSS propose to make adjustments in its data?

Simple; Use CCM3.

http://www.star.nesdis.noaa.gov/star/documents/meetings/CDR2010/talks/DayOne/Mears_C.pdf

What is CCM3?

Dig this.. Monckton and you support the data that is adjusted using……

http://www.cgd.ucar.edu/cms/ccm3/

That’s right, DBstealy, CCM3 is a GCM. A GCM is used to help create adjustments for satellite data.

owngoal

Stephen

The manner in which satellites measure temperature is not fundamentally different to the manner in which temperature measurement is made by any form of automated remote sensor such as a thermocouple, as opposed to a glass bulb (LIG) thermometer with expanding liquid. Even the latter is, in the extreme, just a model which depends upon proper calibration and which deteriorates over time. The data taken by ships from the late 1950s onwards was not by means of LIG thermometer, but rather by way of remote sensor. The same considerations apply with ARGO, which also does not use LIG thermometers.

If you are saying that all the data sets have significant issues, and all the data sets are crap, I wholeheartedly agree.

There is not one single data set in Climate Science that withstands the ordinary rigours of scientific scrutiny. All are garbage, and this no doubt goes a long way to explaining why we are unable to detect any signal of CO2 induced warming. All one can say is that within the limitations of our best measuring equipment no signal of CO2 warming can be seen over and above natural variation induced changes.

This may suggest that the signal of CO2 induced warming is nil, or very small; however, the margins of error in our data sets are large so we cannot say that. Given the poor quality of our data sets, it is conceivable that the signal of CO2 induced warming is quite large. But we just do not know, one way or the other, because of the crap quality data available.

Whoops. Should have been addressed to Steven. Sorry.

“The manner in which satellites measure temperature is not fundamentally different to the manner in which temperature measurement is made by any form of automated remote sensor such as a thermocouple, as opposed to a glass bulb (LIG) thermometer with expanding liquid.”

Wrong. Go track down all the physical theory assumed or used to turn an LIG reading (expanding length) into a temperature. Next do the same for a thermocouple. List all the physical assumptions and theory accepted. Now, do the same for a satellite data set. You should not be surprised that the chain of processing for a satellite dataset involves more assumptions, more operations, and more theory than the other two.

While it’s true that all measurements REQUIRE the acceptance of theory, not all theory is equally certain.

But if you accept satellite data, then you accept radiative theory. And that theory says CO2 warms the planet.

“If you are saying that all the data sets have significant issues, and all the data sets are crap, I wholeheartedly agree.”

Wrong. All data in all science has uncertainty. Uncertainty doesn’t mean crap. It means what it means.

Comparing various sources, you should be able to see why claims that satellite data is clearly more accurate are tenuous at best. Remain skeptical.

Mosher appears to be on a crusade against satellite data. Must have touched a nerve.

Steve: I think we can agree that for a specific time-space coordinate point a wet bulb or thermistor in contact with the atmospheric gas molecules will more closely approximate the indirect measurement of the kinetic energy in said molecules than a distant electro-magnetic radiation integration of said point. That contact measurement is based on the model of energy transfer from the surrounding atmospheric gas molecules to the glass in turn to the liquid contained in the bulb, or a model of thermal modulation of electro-potential resistivity in the thermistor. Distant satellites cannot resolve to that precision.

I believe we can also agree that when integrating approximations over the entire quasi-ellipsoid surface, actually measuring by the same means several orders of magnitude greater density in a consistent distribution is a closer approximation of the entire surface. A poor approximation consistently applied in a much more dense distribution is generally better than a SWAG when integrating over the entire surface. Each pixel in the sensor represents an approximation for a specific volume of gas, as you say, distorted by intervening molecules. How many pixels are used to cover the entire quasi-ellipsoid? For RSS? For UAH?

After all, the wet bulb approximation only represents that specific time-space coordinate point. Applying that value 1km away is just a SWAG, to say nothing of 120+ km away. How many wet bulbs and thermistors are employed in the NOAA, BEST, GISS, HADCRUT3|4, NCDC data sets?

It all comes down to relative uncertainty of the integration technique used and whether “global average temperature” really has any meaning.

Your “owngoal” statement is a bit immature, IMO.

Theo Barker, MSEE

Perhaps Dr. Brown (rgbatduke) will weigh in on this matter, unless he’s grading final exams, etc.

Oh geeeeze whizs, and the “experts” infer there is little to no uncertainty in the temperature records of say, pre-1950 and dating back to the 1880’s and further.

And to think that all claims of CAGW are “rooted” in those pre-1950 temperature records.

NOAA Is [Singular] Updating Their [Plural] Sea Surface Temperature Dataset…

Grammar alert!

Sorry.

Here is MSU vs HADSST3 http://www.woodfortrees.org/plot/rss/from:1978/to:2015/plot/hadsst3sh/from:1978/to:2015/plot/hadsst3nh/from:1978/to:2015

Some differences around El Chichón and Pinatubo for SH HADSST3?

NOAA is a US government agency. It has been found out manipulating the surface temperature data to enhance a warming trend.

With so much debate about the so called missing heat in the atmosphere, I suspect NOAA will create the heat in the oceans by manipulating the sea temperature data to enhance a warming trend.

These government agencies have been engaged in scientific wrongdoing in the way they have been adjusting/manipulating the temperature data. Why would they stop now?

Sea ice is increasing, ocean is warming? WUWT?

Bob

You have been very busy this past week with 4 articles all on a related theme.

The fact is that there is no quality data available covering ocean temperatures which withstands the usual rigours of scientific scrutiny. This is unfortunate since it is clear that it is the ocean that is the heat pump of the planet, and it is ocean temperatures that govern our climate.

ARGO has insufficient spatial coverage, and it is of too short a duration to give meaningful insight. It may have inbuilt biases: the buoys are free floating and float on currents, and these currents exist mainly due to differences in temperature and/or density, so it is quite conceivable that a free-floating buoy riding on a current has an inherent bias, and no steps have yet been taken to consider and/or assess possible bias. And when ARGO was first rolled out, many buoys showed cooling, and NASA adjusted or disregarded data from these buoys since it considered the data to be wrong, without carrying out any independent verification to assess whether the data showing cooling was right.

Bucket measurements are farcical, and when one considers how they were taken they have errors of several degrees. The data collected should be taken with a pinch of salt.

Measurements taken by ships from water drawn into the engine room are not measuring SST; rather, they are measuring ocean temperature at a depth of somewhere between 3 m and 16 m, perhaps with 7 m to 10 m being more typical. The fact is that there is no consistency of measurement, since this will depend upon the design and configuration of the ship involved, how it is employed (laden, partly laden, in ballast) and how it is trimmed (usually about 1 m or so to the stern to assist steerage). As the voyage progresses, the trim of the vessel alters, not least because stores and consumables are consumed. Not only is the water intake at depth, the water drawn is cavitated water, since it has already been cut by the bow and the bow plane waves, thereby mixing the water. If the vessel is proceeding in swell and/or there are significant waves, the vessel will be heaving quite substantially, thereby further mixing the water being drawn. No one has a reasonable handle on the effects of all of this.

Further, when commercial ships are used, there are commercial pressures that may influence the recording of sea temperature. These commercial pressures can be many and varied. Ships usually record data every 4 hours, but what if crew are lazy or busy doing something else? How much infilling goes on in the figures that are recorded, where a crewman merely guesses what he thought the temperature was?

Sometimes there may be reasons to mis-record the data to conceal problems, possibly with engine performance, or hull accretion/bottom fouling, or because a heated cargo is being carried and the ship gets paid for heating the cargo, and it is thereby advantageous to suggest that ambient conditions are cooler than they truly are so that the ship can claim more for heating the cargo than the ship is actually doing. Sometimes it is clear that a ship is under-recording water temperature by several degrees since it is in its interest to show that cargo has cooled rapidly, or that heat needs to be applied earlier than it truly has to be applied.

I have reviewed hundreds of thousands (probably millions) of entries in ships’ logs setting out water temperature. I have seen examples where the data recorded in the engine logs, the deck logs, telexes sent by the ship to charterers/owners/weather routing agencies, and reports by weather routing agencies all differ. Some of this may be real, e.g., that although the ship was reporting, say, a noon time figure, someone on the bridge was sending a telex at 12:50 hrs and phoned the engine room, and the crew reported the current 12:50 hrs figure, not the earlier noon figure. Some of this may be due to transcription errors or typos etc., but ships’ data may not be reliable, and it is not uncommon to see inconsistencies.

I am very uneasy about the adjustments being made to ship data since, as a matter of principle, ships are generally not recording SST; instead they are recording the temperature of water drawn at depth, such that the water that they are measuring is cooler than SST. If anything, ships since the 1950s/early 1960s, which predominantly used engine room figures, under-record the SST, such that SST should be warmed, not cooled.

But as usual in Climate Science no empirical testing is being carried out to see what adjustments should be made, merely there is a hunch that an adjustment should be made and a complete guess as to what that adjustment should be.

And once again in Climate Science one is seeing data splicing. One cannot compare bucket data with ship log data with ARGO data. Each is an individual measurement with its own inherent issues and margins of errors. What the science should be doing is dealing with each set of data individually, and assessing and consigning to each a realistic error margin bearing in mind how the measurements were taken, the equipment used, and the water sampling.

“And once again in Climate Science one is seeing data splicing. One cannot compare bucket data with ship log data with ARGO data.”

Of course you can.

As for data splicing? Look at the sources spliced for satellites. What a mess.

Stephen

One can do anything. I could splice the data onto Formula 1 lap times through the ages at various circuits. It does not mean that anything worthwhile is achieved by such a spliced data set.

All the data sets are measuring different things, in a different manner, using different equipment. Each data set has its own particular issues, and each has its own error bands.

In this scenario, splicing is not appropriate if you desire to produce something of scientific note. If you consider that it is acceptable, this speaks volumes as to your view on quality control.

One can meaningfully look at bucket data, and see what that tells us about ocean temps during the period covered by bucket collected data, and one can ascribe a suitable error band to that data set.

One can meaningfully look at data set out in ships' logs drawn from the water inlet of the ship, and see what that tells us about ocean temps during the period covered by engine room collected data, and one can ascribe a suitable error band to that data set.

One can meaningfully look at ARGO data, and see what that tells us about ocean temps during the period covered by ARGO collected data, and one can ascribe a suitable error band to that data set.

But one cannot legitimately splice these 3 data sets together to get a meaningful single data series covering 100 or so years.

Richard.

1. you were the one who said you could not do it.

2. “But one cannot legitimately splice these 3 data sets together to get a meaningful single data series covering 100 or so years.”

Yes you can.

1. The process of splicing is well explained.

2. The uncertainty can be estimated and validated. It’s simple.

3. It certainly is meaningful. In fact you can test the meaning for yourself. It’s simple.

Take half of the data. Hold it out. Conduct your splicing with the other half.

Now use your result to predict the data that is held out. Guess what: it works. It has meaning.

4. The meaning of the series is simple. It’s the best prediction one can form of what would have been measured had the temperature been sampled in the same way.
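
The hold-out check described in the list above can be sketched in a few lines of Python. This is only a toy illustration on synthetic data, not NOAA's or anyone else's actual procedure: the two "instruments", the 0.3 C offset, the noise level, and the function names make_overlap and holdout_test are all assumptions made up for the example.

```python
import random
import statistics


def make_overlap(n=200, offset=0.3, noise=0.1, seed=0):
    """Synthetic overlap period: two instruments sample the same water.

    Instrument B reads `offset` degrees warmer than instrument A.
    All values here are hypothetical.
    """
    rng = random.Random(seed)
    truth = [15.0 + 0.01 * i for i in range(n)]
    a = [t + rng.gauss(0, noise) for t in truth]
    b = [t + offset + rng.gauss(0, noise) for t in truth]
    return a, b


def holdout_test(a, b, seed=1):
    """Estimate the A-to-B offset on half the pairs, score it on the rest."""
    idx = list(range(len(a)))
    random.Random(seed).shuffle(idx)
    half = len(idx) // 2
    train, held = idx[:half], idx[half:]

    # "Splice" step: a single mean offset learned from the training half.
    est_offset = statistics.mean([b[i] - a[i] for i in train])

    # Prediction step: adjust B on the held-out half and compare with A.
    errors = [(b[i] - est_offset) - a[i] for i in held]
    rmse = (sum(e * e for e in errors) / len(errors)) ** 0.5
    return est_offset, rmse


if __name__ == "__main__":
    est, rmse = holdout_test(*make_overlap())
    print(f"estimated offset: {est:.3f}, held-out RMSE: {rmse:.3f}")
```

The held-out error is the number to look at: if it is no larger than the instruments' own noise, the splice predicts data it never saw. Whether real bucket, engine intake, and ARGO records behave like this toy case is exactly what is in dispute in this thread.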

You can usually tell someone is talking through their hat when they say

“it can’t be done”

oh wait

“it can’t be legitimately done”

or

“it’s meaningless”

It can be done. It has been done. The method is legit and tested. It has meaning.

Anyone can argue by assertion.

Steven

In my first comment, my use of English was sloppy. I used cannot when I meant should not. This is a very common error when speaking informally, e.g., talking around the dinner table, ‘can you pass me the ketchup’ when will is intended, etc.

I stand by my view. They are each in their own way proxies. Different proxies ought not to be spliced together as a matter of good practice. If they are spliced together, each should be clearly identified (by, say, a plot in a different colour), proper identification of each should be set out under the plot, and each should have its own unique error band attached to it, which should be included in the plot. Once the error band is established, one can obviously then see the margin of error not simply in the plot but in the splice, since in theory in any splice the correct join could be anywhere between the top of the error band of one series and the bottom of the error band of the next series, or the bottom of the error band of one series and the top of the error band of the next series. In other words, at the splice point one has to add the error bands.
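
The point about adding error bands at the join can be made concrete with a small calculation. This is a sketch, assuming each series has a single symmetric error band; the function names and the values in the example are hypothetical. The worst-case sum is what the comment above describes. The root-sum-square figure is shown only for comparison, as the usual convention when the two errors are assumed to be independent.

```python
import math


def splice_band_worst_case(err_a, err_b):
    """Worst-case uncertainty of the join: the two error bands simply add."""
    return err_a + err_b


def splice_band_independent(err_a, err_b):
    """Root-sum-square combination, valid only if the errors are independent."""
    return math.sqrt(err_a ** 2 + err_b ** 2)


if __name__ == "__main__":
    # Hypothetical bands in degrees C for two series meeting at a splice.
    err_a, err_b = 0.3, 0.4
    print("worst case :", splice_band_worst_case(err_a, err_b))
    print("independent:", splice_band_independent(err_a, err_b))
```

Which of the two figures is appropriate depends on whether the errors in the two series really are independent, which is itself part of the argument here.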

You talk about calibration, but is this realistically possible? For example, in the case of data from ships' logs, each ship draws a different draft. In fact each day, the draft of the same ship is slightly different. No attempt is being made to link ship drawn water temperature to the draft at which that water was drawn. If that were done then one would have a vertical profile of the ocean temperature between about 3m and 16m depth in the shipping lanes at all times of the year.

One cannot calibrate ship data to SST until one knows the temperature profile of each ocean basin in each shipping lane at each depth throughout the 3m to 16m range throughout the entire year. Then one would have to work out what % of ships are reporting temperatures at 3m, at 3.1m, at 3.2m, 3.3m etc. through to 16m. Then one would have to perform some weighted average of that data to try and reconstruct SST. The data does not exist to even go about performing a realistic calibration.
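
To show what such a weighted reconstruction would involve, here is a toy calculation. Every number in it is hypothetical: the three-point temperature profile, the share of ships drawing water at each depth, and the surface value are invented for illustration, since the real figures are the very thing that is missing.

```python
def weighted_intake_temp(profile, share):
    """Fleet-average intake temperature.

    profile: depth in metres -> water temperature in degrees C
    share:   depth in metres -> fraction of ships drawing water at that depth
    """
    total = sum(share.values())
    return sum(profile[d] * share[d] for d in share) / total


def implied_correction(surface_temp, profile, share):
    """Amount to add to the fleet-average intake reading to recover SST."""
    return surface_temp - weighted_intake_temp(profile, share)


if __name__ == "__main__":
    # Hypothetical profile and fleet mix for one lane in one month.
    profile = {3.0: 20.0, 8.0: 19.6, 16.0: 19.0}
    share = {3.0: 0.5, 8.0: 0.3, 16.0: 0.2}
    surface = 20.4  # hypothetical true SST
    print("fleet-average intake temp:", weighted_intake_temp(profile, share))
    print("implied correction to SST:", implied_correction(surface, profile, share))
```

The calculation itself is trivial. The difficulty raised above is that both inputs, the depth profile and the fleet mix, would be needed for every lane and season.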

I understand your claim about what you perceive to be the best prediction, but unfortunately far too often the best prediction is very far from the real position.


Thanks for the news, Bob.

I hope NOAA has done what is right, not the usual accelerating scare tactics.

Let's assume a trend of 0.1C per decade. The argument of man-made or natural variation becomes moot if the earth continues to warm. We have to deal with the results, good and bad.

Anyway, 0.1C per decade means in 500 years the earth will be 5C warmer. 500 years is, let's say, 125 generations. I find it hard to believe that over the course of 125 generations, after putting a man on the moon, transplanting arms, hearts and lungs, stopping many diseases, splicing genes, developing a worldwide communication and computing network, and intercontinental flights, we could not adapt to a changing climate. Scientists used to talk about putting colonies on the very hostile environment of Mars; now climate scientists poop in their pants at the thought of extra rain in Toledo or no snow in England.

This kind of doomsday, we-are-victims attitude will kill off Homo sapiens way before climate does.

Let’s NOT say 125 generations in 500 years, as that = 4 yrs/generation? It’s worse than we thought (to borrow a common CAGW phrase) if this is the effect of climate change.
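
The arithmetic in this exchange is easy to check. A minimal sketch: the function names are made up for the example, and the 25 years per generation default is an assumption used only for comparison, not a figure from either comment.

```python
def warming(rate_per_decade, years):
    """Total warming in degrees C for a constant trend."""
    return rate_per_decade * years / 10.0


def years_per_generation(years, generations):
    """Generation length implied by a stated number of generations."""
    return years / generations


def generations_in(years, years_per_gen=25.0):
    """Number of generations in a span, assuming 25 years each by default."""
    return years / years_per_gen


if __name__ == "__main__":
    print("warming over 500 yrs at 0.1C/decade:", warming(0.1, 500))
    print("years per generation if 125 in 500 yrs:", years_per_generation(500, 125))
    print("generations in 500 yrs at 25 yrs each:", generations_in(500))
```

On the assumed 25 years per generation, 500 years is about 20 generations rather than 125. The 5C total does not depend on the generation count.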

Shouldn’t the title be “NOAA Is *Adjusting* Their Sea Surface Temperature Dataset”?

Updating = homogenizing/adjusting

It seems to me that if you have established a methodology to produce a data set and want to update or change that methodology, you would run the two in parallel for at least a couple of years to ensure that the new methodology is functioning properly. You certainly wouldn’t run it backwards to change that which you already know, as you have then lost the data on which you “calibrated” the new methodology. Changing the past records based on a new methodology seems to me to have only one purpose: to make the new system prove your beliefs about what causes change. Did that make sense?

Bob;

On Reynolds retiring, you may be correct. I found a page for the 2014 Ocean Sciences Meeting in February of this year where he co-authored with Banzon and Kearns on

A TWO-STAGE OPTIMAL INTERPOLATION SEA SURFACE TEMPERATURE ANALYSIS FOR REGIONAL CLIMATE APPLICATIONS

His contact e-mail on the abstract page is a gmail account, not a noaa.gov account.

Thanks, D.J.

What is ship bias?

Are ships and their inhabitants regarded as right wing climate deniers just because they are doing productive economic work?

Bob, as said on another of your blog posts, a revised NOAA data set rings an alarm bell for many here at WUWT, as can be gleaned from the comments above. The latest bounce upwards in 2014 would be what NOAA wants because they appear to be under pressure to declare 2014 as the warmest ever, e.g.:

http://judithcurry.com/2014/12/09/spinning-the-warmest-year/

They also state that they use satellite records as a reference. However, this new version has a fundamentally different shape to other records whether they be RSS, UAH, or the older datasets you compare in for example your figure 2. This version has 2014 well above the 2004 – 2013 level, whereas the satellite data sets that they use as a reference do not.

This looks like a way to get 2014 as the warmest, in the same way as Cowtan & Way was used to warm the land-based data (HadCRUT4 versus HadCRUT3).