Guest Post by Professor Robert Brown from Duke University and Werner Brozek, Edited by Just The Facts:

The above graphic shows RSS having a slope of zero from both January 1997 and March 2000. As well, GISS shows a positive slope of 0.012/year from both January 1997 and March 2000. This should put to rest the notion that the strong El Nino of 1998 had any lasting effect on anything. Why is there such a difference between GISS and RSS? That question will be explored further below.

The previous post had many gems in the comments. I would like to thank firetoice2014 for their comment that inspired the title of this article.

I would also like to thank sergeiMK for very good comments and questions here. Part of their comment is excerpted below:

“@rgb

So you are basically stating that all major providers of temperature series of either

1 being incompetent

2 purposefully changing the data to match their belief.”

Finally, I would like to thank Professor Brown for his response. With some changes and deletions, it is reproduced below and ends with rgb.

“rgbatduke

August 14, 2015 at 12:06 pm

Note well that all corrections used by USHCN boil down to (apparently biased) thermometric errors, errors that can be compared to the recently discovered failure to correctly correct for thermal coupling between the actual measuring apparatus in intake valves in ocean vessels and the incoming seawater that just happened to raise global temperatures enough to eliminate the unsightly and embarrassing global anomaly “Pause” in the latest round of corrections to the major global anomalies; they are errors introduced by changing the kind of thermometric sensors used, errors introduced by moving observation sites around, errors introduced by changes in the time of day observations are made, and so on. In general one would expect measurement errors in any given thermometric time series, especially when they are from highly diverse causes, to be as likely to cool the past relative to the present as warm it, but somehow, that never happens. Indeed, one would usually expect them to be random, unbiased over all causes, and hence best ignored in statistical analysis of the time series.

Note well that the total correction is huge. The range is almost the entire warming reported in the form of an anomaly from 1850 to the present.

Would we expect the sum of all corrections to any good-faith dataset (not just the thermometric record, but say, the Dow Jones Industrial Average “DJIA”) to be correlated, with, say, the height of my grandson (who is growing fast at age 3)? No, because there is no reasonable causal connection between my grandson’s height and an error in thermometry. However, correlation is not causality, so both of them could be correlated with time. My grandson has a monotonic growth over time. So does (on average, over a long enough time) the Dow Jones Industrial Average. So does carbon dioxide. So does the temperature anomaly. So does (obviously) the USHCN correction to the temperature anomaly. We would then observe a similar correlation between carbon dioxide in the atmosphere and my grandson’s height that wouldn’t necessarily mean that increasing CO2 causes growth of children. We would observe a correlation between CO2 in the atmosphere and the DJIA that very likely would be at least partly causal in nature, as CO2 production produces energy as a side effect and energy produces economic prosperity and economic prosperity causes, among other things, a rise in the DJIA.

In Nassim Nicholas Taleb’s book The Black Swan, he describes the analysis of an unlikely set of coin flips by a naive statistician and Joe the Cab Driver. A coin is flipped some large number of times, and it always comes up heads. The statistician starts with a strong Bayesian prior that a coin, flipped, should produce heads and tails roughly equal numbers of times. When in a game of chance played with a friendly stranger he flips the coin (say) ten times and it turns up heads every time (so that he loses) he says “Gee, the odds of that were only one in a thousand (or so). How unusual!” and continues to bet on tails as if the coin is an unbiased coin, because sooner or later the law of averages will kick in and tails will occur as often as heads or more so, and things will balance out.

Joe the Cab Driver stopped at the fifth or sixth head. His analysis: “It’s a mug’s game. This joker slipped in a two-headed coin, or a coin that is weighted to nearly always land heads”. He stops betting, looks very carefully at the coin in question, and takes “measures” to recover his money if he was betting tails all along. Or perhaps (if the game has many players) he quietly starts to bet on heads to take money from the rest of the suckers, including the naive statistician.

An alternative would be to do what any business would do when faced with an apparent linear correlation between the increasing monthly balance in the company president’s personal account and unexplained increasing shortfalls in total revenue. Sure, the latter have many possible causes (shoplifting, accounting errors, the fact that they changed accountants back in 1990 and changed accounting software back in 2005, theft on the manufacturing floor, inventory errors), but many of those changes (e.g. accounting or inventory) should be widely scattered and random, and while others might increase in time, an increase in time that matches the increase in time in the president’s personal account, when the president’s actual salary plus bonuses went up and down according to how good a year the company had and so on, seems unlikely.

So what do you do when you see this, and can no longer trust even the accountants and accounting that failed to observe the correlation? You bring in an outside auditor, one that is employed to be professionally skeptical of this amazing coincidence. They then check the books with a fine-toothed comb and determine if there is evidence sufficient to fire and prosecute (smoking gun of provable embezzlement), fire only (probably embezzled, but can’t prove it beyond all doubt in a court of law), continue observing (probably embezzled, but there is enough doubt to give him the benefit of the doubt, for now), or exonerate him completely (all income can be accounted for and is disconnected from the shortfalls, which really were coincidentally correlated with the president’s total net worth).

Until this is done, I have to side with Joe the Cab Driver. Up until the latest SST correction I was managing to convince myself of the general good faith of the keepers of the major anomalies. This correction, right before the November meeting, right when The Pause was becoming a major political embarrassment, was the straw that broke the p-value’s back. I no longer consider it remotely possible to accept the null hypothesis that the climate record has not been tampered with to increase the warming of the present and cooling of the past and thereby exaggerate warming into a deliberate better fit with the theory instead of letting the data speak for itself and hence be of some use to check the theory.

The bias doesn’t even have to be deliberate in the sense of people going “Mwahahahaha, I’m going to fool the world with this deliberate misrepresentation of the data”. Sadly, there is overwhelming evidence that confirmation bias doesn’t require anything like deliberate dishonesty. All it requires is a failure in applying double blind, placebo controlled reasoning in measurements. Ask any physician or medical researcher. It is almost impossible for the human mind not to select data in ways that confirm our biases if we don’t actively defeat it. It is as difficult as it is for humans to write down a random number sequence that is at all like an actual random number sequence (go on, try it, you’ll fail). There are a thousand small ways to make it so. Simply considering ten adjustments, trying out all of them on small subsets of the data, and consistently rejecting corrections that produce a change “with the wrong sign” compared to what you expect is enough. You can justify all six of the corrections you kept, but you couldn’t really justify not keeping the ones you reject. That will do it. In fact, if you truly believe that past temperatures are cooler than present ones, you will only look for hypotheses to test that lead to past cooling and won’t even try to think of those that might produce past warming (relative to the present).

Why was NCDC even looking at ocean intake temperatures? Because the global temperature wasn’t doing what it was supposed to do! Why did Cowtan and Way look at Arctic anomalies? Because temperatures there weren’t doing what they were supposed to be doing! Is anyone looking into the possibility that phenomena like “The Blob” that are raising SSTs and hence global temperatures, and that apparently have occurred before in past times, might make estimates of the temperature back in the 19th century too cold compared to the present, as the existence of a hot spot covering much of the Pacific would be almost impossible to infer from measurements made at the time? No, because that correction would have the wrong sign.

So even an excellent discussion like this one on Curry’s blog, where each individual change made by USHCN can be justified in some way or another and which pointed out (correctly, I believe) that the adjustments were made in a kind of good faith, is not sufficient evidence that they were made without bias towards a specific conclusion, a bias that might end up with correction error greater than the total error that would be made with no correction at all. One of the whole points about error analysis is that one expects a priori error from all sources to be random, not biased. One source of error might not be random, but another source of error might not be random as well, in the opposite direction. All it takes to introduce bias is to correct for all of the errors that are systematic in one direction, and not even notice sources of error that might work the other way. It is why correcting data before applying statistics to it, especially data correction by people who expect the data to point to some conclusion, is a place that angels rightfully fear to tread. Humans are greedy pattern matching engines, and it only takes one discovery of a four leaf clover correlated with winning the lottery to overwhelm all of the billions of four leaf clovers that exist but somehow don’t affect lottery odds in the minds of many individuals. We see fluffy sheep in the clouds, and Jesus on a burned piece of toast.

But they aren’t really there.

rgb”
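The mechanism Professor Brown describes, considering a batch of candidate corrections and rejecting those “with the wrong sign”, can be illustrated with a toy simulation. The sketch below is purely synthetic and makes its own assumptions: each candidate correction is drawn from a zero-mean normal distribution with an arbitrary scale of 0.1, ten candidates are considered per trial, and the function names are invented for this illustration. It uses no real temperature adjustments.

```python
import random


def net_adjustment(candidates, keep_only_positive):
    """Sum the candidate corrections, optionally discarding 'wrong sign' ones."""
    if keep_only_positive:
        candidates = [c for c in candidates if c > 0]
    return sum(candidates)


def simulate(trials=10_000, n_candidates=10, seed=0):
    """Average net correction with and without sign-based filtering."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    unfiltered_total = 0.0
    filtered_total = 0.0
    for _ in range(trials):
        # Assumption: candidate corrections are unbiased (mean zero).
        candidates = [rng.gauss(0.0, 0.1) for _ in range(n_candidates)]
        unfiltered_total += net_adjustment(candidates, keep_only_positive=False)
        filtered_total += net_adjustment(candidates, keep_only_positive=True)
    return unfiltered_total / trials, filtered_total / trials


mean_unfiltered, mean_filtered = simulate()
print(f"mean net correction, all kept:      {mean_unfiltered:+.3f}")
print(f"mean net correction, sign-filtered: {mean_filtered:+.3f}")
```

With unbiased candidates, keeping everything averages out near zero, while keeping only the candidates of the expected sign leaves a clearly positive net correction even though no individual candidate was biased.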

In the sections below, as in previous posts, we will present you with the latest facts. The information will be presented in three sections and an appendix. The first section will show for how long there has been no warming on some data sets. At the moment, only the satellite data have flat periods of longer than a year. The second section will show for how long there has been no statistically significant warming on several data sets. The third section will show how 2015 so far compares with 2014 and the warmest years and months on record so far. For three of the data sets, 2014 also happens to be the warmest year. The appendix will illustrate sections 1 and 2 in a different way. Graphs and a table will be used to illustrate the data.

Section 1

This analysis uses the latest month for which data is available on WoodForTrees.com (WFT). All of the data on WFT is also available at the specific sources as outlined below. We start with the present date and go to the furthest month in the past where the slope is at least slightly negative on at least one calculation. So if the slope from September is 4 x 10^-4 but it is – 4 x 10^-4 from October, we give the time from October so no one can accuse us of being less than honest if we say the slope is flat from a certain month.
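The search described above can be sketched in a few lines. This is a minimal illustration, not the WFT implementation: it assumes an ordinary least-squares fit via `numpy.polyfit`, a list of monthly anomalies ordered oldest to newest, and a hypothetical `min_months` cutoff of 12 to mirror the rule that periods under a year are not counted. The function names and the synthetic series are made up for the example.

```python
import numpy as np


def trend_per_month(segment):
    """Ordinary least-squares slope of a monthly series (units per month)."""
    y = np.asarray(segment, dtype=float)
    x = np.arange(len(y))
    return np.polyfit(x, y, 1)[0]  # coefficient of the degree-1 term


def earliest_nonpositive_slope_start(anomalies, min_months=12):
    """Index of the earliest start month whose trend to the latest month is <= 0.

    Returns None if no start month at least `min_months` before the end
    gives a non-positive slope.
    """
    n = len(anomalies)
    for start in range(n - min_months + 1):  # scan from the oldest month
        if trend_per_month(anomalies[start:]) <= 0:
            return start
    return None


# Synthetic example: three years of warming followed by five years of slight decline.
series = [0.01 * i for i in range(36)] + [0.40 - 0.001 * i for i in range(60)]
start = earliest_nonpositive_slope_start(series)
print("flat or negative from index", start, "for", len(series) - start, "months")
```

Scanning from the oldest month forward and stopping at the first non-positive slope gives the furthest month in the past that satisfies the rule, which is the month reported in the list below.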

1. For GISS, the slope is not flat for any period that is worth mentioning.

2. For Hadcrut4, the slope is not flat for any period that is worth mentioning.

3. For Hadsst3, the slope is not flat for any period that is worth mentioning.

4. For UAH, the slope is flat since April 1997 or 18 years and 4 months. (goes to July using version 6.0)

5. For RSS, the slope is flat since January 1997 or 18 years and 7 months. (goes to July)

The next graph shows just the lines to illustrate the above. Think of it as a sideways bar graph where the lengths of the lines indicate the relative times where the slope is 0. In addition, the upward sloping blue line at the top indicates that CO2 has steadily increased over this period.

When two things are plotted as I have done, the left only shows a temperature anomaly.

The actual numbers are meaningless since the two slopes are essentially zero. No numbers are given for CO2. Some have asked that the log of the concentration of CO2 be plotted. However WFT does not give this option. The upward sloping CO2 line only shows that while CO2 has been going up over the last 18 years, the temperatures have been flat for varying periods on the two sets.

Section 2

For this analysis, data was retrieved from Nick Stokes’ Trendviewer, available on his website at http://moyhu.blogspot.com.au/p/temperature-trend-viewer.html. This analysis indicates for how long there has not been statistically significant warming according to Nick’s criteria. Data go to their latest update for each set. In every case, note that the lower error bar is negative so a slope of 0 cannot be ruled out from the month indicated.

On several different data sets, there has been no statistically significant warming for between 11 and 22 years according to Nick’s criteria. Cl stands for the confidence limits at the 95% level.

The details for several sets are below.

For UAH6.0: Since November 1992: Cl from -0.007 to 1.723

This is 22 years and 9 months.

For RSS: Since February 1993: Cl from -0.023 to 1.630

This is 22 years and 6 months.

For Hadcrut4.4: Since November 2000: Cl from -0.008 to 1.360

This is 14 years and 9 months.

For Hadsst3: Since September 1995: Cl from -0.006 to 1.842

This is 19 years and 11 months.

For GISS: Since August 2004: Cl from -0.118 to 1.966

This is exactly 11 years.
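The durations above can be checked with simple month arithmetic. The sketch below assumes that both the starting month and the final month are counted and that every set runs through July 2015; under those assumptions it reproduces the five figures given. The helper name `span_years_months` is made up for this illustration, and the confidence limits are copied from the list above.

```python
def span_years_months(start_year, start_month, end_year, end_month):
    """Length of a period in (years, months), counting both end months."""
    months = (end_year - start_year) * 12 + (end_month - start_month) + 1
    return divmod(months, 12)


# (start year, start month, lower limit, upper limit) as listed in Section 2.
periods = {
    "UAH6.0": (1992, 11, -0.007, 1.723),
    "RSS": (1993, 2, -0.023, 1.630),
    "Hadcrut4.4": (2000, 11, -0.008, 1.360),
    "Hadsst3": (1995, 9, -0.006, 1.842),
    "GISS": (2004, 8, -0.118, 1.966),
}

for name, (year, month, lower, upper) in periods.items():
    years, months = span_years_months(year, month, 2015, 7)
    zero_not_ruled_out = lower < 0 < upper  # interval straddles zero
    print(f"{name}: {years} years {months} months, "
          f"zero slope not ruled out: {zero_not_ruled_out}")
```

A negative lower limit together with a positive upper limit is what “no statistically significant warming” means here: a slope of 0 lies inside the interval.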

Section 3

This section shows data about 2015 and other information in the form of a table. The table shows the five data sources along the top and other places so they should be visible at all times. The sources are UAH, RSS, Hadcrut4, Hadsst3, and GISS.

Down the column, are the following:

1. 14ra: This is the final ranking for 2014 on each data set.

2. 14a: Here I give the average anomaly for 2014.

3. year: This indicates the warmest year on record so far for that particular data set. Note that the satellite data sets have 1998 as the warmest year and the others have 2014 as the warmest year.

4. ano: This is the average of the monthly anomalies of the warmest year just above.

5. mon: This is the month where that particular data set showed the highest anomaly. The months are identified by the first three letters of the month and the last two numbers of the year.

6. ano: This is the anomaly of the month just above.

7. y/m: This is the longest period of time where the slope is not positive given in years/months. So 16/2 means that for 16 years and 2 months the slope is essentially 0. Periods of under a year are not counted and are shown as “0”.

8. sig: This is the first month for which warming is not statistically significant according to Nick’s criteria. The first three letters of the month are followed by the last two numbers of the year.

9. sy/m: This is the years and months for row 8. Depending on when the update was last done, the months may be off by one month.

10. Jan: This is the January 2015 anomaly for that particular data set.

11. Feb: This is the February 2015 anomaly for that particular data set, etc.

17. ave: This is the average anomaly of all months to date taken by adding all numbers and dividing by the number of months.

18. rnk: This is the rank that each particular data set would have for 2015 without regards to error bars and assuming no changes. Think of it as an update 35 minutes into a game.

| Source | UAH | RSS | Had4 | Sst3 | GISS |
|---|---|---|---|---|---|
| 1.14ra | 6th | 6th | 1st | 1st | 1st |
| 2.14a | 0.170 | 0.255 | 0.564 | 0.479 | 0.74 |
| 3.year | 1998 | 1998 | 2014 | 2014 | 2014 |
| 4.ano | 0.482 | 0.55 | 0.564 | 0.479 | 0.74 |
| 5.mon | Apr98 | Apr98 | Jan07 | Aug14 | Jan07 |
| 6.ano | 0.742 | 0.857 | 0.832 | 0.644 | 0.96 |
| 7.y/m | 18/4 | 18/7 | 0 | 0 | 0 |
| 8.sig | Nov92 | Feb93 | Nov00 | Sep95 | Aug04 |
| 9.sy/m | 22/9 | 22/6 | 14/9 | 19/11 | 11/0 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 10.Jan | 0.277 | 0.367 | 0.688 | 0.440 | 0.81 |
| 11.Feb | 0.175 | 0.327 | 0.660 | 0.406 | 0.87 |
| 12.Mar | 0.165 | 0.255 | 0.681 | 0.424 | 0.90 |
| 13.Apr | 0.087 | 0.174 | 0.656 | 0.557 | 0.73 |
| 14.May | 0.285 | 0.309 | 0.696 | 0.593 | 0.77 |
| 15.Jun | 0.333 | 0.391 | 0.728 | 0.575 | 0.79 |
| 16.Jul | 0.183 | 0.289 | 0.691 | 0.636 | 0.75 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 17.ave | 0.215 | 0.302 | 0.686 | 0.519 | 0.80 |
| 18.rnk | 3rd | 6th | 1st | 1st | 1st |
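The “ave” row can be reproduced directly from rows 10 to 16. The short sketch below simply adds the seven monthly anomalies from the table and divides by the number of months, as described in item 17; the dictionary layout and the function name are choices made for this illustration, and GISS is rounded to two decimals because that is how it is reported.

```python
# Monthly anomalies for January to July 2015, copied from rows 10-16 of the table.
monthly_2015 = {
    "UAH": [0.277, 0.175, 0.165, 0.087, 0.285, 0.333, 0.183],
    "RSS": [0.367, 0.327, 0.255, 0.174, 0.309, 0.391, 0.289],
    "Had4": [0.688, 0.660, 0.681, 0.656, 0.696, 0.728, 0.691],
    "Sst3": [0.440, 0.406, 0.424, 0.557, 0.593, 0.575, 0.636],
    "GISS": [0.81, 0.87, 0.90, 0.73, 0.77, 0.79, 0.75],
}


def year_to_date_average(values, digits):
    """Plain arithmetic mean of the months so far, rounded for display."""
    return round(sum(values) / len(values), digits)


averages = {
    name: year_to_date_average(values, 2 if name == "GISS" else 3)
    for name, values in monthly_2015.items()
}
for name, value in averages.items():
    print(f"{name}: {value}")
```

The results agree with row 17: 0.215, 0.302, 0.686, 0.519 and 0.80.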

If you wish to verify all of the latest anomalies, go to the following:

For UAH, version 6.0beta3 was used. Note that WFT uses version 5.6. So to verify the length of the pause on version 6.0, you need to use Nick’s program.

http://vortex.nsstc.uah.edu/data/msu/v6.0beta/tlt/tltglhmam_6.0beta3.txt

For RSS, see: ftp://ftp.ssmi.com/msu/monthly_time_series/rss_monthly_msu_amsu_channel_tlt_anomalies_land_and_ocean_v03_3.txt

For Hadcrut4, see: http://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/time_series/HadCRUT.4.4.0.0.monthly_ns_avg.txt

For Hadsst3, see: http://www.cru.uea.ac.uk/cru/data/temperature/HadSST3-gl.dat

For GISS, see:

http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts+dSST.txt

To see all points since January 2015 in the form of a graph, see the WFT graph below. Note that UAH version 5.6 is shown. WFT does not show version 6.0 yet. Also note that Hadcrut4.3 is shown and not Hadcrut4.4, which is why the last few months are missing for Hadcrut.

As you can see, all lines have been offset so they all start at the same place in January 2015. This makes it easy to compare January 2015 with the latest anomaly.

Appendix

In this part, we are summarizing data for each set separately.

RSS

The slope is flat since January 1997 or 18 years, 7 months. (goes to July)

For RSS: There is no statistically significant warming since February 1993: Cl from -0.023 to 1.630.

The RSS average anomaly so far for 2015 is 0.302. This would rank it as 6th place. 1998 was the warmest at 0.55. The highest ever monthly anomaly was in April of 1998 when it reached 0.857. The anomaly in 2014 was 0.255 and it was ranked 6th.

UAH6.0beta3

The slope is flat since April 1997 or 18 years and 4 months. (goes to July using version 6.0beta3)

For UAH: There is no statistically significant warming since November 1992: Cl from -0.007 to 1.723. (This is using version 6.0 according to Nick’s program.)

The UAH average anomaly so far for 2015 is 0.215. This would rank it as 3rd place, but just barely. 1998 was the warmest at 0.483. The highest ever monthly anomaly was in April of 1998 when it reached 0.742. The anomaly in 2014 was 0.170 and it was ranked 6th.

Hadcrut4.4

The slope is not flat for any period that is worth mentioning.

For Hadcrut4: There is no statistically significant warming since November 2000: Cl from -0.008 to 1.360.

The Hadcrut4 average anomaly so far for 2015 is 0.686. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.832. The anomaly in 2014 was 0.564 and this set a new record.

Hadsst3

The slope is not flat for any period that is worth mentioning.

For Hadsst3: There is no statistically significant warming since September 1995: Cl from -0.006 to 1.842.

The Hadsst3 average anomaly so far for 2015 is 0.519. This would set a new record if it stayed this way. The highest ever monthly anomaly was in August of 2014 when it reached 0.644. The anomaly in 2014 was 0.479 and this set a new record.

GISS

The slope is not flat for any period that is worth mentioning.

For GISS: There is no statistically significant warming since August 2004: Cl from -0.118 to 1.966.

The GISS average anomaly so far for 2015 is 0.80. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.96. The anomaly in 2014 was 0.74 and it set a new record.

Conclusion

There might be compelling reasons why each new version of a data set shows more warming than cooling over the most recent 15 years. But after so many of these instances, who can blame us if we are skeptical?

If you are funded to study cars, but only look at red cars then publish science about cars, your results will be scientifically valid, but logically wrong.

So the science says….

It was peer reviewed….

The consensus suggests…

These are all correct statements. However, trying to convince people they are logically incorrect is nigh impossible.

I believe that a paper that reviews versions of surface data sets and whether they are judged to enhance the trend would be most welcomed by the doubter community, but not the believer community.

The trend of annual global land temperature anomalies since 2005 or the last 10 years has been flat or in a pause, but regionally there is cooling in Asia and North America and warming in Europe

Global -0.02 C/decade (flat)

Northern Hemisphere -0.05 C/decade (flat)

Southern Hemisphere +0.06 C/decade (flat)

North America -0.41 C/decade (cooling)

Asia -0.31 C/decade (cooling)

Europe +0.39 C/decade (warming)

Africa +0.08 C/decade (flat)

Oceania +0.07 C/decade (flat)

All data per NOAA CLIMATE AT A GLANCE

What does this information do to the claim that we can legitimately extrapolate up to 1,200 km from a station?

BTW, I think here is the first attempt to justify that extrapolation:

http://pubs.giss.nasa.gov/abs/ha00700d.html

With satellite data, this should not be necessary any more. Then we do not have to worry about how legitimate it is.

North America land temperature anomalies show the greatest trend down since 1997 except summers. North America is actually cooling, not warming

WINTER -0.54 C/decade (cooling)

SPRING -0.08 C/decade (flat)

SUMMER +0.23 C/decade (warming)

FALL -0.03 C/decade (flat)

ANNUAL -0.10 C/decade (cooling)

US temperature anomalies have been trending down the most since 1998 or 18 years except spring and summers

ANNUAL -0.48 C/decade (cooling)

WINTER -1.44 C/decade (cooling)

SPRING +0.11 C/decade (warming)

SUMMER +0.23 C/decade (warming)

FALL -0.50 C/decade (cooling)

All above data per NOAA CLIMATE AT A GLANCE web page.

Canada like US has been mostly cooling since 1998

REGIONAL PATTERN FOR ANNUAL TEMPERATURE ANOMALIES TREND SINCE 1998

• ATLANTIC CANADA – FLAT

• GREAT LAKES & ST LAWRENCE – DECLINING

• NORTHEASTERN FOREST – DECLINING

• NORTHWESTERN FOREST – DECLINING

• PRAIRIES – DECLINING

• SOUTH BC MOUNTAINS – DECLINING

• PACIFIC COAST – RISING (RISING DUE TO EXTRA WARM NORTH PACIFIC LAST FEW YEARS)

• YUKON/NORTH BC MOUNTAINS – DECLINING

• MACKENZIE DISTRICT – DECLINING

• ARCTIC TUNDRA – RISING (ANOMALIES HAVE DROPPED 3 DEGREES SINCE 2010)

• ARCTIC MOUNTAINS & FIORDS – RISING (ANOMALIES HAVE DROPPED 3 DEGREES SINCE 2010)

TOTAL ANNUAL CANADA – DECLINING

Canadian data per Environment Canada

Couple the cooling trend with the data coming from the surfacestations.org project, and the TRUE cooling in the US Lower 48 is likely much greater than -0.48 C/decade.

RGB – There are a lot of competent scientists in the world. Why are there only a few that feel/share your outrage against such raw data corruption/treatment?! I find this anomaly the most disturbing, in regards to the climate scientific community. It trashes all scientists’ integrity and renders careers effectively useless. GK

I would assume that one reason is the increased specialization in academia. It is not considered proper to criticize someone outside your specialty. You should defer to their ‘authority’ unless or until you have published as many peer-reviewed papers as they have on the same subjects. /sarc

Sort of a chicken-and-egg dilemma. Physics, Astronomy and Chemistry have lineages that go back around 4 millennia; Climatology is barely decades old, and at that it is more of an area of interest for Scientists in other specialities. We’ve had Psychologists publishing papers embraced by Climatologists, like “Recursive fury: Conspiracist ideation in the blogosphere in response to research on conspiracist ideation,” by Stephan Lewandowsky, John Cook, Klaus Oberauer, and Michael Marriott.

The problem is how do you get a Climatology paper peer reviewed when there is almost nobody formally credentialed in the speciality?

+1

I had this exact argument with my sister who is an accomplished doctor (Gen Practice) . She must “trust” the experts as a mandate in her profession. Since the “experts” in climate science profess warming, it must be true.

Of course, the climate experts are financially corrupted politicians, but they still wear the cloak of credibility to other experts.

Why do you think there are only a few? I think there are many. I just don’t think there are economic, career and social incentives for a scientist to expose published papers to severe testing and extensive criticism. The perspectives on the issues at hand are governed or heavily influenced by the funding processes, which are governed by the grantors. There are few incentives to expose one’s own and others’ ideas, hypotheses and theories to the fiercest struggle for survival, even though that is fundamental to the scientific process and to the accumulation of knowledge. Theories are merited by the severity of the tests they have been exposed to and survived, and not at all by inductive reasoning in favor of them.

“Review unto others as you would have them review unto you.”

https://twitter.com/AcademicsSay/status/647806270934638594

there are so few because scientists are smart

At least you made me chuckle, as that reply can be interpreted, in a couple of ways. I wonder which way you implied. GK

G.Karst, because the science is settled and 97% of ” climate scientists” agree. rgb is part of the 3% (the 97% get most of the grants).

Zeke’s “excellent discussion on Curry’s blog” is most interesting. To highlight the need for adjustments to the original observations, Zeke describes at length what poor quality the data is due to station moves and all manner of problems. What amazes me is that the discussion doesn’t just end there.

The discussion amounts to “of course we have to adjust the data- look how awful it is!”

That they have convinced themselves they can convert bad data to good is the real problem.

“The discussion amounts to “of course we have to adjust the data- look how awful it is!”

Actually, it’s more like, “we’ve merged our awful historical thermometer data to even more awful proxies, and run these through models that are even more awful than the proxies. What we need is another term for awful. Let’s go with ‘the best available data in the hands of the best climate scientists’.”

If I “homogenized” measurements in my profession, in the same manner as is done with temperature measurements, I would end up in jail.

I work in a slightly less complicated world than climate science (health care data). I was trying to explain to a warmist (debate is the incorrect word) that I was on a project where we were no better than 60% certain the data was correct – and that was being optimistic. Most of us thought that it was closer to 50%, i.e., flip a coin.

Of course, by the time Pointy-Haired Boss had slimed all over it, we were confident (and that’s the Royal “we” of course) in the 75 to 80% range. What changed? Possibly a performance contract that wouldn’t give a bonus for 60%. We never knew, just laughed quite a bit when the data moved forward, acquiring more and more consensus as time went on. Forecasts piled on assumptions piled on tortured data.

We still have the raw data, and the emails (hello Climategate). All just in case the Auditor General asks why the numbers don’t add up…after all, we didn’t get a bonus.

do you adjust for stock splits?

inflation?

historical observational data has to be corrected when the method of observation changes

on the other hand, if we use raw data the record will be WARMER…

That’s right.. raw data is WARMER than adjusted data.

Mosher – I have asked the source and never got an answer. You say the raw data is warmer. Since you have worked on “world wide data” maybe you can answer this question for me. I have downloaded a lot of locations for Environment Canada for their “posted” publicly available data. It appears to be unadjusted but there is no way to tell. I don’t see warming except from LESS cold which affects the mean temperature. I have looked from 85 N TO 49 N. All much the same. Extreme Maximums and Extreme Minimums and Mean Max and Mean Min all appear to have moderated over the last 50 to 100+ years. I can think of several reasons for this, but I respect your opinion. So where is the warming?

Wayne debelke, my wife and I are observers (20 years). We check at times to see and compare, and we have not seen any deviations from our observations to what is published. Actually, only once did we have to correct a missed decimal (well, their 4 cm of snow was in reality 40 cm, but they corrected that one in a hurry; it was a big, almost record dump!).

“Mosher – I have asked the source and never got an answer. You say the raw data is warmer. Since you have worked on “world wide data” maybe you can answer this question for me. I have downloaded a lot of locations for Environment Canada for their “posted” publicly available data. It appears to be unadjusted but there is no way to tell. ”

Call me steven.

I wrote a package to get all the ENV canada data. all of it.

It’s been qc’d. There is an adjusted version of it as well.

Steven Mosher

That is nice to know – But where is the data?

Many years ago when I was a young engineer, a statistician I was working with, said to me “Too many engineers think that statistics is a black box into which you can pour bad data and crank out good answers.” Apparently that applies to most climate scientists also.

Basic stuff: you cannot correct for an error if you do not know its magnitude and direction. You can guess what the correction should be, but you then run the risk of adding to rather than reducing the error.

That is true even in ‘magic’ climate ‘science’, so having an idea that there are errors in no way justifies claiming you can correct for them when you have little or no idea of the nature of these errors.

Of course it is just ‘lucky chance’ that these ‘guesses’ always mean the error correction results in data which is more favourable to the profession of climate ‘scientists’, in the same way chicken pens designed by Fox Co Chicken Pen Designers Ltd tend to be poor at protecting chickens but good for those animals that like to eat chickens.

You mean like these guys?

What they say.

Climate Etc. – Understanding adjustments to temperature data by Zeke Hausfather All of these changes introduce (non-random) systemic biases into the network. For example, MMTS sensors tend to read maximum daily temperatures about 0.5 C colder than LiG thermometers at the same location.

http://judithcurry.com/2014/07/07/understanding-adjustments-to-temperature-data/

What He measured

Interviewed was meteorologist Klaus Hager. He was active in meteorology for 44 years and now has been a lecturer at the University of Augsburg almost 10 years. He is considered an expert in weather instrumentation and measurement.

One reason for the perceived warming, Hager says, is traced back to a change in measurement instrumentation. He says glass thermometers were replaced by much more sensitive electronic instruments in 1995. Hager tells the SZ

” For eight years I conducted parallel measurements at Lechfeld. The result was that compared to the glass thermometers, the electronic thermometers showed on average a temperature that was 0.9°C warmer. Thus we are comparing – even though we are measuring the temperature here – apples and oranges. No one is told that.” Hager confirms to the AZ that the higher temperatures are indeed an artifact of the new instruments.

http://notrickszone.com/2015/01/12/university-of-augsburg-44-year-veteran-meteorologist-calls-climate-protection-ridiculous-a-deception/

Or just call it something else.

Steven Mosher | June 28, 2014 at 12:16 pm | [ Reply in ” ” to a prior post ]

“One example of one of the problems can be seen on the BEST site at station 166900–not some poorly sited USHCN station, rather the Amundsen research base at the south pole, where 26 lows were ‘corrected up to regional climatology’ (which could only mean the coastal Antarctic research stations or a model) creating a slight warming trend at the south pole when the actual data shows none-as computed by BEST and posted as part of the station record.”

The lows are not Corrected UP to the regional climatology.

There are two data sets. You are free to use either.

You can use the raw data

You can use the EXPECTED data.

http://judithcurry.com/2014/06/28/skeptical-of-skeptics-is-steve-goddard-right/

See how easy it is.

If even a fully automated station, staffed by research scientists, has to be adjusted... Anything for the cause.

The issue is that all the old problems that feed into why it is hard to make accurate weather predictions, including the problems with taking measurements, never went away just because they decided to claim ‘settled science’ for political or ideological reasons. Indeed, despite the vast amount of money thrown at the area, it remains an oddity that our ability to take such measurements in a meaningful way has not, beyond the use of satellites, made any real progress for some time.

” I no longer consider it remotely possible to accept the null hypothesis that the climate record has not been tampered with”

Perversely, the fact they were squirming as a result of the pause, gave them some credibility.

But now I just can’t wait until the investigations start and they start being locked up.

I agree. I think this figure alone is sufficient to suspend the immensely costly actions promoted by the United Nations to curb CO2 emissions. Credit to Tony Heller, who did the brilliant test and produced this curve. It is the best correlation which has ever been seen within climate science. Unfortunately for the proponents of the United Nations climate theory, it demonstrates a correlation between adjustments and CO2:

http://www.sott.net/image/s10/209727/large/screenhunter_3233_oct_01_22_59.gif

We can use that graph to predict what the USHCN adjustments will be in the future – I’m expecting 0.4° of adjustment when CO2 hits 410 ppm.

I think a good determination of the breakpoint between the rapid warming period and the pause is to find where linear trends of the two periods meet each other. When I try this on global temperature datasets in WFT that I consider more reliable (RSS and HadCRUT3), I find this is around 2003. As for how close linear trend lines come to each other if one tries for the leading edge or the trailing edge of the 1997-1998 spike as a breakpoint (two choices where sum of standard deviation from them is minimized), they come a lot closer together when considering the 1997-1998 spike as part of the rapid warming period rather than part of the pause.
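The "where the two trend lines meet" idea above can be sketched in a few lines. This is only an illustration under stated assumptions: the series is synthetic (a made-up 0.02/year rise that goes flat in 2003), the helper names are hypothetical, and it is not the commenter's actual WFT workflow. For each candidate split year it fits one least-squares line to each side and keeps the split whose two lines cross closest to the split itself.

```python
def ols(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def trend_intersection(years, temps, split):
    """Fit separate trends before and from `split`; return the year where they cross."""
    early = [(x, y) for x, y in zip(years, temps) if x < split]
    late = [(x, y) for x, y in zip(years, temps) if x >= split]
    s1, b1 = ols(*zip(*early))
    s2, b2 = ols(*zip(*late))
    return (b2 - b1) / (s1 - s2)


def self_consistent_break(years, temps, candidates):
    """Pick the candidate split whose two trend lines meet closest to the split."""
    return min(candidates,
               key=lambda s: abs(trend_intersection(years, temps, s) - s))


# Synthetic anomalies (an assumption, not real data): rise until 2003, then flat.
years = list(range(1980, 2016))
temps = [0.02 * (min(y, 2003) - 1980) for y in years]

best = self_consistent_break(years, temps, range(1990, 2011))
print(best)
```

With real monthly data the crossing point will move with the choice of dataset and end dates, so the result is best read as a rough indicator rather than a precise date.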

Keep in mind that Hadcrut3 has not been updated since May, 2014. And with the 2014 record in Hadcrut4 as well as a potential record in 2015, that point may not be valid.

There is plenty of scientific fraud out there; it is hardly a new thing. If your career, income and prestige depend on getting a positive result, rather than another failed theory/experiment, there is a lot of pressure to get ‘the right result’. So if there is widespread fraud in climate ‘science’ this would hardly be new. Here are a few examples. Google has hundreds of them.

http://www.the-scientist.com/?articles.list/tagNo/2642/tags/scientific-fraud

Anyone in favor of 100% renewables by 2030, apart from Naomi? The 15,000 people that died in the last UK winter from lack of heating might have disagreed.

http://www.rt.com/uk/317043-carney-warns-climate-chaos/?utm_source=browser&utm_medium=aplication_chrome&utm_campaign=chrome

There is a thread over at Dr Spencer’s regarding the State of MN holding a court case on the negative effects of fossil fuels. Wonder how they will do under a solar panel in -40 conditions.

I find it ironic that the State of MA sued to have the federal government enforce CO2 restrictions due to its risk from global warming. A short time later it was under 9 feet of snow.

I always have to chuckle a bit when a warmist will go on about the almost saintly climate scientist plugging away for a pittance, while his or her oil-industry funded opponent reels in copious amounts of cash.

It’s as if climate science exists on its own little island of purity. No other discipline is put on such a high pedestal, probably because, compared to say, medicine, warmists don’t equate Big Green with Big Pharma.

I had never heard of Big Plant before, the greedy bastards apparently have deep pockets:

“A nearly ten-year-long series of investigations into a pair of plant physiologists who received millions in funding from the U.S. National Science Foundation has resulted in debarments of less than two years for each of the researchers.”

http://retractionwatch.com/2015/09/02/nsf-investigation-of-high-profile-plant-retractions-ends-in-two-debarments/

I believe the climate science of being able to predict what they are going to achieve given their fraud is probably becoming as (if not more) important than exposing any fraud.

In my estimate I place an increase on taxes and regulations to any new startup companies in the West.

As would be the natural inclination response to shipping large crates of imports from China and India with their heavy latent CO2 loads and pollution, more local production would be the answer, also given the current blight filled eco-nomic situation globally.

However those new and local production facilities shall not be made organically… nor given the power to be instituted organically by local peoples. Therefore! all production will remain in the East with China and India, while “global-politics” are given more direct access by a world consensus upon those sovereign nations .

Yes, large amounts of fossil type fuels will still need be consumed to ship all those consumer household items to doors in the west in the interim Until long term ubernatural political/economic conversion energy plans away from fossil type fuels with investment time and money coming directly controlled from those same fossil producers.

Conclusion: CO2 has virtually nothing to do with climate change and human CO2 emissions even less so (let’s keep our eye on the ball).

Confirmation bias is an extremely difficult thing to defeat. For instance, consider the adjustments for changes in sensors. Studies have concluded that LiG sensors introduce a cooling bias compared to MMTS and adjustments are made accordingly. But how much of that is due to the housing of the sensors? If some or all of the differences relate to the fading of latex paint in MMTS sensor housing that leads to more sun absorption, then old MMTS sensor units would be warmer than new LiG sensors. The problem is that the fading would have led to a warming bias due to a slow increase of temperatures over time that then gets locked in when a switch is made to LiG sensors that benefit from an adjustment.

This is one of many, many potential issues that may be resolved unsatisfactorily because of confirmation bias.

Please remind us what UAH and RSS measure. I know it is not instrument temperature, but irradiance. OK, but 1) is it a mean temperature you obtain for the “lower troposphere” (from ground surface to approximately 12,000 m), and then, what is the relevance to the altitudes where we live? You give us “anomalies”, but what is the yearly average temperature they refer to?

2) how come there is such a huge difference from one month to the next, one would think there is some amount of inertia in the system?

Please see:

http://wattsupwiththat.com/2015/09/04/the-pause-lengthens-yet-again/

The temperature of the lower troposphere would vary with height, however the change from one month to the next is what we are really interested in. In general, as the anomaly of the troposphere warms, the surface anomaly should also warm. Unlike oceans with a huge volume and a huge heat capacity, the air can warm and cool quickly. When the sun goes down, it can very quickly cool off, especially if the relative humidity is low.

Anomaly relative to what? In other words, please give me the absolute temperature of the “lower troposphere”, which is a rather thick slice of the whole atmosphere: above, it is mostly “thin air”!

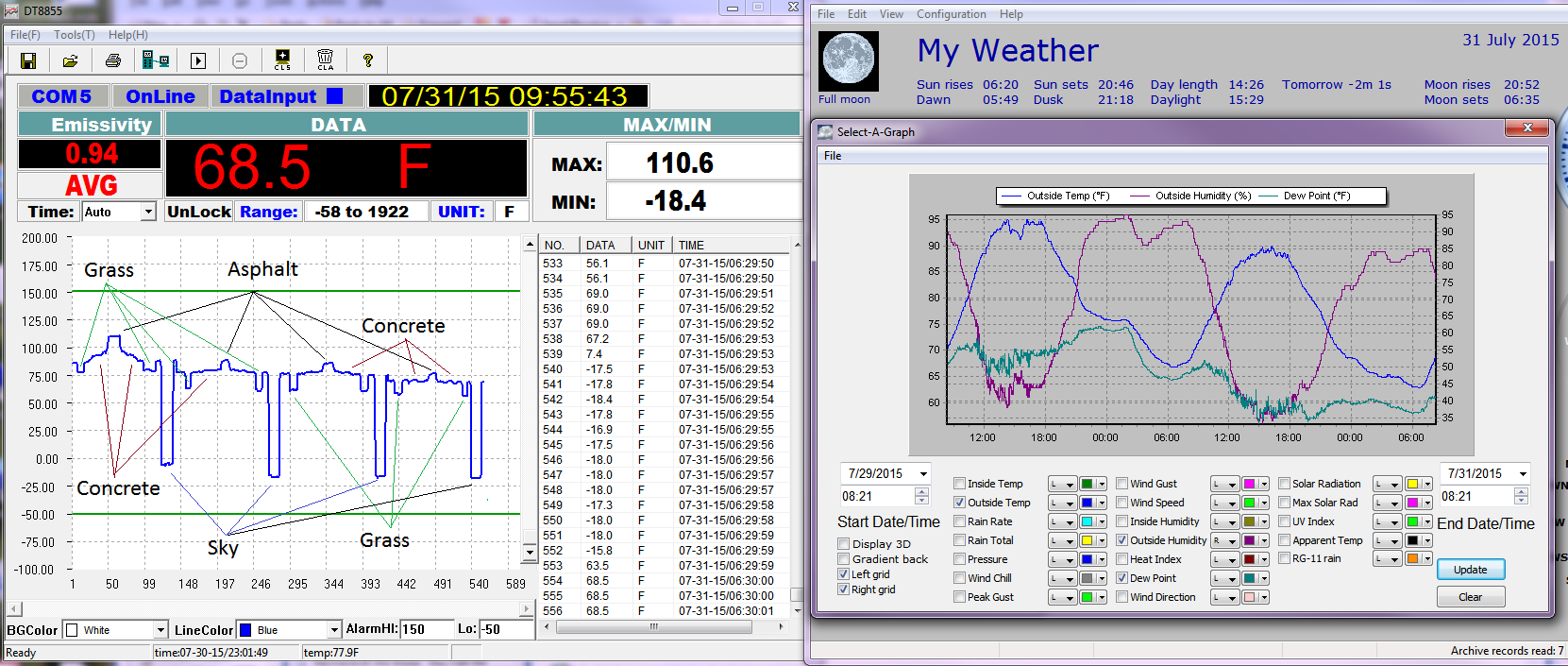

Here is a sample of IR readings from a clear sky day, starting at 6:30pm, 11:00pm, 12:00pm, then 6:30am.

You can see how cold the sky is in 8u-14u, and how the surface warms and cools.

The ground cools until sunrise. And the grass acts as if it’s insulation, i.e. trapped air allows the top surface to warm and cool quickly.

Yes, this doesn’t show the impact of CO2, but you can add it back in; even at that, there is a big window open to space that is cold.

UAH says “The global, hemispheric, and tropical LT anomalies from the 30-year (1981-2010) average”. So since the August 2015 anomaly was 0.28 C, that means that August 2015 was 0.28 C warmer than the average August value for the 30 year period from 1981 to 2010.
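To make the definition concrete, here is a minimal sketch of how an anomaly against a 1981-2010 baseline is computed for one calendar month. The absolute temperatures are invented purely for illustration (the thread does not give them), and `monthly_anomaly` is a hypothetical helper, not UAH code.

```python
def monthly_anomaly(series, year, month, base_start=1981, base_end=2010):
    """Value for (year, month) minus the baseline mean of that calendar month.

    `series` maps (year, month) -> temperature in degrees C.
    """
    baseline = [series[(y, month)] for y in range(base_start, base_end + 1)]
    return series[(year, month)] - sum(baseline) / len(baseline)


# Made-up absolute August temperatures (assumption): they average about -9.0 C
# over the 30 baseline years, with August 2015 set to -8.72 C.
august = {(y, 8): (-9.1 if y % 2 else -8.9) for y in range(1981, 2011)}
august[(2015, 8)] = -8.72

anomaly = monthly_anomaly(august, 2015, 8)
print(round(anomaly, 2))
```

The anomaly depends only on the difference from the baseline mean for the same month, which is why it can be quoted without stating the absolute temperature of the layer.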

There would be a huge range of values for different heights.

For example, at 7.5 km, the temperature averages about -36 C during the year. See: https://ghrc.nsstc.nasa.gov/amsutemps/amsutemps.pl?r=003

And this is why some people consider possibly mistakenly that clouds are ‘warming’

I would like to see a study of night time cloudiness and relative humidity.

I suspect that on cloudy nights the humidity is higher such that the atmosphere contains more energy, and therefore takes longer to give up that energy thus staying warmer for longer. I suspect that it is not so much that clouds increase the DWLWIR and this increased back-radiation inhibits the rate of cooling, but rather a facet of two natural processes; higher humidity, and clouds inhibiting convection, and both of these natural processes slow down the rate of cooling.

rgb

From the previous post on the subject, August 14th, 2015:

http://wattsupwiththat.com/2015/08/14/problematic-adjustments-and-divergences-now-includes-june-data/#comment-2007402

The smoking gun that this egregious alteration of the USHCN is malfeasance is glaringly in the graph credited to Steve Goddard that shows the value of temperature adjustments (-1 to +0.3C(?)) from the 1880s to present in a straight line relationship with R^2 of ~0.99!!! Since delta T should be a logarithmic relationship with delta CO2, an honest, real adjustment should not track CO2 linearly at the levels of CO2 already in the atmosphere. This is virtually prima facie evidence that they constructed the adjustment (their algorithm) directly from such a relationship and that it has nothing to do with the rationalizations they present. Am I wrong here?

Steve Goddard’s T adjustments/CO2 growth for USHCN should become the iconic graph for skeptics that Mann’s now discredited hockey stick was for the IPCC and Algor(ithm)’s “Inconvenient Truth”. We should call it the “Pool Cue”- it puts the Adjusters behind the 8-ball.

Nah, let’s suppose in good faith, surface temperature datasets are correct. Even then there is some serious explaining to do. I mean warming at the surface is much faster than that of the bulk troposphere, measured by satellites.

And that’s a huge problem for theory. It means average environmental lapse rate is decreasing. That’s the opposite of all model predictions, so no, exaggerating surface warming rate can’t be done in order to make a better fit with theory, because it actually makes things worse. Much worse.

Scale height of satellite measured lower troposphere temperature is about 3.5 km, but it includes much of the troposphere from the surface up to 8 km or so. If rate of surface warming is faster than average temperature increase in this thick layer, that is, the bottom of it, the boundary layer warms faster than the whole, the top layers are certainly warming less than that or even cooling. That means temperature difference between top and bottom is decreasing with time.

According to theory the upper troposphere should warm some 20% faster than the surface globally and 40% faster in the tropics (a.k.a. “hot spot”). However, all datasets as they are presented show it is the other way around. If anything, there is a “cold spot”, not a hot one.

Therefore those exaggerating surface warming are doing a disservice to the warmist cause. They are like a guy sitting on a branch, using a chainsaw from the inside and grinning widely. It goes rather smoothly, until the whole thing, including him, comes crashing down.

Uh oh. I was wrong. Don’t know why, tired perhaps. Or was just testing if anyone paid attention.

As higher up in the troposphere it is inherently colder, if the surface warms faster, temperature difference between bottom and top and lapse rate with it is, of course, increasing, not decreasing, as stated above.

And that’s exactly the problem for theorists. The more humid the atmosphere, the smaller the lapse rate. Therefore an increasing lapse rate indicates a troposphere which is getting drier. However, with increasing surface temperature, especially over oceans, rate of evaporation surely increases, which means more humidity, not less.

The only solution to this puzzle is that while humidity does increase in the boundary layer, it is decreasing higher up, because precipitation is becoming more efficient.

This is a strong negative water vapor feedback, as H2O is a powerful greenhouse gas and its concentration is decreasing exactly where it matters, in the upper troposphere.

No computational general circulation climate model replicates this result.

But there is a limit to how much water vapor a cubic volume of air will hold, and every night any excess water is removed.

If this hurricane hit land, consider the volume of water that storm was carrying.

Linear regression of yearly temperature averages shows that LT variability, as shown by satellites, is only ~0.7 as large as surface variability, as shown by properly vetted stations. The straightforward physical interpretation is, of course, that thermalization takes place largely at the surface and only a muted version of the surface record is seen aloft. A similar diminution with altitude is also seen in the diurnal cycle. I’m not sure that present-day GCM’s are at all realistic.

@1sky1,

The continental land masses are the cooling surfaces for warm tropical air that’s full of water vapor. And this hurricane is carrying a lot of water, I estimated David (iirc) dropped about 1/3 of the water in Lake Erie on North America 5-10 years ago.

micro6500:

My remark about disparate scales of temperature variability aloft was intended to point to the importance of moist convection in heating the real atmosphere, rather than the radiation processes so prominently featured in GCM calculations. This mechanism operates over both oceans and continents and is not reliant upon any supposed “cooling surface” provided by land.

The evidence of adjusting data is here, and GISS is nothing like RSS or UAH, which are almost identical in the precision of data. The precision of data using GISS is hugely different and changed by estimation and infilling. GISS global temperatures are increasingly being deliberately changed to be similar to the 1997/98 El Nino, almost on a yearly basis.

http://i772.photobucket.com/albums/yy8/SciMattG/GlobalvDifference1997-98ElNino_zps8wmpmvfy.png

Global temperatures never warm or cool gradually to fit an almost perfect slope like that shown in the above graph, so these are deliberate human adjustments that do not represent true global temperatures. I am extremely convinced this is down to tampering with data, and it becomes significant after 2001.

[SNIP Fake commenter, multiple identities, we are on to you David. -mod]

That’s just another reason why satellites are more accurate than surface measurements, because they don’t measure two different things.

[SNIP Fake commenter, multiple identities, we are on to you. -mod]

Tell me why they don’t use surface measurements for sea level rise, sea ice extent, snow coverage in NH or glacial coverage of Greenland then if surface is more accurate?

Firstly you need to convince me why 0.1% coverage of the planet’s surface is more accurate than satellite data. How would you measure the things mentioned above by 0.1% coverage?

They don’t use satellites for temperature because it is not supporting their political government agenda.

Temperatures in the CET showed least variability during the 17th century because the resolution was only 1 C. Later they showed more variability because the accuracy went down to 0.5 C.

correction – “accuracy went up”

[SNIP Fake commenter, multiple identities, we are on to you David. -mod]

The El Nino should start warming the continental land masses sometime in November. (2 months in front of El Nino 1997/98)

http://i772.photobucket.com/albums/yy8/SciMattG/RSS%20Global_v1997-01removal_zpszk83g0xi.png

The liberal Eco-Terrorists are getting desperate !!!!!

[SNIP Fake commenter, multiple identities, we are on to you. -mod]

http://www.scientific-alliance.org/scientific-alliance-newsletter/observer-bias

The tampering is obvious just looking at the difference between Northern and Southern hemispheres:

http://i1136.photobucket.com/albums/n488/Bartemis/temperatures_zpsk5yjxh2s.jpg

For over 100 years, Northern and Southern were in lock step. But, since 2000, the Northern diverges prominently from the Southern.

Satellite temperatures agree with the Southern data set.

What’s even worse is the station data is much better quality overall in the NH and the difference to cause that much extra warming can’t be explained by the lack of polar coverage excuse. It is sticking out like a sore thumb that deliberate tampering of data has caused the recent pause to warming in the surface data sets.

The difference between NH HADCRUT 3 & 4 also shows the confirmation bias.

http://i772.photobucket.com/albums/yy8/SciMattG/NHTemps_Difference_v_HADCRUT43_zps8xxzywdx.png

totally different data sources. CRU3 uses CRU adjustments

CRU4 uses different data and CRU do NO ADJUSTMENTS to the data.

Why? because of climategate.

“totally different data sources. CRU3 uses CRU adjustments

CRU4 uses different data and CRU do NO ADJUSTMENTS to the data.

Why? because of climategate.”

Climategate has nothing to do with it, although it did highlight their intentions and agenda.

You are correct that CRU do not do adjustments to the data like GISS, and use some different data, but they still adjust data. The changes since the 1980’s have reduced cooling in the NH between the 1940’s and 1970’s by over half. The peak warming in the late 1930’s and early 1940’s has been reduced relative to the 2000’s. Cooler periods after the 1940’s have been warmed, and the 1990’s onward have also been warmed.

The reason for the confirmation bias was CRU4 using specially added different data, including a homogenization model that does adjust data. Hundreds of extra stations were also added after previously reducing them by thousands. Just adding more stations causes a warm bias during warmer periods and a cooler bias during cooler periods. It adds the chance that one of them will hit a hot spot, and it is no coincidence that numerous extra stations have been added where satellite data have been showing warm regions. Numerous added stations were unavailable during some of the warmer periods in the past, so this enhances the recent warm bias. With El Ninos occurring more often over the last few decades there was only going to be one result, especially when we hit a strong one and compare today’s different sampling with that of the past.

“2.2. The Land Surface Station Record: CRUTEM4

[15] The land-surface air temperature database that forms the land component of the HadCRUT data sets has recently been updated to include additional measurements from a range of sources [Jones et al., 2012]. U.S. station data have been replaced with the newly homogenized U.S. Historical Climate Network (USHCN) records [Menne et al., 2009]. Many new data have been added from Russia and countries of the former USSR, greatly increasing the representation of that region in the database. Updated versions of the Canadian data described by Vincent and Gullett [1999] and Vincent et al. [2002] have been included. Additional data from Greenland, the Faroes and Denmark have been added, obtained from the Danish Meteorological Institute [Cappeln, 2010, 2011; Vinther et al., 2006]. An additional 107 stations have been included from a Greater Alpine Region (GAR) data set developed by the Austrian Meteorological Service [Auer et al., 2001], with bias adjustments accounting for thermometer exposure applied [Böhm et al., 2010]. In the Arctic, 125 new stations have been added from records described by Bekryaev et al. [2010]. These stations are mainly situated in Alaska, Canada and Russia. See Jones et al. [2012] for a comprehensive list of updates to included station records.”

“Note that the formulation of the homogenization model used to generate ensemble members is designed only to allow a description of the magnitude and temporal behavior of possible homogenization errors to contribute to the calculation of uncertainties in regional averages. Change times are unknown and chosen at random, so realizations of change time will be different for a given station in each member of the ensemble. Additionally, the model used here does not describe uncertainty in adjustment of coincident one-way step changes associated with countrywide changes in measurement practice, such as those discussed by Menne et al. [2009] for U.S. data.”

http://onlinelibrary.wiley.com/doi/10.1029/2011JD017187/full

As someone in the Southern Hemisphere this is a worry, I mean if this continues it looks like we may be flooded by NH Climate Change™ refugees.

For UAH6.0: Since November 1992: CI from -0.007 to 1.723

This is 22 years and 9 months.

For RSS: Since February 1993: CI from -0.023 to 1.630

This is 22 years and 6 months.

For Hadcrut4.4: Since November 2000: CI from -0.008 to 1.360

This is 14 years and 9 months.

For Hadsst3: Since September 1995: CI from -0.006 to 1.842

This is 19 years and 11 months.

For GISS: Since August 2004: CI from -0.118 to 1.966

This is exactly 11 years.

Don’t these numbers show that – even though the time spans are different – the linear fits have such large uncertainties that there is no significant difference between all 5 data sets?
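The overlap question can be checked directly from the lower and upper bounds listed above. The sketch below assumes those pairs are confidence-interval bounds on the trend, all in the same units (the thread does not state the units), and it is a crude overlap check rather than a formal significance test. It also ignores that each interval covers a different time span.

```python
from itertools import combinations

# Lower and upper trend bounds exactly as quoted in the list above.
intervals = {
    "UAH6.0": (-0.007, 1.723),
    "RSS": (-0.023, 1.630),
    "Hadcrut4.4": (-0.008, 1.360),
    "Hadsst3": (-0.006, 1.842),
    "GISS": (-0.118, 1.966),
}


def overlaps(a, b):
    """True if the closed intervals a and b share at least one point."""
    return max(a[0], b[0]) <= min(a[1], b[1])


# Do all pairs of intervals overlap?
all_overlap = all(overlaps(a, b)
                  for a, b in combinations(intervals.values(), 2))

# Range of trend values that lies inside every one of the five intervals.
common = (max(lo for lo, _ in intervals.values()),
          min(hi for _, hi in intervals.values()))

print(all_overlap, common)
```

Overlapping intervals only mean the five fits cannot be told apart on these bounds alone; they say nothing about which dataset is closer to the truth.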

What the numbers show me is that the satellites are in a completely different league than GISS and Hadcrut4. And I do not see how both can be right.

Well, apart from them not measuring the same thing, I don’t see how you can argue about any difference if the uncertainties in the analysis are this big. Which criterion defines your “different league”?

There is no formal definition, but in my opinion, if RSS and UAH say there is no warming at all for over 18 years (slope of 0) and others say we have statistically significant (at 95%) warming for over 15 years, then I believe they are in a different league.

Well that does not seem a valid inference based on the numbers above. RSS and UAH actually say that the warming over the past 20+ years might well be higher than what Hadcrut allows it to be over the last 14 years.

Good point!

Hooray!

The end of the world is cancelled!

Good night folks.

Drive safely.

And if someone comes up (probably again) with a cure for cancer, then they had better watch out for the assassins hired by the drug industries who are estimated to pull in around 100 billion dollars in treatments over the next twelve months in the US alone (Just think about what happened to the person who invented 1, The everlasting razor blade 2. The everlasting match 3. The rubber tyre that never wears down etc etc etc!).

That various adjustments to observed temperatures made by UHCN have the net effect of producing a significant “global” upward trend is unmistakably evident to anyone who has been tracking station data world-wide for more than just recent decades. But the reasons why AGW salesmen and their devotees persist in their faith in being able to make patently unsuitable data into something more realistic are far less clear. They seem to be rooted in the premise that all temperature time-series should conform to the simplistic model of deterministic trend plus random noise. This mind-set prompts a suspicion that any data exhibiting strong oscillatory components or fairly abrupt “jumps” are somehow faulty and need to be “homogenized.”

Meanwhile UHI-corrupted data, which tends to conform to their model, is taken at face value. What they wind up producing are manufactured time series that exhibit bogus trends while concealing natural variability–both temporal and spatial.

“That various adjustments to observed temperatures made by UHCN have the net effect of producing a significant “global” upward trend is unmistakably evident to anyone who has been tracking station data world-wide for more than just recent decades.”

USHCN is 1200 stations in the US.

At Berkeley Earth we don’t use it.

The NET EFFECT of ALL adjustments is to cool the record

Steven Mosher claims “net effect is to lower temperatures”

If so, why is BEST warmer than HADCRU3 since 1850?

http://woodfortrees.org/plot/hadcrut3gl/trend/plot/best/from:1850/trend

And plotting BEST data I now see it begins in 1800 rather than 1850 like HADCRU. Where did you dig up those extra thermometers Steven Mosher, and how many were in the Southern Hemisphere in 1800?

Please provide a plot by year showing net effect of adjustments by year is cooling.

Mosh:

what exactly do you mean?

If I reduce the temperatures over the period of time going from, say, 150 years ago to ten years ago, and leave the remaining data unchanged, it may be true that I have “cooled the record” while at the same time increasing the rate of warming my corrected data implies.

So, I find your use of language to be imprecise… what exactly do you mean when you write

“cool the record”?

just asking, since your use of language, to me, at least, is notoriously imprecise…more like a sonnet than scientific literature.

Just saying.

That claim needs proof. Please plot the trends on the adjusted and unadjusted data for the same periods that Brown and Wozcek have used above.

Hint: Cooling the past warms the present.

Sorry Werner I seem to have got your surname and forename melded together in my head. I blame my extreme age and pickled brain.

mods – feel free to correct the last post to read Brozek.

“UHCN” was simply mistyped for GHCN. That the net effect of adjustments upon “global average” temperatures is an increase in trend is obvious from the different “versions” of such averages produced over the decades by NOAA. Berkeley Earth’s methodology suffers from similar problems, but with additional peculiarities. The claim that there’s a net COOLING effect upon actual century-long records is rubbish.

I am curious if we are not understanding each other properly. See the post here by Zeke Hausfather:

http://wattsupwiththat.com/2015/10/01/is-there-evidence-of-frantic-researchers-adjusting-unsuitable-data-now-includes-july-data/#comment-2039768

His graphs clearly show two different things.

One is that if you take the average temperature before adjustments and compare that to temperatures after adjustments, the average afterwards is indeed colder over the entire record.

The second thing to note is that the most recent 15 years are warmer after the adjustments. So exactly what are we talking about when we talk about cooling?

I believe some are talking about the average temperature over the last 135 years while others are talking about the slope changes over the last 15 years.

This article deals with slope changes over the last 15 years and getting rid of the pause by NOAA and GISS.

“Mosh:

what exactly do you mean?”

I mean this.

if you look at ALL OF THE DATA, land and ocean

if you look at all the adjustments done to all of the data.

1. The NET EFFECT is to DECREASE the warming trend from the beginning of the records to today.

Nobody is adjusting data to make the warming appear worse than it is.

SST are adjusted down

SAT is adjusted up

the net effect is a downward adjustment.

take off the tin foil hats

OK Let us take Hadcrut3 and Hadcrut4 from 1850 to today. And let us take the slopes of each from 1850 to today as well as from 2000 to the latest. Here are the results:

Hadcrut3 from 1850: 0.0046

Hadcrut4 from 1850: 0.0048

Hadcrut3 from 2000: 0.0022

Hadcrut4 from 2000: 0.0081

See:

http://www.woodfortrees.org/plot/hadcrut3gl/from:1850/plot/hadcrut3gl/from:1850/trend/plot/hadcrut4gl/from:1850/plot/hadcrut4gl/from:1850/trend/plot/hadcrut3gl/from:2000/trend/plot/hadcrut4gl/from:2000/trend

Please explain the apparent contradiction.
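For a sense of scale, the four slopes quoted above can be compared with simple arithmetic. The sketch assumes the slopes are in degrees C per year (the thread does not state the units) and uses only the numbers given in the comment.

```python
# Slopes exactly as quoted above; units assumed to be degrees C per year.
slopes = {
    ("Hadcrut3", 1850): 0.0046,
    ("Hadcrut4", 1850): 0.0048,
    ("Hadcrut3", 2000): 0.0022,
    ("Hadcrut4", 2000): 0.0081,
}

for start in (1850, 2000):
    h3 = slopes[("Hadcrut3", start)]
    h4 = slopes[("Hadcrut4", start)]
    # Express each slope per century and report how much steeper version 4 is.
    print(f"from {start}: Hadcrut3 {h3 * 100:.2f} C/century, "
          f"Hadcrut4 {h4 * 100:.2f} C/century, ratio {h4 / h3:.2f}")

ratio_1850 = slopes[("Hadcrut4", 1850)] / slopes[("Hadcrut3", 1850)]
ratio_2000 = slopes[("Hadcrut4", 2000)] / slopes[("Hadcrut3", 2000)]
```

On these figures the two versions differ by a few percent over the full record but by a factor of more than three since 2000, which is the contrast the comment is pointing at.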

But Mosher says…

“If you use raw data then the global warming is worse.

The net effect of all adjustments to all records is to COOL the record

The two authors of this post don’t get it.

Adjustments COOL the record..

Cool the record. Period. [snip]”

Fine. Mosher has made that point here many times. It should be easy to produce a prior version of HadCrut or GISS that has a greater warming trend than the current version. After all, adjustments make the warming trend weaker.

I’ve asked for somebody to produce an example. Any example of an older version with a stronger warming signal. Nobody has. I’m still waiting. Frankly, I don’t know if it exists or not.

Werner:

No doubt there’s plenty of room for ambiguity and misunderstanding here. I am speaking strictly about the adjustments made by NOAA relative to original station data in nearly-intact, century-long records while producing GHCN versions 2 and 3. (This excludes very short, gap-riddled series that carry little useful information which some nevertheless insist upon including.) My experience is that, almost invariably, the trend of such records was progressively increased in the aggregate by the adjustments. IIRC, a few years ago WUWT commenter “smokey” posted numerous “flash” comparisons exhibiting precisely such changes in version 3.

It’s not entirely clear what data Zeke is using, but it’s obvious that, by any sensible use of the term, the past has been warmed–not cooled–by adjustments. This appears contrary to the trend-increasing effect produced by NOAA. Given the oscillatory spectral signature of surface temperatures, it is not very meaningful to think of “trends” much shorter than a century. Nevertheless, it’s sheer polemical cant to ignore UHI while using the apples and oranges of hybrid indices to claim that: “Nobody is adjusting data to make the warming appear worse than it is. SST are adjusted down, SAT is adjusted up; the net effect is a downward adjustment.” That’s the legerdemain of salesmen who are disinterested in carefully vetted data.

I have no problem believing the past was cooled. If the past is cooled, but the present is unchanged, then the slope or warming rate increases. However if the past is cooled and the most recent 15 years are warmed, then the warming trend is even faster.
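The distinction between the average level and the slope can be shown with a toy series. Everything here is synthetic and chosen only for illustration: a made-up steady rise of 0.005 C/year from 1880, a 0.1 C cooling applied before 2000, and an optional 0.05 C warming from 2000 onward.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def mean(values):
    return sum(values) / len(values)


# Synthetic "raw" record (assumption): steady 0.005 C/year rise from 1880.
years = list(range(1880, 2016))
raw = [0.005 * (y - 1880) for y in years]

# Case A: cool everything before 2000 by 0.1 C, leave 2000 onward unchanged.
cooled_past = [t - 0.1 if y < 2000 else t for y, t in zip(years, raw)]

# Case B: same cooling of the past, plus 0.05 C of warming from 2000 onward.
cooled_and_warmed = [t - 0.1 if y < 2000 else t + 0.05
                     for y, t in zip(years, raw)]

for name, series in [("raw", raw), ("A", cooled_past),
                     ("B", cooled_and_warmed)]:
    print(f"{name}: mean {mean(series):+.3f} C, "
          f"slope {ols_slope(years, series) * 100:.3f} C/century")
```

In both adjusted cases the average over the whole record is lower than the raw average, yet the fitted trend is steeper, so "cooling the record" and "increasing the warming rate" can be true at the same time.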

Werner:

But Zeke’s mysterious anomaly graph shows warming–not cooling–of the past due to adjustments.

True. I note it shows warming before 1945, cooling from 1945 to 1975, and warming from 1975 to date. Presumably, the carbon dioxide was not a significant factor before 1945. And if you wanted to make adjustments to show it was a factor after 1945, you would cool 1945 to 1975 and warm 1975 to date. Was this intentionally done?

Mary Brown says:

It should be easy to produce a prior version of HadCrut or GISS that has a greater warming trend than the current version. After all, adjustments make the warming trend weaker. I’ve asked for somebody to produce an example… of an older version with a stronger warming signal.

Good point.

But we know that about 97% of all adjustments result in greater apparent warming, not less. And certainly not cooling.

But Mosher and Zeke are insisting that the adjustments actually reduce the global warming trend.

If that is the case, why does GISS v3 show a greater warming trend than GISS v2 ?

If that is the case, why does Hadcrut v4 show a greater warming trend than Hadcrut v3 ?

There must be a simple explanation.

MB,

If there is, I haven’t seen it.

“And plotting BEST data I now see it begins in 1800 rather than 1850 like HADCRU. Where did you dig up those extra thermometers Steven Mosher, and how many were in the Southern Hemisphere in 1800?”

1. HADCRUT 4 doesn’t use all the extant data.

2. We use data from GHCN-D, GCOS.. wait where did I put that

http://berkeleyearth.org/source-files/

OOPS I put all 14 data sources on the web..

3. Our data starts in 1750

4. Southern hemisphere

http://berkeleyearth.lbl.gov/auto/Regional/TAVG/Figures/southern-hemisphere-TAVG-Counts.pdf

BUT WAIT there is more

historical archives are being digitized for the southern hemisphere

That new data will be “out of sample” so you can test the prediction we made with a few stations.

http://www.surfacetemperatures.org/databank/data-rescue-task-team

And

http://www.ncdc.noaa.gov/climate-information/research-programs/climate-database-modernization-program

http://www.omm.urv.cat/MEDARE/index.html

For my taste these programs are not getting enough funding

this one was really cool

http://www.met-acre.org/

“If that is the case, why does GISS v3 show a greater warming trend than GISS v2 ?

If that is the case, why does Hadcrut v4 show a greater warming trend than Hadcrut v3 ?

There must be a simple explanation.”

There is no such thing as GISS v3 or v2

Hadcrut 4 is warmer because they included more data.

Their algorithms were changed also. They no longer use the “value added” CRU adjustments.

Yup, legacy of Climategate. We wanted their data and code to see what their adjustments were.

Instead, they STOPPED doing the CRU adjustments. They now source data from NWS and don’t touch it. No more “value added” CRU adjustments..

So ya, CRU dropped their adjustment code and the record warmed.

Goodbye tin foil hats.

“There is no such thing as GISS v3 or v2”

This is what I am referring to.

http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts.txt

http://data.giss.nasa.gov/gistemp/tabledata_v2/GLB.Ts.txt

As they went from v2 to v3, the global warming trend got stronger.

I am looking for a similar type of data I can link to where the trend did not get stronger.

WordPress doesn’t allow replies to deeply indented comments. Re your post October 2, 2015 at 8:45 pm

The first recorded landing on Antarctica in the modern era is 1821 (no, I don’t want to get into arguments about Piri Reis map and “knowledge of the ancients”). Members of the crew of an American sealing ship set foot on shore for an hour or so https://archive.org/stream/voyageofhuronhun00stac/voyageofhuronhun00stac_djvu.txt on page [50]

It would be many more years before anybody actually got on top of the ice shelf which covers most of Antarctica. When does decent coverage of Antarctica really begin? Or for that matter the sea surface temperature of the southern oceans?

Werner:

Actually, none of the “global” index manufacturers deliberately cools the period 1945-1975, during which there was a natural deep dip in temperatures throughout the globe. On the contrary, they either conceal it by burying valid non-urban records in a plethora of UHI-afflicted airport stations newly created after WWII or by cooling the data prior to the war. The effect in both cases is to straighten the time-series of anomalies into one that shows only a post-war “hiatus” and a steeper “trend.” All of this is done in the name of “homogenization,” whereby the data are deliberately altered to conform to the aggregate properties of the entire UHI-corrupted data base. Black barbers have a term for such cosmetic alterations; they call it “conking.”

Granted, it depends on the data set. I was talking about:

http://wattsupwiththat.com/2015/10/01/is-there-evidence-of-frantic-researchers-adjusting-unsuitable-data-now-includes-july-data/#comment-2039768

where the black line is below the blue line from 1945 to 1975.

Werner:

Compared to truly egregious adjustments, the discrepancy you point to is minuscule.

True. I should have pointed this out:

It is found here:

http://wattsupwiththat.com/2015/07/09/noaancei-temperature-anomaly-adjustments-since-2010-pray-they-dont-alter-it-any-further/