By Christopher Monckton of Brenchley

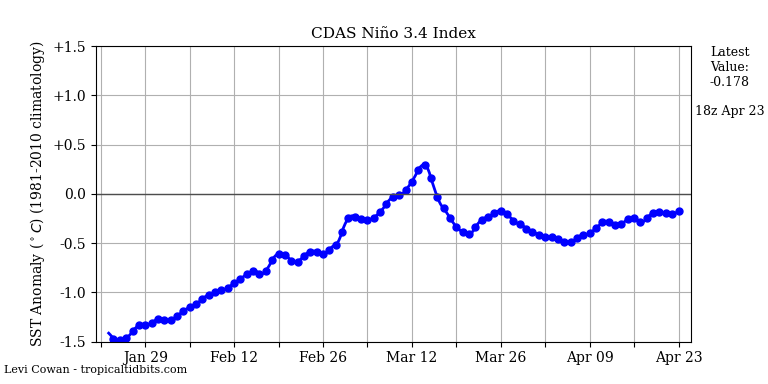

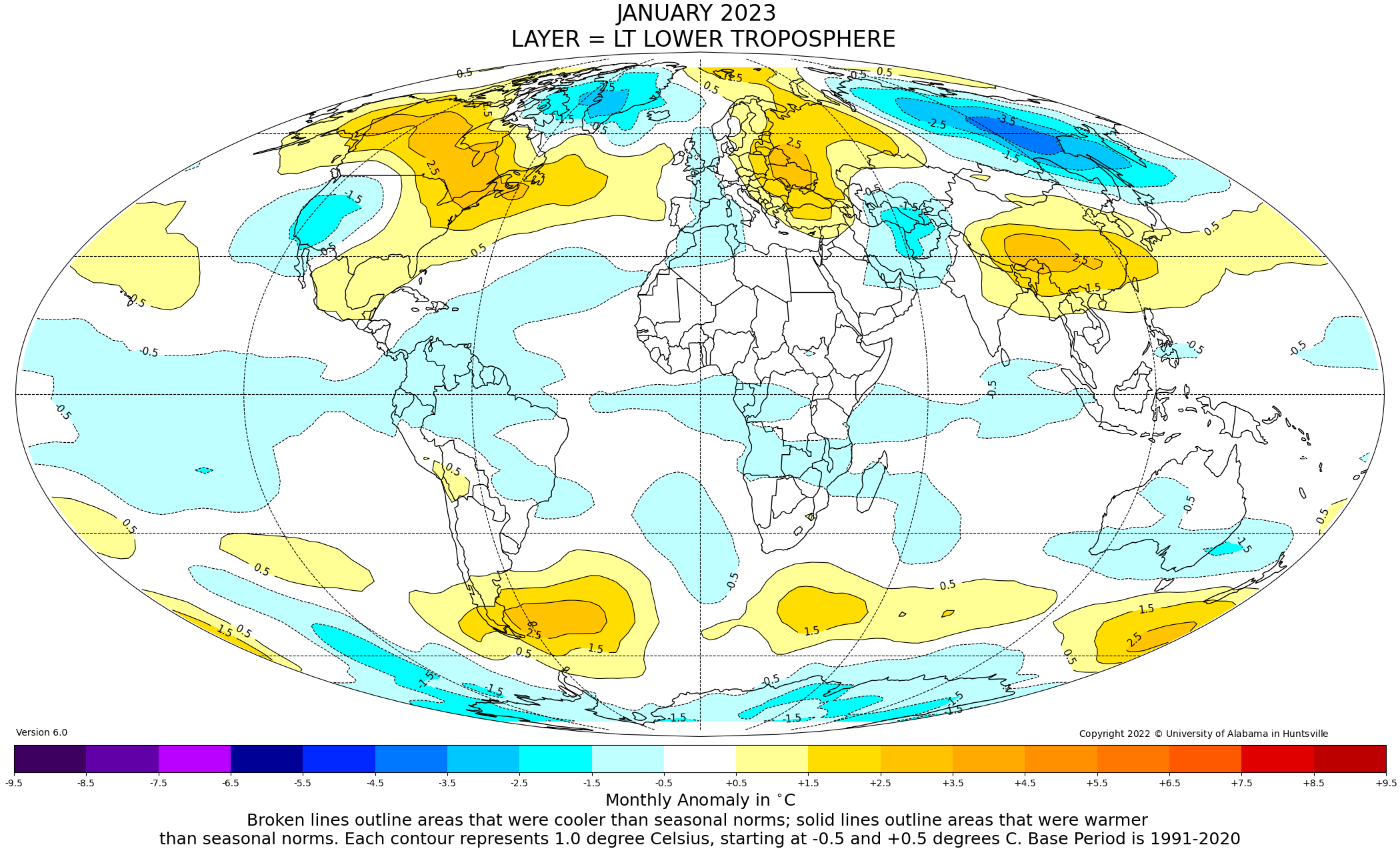

As the third successive year of La Niña settles into its stride, the New Pause has lengthened by another month (and very nearly by two months). There has been no trend in the UAH global mean lower-troposphere temperature anomalies since September 2014: 8 years 5 months and counting.

As always, the New Pause is not a prediction: it is a measurement. It represents the farthest back one can go using the world’s most reliable global mean temperature dataset without finding a warming trend.
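The search described above can be sketched in a few lines of Python. This is a minimal sketch, not the author's own code. It assumes that "no warming trend" means an ordinary-least-squares (OLS) slope of zero or less, fitted to monthly anomalies listed oldest first. It finds the earliest start month from which the trend to the latest month is not positive. The function names are hypothetical. Multiplying the per-month slope by 120 gives the trend in K decade⁻¹.

```python
from typing import Sequence


def ols_slope(ys: Sequence[float]) -> float:
    """OLS slope of ys against x = 0, 1, 2, ... (units per step, e.g. K per month)."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points")
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxy = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(ys))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def trend_per_decade(monthly: Sequence[float]) -> float:
    """Per-month OLS slope scaled to a per-decade trend (120 months per decade)."""
    return ols_slope(monthly) * 120


def pause_length(monthly: Sequence[float], min_months: int = 2) -> int:
    """Months in the longest window ending at the latest month with an OLS slope <= 0.

    Start months are tried from the earliest forward, so the first window with
    no warming trend is the one that reaches farthest back. Returns 0 when
    every window ending at the latest month shows warming.
    """
    n = len(monthly)
    for start in range(n - min_months + 1):
        if ols_slope(monthly[start:]) <= 0:
            return n - start
    return 0


if __name__ == "__main__":
    # Hypothetical toy series: steady warming for 8 months, then 6 flat months.
    toy = [0.25 * i for i in range(8)] + [2.0] * 6
    print(pause_length(toy))  # the 6 flat months form the pause
```

Scanning from the oldest month forward means the first qualifying window is automatically the longest one, so no further search is needed once it is found.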

The sheer frequency and length of these Pauses provide a graphic demonstration, readily understandable to all, that It’s Not Worse Than We Thought – that global warming is slow, small, harmless and, on the evidence to date at any rate, strongly net-beneficial.

Again as always, here is the full UAH monthly-anomalies dataset since it began in December 1978. The uptrend remains steady at 0.134 K decade⁻¹.

The gentle warming of recent decades, during which nearly all of our influence on global temperature has arisen, is a very long way below what was originally predicted – and still is predicted.

In IPCC (1990), on business-as-usual emissions Scenario A, which is far closer to outturn than B, C or D, predicted warming to 2100 was 0.3 [0.2, 0.5] K decade⁻¹, implying 3 [2, 5] K ECS, just as IPCC (2021) predicts. Yet in the 33 years since 1990 the real-world warming rate has been only 0.137 K decade⁻¹, showing practically no acceleration compared with the 0.134 K decade⁻¹ over the whole 44-year period since 1978.

IPCC’s midrange decadal-warming prediction was thus excessive by 0.16 [0.06, 0.36] K decade⁻¹, or 120% [50%, 260%].
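The arithmetic behind these figures can be checked in a few lines of Python, using only the rates stated in the text. Unrounded, the overshoot is about 0.163 [0.063, 0.363] K decade⁻¹, or about 119% [46%, 265%] of the observed 0.137 K decade⁻¹, which the text rounds to the values given. The helper name is illustrative.

```python
def overshoot(predicted: float, observed: float) -> tuple[float, float]:
    """Excess of a predicted warming rate over the observed rate.

    Returns (excess in K/decade, excess as a percentage of the observed rate).
    """
    excess = predicted - observed
    return excess, 100.0 * excess / observed


OBSERVED = 0.137  # K/decade, real-world warming rate since 1990 as stated in the text

# IPCC (1990) Scenario A central estimate and range, K/decade, as stated in the text
for label, predicted in (("central", 0.3), ("low", 0.2), ("high", 0.5)):
    excess, pct = overshoot(predicted, OBSERVED)
    print(f"{label}: {excess:.3f} K/decade, {pct:.0f}%")
```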

Why, then, the mounting hysteria – in Western nations only – about the imagined and (so far, at any rate) imaginary threat of global warming rapid enough to be catastrophic?

How long is the cooling trend, 6-7 thousand years?

Make that 50 million years. Ahh, the Early Eocene days were warm days.

You don’t get weather like that any more. The planet is going to the dogs.

Indeed, our grandchildren will not know what ‘climate change’ was.

Vuk,

For Australia, the Monckton method shows a negative temperature trend of 10 years 9 months, starting in March 2012.

If I wanted to spin a story, I could assert that no Aussie school child under 11 years old has felt any warming effect while being taught that global warming is an existential crisis.

Wake up, educators.

Geoff S

http://www.geoffstuff.com/uahfeb2023.jpg

The US 48 state average temperature trend using NOAA’s USCRN weather station system has been relatively flat since 2005.

Global Temperature: | Watts Up With That?

USCRN affects 330 million people. While the global average temperature affects no one, because no one actually lives in the global average temperature!

We could say no US 48 state resident has experienced more than a tiny amount of global warming since 2005 — and that’s 18 years. That beats your 10 years and 9 months, using an official government temperature organization’s own numbers too!

RG said: “The US 48 state average temperature trend using NOAA’s USCRN weather station system has been relatively flat since 2005.”

The trend is +0.58 F/decade (+0.32 C/decade). I invite you to download the data and see for yourself.

RG said: “That beats your 10 years and 9 months, using an official government temperature organization’s own numbers too!”

Using the Monckton Method the USCRN pause is 0 months. That is a lot less than 10 years and 9 months.

I wrote that the US average temperature trend was relatively flat. I did not write that it was flat.

The trend appears to be flat since 2011. That’s from an eyeball view of the chart at the link below. I revise my claim to 11 years, rather than 18 years, and that still beats the Australians.

Those 11 years of flat temperatures included the largest 11-year increase of global CO2 emissions in the history of the planet. And you can store your statistics where the sun don’t shine, bedofwax.

Global Temperature: | Watts Up With That?

Ignoring NOAA, here in Michigan there has been slight warming in the winters since the 1970s. I noticed mainly because we have lived in the same home since 1987, and four miles south in an apartment for 10 years before that. If we had moved 20 miles north in those years, I might not have noticed.

I don’t need any government scientists to tell me how much warming I have personally experienced where I live.

Especially not people from NOAA, whom I do not trust. They have two different weather station systems with very different weather station siting. But somehow, magically, they both produce almost the same adjusted data. That is not by chance; it is by science fraud, in my opinion.

What is the range of C/decade that would make something relatively flat?

relatively flat? maybe your head is relatively pointed

Here comes Masher the fool with a brilliant, not-funny-in-any-way put-down.

USCRN affects 330 million people. While the global average temperature affects no one, because no one actually lives in the global average temperature!

you win stupidest argument ever.

You would be an expert on stupid, Masher.

The average temperature is not a real temperature, it is a statistic. A statistic is not an actual temperature.

I tried to explain this simply so even a 12-year-old child could understand. Go out and find a 12-year-old child to explain it to you.

RG said: “The average temperature is not a real temperature, it is a statistic.”

The Tmax and Tmin you see reported for each station are both 1-minute averages. Do you think they aren’t real?

Because Tavg IS a statistic. What is the variance of that distribution? It is supposed to represent the midpoint of Tmin and Tmax.

Daytime temps resemble a sine curve, yet nighttime temps are an exponential decay. Sometime in the late afternoon, the sun’s insolation energy becomes less than the earth’s radiation and the decay begins. Do you think Tavg is a true average temp, or is it simply a statistic describing the midpoint between max and min?
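The difference between the midpoint (Tmax + Tmin)/2 and a true time-average can be illustrated with a toy diurnal cycle shaped as described above: a sine-like daytime hump and an exponential-style night-time decay. Every parameter here is hypothetical, and the curve is not fitted to any station. Sampling once per minute merely stands in for the 1-minute values mentioned in this exchange; it is not a model of USCRN processing.

```python
import math

# Hypothetical toy parameters, not taken from any real station.
T_LOW = 10.0        # pre-dawn minimum, deg C
DAY_HUMP = 12.0     # amplitude of the daytime sine hump, deg C
DUSK_EXCESS = 4.0   # temperature at sunset above T_LOW, deg C
TAU_H = 3.0         # night-time e-folding time, hours


def temp_at(t_h: float) -> float:
    """Toy temperature t_h hours after sunrise (0 <= t_h < 24), continuous at dawn and dusk."""
    if t_h < 12.0:
        # Daytime: linear ramp up to the dusk value plus a sine hump that vanishes at 0 h and 12 h.
        return T_LOW + DUSK_EXCESS * t_h / 12.0 + DAY_HUMP * math.sin(math.pi * t_h / 12.0)
    # Night-time: exponential decay rescaled to run from the dusk value (frac = 1)
    # back down to T_LOW (frac = 0) at the next sunrise.
    s = t_h - 12.0
    floor = math.exp(-12.0 / TAU_H)
    frac = (math.exp(-s / TAU_H) - floor) / (1.0 - floor)
    return T_LOW + DUSK_EXCESS * frac


samples = [temp_at(m / 60.0) for m in range(24 * 60)]  # one value per minute
t_max, t_min = max(samples), min(samples)
midpoint = (t_max + t_min) / 2           # the conventional "Tavg"
true_mean = sum(samples) / len(samples)  # time-average over the whole day

print(f"Tmax={t_max:.2f}  Tmin={t_min:.2f}  midpoint={midpoint:.2f}  time-mean={true_mean:.2f}")
```

With these made-up numbers the midpoint comes out roughly 1.75 °C above the time-average. The afternoon peak is brief, while the night lingers near its minimum. A differently shaped curve would give a different gap.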

Tmin and Tmax are averages too. And you’ve already said an average of an intensive property isn’t real. I already know your position on the matter because you’ve made it abundantly clear. I’m asking how deep Richard Greene’s conviction goes.

Again, you have no idea why the measurements are averaged. You are not a physical scientist and nothing in statistics will tell you why a 1 minute average is used.

Do you want to know why? My guess is that you really don’t care!

So you think the average temperature is Tmax and Tmin?

ROFL!!

Why do you think the “average” over 1 minute is used? My guess is that you have not one single clue as to why!

https://www.climate.gov/news-features/understanding-climate/climate-change-global-temperature

Earth’s temperature has risen by an average of 0.14° Fahrenheit (0.08° Celsius) per decade since 1880, or about 2° F in total.

The rate of warming since 1981 is more than twice as fast: 0.32° F (0.18° C) per decade.

2022 was the sixth-warmest year on record based on NOAA’s temperature data.

The 2022 surface temperature was 1.55 °F (0.86 °C) warmer than the 20th-century average of 57.0 °F (13.9 °C) and 1.90 °F (1.06 °C) warmer than the pre-industrial period (1880-1900).

The 10 warmest years in the historical record have all occurred since 2010.
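The paired Fahrenheit and Celsius figures in the quoted NOAA text can be checked with a short sketch. Temperature differences, anomalies and trends convert with the 5/9 factor alone. Absolute temperatures also need the 32 °F offset removed. The function names are illustrative.

```python
def f_to_c_absolute(temp_f: float) -> float:
    """Absolute temperature: remove the 32 degree offset, then scale by 5/9."""
    return (temp_f - 32.0) * 5.0 / 9.0


def f_to_c_difference(delta_f: float) -> float:
    """Temperature difference, anomaly, or trend: scale by 5/9 only (no offset)."""
    return delta_f * 5.0 / 9.0


# Figures quoted from the climate.gov page above.
print(round(f_to_c_difference(0.14), 2))  # per-decade trend since 1880
print(round(f_to_c_difference(0.32), 2))  # per-decade trend since 1981
print(round(f_to_c_difference(1.55), 2))  # 2022 vs 20th-century average
print(round(f_to_c_absolute(57.0), 1))    # 20th-century average itself
print(round(f_to_c_difference(1.90), 2))  # 2022 vs 1880-1900
```

Each printed value matches the Celsius figure given in the quote. Applying the 32-degree offset to an anomaly would give a nonsensical large negative number.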

UAH temperatures dropped below the zero line in January. If you ignore “trends”, and just trace your finger from the far right to the far left of the UAH graph, you discover our mean temperatures world-wide were the same as they were in May of 1980. Yes, that was the top of a peak and current temperatures are down at the bottom of the dip, but still, they are the same. In essence, we are back where we were, and we have spent trillions making much ado about nothing.

If the argument is that selecting the top of a local peak as the start is valid, then surely the reverse is valid too. That gives us (-0.04 C - (-0.67 C)) / 461 months * 120 months/decade = +0.16 C/decade.
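The calculation above is a two-point (endpoint) slope rather than a least-squares trend, so it depends entirely on which two months are picked. A minimal sketch with the stated inputs follows; evaluated exactly it gives about +0.164 C/decade. The function name is illustrative.

```python
def endpoint_trend_per_decade(start_anom: float, end_anom: float, months: int) -> float:
    """Slope between two single monthly anomalies, scaled to units per decade.

    Unlike an OLS trend, this uses only the two endpoints, so choosing a peak
    or a trough as the start month changes the answer directly.
    """
    return (end_anom - start_anom) / months * 120  # 120 months per decade


# Inputs as stated in the comment: a -0.67 C trough as the start, -0.04 C at the end, 461 months apart.
print(f"{endpoint_trend_per_decade(-0.67, -0.04, 461):+.3f} C/decade")
```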

Bring back the dinosaurs!

Those were the days.

We get deer in our yard almost every day

Up to 14 at one time. After 36 years of watching them eat everything green, we could use some new entertainment. Dinosaurs would be exciting.

I have 40-50 at a time at my bird feeders. It is not cheap feeding them. Just before the cold comes and then afterwards when the ground is snow covered, they really hit it hard!

I have an 8-lb squirrel-proof feeder and two seed block cages I keep stocked. When the weather gets hard they will empty that feeder in two days, and the seed blocks will be gone in 1 day.

I also cast feed on the ground for the ground feeders like the mourning doves and the cowbirds.

Like I said, it ain’t cheap, and costs me about $30.00 a week to do it, but it is worth every dime.

Good job

We have a four-cake suet feeder and one thistle feeder. We also have a heated bird bath about 10 feet from our living room window. Entertainment for our indoor cat too.

We once had five deer lined up to drink some water from the bird bath. Two males got in a fight to see who would drink first. The males always go before the females.

I once helped break up a summer fight between two male deer whose antlers were locked together. They were tearing up the yard. Afterwards, I realized that was risky and I should have stayed away.

I forgot to mention two hummingbird feeders in warmer weather that the wife fills with freshly made sugar water every few days.

We’ve also had ground hogs, skunks, raccoons, opossum, rabbits, squirrels and hawks live in a nest next door — we used to have a lot of chipmunks but they apparently make good hawk food.

I love the animals but always chase pesky kids off my lawn. I think old guys are supposed to do that. It’s in the Constitution. … I could live without the skunks too. … Killed the moles with poison.

I had a kangaroo in my driveway once, and another on a walk near home late January. I live in an urban area.

No kangaroos here in Michigan. But have had one coyote, two foxes and many Jehovah’s Witnesses visiting in past decades.

We have whitetails come through every once in a while. We’re a stopping place for a raccoon family as they make their nightly rounds. Red fox, skunks, etc. come through every once in a while.

The critters not welcome are coyotes and moles. Coyotes because they will go after the dog when we let her out on her lead. And moles, well the reason is obvious.

Had a pileated woodpecker stop by in January. And then a red-tailed hawk came by trying to use our bird feeders as a hawk feeder. He was a young one and soon moved off to look for a better hunting ground.

Since I am coming and going at all hours for my job, I have to watch for deer on the road coming to my house. The road crosses two creeks, and it is along that bottomland that the deer like to travel.

Dinosaur watching is a popular activity, with life lists.

My two seed block cages hang from a double shepherd’s hook outside a dining room window. As Sherry and I have our coffee, we can watch them.

I believe the recent 2.8 million years have been the coldest of the last 200 million years including today. Maybe that’s the reason we call it an Ice Age. We are so lucky to live in an interglacial moment when places like Canada or Greta’s homeland are not under a mile of ice.


It’s a modern millenarian apocalyptic secular movement. They flourish particularly around the turn of every millennium.

The human mind is too complex and has quirks that evolution hasn’t been able to iron out.

Ja. It is also what I said….

https://breadonthewater.co.za/2021/03/04/the-1000-year-eddy-cycle/

The primitive human brain is hard wired to respond to fear with heightened attention. It then looks for correlations to confirm there is a threat creating a false impression through narrow focus. Those who desire control over others know this can be used to increase their power.

The evolutionary escape is to understand the limitations and projections of the conditioned mind and examine how cause and effect brings everything into being.

It is easy to see through the fear mongering when you understand its purpose is to manipulate and control you.

I’ve noticed this for several decades now. Man always needs something to worry about. When I grew up, it was the cold war and nuclear annihilation. For a short period, it was terrorism. Big brother has tried to make global warming front of mind.

Now the world is starting to cool again (my study of the matter is that the temperature varies from warmer to colder in multidecade cycles, moderated by our huge heat sink, also known as the oceans).

Look back in history and you will always find something that man was told to fear.

As for global warming, I like to point to the earth’s warming at the end of the Younger Dryas period (~10C temp rise in a decade) when people spew the BS that the world’s temperature has never risen this fast before. The follow-up question is: do you think that rise was due to early man discovering how to burn coal for warmth?

I would not argue a “never before”, never is just too big a statement. However: How sure are you that you know how warm the Younger Dryas period was?

How do we know how warm the Younger Dryas period was? Warm??? Mate, it was perishing cold. Young Mr Dryas wrote it all down, and it’s now carefully reported in Wikipedia. They cite all the original Mr D manuscripts.

Or maybe it was Young Ms Dryas? That bit got lost.

How sure are you that you know how warm the Younger Dryas period was?

Even when it’s cold, the question “how warm was it?” means “what was the temperature?”

Oops that was meant to be a reply to Steven Mosher’s reply to me.

The fastest warming ever is a primary lie the media sows to scare the uninformed. I don’t think that was zzz’s claim.

There are more than a few scientific papers on this subject. I’ve read papers dated from the late 90s to present time acknowledging this rapid heating.

As you know, NOAA is pushing the CAGW agenda, yet here’s a link from them on the Younger Dryas period. https://www.ncei.noaa.gov/sites/default/files/2021-11/3%20The%20Younger%20Dryas%20-FINAL%20NOV%20%281%29.pdf

Note this in paragraph one: The end of the Younger Dryas, about 11,500 years ago, was particularly abrupt. In Greenland, temperatures rose 10°C (18°F) in a decade (Alley 2000).

If you dig through other papers on the subject, you’ll find that this temperature increase has been noted worldwide in that time period.

So unless one says that this rapid temperature increase was due to man, my point here is that the climate can change significantly in short timespans due to natural causes.

“ the climate can change significantly in short timespans due to natural causes.” Common Sense. Not Taught. Not ‘Learned’.

Every time I see the word “unprecedented” in the press, as they lament so-called warming-caused events, I ask myself a similar question.

“the BS that the world’s temperature has never risen this fast before.”

That claim came from taking the average increase in temperature over thousands of years from proxies and comparing it to the modern high resolution instrumental record. This is scientific fraud. The two data sets are like comparing apples to frogs.
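The resolution point can be illustrated with a toy calculation. A hypothetical 10 °C rise completed in a decade is viewed twice: once at annual resolution, and once after averaging into 200-year bins. The 200-year bin width is chosen purely for illustration and is not tied to any particular proxy. The steepest rate visible in the binned series is far lower than the true annual rate. This is one reason rates from coarse proxies and from the instrumental record are not directly comparable.

```python
def bin_means(values: list[float], width: int) -> list[float]:
    """Average consecutive, non-overlapping blocks of `width` values (a crude stand-in for proxy smoothing)."""
    return [sum(values[i:i + width]) / width for i in range(0, len(values) - width + 1, width)]


def max_rate(series: list[float], step_years: float) -> float:
    """Largest increase between neighbouring points, in deg C per year."""
    return max((b - a) / step_years for a, b in zip(series, series[1:]))


# Hypothetical annual series: flat at 0 C, a 10 C rise over years 0-10, then flat at 10 C.
years = range(-1000, 1000)
annual = [float(min(max(y, 0), 10)) for y in years]

BIN = 200  # hypothetical proxy resolution, years
binned = bin_means(annual, BIN)

print(f"annual resolution: {max_rate(annual, 1) * 10:.2f} C/decade")
print(f"{BIN}-year bins:    {max_rate(binned, BIN) * 10:.2f} C/decade")
```

Here the binned series shows at most about 0.49 C/decade, even though the underlying change ran at 10 C/decade.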

Exactly right. This “fast rate of temperature rise” would have happened countless millions of times. Scientists are to blame for not speaking out.

Hmmm. I see your point. Or, I’d see your point IF the modern instrumental record was unaltered. But it’s been altered so many times that it is no longer very accurate. Dr James Hansen not only altered the entire historical temperature database but he also did not save the unaltered data. And then there was the Australian BOM, which altered their historical data with Acorn 2.0. https://wattsupwiththat.com/2019/02/22/changes-to-darwins-climate-history-are-not-logical/

I don’t have much faith in the modern historical temperature record, as it’s been heavily altered by people with a bias toward global warming.

My best bet for accuracy would be satellite. There can still be questions of bias in the tabulation. With millions of data points (I assume), the tiniest rounding errors could add up significantly, and even at that resolution the data cover only a small fraction of the planet. If we can panic over 1 or 2 degrees, the planet has much bigger threats to offer that can make the pain even more exquisite.

Turkey.

Creating general fear is a premier political power play. Political power is always in demand by someone, so creating fear to further that power is always in play somewhere.

“When I grew up, it was the cold war and nuclear annihilation”.

Me too, in the 1960s. But I stopped worrying when we were told by our teachers that we would be safe hiding under our desks during a nuclear attack.

I got in a verbal fight with a grade school teacher when we were asked to participate in an under-the-desk nuclear attack exercise. I had new dark-colored pants on and didn’t want to be lying on a dirty floor. The start of my career as a juvenile delinquent.

I doubt if hiding under a desk will save children from climate change.

Nice to remember that teachers were always so intelligent, even before the era of leftist brainwashing in schools.

LOL, yes. Same here on the nuke drills, except I wasn’t smart enough to say that hiding under a desk wasn’t going to save anyone from a nuke blast.

But I lived in an area that would have been a primary target – the Washington DC suburbs inside the beltway.

It didn’t matter where you lived. Someone would invent a reason that something near you would be a target.

Nah… the ‘nukers’ would aim for something of use.

At primary school, I had more fear & dread about Sister Mary Constanza and her cane than I did about a nuclear conflagration.

What if they wore a mask as well?

today people fear a green bogeyman that will force them to drive EVs, and they fear not having copper.

just read the posts here for loads of fear mongering

Got yer battery car yet, mosh?

no car.

mosh: i think man will go to the moon

wuwt: oh ya, wheres your rocket.

i come here to see how stupid arguments can get, and im never disappointed by you guys

Hypocrite.

Steven Mosher said: “i come here to see how stupid arguments can get, and im never disappointed by you guys”

Here are some of my favorite arguments people have tried to convince me of.

~ The law of conservation of energy holds only after a period of time has elapsed.

~ The law of conservation of mass does not apply to the carbon budget.

~ It is not valid to perform arithmetic operations on intensive properties like temperature.

~ Ocean water below the surface does not emit radiation.

~ The Stefan-Boltzmann Law only applies if a body is in equilibrium with its surroundings.

~ Quantum Mechanics is completely deterministic.

~ If you utilize statistical inference then you aren’t doing science.

~ If you make predictions then you aren’t doing science.

~ Kirchhoff’s Law prohibits polyatomic gas species from impeding the transmission of energy.

~ A sum (Σ[x]) is the same thing as an average (Σ[x]/n)

~ A quotient (/) is the same thing as a sum (+).

~ Computer algebra systems like MATLAB and Mathematica output the wrong answers when given the equations from the GUM.

~ The NIST uncertainty machine does not compute uncertainty correctly.

~ Category 4 Hurricane Ian was not even a hurricane because this one really small wind observation hundreds of kilometers from the radius of maximum winds was less than hurricane force.

And the list goes on and on…

But I don’t come here to see absurd arguments. I come here to learn first and because I still (perhaps naively) think that I can convince people of scientific truths using the consilience of evidence.

My point was not that we should fear not having copper. Sorry if I was unclear.

It was that copper will become much more expensive in the future as the ores being mined become progressively less rich in copper. And as a result, it is foolish to design an energy system dependent on copper.

Regards,

w.

Copper has been expensive enough that thieves will risk getting fried to steal it. Been going on for a long time now.

People under the influence of powerful drugs aren’t exactly thinking clearly. They steal the copper wire, or try to, because they are addicted to Chinese Fentanyl. Stealing energized wire could be done, but it requires specialized training, expensive equipment and huge <<redacted>>.

My point was not that we should fear not having coal. Sorry if I was unclear.

It was that coal will become much more expensive in the future as the ores being mined become progressively less rich. And as a result, it is foolish to design an energy system dependent on coal.

Regards,

Mosh, first, we have ~130 years of proven coal reserves and about 20 years of proven copper reserves. Please tell us which one will increase in price faster?

Second, we are being forced by governmental edicts and subsidies to shift to a copper based energy system. If it were a good idea the shift would occur by itself.

But I suspect you know all of that, and are just trying to stir the pot …

My best regards to you, get well, stay well,

w.

Coal is just something to burn to heat water to make steam to use as a working fluid for the steam turbines which drive the generators.

There are alternative heat sources.

An engineer friend from Uni used to consult on converting coal fired boilers to gas when coal was relatively expensive, and converting gas to coal when gas was relatively expensive.

NO! What we fear is overbearing government working for their own and other agendas that have a negative effect on the liberty and welfare of their citizens, and the boneheads that support those totalitarians.

The Green Bogeyman is real. That’s how we got in this screwed-up position in the first place.

NetZero, the Green/Authoritarian Delusion.

real? what screwed up position

Look around you.

Good Grief!

Yes, politics, especially left wing, as usual. H.L. Mencken said:

The whole aim of practical politics is to keep the populace alarmed — and hence clamorous to be led to safety — by menacing it with an endless series of hobgoblins, all of them imaginary.

Ah, last century we had ‘flappers’ … This century they are flapping like a big girl’s blouse…

Leftism (anyone who wants more government power) uses fear to create a demand for more “government powers”. This has been a strategy for many centuries. Not just once in a while, but at all times.

And this is not secular. Religions use tall tales to create fear (in the opinion of this long-term atheist) of God and hell to control people. Also, the claim of heaven. It’s all nonsense to me, just like the fear of the future climate.

I see little difference between people who fear God and hell, versus other people who fear climate change. Unproven fears are irrational. At least the religions have some good commandments. The Climate Howlers don’t even have that. They could not care less about actual air, water and land pollution in Asia, for one example. Instead, they falsely define the staff of life, CO2, as pollution. The climate change secular religion is of no value to mankind.

Honest Climate Science and Energy

In addition, the Golden Rule is one ‘commandment’, and it covers any and all. It appears to be as difficult to apply that ONE to one’s life as to apply any few of the various others.

Yes!

Thank you

As of this month we have cooled 0.7C the last 7 years. Hard to keep the existential crisis narrative going with this data but that won’t stop the media from pushing the irrational fear mongering incessantly.

The trend is at -0.24 C / decade since 2016. If this continued, the cooling would get hard to ignore.

The trend here is +6 deg C PER DAY this weekend. Now that’s really hard to ignore.

That trend is because of a rare triple La Nina. The next El Nino will change the slope dramatically.

From what I’ve heard based off the amount of warm water volume, the upcoming El Niño will present a 2009/2010 like situation.

We will see. I expect the trend to increase over the next few months due to this La Nina. I’m hoping the next ENSO phase is neutral as that will give us a better feeling for where we are. Of course, the big change will occur when the AMO goes negative. Coming soon to a planet near you.

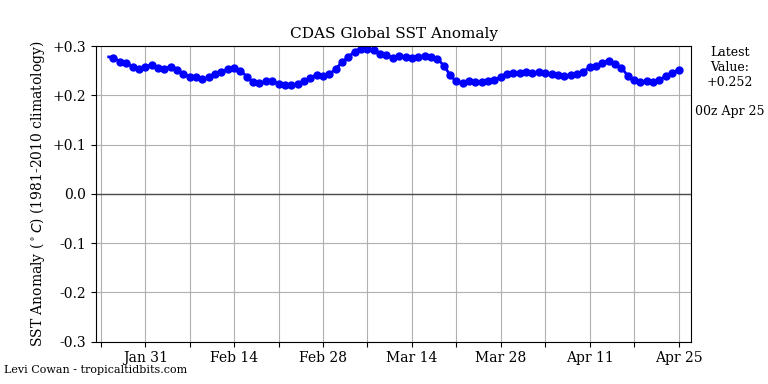

I don’t think that we will see cooling in the long run until the SSTs in the oceans drop. And that isn’t happening yet.

That would be a good thing.

At the last AMO transition there was a reduction in cloudiness. If the reverse happens in the coming AMO transition, this should cause ocean cooling.

Any cooling is counter to the claims of the CO₂-addled.

They already choke when CO₂ levels continue to increase while temperatures dive.

That’s when they start claiming CO₂ causes every kind of weather: cold, hot, rainy, arid, stormy, windy, calm, yada yada yada.

Within a few years, they’re likely to flip the narrative back to global cooling. If you look through the decades with news articles, you’ll find this alternating narrative of ‘the world’s on fire’ to ‘an ice age is coming’ is a common theme that runs for a few decades and then flips to the other fear.

I had a link to a Canadian article about this, but lost it a few years ago. The article went back to the early 1900s where it was cooling, then warming, then cooling, now warming.

The cooling and warming predictions pre-1970s were usually from individual scientist crackpots. The 1970s cooling warnings were bigger, but still a small minority of all scientists. The current global warming warnings are a 59% majority, by a libertarian survey last year — 59% believe in imaginary CAGW. And at least 99.9% believe in real AGW of some amount, no matter how small. But climate change means scary CAGW, not harmless AGW.

Richard, that’s likely due to the lack of climatologists and grant money prior to the 1980s. Now, the amount of money spent on the subject dwarfs previous decades. And that grant money delivers the study results desired by government.

Does CO2 warm the earth? Maybe. My opinion is that it does warm the earth but its impact is de minimis. There are simply too many variables to isolate the impact of a single input into the world’s climate. I think that the earth is warmer today than when I grew up in the 60s, but I attribute that to natural temperature variation on a multidecade heating/cooling cycle for the earth.

We can definitively say that CO2 levels have risen annually since it was first regularly measured (1958), yet the world’s temperature has fluctuated in that time. The correlation is not as strong as it’s hyped to be.

“And at least 99.9% believe in real AGW”

What is this “believe” nonsense? They either know or they don’t know.

They know CO2 is a greenhouse gas

They know air pollution blocks sunlight

They know a warmer troposphere holds more water vapor

They know human adjustments to raw temperature data, and infilling, could account for a significant portion of the claimed global warming in the past 150 years.

What else do scientists need to know to believe in AGW?

RG said: “They know human adjustments to raw temperature data, and infilling, could account for a significant portion of the claimed global warming in the past 150 years.”

It’s the opposite. Adjustments reduce the amount of warming relative to the raw data over the last 150 years. Hausfather provides a brief summary of how the adjustments affect the long term trend.

bgwxyz reiterates his approval of fraudulent data mannipulations.

BedOfWax is a liar or a fool, or both.

The 1940 to 1975 period had added warming because the global cooling with CO2 rising reported in 1975 was inconvenient for the CO2 is evil narrative that fools like BedOfWax believe in. That was science fraud, and you know it.

In the US the mid-1930s were warmer than even 1998 with that huge El Nino heat release, but not anymore.

Zeke H. is a deceiver.

His “infamous” argument that climate models are accurate used TCS and RCP 4.5, which the IPCC never publicizes, rather than the popular ECS and RCP 8.5, which the IPCC does publicize and which very likely overpredict global warming.

Zeke H.: sleight of hand that you warmunists loved.

Anyone who thinks there was a real global average temperature before the 1940s, with so few Southern Hemisphere measurements, is a liar. Pre-1900 is mainly infilling, not data. There is still a lot of infilling today; you have no idea how much because you don’t care.

The chart you presented is bogus: it completely ignores the huge data changes for the 1940 to 1975 period, based on what was reported in 1975 versus what is reported today.

Honest Climate Science and Energy: NOAA US average temperature from 1920 to 2020, Raw Data vs. Adjusted Data presented to the public (science fraud)

Honest Climate Science and Energy: Pre-1980 global average temperature “revisions” from 2000 to 2017 (science fraud)

Honest Climate Science and Energy: Click on READ MORE and watch US climate history get changed to better support the CO2 is evil narrative

Honest Climate Science and Energy: Global Average Temperature History Keeps Changing — National Geographic in 1976 versus NASA-GISS in 2022

Honest Climate Science and Energy: Watch climate history change to better support the false CO2 is evil — it’s magic science fraud

The graph includes all adjustments. This is probably the source of confusion. If you are used to getting your information from contrarian bloggers, then you probably were only aware of the adjustments that bump up the temperature anomalies later in the period and had no idea about the more significant bump up earlier in the period. I also recommend reading about the details of the adjustments. They are important because there are subtle implications when deciding which of the seemingly equivalent approaches of bumping up before the changepoint or nudging them down after the changepoint to correct the changepoint bias.

BTW…all of the adjustments and infilling in the traditional surface datasets are done in UAH as well. In fact, UAH not only performs the same adjustments, but they do so more aggressively and then perform other adjustments that the surface datasets don’t even have to worry about. Most people are not aware of this.

More fraud, par for the course for CAGW kooks.

bgwxyz: “UAH does data mannipulations, so it must be ok!”

Do you expect to be taken seriously after making statements like this?

“contrarian bloggers” — ah, poor baby

“They are important because there are subtle implications when deciding which of the seemingly equivalent approaches of bumping up before the changepoint or nudging them down after the changepoint to correct the changepoint bias.“

No, it is all unscientific fraud, and you are a disgrace to the profession.

karlomonte said: “bgwxyz: “UAH does data mannipulations, so it must be ok!””

Can you post a link to the post in which those exact words you have in double quotes appear?

It’s a paraphrase, you clown.

The global warming religion posits that warming since 1950 is primarily anthropogenic and that it is somehow remarkably different from all warming periods in the past. The sharp pre-anthropogenic warming of ~1910-1945, and the overall warming trend from 1880-1945 show this to be evidently untrue. So Hausfather cooks up a reason to warm the entire period of 1880-1940, thereby vastly reducing the warming that the new Climate Faith holds cannot have been possible prior to 1950.

The fact that this reduces the amount of warming relative to the raw data over the last 150 years is not the relevant issue. What is relevant is that Hausfather’s adjustment largely erases the inconvenient “pre-anthropogenic” portion of the overall warming, thereby making the climate religion look less ridiculous. This should be rather obvious.

“The cooling and warming predictions pre-1970s were usually from individual scientist crackpots. The 1970s cooling warnings were bigger, but still a small minority of all scientists.”

I wouldn’t say that. The climate scientists were reporting on actual cooling. It cooled significantly from the 1940s to the late 1970s, by about 2.0C (according to the U.S. temperature chart). No crackpottery there.

Now claiming the world was going into a new Ice Age might be a little bit much, but the climate scientists of the era did have a reason to note the cooling that was taking place at the time.

I was there. I saw all these claims about human-caused global cooling. At first, I thought maybe the climate scientists claiming humans were causing the cooling might have something, so I waited for them to present some evidence proving their case. And I waited, and I waited, and I waited and I waited. And I’m still waiting to see some proof of their claims.

So, when the human-caused global warming crew showed up claiming humans were causing the Earth to warm, I was naturally skeptical from my earlier experiences with these unsubstantiated climate claims, and to this day have not seen one bit of evidence proving humans are causing the Earth’s climate to change. Either way, cold or hot.

I wish I had the internet back in the 1970s. I would have blistered the ears of all those charlatan climate scientists.

WUWT is like Heaven to me. I get to say just what I think about modern day climate science. 🙂

The reason for that uptick in hysteria is precisely because the facts are beginning to give the lie to climate alarmism, hence it must be restated at each and every opportunity.

Disagree

Hysteria must escalate because it loses its ability to scare people if it remains the same decade after decade.

This is the “Worse than we thought” propaganda strategy.

The actual temperature is not changing enough for many people to notice where they live. People rarely know “the facts”. They usually “know” what they are told by government authorities (who can’t be trusted, but they usually are trusted).

So the climate propaganda must escalate to be effective in creating fear. And creating fear gives leftists in power the opportunity to expand leftist government powers. Which they do. Never letting a crisis go to waste — whether a real Covid crisis, or a fake climate crisis.


Isn’t it 102 months?

https://woodfortrees.org/plot/uah6/plot/uah6/last:102/trend

I make it 102 months if you count the start month August 2014 to the end of Jan 2023.

Slope is -2E-05x in Excel notation.

Geoff S

Thanks, Christopher, for continuing to research and present it.

Regards,

Bob

Mike, how very kind and polite you are. The real thanks should go to Roy Spencer and John Christy, who have kept the UAH dataset honest when all others have tampered with theirs.

Which Christy and Spencer do VOLUNTARILY without payment!

Therefore, no financial conflicts of interest are possible.

No Christopher. My thanks for the post go to you. As you will recall, I used to prepare graphs of climate-and-weather-related data and discuss them in blog posts that were cross-posted here at WUWT, so I know how much work goes into what you prepared above and into responding to comments.

Regards,

Bob

PS: I’ve been called lots of things, but this is the first time I’ve been called Mike.

I predict much whining.

You mean our biggest threat is all this global whining?

I think you are onto something important here.

The Climate Howlers do Global Whining

I like that and will use it.

As a blog editor, if I read something good, I steal it.

And please note how I am now confirmed as a psychic prophet…

There are a couple of problems with this type of analysis. One is that it does not adjust the statistics for autocorrelation.

The Hurst exponent of the UAH MSU dataset is 0.82. This means that the “effective N”, the number of data points for statistical purposes, is only 9 …
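The comment does not say how the effective N was obtained. A minimal Python sketch, assuming the Koutsoyiannis relation n_eff = n^(2(1-H)) and a record of 530 monthly values (December 1978 to January 2023), gives about 9.6, close to the 9 quoted:

```python
def effective_n(n: int, hurst: float) -> float:
    """Effective number of independent points for a persistent series,
    using the assumed relation n_eff = n ** (2 * (1 - H)).
    H = 0.5 (no long-range persistence) returns n unchanged."""
    if not 0.0 < hurst < 1.0:
        raise ValueError("Hurst exponent must lie in (0, 1)")
    return n ** (2.0 * (1.0 - hurst))

months = 530  # Dec 1978 .. Jan 2023 inclusive (assumed record length)
print(round(effective_n(months, 0.82), 1))
print(effective_n(months, 0.5))
```

The strong dependence on H is the point: small changes in the exponent move n_eff a long way, which is why the half-length record can drop to only a few effective points.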

Now, this doesn’t remove the statistical significance of the trend in the full dataset. Here’s that calculation.

With a p-value of 6.30e-5, the trend is obviously statistically significant.

However, properly adjusting the analysis for autocorrelation means that shorter sections of the dataset cannot be said to have a statistically significant trend. Here, for example, is the same analysis for the most recent half of the UAH dataset. There, the effective N drops to a mere three data points.

With a p-value of 0.128, we cannot say that there is a statistically significant trend in the latter half of the MSU data.

As a result, I fear that the analysis of Lord Monckton isn’t valid.

Finally, it should not be a surprise that there are periods of increase and decrease in temperature data. Here, for example, is a breakpoint analysis of fractional Gaussian noise (FGN) with a Hurst exponent of 0.8, with an underlying trend added to the FGN.

Note the similarity to a natural temperature dataset. However, this is just random fractional Gaussian noise plus a linear trend. We know for a fact that there is an underlying increasing trend throughout the data … but despite that, there’s a decreasing section from 1980 to 2000 … is this a significant “pause”?
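A Python sketch of the kind of synthetic series described, assuming exact fractional Gaussian noise generated by Cholesky factorisation of its autocovariance (one standard method; the comment does not say which generator was used). The trend size, length, window and seed are illustrative values, not the ones behind the figure:

```python
import numpy as np

def fgn_autocov(n: int, hurst: float) -> np.ndarray:
    """Autocovariance of unit-variance fractional Gaussian noise at lags 0..n-1."""
    k = np.arange(n, dtype=float)
    h2 = 2.0 * hurst
    return 0.5 * (np.abs(k + 1) ** h2 - 2.0 * np.abs(k) ** h2 + np.abs(k - 1) ** h2)

def fgn(n: int, hurst: float, rng: np.random.Generator) -> np.ndarray:
    """Exact FGN sample via Cholesky of the Toeplitz covariance (O(n^3), fine for n ~ 500)."""
    gamma = fgn_autocov(n, hurst)
    idx = np.arange(n)
    cov = gamma[np.abs(idx[:, None] - idx[None, :])]  # Toeplitz covariance matrix
    return np.linalg.cholesky(cov) @ rng.standard_normal(n)

rng = np.random.default_rng(1)
n = 480                                # e.g. 40 years of monthly values (illustrative)
noise = fgn(n, hurst=0.8, rng=rng)
series = 0.002 * np.arange(n) + noise  # hypothetical linear trend plus FGN

# Trend of every 120-step window, to compare with the underlying 0.002 per step.
window = 120
t = np.arange(window, dtype=float)
slopes = [np.polyfit(t, series[s:s + window], 1)[0] for s in range(n - window + 1)]
print(f"window trends range from {min(slopes):.4f} to {max(slopes):.4f}")
```

Changing the seed shows how often persistent noise alone produces sub-periods whose trend is flat or negative despite the positive underlying trend.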

w.

I was thinking the same thing. e.g. How likely is it to find periods with a valid trend in a volatile, heavily censored dataset? We need a satellite and a time machine to gather enough data to support anything.

Nope. Satellite data is heavily censored and adjusted. 100 or so locations will get you a good dataset.

Shen, S. S. P., 2006: Statistical procedures for estimating and detecting climate changes, Advances in Atmospheric Sciences 23, 61-68

You aren’t going to get it. In fact, UAH is one of the most heavily adjusted datasets in existence. And their infilling technique interpolates missing values (and there are a lot) up to 4160 km away spatially and 2 days temporally. Compare that with GISTEMP which only interpolates using data from 1200 km away spatially with no temporal infilling.

| # | Year | Version | Effect on trend | Description | Citation |
|---|------|---------|-----------------|-------------|----------|
| 1 | 1992 | A | unknown | simple bias correction | Spencer & Christy 1992 |
| 2 | 1994 | B | -0.03 C/decade | linear diurnal drift | Christy et al. 1995 |
| 3 | 1997 | C | +0.03 C/decade | removal of residual annual cycle related to hot target variations | Christy et al. 1998 |
| 4 | 1998 | D | +0.10 C/decade | orbital decay | Christy et al. 2000 |
| 5 | 1998 | D | -0.07 C/decade | removal of dependence on time variations of hot target temperature | Christy et al. 2000 |
| 6 | 2003 | 5.0 | +0.008 C/decade | non-linear diurnal drift | Christy et al. 2003 |
| 7 | 2004 | 5.1 | -0.004 C/decade | data criteria acceptance | Karl et al. 2006 |
| 8 | 2005 | 5.2 | +0.035 C/decade | diurnal drift | Spencer et al. 2006 |
| 9 | 2017 | 6.0 | -0.03 C/decade | new method | Spencer et al. 2017 [open] |

That is 0.307 C/decade worth of adjustments jumping from version to version netting out to +0.039 C/decade. And that does not include the unknown magnitude of adjustments in the initial version.
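The gross and net totals can be checked in a few lines of Python, using the per-version effects listed in the comment (the 1992 version A correction, of unknown size, is left out):

```python
# Trend effects (C/decade) of each UAH version change, as listed in the comment.
adjustments = {
    "B (1994) linear diurnal drift": -0.03,
    "C (1997) residual annual cycle": +0.03,
    "D (1998) orbital decay": +0.10,
    "D (1998) hot-target dependence": -0.07,
    "5.0 (2003) non-linear diurnal drift": +0.008,
    "5.1 (2004) data acceptance criteria": -0.004,
    "5.2 (2005) diurnal drift": +0.035,
    "6.0 (2017) new method": -0.03,
}
gross = sum(abs(v) for v in adjustments.values())  # total size of all adjustments
net = sum(adjustments.values())                    # what they add up to
print(f"gross {gross:.3f} C/decade, net {net:+.3f} C/decade")
```

This prints a gross of 0.307 and a net of +0.039 C/decade, matching the figures in the comment.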

“As a result, I fear that the analysis of Lord Monckton isn’t valid.”

I have that fear too. The analysis won’t stand up to any kind of uncertainty analysis, Hurst or otherwise.

I show trends with uncertainty calculated using a Quenouille correction for autocorrelation. This is equivalent to an AR(1) model. For UAH V6 from August 2014 to January 2023, I do indeed get a slightly negative trend, -0.027°C/Century. But the 95% confidence interval runs from -2.838 to 2.783°C/Century. That is, you can’t rule out an underlying trend of 2.783°C/Century, which is higher than predicted by the IPCC. That is because of the short interval on which the trend is calculated.
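A minimal Python sketch of an OLS trend with a Quenouille-widened confidence interval: take the lag-1 autocorrelation r of the residuals and multiply the OLS standard error by √((1+r)/(1−r)). The function name and the synthetic AR(1) series are illustrative assumptions, not UAH data or Nick Stokes' own code:

```python
import numpy as np

def trend_with_ar1_ci(y, per=1200.0, z=1.96):
    """OLS trend of a monthly series with a Quenouille (AR(1)) widened 95% CI.
    per=1200 rescales per-month slopes to per-century values."""
    y = np.asarray(y, dtype=float)
    n = y.size
    tc = np.arange(n, dtype=float) - (n - 1) / 2.0      # centred time index
    slope = np.dot(tc, y - y.mean()) / np.dot(tc, tc)
    resid = y - y.mean() - slope * tc
    se_ols = np.sqrt(np.dot(resid, resid) / (n - 2) / np.dot(tc, tc))
    r1 = np.dot(resid[1:], resid[:-1]) / np.dot(resid, resid)  # lag-1 autocorrelation
    se = se_ols * np.sqrt((1 + r1) / (1 - r1))                 # Quenouille widening
    return slope * per, (slope - z * se) * per, (slope + z * se) * per, r1

# Synthetic example: 102 months of AR(1) noise (phi = 0.6) with no underlying trend.
rng = np.random.default_rng(0)
e = np.zeros(102)
for i in range(1, e.size):
    e[i] = 0.6 * e[i - 1] + rng.normal(0.0, 0.1)
trend, lo, hi, r1 = trend_with_ar1_ci(e)
print(f"trend {trend:.2f}, 95% CI {lo:.2f} to {hi:.2f} C/century, r1 = {r1:.2f}")
```

With only about 100 months, the interval on such a series is typically several degrees per century wide, which is the effect described above.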

Is the 30 year interval climate “science” uses too SHORT?

Of course it is.

Any interval would, by necessity, be over 10,000 years since that is back to the beginning of this interglacial period. So we are looking at the “climate” and temperature trends of an interglacial, and MUST include that ENTIRE period if we wish to determine if there are any changes in trends caused by CO2.

Without that entire period, we cannot eliminate natural variation as the cause of the warming of the recent years. Heck, climate “scientists” have NO explanation of the very warm 1930s, or how it got so cold in the LIA, or so warm in the Roman optimum or Medieval Warm Period.

All of climate “science” is a fraud if the “average” of the models cannot be used to hindcast those periods back to the year 0. Heck again, the models can’t even hindcast to the year 1900, 50 years after the end of the LIA.

It is all a very scary and very expensive fraud that is costing the poorest of the world a much better life. Since WWII, there have been skirmishes and a cold war, but no MAJOR conflicts involving the majority of the world’s nations, and ALL of society should have been rising on the rising tide of excess production of houses, household items, food production, clean water and improved sanitary waste systems and LOWER “energy” expenses along with greater “energy” availability.

60 years of very good, overall, worldwide conditions wasted for the poor of Asia and Africa, especially, but ALL worldwide poor generally especially over the last 30+ years.

Nick, please defend your position of promoting the climate hysteria agenda in relation to the effect on the poor.

Thank you in advance,

Drake

In theory adding CO2 to the atmosphere all else being equal would increase temperature.

Climate variations prior to around 1880 when the CO2 concentration started to increase are irrelevant to the current climate that is the product of the effect of CO2 forcing and ongoing natural fluctuations acting on various time scales at times enhancing the warming and at times countering it.

As you say, the inevitable economic advancement of the world’s poor is all that matters in the end.

An excellent comment, Drake.

We live in the best climate in 5,000 years, based on climate proxies, and should be celebrating our current climate. Living during a warming trend in an interglacial is about as good as the climate gets for humans and animals on our planet. C3 plants would prefer two to three times ambient CO2, but 420ppm is a good start: the best CO2 level for C3 plants in millions of years.

That a trend is not significant doesn’t mean it is not real. I called the attention to the change in Arctic sea ice in 2015 and was told the same. Now the new trend is 15 years old, and the “experts” are scratching their heads.

“That a trend is not significant doesn’t mean it is not real”

Indeed so. But it is a question of what you can infer from it. Clearly here we are asked to infer that it isn’t really warming. But what the insignificance says is that it really could be warming at quite a high rate, with overlying random factors (weather) leading to a chance low result.

The chance for any one instance is low (2.5% at the CI limit). But it becomes much higher if you select from a range of possible periods on the basis of trend. And that is exactly what Lord M’s selection procedure does.

No, I don’t “select from a range of possible periods on the basis of trend”. I calculate the longest period, working back from the present, that exhibits a zero trend or less. By that definition, which is made explicit in the head posting, there is only one possible period.

Since I draw no conclusion from the zero trend except that it provides a general indication that there is not a lot of warming going on, it is fascinating how upset the climate fanatics are about these simple graphs.

If it were indeed true that “it could be warming at quite a high rate”, then it would also be true that it could be cooling at just as high a rate. With so absurdly large an error margin, one wipes out the global warming problem altogether. For Mr Stokes is, in effect, admitting that climate scientists have no idea how much it is warming or cooling, or even which effect is predominant. If so, there is no legitimate basis whatsoever for doing anything at all to cripple the economies of the hated West in the specious name of Saving the Planet.
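The definition given above (the longest period, working back from the present, whose least-squares trend is zero or less) can be sketched in Python. The function names and the 24-month minimum window are assumptions for illustration, and the toy series is not UAH data:

```python
import numpy as np

def ols_slope(y):
    """Least-squares linear-regression slope of y against 0, 1, 2, ..."""
    t = np.arange(len(y), dtype=float)
    return np.polyfit(t, y, 1)[0]

def pause_start(y, min_len=24):
    """Earliest start index whose trend through the last value is <= 0,
    i.e. the longest such period ending at the present; None if none exists."""
    y = np.asarray(y, dtype=float)
    for start in range(len(y) - min_len + 1):  # oldest start first
        if ols_slope(y[start:]) <= 0:
            return start
    return None

# Toy series: 200 months of warming followed by 100 months of slight cooling.
y = np.concatenate([np.linspace(0.0, 1.0, 200), 1.0 - 0.001 * np.arange(100)])
s = pause_start(y)
print(f"pause length: {len(y) - s} months")
```

Because the scan starts from the oldest month, the first start that qualifies automatically gives the longest window, so each end date yields exactly one answer.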

Stokes is just a propagandist shill for the IPCC who has no compunction about posting anything he knows to be false, to keep the hockey stick alive.

“No, I don’t “select from a range of possible periods on the basis of trend”. I calculate the longest period, working back from the present, that exhibits a zero trend or less”

The second sentence describes exactly the process of “select from a range of possible periods on the basis of trend”.

“With so absurdly large an error margin, one wipes out the global warming problem altogether.”

The error margin is just a consequence of your decision, against much advice, to try to make inferences from short term trends. That difficulty is present in any kind of data, and says nothing about global warming.

Climate scientists look at much longer time periods where the trend is indisputably positive.

Nick,

How many days of daily data do you recommend to define a short term trend? For making inferences? Change happens forever over a range of many orders of magnitude of time. You are selecting “short term” to suit your argument in the sense of “You are wrong because you used too short a short term”. Does not compute. Geoff S

Geoff,

As I showed with the diagram, you can calculate the error bars, and they diminish as the term gets longer. You can make inferences accordingly.

Mr Stokes is, as usual, on a losing wicket here. I do not “select from a range of possible periods on the basis of trend”. I take a single trend – zero – and then simply enquire how far back one can go in the most trustworthy of the global-temperature datasets and find a zero trend. It is a matter of measurement by the satellites and calculation by me, using the method which, whether Mr Stokes likes it or not, is the method most often used in climatology to derive the direction of travel of a stochastic temperature dataset. If he wishes to argue that climatology should not use the least-squares linear-regression trend, then his argument is with climatology and not with me.

Mr Stokes is at his most pompous when he is most clearly aware that he is in the wrong and is not going to get away with it. For a start, we are not going to take “advice” from a paid agent of a foreign power.

Secondly, if Mr Stokes would take some lessons in elementary statistics he would realize that a zero trend has a correlation coefficient of zero (all one has to do is look at the diagram in the head posting, which is worth a read). What that means is that at any moment the trend might diverge in one direction or another, since the fact that it is zero gives little indication of what may happen next.

Thirdly, it is self-evident that the longer the period of data the narrower the uncertainty interval.

Fourthly, the effect of the uncertainty interval is to lengthen, not to shorten, the period over which it is uncertain whether there has been any global warming or not. The 101 months shown in the head posting is thus a minimum value.

Finally, I do not draw any “inference” from the fact that there has been no global warming for almost eight and a half years. I merely report the fact, explain how I derived it, compare it with the full dataset precisely to avoid the nonsense allegation of cherry-picking, and point out that the longer the zero trend becomes, and the more frequent such long trendless periods are, the clearer it becomes, and the more visible it becomes, that the rate of global warming over the past 33 years since IPCC (1990) is well below half what was then confidently predicted.

To all but those with a sullen vested interest in the Party Line, such observations are of more than passing interest.

“For Mr Stokes is, in effect, admitting that climate scientists have no idea how much it is warming or cooling, or even which effect is predominant.”

You put this in a previous post in the thread. You nailed it!

“point out that the longer the zero trend becomes, and the more frequent such long trendless periods are, the clearer it becomes, and the more visible it becomes, that the rate of global warming over the past 33 years since IPCC (1990) is well below half what was then confidently predicted.”

I like the way you state this. It’s not that CO2 doesn’t have any impact on temperature but that it is far less than predicted – which also means that other control knobs can mask CO2 contributions rather easily. Tying all temperature increase to CO2 is just plain wrong. It then becomes a matter of trying to identify the entire range of contributions and when+how they impact temperature.

It is obvious that the Party Line has been shown to be bankrupt when all they can do is blindly press the downvote buttons, as is the case with your carefully crafted and succinct comments here.

If that were actually your selection criteria you would have to stop when you got to January 2022 because your 0 or negative trend would flip positive:

https://woodfortrees.org/plot/uah6/from:2022/plot/uah6/from:2022/trend

So it seems that there’s more to your selection than starting at the present day and seeing how far back you can get a flat or negative trend.

But as Nick shows it is all silliness, since you never actually consider the confidence intervals of your trends.
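The stopping rule this reply assumes (step back one month at a time from the present and stop at the first window whose trend turns positive) can give a different answer from taking the earliest start with a non-positive trend whenever a short recent window trends upward. A Python sketch of the walk-back rule, with a hypothetical 24-month minimum window and toy data:

```python
import numpy as np

def ols_slope(y):
    """Least-squares slope of y against 0, 1, 2, ..."""
    t = np.arange(len(y), dtype=float)
    return np.polyfit(t, y, 1)[0]

def walk_back_start(y, min_len=24):
    """Extend the window backwards from the present, stopping at the first
    start whose trend is positive. Returns the last start that was <= 0,
    or None if even the shortest window trends upward."""
    y = np.asarray(y, dtype=float)
    start = None
    for s in range(len(y) - min_len, -1, -1):  # shortest window first
        if ols_slope(y[s:]) > 0:
            break
        start = s
    return start

# 300 months of cooling, then 30 months of slight warming at the end.
y = np.concatenate([1.0 - 0.001 * np.arange(300), 0.7 + 0.001 * np.arange(30)])
print(walk_back_start(y))   # the last 24 months trend upward, so the walk stops at once
print(ols_slope(y) <= 0)    # yet the full-length trend is negative
```

On this toy series the walk-back rule finds no pause at all, while the earliest-start rule would report the whole record.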

My thoughts entirely.

We do not use these numbers to predict or hindcast.

The main reason why I am now making an Australian subset each month is to keep alive the possibility that a cusp is under way. Caution is urged in case it foreshadows a temperature downturn.

I have also started an informal comment along the lines that if I wanted to spin a weather story, I could claim that children under 11 here have not felt global warming, despite CO2 increases.

Geoff S

I just look at when our gently warming globe first achieved January’s UAH temperature, and that’s 1988. So no warming for over 30 years.

I don’t think we are being asked to infer anything except the obvious, that the surface is not warming faster over time, as it should according to a popular hypothesis.

The existence of multi-year periods of cooling is obvious and expected, but they should become less frequent and shorter if the warming accelerates as expected given the CO2 acceleration. The observation is contrary to the expectation, and Lord Monckton reminds us every month about it. Considering that we are reminded every single day how awful climate change is and how guilty we are, I don’t find his lordship’s reminders out of order.

I am most grateful to Mr Vinos for his kind comment. It is indeed interesting that the rapid decadal rate of warming so confidently predicted in Charney (1978), IPCC (1990) and, most recently, in IPCC (2021) is not coming to pass.

JV said: “The existence of multi-year periods of cooling is obvious and expected, but they should become less frequent and shorter if the warming accelerates as expected given the CO2 acceleration.”

I’ve not heard that hypothesis before. When I get time I’ll look at the CMIP model data and see if the frequency of pauses increases or decreases with time. There are so many little pet projects like these on my plate already, though…

I did test the hypothesis that the expectation is for a decrease in the frequency of pauses using the CMIP5 data from the KNMI Explorer. There was no change in the frequency of pauses from 1979 to 2100.

The percent of time we expect to be in a pause depends on the length of the pause. For a 5 yr pause it is 30%. For a 10yr pause it is 13%. And for a 101 month pause like what is occurring now it is 18%.

What does this tell us? Despite Monckton’s assertion to the contrary, it is not unexpected or even notable that we find ourselves in a pause lasting 101 months.

I encourage everyone to download the data from the KNMI Explorer and see for yourself.
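The exact counting rule is not given. One plausible reading, sketched in Python as an assumption, is the fraction of months at which the trailing L-month least-squares trend is zero or negative; applied to each model run's monthly global series it would give percentages of the kind quoted. The function name and the synthetic series are hypothetical, not CMIP5 output:

```python
import numpy as np

def pause_fraction(y, length):
    """Fraction of end months whose trailing `length`-month OLS trend is <= 0."""
    y = np.asarray(y, dtype=float)
    t = np.arange(length, dtype=float)
    in_pause = [np.polyfit(t, y[end - length:end], 1)[0] <= 0
                for end in range(length, len(y) + 1)]
    return float(np.mean(in_pause))

# Illustrative synthetic run: linear trend plus white noise, monthly 1979-2100.
rng = np.random.default_rng(3)
months = np.arange(1464)
y = 0.0015 * months + rng.normal(0.0, 0.15, months.size)
for L in (60, 101, 120):
    print(L, round(pause_fraction(y, L), 3))
```

Real model output has autocorrelated internal variability, so white noise here will understate how often pauses occur; the sketch only shows the counting step.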

And now he tells how he thinks the CHIMPS garbage-in-garbage out “climate” models are somehow valid.

Your need to apply statistics to obtain a looong trend from what appears to me to be a control system with short term excursions is unexplainable. Have you ever done control charts to see if these excursions are out of control?

When Nick the Stroker agrees with Willie E., I know I am living in bizarro land. That is impossible. I’m going back to sleep and hope this agreement has disappeared by the time I wake up. A Willie E. versus Nick the Stroker argument is a highlight of this website.

That is, you can’t rule out an underlying trend of minus 2.838C per century either. But the hypothesis that the trend is not statistically different from zero cannot be rejected. Thus your analysis, using a better technique more appropriate to the data, supports Monckton’s conclusions. The width of the confidence intervals is being driven by the extreme heteroscedasticity in the data around the El Nino spike.

What is perhaps rather more revealing is to evaluate how much trend there is across the whole dataset in an AR1 model. When you do that the confidence intervals narrow considerably, but the estimate for the trend drops to an unexciting third of a degree per century.

Don’t panic.

“but the estimate for the trend drops to an unexciting third of a degree per century”

Really? I get 1.33°C/Century, in agreement with Roy Spencer’s calculation. And it is a bit more exciting down here where we live.

UAHV6 temperature anomaly trend, Jan 1979 to Jan 2023: rate 1.332°C/Century; CI from 1.013 to 1.651; t-statistic 8.180.

RSSV4: 2.112°C/Century; GISS: 1.868°C/Century; HADCRUT 5: 1.887°C/Century (all except UAH to Dec 2022).

Mr Stokes is perhaps unaware that RSS, which showed a sudden uptick in its warming rate just a month after one of the Pause graphs here was debated in the U.S. Senate by Senator Ted Cruz, uses a defective satellite dataset that is now out of date. Without it, RSS would show much the same warming as UAH.

As to HadCRUT5, Dr Spencer has recently written at his excellent blog about the substantial influence of the urban heat-island effect on this and other terrestrial datasets.

Hmm. Tony Heller predicted that the RSS would suddenly change to showing more warming a couple months before it actually did.

Collusion Is Independence | Real Science (wordpress.com)

And then recently showed that what they have done is move their reported temperatures up to the top limit of the error bars in order to be in closer agreement with NASA data.

Adjusting Good Data To Make It Match Bad Data | Real Climate Science

Nick,

For monthly UAH Australia, from the 2016 high to the 2022 low I get a trend of nearly MINUS 30 C per Century equivalent over 6.5 years, in a time of CO2 induced catastrophic global warming, but I only quote this number to show absurdity of data torture.

Geoff S

Did you use an AR1 model for that, or just OLS? If I use OLS I get results that agree with yours. But the data are clearly autocorrelated, so OLS is NOT appropriate. Try again.
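A trend with AR(1) errors, rather than plain OLS, can be fitted several ways. A minimal Python sketch using iterated Cochrane-Orcutt quasi-differencing (a swapped-in technique; neither commenter says which method they used), on a synthetic series whose slope and AR(1) coefficient are assumptions chosen for the demonstration:

```python
import numpy as np

def cochrane_orcutt(y, n_iter=20):
    """Linear trend with AR(1) errors via iterated Cochrane-Orcutt.
    Returns (slope per step, estimated AR(1) coefficient rho)."""
    y = np.asarray(y, dtype=float)
    t = np.arange(y.size, dtype=float)
    X = np.column_stack([np.ones_like(t), t])
    beta = np.linalg.lstsq(X, y, rcond=None)[0]  # start from the OLS fit
    rho = 0.0
    for _ in range(n_iter):
        resid = y - X @ beta
        rho = np.dot(resid[1:], resid[:-1]) / np.dot(resid[:-1], resid[:-1])
        # Quasi-difference both sides so the remaining errors are roughly white.
        y_star = y[1:] - rho * y[:-1]
        X_star = X[1:] - rho * X[:-1]
        beta = np.linalg.lstsq(X_star, y_star, rcond=None)[0]
    return beta[1], rho

rng = np.random.default_rng(7)
n = 500
e = np.zeros(n)
for i in range(1, n):
    e[i] = 0.6 * e[i - 1] + rng.normal(0.0, 0.1)
y = 0.01 * np.arange(n) + e
slope, rho = cochrane_orcutt(y)
print(f"slope {slope:.4f} per step, rho {rho:.2f}")
```

For long series the AR(1) slope estimate usually stays close to the OLS one; the larger difference is in the standard error, not the trend itself.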

AR1 vs OLS makes very little difference. I get 1.33 with OLS, 1.30 with AR1. Here is my R working:

There is very little need to hunt for autocorrelation in the global temperature record, though it is a relevant consideration in the regional records thanks to seasonality. If the uncertainty in the UAH data is indeed +/- 0.28 K/decade, as Mr Stokes suggests, then there subsists no basis whatsoever for the sedulously-peddled conclusion that we must Do Something to Save The Planet, because we have no idea whether the globe is warming rapidly or cooling rapidly.

I prefer to base my analyses on the real-world data and the uncertainty intervals issued by the keepers of the datasets. Those intervals are considerably narrower than Mr Stokes’ interval. If, therefore, Mr Stokes thinks the uncertainty interval is greater by an order of magnitude than the keepers of the datasets say it is, then he should take up the matter with official climatology and not with me.

Professor Jones at the University of East Anglia, who used to keep the HadCRUT record, was happy to recommend the simple least-squares linear-regression trend as the best way to get an idea of the direction of travel in global temperature datasets. I do not claim anything more than that the least-squares trend has been zero for 101 months. I do not base any prediction on this fact: I merely report it, as well as the 44-year trend on the entire UAH dataset, for context.

The truth is that the world is not warming anything like as fast as had been, and still is, predicted. No amount of fluff and bluster will conceal that fact.

And there is absolutely no reason to try cramming battery cars down the throats of the entire world population.

“There is very little need to hunt for autocorrelation in the global temperature record”

The residuals are autocorrelated, and you absolutely have to allow for that in the confidence intervals. To show what is going on here, I plot the trends for each interval ending at present (Jan 2023). The start of the interval is shown on the x axis. Near 2023, the intervals are short, and the trend is wildly variable. As you go back in time (longer periods) the trend settles to a generally positive value (1.3 at 1979). Between the short term gyrations and the stabler long term, it crosses the axis one last time at August 2014. That is the point Lord M calls the pause.

I have plotted the confidence intervals in color. Blue is the OLS 95% CI, calculated as if there were no autocorrelation. It diminishes as you go back in time, and the trend stabilises. But there is autocorrelation, so OLS exaggerates the confidence. I have plotted the AR(1) CIs in red. They are more than double the breadth. The most recent year in which you can be 95% confident that the trend is positive is about 2011.
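The procedure described here (trend from each start date to the present, with OLS and AR(1)-widened 95% intervals, then the latest start whose widened interval excludes zero) can be sketched in Python. The function names, the 24-month minimum window and the synthetic data are assumptions for illustration:

```python
import numpy as np

def trend_and_halfwidths(y, z=1.96):
    """OLS slope per step, with OLS and Quenouille AR(1) 95% half-widths."""
    y = np.asarray(y, dtype=float)
    n = y.size
    tc = np.arange(n) - (n - 1) / 2.0
    slope = np.dot(tc, y - y.mean()) / np.dot(tc, tc)
    resid = y - y.mean() - slope * tc
    se = np.sqrt(np.dot(resid, resid) / (n - 2) / np.dot(tc, tc))
    r1 = np.dot(resid[1:], resid[:-1]) / np.dot(resid, resid)
    return slope, z * se, z * se * np.sqrt((1 + r1) / (1 - r1))

def latest_confident_start(y, min_len=24):
    """Most recent start whose trend to the end is positive with 95% confidence
    after AR(1) widening; None if no start qualifies."""
    for start in range(len(y) - min_len, -1, -1):
        slope, _, half_ar1 = trend_and_halfwidths(y[start:])
        if slope - half_ar1 > 0:
            return start
    return None

rng = np.random.default_rng(0)
e = np.zeros(530)
for i in range(1, e.size):  # AR(1) noise, phi = 0.6
    e[i] = 0.6 * e[i - 1] + rng.normal(0.0, 0.1)
y = 0.0011 * np.arange(530) + e  # about 1.3 C/century in monthly steps
s = latest_confident_start(y)
print(s, len(y) - s if s is not None else None)
```

Plotting the three returned quantities against the start index reproduces the shape of the chart described: wild short-window trends near the present, settling as the window lengthens.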

Mr Stokes digs himself further and further into a hole of his own making. Consider the entire UAH dataset, and compare the observed 0.134 K/decade warming rate since December 1978 or the observed 0.137 K/decade warming rate since January 1990 with the predicted 0.3 K/decade warming rate in IPCC (1990, 2021).

One of two conclusions follows. First, that the real-world rate of warming is indeed well below half what was originally predicted and is still predicted, strongly suggesting at least one systemic error in the models, in which event the expenditure of trillions, bankrupting the West, will achieve nothing.

Secondly, that the uncertainties in measurement of global temperature are so large that we are incapable of drawing any conclusion at all about whether or at what rate the planet is warming or cooling, in which event there is no empirical method by which the rapid-warming hypothesis that Mr Stokes and his paymasters so cherish may be verified, in which event it is merely a speculation that has no place in science.

Furthermore, I performed a detailed autocorrelation analysis on the datasets a few years ago, and found that there is very little of it in the global datasets, though it becomes noticeable in the regional datasets.

Stochasticity and heteroskedasticity are of more significance than autocorrelation in the global datasets. And, as I have pointed out to Mr Stokes before, it is official climatology that likes to use the least-squares linear-regression trend as the simplest way to get an idea of the direction of travel of the global-temperature datasets. If he wishes to quarrel with that custom, let him take up the cudgels with official climatology and not with me.

And once again, all they can do is push the downvote button…

CMoB said: “First, that the real-world rate of warming is indeed well below half what was originally predicted”

You can say that as many times as you want (and undoubtedly will), but it won’t make it any less wrong than any of the other times you’ve said it over the last decade. As we’ve repeatedly shown you with the actual diagrams and text of the IPCC FAR, their prediction was actually pretty close.

CMoB said: “Secondly, that the uncertainties in measurement of global temperature are so large that we are incapable of drawing any conclusion at all about whether or at what rate the planet is warming or cooling”

Christy et al. 2003 disagrees with you. They say that with just 24 years of data the trend is statistically significant. We now have 43 years of data. And as you can see with Nick’s plot above the more data you have the lower the uncertainty of the trend becomes.

Christy’s conclusion is totally out of line with signal analysis. There are oscillations much longer than that. There are drifting phases that combine to cause differing outputs. Orbital changes. Much longer times are needed to know what is going on. Millennia at least. Twenty or 30 years is somebody’s excuse for poor science.

The last para, along with your plot, is instructive to rookies like me. I.e., I now know why the expected values from my “raw” evaluations are the same as yours, but your confidence intervals are (somewhat) larger. I wish that your tool showed standard errors, or am I missing that? Yes, I can back calc them….

It shows the t-value. But sorry, no, you’ll have to back-calc. I think it is 1/4 (actually 1/3.92) of the CI range.
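The back-calculation is a one-liner: a symmetric 95% interval spans ±1.96 standard errors, so its width is 3.92 standard errors. A Python check against the UAH V6 figures Nick Stokes posted earlier in the thread (rate 1.332, CI 1.013 to 1.651 °C/century):

```python
def se_from_ci(lower, upper, z=1.96):
    """Standard error implied by a symmetric CI of +/- z standard errors."""
    return (upper - lower) / (2.0 * z)

se = se_from_ci(1.013, 1.651)  # UAH V6 trend CI, degC/century
t_stat = 1.332 / se            # trend divided by its standard error
print(f"se = {se:.3f}, t = {t_stat:.2f}")  # se = 0.163, t = 8.18
```

The recovered t of 8.18 agrees with the quoted t-statistic of 8.180, which suggests the interval is indeed ±1.96 SE.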

No problem. I use opencalc and solver to back into these all the time.

You missed part of the conclusion. Probably due to your bias.

You can’t rule out a “-2.838 °C/Century” underlying trend either. That is certainly lower than the IPCC prediction. Ain’t statistics a bi**h?

“Probably due to your bias.”

The only “bias” is in your faux claim that he “missed part of the conclusion”. The chance of the lower value was not only noted, but was quantified.

FYI, your bias also made you miss his larger point. That, unlike evaluations of data with enough physical/statistical rigor to use, the whole “pause” evaluation is bogus, due to the data spread.

Funny how that “spread” only applies to the pause and not the trend. It’s like how the uncertainty of the mean in surface temps data is ignored also. Funny how when I brought that up in another thread, NIST was said to be wrong.

“Funny how that “spread” only applies to the pause and not the trend.”

It does. It’s just that the relative spreads are night and day. The UAH 6 trend, corrected for autocorrelation, for the last 40 years is 1.4 degC/century, with a standard error of 0.2 degC/century. The chance that it is positive is 99.99999999998%. OTOH, the comparable trend, again corrected for autocorrelation, for the last 101 months, is -0.228 degC/century*, with a standard error of 1.43 degC/century. The chance that it is positive is 43.6%.
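Under a normal approximation (an assumption; the comment does not say which distribution was used), the chance that the true trend is positive is Φ(trend/SE). A Python sketch with the 101-month figures quoted:

```python
import math

def prob_positive(trend, se):
    """P(true trend > 0) = Phi(trend / se), assuming a normal sampling distribution."""
    return 0.5 * (1.0 + math.erf(trend / (se * math.sqrt(2.0))))

print(f"{prob_positive(-0.228, 1.43):.3f}")  # 101-month trend and SE, degC/century
```

This prints about 0.437, within rounding of the 43.6% quoted, since the trend and standard error are themselves rounded.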

https://mojim.com/usy129026x6x51.htm

What a terrific tool. I can see why the fora here objects to it. It channels the “48 Hours” fear of a “”****** with a Badge and A Gun”.

FMI, do you have a link to the Quenouille correction for autocorrelation? I can find an evaluation of it, but not how you correct for it.

No TDS today, blob? Did you finally get treated?

“FMI, do you have a link to the Quenouille correction for autocorrelation? “

I wrote about the general methods here. There are links to some earlier posts, and also to a quite informative post on Climate Audit. Basically you work out the lag 1 autocorrelation r, and then multiply the OLS σ by sqrt((1+r)/(1-r)) to get the expanded CI.

In that post I also worked out Q-type corrections for higher-order AR().
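The size of the widening for a given lag-1 autocorrelation r is easy to tabulate. In this Python sketch (function name hypothetical), r = 0.6 doubles the standard error, equivalent to shrinking the sample to n(1-r)/(1+r) = n/4 effective points:

```python
import math

def quenouille_factor(r1):
    """Multiplier for the OLS slope standard error given lag-1 autocorrelation r1.
    Equivalent to using an effective sample size n * (1 - r1) / (1 + r1)."""
    if not -1.0 < r1 < 1.0:
        raise ValueError("r1 must lie strictly between -1 and 1")
    return math.sqrt((1.0 + r1) / (1.0 - r1))

for r1 in (0.0, 0.3, 0.6, 0.8):
    print(f"r1 = {r1:.1f}: standard error x {quenouille_factor(r1):.2f}")
```

An AR(1) interval "more than double the breadth" of the OLS one therefore corresponds to a residual lag-1 autocorrelation above about 0.6.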

Thanks, I’ll read it all tomorrow.

That was super informative. I have this technique in my workflow now. Well…the AR(1) v correction anyway. The ARMA v correction is a lot harder so I’ll punt for now.

Hey, Willis, it’s a “pause”, just like the ones I get with my investments. I just know that they will go up if I sell them.

When I retired at age 51 in 2005, my computer model said my net worth would be over $1 million by 2023. My actual net worth has been going up, up, up, as predicted, and today is $129. I must have programmed my computer wrong.

Funny, my investments go down when I buy them.

Fair enough. From the results of your model, what is the physical meaning of the line’s intercept at -27.446997?
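One likely reading of that intercept, assuming the model's x-axis was calendar time in decimal years (the comment does not say), is that it is simply the fitted line extrapolated back to year 0, which has no physical meaning. The sketch below uses a hypothetical noise-free series rising at 0.0137 K/yr through zero at 2001: regressing on raw years gives a large negative intercept of that order, while centring the years on their mean makes the intercept the mean anomaly and leaves the slope unchanged.

```python
def ols_fit(x, y):
    """Return (slope, intercept) of the least-squares line y = a + b*x."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    sxy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    sxx = sum((a - x_mean) ** 2 for a in x)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


# Hypothetical monthly series, Jan 1979 onward for 44 years, no noise:
# a pure 0.0137 K/yr trend that crosses zero at 2001.0
years = [1979 + (m + 0.5) / 12 for m in range(44 * 12)]
anoms = [0.0137 * (yr - 2001.0) for yr in years]

# Raw calendar years: the intercept is the fitted value at "year 0"
slope, intercept = ols_fit(years, anoms)

# Centred years: the intercept becomes the value at the mean year
mean_year = sum(years) / len(years)
slope_c, intercept_c = ols_fit([yr - mean_year for yr in years], anoms)

print(f"raw:     slope={slope:.4f} K/yr, intercept={intercept:.3f} K")
print(f"centred: slope={slope_c:.4f} K/yr, intercept={intercept_c:.3f} K")
```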

My simpleton version:

101 months is short-term global average weather trend data mining, not a long-term (30 years or more) global climate trend.

The short-term trend is temporarily flat.

That fact predicts nothing.

It does show the expected warming effects of the largest 101 month rise of manmade CO2 in history were completely offset by net cooling effects of all other climate change variables.

That is evidence CO2 is not the climate control knob, as the IPCC has claimed since 1988.

The past 101 month trend is not likely to have any predictive ability for the next 101 month trend.

I’m not qualified at the statistics level to agree with or dispute Willis’s discussion above, but I do understand the scientific process well, and the politics of “climate change” even better.

Lord M’s contribution re the lengthening “pause” is highly relevant in the context of the general worldwide climate change discussion.

It is asserted that we must approach Zero Carbon soon or something terrible will happen. It is asserted that rising carbon dioxide levels are a powerful driver of climate, indeed talked about as if the only important driver.

CO2 levels continue to rise steadily. If global temps are not rising hand in hand, something other than CO2 must also be in play. Solar cycles, ocean currents, thunderstorms… something.

But admitting that something might work in a cooling direction, such that continuously rising CO2 is not followed by continuously rising temperatures, means that when temperatures are rising, maybe that other factor is the reason they are rising, and not CO2.

This is politically important.

CO2 was rising from 1950-1980, too. But temperatures were not, indeed maybe even falling.

CO2 was minimal in 1910-1940, but temps were rising.

This gross non-correlation is both scientifically and politically important.

The CO2-climate change argument is scientifically interesting. Exacting statistics are important for this.

But the ongoing disaster is political: the ongoing destruction of our economy in the war against carbon-based energy.

Lord Monckton’s “lengthening pause” will become scientifically interesting if it extends enough years to reach statistical significance as per Willis’s analysis.

But it is of immediate political significance. There is a palpable apocalyptic hysteria surrounding the question of climate change. Any analysis based on data that tends to quiet the hysteria is important.

So, yes, Lord M’s observation is immediately important. And time will tell whether it is scientifically important, in addition to politically important.

Kwinterkorn is right. These monthly Pause columns are clear and simple. They are a great deal easier to understand than the more complex statistical methods which, if used, would merely confirm that global temperature might be rising or falling at a rate of almost 0.3 K/decade (the midrange being zero, which is exactly what my monthly graphs show).

Precisely because these graphs are easy to understand, they are very widely influential. And that is why there is so much screaming about them by real and faux skeptics here each month.

kwinterkorn said: “CO2 levels continue to rise steadily. If global temps are not rising hand in hand, something other than CO2 must also be in play.”

Yep. Scientists have long known that CO2 is not the only thing that modulates Ein and Eout of the UAH TLT layer of the atmosphere. And it contributes little to the variability of the energy flows, especially on shorter timescales like months or years.

BTW…climate models predict a lot of these extended pauses. I encourage you to go to the KNMI Climate Explorer, download the data and see for yourself just how prevalent pauses like these are predicted to be.
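To check pause frequency in any downloaded series, the head posting's definition can be applied directly: the pause is the longest period ending at the latest month whose least-squares trend is not positive. This is a minimal sketch assuming the monthly anomalies are already loaded into a plain Python list; how to fetch them from the KNMI Climate Explorer is not shown, and `pause_length` is a hypothetical helper name, not an existing tool.

```python
def ols_slope(y):
    """Least-squares slope of y against the index 0..n-1 (units per month)."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def pause_length(anomalies, min_months=2):
    """Months in the longest window ending at the latest value whose OLS
    trend is zero or negative; 0 if every such window shows warming."""
    n = len(anomalies)
    # Scan start months from the earliest forward; the first hit is the longest
    for start in range(n - min_months + 1):
        if ols_slope(anomalies[start:]) <= 0:
            return n - start
    return 0


# Usage with a short hypothetical series of monthly anomalies (K)
example = [0.1, 0.3, 0.5, 0.4, 0.2, 0.3, 0.1]
print(pause_length(example))
```

Each start month refits the trend, so the cost grows with the square of the series length, which is negligible for a few hundred months of data.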

Yet you delight in posting your zettajoules hockey stick chart over and over.

of garbage.

Fixed it for you.

“BTW…climate models predict “

No, climate models make projections; they do *NOT* make predictions. A prediction allows for changing conditions in the future. A projection assumes no changing conditions in the future; it just assumes that what has happened in the past determines what happens tomorrow.

Predictions allow cyclical processes to produce different outcomes. Projections don’t allow cyclical processes to produce different outcomes; the linear trend line just continues forever.

Willis Eschenbach raises the interesting question of autocorrelation. I had a good look at this some years ago and did a straightforward analysis, comparing regional with global temperature records and looking for autocorrelation. Unsurprisingly, there was plenty of seasonally-driven autocorrelation in the regional datasets, but nothing like enough to worry about in the global datasets.

Interestingly, heteroskedasticity is really of considerably more significance than autocorrelation in the global temperature datasets. For this reason, it is not particularly useful to analyze the 2-sigma uncertainty interval: it keeps changing over time. Stochasticity is also important, given the strong and unpredictable el Nino/la Nina peaks and troughs that lead to frequent and often sharp departures from the least-squares linear-regression trend.

For these and other reasons, Professor Jones at East Anglia, with whom I discussed this some years ago, is on record as having said that the least-squares linear-regression trend is the best way of getting a general idea of what is happening to global temperatures.

Willis says that there is no statistically-significant trend in the most recent half of the UAH dataset. Quite so: but that conclusion reinforces the argument in the head posting a fortiori. The head posting finds a zero trend over the past eight years five months: but there has been no statistically significant trend for something like 22 years. And yet we are all supposed to panic about global warming and blame every transient extreme-weather event on the West’s sins of emission.

It is important not to overthink these things. When I show the slide showing no warming trend for many years, audiences get the point at once. That is why the usual suspects spend so much of their time trying to challenge what is, at root, a very simple exercise, which I began to do many years ago because nobody else was doing it.

The ineluctable fact remains that there has been no global warming to speak of for the best part of a decade, and that the longer-run trend over the entire 44 years of the UAH dataset is well below half the originally-predicted midrange value.

Unfortunately, some of the piece I wrote was truncated without notice or explanation, so it looks as though the scientific discussion on this and related points that are of great interest to readers here will have to take place elsewhere from now on, which is a shame.

“The ineluctable fact remains that there has been no global warming to speak of for the best part of a decade, ”

The rate of warming over the last ten years according to UAH has been 0.16°C / decade. Faster than the overall rate.

That 85% of that period is in the pause might give some indication of why looking at the pause in isolation is misleading.

Bellman should follow Monckton’s Rule: read the head posting before commenting on it. The graph showing the entire UAH trend since December 1978 is also in the head posting. And “the best part of a decade” does not mean “a decade”: it means “most of a decade”.

The arguments against the conclusion that global warming is not occurring at anything like the originally-predicted or currently-predicted midrange decadal rate are becoming feebler and feebler.

Hear, Hear!

lordmoncktonmailcom should also follow that rule. I’m not sure what point he thinks I got wrong. I’ve been pointing out in the UAH comments section that Monckton shows the linear trend over the whole of the UAH series. I keep being told that’s the wrong thing to do, and a meaningless value, but I defend Monckton’s right to do it.

I never claimed that a decade was the same as the best part of a decade. I specifically said it was around 85% of the decade. My point was just to demonstrate how much difference there is depending on how carefully you select your start dates. Start in August 2014 and there is zero trend. Start less than two years earlier and there is a faster rate of warming. This is a good indication of how insignificant the pause is so far. It’s done less than zero to reduce the overall rate of warming.

And this is not an argument against the idea that UAH shows less warming than predicted. It’s simply an argument about how misleading focusing on the length of “the pause” is.

Bellman fails to take account of the fact that the reason for the frequency of these long Pauses is the failure of global temperature change to approach even half the midrange prediction. The previous Pause was 18 years 9 months. This Pause is already 8 years 5 months (or well over 9 years on most other datasets). Cherry-picking, as Bellman here does, to take improper advantage of a short-term el Nino spike, is inappropriate and anti-scientific.

As I keep pointing out, the correlation between length of pause and rate of warming is slim. The length of these so-called pauses depends a lot on the strength of the spikes and troughs along the way.

Take RSS, for example. A much faster rate of warming, but its pause is just as long as UAH’s. Monckton himself says that other data sets now have pauses over 9 years long, but other data sets also show more warming.

As always he accepts that it is cherry picking to use short term el Niño spikes to show a short term accelerated warming trend, but will reject the idea that starting a trend just before a major spike in order to show no warming is also cherry picking.

And in the case of the trend over the last decade, it isn’t the 2016 spike that causes the rate of warming. That happened before the midway point, so it would be expected to reduce the rate of warming, just as all those la Niñas at the end would. No, the reason there is a warming trend over the last ten years but not over the last eight is that those last eight years have all been substantially warmer than the years before them.
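The mechanism described there, a flat recent stretch that sits above the years before it, can be illustrated with a toy series. This is a hypothetical, noise-free example, not UAH data: two years at 0.0 K followed by a step to 0.25 K that stays flat for eight years. The last eight years show exactly zero trend, while a ten-year window that includes the step shows clear warming.

```python
def ols_slope(y):
    """OLS slope of y against the index 0..n-1 (units per month here)."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


# Hypothetical, noise-free series: 2 years at 0.0 K, then a step up to
# 0.25 K that stays flat for the final 8 years (120 months in total)
series = [0.0] * 24 + [0.25] * 96

trend_8yr = ols_slope(series[-96:]) * 120   # K/decade over the last 8 years
trend_10yr = ols_slope(series) * 120        # K/decade over the last 10 years
print(f"last 8 years:  {trend_8yr:+.3f} K/decade")
print(f"last 10 years: {trend_10yr:+.3f} K/decade")
```

In this toy case the ten-year window picks up roughly +0.24 K/decade purely from the step, even though nothing warms within the final eight years.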

Short, long, and cherry-picking is in the eye of the beholder. Climate is considerably longer than 100 years even. I haven’t seen any refinement of the climate zones lately so those folks must not have gotten the message.

Your trend forecasts the past correctly, right? If not, then it must be cherry picked too.

“because those last eight years have all been substantially warmer than the years before them.”

So what? If warming is supposed to follow CO2 in the atmosphere then it obviously isn’t doing so. We have yet to see a comprehensive explanation for why based on physical science.

All you have to offer is that based on your 40 year linear regression the earth is going to turn into a cinder because the warming will never stop.

“We have yet to see a comprehensive explanation for why based on physical science.”

Do you keep unseeing graphs like this? The only explanation needed is that ENSO is doing its usual thing.

“All you have to offer is that based on your 40 year linear regression the earth is going to turn into a cinder because the warming will never stop.”

Not a word of that is anything I claim or believe.

TG said: “So what? If warming is supposed to follow CO2”

It’s not supposed to follow CO2 and only CO2. Remember, the temperature change is given by ΔT = ΔE/(c * m) and the law of conservation of energy says ΔE = Σ[Ein_x, 1, n] – Σ[Eout_x, 1, n]. CO2 is only one of the many Ein_x and Eout_x terms.
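The arithmetic of ΔT = ΔE/(c * m) with ΔE = ΣEin − ΣEout can be sketched in a few lines. This is a toy calculation only, not a climate model: the flux terms and the 1 kg mass are hypothetical, and c ≈ 1005 J/(kg·K) is the approximate specific heat of dry air at constant pressure. The second call shows the point being made: raising one inflow term can be fully offset by a matching rise in an outflow term, leaving ΔT unchanged.

```python
def temperature_change(energy_in, energy_out, heat_capacity, mass):
    """ΔT = ΔE / (c * m), with ΔE = sum of inflows - sum of outflows (J)."""
    delta_e = sum(energy_in) - sum(energy_out)
    return delta_e / (heat_capacity * mass)


# Hypothetical energy terms in joules for 1 kg of air (c ≈ 1005 J/(kg·K))
dT = temperature_change([600.0, 300.0, 105.0], [500.0, 500.0], 1005.0, 1.0)

# Raise one inflow by 10 J and one outflow by 10 J: ΔE, and so ΔT, are unchanged
dT_offset = temperature_change([600.0, 310.0, 105.0], [510.0, 500.0], 1005.0, 1.0)

print(f"dT = {dT:.6f} K, dT with offsetting terms = {dT_offset:.6f} K")
```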

I know…you don’t fully accept the law of conservation of energy. That doesn’t make it any less true.

“From this law follows that it is impossible to construct a device that operates on a cycle and whose sole effect is the transfer of heat from a cooler body to a hotter body.”

https://www.thermal-engineering.org/what-is-second-law-of-thermodynamics-definition/

You need to reconcile your conservation of energy with the second law of thermodynamics. In other words, cold CO2 warming the hotter surface of the earth!

JG said: “You need to reconcile your conservation of energy with the second law of thermodynamics.”

No I don’t. Both the 1LOT and 2LOT are indisputable laws of physics that no one seriously challenges except a handful of contrarians on the WUWT blog.

JG said: “In other words, cold CO2 warming the hotter surface of the earth!”