Sheldon Walker – (agree-to-disagree.com)

The slowdown/pause/hiatus, would probably be only a dim memory, if Alarmists didn’t keep digging up the imaginary corpse, in order to show that it really is dead.

The website called “The Conversation”, recently featured an article called “Global warming ‘hiatus’ is the climate change myth that refuses to die”, by Stephan Lewandowsky and Kevin Cowtan.

It was dated “December 20, 2018”, and the web address is:

https://theconversation.com/global-warming-hiatus-is-the-climate-change-myth-that-refuses-to-die-108524

Both of the authors have also recently co-authored 2 scientific papers, with a large number of other well-known Alarmists (they now write scientific papers in “gangs”, to show how tough they are). The 2 scientific papers claim to “demonstrate convincingly that the slowdown/pause/hiatus wasn’t a real phenomenon”.

It is rare to find a “scientific” article, which features so much “woolly-headed” thinking. And so much misdirection.

It starts badly. Just reading the first 2 paragraphs made me annoyed. They used the word “denier” in the first sentence, and the phrase “science-denying” in the second paragraph.

When did the word “denier”, become a scientific term? What do these arrogant Alarmist jerks, think they are doing? I took a deep breath, and continued reading the article.

The third paragraph really made me sit up, and take notice.

They repeated a common Alarmist lie, about the slowdown, which I talked about in a recent article.

They said, “But, more importantly, these claims use the same kind of misdirection as was used a few years ago about a supposed “pause” in warming lasting from roughly 1998 to 2013.”

They talk about “deniers using misdirection”, and then THEY misdirect people to a false weak slowdown (1998 to 2013). This is part of an Alarmist myth, which claims that the recent slowdown only exists because of the 1998 super El Nino.

In my article, I said:

– The strongest slowdown (the one with the lowest warming rate), went from 2002 to 2012. It had a warming rate of +0.14 degrees Celsius per century. Because it went from 2002 to 2012, it had nothing to do with the 1998 super El Nino.

– The average warming rate from 1970 to 2018, is about +1.8 degrees Celsius per century. So the slowdown from 2002 to 2012, had a warming rate that was less than 8% of the average warming rate.

– If the average warming rate was a car travelling at 100 km/h, then the slowdown was a car that was travelling at less than 8 km/h. Doesn’t that sound like a slowdown?

– The strongest slowdown WHICH INCLUDED THE YEAR 1998 (the one with the lowest warming rate), went from 1998 to 2013. It had a warming rate of +0.96 degrees Celsius per century.

[this is the slowdown interval that Lewandowsky and Cowtan used]

– So the false Alarmist slowdown (1998 to 2013), had a warming rate which was 6.9 times greater than the warming rate of the real slowdown (2002 to 2012).

– If the real slowdown (2002 to 2012) was a car that was travelling at 100 km/h, then the false Alarmist slowdown (1998 to 2013), would be a car that was travelling at 690 km/h.
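The percentages and car speeds in the list above follow directly from the three quoted warming rates. A minimal sketch that reproduces the arithmetic (the variable names are illustrative, and the rates are simply the figures quoted in the list):

```python
# Warming rates quoted in the list above, in degrees Celsius per century.
average_rate = 1.8      # 1970 to 2018
slowdown_rate = 0.14    # 2002 to 2012
rate_1998_2013 = 0.96   # 1998 to 2013

# Slowdown rate as a share of the average rate.
share_of_average = slowdown_rate / average_rate

# How many times faster the 1998 to 2013 interval warmed than 2002 to 2012.
ratio_between_intervals = rate_1998_2013 / slowdown_rate

print(f"2002-2012 rate as share of average: {share_of_average:.1%}")
print(f"Car analogy, average = 100 km/h: {100 * share_of_average:.1f} km/h")
print(f"1998-2013 rate / 2002-2012 rate: {ratio_between_intervals:.1f}")
```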

Perhaps this is one of the reasons why Alarmists don’t believe that there was a slowdown. They are not even looking at the real slowdown.

====================

Lewandowsky and Cowtan seem to be under the impression that, because “the past two years were two of the three hottest on record”, that there could NOT have been a slowdown. Have they never noticed, that when a person takes their foot off the accelerator in a car, the car keeps moving forward (but at a slower rate, i.e. a slowdown)? So the car is still setting records, becoming further from where it started, even though it has slowed down.

This “everyday” observation (about a person taking their foot off the accelerator of a car), appears to be too complicated for them to grasp. Perhaps they are chauffeur driven, everywhere.

====================

Lewandowsky and Cowtan say, “In a nutshell, if you select data based on them being unusual in the first place, then any statistical tests that seemingly confirm their unusual nature give the wrong answer.”

There is a well-known saying, “If it looks like a duck, and walks like a duck, and quacks like a duck, then it probably IS a duck”.

We could rephrase that as, “If it looks like a slowdown, and the warming rate is lower than normal, and the statistical test says that it COULD be a slowdown, then it probably IS a slowdown”.

But Lewandowsky and Cowtan want you to believe that, “If it looks like a slowdown, and the warming rate is lower than normal, and the statistical test says that it COULD be a slowdown, then it DEFINITELY IS NOT A SLOWDOWN”.

Lewandowsky and Cowtan don’t want skeptics to look for slowdowns in places that look like slowdowns. They want skeptics to only look for slowdowns in places that DON’T look like slowdowns.

I would like to suggest that skeptics start looking for slowdowns, on the moon. There isn’t much chance of finding one, but if you do find one, it is almost certainly real.

====================

I am amazed at how Lewandowsky and Cowtan don’t seem to be able to understand simple logic. They give an example, “If someone claims the world hasn’t warmed since 1998 or 2016, ask them why those specific years – why not 1997 or 2014?”

If somebody got run over by a truck in 1998, would you ask them, “Why 1998, why didn’t you get run over by a truck in 1997 or 1999”? If something happens in a particular year, or over a particular interval, then that is a fact. There is little point in questioning why it didn’t happen at a different time.

The reason that Lewandowsky and Cowtan ask, “Why those specific years – why not 1997 or 2014?”, is because they CAN’T PROVE that there wasn’t a slowdown since 1998, and they want to misdirect people, with a stupid question.

====================

Lewandowsky and Cowtan are concerned that skeptics will cherry-pick intervals which “look like” a slowdown, but are not really a slowdown.

I developed a method to analyse date ranges, for slowdowns and speedups, which does NOT cherry-pick date ranges. It does this, by giving equal weight to EVERY possible date range. So when I analyse 1970 to 2018, I calculate about 150,000 linear regressions (one for every possible date range). Then I look at which date ranges have a low warming rate. To make it easier, I colour code all of the results from the 150,000 linear regressions, and plot them on a single graph. I call this graph, a “Global Warming Contour Map”.
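For readers who want to try the idea, here is a minimal sketch of fitting a linear regression to every possible date range. It is not my production code. The function name `trend_grid`, the minimum range length, and the synthetic temperature series are all assumptions made for illustration.

```python
import numpy as np

def trend_grid(temps, min_len=12):
    """Fit an ordinary least squares line to every start/end pair.

    grid[i, j] holds the slope (per time step) fitted to temps[i..j]
    inclusive. Ranges shorter than min_len points are left as NaN.
    """
    temps = np.asarray(temps, dtype=float)
    n = temps.size
    steps = np.arange(n, dtype=float)
    grid = np.full((n, n), np.nan)
    for i in range(n):
        for j in range(i + min_len - 1, n):
            # np.polyfit returns [slope, intercept] for degree 1.
            grid[i, j] = np.polyfit(steps[i:j + 1], temps[i:j + 1], 1)[0]
    return grid

# Hypothetical monthly anomalies: a steady trend plus random noise.
rng = np.random.default_rng(0)
months = 120
synthetic = 0.0015 * np.arange(months) + rng.normal(0.0, 0.1, months)

grid = trend_grid(synthetic, min_len=24)

# Locate the date range with the lowest warming rate.
start, end = np.unravel_index(np.nanargmin(grid), grid.shape)
print(f"Lowest rate: months {start} to {end}, "
      f"{grid[start, end] * 1200:+.2f} degrees C per century")
```

Colour coding the values in `grid` and plotting start against end gives the kind of picture described above. If the data is monthly, 1970 to 2018 is 588 values, which gives 172,578 start/end pairs of two or more points. A minimum range length reduces that number.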

If I find that 2002 to 2012 has a low warming rate, then that means that it had a low warming rate, compared to the thousands and thousands of other date ranges that I checked. Every date range has an equal chance of being a slowdown or a speedup, based on its warming rate. The warming rate is an objective measurement, based on a temperature series.

But wait. I don’t stop there. I check every temperature series that I can find. This includes GISTEMP, NOAA, UAH, RSS, BEST, CLIMDIV, RATPAC (weather balloon data), etc.

But wait. I don’t stop there. I check every type of measurement that I can find. Land and Ocean. Land only. Ocean only. Lower troposphere. Upper troposphere. Stratosphere.

But wait. I don’t stop there. I check every region that I can find. Northern hemisphere. Southern hemisphere. Tropical. Extratropical. Polar.

But wait. I don’t stop there. I check every latitude that I can find. 90N to 48N. 48N to 30N. 30N to 14N. 14N to Equator. Equator to 14S. 14S to 30S. 30S to 48S. 48S to 90S.

When I say that there was a slowdown, that means that I have found evidence of a slowdown, in most of the major temperature series, types of measurements, regions, and latitudes.

I have made literally hundreds of global warming contour maps, for nearly every type of global warming data, that you can imagine. Each one, based on about 150,000 linear regressions.

I have probably done more linear regressions, than any other person in the world. I may have even done more linear regressions, than everybody in the world, put together.

And all of those linear regressions, tell me that there was a slowdown, sometime after the year 2001. It was strongest from 2002 to 2012. You can measure it in different ways, and get slightly different results. But there is overwhelming evidence for the slowdown.

I didn’t cherry-pick 2002 to 2012. This interval leapt out of my computer screen, slapped me on the face, and yelled, “I am a slowdown, stop ignoring me !!!”

Alarmists, are the real “Deniers”. They ignore the evidence that they can’t explain away. They insult the people who try to show the truth. They lie, when other methods don’t work.

It is time for Alarmists to admit the truth. There was a slowdown. It was not enormously long. It was temporary. It is now over. The fact that it existed, didn’t prove that global warming isn’t happening.

My personal belief, is that the slowdown was caused by ocean cycles, like the PDO and AMO. There are climate scientists, who believe the same thing. We need to acknowledge the slowdown, so that we can learn more about climate. Lying about the slowdown, won’t solve global warming. Understanding the slowdown, might help us to understand global warming.

If anybody would like to learn more about my method, and “Global Warming Contour Maps”, then there are lots of them, on my website. I wrote a special article, called “Robot-Train contour maps”, which explains how contour maps work, using simple “train trips”, as an analogy for global warming.

Here is a small selection of articles about slowdowns, and “global warming contour maps”.

– No, I am not obsessed with slowdowns.

– I didn’t choose slowdowns, they chose me.

– Being the “proud father” of “global warming contour maps”, I am always happy to answer questions, and show you pictures, of my clever baby.

[ this article shows how “global warming contour maps” work ]

https://agree-to-disagree.com/robot-train-contour-maps

[ this article shows why Alarmist thinking on slowdowns, is one-dimensional ]

https://agree-to-disagree.com/alarmist-thinking-on-the-slowdown

[ this article investigates the Alarmist myth, that the slowdown was caused by the 1998 super El Nino ]

https://agree-to-disagree.com/was-the-slowdown-caused-by-1998

[ this article shows why the slowdown is so special (No, no, no, no, no! It only LOOKS special. It isn’t really special.) ]

https://agree-to-disagree.com/how-special-was-the-recent-slowdown

[ A guide to the CORRECT way to look for slowdowns. Please try to stay quiet. Slowdowns scare easily, and then they run away and hide. ]

https://agree-to-disagree.com/how-to-look-for-slowdowns

[ this article investigates warming in the USA, using NOAA’s new ClimDiv temperature series ]

https://agree-to-disagree.com/usa-warming

[ this article investigates regional warming, by dividing the earth into 8 equal sized areas, by latitude ]

https://agree-to-disagree.com/new-regional-warming

[ this weather balloon article has global warming contour maps with very nice colours ]

https://agree-to-disagree.com/weather-balloon-data-ratpac

[ this article uses global warming contour maps to compare GISTEMP and UAH ]

https://agree-to-disagree.com/gistemp-and-uah

For the life of me, I can never understand why anybody who valued their reputation would want to attach their name to a paper co-authored by Lewandowsky. There must be some kind of reward we are not being made aware of for the people willing to take such a risk.

Stephan Lewandowsky is the Hank Johnson of climate science.

If it walks like a duck, quacks like a duck, and looks like a guy in a duck suit, it’s probably Lewandowsky.

I wonder if Lewandowsky has psychoanalyzed Cook about his Nazi cross-dressing.

I tried to watch this but softball questions which give the answer required is no way to interview any one much less this guy. Why is it called Cook vs Lewandowsky why isn’t it called Cook sucks up to Lewandowsky?

Lewandowsky represents the 50+% of psychology that is junk science

he’s a chief hack in a field of hacks

The field of psychology has done more harm than good to date, the psychobabble has made the latest generations the most mentally fragile humans in history

Cook vs Lewandowsky – there is the fraud right up front.

Stephan Lewandowsky is not so much a CAGW ‘alarmist’ as an hystericist, imo.

I am still creeped out at his weird facial expressions in some videos he did years ago when I think he was still at the University of Western Australia.

Lysenko relied on the likes of Lewandowsky to enforce his politically correct version of evolution.

You can be a scientist or you can be an activist. You can’t be both.

If you use the term ‘denier’ in a paper purporting to examine the temperature record, you are clearly not someone dispassionately seeking the truth.

Why not? Pauling got both the Nobel Prize in Chemistry for his science and the Nobel Peace Prize for his activism against nuclear weapons.

Pauling wasn’t fighting against the science of nuclear power. He was fighting against the use. Not the same form of activism.

These people are fighting against the use of FFs, and for the destruction of the world’s economy.

Pauling didn’t put his activism into his science.

Wasn’t the analysis done by Lord Monckton a statistical one which is date independent? If so, doesn’t the set of data have to be changed to eliminate the pause his analysis showed?

You had me at Lew… Then I skipped that paragraph and read the rest for fun.

It does look like Sheldon Walker is taking the right approach, of using all the data bases available on a subject, so as to avoid any selection bias.

Not really. All these little 5, 10, 30 year blips are pretty meaningless.

You can’t know that a priori. Even if you suspect it, it’s simple honesty to run them all.

“I developed a method to analyse date ranges, for slowdowns and speedups, which does NOT cherry-pick date ranges. It does this, by giving equal weight to EVERY possible date range. So when I analyse 1970 to 2018, I calculate about 150,000 linear regressions (one for every possible date range). Then I look at which date ranges have a low warming rate. To make it easier, I colour code all of the results from the 150,000 linear regressions, and plot them on a single graph. I call this graph, a “Global Warming Contour Map”.”

What was the greatest rate and when was that?

Sheldon, trouble is you are playing with their loaded dice. The data you used has been Karlized, specifically to erase the pause by Tom Karl in 2015 on the eve of his retirement, no less! When you redo your analysis a few years hence, it will have shrunk further. Apples and blueberries.

Gary,

Except that Karl (2015) used the buoy trick to adjust ocean temperatures. Therefore, it only affects SST and composite land-water temperatures. The land only and atmospheric temperatures should be free of any influence by Karl.

Gary,

winning, when I am using their loaded dice, makes the win so much sweeter.

As I said elsewhere:

If I can show that there is a recent slowdown in the GISTEMP data, then nobody will accuse NASA, Gavin Schmidt, or James Hansen, of adjusting the data to create a slowdown. People may believe that NASA, Gavin Schmidt, or James Hansen, might adjust the data to hide a slowdown, but they wouldn’t adjust the data to create one. Therefore, if I can show that there is a recent slowdown in the GISTEMP data, then you can be fairly certain that it is real.

Even in RSSv4 ….

there is no warming from 1980-1997

And no warming from 2001-2015

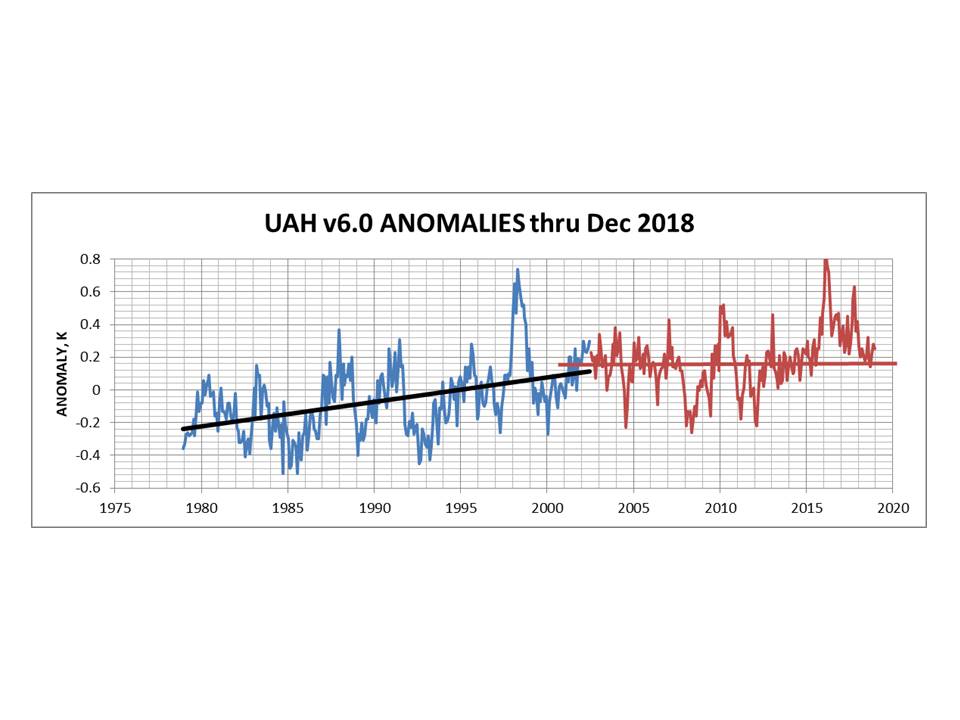

UAH v6.0 data shows end of warming trend in about 2002-2005 temporarily interrupted in about 2013 by el Nino aberration that peaked Jan 2016.

All the sea ice data sites show a similar flat pattern from 2005

The Jason 3 sea level rise data since it has come online is also very flat

With world emission control basically dead and CO2 levels still rising it will be interesting to see what happens in the next few years.

As I have always said I am not concerned if there really is a problem proper hard sciences and engineers will get involved and there are plenty of options to tackle it if it is a problem.

Sheldon, aren’t you conflating “hiatus” or “pause” with “slowdown”? They are different things.

Mat,

a slowdown doesn’t necessarily have a constant warming rate. For part of the time, the warming rate may be zero, or even cooling.

On top of that, the definitions of what a pause is, what a hiatus is, and what a slowdown is, are not clear.

I prefer the term slowdown, with the understanding that there may be short intervals when the warming rate is zero.

What would you call a 10-year interval, where the warming rate was a constant +0.1 degrees Celsius per century? (assume that the average warming rate is +1.8 degrees Celsius per century)

If the pause, hiatus, slowdown, deceleration, break in the action, whatever isn’t real, why were they coming with all those endless explanations for it? What about the missing heat hiding in the ocean. It’s not missing any more?

Why are they arguing for warming? If there is warming without evidence of the positive feedback mechanisms, they disprove their CO2 Armageddon hypothesis. If the world is warming, how is CO2 doing it?

If someone believed that climate change is a natural event, then the idea of a slowdown is just common sense. Sometimes the change will slow down, and sometimes it speeds up – sometimes it even reverses.

BUT…if someone is just plain determined to prove that climate change is caused by CO2 despite any rational argument otherwise, and if it were fairly clear that more and more CO2 is being added to the atmosphere per the measurements that are taken (showing the ppm of CO2 in the atmosphere)…then they would be determined to deny any slowdown as that disproves their belief in the CO2-controls-all scenario.

So, it is actually quite easy for me to believe that people refuse to accept a slowdown, even though it is a perfectly rational and natural event. They are fact-refusers! 🙂

It is always a mistake to closely examine Lew Paper.

Especially a used Lew Paper, Oh wait all Lew paper is used.

100% michael hart top comment in more ways than one.

The alarmists often want to cherry-pick a starting point for their regressions between the 1997-1998 El Niño and the 1999-2000 La Niña. If you start your regression either before or after that pair of ENSO events, there’s no avoiding the “slowdown.” But if you carefully choose a starting point just as ENSO transitioned from El Nino to La Nina, you’ll low-bias the left endpoint, and effectively hide the decline in the rate of warming.

A 2014 analysis by Lawrence Livermore’s Ben Santer et al found that, when the effects of ENSO cycles and volcanic aerosols are accounted for, there’d been no significant global warming since about 1993. Here’s a graph from their paper, which shows that:

http://sealevel.info/Santer_2014-02_fig2_graphC_1_100pct.png

Here’s the paper:

http://dspace.mit.edu/handle/1721.1/89054

They sought to subtract out the effects of ENSO (El Niño / La Niña) and the big Pinatubo (1991) & El Chichón (1982) volcanic eruptions, from measured (satellite) temperature data, to find the underlying temperature trends. In the graph, the black line is averaged CMIP5 models, the blue & red are measured temperatures.

Two things stand out:

1. The models run hot. The CMIP5 models (the black line) show a lot more warming than the satellites. The models show about 0.65°C warming over the 35-year period, and the satellites show only about half that. And,

2. The “pause” in global warming began around 1993. The measured warming is all in the first 14 years (1979-1993). Their graph (with corrections to compensate for both ENSO and volcanic forcings) shows no noticeable warming since then.

Note, too, that although the Santer graph still shows an average of almost 0.1°C/decade of warming, that’s partially because it starts in 1979. The late 1970s were the frigid end of an extended cooling period in the northern hemisphere. Here’s a graph of U.S. temperatures, from a 1999 Hansen/NASA paper:

http://www.sealevel.info/fig1x_1999_highres_fig6_from_paper4_27pct_1979circled.png

Christy & McNider (2017) (or preprint) did a similar exercise, and found a similar rate of warming (0.096°C/decade), and calculated a tropospheric TCR climate sensitivity of +1.10 ±0.26 °C per CO2 doubling, about half the average IPCC AR5 estimate. The paper is quite long, but here’s a readable discussion.

The fact that when volcanic aerosols & ENSO are accounted for the models run hot by about a factor of two is evidence that the IPCC’s estimates of climate sensitivity are high by about a factor of two, and it suggests that a substantial part, perhaps half, of the global warming since the mid-1800s was natural, rather than anthropogenic.

The maximum effect of CO2 since 1950 was 0.18C . It could be lower but that is the maximum.

http://applet-magic.com/cloudblanket.htm

Clouds overwhelm the Downward Infrared Radiation (DWIR) produced by CO2. At night with and without clouds, the temperature difference can be as much as 11C. The amount of warming provided by DWIR from CO2 is negligible but is a real quantity. We give this as the average amount of DWIR due to CO2 and H2O or some other cause of the DWIR. Now we can convert it to a temperature increase and call this Tcdiox. The pyrgeometers assume emission coeff of 1 for CO2. CO2 is NOT a blackbody. Clouds contribute 85% of the DWIR. GHG’s contribute 15%. See the analysis in link. The IR that hits clouds does not get absorbed. Instead it gets reflected. When IR gets absorbed by GHG’s it gets reemitted either on its own or via collisions with N2 and O2. In both cases, the emitted IR is weaker than the absorbed IR. Don’t forget that the IR from reradiated CO2 is emitted in all directions. Therefore a little less than 50% of the absorbed IR by the CO2 gets reemitted downward to the earth surface. Since CO2 is not transitory like clouds or water vapour, it remains well mixed at all times. Therefore since the earth is always giving off IR (probably a maximum at 5 pm everyday), the so called greenhouse effect (not really but the term is always used) is always present and there will always be some backward downward IR from the atmosphere.

When there aren’t clouds, there is still DWIR which causes a slight warming. We have an indication of what this is because of the measured temperature increase of 0.65 from 1950 to 2018. This slight warming is for reasons other than just clouds, therefore it is happening all the time. Therefore in a particular night that has the maximum effect, you have 11 C + Tcdiox. We can put a number to Tcdiox. It may change over the years as CO2 increases in the atmosphere. At the present time with 409 ppm CO2, the global temperature is now 0.65 C higher than it was in 1950, the year when mankind started to put significant amounts of CO2 into the air. So at a maximum Tcdiox = 0.65C. We don’t know the exact cause of Tcdiox whether it is all H2O caused or both H2O and CO2 or the sun or something else but we do know the rate of warming. This analysis will assume that CO2 and H2O are the only possible causes. That assumption will pacify the alarmists because they say there is no other cause worth mentioning. They like to forget about water vapour but in any average local temperature calculation you can’t forget about water vapour unless it is a desert.

A proper calculation of the mean physical temperature of a spherical body requires an explicit integration of the Stefan-Boltzmann equation over the entire planet surface. This means first taking the 4th root of the absorbed solar flux at every point on the planet and then doing the same thing for the outgoing flux at Top of atmosphere from each of these points that you measured from the solar side and subtract each point flux and then turn each point result into a temperature field and then average the resulting temperature field across the entire globe. This gets around the Holder inequality problem when calculating temperatures from fluxes on a global spherical body. However in this analysis we are simply taking averages applied to one local situation because we are not after the exact effect of CO2 but only its maximum effect.
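The point about the Holder inequality can be shown with a few numbers. This is only a minimal sketch, assuming three hypothetical equal-area flux values and a blackbody; it does not attempt the full integration over the planet described above.

```python
import numpy as np

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

# Hypothetical fluxes (W/m^2) at three equal-area points on a sphere.
flux = np.array([100.0, 250.0, 400.0])

# Correct order: convert each flux to a temperature, then average.
temp_each = (flux / SIGMA) ** 0.25
mean_of_temps = temp_each.mean()

# Shortcut: average the fluxes first, then convert to a temperature.
temp_of_mean_flux = (flux.mean() / SIGMA) ** 0.25

print(f"Mean of point temperatures:    {mean_of_temps:.1f} K")
print(f"Temperature of mean flux:      {temp_of_mean_flux:.1f} K")
```

Because the fourth root is a concave function, the temperature of the mean flux is never lower than the mean of the point temperatures, and the gap grows as the fluxes become more uneven.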

In any case Tcdiox represents the real temperature increase over last 68 years. You have to add Tcdiox to the overall temp difference of 11 to get the maximum temperature difference of clouds, H2O and CO2 . So the maximum effect of any temperature changes caused by clouds, water vapour, or CO2 on a cloudy night is 11.65C. We will ignore methane and any other GHG except water vapour.

So from the above URL link clouds represent 85% of the total temperature effect, so clouds have a maximum temperature effect of .85 * 11.65 C = 9.90 C. That leaves 1.75 C for the water vapour and CO2. CO2 will have relatively more of an effect in deserts than it will in wet areas but still can never go beyond this 1.75 C. Since the desert areas are 33% of 30% (land vs oceans) = 10% of earth’s surface, then the CO2 has a maximum effect of 10% of 1.75 + 90% of Twet. We define Twet as the CO2 temperature effect over all the world’s oceans and the non desert areas of land. There is an argument for less IR being radiated from the world’s oceans than from land but we will ignore that for the purpose of maximizing the effect of CO2 to keep the alarmists happy for now. So CO2 has a maximum effect of 0.175 C + (.9 * Twet).

So all we have to do is calculate Twet.

Reflected IR from clouds is not weaker. Water vapour is in the air and in clouds. Even without clouds, water vapour is in the air. No one knows the ratio of the amount of water vapour that has now condensed to water/ice in the clouds compared to the total amount of water vapour/H2O in the atmosphere but the ratio can’t be very large. Even though clouds cover on average 60 % of the lower layers of the troposphere, since the troposphere is approximately 8.14 x 10^18 m^3 in volume, the total cloud volume in relation must be small. Certainly not more than 5%. H2O is a GHG. Water vapour outnumbers CO2 by a factor of 50 to 1 assuming 2% water vapour. So of the original 15% contribution by GHG’s of the DWIR, we have .15 x .02 = 0.003 or 0.3% to account for CO2. Now we have to apply an adjustment factor to account for the fact that some water vapour at any one time is condensed into the clouds. So add 5% onto the 0.003 and we get 0.00315 or 0.315 %. CO2 therefore contributes 0.315 % of the DWIR in non deserts. We will neglect the fact that the IR emitted downward from the CO2 is a little weaker than the IR that is reflected by the clouds. Since, as in the above, a cloudy night can make the temperature 11C warmer than a clear sky night, CO2 or Twet contributes a maximum of 0.00315 * 1.75 C = 0.0055 C.

Therefore, since Twet = 0.0055 C, we have in the above equation CO2 max effect = 0.175 C + (.9 * 0.0055 C ) = ~ 0.18 C. As I said before, this will increase as the level of CO2 increases, but we have had 68 years of heavy fossil fuel burning and this is the absolute maximum of the effect of CO2 on global temperature.

So how would any average global temperature increase by 7C or even 2C, if the maximum temperature warming effect of CO2 today from DWIR is only 0.18 C? This means that the effect of clouds = 85%, the effect of water vapour = 13.5 % and the effect of CO2 = 1.5%.

Sure, if we quadruple the CO2 in the air which at the present rate of increase would take 278 years, we would increase the effect of CO2 (if it is a linear effect) to 4 X 0.18C = 0.72 C Whoopedy doo!!!!!!!!!!!!!!!!!!!!!!!!!!
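For anyone who wants to check the arithmetic in the steps above, here is a minimal sketch that just repeats the numbers as stated, using the same rounded figures (1.75 C and 10% desert). The variable names are illustrative, and the code only reproduces the sums; it says nothing about whether the assumptions behind them hold.

```python
# Inputs as stated in the comment above.
cloudy_night_delta = 11.0   # C, cloudy vs clear night
t_cdiox = 0.65              # C, warming from 1950 to 2018
cloud_share = 0.85          # share of DWIR attributed to clouds
ghg_share = 0.15            # share of DWIR attributed to GHGs
co2_to_h2o = 0.02           # CO2 relative to water vapour (1 in 50)
cloud_adjustment = 1.05     # "add 5%" for water condensed in clouds
desert_fraction = 0.10      # 33% of 30%, rounded to 10% as in the comment

total_effect = cloudy_night_delta + t_cdiox           # 11.65 C
cloud_effect = cloud_share * total_effect             # about 9.90 C
remainder = round(total_effect - cloud_effect, 2)     # 1.75 C for H2O and CO2

co2_dwir_fraction = ghg_share * co2_to_h2o * cloud_adjustment  # 0.00315
t_wet = co2_dwir_fraction * remainder                          # about 0.0055 C

co2_max = desert_fraction * remainder + (1 - desert_fraction) * t_wet
co2_share_of_total = co2_max / total_effect
co2_quadrupled = 4 * round(co2_max, 2)

print(f"Cloud effect:        {cloud_effect:.2f} C")
print(f"Twet:                {t_wet:.4f} C")
print(f"CO2 maximum effect:  {co2_max:.2f} C")
print(f"CO2 share of total:  {co2_share_of_total:.1%}")
print(f"Quadrupled (linear): {co2_quadrupled:.2f} C")
```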

Alan, I didn’t understand all that, and I don’t know what “Tcdiox” is. But it sounds like you’re calculating only the direct effect of CO2, assuming that the effects of water vapor and clouds are independent of it. I doubt that assumption is correct.

I’ve attempted to calculate climate sensitivity by examining the result of the “experiment” which we’ve performed on the Earth’s climate, by raising the atmospheric CO2 level from about 316.91 ppmv in 1960 to about 398.65 in 2014. The strategy is to simply examine what happened to temperatures when the atmospheric CO2 level was raised by 25.79%, and extrapolate from those observations. (I chose 1960-2014 to cover most of the Mauna Loa measurement period, while avoiding distortions from major ENSO spikes.)

https://sealevel.info/sensitivity.html

If 57% of the warming is deemed anthropogenic (which is the “average” guess of American meteorologists, in the latest AMS survey, which is probably pretty realistic), and if we trust the surface temperature measurements (less realistic!), I calculate a TCR sensitivity of around 0.8 °C per doubling of CO2.

If 100% (instead of 57%) of the warming is deemed anthropogenic, I calculate TCR sensitivity of around 1.4°C per doubling.

ECS is usually estimated to be between 1.25× and 1.65× TCR, which would make its plausible range about 1.25×0.8=1.0°C to 1.65×1.4=2.3 °C per doubling of CO2.

One might object that we should expect a bit of delay in effect between CO2 increase and temperature response, so perhaps we should use an earlier pair of dates for the CO2 level. But it turns out not to matter much (except that Mauna Loa measurements only go back to 1958, and before that we have to use less accurate ice core data).

E.g., if we compare CO2 levels from two years earlier, i.e., from 1958 (315.97 ppmv) to 2012 (393.85 ppmv), then CO2 increased 24.65%; using those figures would increase the calculated sensitivity by less than 5%. Or if we compare CO2 levels from five years earlier, i.e., from 1955 (313.7 ppmv) to 2009 (387.43 ppmv), then CO2 increased 23.50%; using those figures would increase the calculated sensitivity by a little less than 10%.
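The percentages above can be checked with a short sketch. It assumes the usual logarithmic relationship, i.e. that sensitivity per doubling is the attributed temperature change divided by the number of CO2 doublings (log base 2 of the concentration ratio). The function names are illustrative, and the temperature change itself is left as an input because no value is quoted in this comment.

```python
import math

def pct_increase(c_start, c_end):
    """Percentage rise in CO2 concentration between two levels (ppmv)."""
    return 100.0 * (c_end / c_start - 1.0)

def doublings(c_start, c_end):
    """Number of CO2 doublings between two levels (log base 2 of the ratio)."""
    return math.log2(c_end / c_start)

def tcr(delta_t, attribution, c_start, c_end):
    """Sensitivity per doubling, assuming a logarithmic CO2 response.

    delta_t is the observed warming (C) and attribution is the fraction
    of it deemed anthropogenic; both are inputs, not values from the text.
    """
    return delta_t * attribution / doublings(c_start, c_end)

# CO2 levels quoted above (ppmv).
base = doublings(316.91, 398.65)        # 1960 to 2014

# Relative rise in calculated sensitivity if earlier CO2 dates are used
# with the same temperature change.
shift_2y = base / doublings(315.97, 393.85) - 1.0   # 1958 to 2012
shift_5y = base / doublings(313.7, 387.43) - 1.0    # 1955 to 2009

# ECS range from the quoted TCR values and the 1.25x to 1.65x multipliers.
ecs_low = 1.25 * 0.8
ecs_high = 1.65 * 1.4

print(f"CO2 rise 1960-2014: {pct_increase(316.91, 398.65):.2f}%")
print(f"Sensitivity shift, 2 years earlier: {shift_2y:.1%}")
print(f"Sensitivity shift, 5 years earlier: {shift_5y:.1%}")
print(f"ECS range: {ecs_low:.1f} to {ecs_high:.1f} C per doubling")
```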

Downwelling IR and longwave IR in general is absorbed by clouds, not reflected. A good emitter of longwave IR or any wavelength in question is a good absorber, not a good reflector of that wavelength.

Also, water vapor is often significant in deserts. The dewpoint is even often above freezing in many hotter deserts.

Dave Burton, I think this is key. If you look at trends of noisy data and that noise just happens to enhance warming, then no analysis is going to give you any idea what is really happening with the climate. This just happens to be the situation over the past 40 years.

1) The period starts with the PDO just finishing 30+ years in negative mode.

2) The AMO and its effects are also in negative territory early in the data and then move to positive.

3) Both major volcanoes happen early in the data which makes it look substantially cooler over 6 of the years. They also happen to coincide with strong El Nino events thus removing their effects.

4) A 2+ year super El Nino happens very near the end of the period.

The only way to understand what is actually happening is to remove the influence of this kind of noise. My attempt to do so ends up with an overall warming trend for UAH 6 of .06 C /decade with no warming at all this century. Hence, the pause/hiatus was real and it is still ongoing.

I think my analysis shows even a lower trend than the Santer et al and Christy/McNider papers because I also get a lot of the AMO effects out with my analysis.

“I am amazed at how Lewandowsky and Cowtan don’t seem to be able to understand simple logic.”

I’m not, as this is the modus operandi of all alarmists who claim to be scientists. If any of them did understand simple logic, climate alarmism would be a distant memory.

The inescapable logic that the climate scientists have locked themselves into is the graph that shows a straight line increase of net CO2 in the atmosphere, no matter whether man emits more or less per year. Looking at the non ice proxies of CO2 from millions of years ago, which are all over the map, then at the last 400000 years of ice proxies of CO2 being almost completely stable at 280, and then at the straight line upward increase since 1958 in the Mauna Loa readings, doesn’t allow any sense to be made of the correlation of CO2 emissions since 1958 with temperature increases. The relentless upward straight line increase of CO2 into the atmosphere doesn’t seem to depend on any variable we can measure. The alarmists are caught in their own assumption of net atmospheric CO2 causing warming. They are in a straightjacket which they can’t get out of. The relentless small increase of net CO2 in the atmosphere doesn’t seem to be explained by anything and it itself doesn’t explain anything.

Alan,

“The relentless small increase of net CO2 in the atmosphere doesn’t seem to be explained by anything and it itself doesn’t explain anything.”

The relentless increase in atmospheric CO2 is explained by burning fossil fuels. While this doesn’t explain any perceptible temperature trend, it does explain how climate alarmism has centered itself around windmill and solar cell idolatry.

The straitjacket is the IPCC, which requires a massive effect from CO2 to justify the anti-west agenda of the UNFCCC. That the IPCC was allowed to maneuver itself to become the arbiter of what is and what is not climate science is so anti-science that all scientists, especially climate scientists, should be embarrassed.

The ice records over the past 400,000 years do not show CO2 being almost completely stable at 280 PPM, but bouncing up and down between 180-200 PPM and 280 PPM with global temperature. The usual argument I hear using ice records of the past 400,000 years against CO2 being a cause of warming is that CO2 lagged global temperature, did not lead global temperature, over the 400,000 year ice core record. (CO2 lagged temperature because back when the amount of carbon in the sum of the atmosphere, hydrosphere and biosphere was largely constant, atmospheric CO2 – along with water vapor – was a positive feedback that reinforced a temperature change started by something else.)

DLK,

The lag is absolutely true, although the idea that positive feedback has anything to do with it is not. I’ve analyzed several ice core proxies and the lag is always present. The Vostok core shows a lag of about 800 years, although the resolution is not very good. The DomeC cores have much finer temporal resolution and show about a 200 year lag.

The same kind of cross-correlation analysis that identifies the delay between temperature and CO2, when applied to auto-correlate the temperature proxy, identifies many periodic influences with periods ranging from decades to millennia.

http://www.palisad.com/co2/docs/co2forcing.ppt

There are lots of notes in the powerpoint slide set that explain the many plots. This slide set was prepared over 10 years ago and may be a little out of date.

My hypothesis on the lag is biology, where it takes centuries for a forest to be established and once it is, CO2 will necessarily rise to support a larger planet wide biomass. As I see it, ice core CO2 levels are a proxy for the amount of biomass that the planet can support at a given temperature.

Temperature dependent absorbing and releasing CO2 from the oceans has a small effect, but it will be nearly instantaneous as compared to the multi-century lags observed in the ice cores.

“The relentless upward straight line increase of CO2 into the atmosphere doesn’t seem to depend on any variable we can measure.”

It primarily depends on sea surface warming via Henry’s Law, following Henry’s experimentally derived absorption of CO2 in water curve.

Except that the effect of Henry’s Law will not be delayed by centuries. This effect is concurrent with temperature change, relative to the sample period of the ice cores. The delayed increase in CO2 is significantly larger and the most likely explanation is biology. Moreover, only the top 100m or so of the oceans vary in temperature as the planet’s temperature changes.

Ice cores and stable 280 ppm. It should be noted that CO2 diffuses in ice. It’s also a tad mobile in the firn as the ice forms and gets buried. In fact, until the pressure gets so high that it forms a clathrate, it remains mobile. So at best any minor peaks and troughs would be smeared out, and at worst it would be useless. Plant stomata are a better proxy for CO2. Add in the poor chronology of ice cores and I’m surprised they bother to bore them out.

One point about the ice cores is that the D2O based temperature proxy has a much finer temporal resolution than the CO2 since it takes a bigger slice of snow/ice to get a CO2 data point. When I do my correlation analysis, I smooth out the temperature to have a resolution close to the CO2 measurements and limit the correlation to the last 100K years when the resolution is relatively good.

The ice cores also have variable length sample steps which need to be carefully normalized.
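For readers who want to try this kind of lag analysis, here is a minimal sketch in plain Python. It is not the analysis behind the slide set above: the two series are synthetic, the 800-year delay is built in by hand, the smoothing step is omitted, and every name (`resample`, `best_lag`, `signal`, and so on) is hypothetical. It only illustrates the two steps described: putting variable-length sample steps onto a uniform grid, then scanning lags for the highest correlation.

```python
import bisect
import math

def interp(x, xs, ys):
    """Linearly interpolate the samples (xs, ys) at x; xs must be increasing."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect.bisect_right(xs, x)  # xs[i-1] <= x < xs[i]
    w = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + w * (ys[i] - ys[i - 1])

def resample(xs, ys, step, n_points):
    """Put an irregularly sampled series on the uniform grid 0, step, 2*step, ..."""
    return [interp(k * step, xs, ys) for k in range(n_points)]

def correlation(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(va * vb)

def best_lag(leader, follower, max_lag):
    """Lag (in grid steps) at which follower correlates best with leader."""
    scores = []
    for lag in range(max_lag + 1):
        a = leader[: len(leader) - lag]
        b = follower[lag:]
        scores.append((correlation(a, b), lag))
    return max(scores)[1]

def signal(t):
    """Synthetic 'temperature' with two made-up periodicities (years)."""
    return math.sin(2 * math.pi * t / 20000) + 0.5 * math.sin(2 * math.pi * t / 7000)

def irregular_times(steps, end):
    """Sample times with a repeating pattern of unequal steps."""
    times, t, k = [], 0.0, 0
    while t <= end:
        times.append(t)
        t += steps[k % len(steps)]
        k += 1
    return times

TRUE_LAG = 800  # years; built into the synthetic data, not a measured value
temp_t = irregular_times([60, 100, 140], 101000)       # finer sampling
co2_t = irregular_times([300, 400, 500, 600], 101000)  # coarser sampling
temp = [signal(t) for t in temp_t]
co2 = [signal(t - TRUE_LAG) for t in co2_t]

STEP = 100  # years per grid step
temp_grid = resample(temp_t, temp, STEP, 1001)
co2_grid = resample(co2_t, co2, STEP, 1001)
lag_steps = best_lag(temp_grid, co2_grid, max_lag=30)
print("estimated lag (years):", lag_steps * STEP)
```

With real cores the result will depend heavily on the dating of each record and on how the finer series is smoothed before correlating, so treat this only as a starting point.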

The cause of the pause and the status of the hiatus always makes me think of Danny Kaye in “The Court Jester”:

“The pellet with the poison’s in the vessel with the pestle;

the chalice from the palace has the brew that is true.”

Great comedy.

“Lying about the slowdown, won’t solve global warming”

Earth has ‘solved’ global warming many times, perhaps it’s time for the ‘hairy apes’ to move on.

“… perhaps it’s time for the ‘hairy apes’ to move on.”

Surely they’re relatively hairless apes?

Or relatively hairless apes that dig-up the ground?

Or maybe ground excavating, hairless apes that like to sail on water?

Now where’s that banana?

There’s more to us than meets the eye,

or even brain

as thoughts will fly

in all directions, near & far,

to try decoding who we are.

Just bodies full of working parts?

Recycled spirits from the past?

Perhaps we might

just only be

a buzzing field of energy.

Experiments from an alien race,

who regularly check the pace

of how we’re getting on

& why their plan just went so wrong.

Children of God; the chosen ones

or hairless apes with big pink bums.

We try to add up all the parts

but somehow cannot do the sums.

You should always start your trend with 1998. When this El Nino year took place it was the “Canary-in-the-coal-mine event” that the alarmists were looking for. It became the seminal year for shoving CAGW down our throats. Over and over we were told that this was the warming that CAGW brought. It only lasted a year, but it was continuous and it was loud.

So use it; just like the alarmists did twenty years ago.

“You should always start your trend with 1998. When this El Nino year took place it was the “Canary-in-the-coal-mine event” that the alarmists were looking for. It became the seminal year for shoving CAGW down our throats.”

And then temperatures cooled after 1998, and it took 18 years for temperatures to again reach the level of 1998, in 2016.

Now, after the high of 2016, temperatures are cooling. If this pattern follows the pattern from 1998, then it will take about 18 years to get back to the temperatures of 2016. Natural variation, natural cycles, up and down, up and down. Not up, up, up, up, up, up, up.

The UAH satellite chart:

http://www.drroyspencer.com/wp-content/uploads/UAH_LT_1979_thru_December_2018_v6.jpg

Yes!

On a more serious note, here is the abstract from England et al. 2014 in Nature Climate Change.

I don’t intend to single out these 10 scientists but it is useful to remember what was being promulgated-

“Recent intensification of wind driven circulation in the Pacific and the ongoing warming hiatus.”

Abstract: “Despite ongoing increases in greenhouse gases, the Earth’s global surface air temperature has remained more or less steady since 2001. A variety of mechanisms has been proposed to account for this slowdown in surface warming. A key component of the global hiatus that has been identified is cool Eastern Pacific sea surface temperature, but it is unclear how the ocean has remained relatively cool in spite of ongoing increases in radiative forcing. Here we show that a pronounced strengthening in Pacific trade winds over the past two decades – unprecedented in observations / reanalysis data and not captured by climate models – is sufficient to account for the cooling of the tropical Pacific and a substantial slowdown in surface warming through increased subsurface ocean heat uptake.

The extra uptake has come about through increased subduction in the Pacific shallow overturning cells, enhancing heat convergence in the equatorial thermocline. At the same time the accelerated trade winds have increased equatorial upwelling in the central and eastern Pacific, lowering sea surface temperature there, which drives further cooling in other regions. The net effect of these anomalous winds is a cooling in the 2012 global average surface air temperature of 0.1 to 0.2 C, which can account for much of the hiatus in surface warming since 2001. This hiatus could persist for much of the present decade if the trade wind trends continue, however rapid warming is expected to resume once the anomalous trade winds abate.”

No comment is necessary.

Sheldon: You didn’t look closely enough. Temperature fell at a rate of -28 K/century (+/-8 K/century)! It happened between 1/98 and 6/99 in the HadCRUT record. (I’m playing the devil’s advocate to get you to think about your data.)

Are you going to tell me that your 120 month period is more meaningful than my 18 month period? How long does a period need to be to be meaningful? Why?

You might want to say that the cooling after the 97/98 El Nino is merely noise and therefore not meaningful. I can say that your Pause is merely noise and therefore not meaningful. How do we know who is right about what is meaningful and what isn’t?

That is where statistics becomes important. To do statistics, you need a model. Since warming is supposed to be proportional to forcing and forcing has been increasing roughly linearly with time, my model is that temperature is increasing linearly with time. All of those other bumps are noise in the data. (In this case the noise could be due to chaotic fluctuations in the currents bringing cold deep water to the surface and burying warm surface water. El Nino involves a slowing of upwelling of cold water off Peru and a slowing of subsidence in the Western Pacific. Its end produced -28 K/century of cooling for 18 months! There is a lot of noise in climate data.) Then I’m going to assume that my data has been displaced upward or downward by noise that has a normal distribution. (I can check both assumptions by looking at the distribution of the noise or the “residuals” when I have completed a linear fit. It turns out that the noise in temperature data is highly auto-correlated, not randomly distributed, and we need to correct for that problem too.)

When you do a linear fit, you get both a trend and a confidence interval for that trend. The reason you need a confidence interval is that the noise might be distributed differently; by definition it is random. In your Pause, there were several strong La Ninas near the end of the period, reducing the trend. If those La Ninas had occurred earlier in the period, the trend would have been higher. If you added random noise 10,000 times to perfectly linear data and then did a least-squares fit, you would get 10,000 different trends. However, roughly 95% of those trends should lie within the 95% confidence interval obtained from one of those data sets. Therefore, when you have a trend, YOU KNOW IT IS LIKELY WRONG, because there is noise in the data. The 95% confidence interval tells you how much the observed noise could have distorted the observed trend SIMPLY BY CHANCE ARRANGEMENT OF THE NOISE IN THE DATA (including those La Ninas).
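The thought experiment above is easy to try. The sketch below is only an illustration under stated assumptions: the trend, the noise level, and the 120-month length are made-up values, the noise is independent (not auto-correlated), and 2,000 repetitions are used instead of 10,000 to keep plain Python fast. It checks the closely related textbook property that the 95% interval computed from each simulated data set contains the true trend about 95% of the time.

```python
import math
import random

def ols_fit(y):
    """Least-squares slope and its standard error for y observed at x = 0, 1, 2, ..."""
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    sxx = sum((i - xbar) ** 2 for i in range(n))
    slope = sum((i - xbar) * (v - ybar) for i, v in enumerate(y)) / sxx
    intercept = ybar - slope * xbar
    sse = sum((v - (intercept + slope * i)) ** 2 for i, v in enumerate(y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, se

random.seed(1)
TRUE_SLOPE = 0.0015  # K per month, hypothetical (about 1.8 K/century)
NOISE_SD = 0.1       # K, hypothetical
N_MONTHS = 120
N_SIMS = 2000
T_CRIT = 1.98        # approximate two-sided 95% t-value for 118 degrees of freedom

slopes = []
covered = 0
for _ in range(N_SIMS):
    # Perfectly linear data plus a fresh arrangement of random noise.
    y = [TRUE_SLOPE * m + random.gauss(0, NOISE_SD) for m in range(N_MONTHS)]
    slope, se = ols_fit(y)
    slopes.append(slope)
    if abs(slope - TRUE_SLOPE) <= T_CRIT * se:
        covered += 1

coverage = covered / N_SIMS
print("lowest and highest fitted trend (K/century):",
      min(slopes) * 1200, max(slopes) * 1200)
print("share of intervals containing the true trend:", coverage)
```

Every run of the loop uses the same underlying trend, so the spread between the lowest and highest fitted trend comes from the noise alone.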

The 95% confidence interval for your 10-year trend is about +/-1 K/century – assuming you correct properly for auto-correlation in the noise. Nick Stokes’ trendviewer does. When you have an El Nino or a La Nina, more than a dozen consecutive data points all lie above or below the trend line, they aren’t randomly distributed! So your 0.14 K/century Pause for 10 years turns out to be a trend ranging from -0.86 to +1.14 K/century. Accounting for the presence of noise, you can’t conclude whether it was warming, cooling or plateauing SIGNIFICANTLY during this period. Scientists don’t draw conclusions when statistics shows a reasonable possibility their conclusions may have been due to chance arrangement of noise in the data. (Would you want to take medicine, when the efficacy seen in clinical trials might have been due to chance? The FDA usually demands two studies with less than 5% probability that the efficacy could be due to chance.)
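For anyone who wants to calculate such an interval themselves, here is a rough sketch of one common adjustment: estimate the lag-1 autocorrelation of the residuals and shrink the number of data points to an "effective" sample size. This is an assumption about a reasonable method, not a description of how the trendviewer works, and the data below are synthetic AR(1) noise with made-up parameters. The function name `trend_with_ar1_interval` is hypothetical, and a normal-approximation factor of 1.96 is used for simplicity.

```python
import math
import random

def trend_with_ar1_interval(y, z=1.96):
    """OLS trend with a naive and an AR(1)-adjusted 95% half-width.

    The adjustment replaces n by n_eff = n * (1 - r1) / (1 + r1), where r1 is
    the lag-1 autocorrelation of the residuals (assumes AR(1)-like noise).
    """
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    sxx = sum((i - xbar) ** 2 for i in range(n))
    slope = sum((i - xbar) * (v - ybar) for i, v in enumerate(y)) / sxx
    intercept = ybar - slope * xbar
    resid = [v - (intercept + slope * i) for i, v in enumerate(y)]
    sse = sum(e * e for e in resid)
    r1 = sum(resid[i] * resid[i - 1] for i in range(1, n)) / sse
    n_eff = n * (1 - r1) / (1 + r1)
    if n_eff <= 2:
        raise ValueError("too few effective data points for an interval")
    naive_half = z * math.sqrt(sse / (n - 2) / sxx)
    adjusted_half = z * math.sqrt(sse / (n_eff - 2) / sxx)
    return slope, naive_half, adjusted_half, r1

# Synthetic monthly data: a linear trend plus AR(1) noise (hypothetical values).
random.seed(2)
PHI = 0.6
noise, e = [], 0.0
for _ in range(120):
    e = PHI * e + random.gauss(0, 0.08)
    noise.append(e)
y = [0.0015 * m + noise[m] for m in range(120)]

slope, naive_half, adjusted_half, r1 = trend_with_ar1_interval(y)
print("trend (K/century):", slope * 1200)
print("naive +/- (K/century):", naive_half * 1200)
print("adjusted +/- (K/century):", adjusted_half * 1200)
print("lag-1 autocorrelation of residuals:", r1)
```

When the residuals are positively autocorrelated, the adjusted interval comes out wider than the naive one, which is the point being made about runs of El Nino and La Nina months.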

Then there is the question of whether the trend has changed. Suppose you have two trends: 0.14 +/- 1.0 K/century and 1.0 +/- 1.0 K/century. These aren’t statistically significantly different from each other. If you compare 0.14 +/- 1.0 K/century and 1.8 +/- 0.4 K/century (the trend for the last 40 years), these will be significantly different statistically. (You use the test for the statistical significance of the difference between two means with confidence intervals.) So you could conclude there was a SLOWDOWN in warming (compared to the trend for the last half-century), but not a PAUSE (a period with no warming).
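The two comparisons above can be worked through in a few lines. This sketch uses the simplest form of the test, which assumes the two trend estimates are independent and combines their 95% half-widths in quadrature. That independence is a simplification here, since the 10-year period sits inside the 40-year one. The function name `trends_differ` is hypothetical.

```python
import math

def trends_differ(trend_a, half_a, trend_b, half_b):
    """Compare two trends given with 95% half-widths.

    Returns (significant, difference, half-width of the difference).
    Assumes the two estimates are independent, which is a simplification.
    """
    diff = abs(trend_a - trend_b)
    half_diff = math.sqrt(half_a ** 2 + half_b ** 2)
    return diff > half_diff, diff, half_diff

# 0.14 +/- 1.0 versus 1.0 +/- 1.0 K/century
case_1 = trends_differ(0.14, 1.0, 1.0, 1.0)
# 0.14 +/- 1.0 versus 1.8 +/- 0.4 K/century
case_2 = trends_differ(0.14, 1.0, 1.8, 0.4)
print(case_1)
print(case_2)
```

In the first case the difference of 0.86 is smaller than the combined half-width of about 1.41, so the trends are not distinguishable. In the second case the difference of 1.66 exceeds the combined half-width of about 1.08.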

So, I’m a regular reader but not commenter. Why don’t we use 1936 as a starting date for measuring temperature anomalies? If I remember my reading correctly, at least in the NH we would have a reduction in temperature if we started in 1936. Nobody seems to mention that, so I’m wondering why.

“Why don’t we use 1936 as a starting date for measuring temperature anomalies?”

Because the Climategate manipulators spent a lot of time and effort erasing the warmth of the 1930’s from the surface temperature record, so if you use the official surface temperature charts there is no warmth showing in the 1930’s/40’s.

The Climategate conspirators were especially concerned with the “1940’s blip” because it put the lie to their claims that CO2 was heating up the atmosphere enough to cause unprecedented warming. You can’t claim unprecedented warming in 2018 if it was just as warm or warmer in the 1930’s. So they made the 1930’s warmth disappear.

Where they didn’t make the 1930’s warmth disappear is in all the numerous unmodified local surface temperature records from around the world which still show the 1930’s as being as warm or warmer than subsequent years. The Climategate conspirators modified many of these charts but they did not erase the original data so we still have that.

We are in a temperature downtrend from the 1930’s, but many scientists, including some skeptics, still give credence to the Hockey Stick lie and act like it is a true representation of reality. And every one of them knows about Climategate and the manipulation of the temperature record but they proceed as if it is accurate. Go figure.

Frank,

I look very closely at the data. I calculate 150,000 linear regressions, for the date range from 1970 to 2018.

I know that you are not serious, about a date range under 10 years. Yours is only about 1.5 years in length.

If you want to play it that way, then I have a truck load of cooling trends for you. Tell me your address, and I will send the truck around.

You said, “Are you going to tell me that your 120 month period is more meaningful than my 18 month period?”

Yes.

You said, “How long does a period need to be to be meaningful?”

It depends on the circumstances. In general, periods of less than 10 years are not meaningful. But if it begins to cool at over 10 degrees Celsius per century, then periods less than 10 years can become meaningful.

You said, “How do we know who is right about what is meaningful and what isn’t?”

It is simple. I am right, and you are wrong. Next question please.

====================

Alarmists DON’T understand statistical significance.

A slowdown is a “negative” event. You can’t get a statistically significant slowdown. That is why a slowdown must be specified as the null hypothesis. You accept the null hypothesis (and the slowdown), when you cannot accept the alternative hypothesis (that there is statistically significant warming).

Alarmists do NOT understand this. Alarmists don’t do hypothesis testing correctly. They don’t even specify a null hypothesis, most of the time. They consider the null hypothesis unnecessary, because they “KNOW” that global warming is happening.

Many Alarmists say that they won’t accept that there was a slowdown, unless it is statistically significant.

That is like saying, that you won’t accept that the apple barrel is empty, until there are a statistically significant number of apples in it.

Do you see how stupid that is?

Temperature data is very noisy. You often can’t get definite proof of something like a slowdown. Before you begin cheering, and claim that there was no slowdown, consider this:

Because the temperature data is very noisy, you can’t get definite proof that there wasn’t a slowdown.

So how do we decide whether there was a slowdown or not?

In the absence of statistical proof, for or against a slowdown, you must be guided by the calculated warming rate (calculated from a linear regression).

If the calculated warming rate is considerably less than the average warming rate, then there was a slowdown. Statistically significant proof, is for data that is not too noisy.

Variables calculated using linear regressions are BLUE. They are the Best Linear Unbiased Estimates. EVEN IN THE PRESENCE OF AUTOCORRELATION.

So the calculated warming rate, is the best estimate of the warming rate, that we can get. In other words, trust the results of a linear regression, because you can’t get a better estimate.

Alarmists want to ignore the results of linear regressions, when they don’t like the result. That is cheating. But of course, Alarmists are experts at cheating. They do it all of the time.

Ask a supposed statistics expert, like Tamino.

====================

I am not an “expert” in statistics, but I do have some experience. As part of a Bachelor of Commerce degree, majoring in Finance and Economics, I did the following papers:

Stage 2 – Introduction to Econometrics ……….. (grade for paper = A+)

Stage 2 – Mathematics for Commerce …………… (grade for paper = A+)

Stage 3 – Applied Econometrics ………………. (grade for paper = A+)

Stage 3 – Optimisation in Operations Research …. (grade for paper = A+)

In case you are not familiar with the term “econometrics”, it is the economics version of statistics.

I was also awarded the following scholarships and prizes:

The Senior Prize in Economics

The Senior Prize in Accounting and Finance

The Stock Exchange Prize

and 2 Scholarships in Finance, from private companies

====================

You might think that I am being boastful, because of my university marks. I am actually a modest person, but I want you to be aware, that I am not stupid.

I will repeat again, I am not an “expert” in statistics. I am well aware of my limitations.

I am not a climate scientist, or even a scientist. But I have a good science education, and I like science, mathematics, and computing. These skills allow me to produce “interesting” data visualisations.

I have another useful skill. Persistence. I don’t think that many people would spend the time that I have, collecting the temperature statistics for over 36,000 locations on the Earth.

My persistence is strengthened, when people call me a “denier”.

Another valuable attribute, is a good sense of humour. A love of Monty Python helps (it allows you to laugh at the absurdity of global warming, rather than get depressed).

Always remember the words from one of Monty Python’s famous songs, and “always look on the bright side of life”.

Also, never forget that the Hitchhiker’s Guide to the Galaxy has the words DON’T PANIC inscribed in large friendly letters on its cover.

Sheldon: I’m not an alarmist (IMO), nor an expert in statistics. I’m just trying to ask a few questions and provide a little information that may clarify our situation. As Steve McIntyre might say, a statistics expert like Tamino might “torture data until it confesses” what he wants it to say. Or cherry-pick it. Isn’t sorting through 150,000 regressions to publicize one of them a form of cherry-picking?

Don’t you find it uncomfortable to arbitrarily conclude that periods of 10-years are meaningful? Do you want the alarmists to also arbitrarily decide what is meaningful and what is not? I don’t! Shouldn’t the DATA ITSELF tell us what is meaningful and what is not?

After all, we are all flawed humans, a species that suffers from confirmation bias – a phenomenon that makes it difficult to assimilate information that conflicts with our deeply held beliefs. By letting your beliefs, rather than the data, determine what is true, you are taking us back to the time when Galileo was imprisoned for contradicting the church – and forward to the time when those who doubt the consensus may be jailed, fired or fined for their beliefs! You can hear the rabble on the left calling for these things, can’t you?

In fact, the 95% confidence interval for a 10-year temperature trend is typically +/-1 K/century. For a trend of 2 K/century, that would be 1-3 K difference by 2100, a massive difference! If +/-1 K/century is all the accuracy you need, publicize the central estimate with the confidence interval. Don’t pretend the ci doesn’t exist or isn’t needed!

Sheldon wrote: “A slowdown is a “negative” event. You can’t get a statistically significant slowdown.”

Respectfully, this is total BS. I showed you that the warming rate for 2001-2012 was statistically significantly different from the overall warming rate for the last 40-years: 0.14 +/- 1.0 K/century vs. 1.8 +/- 0.4 K/century. I didn’t carry out the proper statistical test for the significance of the difference between two means (with confidence intervals), but the conclusion is obvious.

Sheldon wrote: “In the absence of statistical proof, for or against a slowdown, you must be guided by the calculated warming rate (calculated from a linear regression).”

Respectfully, no one wants to be guided by conclusions from biased researchers that are statistically equivalent to flipping a coin or two and looking for heads. That is the kind of “more likely than not” and “likely” BS that the IPCC has been delivering. You can say the central estimate is +0.14 K/century, but you must be honest and say that the 95% confidence interval runs from -0.86 K/century to +1.14 K/century. Politicians and attorneys provide only the facts that agree with their position, but ethical scientists do not. This is supposed to be a science blog.

Sheldon writes: “Statistically significant proof, is for data that is not too noisy.”

Scientists don’t draw conclusions from data that is too noisy. Period. Despite the existence of a central estimate.

Sheldon writes: “I will repeat again, I am not an “expert” in statistics. I am well aware of my limitations.”

Neither am I. We all need to learn new things when we start working in new areas. Use your persistence! I wanted to know for myself if SLR data showed any statistically significant acceleration. So I did the linear regression to t and t^2 and the painful (and large!) correction for autocorrelation in the data and looked to see if the confidence interval for the coefficient for the t^2 term included zero. Or would you want me to simply declare SLR was accelerating because the central estimate for the coefficient for the t^2 term was positive? For temperature trends, I cheat and get confidence intervals from Nick Stokes’ trendviewer. You should too, or calculate them for yourself.

Sheldon wrote: “Many Alarmists say that they won’t accept that there was a slowdown, unless it is statistically significant. That is like saying, that you won’t accept that the apple barrel is empty, until there are a statistically significant number of apples in it.”

There is no significant noise in the data when you are talking about whether an apple barrel is empty!

Frank,

you said, “Isn’t sorting through 150,000 regressions to publicize one of them a form of cherry-picking?”.

Frank, if you lost a diamond, what would you do? Search for it in just a few places, and then give up? Or search for it everywhere that you could, until you found it?

In statistics, unusual events, that probably wouldn’t have occurred by chance, are valued. They are called “statistically significant”. What are the chances of finding one of these rare events, if you don’t search exhaustively?

I do my 150,000 linear regressions, without showing any bias towards a particular date range. It is only AFTER I have done the calculations, that I look at the warming rates. So I don’t calculate the warming rate for only the date ranges that I think might be slowdowns. Every date range has an equal chance of being a slowdown or a speedup. This is the climate equivalent of democracy. Any person can be president, and any date range can be a slowdown (or a speedup).

If I find a slowdown with a certain warming rate, and there are a lot of other slowdowns with a similar warming rate, then that shows that slowdowns with that warming rate, are not unusual. They are probably a feature of the normal climate. If I don’t search exhaustively, and I find a potential slowdown, then I can’t tell how unusual it is. So exhaustive searching is necessary, to establish what the “normal” distribution is.
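An exhaustive search of this kind can be sketched in a few lines of Python. This is not the code behind the Global Warming Contour Maps. The series here is synthetic (a constant made-up trend plus random noise), it covers only 240 months to keep the run short, and the function names are hypothetical. It fits a regression to every start month and every length of at least 120 months, and only afterwards looks at which date range had the lowest warming rate.

```python
import random

def ols_slope(y):
    """Least-squares slope of y against x = 0, 1, 2, ..."""
    n = len(y)
    xbar = (n - 1) / 2
    ybar = sum(y) / n
    sxx = n * (n * n - 1) / 12  # closed form of sum((i - xbar)**2)
    return sum((i - xbar) * (v - ybar) for i, v in enumerate(y)) / sxx

def all_window_trends(series, min_len):
    """Return (start, length, slope) for every window of at least min_len points."""
    n = len(series)
    results = []
    for start in range(n - min_len + 1):
        for length in range(min_len, n - start + 1):
            results.append((start, length, ols_slope(series[start:start + length])))
    return results

random.seed(3)
N_MONTHS = 240  # hypothetical 20-year monthly series
MIN_LEN = 120   # only look at date ranges of 10 years or more
series = [0.0015 * m + random.gauss(0, 0.1) for m in range(N_MONTHS)]

trends = all_window_trends(series, MIN_LEN)
slowest = min(trends, key=lambda r: r[2])
print("date ranges examined:", len(trends))
print("lowest trend: start month", slowest[0], "length", slowest[1],
      "rate (K/century)", slowest[2] * 1200)
```

Keeping the full list of trends, rather than just the minimum, is what allows any one slowdown to be compared with the distribution of all the others.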

But we need to go further than just look at the warming rate. We need to also look at the “length” of each slowdown. The warming rate may not be unusual, but the length might be. See my article called “Alarmist thinking on the recent slowdown is one dimensional”. I show that the recent slowdown is the second longest climate event, since 1970. [a climate event is a period of warming (or cooling), that is considerably above or below, the long-term warming rate].

https://agree-to-disagree.com/alarmist-thinking-on-the-slowdown

You said, “Don’t you find it uncomfortable to arbitrarily conclude that periods of 10-years are meaningful?”

I didn’t “arbitrarily conclude that periods of 10-years are meaningful”. The data did. Look at one of my Global Warming Contour Maps, and you will see why.

Frank, sometimes, when nobody is looking, I have a look at the date ranges under 10 years. They are actually quite interesting. You can see the short-term climate events, like El Ninos and La Ninas.

But I can’t tell people about the short-term climate events, because Alarmists attack me for looking at date ranges which are “too short”, and too variable.

So when I say that date ranges under 10 years are usually not meaningful, part of the reason that I say that, is because Alarmists don’t want me to look at date ranges under 30 years. I defy them, by publicly looking at date ranges down to 10 years, but I can only push the boundaries, so far.

====================

You said, “Sheldon wrote: “A slowdown is a “negative” event. You can’t get a statistically significant slowdown.” Respectfully, this is total BS.”

A statistically significant variable, is one that is statistically significantly different from zero.

By definition, a pause and a slowdown, have a warming rate that is NOT significantly different from zero.

The t-value, calculated for a t-test, divides the variable by the standard error, and compares it to the t-critical value.

When you divide a number near zero by the standard error, you get a number near zero, which is unlikely to be greater than the t-critical value.

So the variable fails the t-test, and is called NOT statistically significant.

I agree that you can statistically test for the “difference” from another warming rate, but the high noise level in temperature data makes even this test unlikely to give a significant result.

Alarmists need to learn, that high noise levels mean that you cannot conclude ANYTHING, with confidence. You cannot prove statistically that there WAS a slowdown, but you also can’t prove statistically that there WASN’T a slowdown.

====================

You said, “Respectfully, no one wants to be guided by conclusions from biased researchers that are statistically equivalent to flipping a coin or two and looking for heads.”

So you want biased Alarmists to flip a coin or two, and tell us that a slowdown didn’t happen.

====================

You said, “Scientists don’t draw conclusions from data that is too noisy. Period. Despite the existence of a central estimate.”

Scientists who are HONEST, don’t draw conclusions from data that is too noisy. They advise people that the data does not support ANY conclusion.

Unfortunately, scientists who are honest, have trouble getting funding.

Alarmist scientists have no trouble getting funding, and are happy to draw conclusions from data that are not justified. Global warming will cause:

animals to shrink

malaria to spread

snow to disappear

drinking water to disappear

deserts to increase (the Sahara desert is “greener” than ever, thanks to CO2)

more rain to fall

less rain to fall

insects to disappear

dangerous bacteria to increase (global warming kills all the good bacteria, that is, the ones that survive the chemicals that humans use, which kill 99.9% of bacteria)

people to die from high temperatures (but it doesn’t save anybody from low temperatures)

fish to lose the ability to navigate

turtles to all become females

oceans to acidify dangerously

sea levels to rise excessively

weather to become extreme

hurricanes to become more frequent

hurricanes to become stronger

food to become less nutritious

the sperm count of male humans (and probably all other animals) to go down

monarch butterflies to die (they can’t find Swan plants any more, because global warming killed them all (or did humans spray them with herbicide?))

It seems odd to me, that everything that global warming does, is BAD. How does global warming know what is BAD? Is it sentient?

According to Alarmists, global warming has absolutely no GOOD consequences. That seems strange, since CO2 is plant food (don’t deny it), and cold kills a lot of people.

Frank, how about some “balance”, when talking about global warming. Skeptics might actually believe some of the things that Alarmists say.

Sheldon wrote: “How about some “balance”, when talking about global warming. Skeptics might actually believe some of the things that Alarmists say.”

The problem is confirmation bias – how does one help someone learn something that conflicts with his deeply-held beliefs? Psychologists say this is nearly impossible for ordinary people, but scientific progress depends on people learning something new (or waiting for those incapable of learning to die off, which was too often the case with relativity and quantum mechanics). You obviously deeply believe the 10-year slowdown – but not halt – in warming is important to the skeptical position opposing the IPCC consensus. I believe it is merely random noise, but am nevertheless skeptical about the IPCC consensus. One of us is likely wrong about the slowdown, but we agree at least partially about the IPCC consensus. One of us may need to learn something new or (less likely) this is one of those issues where there are multiple ways of looking at the problem. I need to learn from you or you need to learn from me, or we both need to learn from each other. The same goes for anyone still interested in our debate. Is “balance” really going to help us overcome our confirmation bias and learn, or is being confronted by uncomfortable information and challenging questions the right approach?

I think we both know that personal attacks won’t help. Bobbing and weaving, creating strawmen, and changing the subject don’t help. Agreeing to disagree doesn’t help. Complaining about the quality of climate science in general won’t help. Proposing a test that will clarify matters could help. Creating some artificial data with auto-correlated noise might help, but you rejected a paper that did that.

Frank,

you might not believe this, but I believe that the recent slowdown is NOT important, in terms of its effect on temperature.

The recent slowdown was not enormously long.

It was temporary.

It is now over.

The fact that it existed, didn’t prove that global warming isn’t happening.

The most important thing about the recent slowdown, is that it shows the lengths that Alarmists will go to, to avoid admitting the truth.

Would it hurt Alarmists to say, “Yes, there might have been a small, temporary slowdown, that doesn’t have any significant long-term implications for global warming”?

But no Alarmist will say this.

Why not?

I am happy to cooperate with honest people.

Sometimes I will even cooperate with people, when I think that they are wrong, but I think that they genuinely believe what they claim.

But I refuse to cooperate with liars. Especially when they spew nastiness at everyone who disagrees with them.

As I said before, Alarmists have shot themselves in both feet. By being nasty, and by calling everyone who disagrees with them, a “Denier”.

Frank,

do you think that it is me, who is suffering from “confirmation bias”, or you?

You have mentioned a paper several times. You said, “Creating some artificial data with auto-correlated noise might help, but you rejected a paper that did that.”

I don’t know what paper you are talking about. Please tell me, and I will have a look at it.

I started playing with generating temperature series with the same autocorrelation structure as real temperature data. But I got interested in something else. One day I might get back to it.

Sheldon wrote: “You have mentioned a paper several times. [Frank] said, “Creating some artificial data with auto-correlated noise might help, but [Sheldon] rejected a paper that did that.””

Sheldon’s post began with a disdainful comment about authors of a paper discussing their work at “The Conversation”. That paper – which created artificial data by adding noise to a linear trend, as I just described in a comment – is found at this link:

http://iopscience.iop.org/article/10.1088/1748-9326/aaf342#erlaaf342fn1

The paper discusses the problem of intentionally or unintentionally “data mining” to find unusual events that might be due to chance. The warming trend for 2001-2012 is much less than for 1978-2017 (the last half century) and the 95% confidence interval for the difference in trends does not include 0. So the slowdown in warming appears to be meaningful – statistically significant.

However, a problem develops when we “data mine” for “unusual events” by picking 2001-2012 from thousands of other possible periods. With annual data and periods at least 10 years long, there are 40 possible starting years. For 11-year periods, 39 periods. Etc. 420 possibilities in all. 60,489 possible periods with monthly data. The width of a 95% confidence interval no longer has any meaning when you can cherry-pick a trend from hundreds or thousands of possibilities. With 420 trends and a 95% confidence interval, you expect about 21 trends to lie outside the confidence interval PURELY BECAUSE OF CHANCE ARRANGEMENT OF THE NOISE IN THE DATA that was used to calculate a central estimate for the trend. By looking through many trends, you can “mine” or “cherry-pick” noise from data – events that have no real significance because they occur by chance.

The long term trend for the last half century is about 0.18 K/decade. In Figure 11c of the paper linked above, the authors created 1000 sets of artificial data 50 years long with the same noise as in observations. (At least, I think this is what they did.) Then they asked what fraction of the time the artificial data contained a period (anywhere in the 50 years) with a lower trend than observed during a particular period. Look above the year 2011. 7% of the artificial data sets contained (at least one) 10-year period with a lower trend than observed for 2002 through 2011, 9% contained an 11-year period with a trend lower than observed for 2001 through 2011, 24% a 12-year period with a lower trend than for 2000 (La Nina ending) through 2011, and 35% a 13-year period with a lower trend than for 1999 (massive La Nina) through 2011. However, only 4% of the artificial data sets contained a 14-year period with a trend lower than observed for 1998 through 2011. In other words, given the typical noise in temperature trends and the long-term temperature trend, 4% of the time one would expect to find a trend as low as was observed for 1998 through 2011 somewhere in 50 years of HadCRUT record. (This was for HadCRUT before it was adjusted for ERSST4.) I think the correct interpretation of a 4% chance of being due to chance is that 1998 through 2011 was an event sufficiently unlikely to be due to chance to call it statistically significant.

However, this wasn’t the case with GISS temperatures, nor with HadCRUT4 after ERSST4, nor with a more stringent test that requires the two trends to be “continuous” – to intersect at the beginning of the second trend.
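A minimal sketch of the kind of Monte Carlo test described above, for anyone who wants to try it. This is not the paper's code. It assumes annual data, a linear trend of 0.018 K/year, and plain white noise with a standard deviation of 0.1 K; the paper matched the noise to observations, which this sketch does not attempt. The function names and all default values are illustrative assumptions.

```python
import random

def ols_slope(y):
    """Least-squares slope of y against 0, 1, 2, ... (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx

def fraction_with_low_window(threshold, window=10, years=50, trend=0.018,
                             noise_sd=0.1, n_series=1000, seed=0):
    """Fraction of artificial series that contain at least one `window`-year
    period, anywhere in the series, with a trend below `threshold` (K/year)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_series):
        # Artificial data: linear trend plus white noise (an assumption).
        series = [trend * t + rng.gauss(0, noise_sd) for t in range(years)]
        lowest = min(ols_slope(series[s:s + window])
                     for s in range(years - window + 1))
        if lowest < threshold:
            hits += 1
    return hits / n_series

# Hypothetical "observed" slowdown trend of 0.0014 K/year, for illustration only.
print(fraction_with_low_window(threshold=0.0014))
```

The fraction depends heavily on the noise model, so the output says nothing about the real temperature record; the point is only the shape of the test: search every window in each artificial series, then count how many series contain a trend as low as the one you picked out.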

Sheldon: Thanks for the reply

When I said: “How long does a period need to be to be meaningful?”

You replied: “It depends on the circumstances. In general, periods of less than 10 years are not meaningful.”

I asked, “How do we know who is right about what is meaningful and what isn’t?”

You replied: “It is simple. I am right, and you are wrong. Next question please.”

That was purely arbitrary. Now you are trying to tell me: “I didn’t “arbitrarily conclude that periods of 10-years are meaningful”. The data did. Look at one of my Global Warming Contour Maps, and you will see why.”

The data determines the 95% confidence interval for the central estimate of the trend provided by linear regression. That determines what meaning you should apply to the trend.

You, however, have moved into “data mining” – looking for unusual events in noisy data. The problem is that you will always find unusual events in noisy data. About 5% of events in random noise will lie outside of 2 standard deviations of the mean. About 0.3% will lie outside of 3 STDs. If you look at 10-year warming trends starting with every month, you will have about 1000 ten-year trends from a century. You will expect a few by chance to fall outside 3 STD. You will think these three events are “significant” even though you expect about 3 such events to occur purely by chance. If you work with annual data, then there will be about 100 trends. A few of those will fall outside 2 STDs and you may think those are “significant”. However, one expects about 5 such events to occur in any set of random data!
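As a rough check on those percentages, the sketch below computes the two-sided tail probability of a normal distribution and the number of “significant” trends expected purely by chance. It assumes independent, normally distributed values, which overlapping trends are not, so the counts are ballpark figures only. The function name `two_sided_tail` is made up for this illustration.

```python
import math

def two_sided_tail(k):
    """P(|Z| > k) for a standard normal variable Z."""
    return math.erfc(k / math.sqrt(2))

for n_trends, k in [(100, 2), (1000, 3)]:
    p = two_sided_tail(k)
    print(f"{n_trends} trends, beyond {k} STDs: p = {p:.4f}, "
          f"expected by chance = {n_trends * p:.1f}")
```

Under these assumptions, 100 annual trends give about 5 beyond 2 STDs, and 1000 monthly trends give about 3 beyond 3 STDs.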

Now look at your graph where you have added lines for 1, 2 and 3 STDs. Is there really anything present in that data that one wouldn’t expect to find in 100 or 1000 data points composed of random noise with a high degree of auto-correlation? The problem is made more complex by autocorrelation. Large deviations are found next to each other, not randomly scattered.

If you go looking through enough data that is purely random noise, you will always find something that looks significant. The paper you complained about generated lots of pseudo-data with autocorrelated noise similar to that found in temperature records (Monte Carlo calculations) and looked to see how often events of a certain type occurred merely by chance.

Data mining can be useful under carefully controlled conditions. One can divide the data in half, look for relationships in one-half of the data, and then test the second half to determine if the relationship is found in the second half.

Consider a human clinical trial with a new drug that fails to show statistically significant efficacy. All companies look through the data for sub-populations of patients who responded well to a drug. Maybe women or less sick patients or younger patients or patients with a particular genetic marker responded much better than the whole treatment group. If you have 10 possible subpopulations of the treatment group and one of those subpopulations shows statistically significant efficacy (p less than 0.05), the p score is meaningless, because you had 10 chances to achieve that p score. If you have a 95% chance of failing and you try ten different times, you now have a 40% (1 - 0.95^10) chance of one success. If you ask the FDA for permission to sell to that subpopulation, the FDA will tell you to run another expensive clinical trial using only the patient population you expect to benefit.
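The arithmetic behind the 40% figure, as a small sketch. It assumes the tests are independent, which real subpopulations (or overlapping trend periods) usually are not. The function name is hypothetical.

```python
def chance_of_any_false_positive(n_tests, alpha=0.05):
    """Probability that at least one of n_tests independent tests comes out
    'significant' at level alpha when there is no real effect at all."""
    return 1.0 - (1.0 - alpha) ** n_tests

for n in (1, 10, 420):
    print(n, round(chance_of_any_false_positive(n), 4))
```

With one test the chance is 5%; with ten it is about 40%; with 420 candidate trends, at least one false positive is a near certainty.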

Frank,

Did you look at a global warming contour map, to see why periods of less than 10 years are not as meaningful?

If you didn’t look, then you will not see the reason why.

Notice how a global warming contour map, shows you periods of many different lengths. The period lengths start at 1 or 2 months, and go up to the maximum possible for the data.

You can see that I am not hiding anything from you. I show you everything. It is up to you to decide which parts you want to use. You have to justify what you use.

====================

Frank, you talk about data mining, as if it was a bad thing. They find diamonds, by mining.

Who needs confidence intervals, when you know what all of the data is !!!

People use confidence intervals, because they don’t know what most of the data is.

I know exactly what all of the data is. I can give you an answer that is EXACT (the confidence interval is zero).

====================

I showed people that even though the warming rate is not unusual, a slowdown could be unusual because of its length. The recent slowdown was the second longest climate event, since 1970. A climate event being a period that has a warming rate considerably higher or lower than the long-term warming rate.

Note that I said “considerably higher or lower”, NOT “more than 3 standard deviations from the long-term warming rate”.

Does the length of a slowdown mean nothing to you?

====================

You said, “If you go looking through enough data that is purely random noise, you will always find something that looks significant.”

If you find what looks like a meaningful event, how do you know whether it was caused by random, or non-random factors? Do you let your prejudices decide?

Are YOU needlessly throwing away real events, because you claim that they are random? That is very naughty. I can’t remember whether that is a type 1 error, or a type 2 error, but you are guilty of one of the worst crimes in statistics.

What you are doing, is judging data by what you expect, or want, to find. I don’t do that. I use all of the data.

Sheldon writes: “Who needs confidence intervals, when you know what all of the data is !!! People use confidence intervals, because they don’t know what most of the data is.”

This is wrong. Think of statistics (confidence intervals, hypothesis testing, etc.) as “getting meaning from data”.

Let’s ASSUME that temperature should be rising linearly with time because forcing is rising roughly linearly with time. However, we also know that El Ninos and La Ninas introduce noise in temperature data (because they are purely internal slowdowns and speed ups in mixing with cold deep water in the ocean). So we want to separate a linear temperature signal from this noise. Use the trend to calculate a “linear temperature” for each year, subtract it from the raw data and look at the “residuals” that we believe are noise. IF THEY ARE NOISE, THEN THESE DISPLACEMENTS FROM THE LINEAR TREND COULD HAVE HAPPENED DURING ANY YEAR.

For simplicity, let’s imagine that we are working with annual temperature data, in which case our residuals will turn out to be randomly distributed. (Monthly residuals are not random and show auto-correlation.) Write down the value of your 12 residuals for 2001-2012 on separate pieces of paper, put them in a hat, select them randomly and add them to the “linear temperature” for each year. The 2010 residual (a La Nina year) might be added by chance to the 2001 linear temperature, and the 2005 residual (an El Nino year) might be added by chance to the 2012 linear temperature. Now do another least-squares fit to this artificial data. Since we – by chance – started with a La Nina year and ended in an El Nino year, the trend for this artificial data will – by chance – be higher. Now repeat this process 100 times and get 100 artificial trends – all of which are perfectly consistent with the idea that the temperature is rising linearly with time and contains noise – exactly the same noise as found in your raw data.
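The hat exercise can be sketched in a few lines. The 12 “annual temperatures” below are SYNTHETIC stand-ins generated from an assumed trend and assumed noise, not real observations, and the names (`ols_fit`, `shuffled_slopes`) are made up for the illustration.

```python
import random

def ols_fit(y):
    """Return (slope, intercept) of the least-squares line through
    the points (0, y[0]), (1, y[1]), ..."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

rng = random.Random(42)
# SYNTHETIC stand-in for 12 annual anomalies (2001-2012); not real data.
temps = [0.40 + 0.018 * t + rng.gauss(0, 0.1) for t in range(12)]

slope, intercept = ols_fit(temps)
linear = [intercept + slope * t for t in range(12)]        # "linear temperature"
residuals = [obs - fit for obs, fit in zip(temps, linear)]  # believed to be noise

shuffled_slopes = []
for _ in range(100):
    hat = residuals[:]      # put the 12 residuals in the hat
    rng.shuffle(hat)        # draw them in random order
    artificial = [fit + r for fit, r in zip(linear, hat)]
    shuffled_slopes.append(ols_fit(artificial)[0])

print(f"fitted trend: {slope:.4f} K/yr")
print(f"shuffled trends: {min(shuffled_slopes):.4f} to {max(shuffled_slopes):.4f} K/yr")
```

Every one of the 100 artificial trends comes from the same linear signal and the same noise; only the order of the noise differs.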

Now let’s be more sophisticated and create 10,000 samples of noise for each of the 12 years with the same standard deviation (and mean = 0) as our residuals and repeat this process. All resulting trends will be consistent with the idea that there is a linear trend in the data with noise. About 9,500 of those trends will lie within the 95% confidence interval for the trend, 250 will be higher (by chance) and 250 will be lower (by chance). Now, in addition to the CENTRAL ESTIMATE for the trend we have some idea of how much NOISE MIGHT HAVE DISTORTED that central estimate. MEANING FROM DATA. Knowledge of the potential for such distortion will be critical, for example, if the alarmists try to tell us that the rate of warming has increased since the 2000s! We can use the formula for the difference of two means with confidence intervals and calculate the confidence interval for the difference between the trends. If that confidence interval includes zero, the difference isn’t statistically significant.
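A minimal sketch of the difference-of-trends check mentioned above. It uses the usual normal approximation and assumes the two trend estimates are independent, which is not strictly true when one period sits inside the other. The function names and the example numbers are hypothetical, chosen only to show both outcomes.

```python
import math

def trend_difference_ci(b1, se1, b2, se2, z=1.96):
    """Approximate 95% confidence interval for b1 - b2, given two trend
    estimates and their standard errors (assumed independent)."""
    diff = b1 - b2
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)
    return diff - z * se_diff, diff + z * se_diff

def is_significant(ci):
    """True when the interval excludes zero."""
    lo, hi = ci
    return not (lo <= 0.0 <= hi)

# Hypothetical trends in K/decade: long-term trend vs. a short, noisy period.
wide = trend_difference_ci(0.18, 0.02, 0.05, 0.08)
narrow = trend_difference_ci(0.18, 0.02, 0.05, 0.02)
print(wide, is_significant(wide))
print(narrow, is_significant(narrow))
```

The same central estimates give opposite verdicts depending on the standard error of the short-period trend, which is why a trend reported without its confidence interval tells you so little.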

By not including confidence intervals along with the trends in your post, you are denying your readers access to critical information about the trends you report only as central estimates.