Guest Essay by Kip Hansen (with graphic data supplied by William Ward)

One of the advantages of publishing essays here at WUWT is that one’s essays get read by an enormous number of people — many of them professionals in science and engineering.

In the comment section of my most recent essay concerning GAST (Global Average Surface Temperature) anomalies (and why it is a method for Climate Science to trick itself), it was brought up [again] that what Climate Science uses for the Daily Average temperature from any weather station is not, as we would have thought, the average of the temperatures recorded for the day (all recorded temperatures added together and divided by the number of measurements). It is, instead, the Daily Maximum Temperature (Tmax) plus the Daily Minimum Temperature (Tmin), divided by two. It can be written out as (Tmax + Tmin)/2.

Anyone versed in the various forms of averages will recognize the latter as actually the median of Tmax and Tmin: the midpoint between the two. This is obviously also equal to the mean of the two. But since we are dealing only with a Daily Max and a Daily Min from a record that, in modern times, contains many measurements per day, when we align all the measurements by magnitude and find the midpoint between the largest and the smallest, we are finding a median. (We do this, however, by ignoring all the other measurements altogether and finding the median of a two-number set consisting of only Tmax and Tmin.)
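The procedure just described can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical `daily_tavg` helper and a made-up set of twelve readings for one day; it is not taken from any GHCN or NCEI code.

```python
import statistics

def daily_tavg(readings):
    """Daily Tavg as described above: order the readings, keep only the extremes,
    and take the midpoint of that two-number set."""
    ordered = sorted(readings)             # 1. align all measurements by magnitude
    extremes = [ordered[0], ordered[-1]]   # 2. keep only Tmin and Tmax
    return statistics.median(extremes)     # 3. midpoint of the two-number set

# Hypothetical readings for one day, degrees F (illustration only).
day = [51.0, 52.0, 54.0, 58.0, 62.0, 66.0, 70.0, 93.0, 70.0, 64.0, 58.0, 54.0]

tavg = daily_tavg(day)
# For a two-number set, the median and the mean coincide: both equal (Tmax + Tmin)/2.
assert tavg == statistics.mean([max(day), min(day)]) == (max(day) + min(day)) / 2
print(tavg)                             # 72.0
print(round(statistics.mean(day), 2))  # 62.67, the mean of all twelve readings
```

Changing any of the ten interior readings (so long as they stay between 51 and 93) leaves `daily_tavg` unchanged, which is the point made above: the other measurements play no part.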

This certainly is no secret and is the result of the historical fact that temperature records in the somewhat distant past, before the advent of automated weather stations, were kept using Min-Max recording thermometers — something like this one:

Each day at an approximately set time, the meteorologist would go out to her Stevenson screen weather station, open it up, and look in at a thermometer similar to this. She would record the Minimum and Maximum temperatures shown by the markers (often she would also record the temperature at the time of observation) and then press the reset button (seen in the middle), which would return the Min/Max markers to the tops of the mercury columns on either side. The motion of the mercury columns over the next 24 hours would move the markers to their respective new Minimums and Maximums for that period.
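The behaviour of the markers can be modelled as a tiny data structure. This is a hypothetical `MinMaxThermometer` class written only to illustrate the routine described above, not a model of any particular instrument. Note that each record covers the 24 hours between observations, not the calendar day.

```python
class MinMaxThermometer:
    """Hypothetical model of a min-max recording thermometer: two markers are pushed
    outward by the mercury and stay at the extremes until the reset button is pressed."""

    def __init__(self, current):
        self.reset(current)

    def reset(self, current):
        # Reset returns both markers to the current mercury level.
        self.current = current
        self.min_marker = current
        self.max_marker = current

    def update(self, temperature):
        # The mercury moves; each marker can only be pushed further out, never back.
        self.current = temperature
        self.min_marker = min(self.min_marker, temperature)
        self.max_marker = max(self.max_marker, temperature)

    def observe(self):
        """Record (Tmin, Tmax, temperature at observation), then reset, as the observer would."""
        record = (self.min_marker, self.max_marker, self.current)
        self.reset(self.current)
        return record


thermometer = MinMaxThermometer(60.0)
for t in [58.0, 55.0, 70.0, 82.0, 75.0, 64.0]:  # made-up readings between two observations
    thermometer.update(t)
tmin, tmax, tobs = thermometer.observe()
print(tmin, tmax, tobs)  # 55.0 82.0 64.0
```

Everything between the two extremes is lost at each reset, which is why Tmax and Tmin are all the historical record contains.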

With only these measurements recorded, the closest to a Daily Average temperature that could be computed was the median of the two. To be able to compare modern temperatures to past temperatures, it has been necessary to use the same method to compute Daily Averages today, even though we have recorded measurements from automated weather stations every six minutes.

Nick Stokes discussed (in this linked essay) the use and problems of Min-Max thermometers as they relate to the Time of Observation Adjustments. In that same essay, he writes:

Every now and then a post like this appears, in which someone discovers that the measure of daily temperature commonly used (Tmax+Tmin)/2 is not exactly what you’d get from integrating the temperature over time. It’s not. But so what? They are both just measures, and you can estimate trends with them.

And Nick Stokes is absolutely correct — one can take any time series of anything, find all sorts of averages — means, medians, modes — and find their trends over different periods of time.

In this case, we have to ask the question: What Are They Really Counting? I find myself having to refer back to this essay over and over again when writing about modern science research which seems to have somehow lost an important thread of true science — that we must take extreme care with defining what we are researching — what measurements of what property of what physical thing will tell us what we want to know?

Stokes maintains that any measured temperature average is apparently just as good as any other: that the median (Tmax+Tmin)/2 is just as useful to Climate Science as a true average of more frequent temperature measurements, such as today’s six-minute records. What he has missed is that if science is to be exact and correct, it must first define its goals and metrics, exactly and carefully.

So, we have raised at least three questions:

1. What are we trying to measure with temperature records? What do we hope the calculations of monthly and annual means and their trends, and the trends of their anomalies [anomalies here always refers to anomalies from some climatic mean], will tell us?

2. What does (Tmax+Tmin)/2 really measure? Is it quantitatively different from averaging all the six-minute (or hourly) temperatures for the day? Are the two qualitatively different?

3. Does the currently-in-use (Tmax+Tmin)/2 method fulfill the purposes of any of the answers to question #1?

I will take a turn at answering these questions, and readers can suggest their answers in comments.

What are we trying to measure?

The answers to question #1 depend on who you are or what field of science you are practicing.

Meteorologists measure temperature because it is one of the key metrics of their field. Their job is to know past temperatures and use them to predict future temperatures on a short-term basis: tomorrow’s Hi and Lo, weekend weather conditions, and seasonal predictions useful for agriculture. Temperature predictions of extremes are an important part of their job: freezing on roadways and airport runways, frost and freeze warnings to agriculture, high temperatures that can affect human health, and a raft of other important meteorological forecasts.

Climatologists are concerned with long-term averages of ever-changing weather conditions for regions, continents and the planet as a whole. Climatologists concern themselves with the long-range averages that allow them to divide various regions into the 21 Koppen Climate Classifications and watch for changes within those regions. Wikipedia explains why this field of study is difficult:

“Climate research is made difficult by the large scale, long time periods, and complex processes which govern climate. Climate is governed by physical laws which can be expressed as differential equations. These equations are coupled and nonlinear, so that approximate solutions are obtained by using numerical methods to create global climate models. Climate is sometimes modeled as a stochastic [random] process but this is generally accepted as an approximation to processes that are otherwise too complicated to analyze.” [emphasis mine — kh]

The temperatures of the oceans and the various levels of the atmosphere, and the differences between regions and atmospheric levels, are, along with a long list of other factors, drivers of weather, and the long-term differences in temperature are thus of interest to climatology. The momentary equilibrium state of the planet with regard to incoming and outgoing energy from the Sun is currently one of the focuses of climatology, and temperatures are part of that study.

Anthropogenic Global Warming scientists (IPCC scientists) are concerned with proving that human emissions of CO2 are causing the Earth climate system to retain increasing amounts of incoming energy from the Sun and calculate global temperatures and their changes in support of that objective. Thus, AGW scientists focus on regional and global temperature trends and the trends of temperature anomalies and other climatic factors that might support their position.

What do we hope the calculations of monthly and annual means and their trends will tell us?

Meteorologists are interested in temperature changes for their predictions, and use “means” of past temperatures to set an expected range, so they can know and predict when things are out of these normally expected ranges. Temperature differences between localities and regions drive weather, which makes these records important for their craft. Multi-year comparisons help them to make useful predictions for agriculturalists.

Climatologists want to know how the longer-term picture is changing — Is this region generally warming up, cooling off, getting more or less rain? — all of these looked at in decadal or 30-year time periods. They need trends for this. [Note: not silly auto-generated ‘trend lines’ on graphs that depend on start-and-end points — they wish to discover real changes of conditions over time.]

AGW scientists need to be able to show that the Earth is getting warmer and use temperature trends — regional and global, absolute and anomalies — in the effort to prove the AGW hypothesis that the Earth climate system is retaining more energy from the Sun due to increasing CO2 in the atmosphere.

What does (Tmax+Tmin)/2 really measure?

(Tmax+Tmin)/2, meteorology’s daily Tavg, is the median of the Daily High (Tmax) and the Daily Low (Tmin) (please see the link if you are unsure why it is the median and not the mean). The Monthly TAVG, which is the basic input value for all of the subsequent regional, statewide, national, continental, and global calculations of average temperature (2-meter air over land), is in fact the median of the Monthly Mean of the Daily Maxes and the Monthly Mean of the Daily Mins. It is calculated by adding all the daily Tmaxs for the month at the station and finding their mean (arithmetical average), adding all the daily Tmins for the month and finding their mean, and then finding the median of those two values. (This definition is not easy to find; I had to go to original GHCN records and email NCEI Customer Support for clarification.)
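The monthly calculation just described can be sketched as follows, assuming hypothetical helper names and a made-up three-day "month" (a real month has 28 to 31 days, and this sketch does not attempt to reproduce how GHCN handles missing days). When every day has both a Tmax and a Tmin, the midpoint of the two monthly means is algebraically identical to the mean of the daily (Tmax+Tmin)/2 values, so the monthly TAVG inherits exactly the character of the daily midpoint.

```python
from statistics import fmean

def monthly_tavg(tmaxs, tmins):
    """Monthly TAVG as described above: the midpoint of the monthly mean of daily
    Tmax values and the monthly mean of daily Tmin values."""
    if not tmaxs or len(tmaxs) != len(tmins):
        raise ValueError("expected one Tmax and one Tmin for every day")
    return (fmean(tmaxs) + fmean(tmins)) / 2

def mean_of_daily_tavgs(tmaxs, tmins):
    """The alternative route: average the daily (Tmax + Tmin)/2 values."""
    return fmean((hi + lo) / 2 for hi, lo in zip(tmaxs, tmins))

# Three made-up days, degrees F (illustration only).
tmaxs = [80.0, 90.0, 85.0]
tmins = [60.0, 62.0, 58.0]
print(monthly_tavg(tmaxs, tmins))         # 72.5
print(mean_of_daily_tavgs(tmaxs, tmins))  # 72.5
```

Neither route ever sees a reading other than the daily extremes.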

So now that we know what the number called monthly TAVG is made of, we can take a stab at what it is a measure of.

Is it a measure of the average of temperatures for the month? Clearly not. That would be calculated by adding up the Tavg for each day and dividing by the number of days in the month. Doing that might very well give us a number surprisingly close to the recorded monthly TAVG. Unfortunately, we have already noted that the daily Tavgs are not the average temperatures for their days but are the medians of the daily Tmaxs and Tmins.

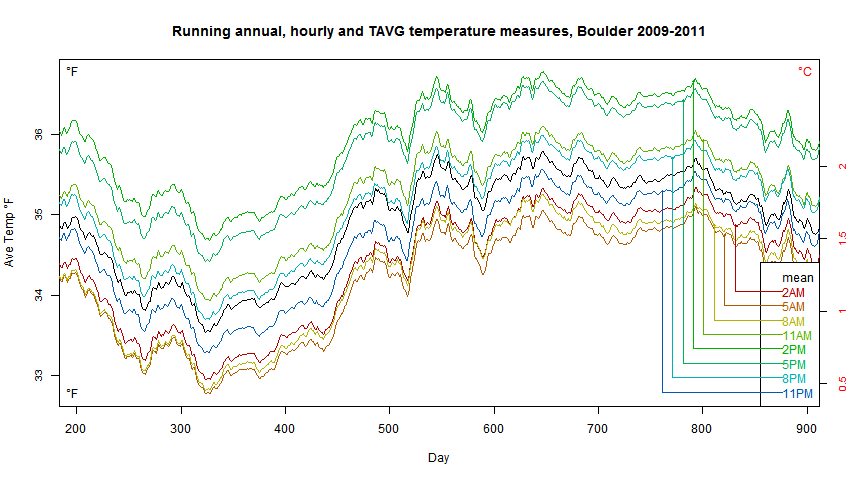

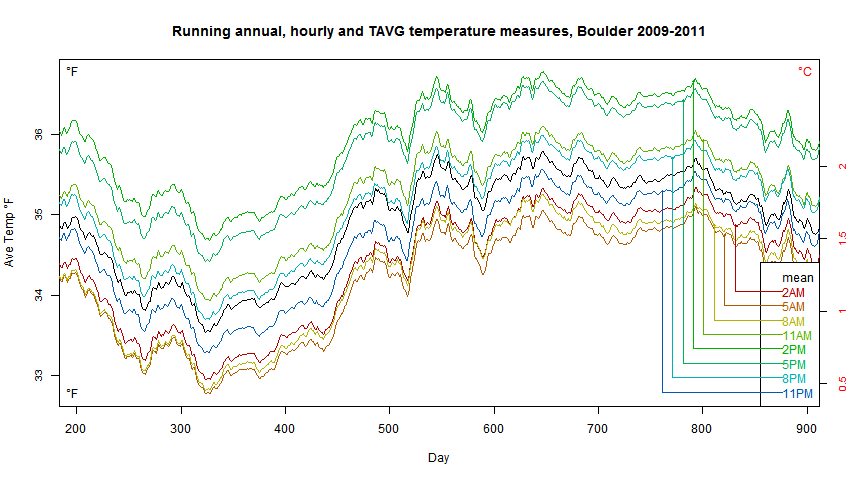

The featured image of this essay illustrates the problem; here it is blown up:

This illustration is from an article defining Means and Medians. We see that if the purple traces were the temperature during a day, the median would be identical for wildly different temperature profiles, but the true average, the mean, would be very different. [Note: the right-hand edge of the graph is cut off, but both traces end at the same point on the right, the equivalent of a Hi for the day.] If the profile is fairly close to a “normal distribution”, the Median and the Mean are close together; if not, they are quite different.
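The same effect can be reproduced numerically. This is a sketch using two invented 24-value hourly profiles (assumptions, not station data) that share Tmin = 51 and Tmax = 93: one a cool day with a single hot hour, the other a day that stays above 88 from late morning on.

```python
from statistics import fmean

def midrange(readings):
    """(Tmax + Tmin)/2 for a day's readings."""
    return (max(readings) + min(readings)) / 2

# Made-up hourly profiles, degrees F, 24 values each; both run from 51 to 93.
brief_spike = [51.0] * 6 + [60.0] * 10 + [93.0] + [60.0] * 7                         # one hot hour
long_warm = [51.0, 60.0, 70.0, 80.0, 88.0, 90.0] + [91.0] * 10 + [93.0] + [88.0] * 7  # hot most of the day

for name, profile in [("brief_spike", brief_spike), ("long_warm", long_warm)]:
    print(name, "midpoint:", midrange(profile), "mean:", round(fmean(profile), 2))
```

Both profiles report the same midpoint of 72.0, while their hourly means differ by more than 25 degrees.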

Is it quantitatively different from averaging all the six-minute (or hourly) temperatures for the day? Are the two qualitatively different?

We need to return to the Daily Tavgs to find our answer. What changes Daily Tavg? Any change in either the daily Tmax or the Tmin. If we have a daily Tavg of 72, can we know the Tmax and Tmin? No, we cannot. The Tavg for the day tells us very little about the high temperature for the day or the low temperature for the day. Tavg does not tell us much about how temperatures evolved and changed during the day.

Tmax 73, Tmin 71 = Tavg 72

Tmax 93, Tmin 51 = Tavg 72

Tmax 103, Tmin 41 = Tavg 72

The first day would be a mild day and a very warm night; the second, a hot day and an average sort of night. The second could have been a cloudy, warmish day with one hour of bright direct sunshine raising the high to a momentary 93, or a bright clear day that warmed to 93 by 11 am and stayed above 90 until sunset, with only a short period of 51-degree temps in the very early morning. Our third example, typical of the high desert in the American Southwest, is a very hot day with a cold night. (I have personally experienced 90+ degree days and frost the following night.) (Tmax+Tmin)/2 tells us only the median between two extremes of temperature, each of which could have lasted for hours or merely for minutes.
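The three example days can be checked in a few lines. This sketch simply takes the (Tmax, Tmin) pairs listed above and adds the diurnal temperature range (Tmax minus Tmin), which is exactly the information that Tavg throws away.

```python
# The three example days from the text, as (Tmax, Tmin) pairs in degrees F.
examples = [(73, 71), (93, 51), (103, 41)]

for tmax, tmin in examples:
    tavg = (tmax + tmin) / 2   # the conventional daily Tavg
    dtr = tmax - tmin          # diurnal temperature range, discarded by Tavg
    print(f"Tmax {tmax}, Tmin {tmin}: Tavg {tavg}, range {dtr}")
```

All three print a Tavg of 72.0, with ranges of 2, 42 and 62 degrees. Reporting Tavg together with the range would recover both extremes (Tmax = Tavg + range/2, Tmin = Tavg − range/2); Tavg on its own cannot.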

Daily Tavg, the median of Tmax and Tmin, does not tell us about the “heat content” or the temperature profile of the day. If daily Tmaxs, Tmins, and Tavgs don’t tell us the temperature profile and “heat content” of their days, then the Monthly TAVG, being the median of the means of the Tmaxs and Tmins, has the same fault and cannot tell us either.

Maybe a graph will help illuminate this problem.

This graph shows the difference between daily Tavg (by the (Tmax+Tmin)/2 method) and the true mean of daily temperatures, Tmean. We see that there are days when the difference is three or more degrees, with an eyeball average of a degree or so and rather a lot of days in the one-to-two-degree range. We could produce a similar graph for Monthly TAVG and real monthly means, computed either from the actual daily means or by averaging (finding the mean of) all temperature records for the month.

The currently-in-use Tavg and TAVG (daily and monthly) are not the same as actual means of the temperatures during the day or the month. They are both quantitatively and qualitatively different: they tell us different things.

So, YES, the data are qualitatively different and quantitatively different.

Does the currently-in-use (Tmax+Tmin)/2 method fulfill the purposes of any of the answers to question #1?

Let’s check by field of study:

Meteorologists measure temperature because it is one of the key metrics of their field. The weather guys were happy with temperatures measured to the nearest full degree. One degree one way or the other was no big deal (except near freezing). Average weather can also withstand an uncertainty of a degree or two. So, my opinion would be that (Tmax+Tmin)/2 is adequate for the weatherman; it is fit for purpose in regard to the weather and weather prediction. For weather, the weatherperson knows the temperature will vary naturally by a degree or two across his area of concern, so a prediction of “with highs in the mid-70s” is as precise as he needs to be.

Climatologists are concerned with long-term, ever-changing weather conditions for regions, continents and the planet as a whole. Climatologists know that past weather metrics have been less than precise. They accept that (Tmax+Tmin)/2 is not a measure of the energy in the climate system, but it gives them an idea of temperatures on a station, regional, and continental basis, close enough to judge changing climates. One degree up or down in the average summer or winter temperature for a region is probably not a climatically important change; it is just annual or multi-annual weather. For the most part, climatologists know that only very recent temperature records get anywhere near one- or two-degree precision. (See my essay about Alaska for why this matters.)

Anthropogenic Global Warming scientists (IPCC scientists) are concerned with proving that human emissions of CO2 are causing the Earth climate system to retain increasing amounts of incoming energy from the Sun. Here is where the quantitative and qualitative differences between (Tmax+Tmin)/2 and a true Daily/Monthly mean temperature come into play.

There are those who will (correctly) argue that temperature averages (certainly the metric called GAST) are not accurate indicators of energy retention in the climate system. But before we can approach that question, we have to have correct quantitative and qualitative measures of temperature reflecting changing heat energy at weather stations. (Tmax+Tmin)/2 does not tell us whether we have had a hot day and a cool night, or a cool day and a warmish night. Temperature is an intensive property (of air and water, in this case) and is not properly subject to addition, subtraction, and averaging in the normal sense. The temperature of an air sample (such as one taken at an automated weather station, e.g. an ASOS site) is related to, but not the same as, the energy (E) in the air at that location, and is related to, but not the same as, the energy in the local climate system. Using (Tmax+Tmin)/2, and TMAX and TMIN (monthly mean values), to arrive at monthly TAVG does not even accurately reflect what the temperatures were. It therefore will not, and cannot, inform us properly (accurately and precisely) about the energy in the locally measured climate system and, when combined across regions and continents, cannot inform us properly (accurately and precisely) about the energy in the regional, continental, or global climate system: not quantitatively in absolute terms, and not in the form of changes, trends, or trends of anomalies.
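A small worked example of why an intensive property does not average the way an extensive one does. It assumes two parcels of dry air with the same constant specific heat and no phase change (simplifying assumptions added for illustration, not part of the essay). When the parcels mix, energy is conserved, so the mixture reaches the mass-weighted average temperature, not the plain average of the two thermometer readings.

```python
def mixed_temperature(masses_kg, temps_c):
    """Equilibrium temperature after mixing parcels with equal, constant specific heat
    and no phase change: T = sum(m_i * T_i) / sum(m_i)."""
    total_mass = sum(masses_kg)
    return sum(m * t for m, t in zip(masses_kg, temps_c)) / total_mass

masses = [1.0, 3.0]   # hypothetical parcel masses, kg
temps = [10.0, 30.0]  # hypothetical parcel temperatures, deg C

print(sum(temps) / len(temps))           # 20.0, the plain average of the two readings
print(mixed_temperature(masses, temps))  # 25.0, the temperature the combined air reaches
```

Humidity makes the gap between temperature and energy wider still, since moist air carries latent heat that a thermometer does not register; the sketch ignores that.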

AGW science is about energy retention in the climate system, and the currently used mathematical methods, all the way down to the daily average level, are not fit for the purpose of determining changing energy retention by the climate system to any degree of quantitative or qualitative accuracy or precision, despite the fact that, for much of the climate historical record, they are all we have.

Weathermen and women are probably well enough served by the flawed metric as being “close enough for weather prediction”. Hurricane prediction is probably happy with temperatures within a degree or two – as long as all are comparable.

Even climate scientists, those disinterested in the Climate Wars, are happy to settle for temperatures within a degree or so, as there are a large number of other factors, most of which are more important than “average temperature”, that combine to make up the climate of any region (see again the Koppen Climate Classifications).

Only AGW activists insist that the minuscule changes wrested from the long-term climate record of the wrong metrics are truly significant for the world climate.

Bottom Line:

The methods currently used to determine both Global Temperature and Global Temperature Anomalies rely on a metric, used for historical reasons, that is unfit in many ways for the purpose of determining with accuracy or precision whether or not the Earth climate system is warming due to additional energy from the Sun being retained in the Earth’s climate system and is unfit in many ways for the purpose of determining the size of any such change and, possibly, not even fit for determining the sign of that change. The current method does not properly measure a physical property that would allow that determination.

# # # # #

Author’s Comment Policy:

The basis of this essay is much simpler than it seems. The measurements used to form GAST(anomaly) and GAST(absolute) (specifically (Tmax+Tmin)/2, whether daily or monthly) are not fit for the purpose of determining those global metrics as they are presented to the world by AGW activist scientists. They are most often used to indicate that the climate system is retaining more energy and thus warming up, but the tiny changes seen in this unfit metric over climatically significant periods of time cannot tell us that, since they do not actually measure the average temperature, even as experienced at a single weather station. The additional uncertainty from this factor increases the overall uncertainty about GAST and its anomalies to the point that the uncertainty exceeds the entire increase since the mid-20th century. This uncertainty is not eliminated through repeated smoothing and averaging of either absolute values or their anomalies.

I urge readers to reject the ever-present assertion that “if we just keep averaging averages, sooner or later the variation — whether error, uncertainty, or even just plain bad data — becomes so small as not to matter anymore”. That way leads to scientific madness.
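The statistical core of this warning can be illustrated with a short simulation. It assumes a made-up true temperature, a systematic bias shared by every reading, and independent random noise (none of these numbers come from any real station). Averaging many readings shrinks the random part roughly as 1/√n, but the shared bias passes straight through to the average.

```python
import random
from statistics import fmean

random.seed(1)  # fixed seed so the sketch is repeatable

true_value = 15.0  # hypothetical true temperature, deg C
bias = 0.5         # hypothetical systematic error shared by every reading
noise_sd = 1.0     # hypothetical independent random error per reading

readings = [true_value + bias + random.gauss(0.0, noise_sd) for _ in range(100_000)]
error_of_mean = fmean(readings) - true_value
print(round(error_of_mean, 2))  # close to the 0.5 bias, not to zero
```

With 100,000 readings the random part of the error is down to a few thousandths of a degree, yet the average is still off by about half a degree.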

There would be different arguments if we actually had an accurate and precise average of temperatures from weather stations. Many would still not agree that the temperature record alone indicates a change in retention of solar energy in the climate system. Energy entering the system is not auto-magically turned into sensible heat in the air at 2-meters above the ground, or in the skin temperature of the oceans. Changes in sensible heat in the air measured at 2-meters and as ocean skin temperature do not necessarily equate to increase or decrease of retained energy in the Earth’s climate system.

There will be objections to the conclusions of this essay — but the facts are what they are. Some will interpret the facts differently, place different importance values on different facts and draw different conclusions. That’s science.

# # # # #

For climate, this is not very relevant because we only have long records from many stations of Tmax and Tmin. So, we are forced to use what we have. You can use hourlies over the last 40 to 50+ years or so if you want, but then the time of observation isn’t exactly on the hour, either…it varies. In fact, as a former NWS weather observer, I can tell you it’s generally not even at the reported time (e.g. 1753 GMT) because of observer laziness. And ALL results will change if the sensor height is only 1 meter rather than 2 meters. This stuff is splitting hairs. There are bigger issues to deal with (UHI) which are being ignored.

Roy ==> It is only relevant if we are concerned with whether or not the tiny change (less than 1 degree) is fit for the purpose of judging AGW validity and its effects.

I really liked this essay. It’s a factor I have often thought about, but this is a good discussion of a very real issue.

Anyone versed in the various forms of averages will know that this is a tie breaker solution where the MEAN is SUBSTITUTED for the median due to lack of data !!

When you have only two data points, talking of the median is meaningless. As anyone versed in the various forms of averages will recognize. Apparently the author is not so versed.

Greg,

You said, “…the MEAN is SUBSTITUTED for the median due to lack of data !!” it seems to me that you stated it backwards. Two points are selected from a much larger (potential) sample population (that could be used to calculate a useful mean), and used in lieu of the many points that could be used to construct a PDF. One goes from a collection of a large number of values, to two values, that are then further reduced to the mid-point of those two values!

Greg ==> In modern station records we have temperatures recorded every five minutes. Yet the same (Tmax+Tmin)/2 method is still used at GHCN to find Tavg. See my reply to RCS.

I fully agree, that taking (Tmax+Tmin)/2 is not the correct approach to modern records.

Kip, please go back to your Khan Academy definitions. They say a Median is: “Median: The middle number; found by ordering all data points and picking out the one in the middle (or if there are two middle numbers, taking the mean of those two numbers).” And, they are correct.

Please notice that even your source says that, if there are two middle numbers, then take the Mean of those two. For a two number set (Tmax & Tmin), it may be a difference without a distinction, but the proper naming of the process – either way – comes down to taking the Mean of the two numbers involved. You may call it what you like, but using Median when Mean is proper may devalue your essay in the eyes of many readers.

Bob ==> I’ll let you explain to the class what we should call the procedure actually followed:

1. Order all data points in the set

2. Throw out all but the highest and the lowest.

3. Find the middle point between the two remaining data points.

PS: To call it the MEAN of the original set is obviously fallacious and easily misunderstood.

(PS: If you had read previous comment or the essay itself carefully, you would already know that this is my opinion.)

I think you were very clear that the data are:

1) fit for the purposes of the meteorologist; 2) fit for the purposes of the climatologist; but, 3) not fit for the purposes of those splitting hairs of fractions of a degree. Roy is correct that it’s all we have for long-term data, but that doesn’t mean it’s fit for purpose. Similar argument: getting significant figures correct.

Clarification: The sentence “Roy is correct that it’s all we have for long-term data, but that doesn’t mean it’s fit for purpose” should have said “fit for purpose for analyzing change in fractions of a degree.” My apologies.

Also, Kip, I see some discussion about median/not a median farther down and you were very clear also in stating you were talking about a median of a two-point dataset. People don’t read anymore.

tiny change?

hardly.

the LIA was only about 1.5c cooler than today. is it safe to go back to that cool time.

you sure?

show your work, if you answer

Mosher ==> All the absolute GAST values for the current century. We are currently still less than a single degree above the average cited for the start of AGW (the 1951-1980 average): 0.8K. And that’s with the wonky metrics….

We are certainly glad the LIA ended and things have warmed up.

Using Tmax NOAA says USA:

August 2011 is warmest August. only .04F warmer than 1936.

July 1936 is warmest July (followed by 1934 and 1901/2012 tied.)

It isn’t hotter than the 1930s.

I seem to have truncated a sentence in my comment above. The first sentence should read:

“All the absolute GAST values for the current century fall within the uncertainty range for GASTabsolute, and thus cannot properly be said to be larger or smaller than one another.”

Bruce ==> Quite right — current temperatures are about the same as the Dust Bowl days, but without the horrible drought in the midwest.

Says the man who never shows his work! And if you want us to answer a question, make it answerable – what is “safe”? How was it “cool”?

And you have to tell us then why you believe temperatures 20-50 years ago were optimum, since that is what your question implies.

Oh and show your working for your assumption.

All errors matter.

Having a large list of reasons why the numbers aren’t fit for the purpose they are being used for helps to drive home the point.

It’s true that the historical records are not what we would like. That doesn’t mean we should stay with the current system of measurement. We can create a better measurement system now even though we are stuck with Tmin and Tmax for the historical record. After all in 30 years the measurements we make now will be part of the history. Why not fix the issue since we have the capability?

You can get the data from the recently implemented USCRN on a 5-minute basis if you want, but the fact is, if you want to compare today to the same date in 1885, Tmax and Tmin are all you have to work with. Only Mosher or Stokes would claim you can “infill” or “reconstruct” a daily temperature profile from 133 years ago.

huh.

you can in fact estimate the second by second temperatures using tmin and tmax and an empirically derived diurnal cycle.
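A minimal sketch of the kind of estimate described, assuming a hypothetical normalized 24-hour shape in place of an empirically derived diurnal cycle (the shape values below are invented, and real methods may be more elaborate). The shape is scaled so that it runs from Tmin to Tmax.

```python
from statistics import fmean

def reconstruct_hourly(tmin, tmax, shape):
    """Scale a normalized diurnal shape (0 at the coolest hour, 1 at the warmest)
    so that it runs from tmin to tmax."""
    if min(shape) != 0.0 or max(shape) != 1.0:
        raise ValueError("shape must be normalized to span exactly 0..1")
    return [tmin + s * (tmax - tmin) for s in shape]

# Hypothetical 24-hour shape, standing in for one derived from real station data.
shape = [0.05, 0.03, 0.02, 0.01, 0.0, 0.02, 0.08, 0.2, 0.35, 0.5, 0.65, 0.78,
         0.88, 0.95, 1.0, 0.97, 0.9, 0.78, 0.62, 0.48, 0.36, 0.25, 0.16, 0.1]

hourly = reconstruct_hourly(51.0, 93.0, shape)
print(round(fmean(hourly), 2))  # estimated daily mean for Tmin 51, Tmax 93
```

Because every day reconstructed this way has the same shape, its estimated daily mean is always Tmin + mean(shape) × (Tmax − Tmin): a fixed weighting of the same two numbers, adding no information beyond Tmax and Tmin.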

wont be especially accurate. isnt needed however

Mosher ==> Gonna quote you on that “wont be especially accurate. isnt needed however”

Let’s hope that all those every-six-minute data points have been archived as raw data and that no one has homogenized them. Then, if future researchers want to look at the actual average temperature, the data will be available.

Retired ==> Well, they are, in fact, 5-minute data points (my error). There is no telling if they have been adjusted and at what point. GHCN carries data but doesn’t guarantee that its “raw” version has not been adjusted before arrival at GHCN.

tmax = apples

tmin = oranges.

you don’t average them to get anything meaningful

you do 2 separate charts.

this is an example: not so well performed, but properly done

Roy,

“In fact, as a former NWS weather observer, I can tell you it’s generally not even at the reported time (e.g. 1753 GMT) because of observer laziness.”

Actually, DeGaetano made a neat study of observing times. In the US, the observers filled out form B-91 with not only the min/max, but also the temperature at time of observation. Analysing the diurnal pattern, you can deduce the average time of obs and compare it with the stated time. It compared pretty well.

Tosh.

As, proven by your following statement; “It compared pretty well”.

It is neither “pretty” nor “well”.

It is sloppy reasoning.

Here is the plot of trend of observations, using both stated times and times inferred from the temperature recorded. Judge for yourself. I think the match is good.

“…stated times and times inferred…”

What is missing is the actual time standard for comparison.

Even aside from that, your plot shows “Percent of HCN stations with morning and afternoon observation times.”

Nick, “It compared pretty well.”

I’d say that it was a bloody horrible result.

Also, it does not answer the criticism put.

Geoff

so lets add “observer laziness”

to the long list of reasons

not to trust surface temperatures,

not to mention more infilled grids

than those with actual data.

It’s too bad so many surface “thermometers”

do automatic readings now because if they were

still the old fashioned glass thermometers,

the global warmunists would be trying

to get more “global warming”

by finding shorter and shorter people

to read those thermometers —

preferably dwarfs and midgets,

to get a sharper upward vision angle

= more global warming!

But seriously now:

we should all give Dr. Spencer three cheers

for being one of the last honest climate scientists left,

for providing unbiased estimates

of surface temperatures

that are real science,

not junk science

over-adjusted,

excessively infilled nonsense,

with a pro-warming bias,

and a political agenda.

It is a HUGE conflict of interest

that the same government bureaucrats

who make warming predictions,

also own the surface temperature

actuals, and can adjust them

at will, to make their predictions

come true.

Richard Greene

I’m not a climate scientist, nor a scientist, nor even well educated, but I am a keen observer of human activities.

I have posted time and again that the historic records of temperatures are wholly unreliable as human intervention was vital and as you pertinently pointed out, the height of the one reading the thermometer is but one interesting variable.

When the global significance of temperature data wasn’t quite as closely scrutinised by the media, the public and everyone with a profitable interest in climate change itself, record keeping would have been a hit and miss affair.

The scientist with the responsibility for reporting local temperature measurements over the last 100 years or so couldn’t possibly have been in attendance for every hourly measurement 24/7/365 so the job would have been delegated.

The delegated individual was probably not as conscientious as the reporting scientist, so he probably despatched the tea boy, who went out in the snow/wind/rain/heat for a quick ciggy in a sheltered place and recorded the temperature as it was the day before.

The guy who chucked the bucket overboard to sample water temperature wouldn’t have been the officer on watch, it would have been the cabin boy, when conditions allowed, with readings taken on a heaving deck, in the wind/snow/rain/heat when he would rather be having his tot of rum.

All this, of course, in addition to the other work they had to do.

Then there’s the condition of the screens themselves. Were they painted with the correct material? Highly doubtful, as even localised paint makers had their own versions of white paint. Indeed, were they maintained at all, and if so, where’s the evidence of that?

We know that modern satellite data isn’t perfect. We know that modern land based temperature data is riddled with UHI distortions. And we know that modern ARGO buoy data isn’t conforming to the party line so is largely sidelined.

So why do we imagine that data from anything before satellite and digital data recordings should be accurate to within less than 1˚C? Instead, those calculations are, as far as I can gather, relied upon to within the margin of error reserved for contemporary digital, 24/7/365 measuring devices.

Do I see allowances for this made in historical data? Well, from a layman’s perspective, no I don’t, but perhaps allowances have been made, I just don’t see them in any error bars which should be enormous from 100 years ago.

Also, when we talk about temperature change, we talk about anomalies, right? So the actual composition of the metric is less important. The factors that make the metric less accurate, like UHI and other poor siting, would seem more important.

Spalding Craft,

If you haven’t already, you might check out this page, some of which talks about siting issues:

https://www.ncdc.noaa.gov/monitoring-references/faq/temperature-monitoring.php

Roy,

At the National Centers for Environmental Information, ncei.noaa.gov, we are informed that the August 2018 temperature across global land and ocean surfaces was 1.33 degrees Fahrenheit above the 20th Century Average of 60.1 degrees Fahrenheit.

August is said to mark the 404th consecutive month above the 20th Century Average.

Does it matter if the stated 20th Century Average is wrong or only roughly correct?

What if, in terms of this post, the 20th Century Average is not exactly known but lies between 59.6 degrees Fahrenheit and 60.6 degrees Fahrenheit or some wider margin?

Is it just that we have a smaller or larger anomaly going forward, or are other issues in play?

+10

I’m obviously not Roy, but I might take a stab at this question.

It seems to me that since anomalies aren’t based on the global average, but on the difference between absolute temperatures and the local baseline average, it would depend on whether the local averages are differently biased relative to each other.

The period taken as the baseline is largely irrelevant to the calculation of anomalies – whether the average is 15 or 16 C won’t make a difference to the slope or scatter (variance) of the trend as a whole. This would in turn imply that if all the averages (for whatever baseline period) for all the sites were off by 1.5 C due to error, the anomalies would still follow the same trend.

However, if only some of the baselines were off by 1.5 C, that could make a difference, as it would add to the error (variance) in the anomalies.

Actual baseline, station A: 15.5

Monthly mean, station A: 18

Anomaly: 2.5

Actual baseline, station B: 17

Error in baseline, station B: -1.5

Apparent baseline: 15.5

Monthly mean, station B: 19.5

Apparent anomaly: 4.0 (true anomaly: 19.5 - 17 = 2.5)

So, in reality the anomalies are the same for these two stations, and the apparent baseline is the same (due to error in measurement), but in station B the error in the baseline gets transferred to the anomaly. This would result in artificial scatter of the data, and a higher “error” (variance due to actual and measurement-error differences) calculated in the trend. If the baseline were off mainly in sites that only have older (or newer) measurements (i.e., the record is only for part of the time period), it could also change the trend of the line. If, on the other hand, all baselines had the same error, that error would be transferred to the anomalies across the board, and the slope of the trend would be the same (just offset by 1.5 degrees).

So, baseline measurements do matter, not only to anomalies going forward but those in the past.

Does that make sense? Hopefully others will chime in.
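The station A/B reasoning above can be checked numerically. What follows is a minimal sketch, not any agency's method: the station series, the 0.1-per-step warming, and the helper names `ols_slope`, `anomalies` and `network_mean` are all hypothetical. It shows that a constant 1.5-degree baseline error offsets a station's anomalies without changing that station's own slope, while the same error in a station that only reports late in the record tilts the combined trend.

```python
# Hypothetical sketch: how a baseline error propagates into anomalies and trends.
# All numbers are illustrative, loosely following the station A / B example.

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def anomalies(monthly_means, baseline):
    """Anomaly = monthly mean minus the station's baseline average."""
    return [m - baseline for m in monthly_means]


def network_mean(series_list):
    """Average the stations reporting at each step (None = no report)."""
    out = []
    for values in zip(*series_list):
        present = [v for v in values if v is not None]
        out.append(sum(present) / len(present))
    return out


years = [0, 1, 2, 3, 4]
station_a = [18.0, 18.1, 18.2, 18.3, 18.4]  # true baseline 15.5
station_b = [19.5, 19.6, 19.7, 19.8, 19.9]  # true baseline 17.0

true_a = anomalies(station_a, 15.5)
true_b = anomalies(station_b, 17.0)
apparent_b = anomalies(station_b, 17.0 - 1.5)  # baseline recorded 1.5 too low

# A constant baseline error offsets every anomaly by the same amount...
offsets = [a - t for a, t in zip(apparent_b, true_b)]
print("offsets:", [round(o, 3) for o in offsets])
# ...but leaves that station's own trend unchanged.
print("slope B true vs apparent:",
      round(ols_slope(years, true_b), 3), round(ols_slope(years, apparent_b), 3))

# Now suppose station B only reports in the last two years.
partial_true = [None, None, None] + true_b[3:]
partial_biased = [None, None, None] + apparent_b[3:]
print("network slope, correct baseline:",
      round(ols_slope(years, network_mean([true_a, partial_true])), 3))
print("network slope, biased baseline:",
      round(ols_slope(years, network_mean([true_a, partial_biased])), 3))
```

With these made-up numbers the combined slope rises from 0.1 to 0.325 per step once the biased station enters the average, which is the partial-record case the comment describes.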

“Does it matter if the stated 20th Century Average is wrong or only roughly correct?

What if, in terms of this post, the 20th Century Average is not exactly known but lies between 59.6 degrees Fahrenheit and 60.6 degrees Fahrenheit or some wider margin?”

Well, the canonical answer to that is as follows: we may have significant measurement uncertainties indeed. However, with a very large sample size, all those errors should average and cancel out. Therefore we should be able to detect changes very accurately.

Paramenter ==> The assumption that “all those errors should average and cancel out. Therefore we should be able to detect changes very accurately.” does not hold and is a large part of what is allowing Climate Science (and a raft of other scientific endeavors) to fool themselves.
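The disagreement here turns on two different kinds of error, and a toy simulation can illustrate both. The numbers (a true value of 15.0, an independent reading error with standard deviation 0.5, and a shared bias of 0.3) are invented for illustration. Averaging shrinks the independent random part roughly as 1/√n, which is the textbook result the canonical answer relies on; it does nothing to an error that every reading shares. Which kind dominates real temperature records is the actual point in dispute, and this sketch does not settle it.

```python
import random
import statistics

random.seed(1)

TRUE_TEMP = 15.0  # hypothetical true value
NOISE_SD = 0.5    # hypothetical independent reading error (std dev)
BIAS = 0.3        # hypothetical error shared by every reading


def error_of_mean(n, bias):
    """Error of the average of n simulated readings, relative to TRUE_TEMP."""
    readings = [TRUE_TEMP + bias + random.gauss(0.0, NOISE_SD) for _ in range(n)]
    return statistics.fmean(readings) - TRUE_TEMP


for n in (10, 1_000, 100_000):
    print(f"n={n:>7}  random only: {error_of_mean(n, 0.0):+.4f}  "
          f"with shared bias: {error_of_mean(n, BIAS):+.4f}")
```

As n grows, the "random only" column tends toward zero while the "with shared bias" column settles near 0.3.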

Kip,

Why does that not hold? Error does get smaller with a bigger sample size, which is one reason error bars are wider in the early part of the century – that and the less precise measurement instruments, but even before they were switched the error narrowed. As I understand it, one reason there are fewer stations in the U.S. than there were decades ago is because they found statistically that coverage was ample.

“The size of our sample dictates the amount of information we have and therefore, in part, determines our precision or level of confidence that we have in our sample estimates. An estimate always has an associated level of uncertainty, which depends upon the underlying variability of the data as well as the sample size. The more variable the population, the greater the uncertainty in our estimate. Similarly, the larger the sample size the more information we have and so our uncertainty reduces.”

https://select-statistics.co.uk/blog/importance-effect-sample-size/

When anomalies are calculated, there are two main sources of error: that associated with the baseline averages, and that of the monthly average of the station measurements. As I’ve said before, one of the purposes of using anomalies is that it reduces the variance due to geographic differences, decreasing the error that is simply a function of where on the globe the station is (latitude, altitude, proximity to ocean, etc.).

Maybe if you think error is incorrectly calculated you should analyze the methods given here https://www.ncdc.noaa.gov/monitoring-references/docs/smith-et-al-2008.pdf (or in some other relevant paper), then write up and submit your results. If you are right, you should have no trouble publishing it, as scientists want to get their statistics correct – I imagine climate scientists in particular are worried enough about bad publicity that they don’t want to be caught doing things poorly. If it is rejected and you think it was reviewed improperly, post the reviews or have a statistician look over it – that is the time to make accusations of wrongdoing. Until then, it may be a bit presumptuous to say that climate scientists are fooling themselves, especially since you have not shown in what way they are doing so, nor compared their methods with yours. Or am I missing something here? Where have you actually calculated error based on real-world data or described how error is calculated by climate scientists?

(A related question: when did you compare statistically the results of your way of analyzing monthly means with their way, and find that their way results in significant bias or greater error? How do you know your way is better?)

Kristi ==> See my essay Durable Original Measurement Uncertainty: https://wattsupwiththat.com/2017/10/14/durable-original-measurement-uncertainty/

They are not “my way” and “their way” — if you still think that, you’ll have to read the essay and the entire comments section again. They are the historical method, which is acknowledged to be poor, and the modern way — being instituted for newer records by such as USCRN but not in use by GHCN.

But Roy,

Was not the MaxMin thermometer designed to lessen, hopefully eliminate, the errors arising from the time of day that the observer acted?

One can understand the establishment description of TOBS corrections, but surely the time of day bias operated on only a few days while the MaxMin thermometer overcomes most or all of the problem on the many other days.

It is hard to understand a TOBS correction applied on days when it is not needed.

Geoff.

I go into more below, but if the thermometer record was good, the larger uncertainty is something that needs to be calculated; otherwise, it’s still a useful indicator.

The thermometer record is a dog’s breakfast, and getting a global average requires considering this mean/median as an intensive property, which it’s not.

Thanks Kip

Anthony ==> My pleasure and labor of love….

One would have to assume that in order to determine the maximum and minimum temperatures for each day that numerous measurements and recordings are made throughout the day and then reviewed for max/min values. To where did all the other measurements disappear, and why are they not used?

The longest-running technology was analog (obviously), and the liquid-in-glass thermometers had little tiny “sticks” in the liquid that got pushed up (for the Tmax) and down (for Tmin), showing the highest and lowest temperatures the thermometers experienced. There did not have to be any intermediate recording of temperatures.

Maximum thermometers used by the UKMO that I read back in the day were mercury-in-glass, but instead of an indicator being pushed up the stem by the mercury column, they had a constriction in the capillary that broke the mercury column when the max temp was passed.

Rocket: The min and max were read off a mechanical device which “records” them automatically. See the diagram and the text.

“She would record the Minimum and Maximum temperatures shown by the markers” – was it really necessary to follow the PC gender madness? I doubt that many maintainers were female.

Van Doren ==> Many volunteer weather stations are manned by women.

“Manned” by women?

Michael ==> cute!

Wouldn’t a median be the temperature where an equal number of samples are greater and less than that?

(Tmax + Tmin)/2.

i.e., not (Tmax + Tmin)/2.

Googling, I can’t find any definition of median other than the middle value in a set. In particular, I focused on university sites in case there was a special meaning that I didn’t know about.

The article explains why the ‘average’ temperature matters for different applications. At the top of the article is an illustration that shows why the difference between mean and median may matter a lot depending on how the data is distributed.

(Tmax + Tmin)/2 is the mid-range value.

The median is the value with half the samples greater and half the samples less.

The mean is the arithmetic average.

For a two sample set, all three values are the same.

Kip, I got as far as the following comment and I stopped reading. You say:

I’m sorry, but the median is NOT the midpoint between the max and the min. It’s the value which half of the data points are above and half below.

For example, the median of the five numbers 1, 2, 3, 4, 41 is three. Two datapoints are larger and two are smaller.

The median is NOT twenty-one, which is the midpoint between the max and the min [(Tmax + Tmin)/2].
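The three statistics argued over in this thread can be computed directly for these five numbers and for a two-value set. The sketch uses Python's standard `statistics` module plus a small hypothetical `mid_range` helper: for 1, 2, 3, 4, 41 the mean is 10.2, the median is 3 and the mid-range is 21, while for the two-value set {1, 41} all three equal 21.

```python
import statistics


def mid_range(values):
    """(max + min) / 2, the same construction as (Tmax + Tmin)/2."""
    return (max(values) + min(values)) / 2


for data in ([1, 2, 3, 4, 41], [1, 41]):
    print(data,
          "mean:", statistics.mean(data),
          "median:", statistics.median(data),
          "mid-range:", mid_range(data))
```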

And since you started out by making a totally incorrect statement that appears to be at the heart of your argument … I quit reading.

Regards,

w.

Actually, if you have only 2 data points, then mean (average) and median are the same. This article was a little pointless and just added confusion. Mean (average) of TMAX and TMIN is what is used over ANY time frame.

I’m glad Willis and Dan said this because I was going to.

I think the explanation is more than vague… it is downright misleading.

First, as Dan points out, (Tmax + Tmin)/2 is BOTH the median AND the mean… but just for those two values.

The “illustrative” graph further confuses the issue. When you change the temperature profile, the position of the median doesn’t change, but its value can (as shown). That contradicts the statement that the median doesn’t change… it can. At least its value can. Only the position is necessarily the same.

The mean’s value can obviously change but its position can vary, with the caveat that it must lie somewhere on the curve.

The second part of the illustration that might confuse is that it’s stated that the right-hand endpoints correspond (and so they must if X is time)… but given the shape of the dashed profile, that endpoint must be some distance off the page, in order for the mean to be shown where it is.

Well, if we are going to be annoyingly pedantic, an average is not necessarily a mean:

Average (noun)

1. a number expressing the central or typical value in a set of data, in particular the mode, median, or (most commonly) the mean, which is calculated by dividing the sum of the values in the set by their number.

“the housing prices there are twice the national average”

synonyms: mean, median, mode

I see we responded to that at almost the same time (I was first).

It is called a mid-range.

Willis,

You said, “… the median is NOT the midpoint between the max and the min. It’s the value which half of the data points are above and half below.”

When one interpolates the midpoint between Tmax and Tmin, half the data points ARE above and below the interpolated median. As I have pointed out previously, when dealing with a set of even-numbered points, it will always be necessary to interpolate between the two innermost values in the sorted list. Tmax and Tmin can be thought of as a degenerate, even-numbered list consisting of only the two innermost intermediate values.

You complain that the median is “NOT twenty-one.” Yet, as a measure of central tendency, 21 is closer to the arithmetic mean of 25.5 than 3 is, which is what one would normally expect.

In your example, depending on just what is being measured, one might justifiably consider the “41” to be an outlier, and be a candidate for being discarded as a noise spike or malfunction in the measuring device.

I think that you are being unnecessarily critical. The point that Kip was making is that interpolating the midpoint between two extreme values (Whatever you want to call it!) results in a metric that is far more sensitive to outliers than an arithmetic mean of many measurements.
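The point about outliers can be made concrete. In this sketch the 24 hourly readings and the single 8-degree spike (standing in for, say, a sensor glitch) are invented. Because the mid-range depends only on the two extreme readings, the one spike moves it by 4 degrees, while the mean of all 24 readings moves by 8/24, about 0.33 degrees.

```python
import statistics


def mid_range(values):
    """(max + min) / 2, i.e. the (Tmax + Tmin)/2 construction."""
    return (max(values) + min(values)) / 2


# Hypothetical hourly readings for one day, in degrees C (midnight to 23:00).
hourly = [12, 11, 11, 10, 10, 10, 11, 13, 15, 17, 19, 20,
          21, 22, 22, 21, 20, 18, 16, 15, 14, 13, 13, 12]

# Same day with one hypothetical 8-degree spike at 14:00 (e.g. a sensor glitch).
spiked = hourly.copy()
spiked[14] += 8

for label, data in (("clean", hourly), ("spiked", spiked)):
    print(f"{label:>6}: mid-range {mid_range(data):.2f}, "
          f"mean {statistics.fmean(data):.2f}")
```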

I don’t think it is unnecessarily critical to point out an error in his post. It should be easy to correct.

D. Anderson,

If it NEEDS to be corrected. Willis has yet to respond to defend his complaint.

w. ==> Nonsense — ANY time one arranges the data in a data set in value order, largest to smallest, and then finds the mid-point, one is finding the median. The median of a two value set is found by adding the two and dividing by two. It is the same as the mean of the two values, but not the same as the mean of the whole set. It is the MEDIAN of the Max and the Min. It is the procedure that tells us.

Ok, so the next question is, are two samples a day enough to characterize the daily temperature?

Andersen ==> Read the essay.

I got distracted by your bizarre definition of median.

yes.

You have tmin

you have tmax

you have TAVG

you don’t have TMEAN,

but the trend in TAVG is an unbiased estimator of the trend in TMEAN.

trend is what we care about.

would TMEAN be best? yup, but not needed.

we can after all test against TAVG.
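The remark “we can after all test against TAVG” suggests a simple check: compute both (Tmax + Tmin)/2 and the mean of all readings for each day, then compare their trends. The sketch below does this on an invented diurnal cycle, not on real station data; `day_profile` and its parameters are hypothetical. When the shape of the daily cycle stays fixed, the two trends agree; when the afternoon peak slowly grows, the TAVG trend (0.002 per day here) diverges from the TMEAN trend (0.001375 per day). Whether real diurnal shapes drift enough to matter is an empirical question this toy cannot answer.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def day_profile(day, trend_per_day, peak_growth_per_day):
    """Hypothetical 24 hourly readings for one day.

    Base 15 degrees + linear warming + a sinusoidal diurnal cycle (max at 15:00,
    min at 03:00) + an extra sharp afternoon bump whose size may grow over time.
    """
    temps = []
    for hour in range(24):
        phase = 2 * math.pi * (hour - 9) / 24
        cycle = 6 * math.sin(phase)
        bump = (2 + peak_growth_per_day * day) * max(0.0, math.sin(phase)) ** 4
        temps.append(15 + trend_per_day * day + cycle + bump)
    return temps


def compare_trends(days, trend_per_day, peak_growth_per_day):
    """Return (slope of daily TAVG, slope of daily TMEAN) over `days` days."""
    xs = list(range(days))
    tavg, tmean = [], []
    for d in xs:
        temps = day_profile(d, trend_per_day, peak_growth_per_day)
        tavg.append((max(temps) + min(temps)) / 2)  # (Tmax + Tmin)/2
        tmean.append(sum(temps) / len(temps))       # mean of all hourly readings
    return ols_slope(xs, tavg), ols_slope(xs, tmean)


# Case 1: fixed daily shape, so TAVG - TMEAN is constant and the trends match.
print(compare_trends(365, 0.001, 0.0))
# Case 2: the afternoon peak grows over time, so the trends diverge.
print(compare_trends(365, 0.001, 0.002))
```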

Mosher,

On what do you base the claim that “the trend in TAVG is an unbiased estimator of the trend in TMEAN.”? Medians are not amenable to parametric statistical analysis. Variance and SD are not defined for a median. Yet, from what I have read here, the monthly ‘average’ is the median of the arithmetic mean of the monthly Tmax and the arithmetic mean of the monthly Tmin.

Thanks, Kip. In that case you really need to emphasize that that is only true for a two-point dataset. However, for most temperature datasets these days that is far from the truth. Most temperatures are taken with thermistors sampled at regular intervals, and in that case, your statement is far from true.

And as you yourself say:

However, given that there are a number of “temperatures recorded for the day”, then (Tmax + Tmin)/2 is NOT the median of the daily temperatures.

You then say:

“… we are only dealing with a Daily Max and Daily Min for a record in which there are, in modern times, many measurements in the daily set, when we align all the measurements by magnitude and find the midpoint between the largest and the smallest we are finding a median (we do this , however, by ignoring all the other measurements altogether, and find the median of a two number set consisting of only Tmax and Tmin. )”

Here, you claim that when you “align all the measurements by magnitude and find the midpoint between the largest and the smallest we are finding a median”, but then you say you are only finding the median of a two number set. In that case, you are NOT finding a median of “all the measurements”. And you note this later, which makes your earlier statement very misleading.

Are your statements correct? I guess so, if you read them in a certain way and kinda gloss over parts of them. You say that “we are finding a median” of all of the measurements, and then immediately contradict that and say we are finding a median of just two points.

Are they confusing as hell? Yep, and if you look at the comments you’ll see that I’m not the only one who is confused.

OK, now that I understand your convoluted text, I’m gonna go back and read the rest.

My thanks for the very necessary clarification,

w.

w. ==> You have confused yourself.

lol @ convoluted…

fonzie says: w…w…w…willis

Kip, but in this case the two values are the whole set.

I don’t know why you insist on using the term “median” if it ends up being confusing for people.

“This illustration is from an article defining Means and Medians, we see that if the purple traces were the temperature during a day, the median would be identical for wildly different temperature profiles, but the true average, the mean, would be very different.[Note: the right hand edge of the graph is cut off, but both traces end at the same point on the right — the equivalent of a Hi for the day.] ”

This doesn’t make sense to me. The graph is of temperature on the X axis and frequency on the Y axis, right? Could you send the link? Take a look at this illustration; you might see my confusion: http://davidmlane.com/hyperstat/A92403.html (Another thing is that what you’re calling the high for the day can’t be right, because the line on the more normal distribution drops to zero – the min and max for each line are different.)

Tmax 73, Tmin 71 = Tavg 72

Tmax 93, Tmin 51 = Tavg 72

Tmax 103, Tmin 41 = Tavg 72

Are these not all showing the same estimates of daily heat radiating from the Earth’s surface? Sometimes the heat is much higher during the day, sometimes it’s spread out. It’s not exact, no, but given the number of estimates it seems to me you get a pretty good total estimate.

I think there’s probably a reason monthly average is calculated the way it is. It surprises me that it’s the median of daily averages, and I can’t figure it out at the moment, but I’m inclined to give the experts the benefit of the doubt. Silly, huh? Naive to trust the researchers to know what they’re doing, rather than assume they’re frauds, eh?

“This graph show the difference between daily Tavg (by (Tmax+Tmin)/2 method) and the true mean of daily temperatures, Tmean. ”

How is the “true mean of daily temperatures” calculated in your graph with the blue lines?
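The comment does not say how the graph's Tmean was computed. One common approach, assumed here rather than taken from the article, is a time-weighted mean of all readings: with evenly spaced readings this is essentially the ordinary average, and with irregular readings a trapezoidal weighting stops densely sampled hours from dominating. The readings and the `time_weighted_mean` helper below are hypothetical.

```python
def time_weighted_mean(times_h, temps):
    """Trapezoidal time-weighted mean of readings taken at times_h (hours).

    Assumes temperature changes linearly between consecutive readings.
    """
    if len(times_h) != len(temps) or len(times_h) < 2:
        raise ValueError("need at least two paired readings")
    area = 0.0
    for i in range(1, len(times_h)):
        dt = times_h[i] - times_h[i - 1]
        area += dt * (temps[i] + temps[i - 1]) / 2
    return area / (times_h[-1] - times_h[0])


# Hypothetical irregular readings: sparse overnight, dense in the afternoon.
times = [0, 6, 12, 13, 14, 15, 16, 24]
temps = [10, 8, 18, 20, 21, 20, 18, 10]

simple = sum(temps) / len(temps)             # every reading counted equally
weighted = time_weighted_mean(times, temps)  # each reading weighted by time
midrange = (max(temps) + min(temps)) / 2     # (Tmax + Tmin)/2

print(f"simple {simple:.3f}, time-weighted {weighted:.3f}, (Tmax+Tmin)/2 {midrange:.3f}")
```

For these made-up readings the simple average is 15.625, the trapezoidal time-weighted mean is about 13.46, and (Tmax + Tmin)/2 is 14.5, so the three answers differ by more than a degree.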


“Anthropogenic Global Warming scientists (IPCC scientists) are concerned with proving that human emissions of CO2 are causing the Earth climate system to retain increasing amounts of incoming energy from the Sun and calculate global temperatures and their changes in support of that objective. ”

So those who worked on the IPCC are now “Anthropogenic Global Warming scientists” rather than climate scientists? All versions? That includes the skeptics?

The idea that climate scientists are out to “prove” (a completely non-scientific term) anything is just more propaganda, Kip. Scientists try to discover what is happening. What they are finding is that most of the warming in the last several decades is anthropogenic.

Scientists don’t prove a hypothesis, they test it. They accumulate evidence through hypothesis testing, and if enough evidence supports it, they eventually call it a theory.

If a scientist came up with a different explanation and had lots of supporting evidence for it, and others validated the results, he would be instantly famous. Nobody has.

Scientists have tested the theoretical foundations developed over a hundred years ago through satellites that measure outgoing radiation at the top of the atmosphere, statistical models that look at different forcing mechanisms that might account for global temperature change, paleoclimate reconstructions, and GCMs. Scientists have been working on this steadily for half a century. Researchers from Exxon and Shell were estimating the temperature increase due to anthropogenic fossil fuel emissions in the 1980s (and kept their findings from the public). Were they out to “prove” AGW, too?

You are trying to discredit the ability of 1000s of scientists and spread distrust of the science. Do you really think they are all idiots??? It’s either that or all frauds. I just don’t understand!!! This question is more important to me, and to our society as a whole, than whether AGW is a problem. When people distrust any scientist that believes AGW is true, and trust anyone who thinks scientists are making things up, no matter how little evidence they can muster, it shows how little truth matters in society today and how driven we are to see the Other as the enemy. And it shows how pervasive and successful the propaganda has been. Likewise, the alarmist liberal media profit from spreading propaganda and hatred. What is the country coming to?

I don’t want my fellow Americans to be my enemies. I don’t want them to think of me as the enemy. I bet if I sat down with most of you (not all at once) over a beer or a coffee, we could have a nice chat. I like all kinds of people, and people generally like me (believe it or not!). I DON’T like manipulation, which is rampant on both the right and the left. …Sigh. I’m sorry. This is off topic.

“If a scientist came up with a different explanation and had lots of supporting evidence for it, and others validated the results, he would be instantly famous. Nobody has.”

deliberate logical fallacy ^

your attempts to cause disturbance of sane consciousness are aggressively manipulative and disrespectful.

you are saying that truth = popularity.

i have an allergy to stupid.

don’t give me tourettes.

gnomish,

Logical fallacy? Where? It’s a simple “if…then” statement.

Kristi ==> The fallacy is that because someone hasn’t come up with a “better” explanation for the warming since the end of the LIA, the current obviously wrong explanation (pixies, unicorns, evil spirits, or CO2 concentrations) must be true.

Nonsense, no truer than your grandmother’s folk wart remedy, which, after all, “worked” for Uncle George in 1902.

Kip, to date, CO2 offers the best explanation for the current warming. If you have something better to offer that the majority of scientists will agree to/accept, please post it.

Remy,

You claimed, “…to date, CO2 offers the best explanation for the current warming.” There are quite a number of people here who would disagree with your assertion. Can you succinctly make your supporting argument, or cite something that does? Myself, I tend to lean to Occam’s Razor.

Clyde, unless you can offer a “better” explanation than CO2, I’m not going to change my mind. You would need to provide an alternative theory, and data to back it up. I don’t care if you disagree with what I’ve said; if you can’t meet my challenge, go away.

Remy Mermelstein,

You said, “…unless you can offer a “better” explanation than CO2, I’m not going to change my mind. You would need to provide an alternative theory, and data to back it up. I don’t care if you disagree with what I’ve said , if you can’t meet my challenge, go away.”

I did offer an alternative theory. Perhaps it was too subtle for you. Occam’s Razor basically says that the simplest explanation is usually the best. Earth started warming after the end of the Maunder Minimum, well before CO2 from the industrial revolution and population exploded, and has continued warming. The simplest explanation is that whatever initiated the warming after the Little Ice Age, it continues to be at least the predominant driver of warming. There is no reason to believe that the natural cycles suddenly stopped working and were replaced exclusively by anthropogenic forcing.

If you don’t want to play nice, I’ll gladly go away.

Clyde:

1) I don’t have a wife.

2) Occam’s razor doesn’t explain the recent/current warming

Kip,

There is absolutely nothing in this statement:

“If a scientist came up with a different explanation and had lots of supporting evidence for it, and others validated the results, he would be instantly famous. Nobody has.”

to suggest:

“The fallacy is that because someone hasn’t come up with a “better” explanation for the warming since the end of the LIA, the current obviously wrong explanation (pixies, unicorns, evil spirits, or CO2 concentrations) must be true.”

That is YOUR logical fallacy!

Kristi ==> You are often way too literal, and cannot, apparently, see analogies and parallels.

Alternate explanations are not required in real science to say or show that another hypothesis does not hold up to close scrutiny.

The method is used in pseudoscience to support quack cures, conspiracy theories, and the like.

Clyde:


1) Occam’s razor is not a “theory.”


2) The following is not a “theory”: “The simplest explanation is that whatever initiated the warming after the Little Ice Age, it continues”….. WHATEVER is not specified. For all we know “whatever” could be unicorns in your “theory.”


3) “natural cycles” is not an explanation. We have a “natural” 24 hour cycle, but that does not explain the recent warming. We have a “natural” 365.25 day cycle, but that doesn’t explain the recent warming. What “natural cycle” are you talking about?????


You have not provided a viable alternative to CO2 to explain the recent warming.

Clyde,

“The simplest explanation is that whatever initiated the warming after the Little Ice Age, it continues to be at least the predominant driver of warming.”

“Whatever” is not an explanation. “Natural variation” is not an explanation. The null hypothesis is a randomly changing (or an unchanging) climate.

The best-supported hypothesis for the LIA that I know of is that it was triggered by a period of high volcanic activity and exacerbated by another big volcano. After that the influence of relatively strong solar radiation (in the absence of high aerosols) led to warming, but that ended in about 1940, and since then CO2 has been a main forcing agent. In mid-20th C there was a period of high aerosols due mostly to anthropogenic air pollution, leading to cooling, but in the ’70s several countries enacted pollution control measures, and that cooling decreased in importance.

This is not the definitive explanation, but it is Occam’s Razor. “Something did it” is not.

Kristi,

Carl Sagan was fond of saying, “Extraordinary claims require extraordinary evidence.” It isn’t necessary to look for new and different forcing agents if the climate is within the normal range of temperature changes, which it is. It has been much warmer in the past, before humans evolved. It was warmer during the Holocene Optimum than it currently is. There is poor to no correlation between CO2 and prehistoric temperature reconstructions. More recent temperature proxies from ice cores strongly suggest that temperature increases occur 800 years before CO2 increases.

The fact that we may not know the complex interrelationships between all the natural forcing agents, or have names for them, doesn’t make them any less real. Even the IPCC admits that climate may be chaotic and unpredictable. Basically, to apply Occam’s Razor simply requires one to accept that climate is what it is, and in the absence of unprecedented temperatures, or unusual rates of warming, there is no need to appeal to some new agent forcing temperatures. The most recent episode of warming started before humans began using large quantities of fossil fuels. If temperatures had been declining or flat until WWII, and then suddenly started climbing, then I’d say that there was a need to explain the change. However, warming started a century before then, and it was probably warmer in the 1930s than it currently is!

The impact of historic volcanic activity has been shown to only last two or three years, even for the largest such as Krakatoa. That hardly explains a period of exceptional cold that may have extended from 1300 to 1850. Many competing hypotheses have been offered. But, once again, just because we can’t be sure which one(s) is correct, doesn’t mean that they weren’t in play. Clearly, something happened for which we have multiple lines of evidence. Just because we can’t definitively assign the cold to something in particular, doesn’t mean that we can’t use a ‘placeholder’ such as “natural variability.” The question is whether recent changes are great enough to warrant a different explanation, such as anthropogenic influences. Looking at sea level rise for about the last 8,000 years strongly suggests a linear rise that doesn’t require “Extraordinary Claims.”

Kristi ==> You are arguing for the sake of arguing. Climate science has no supportable explanation for the advent or end of the LIA — they have some suggested possibilities, none with strong evidence. The LIA lasted, by some reckoning, 300 years…..

You are conflating “possible causes”, suggested “it might have been…causes” with proven or scientifically supported causes. You seem to grant these possibilities the value of proven due to coming from the right side of the Climate Divide.

The simple truth is we know far less about past and present climate shifts than is pretended — close reading of the actual science sections of the IPCC reports makes this plain.

Some day, we may get past the guessing stage… but it doesn’t look like anytime soon.

Thank you Kristi.

Hi Kristi,

Regarding CO2:

The ancient reconstruction from proxies shows that CO2 was between 7,000 and 12,000 ppm. Over 600 million years there appears to be no correlation between CO2 and temperature. See graph here:

No one knows how accurate the proxies are, but there is no evidence.

If we look at the 800k year ice core records from Vostok, we do see a correlation between CO2 and temperature, but the cause is the temperature and the effect is atmospheric CO2. Not the other way around. The lag for the effect is about 800 years. I assume you know this and know the reason, but let me know if my assumption is bad.

If we look at the modern instrument record, we see no correlation between CO2 and temperature. CO2 has been rising for 150 years with accelerations of rise in the past 20 years. During those 150 years we have had 30-40-year cooling periods, warming periods and periods of “pause” or no upward or downward trend.

Climate sensitivity is defined as the expected increase in average global atmospheric temperature (degrees C) for a corresponding doubling of atmospheric CO2 (ppm). As it relates to the scientific thought around this, I can show you over 30 peer-reviewed scientific papers that claim zero or near zero sensitivity. I can show you another 45 that claim a very low sensitivity (0.02C, 0.1C, 0.3C, etc.). I can show you a half dozen that claim the atmosphere will cool with increasing CO2 concentration. I’m sure there are hundreds more papers with higher figures. The IPCC probably gives us the maximum figure – which keeps changing – but I think they are up to 8C. So, the world of science gives us a 400:1 range of results as determined by their “science”. Actually, the range is infinite if I include the papers claiming zero sensitivity. Darned divide by zero! This shows that the world of science doesn’t have a shadow of a clue about climate sensitivity. Man, who paid for all of these garbage papers?

When V=IR was derived by Ohm, how many papers did it take to finally know he was right? Were there hundreds of competing equations, like V=0.267IR, V=5.937IR, V=1×10^29IR? No. You can test this in any physics lab. When the charge of an electron was first measured, did other scientists come out with values that varied by 400:1? No. There are many, many more examples I could give. If you review the real world of repeatable science, we don’t have these problems that climate “science” brings to us.

There is no record (ancient, long ago or recent) that provides evidence for the theory that CO2 drives climate. We don’t even have an equation that tells us what the relationship is. Many scientists do tell us that CO2 drives climate, but if they are honest, they tell you it is a theory with no actual support. Many scientists speak, but not in their capacity as scientists. Instead they speak as advocates for a social and political ideology. They propagate a narrative.

You don’t need to solve the riddle to point out that the theory is unsupported. A fair statement is that CO2 might drive climate, but we have no historical or current proof and have no mathematical relationship figured out that would define the process.

Kip,

“You are often way too literal, and cannot, apparently, see analogies and parallels.”

When we are talking about a logical fallacy, the only way to address it is literally.

“Alternate explanations are not required in real science to say or show that another hypothesis does not hold up to close scrutiny.”

But when hypotheses do hold up to close scrutiny, both on theoretical and observational grounds, the burden is on the doubters to provide an alternative explanation. “Natural variation” and “coming out of the LIA” are not explanations.

“Climate science has no supportable explanation for the advent or end of the LIA — they have some suggested possibilities, none with strong evidence.”

Depends what you call “strong evidence.” I never suggested, and never will suggest, anything is “proven.” That’s not a scientific word. It’s hard to demonstrate with confidence what happened in the distant past, there is no denying that. But there is the process of looking at what factors we know changed (and their effects in the modern record), lining those up with the past temperature record, and making plausible, supported arguments. Aerosols, solar activity, ice extent, vegetation, written records: these are the kinds of things scientists can take into account. Then they can make a hypothesis. Then others can look at the hypothesis and debate it, come up with other hypotheses, debate those, etc. If over time and after debate a hypothesis is still the best explanation, one can take it as a “working hypothesis,” and build on it.

Considering all the evidence available, there is no better hypothesis for the events of the last 80 years than the one that was posited 120 years ago, despite work by many thousands of scientists over the last 50 years. The evidence keeps accruing. The alternate hypotheses offered have been refuted.

So when is the public going to accept the ideas of the vast majority of climate scientists? Even many “skeptic” scientists agree that AGW is the best explanation, they just don’t necessarily agree on the dangers or sensitivity.

“You are conflating ‘possible causes’, suggested ‘it might have been…causes’ with proven or scientifically supported causes. You seem to grant these possibilities the value of proven due to coming from the right side of the Climate Divide.”

That’s all nonsense! That’s what you want to think, what you assume. You think I’m just a brainless parrot because that makes you able to dismiss me. It says far more about you than about me.

I read your posts and I consider them carefully. Even if you are right about the error problem, you simply aren’t at all convincing because you don’t at any point demonstrate your hypotheses – you don’t do the statistics. You don’t show that the way scientists calculate error (or average) is statistically significantly different using the actual data. (You don’t even know that sometimes you CAN average averages! There is NOTHING WRONG with averaging the averages of sets of 30 (or 31 or 29) numbers – as long as the sets have the same number of values. Your way would be incorrect if even one 5-minute reading was absent.) Without the statistics, you have nothing, but you still draw firm conclusions: scientists are fools. All you are demonstrating is your own bias. It’s very odd.
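To make the “averaging averages” point concrete: the mean of group means equals the mean of all the pooled values only when every group has the same number of values; once one group is short, its values get extra weight and the two results diverge. A minimal Python sketch using made-up numbers (not station data); the function names are illustrative only.

```python
from statistics import mean


def mean_of_means(groups):
    """Average of the per-group averages: every group gets equal weight."""
    return mean(mean(g) for g in groups)


def pooled_mean(groups):
    """Average of all values pooled together: every value gets equal weight."""
    return mean(v for g in groups for v in g)


# Hypothetical readings, not real station data.
equal_sizes = [[10.0, 12.0, 14.0], [20.0, 22.0, 24.0]]
# The same readings with one value missing from the first group.
one_missing = [[10.0, 12.0], [20.0, 22.0, 24.0]]

print(mean_of_means(equal_sizes), pooled_mean(equal_sizes))  # 17.0 17.0
print(mean_of_means(one_missing), pooled_mean(one_missing))  # 16.5 17.6
```

With equal group sizes the two methods agree exactly; with one value missing they differ, because the short group’s two values each carry more weight in the mean of means.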

Kristi ==> “the burden is on the doubters to provide an alternative explanation.” This idea is simply fallacious — wouldn’t it be nice to be able to just know the right answer to the complex scientific problems facing us today. The fact is, WE DON’T KNOW.

Pretending we know, or continuing to use an idea that is demonstrably wrong or way too weak to be granted the classification of Known Fact, is just poor science and a lousy way to order one’s mind.

In the case of the AGW hypothesis, which is not the subject of this essay, there are many top flight scientists who consider it weakly supported and have written extensively on their views. You know this. AGW is a matter of scientific opinion — and valid opinions vary — not only in this field of study, but in many fields of study.

Remember, you have at least two heads of departments of atmospheric sciences (which is where Climate Science happens) at top state universities that are leading skeptics. They, too, are Authorities. (One, Dr. Curry, recently retired.)

If Heads of University Departments of your subject can have their doubts and offer alternate hypotheses, then certainly others can too.

(One subject per reply…sorry)

The climategate emails prove you are wrong Kristi Silber. You should read them sometime.

Alan ==> You must be referring to my distinguishing between Climate Scientists and AGW Scientists.

Alan, what proof do you have that the stolen emails have not been tampered with?

Remy,

What proof do you have that you have quit beating your wife? 🙂

Clyde, see my comment above re “wife beating.”

remy, being as how absolutely nobody disputes the veracity of the emails, your question is moot.

like a used condom kind of moot.

Gnomish, being that multiple authorities investigating the emails have found no fraud, deceit or deception on the part of the climate scientists, your response is also moot. If you have a point, could you please make it? For example, do you consider stolen property authoritative?

Alan,

What makes you think I haven’t?

Remy:

“Alan, what proof do you have that the stolen emails have not been tampered with?”

This is not a very good argument. What has been “tampered with” is the meaning and significance of the emails. Some of the worst accusations based on them are faulty. A few are legitimate. There was a lack of professionalism, but that doesn’t mean there was scientific misconduct.

do try to keep the focus, [pruned].

nobody disputes the emails veracity.

gish gallop right off, now.

[yes, it was pruned. .mod]

Sticks and stones.

Name calling is a logical fallacy.

Per our host:

https://www.realskeptic.com/2013/12/23/anthony-watts-resort-name-calling-youve-lost-argument/

Gnomish has lost the argument.

Kristi,

It concerns me that I have seldom seen a climate researcher delve into the fine detail of Tmax and Tmin in the way that Kip has here. I have the impression, be it right or wrong, that the topic is glossed over by establishment workers.

If you can show me publications where these points of Kip’s are dissected and discussed and conclusions drawn, then I might agree with you. Until then, I think you are being too kind to the assumption of logical processes in climate science.

It is a little like formal errors. Have you ever seen a (Tmax + Tmin)/2 with an associated error envelope? Ever read how the envelope was constructed? I have not, but there is a high probability that I have not read the appropriate papers. Geoff.
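For reference, here is what a first-order error envelope for (Tmax + Tmin)/2 looks like under the usual propagation-of-uncertainty rules (the approach in the GUM): each reading enters with a sensitivity coefficient of 1/2, so with independent errors of equal size the midrange uncertainty is that size divided by √2, and with fully shared errors it stays the same. A minimal Python sketch; the 0.5 C figure is a hypothetical input, and it covers only the reading uncertainty of Tmax and Tmin, not how far the midrange sits from the mean of all readings taken during the day.

```python
import math


def midrange(tmax, tmin):
    """The conventional daily value: (Tmax + Tmin) / 2."""
    return (tmax + tmin) / 2


def midrange_uncertainty(u_max, u_min, correlation=0.0):
    """First-order propagated standard uncertainty of (Tmax + Tmin) / 2.

    u_max, u_min: standard uncertainties of the two readings (hypothetical inputs).
    correlation: correlation between the two reading errors (0 = independent,
    1 = a fully shared error such as a common calibration offset).
    Each reading enters the midrange with sensitivity coefficient 1/2.
    """
    a, b = 0.5 * u_max, 0.5 * u_min
    return math.sqrt(a * a + b * b + 2 * correlation * a * b)


# Hypothetical: each reading uncertain to 0.5 C (one standard uncertainty).
print(midrange(30.0, 10.0))                              # 20.0
print(midrange_uncertainty(0.5, 0.5))                    # about 0.354, i.e. 0.5 / sqrt(2)
print(midrange_uncertainty(0.5, 0.5, correlation=1.0))   # 0.5
```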

Geoff,

Here is Boulder last July using CRN 5-minute measurements. http://cfys.nu/graphs/Boullder_CRN_JulyFull_2018.png

As you can see, (Tmax+Tmin)/2 is not very precise and the deviation is random. I am not sure it matters for trends over longer time periods, but it does, in my mind, disqualify adjustments like TOBS!
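The same comparison can be scripted instead of charted. This is a hypothetical Python sketch, not the spreadsheet used for the graph: it assumes the readings arrive as (timestamp, temperature) pairs, groups them by calendar day, and reports the midrange, the mean of all readings, and their difference. The step-shaped sample day is invented so the arithmetic is easy to follow; it spends 18 hours at 12.0 C and 6 hours at 24.0 C, so the midrange overstates the mean of the readings.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean


def daily_midrange_vs_mean(samples):
    """samples: iterable of (datetime, temperature) pairs.

    Returns {date: (midrange, mean_of_all_readings, midrange - mean)}.
    """
    by_day = defaultdict(list)
    for ts, temp in samples:
        by_day[ts.date()].append(temp)
    result = {}
    for day, temps in sorted(by_day.items()):
        mid = (max(temps) + min(temps)) / 2  # (Tmax + Tmin) / 2
        avg = mean(temps)                    # mean of every reading that day
        result[day] = (mid, avg, mid - avg)
    return result


# Hypothetical day of 288 five-minute readings: 216 at 12.0 C, then 72 at 24.0 C.
start = datetime(2018, 7, 1)
samples = [(start + timedelta(minutes=5 * i), 12.0 if i < 216 else 24.0)
           for i in range(288)]
print(daily_midrange_vs_mean(samples))
# {datetime.date(2018, 7, 1): (18.0, 15.0, 3.0)}
```

Real data would also need a rule for days with missing readings, which this sketch does not handle.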

MrZ ==> Can I use your graph in the future?

If you have created it programmatically, I’d like to see the code — it is nice work.

You can email me at my first name at i4.net

Hi Kip!

You are welcome to use the graph. I have put the Excel here: http://cfys.nu/graphs/Boulder_July_2018.xls

Please note Excel does not like 8000 entries in a graph and it crashes sometimes if you edit too fast.

I’ll send you a hello mail later today.

MrZ ==> Thank you, very kind.

I am green with envy of your obvious skill with Excel.

Geoff,

Ascertaining the error in such a basic calculation is something that scientists would learn in school, not discuss in a research paper. The absence of such a discussion in the literature is no reason to assume that scientists don’t know how to do it. I, for one, am not going to ASSUME that scientists would make such a basic mistake, and in so doing, discredit all of climate science.

More difficult is calculating the errors in a reanalysis of the full dataset. My knowledge of statistics is not good enough to evaluate these. I rely on scientists who read these papers to do such evaluations, and where they find errors in the statistics or better ways of doing reanalysis to account for errors, to publish their results.

In other words, I have trust in the scientific community to make improvements or corrections where applicable. That’s what science is about: improvement. Even if I found an error somewhere, there is no guarantee that the error wouldn’t have already been corrected in another publication. This is why part of a scientist’s job is to keep up with the relevant literature. In my experience, it takes hundreds of hours/year to do so, and that is with the expertise to understand it all.

Am I being kind in trusting scientists? Not in my opinion. I just have the humility to realize that they know more than I do, and I’m not going to distrust them based on no evidence. Nor will I buy into the assumptions made by others that the whole profession is populated by fools and frauds. To me that doesn’t seem a reasonable assumption, especially coming from those who will use any means, however prejudicial, to convince others it’s true.

But that’s just me. Others are welcome to their own opinions.

Kristi Silber,

You said, “Am I being kind in trusting scientists? Not in my opinion. ”

The problem is, you have admitted that you don’t have experience in programming, and have a weak statistics background, so you trust published scientists. However, you dismiss scientists and engineers here who raise issues with what is being done. Implicitly, you are appealing to the authority of those who go through formal peer review because you are personally unable to critique what they are doing. That is unfortunate, because in science, an argument or claim should stand on its own merit and not be elevated unduly because someone is recognized as an authority. There is the classic case of Lord Kelvin pronouncing the age of the Earth based on thermodynamic considerations, and his stature was such that no one would challenge him. It turns out he wasn’t even close!

Clyde,

It’s not that I automatically dismiss the scientists and engineers around here or I wouldn’t read and consider the arguments. However, when people have shown repeatedly that their arguments are intended to promote distrust in the majority of scientists, it diminishes their credibility.

I’m not devoid of ability to evaluate science. Kip’s analysis of anomalies, concluding, “Thus, their use of anomalies (or the means of anomalies…) is simply a way of fooling themselves….” (etc.) was not convincing to me because I know enough to realize that anomalies are a far better alternative to absolute temperatures for calculating trends, and Kip didn’t provide any better way of doing it.
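One commonly cited reason for using anomalies when combining stations can be shown with toy numbers: if a cold station stops reporting, a plain average of absolute temperatures jumps, while an average of anomalies (each station minus its own baseline mean) keeps tracking the underlying change. A hypothetical Python sketch; the two stations, the 2000 to 2004 baseline, and the exaggerated 0.5 C/yr trend are all invented so the arithmetic comes out exact.

```python
from statistics import mean


def anomalies(series, baseline_years):
    """Subtract the station's own mean over the baseline years."""
    base = mean(series[y] for y in baseline_years)
    return {y: t - base for y, t in series.items()}


def network_average(stations, year):
    """Average over whichever stations report in that year."""
    return mean(s[year] for s in stations if year in s)


# Hypothetical stations, both warming 0.5 C per year (exaggerated for clarity).
valley = {y: 20.0 + 0.5 * (y - 2000) for y in range(2000, 2010)}
mountain = {y: 5.0 + 0.5 * (y - 2000) for y in range(2000, 2005)}  # stops after 2004
baseline = range(2000, 2005)

stations_abs = [valley, mountain]
stations_anom = [anomalies(s, baseline) for s in stations_abs]
for y in (2004, 2005):
    print(y, network_average(stations_abs, y), network_average(stations_anom, y))
# 2004 14.5 1.0
# 2005 22.5 1.5
```

The absolute average jumps by 8.0 C when the mountain station drops out; the anomaly average moves by 0.5 C, the trend built into both stations. This illustrates one argument for anomalies; it does not settle how well anomalies handle measurement error.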

Nor am I convinced that “The methods currently used to determine both Global Temperature and Global Temperature Anomalies rely on a metric, used for historical reasons, that is unfit in many ways…” simply because it is assumed that scientists don’t know how to handle error given the way the measurements were taken. If he had found a recent paper discussing methods of reanalysis and found statistical errors in it, that would be different.

Many of the posts here include assumptions about how the science is done while bearing little or no demonstrated relation to how it’s actually done. It’s not the same as critiquing a method described in a paper using scientific methods to show that it’s wrong.

There is also a lot of evaluation of science based not on an actual publication, but on press releases, and everyone here should know by now that press releases are not adequate representations of what’s in the original literature. This results in countless cases of erroneous dismissal based purely on assumption (I often do read the original, when available).

Although I’m not able to evaluate the more complex statistics, I do know something about simpler analyses. I am, for instance, aware that tests that are available in Excel (and elsewhere) rely on assumptions to be valid, a fact often ignored or unknown, resulting in the use of such tests indiscriminately and sometimes erroneously.