Guest Essay by Kip Hansen

Why do we even talk about sea level and sea level rise?

There are two important points which readers must be aware of from the first mention of Sea Level Rise (SLR):

- SLR is a real concern to coastal cities, low-lying islands and coastal and near-coastal densely-populated areas. It can be a real problem. See Part 1 of this series.

- SLR is not a threat to much else — not now, not in a hundred years — probably not in a thousand years — maybe, not ever. While it is a valid concern for some coastal cities and low-lying coastal areas, in a global sense, it is a fake problem.

In order to talk about Sea Level Rise, we must first nail down Sea Level itself.

What is Sea Level?

In this essay, when I say sea level, I am talking about local, relative sea level: the level of the sea where it touches the land at any given point. If we talk of sea level in New York City, we mean the level of the sea where it touches the land mass of Manhattan Island or Long Island, the shores of Brooklyn or Queens. This is the only sea level of any concern to any locality.

There is a second concept also called sea level, which is a global standard from which elevations are measured. This is a conceptual idea — a standardized geodetic reference point — and has nothing whatever to do with the actual level of the water in any of the Earth’s seas. (Do not bother with the Wiki page for Sea Level — it is a mishmash of misunderstandings. There is a 90 minute movie that explains the complexity of determining heights from modern GPS data — information from which will be used in the next part of this essay. Yes, I have watched the entire presentation, twice.)

And there is a third concept called absolute, or global, sea level, which is a generalized idea of the average height of the sea surface from the center of the Earth — you could think of it as the water level in a swimming pool which is in active use, visualizing that while there are lots of splashes and ripples and cannon-ball waves washing back and forth, adding more and more water (with the drains stopped up) would increase the absolute level of the water in the pool. I will discuss this type of Global Sea Level in another essay in this series.

Since the level of the sea is changing every moment because of the tides, waves and wind, there is not, in reality, a single experiential water level we can call local Sea Level. To describe the actuality, we have names for the differing tidal and water height states such as Low Tide, High Tide, and in the middle, Mean Sea Level. There are other terms for the state of the sea surface, including wave heights and frequency and the Beaufort Wind Scale which describes both the wind speed and the accompanying sea surface conditions.

This is what tides look like:

Diurnal tide cycle (left). An area has a diurnal tidal cycle if it experiences one high and one low tide every lunar day (24 hours and 50 minutes). Many areas in the Gulf of Mexico experience these types of tides.

Semidiurnal tide cycle (middle). An area has a semidiurnal tidal cycle if it experiences two high and two low tides of approximately equal size every lunar day. Many areas on the eastern coast of North America experience these tidal cycles.

Mixed Semidiurnal tide cycle (right). An area has a mixed semidiurnal tidal cycle if it experiences two high and two low tides of different size every lunar day. Many areas on the western coast of North America experience these tidal cycles.

This image shows where the differing types of tides are experienced:

Tides are caused by the gravitational pull of the Moon and the Sun on the waters of the Earth’s oceans. There are several very good tutorials online explaining the whys and hows of tides: A short explanation is given at EarthSky here. A longer tutorial, with several animations, is available from NOAA here (.pdf).

There are quite a number of officially established tidal states (which are just average numerical local relative water levels for each state). They are called tidal datums, and they are set in relation to a set point on the land, usually marked by a brass marker embedded in rock or concrete, a “bench mark”. All tidal datums for a particular tide station are measured in feet above or below this point. An image of the benchmark for the Battery, NY follows, with an example set of tidal datums for Mayport, FL (the tidal station associated with Jacksonville, FL, which was recently flooded by Hurricane Irma):

The Australians have slightly different names, as this chart shows (I have added the U.S. abbreviations):

Grammar Note: They are collectively correctly referred to as “tidal datums” and not “tidal data”. Data is the plural form and datum is the singular form, as in “Computer Definition. The singular form of data; for example, one datum. It is rarely used, and data, its plural form, is commonly used for both singular and plural.” However, in the nomenclature of surveying (and tides), we say “A tidal datum is a standard elevation defined by a certain phase of the tide.“ and call the collective set of these elevations at a particular place “tidal datums”.

The main points of interest to most people are the major datums, from the top down:

MHHW – Mean Higher High Water – the mean of the higher of the day’s two high tides. In most places, this is not much different than Mean High Water. In the Mayport example, the difference is 0.28 feet [8.5 cm or 3.3 inches]. In some cases, where Mixed Semidiurnal Tides are experienced, they can be quite different.

MSL – Mean Sea Level – the mean of the tides, high and low. If there were no tides at all, this would simply be local sea level.

MLLW – Mean Lower Low Water – the mean of the lower of the two daily low tides. In most places, this is not much different than Mean Low Water. In the Mayport example, the difference is 0.05 feet [1.5 cm or 0.6 inches]. Again, it can be very different where mixed tides are experienced.

Here’s what this looks like on a beach:

On a beach, Mean Sea Level would be the vertical midpoint between MHW and MLW.

The High Water Mark is clearly visible on these pier pilings where the growth of mussels and barnacles stops.

And Sea Level? At the moment, local relative sea level is obvious: it is the level of the sea. There is nothing more complicated than that at any time one can see and touch the sea. If one can note the high water mark and observe the water at its lowest point during the 12 hour and 25 minute tide cycle, Mean Sea Level is the midpoint between the two. Simple!
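To make the relationship between these datums concrete, here is a minimal sketch. It assumes a short, made-up record of daily high and low waters; the heights and the function name `tidal_datums` are hypothetical, and real datums are averaged over far longer records than three days. It uses this essay's simplified definition of Mean Sea Level as the midpoint between Mean High Water and Mean Low Water.

```python
from statistics import mean

def tidal_datums(days):
    """Compute simplified tidal datums from daily (highs, lows) pairs.

    `days` is a list of (highs, lows) tuples, each holding that day's
    high-water and low-water heights in feet above the station benchmark.
    """
    all_highs = [h for highs, _ in days for h in highs]
    all_lows = [low for _, lows in days for low in lows]
    mhw = mean(all_highs)  # Mean High Water: every high tide counts
    mlw = mean(all_lows)   # Mean Low Water: every low tide counts
    return {
        "MHHW": mean(max(highs) for highs, _ in days),  # higher high of each day
        "MHW": mhw,
        "MSL": (mhw + mlw) / 2,  # the essay's midpoint definition (an assumption)
        "MLW": mlw,
        "MLLW": mean(min(lows) for _, lows in days),    # lower low of each day
    }

# Hypothetical heights (feet) for three days of mixed semidiurnal tides.
sample_days = [
    ([5.0, 4.0], [1.0, 0.0]),
    ([5.2, 4.2], [1.2, 0.2]),
    ([4.8, 3.8], [0.8, -0.2]),
]
datums = tidal_datums(sample_days)
```

With these invented numbers the two daily highs differ by a full foot, so MHHW sits well above MHW, which is the mixed-tide situation described above.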

[Unfortunately, in all other senses, sea level, particularly global sea level, as a concept, is astonishingly complicated and complex.]

For the moment, we will stay with local Relative Mean Sea Level (the level of the sea where it touches the land).

How is Mean Sea Level measured, or determined, for each location?

The answer is:

Tide Gauges

Tide Gauges used to be pretty simple — a board looking very much like a ruler sticking up out of the water, the water level hitting the board at various heights as the tides came and went, giving passing vessels an idea of how much water they could expect in the bay or harbor. This would tell them whether or not their ship would pass over the sand bars or become grounded and possibly wrecked. One name for this type of device is a “tide staff”.

Since that time, tide gauges have advanced and become more sophisticated.

The image above gives a generalized idea of the older style float and stilling well tide gauges and the newer acoustic-sensor gauges with satellite reporting systems and a back-up pressure sensor gauge. Modern ships and boats retrieve tide data (really, predictions) on their GPS or chart-plotting device which tells them both magnitude and timing of tides for any day and location. Details on the specs of various types of tide gauges currently in use in the U.S. are available in a NOAA .pdf file, “Sensor Specifications and Measurement Algorithms”.

The newest Acoustic sensor — the “Aquatrak® (Air Acoustic sensor in protective well)” — has a rated accuracy of “Relative to Datum ± 0.02 m (Individual measurement) ± 0.005 m (monthly means)”. For the decimal-fraction impaired, that is a rating of plus/minus 2 centimeters for individual measurements and plus/minus 5 millimeters for monthly means.

Being as gentle as possible with my language, let me point out that the rated accuracy of the monthly mean is a mathematical fantasy. If each measurement is only accurate to ± 2 cm, then the monthly mean cannot be MORE accurate than that — it must carry the same range of error/uncertainty as the original measurements from which it is made. Averaging does not increase accuracy or precision.

[There is an exception — if they were averaging 1,000 measurements of the water level measured at the same place and at the same time — then the average would increase in accuracy for that moment at that place, as it would reduce any random errors between measurements but it would not reduce any systematic errors.]
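The exception just described can be sketched with a small simulation. Everything here is an assumption for illustration: the true level, the size of the constant instrument offset, and the choice to treat the ± 2 cm rating as the standard deviation of a random scatter. It shows 1,000 readings of the same water level at the same moment being averaged.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the sketch is repeatable

TRUE_LEVEL_M = 1.500      # hypothetical true water level, metres
RANDOM_SIGMA_M = 0.02     # assumed random scatter per reading
SYSTEMATIC_BIAS_M = 0.01  # assumed constant instrument offset

def read_gauge():
    """One simulated reading: truth + fixed bias + random noise."""
    return TRUE_LEVEL_M + SYSTEMATIC_BIAS_M + random.gauss(0.0, RANDOM_SIGMA_M)

# 1,000 readings of the same level at the same place and time.
readings = [read_gauge() for _ in range(1000)]
average = mean(readings)

# What is left after averaging: the random part has largely cancelled,
# the constant offset has not.
error_of_average = average - TRUE_LEVEL_M
```

In this sketch `error_of_average` lands close to the assumed 1 cm offset: the random scatter shrinks, the systematic error stays.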

Thus, as a practical matter, Local Mean Sea Levels, with the latest Tide Gauges, give us a measurement accurate to within ± 2 centimeters, or about ¾ of an inch. This is far more accuracy than is needed for the originally intended purposes of Tide Gauges — which is to determine water levels at various tide states to enable safe movement of ships, barges and boats in harbors and in tidal rivers. The extra accuracy does contribute to the scientific effort to understand tides and their movements, timing, magnitude and so forth.

But let me repeat this for emphasis, as it will become important later on when we consider the use of this data to attempt to determine Global Mean Sea Level from Tide Gauge data: although Local Monthly Mean Sea Level figures are claimed to be accurate to ± 5 millimeters, they are in reality limited to the ± 2 centimeter accuracy of their original measurements.

What constitutes Local Relative Sea Level Change?

Changing Local Relative Mean Sea Level determined by the tide station at the Battery, NY (or any other place) could be a result of the movement of the land and not the rising of the sea. In reality, at the Battery, it is both; the sea rises a bit, and the land sinks (or subsides) a bit, the two motions adding up to a perceived rise in local mean sea level. I use the Battery, NY as an example as I have written about it several times here at WUWT. (see the important corrigendum at the beginning of the essay there – kh) In summary, the land mass at the Battery is sinking at about 1.3 mm/year, about 2.6 inches over the last 50 years. The sea has actually risen, during that same time, at that location, about 3.34 inches — the two figures adding up to the 6 inches of apparent Local Mean Sea Level Rise experienced at the Battery between 1963 and 2013 reported in the New York State Sea Level Rise Task Force Report to the Legislature — Dec 31, 2010.

This is true of every tide gauge in the world that is attached directly to a land mass (not ARGO floats, for instance) — the apparent change in local relative MSL is the arithmetic combination of change in the actual level of the sea plus the change resulting from the vertical movement of the land mass. Sinking/subsiding land mass increases apparent SLR, rising land mass reduces apparent SLR.
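The arithmetic for the Battery can be checked in a few lines. This is only a sketch of the sum described above, using the figures quoted in this essay; the function name `relative_rise_inches` is made up for the illustration.

```python
MM_PER_INCH = 25.4

def relative_rise_inches(land_sink_mm_per_year, years, sea_rise_inches):
    """Apparent local rise = land subsidence + actual sea rise (inches).

    A positive land_sink value means the land is sinking; a negative
    value means the land is rising.
    """
    land_sink_inches = land_sink_mm_per_year * years / MM_PER_INCH
    return land_sink_inches + sea_rise_inches

# Figures quoted in the essay for the Battery, 1963 to 2013.
battery_total = relative_rise_inches(1.3, 50, 3.34)

# Hypothetical uplifting coast: land rising 1 mm/year, sea up 1 inch.
uplift_total = relative_rise_inches(-1.0, 50, 1.0)
```

The Battery figures come to roughly 5.9 inches, which rounds to the 6 inches of apparent rise. The second, invented case comes out negative: where the land rises faster than the sea, local sea level appears to fall.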

We know from NOAA’s careful work that the sea is not rising equally everywhere:

[Note: image shows satellite derived rates of sea level change]

nor are the seas flat:

This image shows a maximum difference of over 66 inches/2 meters in sea surface heights — very high near Japan and very low near Antarctica, with quite a bit of lumpiness in the Atlantic.

The NGS CORS project is a network of Continuously Operating Reference Stations (CORS), all on land, that provide Global Navigation Satellite System (GNSS) data in support of three dimensional positioning. It represents the gold standard for geodetic positioning, including the vertical movement of land masses at each station.

In order for tide gauge data to be useful in determining absolute SLR (not relative local SLR) — actual rising of the surface of the sea in reference to the center of the Earth — tide gauge data must be coupled to reliable data on vertical land movement at the same site.

As we have seen in the example of the Battery, in New York City, which is associated with a coupled CORS station, the vertical land movement is of the same magnitude as the actual change in sea surface height — 2.6 inches of downward land movement and 3.34 inches of rising sea surface. In some locations of serious land subsidence, such as the Chesapeake Bay region of the United States, downward vertical land movement exceeds rising water. (See The Chesapeake Bay Bolide Impact: A New View of Coastal Plain Evolution and Land Subsidence and Relative Sea-Level Rise in the Southern Chesapeake Bay Region ) In some parts of the Alaskan coast, sea level appears to be falling due to the uplifting of the land resulting from 6,000 years of glacial melt.

Who tracks Global Sea Level with Tide Gauges?

The Permanent Service for Mean Sea Level (PSMSL) has been responsible for the collection, publication, analysis and interpretation of sea level data from the global network of tide gauges since 1933. In 1985, they established the Global Sea Level Observing System (GLOSS), a well-designed, high-quality in situ sea level observing network to support a broad research and operational user base. Nearly every study published about Global Sea Level from tide gauge data uses PSMSL databases. Note that this data is pre-satellite era technology — the measurements in the PSMSL data base are in situ measurements — measurements made in place at the location — they are not derived from satellite altimetry products.

This feature of the PSMSL data has positive and negative implications. On the upside, as it is directly measured, it is not prone to satellite drift, instrument drift and error due to aging, and a host of other issues that we face with satellite-derived surface temperature, for instance. It gives very reliable and accurate (to ± 2 cm) data on Relative Sea Levels — the only sea level data of real concern for localities.

On the other hand, those tide gauges attached to land masses are known to move up and down (as well as north, south, east and west) with the land mass itself, which is in constant, if slow, motion. The causes of this movement include glacial isostatic adjustment, settling of land-filled areas, subsidence due to the pumping of water out of aquifers, gas and oil pumping, and the natural processes of settling and compacting of soils in delta areas. Upward movement of land masses results from isostatic rebound and other general movements of the Earth’s tectonic plates.

For PSMSL data to be useful at all for determining absolute (as opposed to relative) SLR, it obviously must be first corrected for vertical land movement. However, search as I may, I was unable to determine from the PSMSL site that this was the case. The question in my mind: is it possible that the world’s premier gold-standard sea level data repository contains data not corrected for the most common confounder of the data? I emailed the PSMSL directly and asked this simple question: Are PSMSL records explicitly corrected for vertical land movement?

The answer:

“The PSMSL data is supplied/downloaded from many data suppliers so the short answer to your question is no. However, where possible we do request that the authorities supply the PSMSL with relevant levelling information so we can monitor the stability of the tide gauge.”

Note: “Leveling” does not relate to vertical land movement but to the attempt to ensure that the tide gauge remains vertically constant in regards to its associated geodetic benchmark.

If PSMSL data were corrected for at-site vertical land movement, then we could determine changes in actual or absolute local sea surface level, which could then be used to determine something that might be considered a scientific rendering of Global Sea Level change. Such a process would be complicated by the reality of geographically uneven sea surface heights, geographic areas with opposite signs of change and uneven rates-of-change. Unfortunately, PSMSL data is currently uncorrected, and very few (a relative handful) of sites are associated with continuously operating GPS stations.

What this all means

The points made in this essay add up to a couple of simple facts:

- Tide Gauge data is invaluable for localities in determining tide states, sea surface levels relative to the land, and the rate of change of those levels — the only Sea Level data of concern for local governments and populations. However, Tide Gauge data, even the best station data from the GLOSS network, is only accurate to ±2 centimeters. All derived averages/means of tide gauge data including daily, weekly, monthly and annual means are also only accurate to ±2 centimeters. Claims of millimetric accuracy of means are unscientific and insupportable.

- Tide gauge data is worthless for determining Global Sea Level and/or its change unless it has been explicitly corrected by on-site CORS-like GPS reference station data capable of correcting for vertical land movement. Since the current standard for Tide Gauge data, the PSMSL GLOSS, is not corrected for vertical land movement, all studies based on this uncorrected PSMSL data producing Global Sea Level Rise findings of any kind — magnitude or rate-of-change — are based on data not suited for the purpose, are not scientifically sound and do not, cannot, inform us reliably about Global Sea Levels or Global Sea Level Change.

# # # # #

Author’s Comment Policy:

I am always eager to read your comments and to try and answer your on-topic questions.

Try not to jump ahead of the series in comments — this essay covers only the issues of Tide Gauges, the accuracy of their data and the implications of these details.

I will cover, in future parts of the series: How is sea level measured by satellites? How accurate are satellite sea level measurements anyway? Do we know that sea level is really rising? If so, how fast is it rising? Is it accelerating? How can we know? Should I sell my sea front property?

Please remember, Sea Level Rise is an ongoing Scientific Controversy. This means that great care must be taken in reading and interpreting new studies and especially media coverage of the topic — bias and advocacy are rampant, opposing forces are firing repeated salvos at one another in the journals and in the press. In the end, the current consensus — both the alarmist consensus and the skeptical consensus — may well simply be an accurate measure of the prevailing bias in the field from each perspective. (h/t John Ioannidis)

# # # # #

Dr Roy Spencer:

http://www.drroyspencer.com/wp-content/uploads/Sea-level-data-since-1855.jpg

http://www.drroyspencer.com/2017/08/an-inconvenient-deception-how-al-gore-distorts-climate-science-and-energy-policy/

Don B ==> The central point of this essay is that any study trying to show Global Sea Level changes (rise, fall, steady state) is TOTALLY INVALID if it uses tide gauge data uncorrected for vertical land movement.

I disagree Kip. The land movement at any place is essentially constant* in the hundred year time frame. Ignoring the land movement will affect the numerical rate in Dr. Spencer’s graph, but it will not affect whether or not there’s an acceleration in the sea level rise, which was his point.

* Yes, there are places where water or oil pumping would change the rate of land movement over time, but these are few in number and not likely to affect the average. If they did it would show up as an increase in the rate, which isn’t seen in Dr. Spencer’s graph.
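The arithmetic behind this disagreement can be sketched with invented numbers. Every series below is hypothetical. Acceleration is taken as the second difference of the yearly gauge readings, and the sketch compares land that sinks at a constant rate with land whose sinking rate itself changes.

```python
def second_differences(series):
    """Discrete acceleration: the change in the year-to-year change."""
    first = [b - a for a, b in zip(series, series[1:])]
    return [b - a for a, b in zip(first, first[1:])]

years = range(10)

# Hypothetical absolute sea level (mm): steady 2 mm/yr, no acceleration.
sea = [2 * t for t in years]
# Hypothetical land subsiding at a constant 1 mm/yr.
steady_land = [1 * t for t in years]
# Hypothetical land whose subsidence rate itself grows over time.
changing_land = [t * t for t in years]

# What a tide gauge fixed to each kind of land would record.
gauge_steady = [s + l for s, l in zip(sea, steady_land)]
gauge_changing = [s + l for s, l in zip(sea, changing_land)]

accel_steady = second_differences(gauge_steady)      # all zeros
accel_changing = second_differences(gauge_changing)  # all twos
```

A constant land rate changes the trend the gauge reports but leaves the acceleration at zero. A changing land rate shows up as acceleration at the gauge even though the simulated sea has none, so the argument turns on whether the land rate really is constant.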

scarletmacaw ==> One can not determine how much of the change is the result of land movement and how much is the result of actual rising water without an accurate measure of land movement. If he were using data for ONE tide gauge, then he could determine the relative local sea level change at that location….but he wouldn’t know how much of that was water rising and how much from land subsiding.

Spencer, however, is looking at 1227 different tide gauges. Some of the tide stations are on land masses that are rising up and some are on land masses that are sinking. You see the problem here?

Kip: scarletmacaw’s response is carefully worded and absolutely correct in each point he makes, given his own stated conditions. He is referring to the acceleration, not the rate of rise.

Scarletmacaw,

I think that you underestimate the rate of land elevation change for coastal sediments. See this article: https://arstechnica.com/science/2017/10/silicon-valley-rose-as-water-use-restrictions-kicked-in/

While vertical displacements along coastal faults may only happen every few hundred years, there can be several meters of change each time. One then has to take the average over the recurrence interval.

Stephen ==> The assumption that vertical land movement at all locations is static — unchanging in rate — over a century is unsupportable — GIA vertical movement can sort-of be assumed to be steady, but most stations are affected by subsidence due to landfilling, pumping of aquifers, oil extraction, simple subsidence as delta areas not being replenished, etc. Cities are built, islands like Manhattan enlarged with stone and soil, piers extended out on the river muck, and tide gauges installed on them.

So, no, rate-of-change, acceleration, etc can not be determined without correction for vertical land movement of the tide gauges themselves.

Clyde, how can sediments affect a tide gauge? Sediments would deposit around the foundation of the gauge, not under the gauge. I can see how enough sediment would render a gauge inoperable by blocking the sea water, but that’s another matter entirely.

Scarlet ==> When harbors and their piers, to which tide gauges are generally attached, are built on sediments or landfill, with the pilings not driven down to bedrock, then as the sediment settles, the pier subsides, resulting in an apparent sea level rise.

The Mississippi River delta area is subsiding at a very rapid rate, as it has been denied replenishment of sediments. Existing delta sediments settle and get washed away, and islands are perceived to be overrun by rising seas, which is not the case. Oil and gas extraction from under the delta causes even more subsidence and thus more apparent SLR.

Kip

I think the important thing here is whether the rate of change is changing, rather than the absolute numbers themselves.

The IPCC admit that sea levels were rising as fast between 1920 and 1950 as in the last 30 yrs, with a slowdown in between. The Jevrejeva figures show the same.

The figure of 1.92mm pa may not be accurate for global sea levels, but that is a separate issue

Paul ==> Thanks for stopping by. The CAGW point is acceleration, as a necessary part of the message that CO2 driven warming will cause catastrophic sea level rise. Doubling next-to-nothing adds up to not much.

The point of concern for most everywhere is that the sea is rising, as it has for a long long time, and localities need to know how much it may rise where they are in the next 50/100 years.

…and for global tide gauges adjusted for land movement I believe the rate of rise is about 1.49 mm per year.

As with satellite data I rarely, if ever, see error bars on SL graphics.

David ==> Yes — true. To be covered in a subsequent part in this series.

It can’t show whether global sea level is rising, falling, or in a steady state.

It *can* show that globally no dangerous sea level rise acceleration has been observed at actual tide gauges. And since the local relative sea level is the *only* sea level that actually matters for anything, that’s a very comforting thing to know.

Here’s a 2016 paper in Nature Climate Change, by Aimée Slangen, John Church, and four other authors, which told us when it was that anthropogenic forcings had kicked in and begun driving sea-level rise:

http://www.nature.com/nclimate/journal/v6/n7/full/nclimate2991.html

Slangen & Church were both at CSIRO (in Australia), so I annotated a NOAA graph of sea-level at Australia’s longest tide gauge, to illustrate the findings of that paper:

http://www.sealevel.info/680-140_Sydney_2016-04_anthro_vs_natural.png

Now, why do you suppose they didn’t include a graph like that in their paper?

“Anthropogenic forcing dominates global mean sea-level rise since 1970”

The title of Church’s article in Nature – hat tip daveburton

This is awesomely funny! Yeah, why not, that would finally finish out the unnecessary debate.

Of course, the people touting CAGW-SLR talk about open water, satellite measured SLR with sea bottom adjustment, and mix that with local relative mean sea level in informal communication. Add some cherry picking and you get +100% acceleration, add some exponential fit and we’ll all drown, DROWN I tell you.

Kip will return to this later…

um…if it’s a linear trend (doubtful) are the authors implying that somewhere between 1950 and 1970 natural forcings began shutting down?

If so, it’s a damn good thing mankind had stepped up to take over so that old mother nature could put her feet up for a bit. What astounds me is that mankind was able to pick up the slack in the natural decline so perfectly so as not to allow a dip in the linear trend. [sarc]

Whilst that plot clearly demonstrates that there is no correlation between rising levels of CO2 and rising levels of sea level rise (no acceleration in the rate of change), one of the important points to emerge from that plot is that tide level rise was virtually flat, for some 20 years, between ~1960 and 1980, and there was only a very slight rise, during the 30 year period, between 1960 and 1990.

To avoid confusion, my comment is referring to the plot posted by Don B

Scarlet’s statement: “The land movement at any place is essentially constant* in the hundred year time frame.”

It is quite well documented that localized phenomena, such as large scale pumping of hydrocarbons or groundwater, can have a very rapid effect on land elevations relative to the center of the earth. Other phenomena, including construction of dams that create large freshwater impoundments and erection of tall buildings, can affect local land elevations. The Wilmington oil field near Long Beach is an extreme example, having experienced over 30 feet of subsidence since production of oil began in the mid 1920s.

As for the other processes that operate on geologic timescales, such as rebounding from the former ice sheet in northern North America, or tectonic subduction at plate boundaries, yes, those are going to be relatively constant over a 100 year timeframe.

Yet another case, like temperature, of claiming more accuracy in a compilation than existed in the original measurement. How repeated measurements of different things somehow becomes more accurate is beyond me.

Tom,

I think that you mean precision rather than accuracy.

Precision, in principle, may be increased with number of measurements. Accuracy may be biased due to unknown factors in data taking, in which case increased number of data may not increase accuracy.

If one measures the length of a rope QUICKLY using a ruler, increasing the number of measurements can increase precision of the rope’s length.

But if one thought the ruler was graduated in inches, but it really was graduated in centimeters, the accuracy of the measurements would be poor, no matter what the precision.

donb, using anomalies fixes the problem of bias, when you are interested in measuring the change that occurs over time.

Only if the bias is constant.

Yes. An example being if the ruler is made of metal and the temperature changes during the measurements.

For a quick visualization of accuracy v. precision:

Accuracy is having 3 shots land within the center target.

Precision is having a tight 3 shot grouping regardless of location on the target.
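The target picture can be put into numbers. In this minimal sketch the measurement values and the function name `accuracy_and_precision` are invented for illustration: the offset of the average from the true value stands in for accuracy, and the spread of the readings stands in for precision.

```python
from statistics import mean, pstdev

def accuracy_and_precision(measurements, true_value):
    """Return (bias, spread): bias gauges accuracy, spread gauges precision."""
    bias = mean(measurements) - true_value  # how far off-centre the group is
    spread = pstdev(measurements)           # how tight the group is
    return bias, spread

TRUE_LENGTH = 100.0  # hypothetical true rope length

tight_but_off = [103.0, 103.1, 102.9]     # precise, not accurate
loose_but_centred = [97.0, 100.0, 103.0]  # accurate on average, not precise

bias_a, spread_a = accuracy_and_precision(tight_but_off, TRUE_LENGTH)
bias_b, spread_b = accuracy_and_precision(loose_but_centred, TRUE_LENGTH)
```

The first group is tight but sits about 3 units off the true value. The second is centred on the true value but scattered. Note that the bias can only be computed here because the true length is assumed to be known.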

>> rocketscientist, October 8, 2017 at 9:37 am <<

That explains the difference between accuracy and precision; however, in real life, there is no target present. Precision is the easy part. Accuracy can only be inferred from repeated measurements–preferably using different techniques and hoping that systematic and random errors are cancelled out.

Jim

My understanding of precision is the level of refinement in a measurement. Meaning a micrometer which reads down to the nearest .0001″ is more precise than one which is graduated down to the nearest .001″. Whereas accuracy is a measure of the difference between measured and actual. Averaging repeated measurements of a characteristic of the same object will not yield greater precision as precision is determined by the instrument used. You cannot produce a higher resolution than that provided by the individual measurements.

Repeating measurements lowers random errors. That’s why I measure twice to use the circular saw only once.

when the water is over your head make sure you can swim or have a boat.

If the water is up to ears, recycling it is an option. Old toilet adage.

This is true, but you have to be measuring the same thing, which means it can’t be changing between measurements. Usually we accomplish that by measuring it quickly multiple times. For example, for a tide gauge say we are recording a single datum once each hour. For that hourly datum we might actually make a thousand measurements during a one minute period and average them together. That would reduce the single measurement error due to noise in the system. However averaging the 24 readings for one day to a single value won’t reduce the error any further. This is the common mistake, and an elementary one at that.

But when a thousand people measure a thousand different pieces of wood using a thousand different tools, how can there be any increase in either accuracy or precision in the sum and average of all those measurements?

Andy ==> Correct, sir.

Paul Penrose wrote, “…for a tide gauge say we are recording a single datum once each hour. For that hourly datum we might actually make a thousand measurements during a one minute period and average them together.”

Tide gauges do that short-term averaging mechanically.

You start with what’s called a “stilling well” — just a vertical pipe, fastened securely to something solid, with the low end submerged, sealed at the bottom except for a small hole. Inside the pipe you have a nice, quiet “sea level” which rises and falls with the tides, but not with the waves. That’s why they call it a “tide gauge.”

The stilling well averages out the waves, but not the tides. There are no waves, chop, swell or foam in a stilling well.
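As a rough numerical analogy only (a real stilling well damps waves mechanically through its small inlet hole, not by arithmetic), here is a sketch of averaging out waves while keeping the tide. The tide and wave amplitudes, the 10 second wave period and the 60 second window are all assumptions made up for the illustration.

```python
import math
from statistics import mean

TIDE_PERIOD_S = 12.42 * 3600  # roughly 12 hours 25 minutes
WAVE_PERIOD_S = 10            # assumed wave period, seconds

def tide_only(t):
    """Hypothetical tide (m): a slow sine with 1 m amplitude."""
    return 1.0 * math.sin(2 * math.pi * t / TIDE_PERIOD_S)

def open_water_level(t):
    """Hypothetical open-water level (m): slow tide plus fast 0.5 m waves."""
    return tide_only(t) + 0.5 * math.sin(2 * math.pi * t / WAVE_PERIOD_S)

def stilled_level(t_start, window_s=60):
    """Average one reading per second over a window of whole wave periods."""
    return mean(open_water_level(t_start + k) for k in range(window_s))

t0 = 3 * 3600  # three hours into the tide cycle
smoothed = stilled_level(t0)

# Compare with the tide alone at the centre of the averaging window.
residual = smoothed - tide_only(t0 + 29.5)
# A single open-water reading at a wave crest, for contrast.
crest_error = open_water_level(t0 + 2.5) - tide_only(t0 + 2.5)
```

The averaged value stays within a small fraction of a millimetre of the tide, while a single open-water reading taken at a wave crest is off by the full half metre.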

You use surveying techniques to precisely measure the height of the tide gauge relative to nearby geodetic “survey markers” (permanent geographical benchmarks), so that if a big storm washes away your tide gauge you can replace it without introducing a step-change in the data.

Then, in the simplest case, you just dip a measuring stick (a “tide pole” or “tide staff”) into the pipe, and read the water level periodically.

If you read it on a rigorous schedule, based on the timing of the tides, you can get a good quality sea-level record with nothing more than a stilling well and a measuring stick. Some such measurement records go back more than 200 years!

As long as you follow well-established best practices (don’t let the pipe fill up with mud, don’t let the hole near the bottom get plugged, etc.), tide gauges are simple, elegant, precise, and reliable.

Note that even in the 19th century they had strong incentives to not botch or fudge their readings, because the measurement sites were usually near channels and harbors, and if they didn’t know the correct water levels and accurately predict the tides, ships might run aground! I trust 19th century tide gauge measurements, done by hand with a tide stick, more than I trust 21st century satellite altimetry, for sea-level measurement.

An improvement is to put a float in the stilling well, and connect the float to a pen on a strip-chart recorder, for continuous readings, as shown in this diagram:

http://www.sealevel.info/tide_gauge_diagram_01.jpg

Here’s a photograph of one such tide gauge, on display in a Swedish museum:

Of course, modern tide gauges use somewhat fancier methods. But it really doesn’t matter very much whether you have a human being reading a tide stick on a schedule synchronized with the tides, or a float attached to a strip-chart recorder, or an acoustical sounder phoning home its readings 10× per hour. You’ll get pretty much the same numbers for MSL, HWL, LWL, etc.

Note: when upgrading your tide-gauge to use improved technology, it is very easy to ensure that the new system doesn’t bias the data. Just keep an old-fashioned tide stick in the stilling well, and check it against your strip-chart recorder or acoustic sounder readings, for consistency.

The contrasts with temperature measurements and satellite altimetry are pretty obvious:

With temperatures you never know when the minimum and maximum will be reached, so even if you used a min-max thermometer your time-of-observation (“TOBS”) could introduce a bias (“correction” of which is an opportunity for introducing other biases). That’s not a problem for sea-level measurement with tide gauges.

With temperatures, the surroundings can greatly influence the readings. That’s generally not a problem for sea-level measurement with tide gauges (though channel silting and dredging can sometimes have an effect on some locations, especially on tidal range).

With temperature measurements, changes in instrumentation, or even in the paint used on the Stevenson Screen, can change your readings. Analogous issues affect satellite altimeters, too, as is obvious by the differences between the measurements from different satellites. But it’s not a significant problem for sea-level measurement with tide gauges.

Also, unlike tide gauges, which are referenced to stable benchmarks, there’s no trustworthy reference frame in space, to determine the locations of the satellites with precision. NASA is aware of this problem. In 2011 NASA proposed (and re-proposed in 2014 / 2015) a new mission called the Geodetic Reference Antenna in SPace (GRASP). The proposal is discussed here, and its implications for measuring sea-level are discussed here. But, so far, the mission has not flown.

Satellite measurements are affected/distorted by mid-ocean sea surface temperature changes, and consequent local steric changes, which don’t affect the coasts.

The longest tide-gauge measurement records are about 200 years long (with a few gaps)! The longest satellite measurement records are about ten years, and the combined record from all satellites is less than 25 years, and the measurements are often inconsistent from one satellite to another:

http://sealevel.info/MSL_Serie_ALL_Global_IB_RWT_NoGIA_Adjust_2016-05-24.png

With temperatures, researchers often go back and “homogenize” (revise) the old data, to “correct” biases that they believe might have distorted the readings. The same thing happens with satellite altimetry data. But it doesn’t happen with sea-level measurement at a particular location by a single tide gauge.

Unlike tide-gauge measurements (but very much like temperature indices), satellite altimetry measurements are subject to sometimes-drastic error and revision, in the post-processing of their data (h/t Steve Case):

http://www.sealevel.info/U_CO_SLR_rel2_vs_rel1p2_SteveCase.png

http://www.sealevel.info/2061wtl.jpg

Those are graphs of the same satellite altimetry data, processed differently. Do you see how much the changes in processing changed the reported trend? In the case of Envisat (the last graph), revisions/corrections which were made up to a decade later tripled the reported trend.

I wonder what place on the oceans has the least tide?

Thinking that the poles would be less directly affected by sun/moon gravity. Perhaps I should say differently, as the pull would be less of an effect at poles. But then I am not a scientist.

John ==> Tidal ranges are typically smallest in the open ocean, along open ocean coastlines and in almost fully enclosed seas, such as the Mediterranean. The Canadian Bay of Fundy has the largest tides, 16 meters.

Tides are extremely complex. The Wikipedia article on tides https://en.wikipedia.org/wiki/Tide will tell you more than you want to know about the subject. There are regions, such as the Mediterranean and the Gulf of Mexico, that have minimal tides. I think I read a few years ago that there are a few spots (six I think) in the open oceans where tides would be close to zero were there any land there from which to observe tides. I have no idea where I read that or whether it is correct.

A study of the hydrographic surveys done in preparation for D-day Normandy brings home just how much tidal conditions can vary across a stretch of just 50 miles of coastline. I imagine most people don’t know that the landings at the British beaches started a full hour after those on the US beaches. Part of that difference was due to the presence of sandbars off some of the British beaches, which sloped even more gradually than the US beaches, and part was due to a later high tide.

It would vary with 18 year lunar cycles….

This is all far too simple.

If we allow the public to understand that sea level is measured at a number of relevant locations on the coast, and over a relevant period of time before and after industrialization then they may spot that nothing all that remarkable or concerning has occurred.

What needs to be done, is that we should show the tidal gauge methodology until 1993 and then jump to another methodology generated via a flawed interpretation of satellite altimetry data.

Then chuck in some dodgy calibrations and adjustments.

And – BINGO!! – a hockey stick graph.

Everybody likes a hockey stick. Don’t they?

http://www.columbia.edu/~mhs119/SeaLevel/SL.1900-2016.png

Two points

1) Prior to the satellite adjustment, the tide gauges ran at about 1.5 mm per year and the satellites ran at a rate of rise of about 3.x mm per year, both with the same rate of doubling of the rate of rise (basically a doubling of the rate over 150 years), i.e. the rate of sea level rise would reach about 6 mm a year after 150 years.

2) They adjusted / “recalibrated” the rate of sea level rise using satellite data so that the satellite record matched the tide gauges in 1993. Notwithstanding that, the satellite record doesn’t match the tide gauges today.

indefatigablefrog ==> I will discuss sea level rise by satellite in the next part of this series.

Looks a little like what you might expect from 2mm/y in orbital decay

Good point – wish I had remembered that adjustment a few months ago –

Skeptical Science ran their typical article on the acceleration in the rate of SL along with the likelihood of a doubling of the rate in just 20 or so years and their frequent commentary on 3 – 6 foot rise by the end of the century.

The posters did not seem to grasp that almost the entire increase in the rate of acceleration was due to the change in the method of measurement, not to the empirical / real rate of sea level rise.

Well they really do seem to behave like a throng of uncritically starry-eyed true believers.

Nobody contributing to SkS seems to have the capacity to question even the most grotesquely blatant distortions and misdirections. The fact that they call themselves skeptical is really quite shocking.

Perhaps they do really unskeptically believe that they are skeptical.

Even when they discovered that Al Jazeera wanted to promote their website, nobody there was capable of noticing that a propaganda outlet funded by Qatar might have skewed motives:

http://www.populartechnology.net/2012/09/skeptical-science-from-al-gore-to-al.html

“Nobody contributing to SkS seems to have the capacity to question even the most grotesquely blatant distortions”

Indeed! Real skeptics are censored at SkS.

Indefatigablefrog, I think that graph is Hansen’s, right?

Tony Heller (a/k/a Steven Goddard) memorably called that the “IPCC Sea Level Nature Trick,” to make the point that such spliced graphs molest the sea-level data much like Mann molested temperature data with his “Nature Trick.” Both conflate two very different kinds of data to create a misleading apparent trend.

(In fairness to Hansen, though, at least his bogus sea-level graph draws the two different sorts of measurements in different colors. Mann didn’t do that.)

Andy & MarkW…..adjustments are adjustments are adjustments are adjustments. Doesn’t matter if they are temperature or sea level adjustments, they are all ADJUSTMENTS.

Still haven’t figured out the difference between validated technical engineering adjustments…

… and agenda driven fantasy adjustments, have you, Mark’s johnson?

I think that that particular example may be “Hansen on steroids”.

But similar examples can be found by googling “sea level rise columbia”.

I found it originally in Columbia University educational material.

And yes, Hansen’s name is associated with a very similar presentation.

It’s shocking to think that university students are being presented with this guff, and then expected to uncritically believe what the graph appears to show.

Quite clearly there has NOT been a critical step change in SLR rate occurring in 1993.

If an apparent step change is produced by the switch between methodologies, then surely we should suspect that the switch is the only cause. Obviously.

The fact that Hansen was happy to attempt to pass this off, is only more evidence of his progressive derangement, as his earlier predictions fail to manifest within his lifetime.

Sorry, when I wrote “Indefatigablefrog, I think that graph is Hansen’s, right?” I meant James Hansen, not Kip, and that’s a link to the web page where he and Makiko Sato have a very-frequently-updated version of the hockey stick sea-level graph which Indefatigablefrog posted:

http://www.columbia.edu/~mhs119/SeaLevel/

The graph is the 2nd figure on that page

Also, some of the older versions can be retrieved from TheWaybackMachine:

http://web.archive.org/web/*/http://www.columbia.edu/~mhs119/SeaLevel/

Mark: adjustments are necessary to correct for known biases. how would you prefer to correct for these biases?

Early satellite “adjustments” .. Started around 2002/3 just when they needed some actual SLR.

Current satellite temperature data is also “adjusted.” For example, UAH 5.6 versus UAH 6.0

The reason and method for the satellite adjustments are published and are very defensible.

Neither is true for the ground based network.

You really are a brain-washed AGW sycophant/cultist, aren’t you Mark’s johnson.

So funny watching your ignorant inane remarks.

Adjustments:

UAH ..known technical engineering issues, validated

Satellite SLR..: agenda whim, non-validated.

Note that early TOPEX matched tide gauges well….. then the AGW scám got started.

Everything above about 2mm/year in the satellite SL is from “adjustments™”

AndyG55: “You really are a brain-washed AGW sycophant/cultist, aren’t you”

LOL name calling?

https://www.realskeptic.com/2013/12/23/anthony-watts-resort-name-calling-youve-lost-argument/

Do you DENY you are brain-washed?

Do you DENY you are an AGW cultist.

Not name-calling at all.

Just facts.

Learn the difference.

(Andy, drop this useless chatter, debate instead) MOD

Mark: UAH data are adjusted each and every month. it’s not difficult to understand why, if you read their papers.

(Crackers: Warning — I will not tolerate off-topic trolling on this essay. This essay is about Tide Gauges and Sea level. Stick with that please. — kh)

Having worked as a GPS engineer for (too) many years, I can tell you that no satellite can produce millimeter accuracy of sea level. Orbits are just not that stable. GPS birds are not accurate to mm/year, even with adjustments to their ephemeris data on a daily basis.

And if someone says the satellites used for altimetry rely on GPS data, they should appreciate GPS is not very accurate in the vertical dimension.

Thank you for that, EW3. Dr. Willie Soon agrees with you. He explains the problems starting at 17:37 in this very informative hour-long lecture:

NASA agrees with you too, I think. At least it seems like they agree with you, when they argue for the proposed GRASP (Geodetic Reference Antenna in SPace) mission.

BTW, if your identity is not a secret, I’d be grateful for an email. My address is here:

http://sealevel.info/contact.html

GPS is not accurate on a day-to-day basis but once a GPS station is operating for 5 years or so, a definitive signal emerges which is accurate to the tenth of a millimetre.

Sonel.org maintains a database of GPS stations which are co-located with Tide Gauges, and there are more than 200 co-located stations which have now been operating for more than 5 years.

This is the local land uplift around the world (there is a newer version of this now but the graphic available is not very good).

http://www.sonel.org/IMG/png/ulr5_vvf.png

The data can be obtained here:

http://www.sonel.org/-Sea-level-trends-.html?lang=en

1960-1992, GPS adjusted tide gauges: 1.82 mm/year.

1992 to 2013, GPS adjusted tide gauges: 2.12 mm/year.

In 2013, GPS adjusted tide gauges: -0.345 mm.

In 2012, GPS adjusted tide gauges: +4.25 mm.

In 2011, GPS adjusted tide gauges: +2.79 mm.

Since sea level changes with the ENSO, we should expect a large rise in 2015 and then a decline in 2016 and 2017.

Bill Illis wrote, “Since sea level changes with the ENSO…”

It depends on where you are. In San Diego, and in the satellite-altimetry graphs, sea-level changes with ENSO. But in the western tropical Pacific sea-level changes opposite to ENSO.

Here’s the J.Hansen / M.Sato graph showing the strong positive correlation between ENSO and satellite altimetry measurements of sea-level:

http://www.columbia.edu/~mhs119/SeaLevel/SL+Nino34.png

But look how San Diego and Kwajalein are mirror-opposites of each other:

http://sealevel.info/1820000_Kwajalein_San_Diego_2016-04_vs_ENSO.png

With proper weightings, it should be possible to build a “global sea-level” index/average from coastal tide-gauges which mostly eliminates the ENSO influence.

Because in the western tropical Pacific sea-level changes opposite to ENSO, I posit that you should be able to also construct a good ENSO proxy by calculating the ratio of news stories about “record high temperatures” to news stories about “drowning island paradises.”

Bill Illis, thank you. I will keep that Sonel link. Now I have a question.

How can there be GPS data available for the 1960s? Though the Navy had a limited system up in the 70s, the 24 satellite NAVSTAR system as we know GPS today did not become fully operational until 1993.

@ Bill Illis,

I always try to read your carefully constructed comments.

So it surprises me that you say “a definitive signal emerges which is accurate to the tenth of a millimetre.”

Our little orb is being stretched by forces, call it gravity or what you will, and you really think there is some kind of center point where all these forces can be measured from?

The idea is that a local land uplift or subsidence rate is a geologic phenomenon. The rate will be stable for decades if not thousands of years.

Most of the local GPS uplift/subsidence rates will be defined by the Earth rebounding/adjusting from the last ice age glacial loads. These rates have probably changed some through time but for the last several thousand years, they would have been very stable.

The other two impacts will be from:

– tectonic movement (which is again a million year type time-frame although a recent local earthquake can influence the GPS signal occasionally which is treated as a break-point when they happen); and then,

– underground water depletion or resupply (which is stable enough in terms of a decade or more).

Thus, the GPS rate of the last 5 years is probably the rate that has existed for at least a few decades if not thousands of years.

Bill ==> As a note, GPS rate of the last five years is accurate for now — but is no guarantee of the century or millennial scale. Tide Gauges, and their benchmarks, and their associated GPS stations, are located where the sea hits the land, as modified by humans at our whim — filled, built up, cities added, islands created, etc. Local effects at tide gauge CORS stations are often much more than their inland neighbors.

Bill Illis ==> The Sonel network is an attempt at creating a true network of GPS corrected Tide Gauges — but it is still in its infant stages. Many Tide Gauges are linked to GPS stations many kilometers distant (5 km, 7 km, etc). GPSs not attached to the same structure, in other words.

Sonel is better than nothing — but it does not yet give an accurate picture when we are looking at millimeters of change over multi-annual time scales.

I will look for the link to the standard for associated Continuous GPSs for this use — kilometers away is not within the standard for sure.

I will email Dr. Richard Snay, at the NGS, the question …. there is a standard, if I recall correctly.

In a general sense, the Continuous GPS should be attached to the same “immovable” structure as the tidal benchmark; this would mean the same bedrock-supported pier, or some such. Just having one within a few miles and then assuming the same vertical movement is an error, as it ignores all the causes (and their results) other than continental GIA movement.

u.k. (u.s.) ==> The reference on this is the CORS site, which explains how all this is done. For the complexity of the calculations that must be performed to arrive at the long-term trend or vertical movement, you might watch the 1.5 hour presentation on how this needs to be done to be accurate.

Bill Illis.

You said, “Thus, the GPS rate of the last 5 years is probably the rate that has existed for at least a few decades if not thousands of years.” Major faults such as the San Andreas have an average lateral motion of about 2 cm per year, but sections can become locked and move much less, until they release! These dominantly strike-slip faults have a vertical component as well. The only way that the average vertical motion over thousands of years can be calculated is to calculate the average of the episodic events, not through monitoring a short, quiet interval in-between events.

EW3 – that’s why the GRACE mission was so important. (2 sats)

GPS can measure vertical movement and East-West and North-South Movement. This has revolutionized continental drift movement and theory (we actually know now).

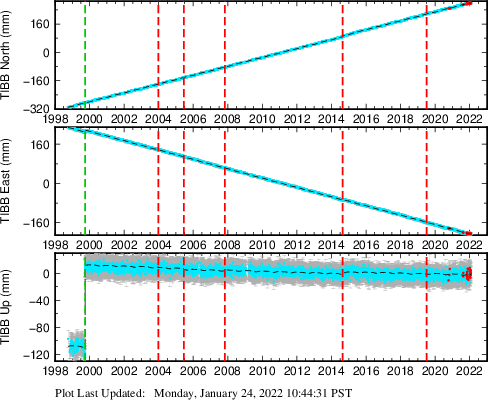

This is the data from the GPS station on the western side of the San Andreas fault at San Francisco (Tiburon Peninsula). The data is actually quite stable other than the earthquakes. Almost all GPS stations are like this with fairly stable trends. Wait five years and that is enough to be reasonably sure.

– west at 19.0 mm/year;

– north at 25.0 mm/year; and,

– down up 1.0 mm/year (although a Magnitude 5 Earthquake in 1999 shifted the station up by 120 mm)

Bill Illis ==> Dr. Richard Snay at NGS is my go-to guy for understanding the CORS results. He recommends waiting for the published analysis for each station as the calculation of long-term means is not as straightforward as we might like it to be.

Can you tell me the source of the graphs you provided?

Kip Hansen October 8, 2017 at 3:07 pm

The data comes from the USGS although Sonel.org also uses this station (Sonel’s charts can’t be linked to since I imagine they are right in the middle of the great global warming debate so they need to be careful and obscure at times).

https://earthquake.usgs.gov/monitoring/gps/SFBayArea/tibb

http://www.sonel.org/spip.php?page=gps&idStation=3024

Bill ==> Thanks for the links.

Bill Illis,

The two blue diagonal lines (EW & NS) represent the nominal 2 cm/yr ‘creep’ that takes place along unlocked sections of the fault line. It is generally thought that the creep does not relieve all the stress and therefore abrupt movements (earthquakes) can be anticipated at multi-centennial intervals to release the stored strain. The blue lines do not represent the long-term behavior of the faults.

Kip wrote, “For the complexity of the calculations that must be performed to arrive at the long-term trend or vertical movement, you might watch the 1.5 hour presentation on how this needs to be done to be accurate.”

When I started to play the video, it reported that the total length is 3 hours! Yikes!

The web version uses FlashPlayer, so the speed is not adjustable. But there’s a link to an .mp3 version. I guess the thing to do is download the .mp3 version and play it in VLC or similar, so that it can be sped up to save some time.

Thanks for the link!

Dave ==> Good suggestion. I use VLC player as well….very useful. (Not an official endorsement by the management of this blog.)

This grafting exercise has a certain taste of Excel in it. Who was the author, by the way?

Hansen I’m told, but not sure?

Are you talking about the 2nd graph on this J.Hansen / M.Sato page?

http://www.columbia.edu/~mhs119/SeaLevel/

Sato and Hansen. Yes, I’m surprised. OTOH, Hansen was ready to do the congress scam, so why not.

I think I might see a way to average over a month and improve the accuracy somewhat, although I’m not sure how to calculate the improvement. If we assume (as would be reasonable) that actual sea level doesn’t rise more than 0.17 mm per month (which would be the average gain if the actual rise was 2 mm/year), then for monthly purposes, we could assume that the daily measurements would likely center around this very small variation, and the kind of accuracy improvement that the author refers to (multiple measurements at a single point in a single day) could be applied, at least within the theoretical variance over the course of a month. Now that’s a lot of assumptions (including the notion that sea level rise is truly constant vs. fits and starts), but if you made those assumptions, you could (theoretically) improve the precision of the monthly measurement.

Am I off base here? Please chime in if so.

Taylor Pohlman ==> You are talking of determining Sea Level Rise from tide gauge data. Certainly, with a long enough time series, and data on vertical land movement, it would be possible to determine LOCAL absolute sea level change; this would still tell us nothing of Global Sea Level change.

I was talking about local sea level rise, which, as you pointed out, is the only relevant metric for people who might have concerns. Given different ocean bottom configurations, prevailing winds and currents, one would expect variations between locations, including trend variations. A single number for Global sea level rise, would therefore seem pretty meaningless, in much the same way that a single number for Global temperature does.

I was just pointing out that there should be ways to improve precision locally, vs. the ±2 cm for each single measurement.

Hi Kip,

I am enjoying reading your post and the subsequent comments and counter-comments. Can you please tell me about any specific source(s) where I can find data for vertical land movement? And, if you don’t mind, I would be grateful to receive your email address. Mine: https://www.researchgate.net/profile/Bishwajit_Roy5

Looking forward to the next post on SLR.

Let us be clear about our topic.

OK, good. We are talking about local and relative, as pertains to the people living there.

OK, good. On a beach, and the same at a tide gauge.

The gauge constantly measures the tide coming in, and going out. This gives me 2 opportunities a day to measure high water and low water. True, the tides get larger and smaller according to the phase of the moon, but the change is symmetrical (or close enough). So I calculate MSL twice a day or 60 per month.

It seems to me that as we average all the individual MSL readings, we do, in fact, gain precision.

Standard statistics requirements:

1) No systematic error in the instrument calibration. (a topic unto itself)

2) Measurement errors are random, and evenly distributed about the mean.

3) With conditions 1 and 2 satisfied, precision increases proportional to the square root of N.
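A minimal simulation sketch of condition 3, assuming conditions 1 and 2 hold exactly (no systematic error, purely random zero-mean Gaussian noise). The 2 cm noise level echoes the ±2 cm figure discussed in this thread; the true level, the sample counts, and the function name are hypothetical, and the sketch says nothing about calibration or other systematic error.

```python
import random
import statistics

def spread_of_means(true_level_cm, noise_sd_cm, n_readings, n_trials, seed=0):
    """Standard deviation, across n_trials, of the mean of n_readings noisy readings."""
    rng = random.Random(seed)
    means = []
    for _ in range(n_trials):
        # Each reading is the same true level plus independent random noise.
        readings = [rng.gauss(true_level_cm, noise_sd_cm) for _ in range(n_readings)]
        means.append(statistics.mean(readings))
    return statistics.stdev(means)

single = spread_of_means(100.0, 2.0, n_readings=1, n_trials=2000)
averaged = spread_of_means(100.0, 2.0, n_readings=100, n_trials=2000)
print(f"spread of single readings:    {single:.2f} cm")
print(f"spread of 100-reading means:  {averaged:.2f} cm")
print(f"ratio: {single / averaged:.1f} (square root of 100 is 10)")
```

Under these idealized assumptions the spread of the averaged value shrinks by roughly the square root of N. Whether real tide-gauge errors satisfy conditions 1 and 2 is exactly the point in dispute in this thread.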

Tony ==> Almost right — the precision of the mean — the mathematical midpoint of all the measurements — is more precise — but the original measurement error/measurement uncertainty must be added back onto the resultant mean. So while we could get a very precise midpoint (mean) — it will still only be properly represented by adding back on the +/- 2 cm.

The only way this makes sense to me is if you are talking about calibration error, not a measurement error.

I appreciate your comments, but I stand my ground.

(I would concede the point if it could be shown that individual determinations of MSL can *not* be averaged together.)

TonyL ==> I will have to write a separate essay to convince you — promise I will. I used to think as you do, until it was demonstrated to me that what I say is the actuality. You will have to wait for the essay — no time here.

Kip is absolutely correct. It doesn’t matter how many times you take a measurement, the accuracy of the instrument determines the error band. There is no reduction in the probability of the actual event being anywhere in the band ±2 cm.

This basic misunderstanding of how errors should be dealt with is endemic throughout ‘climatology’. It is the underlying reason behind the results of the ‘random walk’ analysis paper recently published on this site. The error bands that should surround all the data points used in the ‘climate change’ debate completely swamp any perceived ‘trends’.

Basically it’s all numerology, with no foundation in science at all.

James ==> Thank you, I don’t usually get much support for this oh-so-basic idea.

The correlation between emissions and sea level rise

https://ssrn.com/abstract=3023248

chaamjamal ==> The referenced study uses “The primary sea level dataset for this work is a global sea level reconstruction from 1807 to 2010 created by Dr. Svetlana Jevrejeva of the Permanent Service for Mean Sea Level (PSMSL) group at NOCL from a large number of sea level datasets worldwide (Jevrejeva, 2014).”

Tide Gauge data is not fit for the purpose of determining Global Sea Level change, because it is uncorrected for a known confounder: vertical movement of the land, which is of the same magnitude as the posited local change in sea level.

You are correct, Kip: at about 1/3 of the world’s long-term, high-quality tide gauge locations, vertical land motion (VLM) affects local sea-level more than does global sea-level rise. That’s why at many locations local sea-level is falling, rather than rising.

Tide gauge data from long-record gauges is, however, fit for the purpose of determining the derivative of global sea-level change (i.e., the 2nd derivative of global sea-level, i.e., sea-level rise acceleration), because the confounder, VLM, is known to be very nearly linear over centennial time scales, at most gauges, as scarletmacaw mentioned, above. Since the derivative of a linear component is zero, linear VLM cannot affect “acceleration” calculations.
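The point about a linear component dropping out of the second derivative can be checked numerically. This is only a sketch with made-up numbers: the acceleration, initial rate, and land-motion rate below are hypothetical, and second differences of yearly values stand in for the second derivative.

```python
def second_differences(series):
    """Discrete analogue of the second derivative for evenly spaced samples."""
    return [series[i + 1] - 2 * series[i] + series[i - 1]
            for i in range(1, len(series) - 1)]

years = range(0, 101)
accel = 0.01   # hypothetical acceleration, mm/yr^2
rate = 1.5     # hypothetical initial rate, mm/yr
vlm = -3.0     # hypothetical linear land motion, mm/yr (negative = subsidence)

# Sea level by itself, and the relative level a gauge on moving land would record.
sea_only = [rate * t + 0.5 * accel * t * t for t in years]
at_gauge = [s - vlm * t for s, t in zip(sea_only, years)]

d2_sea = second_differences(sea_only)
d2_gauge = second_differences(at_gauge)
max_gap = max(abs(a - b) for a, b in zip(d2_sea, d2_gauge))
print(f"largest difference between the two sets of second differences: {max_gap:.2e}")
```

The linear land-motion term changes the trend the gauge reports but leaves the second differences unchanged, apart from floating-point rounding. The sketch assumes VLM really is linear over the record, which is the premise stated above.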

What the longest tide gauge records tell us is that sea-level rise accelerated very slightly (by at most 1.5 mm/yr) during the latter 19th century and the first quarter of the 20th century, but there’s been little if any acceleration since then.

Brest, France, a/k/a PSMSL tide gauge #1, has the longest extant sea-level measurement record. In the 19th century it experienced no sea-level rise. Since 1900 it has experienced about 1.5 mm/yr sea-level rise (6 inches/century). Over the “satellite era” (since 1993) it has measured 2.1 ±1.8 mm/yr of sea-level rise, which is not significantly different from the long-term rate since 1900. Here are the graphs (sea-level in blue, juxtaposed with CO2 in green):

http://www.sealevel.info/MSL_graph.php?id=Brest&boxcar=1&boxwidth=3&thick&c_date=1800/1-1899/12

http://sealevel.info/190-091_Brest_1807-1900.png

http://www.sealevel.info/MSL_graph.php?id=Brest&boxcar=1&boxwidth=3&thick&c_date=1900/1-2019/12

http://sealevel.info/190-091_Brest_1900-2016.png

http://www.sealevel.info/MSL_graph.php?id=Brest&boxcar=1&boxwidth=3&thick&c_date=1993/1-2019/12

http://sealevel.info/190-091_Brest_1993-2016.png
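The "not significantly different" remark about Brest can be read as a simple interval check. A small sketch, assuming the quoted ±1.8 mm/yr is the half-width of the trend's confidence interval (the comment does not say which confidence level); the function name is hypothetical.

```python
def interval_contains(center, half_width, value):
    """True if value lies within center plus or minus half_width."""
    return center - half_width <= value <= center + half_width

long_term_rate = 1.5                 # mm/yr since 1900, as quoted above
sat_era_rate, sat_era_pm = 2.1, 1.8  # mm/yr since 1993, trend and quoted uncertainty

low = sat_era_rate - sat_era_pm
high = sat_era_rate + sat_era_pm
inside = interval_contains(sat_era_rate, sat_era_pm, long_term_rate)
print(f"satellite-era range: {low:.1f} to {high:.1f} mm/yr")
print(f"long-term rate inside that range: {inside}")
```

Because the short record's uncertainty band is wide, the long-term rate sits comfortably inside it, which is all the original statement claims.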

Here’s Brest with six feet of projected sea-level rise by 2100:

Linear:

http://www.sealevel.info/MSL_graph.php?id=Brest&boxcar=1&boxwidth=3&thick&lin_ci=0&lin_pi=1&xtraseg=1&x1=7.130&x2=8.959&g_date=1800/1-2099/12&c_date=1900/1-2019/12&x_date=2017/10-2099/12&co2=1

http://sealevel.info/190-091_Brest_France_plus_6ft_linear.png

Constant acceleration:

http://www.sealevel.info/MSL_graph.php?id=Brest&boxcar=1&boxwidth=3&thick&lin_ci=0&lin_pi=1&co2=1&xtraseg=2&g_date=1800/1-2099/12&c_date=1900/1-2019/12&x_date=2017/10-2099/12&x1=7.130&x2=8.959&xslope=2.100

http://sealevel.info/190-091_Brest_France_plus_6ft_constaccel.png

IMO, anyone who thinks that is plausible needs to get his meds adjusted, or something.

not a peer reviewed journal paper, just an amateur

(Crackers: I repeat my warning one last time — no trolling. If you have something constructive to say, and it is on topic, you may do so. Sniping not allowed here. — kh)

Please repair the link to Part 1.

majormike1 ==> Link repaired, thank you for the tip.

Do a daily search on “Sea Level” in the news. The usual story is a meter or more by 2100 and what are we going to do about it.

Here’s a story from Marinij.com this past Thursday:

California has a very low rate of sea level rise. The San Francisco tide gauge record, going back to 1856, has an overall rate of 1.5 mm/yr, and for the last thirty years the rate has been 1.9 mm/yr. Over much of the 20th century that 30-year rate was between 2 and 3 mm/yr.

Source

http://www.psmsl.org/data/obtaining/rlr.annual.data/10.rlrdata

Three feet over the next 100 years comes to an average rate of over 9 mm/yr. The question to ask the folks who write these articles is: when is this acceleration to these higher rates going to begin to happen? I sometimes doubt that these people even know that there’s a tide gauge in their area.
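The arithmetic behind that figure, as a quick sketch. The function name is hypothetical; the 1.9 mm/yr value is the recent San Francisco rate quoted above.

```python
MM_PER_FOOT = 304.8  # exact, by definition of the international foot

def average_rate_mm_per_yr(rise_feet, years):
    """Average rate needed to accumulate rise_feet of rise over the given years."""
    return rise_feet * MM_PER_FOOT / years

required = average_rate_mm_per_yr(3, 100)
recent_30yr = 1.9  # mm/yr, San Francisco, as quoted above
print(f"required average rate: {required:.2f} mm/yr")
print(f"multiple of the recent 30-year rate: {required / recent_30yr:.1f}")
```

This is an average only; a scenario that starts near today's rate would need still higher rates later in the century to reach the same total.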

Steve Case ==> Again, no correction to the Tide Gauge data for vertical land movement — settlement of the river delta mud. It would be possible to come up with valid data, there are several CORS stations around SF Bay.

?????????????

Relative sea level is a function of land movement and sea level change. Correcting tide gauges for vertical land movement is an attempt to measure absolute sea level rise. Absolute sea level rise is what the satellites are trying to measure.
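The relationship described here can be written as a one-line bookkeeping rule. A minimal sketch, assuming a sign convention in which land uplift is positive and subsidence negative; the function names and the three example sites are hypothetical.

```python
def relative_trend(absolute_trend_mm_yr, land_uplift_mm_yr):
    """Trend seen at the shoreline: the sea's own change minus the land's rise."""
    return absolute_trend_mm_yr - land_uplift_mm_yr

def absolute_trend(relative_trend_mm_yr, land_uplift_mm_yr):
    """Tide-gauge (relative) trend corrected for vertical land motion."""
    return relative_trend_mm_yr + land_uplift_mm_yr

# Three hypothetical sites that all share the same absolute trend of 1.5 mm/yr.
sites = [("subsiding delta", -2.0), ("stable bedrock", 0.0), ("rebounding coast", 4.0)]
for name, uplift in sites:
    print(f"{name}: relative trend {relative_trend(1.5, uplift):+.1f} mm/yr")
```

The same absolute change shows up as a rise, a smaller rise, or a fall depending on what the land is doing, which is why the relative figure is the one that matters to a given locality.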

The folks on the West Coast don’t have a problem. Relative sea level is what they need to know and the professors they are listening to are quoting satellite data. It’s a giant bait & switch shell game of propaganda and good old-fashioned B.S.

If you really want to correct for land movements, there’s the Peltier data

http://www.psmsl.org/train_and_info/geo_signals/gia/peltier/

where values are listed for land movement by tide gauge location.

Steve ==> I was speaking in support of your case — SF Bay is a delta area, where the land subsides on its own as the mud settles.

The Peltier data is an estimate of expected GIA rise or fall of land masses. Peltier data are not measurements and have not been ground-truthed against continuously operating GPS stations.

PSMSL data sets are not corrected for vertical land movement.

The Western US doesn’t have major concerns about Sea Level Rise (see Part 1).

However, SF Bay locations may have, when subsidence is taken into account.

Thanks for the article, very interesting and I look forward to more. It coincides with a recent experience I had with water levels. I just came back from muskie fishing in Minnesota and had a conversation with my fishing buddy about “tides” on one of the large lakes we fished. I hadn’t thought about it much until I observed what was obviously an “inter tidal zone” along the shore. Not wanting to leave it at that I checked it out with some research when I came home. While there apparently are tides of a few centimeters on large lakes there is a significant change in lake levels caused by seiches. It is a phenomenon where wind and barometric changes can make standing waves that come and go with very low frequencies and is related to the same physics as storm surges. Interesting stuff. Thanks, again.

Steve Lohr ==> Interesting story of the “tides” on Minnesota lakes …thanks for sharing.

Years ago, camping on one of the many islands, I watched the level of the Lake of the Woods shift a foot with a wind change. Some fishermen, without knowing it, had been running over a reef in the middle of a channel two days in a row, and did not make it over on the third day. The aluminum boat returned after the grounding; the fiberglass boat did not. Yes, on a large lake wind does make a difference. Of course, all of it could have been avoided if those fishermen had bought a lake map.

“Averaging does not increase accuracy or precision.”

That is a point that has been totally lost in climate ‘science’. They even think that taking averages of uncorrelated model results somehow adds information. We have a hell of a battle to overcome this one.

LewSkannen ==> The idea has infected much of science. I blame computers and the Department of Statistics.

Kip sounds like he’s never progressed beyond the level 101 “Introduction to Statistics” class. Tell me Kip, what effect does increasing the number of samples have on the estimator for the population mean? Does it not reduce the error of the estimator?

MSJ, I can see how multiple measurements of the same thing would increase accuracy. This is a case of multiple measurements of different things, so it is more analogous to shooting at multiple moving targets. Tell me, O great guru of statistics, how that increases accuracy?

“…I blame computers and the Department of Statistics…..” And I blame people all too willing to support a narrative for ideological reasons. Very informative, thank you.

Mark S Johnson ==> For an in-depth look at the issue, see my recent Series on Averages. Many measurements increase the apparent precision of the mean, but they do not evade the original measurement uncertainty (a result of its maximum accuracy; for tide gauges, +/- 2 cm), which must be attached to the resultant mean after calculation.

It is the accuracy/precision of the mean that is in question…..original measurement error does not reduce through averaging.

Original measurement accuracy never changes. However, the average of increasing numbers of measurements does in fact increase the accuracy of the population mean estimator. You can’t measure the population mean with a single measurement, hence the accuracy of an individual measurement of said population mean does in fact increase with increasing samples.

You are incorrect to say that: “which must be attached to the resultant mean after calculation.”

…

The accuracy is determined by the standard error: https://wikimedia.org/api/rest_v1/media/math/render/svg/49c74c15865d7fac09955b1b958feb8ada7362cf

…

Sigma is your “measurement precision.” Don’t confuse precision with accuracy which you apparently are doing.
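The two positions in this exchange can be put side by side in a small simulation. What follows is a sketch under stated assumptions, not anyone's actual tide gauge procedure: readings are modeled as a true value plus Gaussian scatter with sigma = 2 cm (treating the +/- 2 cm figure as a standard deviation is itself an assumption), and the 1.5 cm `bias` is a hypothetical uncorrected instrument offset. The function and parameter names are made up for illustration.

```python
import random
import statistics

def simulate_mean(n, true_value=100.0, sigma=2.0, bias=0.0, seed=0):
    """Return (mean, standard error) of n simulated readings, in cm.

    Each reading is true_value + bias + Gaussian scatter with std dev sigma.
    `bias` stands in for a hypothetical systematic (calibration) offset.
    """
    rng = random.Random(seed)
    readings = [true_value + bias + rng.gauss(0.0, sigma) for _ in range(n)]
    mean = statistics.fmean(readings)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    sem = statistics.stdev(readings) / n ** 0.5
    return mean, sem

for n in (10, 1_000, 100_000):
    mean, sem = simulate_mean(n)
    biased_mean, _ = simulate_mean(n, bias=1.5)
    print(f"n={n:>6}  mean={mean:8.3f}  standard error={sem:.4f}  "
          f"mean with 1.5 cm offset={biased_mean:8.3f}")
```

In this model the random scatter in the mean shrinks roughly as sigma divided by the square root of n, while the offset passes through to the mean unchanged however many readings are taken. Which of the two dominates for a real gauge is the point under dispute here, and the simulation does not settle it.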

@ Kip Hansen

I see!

You seem to have made the assumption that the absolute calibration of the instrument is no better than the precision of an individual reading. That is, if a reading is +/- 2 cm, then the final mean must be +/- 2 cm.

Not True!

An instrument can be calibrated far better than the precision of any individual reading. How so, you may ask?

Simple. You lock the unit down on its calibration/test stand and let it run *all*day*long*. When you are done, it can be calibrated very accurately, even if individual readings are a bit flaky.

You seem to be mixing up accuracy and precision in some difficult ways, in regards to calibration, and then measurement.

Cheers.

You hit the nail on its head TonyL.

…

For example, I can measure the average height of an American male with an 8-foot stick that has markings every foot. Each individual measurement will be to the closest foot, but the average of all the readings will yield the measurement of the population mean to the nearest inch if I take enough samples.

Kip,

If we are defining the uncertainty as the standard deviation (+/- 1 or 2 SD) derived from the probability distribution of the measured quantities, then it appears that the diurnal tidal variations (smallest range) will have the smallest uncertainty, and the mixed semi-diurnal (largest range) will have the largest uncertainty. Thus, a weighted-average should probably be used to describe the average uncertainty for the tidal variations.

You remarked, “…the level of the sea is changing every moment because of the tides, waves and wind, there is not, in reality a single experiential water level we can call local Sea Level.” It should be noted that the instantaneous sea level also changes with barometric pressure, such as when weather ‘highs’ and ‘lows’ (especially hurricanes) pass over an area. To properly assign an uncertainty, all of these factors should be used to construct a probability distribution for a particular interval of time. That is, apparent sea level change varies with locality and date, and the uncertainty varies correspondingly.

TonyL ==> Maybe PSMSL and NOAA will institute increased calibration of their tide gauges. To date, they have not done so. Until then……

Clyde ==> “If we are defining the uncertainty as the standard deviation (+/- 1 or 2 SD) derived from the probability distribution of the measured quantities, ”

You are talking statistics — a language unto itself. Probabilities are not measurements — they are something else altogether.

I am talking measurement error, uncertainty in the actual real measurements. I will demonstrate my point in a future essay. It took a statistician months to beat this truth into me; I had to do assigned homework, but I learned my lesson.

Kip says: “Probabilities are not measurements”

…

“….. It took a statisticians months to beat….” and they failed miserably. Contact any actuary working in life insurance. They’ll tell you all about the probabilities gleaned from measured life spans.

Troll Johnson demonstrates once again that it is he who doesn’t understand anything.

If you aren’t measuring the same thing, then averaging them doesn’t improve accuracy.

TonyL, in such a situation you have the same sensor reading the same thing thousands of times.

If you took a thousand sensors, in a thousand locations, then averaging those readings would not increase accuracy.

@ MarkW:

Correct. I was specifically addressing *one* tide gauge used for determining MSL at *one* location.

As you know, averaging a heterogeneous collection of readings from all kinds of locations is problematic at best, and invites utter chaos at worst.

Mark’s johnson is displaying a junior high level understanding of measurement .. if that. !!

Please keep going.. It’s funny to watch. 🙂

Mark S Johnson writes

No. If you have an 18-foot stick with markings every 6 feet, will you still measure the average height with enough measurements?

Now convince me that GMSL is measured accurately with “lots” of inaccurate measurements.
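The two measuring-stick thought experiments above (markings every foot, and markings every 6 feet) are easy to try numerically. This is a sketch only: the population of heights is hypothetical (Gaussian, mean 70 inches, standard deviation 3 inches, both assumed values), each reading is rounded to the nearest marking, and the function name is invented for the example.

```python
import random
import statistics

def mean_of_coarse_readings(heights_in, spacing_in):
    """Round each height to the nearest stick marking, then average the readings."""
    readings = [round(h / spacing_in) * spacing_in for h in heights_in]
    return statistics.fmean(readings)

rng = random.Random(42)
# Hypothetical population: mean 70 in, standard deviation 3 in (assumed values).
heights = [rng.gauss(70.0, 3.0) for _ in range(200_000)]

true_mean = statistics.fmean(heights)
mean_1ft = mean_of_coarse_readings(heights, 12)  # markings every foot
mean_6ft = mean_of_coarse_readings(heights, 72)  # markings every 6 feet

print(f"mean of exact heights:        {true_mean:.2f} in")
print(f"mean of nearest-foot reads:   {mean_1ft:.2f} in")
print(f"mean of nearest-6-foot reads: {mean_6ft:.2f} in")
```

Under these assumed numbers the nearest-foot readings average out roughly an inch above the true mean, because the population straddles the 5-foot and 6-foot marks unevenly. With 6-foot markings every reading is 72 inches, so more samples change nothing. How well averaging recovers the mean depends on how the spread of the thing measured compares with the spacing of the markings.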

Mark S Johnson

October 7, 2017 at 4:54 pm

Mods – This MSJ seems to be just a troll by its style of insult.

Kip,

If you are measuring something with a constant value, such as the weight of an object, then with respect to the precision of the weight, one only needs to be concerned with the precision (and accuracy) of the measurement instrument. Taking multiple measurements can reduce the random error and improve the precision through the standard error of the mean. Remember, the standard deviation means that ~68% of the readings will be expected to fall within +/- 1 standard deviation. That sounds like probability to me!

However, when measuring something that is changing all the time, such as sea level, then probability does certainly come into play. If one takes a single reading over a day, there is very low probability that the reading will reflect the average level of the water during the day. Despite measuring to the nearest millimeter, a reading taken at high tide might be two or three meters higher than a reading taken at low tide. Thus, averaging even two or three readings does not much improve the probability that one has a representative measurement of the average water level. As the number of measurements increases, the average will approach an accurate estimate of the mean water level for the period of time over which the readings were taken. However, the precision of the individual measurements will remain constant, and will not be improved. The extreme values (high and low tide, large waves, etc.) will have infrequent occurrences and most readings will fall in between and cluster around the actual mean. That is, the probability distribution will provide guidance on what the mean value is, what the standard deviation is, and whether or not the distribution is skewed. Actually, because of slack water between tides, I suspect the distribution will be bimodal for diurnal tides and maybe multimodal for semi-diurnal.

To summarize, one can take a measurement of the instantaneous water level with reasonable accuracy and precision (you claim +/- 2 cm precision and I have no reason to question that). However, the Empirical Rule states that the estimate for the standard deviation is related to the range of values. That is, for something that is varying, the standard deviation will be larger than if it had a constant value. That is why one cannot average a large number of readings and justify reporting more significant figures. So, I stand by my original claim that one can expect a higher level of precision for the average sea level in the mid-Pacific than for, say, the Bay of Fundy.
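The dependence of the spread on tidal range can be illustrated with a toy tide. This is a rough sketch, not a tidal model: the tide is a single sine wave with a period of about 12.42 hours, readings are taken every 6 minutes for 30 days with Gaussian noise of 2 cm, and the two amplitudes (30 cm and 600 cm) are assumed stand-ins for a small-range and a large-range site rather than measured values for any real location.

```python
import math
import random
import statistics

def sample_tide(amplitude_cm, days=30, step_min=6, noise_cm=2.0, seed=1):
    """Simulated water-level readings (cm) about a mean level of zero."""
    rng = random.Random(seed)
    period_h = 12.42  # approximate semi-diurnal tidal period, in hours
    n = int(days * 24 * 60 / step_min)
    readings = []
    for i in range(n):
        t_h = i * step_min / 60.0
        level = amplitude_cm * math.sin(2 * math.pi * t_h / period_h)
        readings.append(level + rng.gauss(0.0, noise_cm))
    return readings

small_range = sample_tide(30.0)   # assumed small-range site
large_range = sample_tide(600.0)  # assumed large-range site

for name, data in (("small range", small_range), ("large range", large_range)):
    print(f"{name}: n={len(data)}  mean={statistics.fmean(data):7.2f} cm  "
          f"std dev={statistics.stdev(data):7.2f} cm")
```

In both cases the mean of the many readings lands close to the true mean level of zero, but the standard deviation of the readings scales with the tidal amplitude, not with the 2 cm instrument noise. A single reading, or a handful of them, can therefore be much further from the mean at the large-range site.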

Clyde ==> And with that, I wouldn’t disagree.

Mark S Johnson ==> Despite a lot of jargon and definitional proofs, in the real world — where things are measured with rulers and thermometers and yardsticks, the fact remains that when uncertain measurements are averaged to find a mean, the uncertainty of the original measurements devolves on the mean.

As I have promised TonyL, I will write an essay to demonstrate this in simple grade school arithmetic.

I will look forward to it.

We can give this topic a rest until then.

Kip, you are confusing measuring a physical item with estimating a population mean. As I noted above, your understanding of sampling theory (which is a branch of mathematical statistics) is seriously lacking.

TonyL and MSJ,

While we are waiting on Kip to respond, you might want to read these:

https://wattsupwiththat.com/2017/04/12/are-claimed-global-record-temperatures-valid/

https://wattsupwiththat.com/2017/04/23/the-meaning-and-utility-of-averages-as-it-applies-to-climate/

Basically, increasing the number of measurements of some variable will increase the accuracy of the estimate of the mean, but the precision is constrained by the precision of the measurement device. If the thing being measured is constant, then one can improve the precision by eliminating the random error, which should be (in a well-designed experiment) very much smaller than the magnitude of change of some variable.

Before you insult someone, I think that you would do well to be sure that what you believe to be factual actually is. It may avoid some embarrassment and a need to apologize.

Clyde, when calculating a mean, the number of samples is a “perfect” number, hence, the number of digits in the result is the precision of the estimator. Don’t confuse an individual measurement with the measurement of the population mean.

One is not measuring a single population mean in climate, one is measuring the change in the average over time. The large number principle might apply to the temperature of Timbuctoo January 20, 2017 @ 1200, but not to single measurements over time of different places.

Mark S Johnson,

I get the sense that you did not bother to read either of the links I provided.

They think that if they take a few thousand measurements from a few thousand locations using a different instrument at each location and a few dozen different types of instruments, on approximately the same day, they can improve their precision by averaging all those readings.