Guest Essay by Kip Hansen

This essay is the third and last in a series of essays about Averages — their use and misuse. My interest is in the logical and scientific errors, the informational errors, that can result from what I have playfully coined “The Laws of Averages”.

Averages

As both the word and the concept “average” are subject to a great deal of confusion and misunderstanding in the general public and both word and concept have seen an overwhelming amount of “loose usage” even in scientific circles, not excluding peer-reviewed journal articles and scientific press releases, I gave a refresher on Averages in Part 1 of this series. If your maths or science background is near the great American average, I suggest you take a quick look at the primer in Part 1 then read Part 2 before proceeding.

Why is it a mathematical sin to average a series of averages?

“Dealing with data can sometimes cause confusion. One common data mistake is averaging averages. This can often be seen when trying to create a regional number from county data.” — Data Don’ts: When You Shouldn’t Average Averages

“Today a client asked me to add an “average of averages” figure to some of his performance reports. I freely admit that a nervous and audible groan escaped my lips as I felt myself at risk of tumbling helplessly into the fifth dimension of “Simpson’s Paradox”– that is, the somewhat confusing statement that averaging the averages of different populations produces the average of the combined population.” — Is an Average of Averages Accurate? (Hint: NO!)

“Simpson’s paradox… is a phenomenon in probability and statistics, in which a trend appears in different groups of data but disappears or reverses when these groups are combined. It is sometimes given the descriptive title reversal paradox or amalgamation paradox.” — the Wiki “Simpson’s Paradox”

Averaging averages is only valid when the sets of data (groups, cohorts, number of measurements) are all exactly equal in size (or very nearly so), contain the same number of elements, represent the same area, same volume, same number of patients, same number of opinions and, as with all averages, the data itself is physically and logically homogeneous (not heterogeneous) and physically and logically commensurable (not incommensurable). [If this is unclear, please see Part 1 of this series.]

For example, if one has four 6th Grade classes, each containing exactly 30 pupils, and wished to find the average height of the 6th Grade students, one could go about it two ways: 1) Average each class by summing the heights of the students then finding the average by dividing by 30, then summing the averages and dividing by four to get the overall average – an average of the averages or 2) combine all four classes together in one set of 120 students, sum the heights, and divide by 120. The results will be the same.

The contrary example is four classes of 6th Grade students, each of differing sizes: 30, 40, 20, and 60. Finding four class averages and then averaging the averages gives one answer, quite different from the answer if one summed the height of all 150 students and divided by 150. Why? It is because the individual students in the class with only 20 students and the individual students in the class of 60 students will have differing, unequal effects on the overall average. For the average to be valid, each student should represent 0.67% of the overall average [one divided by 150]. But when averaged by class, each class then accounts for 25% of the overall average. Thus each student in the class of 20 would count for 25%/20 = 1.25% of the overall average, whereas each student in the class of 60 would count for only 25%/60 = 0.417% of the overall average. Similarly, students in the classes of 30 and 40 each count as 0.83% and 0.625%. Each student in the smallest class would affect the overall average three times as much as each student in the largest class, contrary to the ideal of each student having an equal effect on the average.
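The class-size arithmetic can be checked with a short sketch. The class sizes come from the example; the class mean heights are hypothetical values invented only to make the two methods produce visibly different numbers.

```python
# Class sizes are from the example; the mean heights are hypothetical
# (assumed for illustration, not data from the essay).
class_sizes = [30, 40, 20, 60]
class_mean_heights_cm = [150.0, 152.0, 146.0, 155.0]

total_students = sum(class_sizes)  # 150

# Method 1: average the four class averages (each class counts for 25%).
average_of_averages = sum(class_mean_heights_cm) / len(class_mean_heights_cm)

# Method 2: weight each class mean by its size, which is equivalent to
# summing all 150 heights and dividing by 150.
pooled_average = (
    sum(n * m for n, m in zip(class_sizes, class_mean_heights_cm)) / total_students
)

# Share of the final figure carried by a single student under Method 1.
per_student_weight_by_class = [(1 / len(class_sizes)) / n for n in class_sizes]

print(f"average of averages: {average_of_averages:.2f} cm")
print(f"pooled average:      {pooled_average:.2f} cm")
for n, w in zip(class_sizes, per_student_weight_by_class):
    print(f"class of {n}: each student carries {w:.3%} of the result")
```

With these assumed heights the two methods differ by more than a centimetre, and a student in the class of 20 carries three times the weight of a student in the class of 60.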

There are examples of this principle in the first two links for the quotes that prefaced this section. (here and here)

For our readers in Indiana (that’s one of the states in the US), we could look at Per Capita Personal Income of the Indianapolis metro area:

This information is provided by the Indiana Business Research Center in an article titled: “Data Don’ts: When You Shouldn’t Average Averages”.

As you can see, if one averages the averages of the counties, one gets a PCPI of $40,027; however, aggregating first and then averaging gives a truer figure of $40,527. This result has a difference — in this case an error — of 1.36%. Of interest to those in Indiana, only the top three earning counties have PCPI higher than the state average, by either system, and eight counties are below the average.

If this seems trivial to you, consider that various claims of “striking new medical discoveries” and “hottest year ever” are based on just these sorts of differences in effect sizes that are in the range of single-digit percentage points, or even a fraction of a percentage point, or tenths or hundredths of a degree.

To compare with climatology, the published anomalies from the 30-year climate reference period (1981-2011) for the month of June 2017 range from 0.38°C (ECMWF) to 0.21°C (UAH), with the Tokyo Climate Center weighing in with a middle value of 0.36°C. The range (0.17°C) is nearly 25% of the total temperature increase for the last century (0.71°C). Even looking at only the two highest figures, 0.38°C and 0.36°C, the difference of 0.02°C is 5% of the total anomaly.

How exactly these averages are produced matters a very great deal in the final result. It matters not at all whether one is averaging absolute values or anomalies: the magnitude of induced error can be huge.

Related, but not identical, is Simpson’s Paradox.

Simpson’s Paradox

Simpson’s Paradox, or more correctly the Simpson-Yule effect, is a phenomenon that occurs in statistics and probabilities (and thus with averages), often seen in medical studies and various branches of social sciences, in which a result (a trend or effect difference, for example) seen when comparing groups of data disappears or reverses itself when the groups (of data) are combined.

Some examples of Simpson’s Paradox are famous. One with implications for today’s hot topics involved claimed bias in admission ratios for men and women at UC Berkeley. Here’s how one author explained it:

“In 1973, UC Berkeley was sued for gender bias, because their graduate school admission figures showed obvious bias against women.

Men were much more successful in admissions than women, leading Berkeley to be “one of the first universities to be sued for sexual discrimination”. The lawsuit failed, however, when statisticians examined each department separately. Graduate departments have independent admissions systems, so it makes sense to check them separately—and when you do, there appears to be a bias in favor of women.”

In this instance, the combined (amalgamated) data across all departments gave the less informative view of the situation.

Of course, like many famous examples, the UC Berkeley story is a Scientific Urban Legend – the numbers and mathematical phenomenon are true, but there never was a gender bias lawsuit. Real story here.

Another famous example of Simpson’s Paradox was featured (more or less correctly) on the long-running TV series Numb3rs. (full disclosure: I have watched all episodes of this series over the years, some multiple times). I have heard that some people like sports statistics, so this one is for you. It “involves the batting averages of players in professional baseball. It is possible for one player to have a higher batting average than another player each year for a number of years, but to have a lower batting average across all of those years.”

This chart makes the paradox clear:

Each individual year, Justice has a slightly better batting average, but when the three years are combined, Jeter has the slightly better stat. This is Simpson’s Paradox: results reverse depending on whether multiple groups of data are considered separately or aggregated.
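A minimal sketch of the reversal follows. The season-by-season hits and at-bats are the figures commonly cited for this Justice/Jeter example (1995 to 1997); they are an assumption here, not values read from the chart.

```python
# (hits, at-bats) per season. Figures as commonly cited for this example;
# an assumption here, not taken from the essay's chart.
seasons = {
    "Jeter":   {1995: (12, 48),   1996: (183, 582), 1997: (190, 654)},
    "Justice": {1995: (104, 411), 1996: (45, 140),  1997: (163, 495)},
}


def season_average(player, year):
    hits, at_bats = seasons[player][year]
    return hits / at_bats


def combined_average(player):
    # Pool all hits and all at-bats before dividing.
    total_hits = sum(hits for hits, _ in seasons[player].values())
    total_at_bats = sum(at_bats for _, at_bats in seasons[player].values())
    return total_hits / total_at_bats


for year in (1995, 1996, 1997):
    print(
        year,
        f"Jeter {season_average('Jeter', year):.3f}",
        f"Justice {season_average('Justice', year):.3f}",
    )

print(
    "combined",
    f"Jeter {combined_average('Jeter'):.3f}",
    f"Justice {combined_average('Justice'):.3f}",
)
```

The reversal comes from the unequal at-bats: each player's combined figure is dominated by different seasons, so the per-season comparison and the pooled comparison weight the years differently.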

Climatology

In climatology, the various groups go to great lengths to avoid the downsides of averaging averages. As we will see in comments, various representatives of the various methodologies will weigh in and defend their methods.

One group will claim that they do not average at all — they engage in “spatial prediction” which somehow magically produces a prediction that they then simply label as the Global Average Surface Temperature (all while denying having performed averaging). They do, of course, start with daily, monthly, and annual averages — but not real averages…..more on this later.

Another expert might weigh in and say that they definitely don’t average temperatures….they only average anomalies. That is, they find the anomalies first and then average those. If pressed hard enough, this faction will admit that the averaging has long before been accomplished: the local station data (daily average dry bulb temperature) is averaged repeatedly to arrive at monthly averages, then annual averages; sometimes multiple stations are averaged to achieve a “cell” average; and then these annual or climatic averages are subtracted from the present absolute temperature average (monthly or annual, depending on the process) to leave a remainder, which is called the “anomaly”. Oh, and then the anomalies are averaged. The anomalies may or may not, depending on system, actually represent equal areas of the Earth’s surface. [See the first section for the error involved in averaging averages that do not represent the same fraction of the aggregated whole.] This group, and nearly all others, rely on “not real averages” at the root of their method.

Climatology has an averaging problem but the real one is not so much the one discussed above. In climatology, the daily average temperature used in calculations is not an average of the air temperatures experienced or recorded at the weather station during the last 24 hour period under consideration. It is the arithmetic mean of the lowest and highest recorded temperatures (Lo and Hi, the Min Max) for the 24 hour period. It is not the average of all the hourly temperature records, for instance, even when they are recorded and reported. No matter how many measurements are recorded, the daily average is calculated by summing the Lo and the Hi and dividing by two.

Does this make a difference? That is a tricky question.

Temperatures have been recorded as High and Low (Min-Max) for 150 years or more. That’s just how it was done, and in order to remain consistent, that’s how it is done today.

A data download of temperature records for weather station WBAN:64756, Millbrook, NY, for December 2015 through February 2016 gives temperature readings every five minutes. The data set includes values for “DAILYMaximumDryBulbTemp” and “DAILYMinimumDryBulbTemp” followed by “DAILYAverageDryBulbTemp”, all in degrees F. DAILYAverageDryBulbTemp is the arithmetical mean of the two preceding values (Max and Min). It is this last that is used in climatology as the Daily Average Temperature. On a typical December day, the recorded values look like this:

Daily Max 43 — Daily Min 34 — Daily Average 38 (the arithmetic mean is really 38.5, however, the algorithm apparently rounds x.5 down to x)

However, the Daily Average of All Recorded Temperatures is: 37.3….

The differences on this one day:

Difference between reported Daily Average of Hi-Lo and actual average of recorded Hi-Lo numbers = 0.5 °F due to rounding algorithm.

Difference between reported Daily Average and the more correct Daily Average Using All Recorded Temps = 0.667 °F
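The two differences for this one day can be reproduced in a few lines. The Max, Min and reported Daily Average are the values quoted above; reading “37.3….” as 37⅓ °F is an assumption, chosen because it matches the stated 0.667 °F difference.

```python
# Values quoted in the essay for one December day (degrees F).
daily_max_f = 43
daily_min_f = 34
reported_daily_average_f = 38  # as it appears in the station file

# Assumption: "37.3..." is read as 37 1/3, consistent with the 0.667 figure.
mean_of_all_readings_f = 37 + 1 / 3

# The unrounded Hi-Lo mean.
hi_lo_mean_f = (daily_max_f + daily_min_f) / 2

# Difference caused only by rounding 38.5 down to 38.
rounding_difference_f = hi_lo_mean_f - reported_daily_average_f

# Difference between the reported value and the mean of every reading.
reported_vs_all_readings_f = reported_daily_average_f - mean_of_all_readings_f

# Difference between the unrounded Hi-Lo mean and the mean of every reading.
hi_lo_vs_all_readings_f = hi_lo_mean_f - mean_of_all_readings_f

print(f"Hi-Lo mean:                  {hi_lo_mean_f:.1f} F")
print(f"rounding difference:         {rounding_difference_f:.1f} F")
print(f"reported vs all readings:    {reported_vs_all_readings_f:.3f} F")
print(f"unrounded Hi-Lo vs all:      {hi_lo_vs_all_readings_f:.3f} F")
```

Note that rounding happens to pull the reported figure toward the all-readings mean on this day; the unrounded Hi-Lo mean sits more than a degree above it.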

Other days in January and February show a range of difference between the reported Daily Average and the Average of All Recorded Temperatures from 0.1°F through 1.25°F to a high noted at 3.17°F on January 5, 2016.

This is not a scientific sampling, but it is a quick ground-truth case study. It shows that the numbers being averaged from the very start (the Daily Average Temperatures officially recorded at surface stations, the unmodified basic data themselves) are not calculated to any degree of accuracy or precision at all, but rather are calculated “the way we always have”: by finding the mean between the highest and lowest temperatures in a 24-hour period. That does not even give us what we would normally expect as the “average temperature during that day”, but some other number: a simple mean between the Daily Lo and the Daily Hi, which the above chart reveals to be quite different. The average distance from zero for the two-month sample is 1.3°F. The average of all differences, including the sign, is 0.39°F.

The magnitude of these daily differences? Up to or greater than the commonly reported climatic annual global temperature anomalies. It does not matter one whit whether the differences are up or down — it matters that they imply that the numbers being used to influence policy decisions are not accurate all the way down to basic daily temperature reports from single weather stations. Inaccurate data never ever produces accurate results. Personally, I do not think this problem disappears when using “only anomalies” (which some will claim loudly in comments) — the basic, first-floor data is incorrectly, inaccurately, imprecisely calculated.

But, but, but….I know, I can hear the complaints now. The usual chorus of:

- It all averages out in the end (it does not)

- But what about the Law of Large Numbers? (magical thinking)

- We are not concerned with absolute values, only anomalies.

The first two are specious arguments.

The last I will address. The answer lies in the “why” of the differences described above. The reason for the difference (other than the simple rounding up and down of fractional degrees to whole degrees) is that the air temperature at any given weather station is not distributed normally….that is, graphed minute to minute, or hour to hour, one would not see a “normal distribution”, which would look like this:

If air temperature was normally distributed through the day, then the currently used Daily Average Dry Bulb Temperature — the arithmetic mean between the day’s Hi and Lo — would be correct and would not differ from the Daily Average of All Recorded Temperatures for the Day.

But real air surface temperatures look much more like these three days from January and February 2016 in Millbrook, NY:

Air temperature at a weather station does not start at the Lo, climb evenly and steadily to the Hi, and then slide back down evenly to the next Lo. That is a myth, as any outdoorsman (hunter, sailor, camper, explorer, even jogger) knows. Yet in climatology, Daily Average Temperature, and all subsequent weekly, monthly, and yearly averages, are calculated based on this false idea. At first this was out of necessity (weather stations used Min-Max recording thermometers and were often checked only once per day, with the recording tabs reset at that time), and now it is out of respect for convention and consistency. We can’t go back and undo the facts, but we need to acknowledge that the Daily Averages from those Min-Max/Hi-Lo readings do not represent the actual Daily Average Temperature, in either accuracy or precision. This insistence on consistency means that the error ranges represented in the above example affect all Global Average Surface Temperature calculations that use station data as their source.

Note: The example used here is of winter days in a temperate climate. The situation is representative, but not necessarily quantitatively — both the signs and the sizes of the effects will be different for different climates, different stations, different seasons. The effect cannot be obviated through statistical manipulation or reducing the station data to anomalies.

Any anomalies derived by subtracting climatic scale averages from current temperatures will not tell us if the average absolute temperature at any one station is rising or falling (or how much). It will tell us only that the mean between the daily hi-low temperatures is rising or falling, which is an entirely different thing. Days with very low lows for an hour or two in early morning followed by high temps most of the rest of the day have the same hi-low mean as days with very low lows for 12 hours and a short hot spike in the afternoon. These two types of days do not have the same actual average temperature. Anomalies cannot illuminate the difference. A climatic shift from one to the other will not show up in anomalies, yet the environment would be greatly affected by such a regime shift.
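The two types of day can be sketched with synthetic hourly data. The temperatures below are hypothetical, built only to give both days the same Hi and Lo; they are not station records.

```python
from statistics import mean

# Hypothetical hourly temperatures in degrees F, 24 values per day.
# Day A: a two-hour cold dip, then warm for the remaining 22 hours.
day_a = [30] * 2 + [50] * 22
# Day B: cold for 12 hours, cool for 10, and a two-hour hot spike.
day_b = [30] * 12 + [35] * 10 + [50] * 2


def hi_lo_mean(temps):
    """Mean of the day's maximum and minimum (the min-max daily average)."""
    return (max(temps) + min(temps)) / 2


for name, temps in (("Day A", day_a), ("Day B", day_b)):
    print(
        f"{name}: Hi-Lo mean = {hi_lo_mean(temps):.1f} F, "
        f"mean of all hours = {mean(temps):.2f} F"
    )
```

Both synthetic days report a Hi-Lo mean of 40 °F, while their all-hours means differ by more than 14 °F, so a shift from one regime to the other would leave the min-max figure unchanged.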

What can we know from the use of these imprecise “daily averages” (and all the other numbers) derived from them?

There are some who question that there is an actual Global Average Surface Temperature. (see “Does a Global Temperature Exist?”)

On the other hand, Steven Mosher so aptly informed us recently:

“The global temperature exists. It has a precise physical meaning. It’s this meaning that allows us to say…The LIA [Little Ice Age] was cooler than today…it’s the meaning that allows us to say the day side of the planet is warmer than the night side…The same meaning that allows us to say Pluto is cooler than Earth and Mercury is warmer.”

What such global averages based on questionably derived “daily averages” cannot tell us is that this year or that year was warmer or cooler by some fraction of a degree. The calculation error (the measurement error) of the commonly used station Daily Average Dry Bulb Temperature is equal in magnitude (or nearly so) to the long-term global temperature change. The historic temperature record cannot be corrected for this fault. And modern digital records would require recalculation of Daily Averages from scratch. Even then, the two data sets would not be comparable quantitatively, and possibly not even qualitatively.

So, “Yes, It Matters”

It matters a lot how and what one averages. It matters all the way up and down through the magnificent mathematical wonderland that represents the computer programs that read these basic digital records from thousands of weather stations around the world and transmogrify them into a single number.

It matters especially when that single number is then subsequently used as a club to beat the general public and our political leaders into agreement with certain desired policy solutions that will have major — and many believe negative — repercussions on society.

Bottom Line:

It is not enough to correctly mathematically calculate the average of a data set.

It is not enough to be able to defend the methods your Team uses to calculate the [more-often-abused-than-not] Global Averages of data sets.

Even if these averages are of homogeneous data and objects, physically and logically correct, averages return a single number which can then incorrectly be assumed to be a summary or fair representation of the whole set.

Averages, in any and all cases, by their very nature, give only a very narrow view of the information in a data set — and if accepted as representational of the whole, the average will act as a Beam of Darkness, hiding and obscuring the bulk of the information; thus, instead of leading us to a better understanding, they can act to reduce our understanding of the subject under study.

Averaging averages is fraught with danger and must be viewed cautiously. Averaged averages should be considered suspect until proven otherwise.

In climatology, Daily Average Temperatures have been, and continue to be, calculated inaccurately and imprecisely from daily minimum and maximum temperatures, a fact which casts doubt on the whole Global Average Surface Temperature enterprise.

Averages are good tools but, like hammers or saws, must be used correctly to produce beneficial and useful results. The misuse of averages reduces rather than betters understanding, confuses rather than clarifies, and muddies scientific and policy decisions.

UPDATE:

[July 25, 2016 – 12:15 EDT]

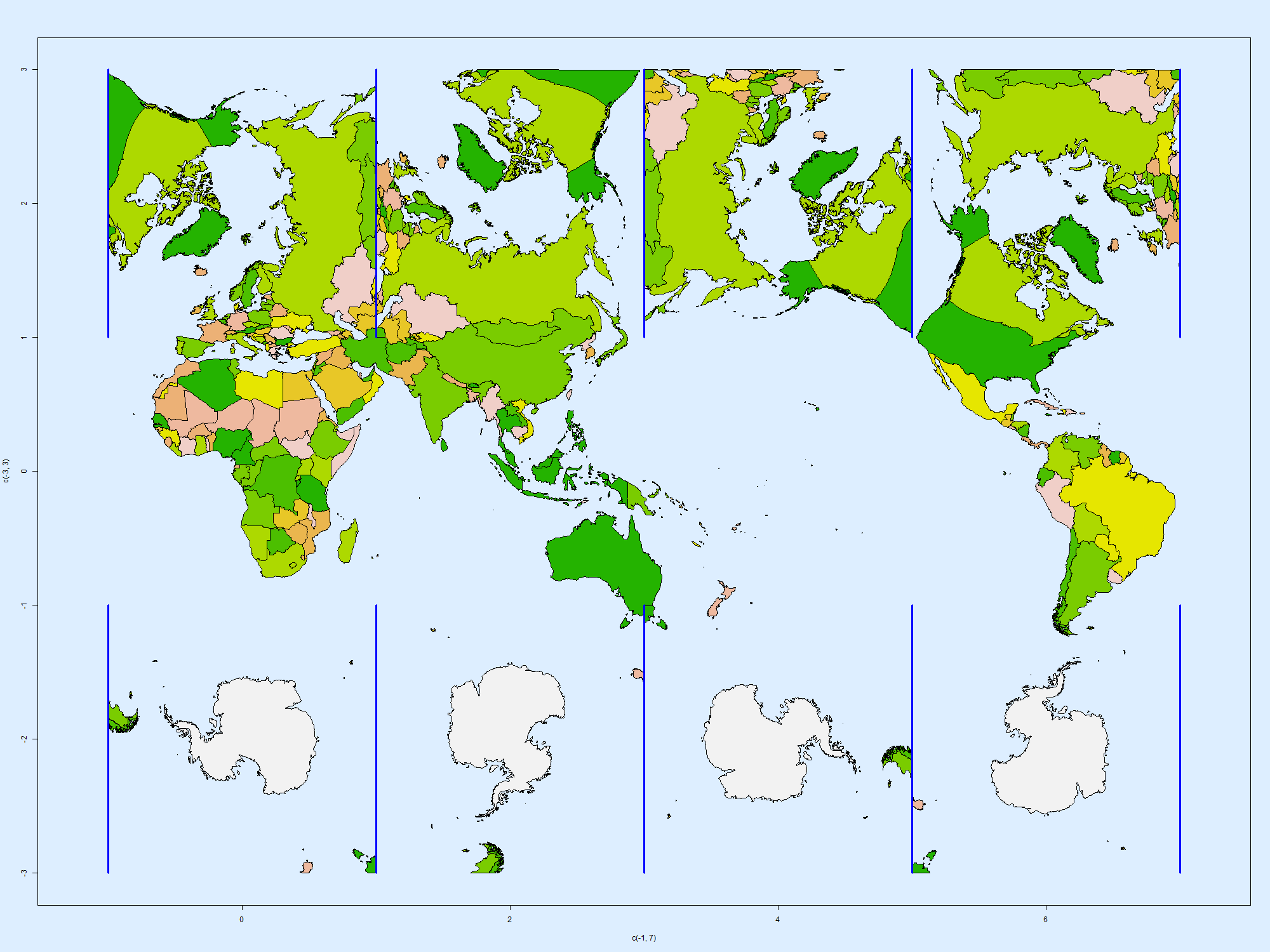

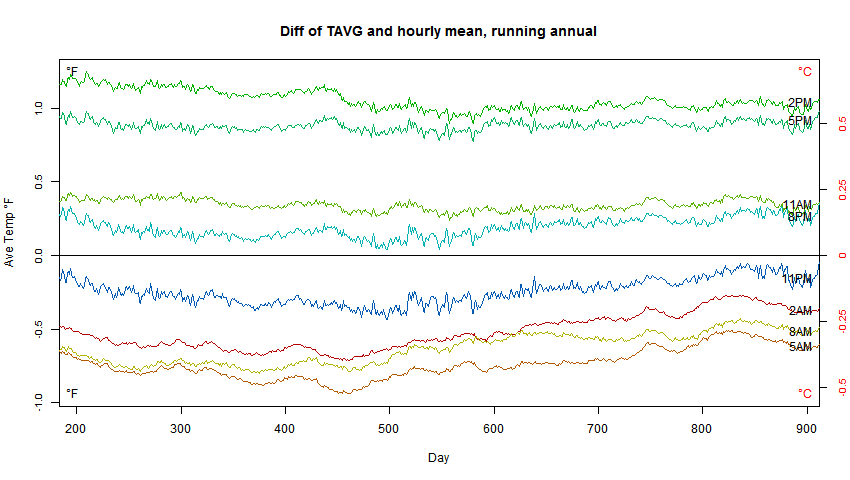

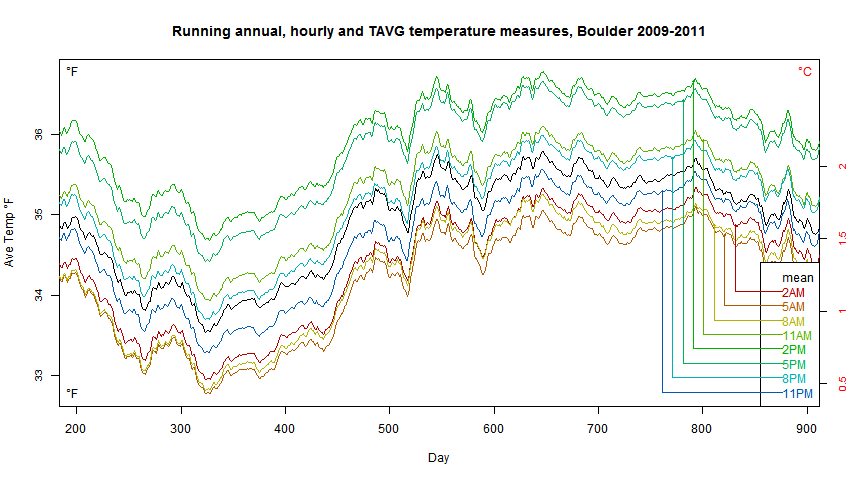

Those wanting more data about the differences between Tmean (the Mean between Daily Min and Daily Max) and Taverage (the arithmetic average of all 24 recorded hourly temps; some use T24 for this), both quantitatively and in annual trends, should refer to Spatiotemporal Divergence of the Warming Hiatus over Land Based on Different Definitions of Mean Temperature by Chunlüe Zhou & Kaicun Wang [Nature Scientific Reports | 6:31789 | DOI: 10.1038/srep31789]. Contrary to assertions in comments that trends of these differently defined “average” temperatures are the same, Zhou and Wang show this figure and caption: (h/t David Fair)

Figure 4. The (a,d) annual, (b,e) cold, and (c,f) warm seasonal temperature trends (unit: °C/decade) from the Global Historical Climatology Network-Daily version 3.2 (GHCN-D, [T2]) and the Integrated Surface Database-Hourly (ISD-H, [T24]) are shown for 1998–2013. The GHCN-D is an integrated database of daily climate summaries from land surface stations across the globe, which provides available Tmax and Tmin at approximately 10,400 stations from 1998 to 2013. The ISD-H consists of global hourly and synoptic observations available at approximately 3400 stations from over 100 original data sources. Regions A1, A2 and A3 (inside the green regions shown in the top left subfigure) are selected in this study.

# # # # #

Author’s Comment Policy:

I am always anxious to read your ideas, opinions, and to answer your questions about the subject of the essay, which in this case is Averages, their uses and misuses.

If you hope that I will respond or reply to your comment, please address your comment explicitly to me — such as “Kip: I wonder if you could explain…..”

As regular visitors know, I do not respond to Climate Warrior comments from either side of the Great Climate Divide — feel free to leave your mandatory talking points but do not expect a response from me.

The ideas presented in this essay, particularly in the Climatology section, are likely to stir controversy and raise objections. For this reason, it is especially important to remain on-point, on-topic in your comments and try to foster civil discussion.

I understand that opinions may vary.

I am interested in examples of the misuse of averages, the proper use of averages, and I expect that many of you will have lots of varying opinions regarding the use of averages in Climate Science.

# # # # #

I’ll dissent a bit. There are situations where an average of averages is not only allowed, but necessary. In our re-evaluation of the sunspot group numbers with annual time resolution we first compute the average for each month, then the average of the 12 months. This is necessary because the number of observations varies greatly from month to month, e.g. it is usually much larger during the summer months [better weather].

Yes, but the point contained in your example is that each of the dataset sizes is also nearly constant. Equal weighted, so to say.

If you gave equal weight to the sunspot average of say a 2 week period, and another one that’s 4 months wide, then whatever the average-of-averages is, it is nearly meaningless. If instead you use

$A = \dfrac{N\,a_n + M\,a_m + \dots}{N + M + \dots}$

or the WIDTH of the dataset, times the average of that dataset, for each dataset, then divided by the sum of the widths of the datasets …

What you get is exactly what you would get had all the individual data points of all the datasets (each with ‘width = 1’) been added, then divided by their count.
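A minimal sketch of that equivalence, using small hypothetical datasets of unequal size (the numbers are invented for illustration):

```python
from math import isclose
from statistics import mean

# Hypothetical datasets of unequal "width" (number of points).
datasets = [[1, 2, 3], [10, 20], [4, 4, 4, 4, 4]]

widths = [len(d) for d in datasets]
averages = [mean(d) for d in datasets]

# Width-weighted average of the per-dataset averages.
weighted_average = sum(n * a for n, a in zip(widths, averages)) / sum(widths)

# Average of every individual data point, pooled together.
pooled_average = mean(x for d in datasets for x in d)

# Plain (equal-weight) average of the averages, for comparison.
unweighted_average = mean(averages)

print(f"weighted:   {weighted_average}")
print(f"pooled:     {pooled_average}")
print(f"unweighted: {unweighted_average}")
print("weighted matches pooled:", isclose(weighted_average, pooled_average))
```

The weighted figure matches the pooled figure, while the equal-weight average of averages does not.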

I think that’s what the OP was getting at. In some circumstances (as per your example), averaging averages is perfectly OK in practice. But it is only OK because the weights of each average are nearly the same.

GoatGuy

What you get is exactly what you would get had all the individual data points of all the datasets (each with ‘width = 1’) been added, then divided by their count.

No, that is exactly not what to do. In each month the number of data points [their width or weight?] varies very much. Take the year 1713 where M.M. Kirch observing from Berlin found the following for each month: 1 (0,-), 2 (0,-), 3 (0,-1), 4 (0,-), 5 (10, 1,1,1,1,1,1,1,1,1,0), 6 (0,-), 7 (1, 0), 8 (1, 0), 9 (1, 0), 10 (2, 0,0), 11 (3, 0,0,0), 12 (1, 0), where m(n, s,s,s,s,…) is month m, number of observations n, and s,s,s,s,… the count of spots for each of the observations. When no observations were made, s was ‘-‘. The 12 monthly averages are now – – – – 0.9 – 0 0 0 0 0 0 and the annual mean is 0.9/12 = 0.075. The average of all observations would be 9/16 = 0.5625, which is not representative for the whole year. In all of this, the underlying basis is that sunspot numbers have very large ‘positive conservation’, or to use a more modern word: high autocorrelation.

lsvalgaard and GoatGuy ==> I’ll re-visit this later in the day…interesting application.

GoatGuy

“What you get is exactly what you would get had all…”

Indeed so. As you say, the answer is weighting, and people know how to do this. Kip doesn’t. He should learn.

The answer to Leif’s problem is proper infilling. I discuss that in some detail here and here.

Leif … we’re STILL arguing essentially the same point:

• when one has a regular, well-spaced (in time) sampling, then the bin-size of smaller averages is that bin’s average weight. Per my comment.

• when one has irregular (in time) sampling, then the small-bin average is itself subject to weighting each sample’s “duration” according to its span.

I’m pretty sure that you and I both actually agree on this, being scientists and respecting statistics. Indeed: I wasn’t really arguing with you, but rather pointing out the underlying weighting assumptions that you didn’t state, that made your premise work.

That’s all.

Weighting. Really important to embrace.

My only significant addition to your comment.

GoatGuy

“The answer to Leif’s problem is proper infilling.”

If, by infilling, you mean making up data, well, that’s been a standard practice in the global warming industry for a long time. How else do you come up with “record hottest year” for so many years in a row?

“The answer to Leif’s problem is proper infilling.”

I shouldn’t have been so nasty. I will say it a different way. I am only aware of two possible types of infilling: interpolation and transposition (my word for it).

Interpolation involves a mathematical curve fitting (usually simple averaging) of data points before and after the missing ones. I don’t believe that this method is used in climate applications. In any case, it is equivalent to averaging and therefore it is not valid to use such data points in an average, because that creates an average of averages.

Transposition involves taking data points from another (but assumed equivalent) series and inserting them into the missing positions. From recollection, the BOM takes data from up to 600 km away and uses it to calculate a substitute value when it doesn’t like the real data. It calls it “homogenisation” and is obviously an invalid thing to do.

This doesn’t seem right. What’s been done is a calculation of the average sunspots per observation per month. Then it’s stated that this “monthly” mean divided by 12 months is an annual mean. I’m hoping that either (1) you explained yourself poorly, or (2) I’ve misread you, rather than the calculations were actually done in that manner.

If one is looking at one year’s worth of sunspot observations, and one has monthly numbers of 0, 0, 0, 0, 9, 0, 0, 0, 0, 0, 0, and 0, then those are your monthly averages. They’re kind of useless since you’ve only one year’s worth of data, but 9 sunspots/June equals a 9 sunspots per June average.

Then, it seems, the error gets compounded by dividing the “monthly” average by 12 months and claiming that to be an annual average. This doesn’t even pass a basic sanity test: how can 9 sunspots be observed in one month, but claim that the annual mean was only 0.075 sunspots that year? What’s actually been calculated here is the average number of sunspots seen per observation for the year — not the annual mean of sunspots.

I did not explain myself clearly enough. The metric we are suing is the number of spots per day. If you observe every day and every day see one spot, the number of spots seen in e.g. January is 31, which when divided by the number of days, 31, gives 1, which is the average number of spots per day for that month. If you observe every day of June and see one spot every day, then the average number of spots per day for June is also 1, and so on for all the other months. The average of the twelve monthly ones is 1, which is the average number of spots per year for the year.. If you do not observe every day, but only, say, every other day, the monthly averages will still be 1, and so will the yearly average. This holds for any number of observations, down to the extreme case where you only observe the one spot on ONE day in the whole year: the yearly average is still 1 spot.

What’s actually been calculated here is the average number of sunspots seen per observation for the year — not the annual mean of sunspots.

The metric we are after is the average number of sunspots per observation. That is: if you take a random day of the year, how many spots would you see on average on the sun for that day. Just like with temperature: if you measure every day and the value is always 30 degrees, then the yearly average is 30 degrees, not 10950 degrees [=30*365]

“The metric we are suing ”

Another infestation of lawyers.

using…

He didn’t say it was NEVER valid:

“Averaging averages is only valid when the sets of data — groups, cohorts, number of measurements — are all exactly equal in size (or very nearly so), contain the same number of elements, represent that same area, same volume, same number of patients, same number of opinions and, as with all averages, the data itself is physically and logically homogenous (not heterogeneous) and physically and logically commensurable (not incommensurable). [if this is unclear, please see Part 1 of this series.]”

Being the Sun-the measurements represent the same area, same volume, same number of patients (1), and the data sets are equal (or very nearly equal) 30/31 days per month except Feb. Right?

Leif,

I think that an important point to be made is that procedures and caveats should be stated clearly. It seems to me that, basically, you are saying that there are practical considerations that make it impossible to state definitively what the actual number of sunspots is and you have to use a ‘best practices’ approach that is really an index that you believe has a high correlation with the actual number of sunspots. As long as you don’t try to claim that you are reporting the number of actual sunspots, which are ambiguous because of shape and resolution limits, and claim a high degree of precision in the average of the count, then no one is going to argue issues of precision. However, your problem of how to count coalescing features, or features that subsequently break apart, is not analogous to reading a temperature.

However, your problem of how to count coalescing features, or features that subsequently break apart, is not analogous to reading a temperature.

Since the result is a simple number for each observation, counting features is exactly analogous to reading a temperature: the result is just a number.

lsvalgaard ,

I respectfully disagree. While reading a temperature with a conventional mercury thermometer may require some subjectivity in assigning precision to a continuous scale, it is nothing like making the subjective decision that one is looking at one or two spots and assigning a discrete count to the decision. One is comparing irrational numbers with discrete integers.

Another reason why error bounds should always be calculated and stated accurately. Geoff

lsvalgaard ==> Now, I’m surprised to read you write that “The metric we are after is the average number of sunspots per observation. ”

That calculation is trivial — sum of all known observations/number of observations. Chop the data set into time intervals desired, same calculation.

So what is all the discussion about?

Personally, I don’t think that gives us much information about the Sun itself or Sunspots — but at least it avoids the perils of infilling unknown data with imagination.

“That calculation is trivial — sum of all known observations/number of observations.”

I went to some lengths to show that it is not trivial. Let me make an even simpler situation: In one month there are ten observations all of one spot. In the rest of the year there is only one observation per month, all of zero spots. The number we are after is then (1+0+0+0+0+0+0+0+0+0+0+0)/12 = 0.083, not 10/21.

Why is that? Because the 10 observations of the one spot are most likely all of the same spot which may have been the only one during that year. Same thing with temperature: imagine we only measure once a month except in one month (e.g. July) we measure every day and think about what the most representative value would be for the year.
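The arithmetic in this example can be checked with a short Python sketch. The observation lists are simply the hypothetical numbers from the comment (ten observations of one spot in a single month, one observation of zero spots in each of the other eleven months), and the function names are illustrative only.

```python
from statistics import mean

# Hypothetical year from the comment: one month with ten observations of a
# single spot, eleven months with one observation of zero spots each.
observations_by_month = [[1] * 10] + [[0] for _ in range(11)]

def pooled_mean(months):
    """Sum of all observations divided by the number of observations."""
    all_obs = [count for month in months for count in month]
    return sum(all_obs) / len(all_obs)

def mean_of_monthly_means(months):
    """Average each month first, then average the twelve monthly values."""
    return mean(mean(month) for month in months)

print(round(pooled_mean(observations_by_month), 3))            # 0.476 (10/21)
print(round(mean_of_monthly_means(observations_by_month), 3))  # 0.083 (1/12)
```

The two numbers differ because the pooled calculation lets the heavily observed month dominate, while the month-by-month calculation gives each month the same weight regardless of how many observations it holds.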

“Personally, I don’t think that gives us much information about the Sun itself or Sunspots”

It gives us very much information about the sun because of the very high auto-correlation of the sunspot number. Even a single observation for a year is enough to tell us if solar activity is high or low for that year. And in some years that is about all we have.

lsvalgaard ==> I think the problem here is one of language. Your expanded description is something more along the line of:

Number of UNIQUE sunspots, per observation, per time period….

with UNIQUE being carefully defined as New, Never Counted Before, Sunspots.

So you do not really mean “The metric we are after is the average number of sunspots per observation.”

I knew there had to be something else in there, because that calculation is trivial. But that is not the metric you are after at all.

Can the others in this Sunspot thread help lsvalgaard write out a definition of the metric he is after?

It is not a question about language. It is a question about physics and the Sun. Imagine that the 10 observations of one spot in the month were made by 10 different observers, then for every observer the observation was of a UNIQUE sunspot. Many spots only live for a day or two, so we in general don’t know if a spot is new or an old one just living yet another day.

So you do not really mean “The metric we are after is the average number of sunspots per observation.”

No, that is not what we want. What we want is the average number of spots on the sun for a random day in a given year, even if on that day there are 100 observers looking at that same spot.

Well, two comments:

1. Sadly disappointed when the Simpson Paradox wasn’t related to Homer or Bart Simpson.

2. It’s all moot when it comes to climate numbers because it’s all modeled/adjusted anyway, complete with experts explaining why this is superior to actual data. You can take data every five minutes all you want but after the algorithms get finished with it, it becomes magic numbers not related to averages, means, averages of averages or anything like it.

It should be intuitively understood that two temperature data points cannot possibly contain the data represented by even three daily data points, much less a hundred or a thousand. If they could, then one should be able to recreate all those missing hourly (or by-minute) temp data points by using the average based on two points, a ridiculous notion.

I like electrical analogies for climate. Looks like the old temperature data is like me calculating the kWh consumption of my washing machine by measuring the highest current and the lowest current taken during the wash cycle and dividing by 2. Clearly stupid but perhaps more relevant to Global Warming than might first appear. If one is interested in the heat balance in the earth and atmosphere then the quantity of interest is the energy itself, i.e. that in the earth, that in the atmosphere, the energy input from the sun, etc. It should be energy we want to measure, not just temperature. Furthermore, like my washing machine, it has an alternating input, though at a somewhat lower frequency, about 0.0000116 Hz (one cycle per day), and with a square wave component too.

The flow of energy in the various components of the earth-atmosphere system takes place on a second by second basis (or is it picoseconds for CO2 absorption / re-radiation) so a simple measurement of any temperatures taken once a day is not going to get you anywhere near the right answers.

The Reverend Badg.er ==> One point not similar in your analogy is that Temperature does not follow or depend on Energy In — except on very very long time scales — as far as we know to date, anyway. It is simply assumed to be true, but not shown by empirical data.

No, it is not a ridiculous notion. The max-min temperature practice assumes a model. The model is that the daily temperature curve approximates a sine curve, with different beginning and ending points, perhaps, but still roughly a sine curve. If the actual daily temperature curve is close to a sine wave, then the max-min temperature practice will provide a rather good estimate of the average temperature. The problem is that a sine wave is NOT a good model for daily temperature curves, so some information is lost. However, a sine wave is an OK model. It just isn’t good enough, IMHO, to capture the very tiny global warming signal.
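A rough way to see the point about the sine-curve model is to compare (max + min)/2 against the plain average of hourly readings for two made-up days. Both temperature curves below are assumptions chosen for illustration, not real station data.

```python
import math

def midrange(temps):
    """The max-min practice: halfway between the daily high and low."""
    return (max(temps) + min(temps)) / 2

def true_mean(temps):
    """Plain average of evenly spaced readings."""
    return sum(temps) / len(temps)

hours = range(24)

# Assumed idealised day: a pure sine wave around 15 degrees, amplitude 10.
sine_day = [15 + 10 * math.sin(2 * math.pi * h / 24) for h in hours]

# Assumed asymmetric day: 20 hours near 10 degrees, a 4-hour spike to 30.
skewed_day = [30 if 12 <= h < 16 else 10 for h in hours]

for name, day in (("sine", sine_day), ("skewed", skewed_day)):
    print(name, round(midrange(day), 2), round(true_mean(day), 2))
# sine   -> 15.0 and 15.0  (the two methods agree)
# skewed -> 20.0 and 13.33 (the max-min estimate is far too warm)
```

On the symmetric curve the two-point estimate loses nothing; on the asymmetric one it is off by several degrees, which is the sense in which the model can be acceptable and still not good enough for a small signal.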

Kip,

Thank you for an illuminating and useful post.

I once picked a random temperature chart for Denver to bolster an argument. The chart I chose had a 30 F degree drop in a single hour. Is that the plus/minus error range we should apply to all temperature readings? +/- 30 F

Amusing example, but no. Got me to laugh tho! Thx.

I know it’s anecdotal, but which temperature reading was more representative of Denver on that day?

And, how did that heat escape the ‘trap’ so quickly?

how did heat escape the trap that day? … by being “pushed away” by a passing cold front of substantially colder air. How do you get wet when standing on the beach? When a WAVE gets ya. Water displacing air. Same for cold / warm fronts. Big temperature changes in a matter of minutes are relatively rare, but definitely more prevalent in certain special locations. Denver is one of them. A huge wall of mountains on one side, and an even larger expanse of “the plains” on the other. Even “still air” does weird things near that juncture. Not so much so in Kansas City (short of the tornadoes).

GoatGuy

Actually violent temperature changes are probably more common in the Midwest than anywhere else in the World. Reason: it is the only place in the World where there is a continuous lowland with no physical obstacles stretching from the Arctic ocean to the Tropics, so very warm and very cold air can come into direct contact. Tornadoes are also extremely rare everywhere else, for the same reason.

Memory time: One November day in 1961, I, a freshman at Indiana U in Bloomington, was having an outdoor day in ROTC, with summer uniform on because the temperature at class time was 72 degrees F. Soon after class began, while we were marching down the street near old Memorial Stadium, clouds came streaming across the sky, and the wind arose from the northwest. The new breeze was chilly, and got chillier, and by the end of class we were all shivering; it was snowing briskly, blowing straight across our sight. I found out later that the temperature dropped 45 degrees in less than 30 minutes, and we escaped the rain that fell to our south, gaining 2″ of quick snow instead. That was a morning class, so for the first nine hours of the day the temp was between 60 and 72, and the last 14.5 hours of the day it was between 27 and 17, with the remaining half hour being the transition between 72 and 27. What was the average temperature of that day, and what real meaning would that figure have? My main impression is that that was a nasty cold day with a biting wind; I totally forgot about the warm beginning, except I do remember thinking what a waste of cloth that summer uniform was on a day like that (with no time to get to my room until after 4 p.m., I had to walk across campus for several more hours in freezing cold wind).

Actually, that was my sophomore year; I didn’t have both summer and winter uniforms in my freshman year.

Well John, I was 10 years old that month and about 10 miles west in a school building in Ellettsville. My memory of that event is not with me now. Maybe if I was out in it I would remember it too.

Not unlike my experience of unexpectedly finding myself on an airplane headed for Greenland, wearing my Summer khaki, short-sleeve uniform in 1966. When I arrived at Thule Airbase, it was 32 F and windy.

Not that unusual, no matter where you are. Extremely dry deserts – soon as that Sun goes down (or comes up).

Right now, I am not in an extremely dry desert – monsoon season, you know. As in every year, I have watched my outside thermometer go from close to 105 (F) to upper 70s in less than fifteen minutes. Several times.

In South-East Australia we have what’s known as the Southerly Buster as a cold front sweeps through from the Antarctic after a few days of very hot weather. It almost invariably happens and is a blessed relief. You can see the front coming in the clouds as the prevailing westerly winds die down and drop to nothing and then, literally, BANG, the Buster hits and the temperature plummets tens of degrees in minutes. It’s a wonderful moment after days of suffering!

Not an hour, but I live in SE Virginia, and a few years ago when we had one of those polar vortexes come through in early January the temperature dropped about 52 degrees in less than 24 hours, from a spring-like mid-60s one afternoon to the low teens by the next morning. The average temperature over the two days was probably about…average… for that time of the year. Go figure.

I think it is a good thing to use, and record, as much data as possible. There is a possibility that whatever filtering method one is using could hide the signal one is looking for.

Following the Original Poster’s point tho, while you pine for more data, I must insist that we also never forget sample WEIGHTING.

If “this” temperature represents 150 km² and “that” temperature reading is for 5 km² (because of closer sensor spacing), then it is a poor idea to average them as ½(A + B). Better is ¹/₅₅₀(150 A + 5 B). Much better.

Just saying.

WEIGHTING.

GoatGuy

That should have been ¹/₁₅₅( 150 A + 5 B ). Typed in wrong fraction. Duh.
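The corrected formula, (150 A + 5 B)/155, is an ordinary area-weighted mean. A minimal Python sketch follows, with made-up readings for A and B (the 10 and 20 degree values are assumptions for illustration only).

```python
def area_weighted_mean(readings):
    """readings: list of (temperature, area_km2) pairs."""
    total_area = sum(area for _, area in readings)
    return sum(temp * area for temp, area in readings) / total_area

# Hypothetical readings: A represents 150 km^2, B represents 5 km^2.
A, B = 10.0, 20.0
simple = (A + B) / 2
weighted = area_weighted_mean([(A, 150), (B, 5)])

print(simple)              # 15.0
print(round(weighted, 2))  # 10.32
```

The weighted value sits close to A because A stands for thirty times the area, whereas the simple average treats the two sensors as if they covered equal ground.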

Well, that SEEMS better, but actually it depends on the reality of the area. If the measurement used for 150km^2 is a poor sample, then its error is propagated through a higher weight. My real world example is using a temperature station near a city/airport in Alaska being used to fill in a vast unmeasured arctic area.

So while weighting is the right approach, one must be aware of the consequences of using just any data. The more weight the value has, the more important it is that it be accurate.

“Just saying.

WEIGHTING.”

Exactly. And that is what they do.

So very true, Robert.

One could make the argument that each measurement should be weighted with the inverse of the uncertainty it introduces to the global value. This would mean that the more area it represents the less weight it would get.

Which actually makes a lot of sense, take the average where you have the data, don’t make up data where you don’t have it.

Weighting only seems applicable if we were trying to determine the average temperature of the Earth per square kilometer. Using a 5 deg X 5 deg cell is doing the same thing, actually, only the number of square kilometers in a cell changes with latitude. What ends up happening is that the weighting factor accounts for that decreasing number of square kilometers.

Consider: a 5X5 deg. cell at the North Pole (from 85N to 90N) represents about 3915 square NM. A 5×5 cell at the Equator is 90,000 square NM. Does it really figure that the North Pole temperature is less or more representative of the nearest 90,000 square NM than the Sahara Desert temperature is of its cell?
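The cell areas quoted here can be approximated with the standard formula for a latitude-longitude cell on a sphere, R² · Δλ · (sin φ₂ − sin φ₁). The sketch below assumes a spherical Earth with a mean radius of about 3440 nautical miles, so the results are approximate.

```python
import math

EARTH_RADIUS_NM = 3440.0  # assumed mean radius in nautical miles

def cell_area_sq_nm(lat_south, lat_north, width_deg=5.0):
    """Area of a latitude-longitude cell on a sphere, in square nautical miles."""
    band = math.sin(math.radians(lat_north)) - math.sin(math.radians(lat_south))
    return EARTH_RADIUS_NM ** 2 * math.radians(width_deg) * band

equator = cell_area_sq_nm(0, 5)   # roughly 90,000 square NM
polar = cell_area_sq_nm(85, 90)   # roughly 3,900 square NM
print(round(equator), round(polar), round(equator / polar, 1))
```

Under these assumptions the equatorial cell comes out more than twenty times larger than the polar one, which is the size difference the weighting factor is meant to account for.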

Obviously, by careful cherry-picking of locations, one could make the Earth’s “average” temperature anywhere from -40C to 45C. Trying to pick locations that give us a Normal distribution of temperatures around some value is impossible, because we don’t really know the true distribution of temperatures on Earth. All we can do is try to pick locations geographically well-distributed across the planet, and run with those.

No interpolation, no infilling, no homogenization, no weighting — just take the raw data as it is, check it by its quality flags, and run the numbers. I don’t think it can be proven that all the adjustments actually give a “better” number.

James Schrumpf July 25, 2017 at 6:30 am

“All we can do is try to pick locations geographically well-distributed across the planet, and run with those.

No interpolation, no infilling, no homogenization, no weighting — just take the raw data as it is, check it by its quality flags, and run the numbers. I don’t think it can be proven that all the adjustments actually give a “better” number.”

Right on point. What some are trying to find as a ‘Global Temperature’ is really a baseline so they can take the output numbers from a model and say, “see, lookee at what our computer says is going to happen.” The numbers mean nothing. They are not the actual ‘temperature’ of the earth, they are a made up farce. If they were real, you could take the output of a GCM and say here, Kansas City will be this temperature, Seoul will be this temperature, and Moscow will be this temperature. As you say, we don’t even know the actual temperature distribution at points on the planet at any given time.

I have said the same thing as you in the past. Pick some well-distributed points on the planet and track them closely. If the ‘earth’ is warming it should become obvious pretty quickly using this method since most sites would show the higher temperatures. No more super-computers and millions of data points needed for tracking global temperatures. Also, a lot less money to the government for financing all this.

If NOAA or another agency wants to use the current method for forecasting, go for it. They won’t because they haven’t done the legwork to calculate actual temperatures.

Are there temperature measurements that use a large thermal mass so that there is an integration of temperature over long periods of time without the need of max/min thermometers?

The greater part of GMST is sea surface, which has that property.

Do the adjustments for sea surface temperature have the same property? 😉

Nick and Phil ==> Satellite sea temperatures are not the actual temperatures of the bulk water — thus do NOT have that property. Satellite Sea Surface Temps are skin surface temperatures. They are not the same as, not identical to, bulk sea surface temperature — the temperature of the water 0.5 to 6 or 7 meters below the surface.

The sea itself does have a huge thermal mass — but that thermal mass is not necessarily well represented by skin sea surface temperature — the bulk thermal mass has more to do with El Nino/La Nina, overturning, layer mixing, and other short and long-term ocean movements.

i am coming to the conclusion that satellite sea surface temps are a good indicator of cloudiness and possibly type of cloud and not a lot else. they certainly bear no relation to actual measured temperatures as current north sea temperatures off the east coast of scotland and north east england show.

currently noaa showing around 1 c positive anomaly , actual temp 13.5 c . 13.5 c for this time of year is around 1.5 c below average.

NS – “The greater part of GMST is sea surface, which has that property.”

Nick – regrettably water — being a liquid — has the unfortunate property of moving around and taking its heat content with it. Examples, the Gulf Stream or ENSO. Don’t get me wrong. Including SST in “Global Temperature” is quite likely better than not doing so. But inclusion does have the unhappy result that “global temperatures” rise in El Nino years and (often) fall back when the warm water in the Eastern Pacific moves back to the West. A lot of folks seem to have an inordinate amount of difficulty dealing with both the rise (OMG – warmest temps ever. we’re all gonna die) and the fall (Ulp — We’ve already proved the Earth is burning up — Let’s talk about Polar Bears)..

Malcolm, yes. They’re called large cave systems.

Thank you for the post! A great example of this is the oft-repeated claim that a woman makes 70 cents for every dollar a man makes at the exact same job. First, the original data is for the same job *industry category*, not the same job (a bank president and a bank teller would fall into the same category). Second, the “70 cents” is an average of all categories, exactly the paradox you illustrate. The end result is that, in a gross sense, the “70 cents” figure is close but not exact, and represents an average for the entire group (men vs. women), not men vs. women in the same job or same industry category.

Yep. Especially since the sampling doesn’t weight the “career path point”. A 50 year old male might be 25 years into his banking career. A 50 year old female on the average might have spent only the last 10 to 15 years in her banking career. She, however, became an expert at juggling home budgets, nurturing kids and their friends, buzzing around town delivering and picking up soccer team players, and interpreting what the pediatrician was saying, endlessly. Should both 50 year olds be branch vice presidents? Maybe so! … but then again, maybe not.

GoatGuy

OK, getting off the main topic, but I just need to add: According to the very same data set that the “70 cents” figure comes from, men also work 4 more hours per week to get that extra 30 cents. That alone explains 1/3 of the difference.

Steve and GoatGuy ==> The Gender Wage Gap is an example of improper uses of averaging — in several different ways.

Actually it’s also just a gosh awful abuse of statistics.

If we take into consideration Bill Nye’s view that gender is a spectrum and not merely a matter of being male or female, then all discussion of the gender wage gap issue starts to look like a macro-aggression against all non-cis-gendered and non-binary members of H.sapiens who live outside of gender binary and cisnormativity.

Of course, I don’t suggest that we actually should take into consideration anything Bill says.

http://www.wnd.com/2017/05/bill-nye-now-the-gender-spectrum-guy/#WTHxHQPDi3dCOtfd.99

I remember doing some stats class work (6 sigma quality training bs) and it bored me to tears, that was an interesting read, thanks a bunch Kip

Michael,

Thanks!

At last, someone else who thinks 6-Sigma is pure south-excreted output from a north-facing male bovid.

Quality, of itself, is good.

Much of the (current) ISO 9001 certification is a lark [or a con-job].

My gut feeling is that is also true of many other standards – 14000; OHSAS 18000; 22000; 23000; 27000; etc. etc.

For a decent guide to introducing quality, look at the old BS 5750 of 1987, or, at a pinch, BS EN ISO 9001/9002 from 1994.

For a laugh look at the intangibles in ISO 9001 of 2015.

Possibly good things to bear in mind – but as necessities for certification – I think it has been pushed too far.

Auto

Career in certification. Careful colleagues! Creative certification can cause cashflow crises.

Re your last sentence: indeed, just ask British Nuclear Fuels.

Typo—change “weight” to “weigh” in:

“various representatives of the various methodologies will weight in and defend their methods.”

Roger ==> Thanks, as always, for paying close attention to the words used….you are right, of course — they will weigh in. The horrors of auto-spelling correction (and poor editing skills).

Well my comment relates to a more fundamental issue.

“Statistics” is a branch of mathematics; and like ALL mathematics it is pure fiction. We made ALL of it up in our heads; every bit of it.

There is not one element of any branch of mathematics that exists in the real physical universe. Mathematics is an Art form, and a very useful one; but it is NOT science. It is a tool of science, and exceedingly powerful as a tool.

When it comes to statistics, there are books and books on statistical mathematics that cover ever more complicated algorithms; all of which can only be applied to sets of already exactly known real numbers.

There are no statistics of variables.

So statistics depends on the algorithms, and if you don’t like the algorithms that are already in the books, you are quite free to make up your own algorithms, to define new combinations of data sets of real known numbers.

Nothing in the physical universe is even aware of statistics or can respond to any of it.

The universe responds immediately to the real state of the universe, and doesn’t wait for anything average to come along before acting. If something can happen it will happen and the instant that it can happen it will happen. Nothing will happen before it can happen.

So the usefulness of statistics is entirely dependent on the “meaning” that users assign to whatever algorithm they are using to operate on their data set.

If I want to define the “average” of a data set of “complex numbers” : Ai + jBi I can do that; perhaps as simple as Av(Ai) +j.Av(Bi).

So far as I know, nobody has ever ascribed ANY physical meaning to the “average” of a data set of complex numbers.

There is no intrinsic meaning to any statistical computation: only what meaning that users have ascribed to such results.

So I don’t dispute Dr. S when he says he has a use for the average of averages.

If he says it has useful meaning to him for some circumstance; that is ALL that is needed to justify it.

Other than that, Statistics is numerical Origami; just fold the paper where centuries of tradition say to fold it, and in the correct order, to get a frog that can jump. But it still is just a 100 mm square of paper, which can be recovered by reversing the folding sequence.

Just try if you wish, to recover the raw data of any data set, from the statistical algorithm that somebody applied to it.

G

Another way to state the above: Statistics is (are?) an attempt to ascribe meaning when there is none.

Hey Sage ! ….. I think you done just put my post into a legal Tweet …..

Outstanding ! President Trump may have started a new trend.

G

Statistical manipulations are methods of data compression, for distilling large volumes of data into a few numbers that can be readily grasped based on commonly occurring distributions.

They are not methods for divination. They are not magic. They do not provide comprehensive understanding of the processes at hand, nor do they reveal “truth” that could not otherwise be apprehended by visual inspection.

Great post, beautiful explanation. And it’s just the basement level math of the tower of fallacies used to justify AGW.

Does this apply to the fact that each of the IPCC climate models in CMIP5

http://cmip-pcmdi.llnl.gov/cmip5/availability.html

produces an average of several average runs? This average result is combined with the output of the other computer models to produce an average result.

Dr. Ball ==> The idea that averaging the results of chaotic processes — the chaotic output of climate models — somehow creates a valid picture of the underlying system is so wrong that words often fail me.

Averaging the output of a thousand runs of a non-linear system will only inform us of the Mean of the BOUNDARIES of those thousand runs. Another thousand runs might add to the boundaries (lie outside of those of the first thousand runs). It tells us nothing about the underlying system’s future.

So true. Given the nature of chaos, all we can really do is draw boundaries and assign probabilities.

Especially when modelers change the published output to overcome model drift, Kip. Even IPCC AR5 had to “cool off” mid-term “average” projections. Let’s throw another Trillion on the CAGW modelturbation bonfire.

To paraphrase Dr. Curry, IPCC climate models are not fit for the purpose of fundamentally altering our society, economy nor energy systems. IPCC climate models are bunk. Going off to Wander in the Weeds with Mr. Mosher leads one to ignore that fact.

Leo ==> Associated in this problem is that “probabilities are assigned”…..and then taken to be valid true versions of the future. When a chaotic process “predicts” everything from Ice Ball Earth to Fire Ball Earth, one can’t assume the average (exactly in the middle) is the “most likely outcome” — that is not how chaotic processes work.

Well the result of applying any statistics algorithm to any finite data set of finite exactly known numbers, is always valid, and always gives an exact result. It is after all little more than 4-H club arithmetic.

So there is no uncertainty whatsoever about what you get by doing statistics on some data set.

The problems arise when you try to assert that the result means something.

The result has no intrinsic meaning at all. You are just playing around with numbers: ANY numbers (finite real).

But you can assign any meaning or importance you want, to that exact result.

It might even catch on.

G

What the average of a nonlinear system tells you depends on the nonlinear system itself and its stability. If the system has a single stable fixed point then all trajectories will converge to it and the average will give you the position of the fixed point. If there is a stable limit cycle then an average will give you the average position of the limit cycle etc. Even a chaotic attractor has a fixed boundary and so taking the average of a trajectory tells you where in phase space the attractor is located. As with any dynamical system what you learn depends on how you choose to study it.
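A toy illustration of the fixed-point and chaotic cases, using the logistic map x → r·x·(1 − x). This is a swapped-in textbook system chosen for brevity, not a climate model, and the parameter values (r = 2.8 for a stable fixed point at 1 − 1/r, r = 3.9 for a chaotic regime) are assumptions picked for the sketch.

```python
def logistic_trajectory(r, x0=0.2, steps=5000, discard=1000):
    """Iterate x -> r*x*(1-x) and return the values after a warm-up period."""
    x, values = x0, []
    for step in range(steps):
        x = r * x * (1 - x)
        if step >= discard:
            values.append(x)
    return values

# r = 2.8: single stable fixed point at 1 - 1/r; r = 3.9: chaotic regime.
for r in (2.8, 3.9):
    traj = logistic_trajectory(r)
    avg = sum(traj) / len(traj)
    print(r, round(avg, 4), round(min(traj), 4), round(max(traj), 4))
```

For r = 2.8 the average, minimum and maximum all coincide at the fixed point. For r = 3.9 the trajectory wanders over a wide but bounded interval, so the average locates the attractor without saying where the system will be at any given step.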

Germinio ==> To do any of those things, one must understand the non-linear system to a very deep degree. We currently have almost no understanding of the climate system in that regard, other than that climate models, despite their tuning and built-in constraints, demonstrate unequivocally that the climate system is in fact a complex, complicated chaotic non-linear system.

Kip –> Is there any evidence you can provide that shows that the climate is chaotic? There is none that I am aware of. The weather certainly is but that is not the climate. And more importantly over what time-scale is the climate chaotic? Historically the climate is roughly constant over centuries and very rarely has abrupt shifts. For example I would predict that in 1000 years that the average temperature in July in the USA will be higher than the average temperature in January. And so would almost anyone else. Thus we can probably agree that there are many aspects of the climate that are stable and highly predictable.

Going back over 100’s of thousands of years the evidence seems to suggest that the climate is bi-stable, either there is an ice-age or not. And these occur roughly periodically due to solar forcing. Again there is nothing that looks chaotic about that. Certainly it is nonlinear with 2 fixed points but the switching between the two is roughly regular.

Germinio ==> Regarding climate and chaos, please read my four part essay that ends with “Chaos & Climate – Part 4: An Attractive Idea”. Links to the first three essays are in the first paragraph. After reading those four essays, if you still are uncertain, check back with me.

Kip ==> Your essays do not answer the question. Have you calculated the Lyapunov exponents for any climate variable and shown that the largest one is positive and hence that the system is chaotic? Unless you have done so or can point to a published study that does so the claim that the climate is chaotic is unproven.
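For readers unfamiliar with the test being asked for: on a simple one-dimensional map, the largest Lyapunov exponent can be estimated as the trajectory average of log|f′(x)|, and a positive value indicates sensitive dependence on initial conditions. The sketch below applies this to the logistic map as a stand-in example. It is an assumption-laden toy and says nothing about how such an estimate would be made from real climate time series.

```python
import math

def lyapunov_logistic(r, x0=0.2, steps=20000, discard=1000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) as the trajectory
    average of log|f'(x)|, where f'(x) = r*(1 - 2x)."""
    x, total, count = x0, 0.0, 0
    for step in range(steps):
        x = r * x * (1 - x)
        if step >= discard:
            # Guard against log(0) if the orbit lands exactly on x = 0.5.
            total += math.log(max(abs(r * (1 - 2 * x)), 1e-300))
            count += 1
    return total / count

print(round(lyapunov_logistic(2.8), 3))  # negative: stable fixed point
print(round(lyapunov_logistic(3.9), 3))  # positive: chaotic regime
```

The sign of the result is what matters here: negative means nearby trajectories converge, positive means they diverge exponentially.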

Germinio ==> It is not my job to supply to you the proof you demand. If the climate were a simple single formula, or even a set of simple related formulas, then one could calculate the Lyapunov exponents for those formulas and their outputs. The formulas for the physics involved in Climate are non-linear and have extreme dependence on initial conditions.

The observation is that “The climate system is a coupled non-linear chaotic system,…….” – IPCC TAR WG1, Working Group I: The Scientific Basis

This has been known for over 50 years — since 1963. Because it comprises coupled non-linear systems (oceans and atmosphere), things are not so simple — yet the truth of the non-linearity of the overall climate system is not in doubt.

Kip ==> There is a large difference between a nonlinear system and a chaotic one. A chaotic system has a precise mathematical definition in terms of sensitivity to initial conditions. All I am asking for is evidence for the assertion that the climate is chaotic. And you have failed to provide any. There are numerous time series for different climate variables going back thousands of years. These can be analysed using the appropriate techniques to look for signs of chaos. Unless you can show that this has been done then the claim that the climate is chaotic is unproven.

Over time scales of hundreds to thousands of years the climate is stable and shows no signs of being chaotic. It is warm in summer and cold in winter. Then over longer time-scales (40,000 to 100,000 years) there are abrupt shifts when the earth enters/leaves an ice-age. These shifts appear to be periodic and due to oscillations in the earth’s orbit and so again are not signs of chaos – although they do suggest a strong nonlinear element to the climate.

Germinio ==> I appreciate your interest in Chaos and Climate. If you’ve read my four previous essays and the entire comments section for each, you will find that this has already been answered.

If you wish to prove it for yourself, feel free to do so — though I point out that the approach you seem to advocate will not take you there.

I suggest that you re-read my four chaos essays, not for what I say, but for the references to the historic research, the foundational papers referred to and linked. You will find your answer there.

Germinio July 24, 2017 at 9:06 pm

“Over time scales of hundreds to thousands of years the climate is stable and shows no signs of being chaotic. It is warm in summer and cold in winter.”

You are being obviously obtuse. The problem is not hot/cold, it is HOW hot or HOW cold and what is the deterministic algorithm for determining these values at any given time and any given place.

Think about this one, Tim: The various models differ in absolute base line temperatures of up to 3 degrees C. That being the case, they are describing different worlds; different physics. Try averaging around that one, Gavin.

Dave Fair

The AVERAGE is that committed Climate Scientists need at least $200,000 per year (before tax).

More if their name begins with, say, M.

You may not like their definition of “committed Climate Scientists”, but hey . . . . .

They get the 200k

Auto

Has always seemed odd that if the science is settled why would you need 100+ climate models. If you are going to use many models why average them, why not pick the single model with the most predictive value?

Back to the old saw that a broken clock is right twice a day.

So what about the average of the times shown on 100 broken clocks. Is that a better estimate of the current time? Or is it still only right twice a day?

Regarding the average of 50 climate models each claiming to be right to within a ridiculously small number and each differing by more than that number, we can at least say at least 49 of them are wrong.

Well, if the clock is broken in the sense that it is running backwards at the correct speed, then it would be correct four times per day.
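The "four times per day" figure can be checked with a few lines of Python. This is only a sketch under stated assumptions: a 12-hour dial, a clock that runs backwards at exactly the correct speed, and a starting moment (t = 0) at which it happens to show the right time. The function name `agreement_minutes` is made up for this illustration.

```python
def agreement_minutes(hours=24):
    """Minutes (from t = 0) at which a backwards-running 12-hour clock
    shows the same dial position as a correct clock.

    Assumption: both clocks agree at t = 0.
    """
    dial = 12 * 60  # minutes in one trip around a 12-hour dial
    return [t for t in range(hours * 60) if t % dial == (-t) % dial]

matches = agreement_minutes()
print(len(matches), [m / 60 for m in matches])
```

Under those assumptions the two clocks agree every six hours, which is where the count of four per day comes from.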

g

Clocks ==> Clocks that do not keep even, regular time, but speed up and slow down due to temperature, voltage, frequency fluctuations or any other reason are only ever accidentally and randomly correct. The stopped clock is only correct for fleetingly small instants — also accidentally.

Science that is only accidentally correct is not useful.

Law of Large Numbers

============

The law of large numbers occurs with a coin toss or a pair of dice, because the coin and dice do not change over time. They have a constant average that does not vary with time.

As a result, as we collect more samples, the sample average can be expected to converge on the true average. This makes a coin toss or roll of the dice somewhat predictable in the long run, which can be used by casinos to make money.
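Anyone who wants to watch this happen can run a small simulation. The following is a minimal Python sketch, assuming a fair coin simulated with Python's pseudo-random generator; the function name and the fixed seed are choices made for this illustration only.

```python
import random

def running_heads_fraction(n_tosses, seed=0):
    """Fraction of heads observed after each toss of a simulated fair coin."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    heads = 0
    fractions = []
    for i in range(1, n_tosses + 1):
        heads += rng.random() < 0.5  # True counts as 1, False as 0
        fractions.append(heads / i)
    return fractions

fractions = running_heads_fraction(100_000)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(f"after {n:>7} tosses: fraction of heads = {fractions[n - 1]:.4f}")
```

The early fractions wander quite a bit, and the later ones settle close to 0.5. Note that this only works because the coin's underlying probability does not change during the run.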

However, we know from the paleo records that climate does not have a constant average temperature. There is no true average for the sample average to converge on, and thus you cannot rely on the law of large numbers to improve the reliability of your long term forecast (average).
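The statistical point here (not the climate claim itself) can be illustrated with a toy comparison. The sketch below assumes two made-up series: one with a constant mean of zero, and one whose mean drifts upward in a straight line. The drift rate and noise level are invented for illustration and are not taken from any temperature record.

```python
import random

def mean(values):
    """Plain arithmetic mean of a non-empty list."""
    return sum(values) / len(values)

rng = random.Random(1)  # fixed seed so the run is repeatable
n = 10_000
drift_per_step = 0.001  # hypothetical drift, chosen only for illustration

stationary = [rng.gauss(0.0, 1.0) for _ in range(n)]
drifting = [drift_per_step * t + rng.gauss(0.0, 1.0) for t in range(n)]

stat_mean = mean(stationary)
drift_mean = mean(drifting)
final_level = drift_per_step * (n - 1)  # underlying mean at the last step

print(f"stationary series: sample mean = {stat_mean:.3f} (true mean 0)")
print(f"drifting series:   sample mean = {drift_mean:.3f}, "
      f"underlying level at the end = {final_level:.3f}")
```

For the stationary series the sample mean lands near the true mean. For the drifting series the sample mean sits roughly halfway along the drift, far from where the series currently is, so collecting more samples does not home in on a single "true average".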

As such, the Climate Science practice of using averages to improve the reliability of their forecasts in fact is unlikely to work long term. Which explains why the IPCC average of climate model average is not converging on the observed average temperatures.

Since the models used by “Climate Science” presume that all variability is due to the atmospheric concentration of CO2, amplified by a magical “sensitivity” parameter, there is no statistical manipulation that will allow their work product to converge to a physically meaningful “observed average temperature”. In fact, it is painfully obvious that the custodians of our environmental data invest an inordinate amount of their energy correcting the existing “observed average temperature” so that it bears some resemblance to the models’ output. There can be little doubt that our “custodians” are aware of the futility of seeking a true “convergence”. That being the case, the uselessness of the historical temperature records for computing a meaningful average is really of no significance. It is what it is, and it will be modified as needed by the cultists. Your point about the nonstationarity of climate data is really the fundamental problem that dooms the current efforts of those engaged in “Climate Science”.

ferdberple and Robert ==> Thank you for expanding on the Law of Large Numbers with regard to climatology.

The Law of Large Numbers will not save the subject — thousands of poor measurements about thousands of different temperatures in thousands of different places at thousands (or millions) of different times can not be combined to produce accurate, precise answers — by averaging or any other means.

The gambler’s fallacy, also known as the Monte Carlo fallacy or the fallacy of the maturity of chances, is the mistaken belief that, if something happens more frequently than ‘normal’ during some period, it will happen less frequently in the future, or that, if something happens less frequently than ‘normal’ during some period, it will happen more frequently in the future (presumably as a means of balancing nature) (wikipedia)
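The definition can be tested directly by simulation. The sketch below assumes a simulated fair coin and asks: immediately after a run of at least five heads, how often is the next toss heads? The function name, streak length, and seed are choices made for this illustration.

```python
import random

def heads_rate_after_streak(n_tosses, streak_len, seed=0):
    """Return (share of heads on tosses that follow a run of at least
    `streak_len` heads, number of such follow-up tosses)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    run = 0          # length of the current run of consecutive heads
    followups = 0    # tosses made right after a qualifying streak
    heads_after = 0  # how many of those follow-up tosses were heads
    for _ in range(n_tosses):
        is_head = rng.random() < 0.5
        if run >= streak_len:
            followups += 1
            heads_after += is_head
        run = run + 1 if is_head else 0
    return heads_after / followups, followups

rate, count = heads_rate_after_streak(1_000_000, 5)
print(f"{count} tosses followed a streak of 5+ heads; "
      f"share of heads among them = {rate:.3f}")
```

If the fallacy were true, tails would be "due" and the share of heads after a streak would fall noticeably below one half. In the simulation it stays close to 0.5, because each toss is independent of the ones before it.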

and i’ll go one step further: the idea that there is a statistical ‘normal distribution’ which nature must obey is a fallacy.

gnomish ==> The coin toss experiment can be done by anyone with infinite patience or with basic programming skills and a random number generator (which, since we only need concern ourselves with odd and even numbers, is close enough to random, since we don’t care about the order). My 12-year-old programmed a coin tosser in Basic years ago.

It is not NATURE that determines the outcomes in a coin toss — but simple mathematics and elementary probabilities (which, when it wakes up in the morning, just means possibilities — in this case, only two possibilities each throw).

I have been corrected — it produces a binomial distribution.

There is a part of coin tossing that produces a normal distribution – if I recall correctly – dealing with the number of tosses that produces two heads in a row, two tails in a row, three heads in a row, three tails in a row, etc. Try it out, I’m sure you can find a free coin tosser pgm online.

Kip, no computer can generate random numbers. All algorithms in computers are pseudo-random.

Kip says: “There is a part of coin tossing that produces a normal distribution”

and…

.

“dealing with the number of tosses”

…

Kip, please, stop……

…

A normally distributed random variable is a REAL NUMBER.

…

You are confusing discrete variables (integers) with real numbers.

…

Continue and you’ll be just continuing to make a fool of yourself with people that know mathematical statistics.

Luis Anastasia ==> Please take this opportunity to explain this issue to gnomish, I would appreciate it (but only if you can do so politely in a collegial manner).

Kip, I’m not going to discuss this with gnomish. I will discuss it with YOU, because you seem to lack an understanding of statistics.

Luis ==> You will not be discussing it with me, you seem to lack a basic understanding of the basics required for civil discussion and conversation — come back when you have taken a few classes in communications and human interaction.

Kip, I’m fully capable of conducting a civil discussion. However, it seems that someone that does not understand the difference between a continuous and a discrete random variable should not be pontificating about anything to do with statistics.

…

Now, for your continuing education…. a binomial distribution of a coin toss cannot be equated to a normally distributed random variable.

…

If you need me to explain the difference between an integer and a real number, I’d be more than happy to do so.

…

If you are unable to discuss these things, and attempt to divert the discussion to irrelevant diversions, I can understand. It’s evidence that you cannot confront someone that knows much more about “statistics” than you.

“the sample average can be expected to converge on the true average”

why? can you demonstrate any logical principle why that must be?

i dispute it.

any sequence is independent of any previous one

and

any sequence is equally improbable

and

nature’s timeline is infinite

so nope- i don’t believe the premise of the numerologists

and the casinos love you longtime if you do believe it.

A data set which contains say the single integer 22 as its only element, has an average value of 22. A data set containing say the integers 22 and 11 has an average of 16.5, which isn’t even a member of the set, and in this case is not even an integer. The average of a data set is (usually) different for every different data set.

Remember the algorithms of statistical mathematics, are valid for any finite real numbers in a finite data set. Statistics presumes no relationship between any of the members of the set.

The data set containing as its elements all of the numbers printed in today’s issue of the New York Times, yields exact answers to any question or algorithm of statistical mathematics, including having an exact average value.

Statistics does not even know what variables are. It deals only in finite real numbers, each of which must have an exact already known value, otherwise it cannot participate in ANY statistical computation.

Averages are not converging on anything; they have a unique value for any finite data set of known finite real numbers.

G

Try this experiment :

1) Toss a coin and record whether it comes up heads or tails.

The theoretical probability of each outcome is 0.5 but the result will be either one or the other.

2) Repeat the experiment with 10 tosses and record the number of heads and of tails.

The probabilities of each of the theoretical outcomes, 0,10; 1,9; … 10,0 will approximate a normal distribution with a maximum at 5,5.

3) Repeat the experiment with increasing numbers of tosses per trial and the probabilities will converge on the normal distribution.

This is proof that the sample average of heads or tails converges on the theoretical probabilities of 0.5 for an unbiased coin. This is foundational for the theory of statistics. This is why “The house always wins” despite the occasional player who makes windfall “winnings”.
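The numbers for the 10-toss case in step 2 can be worked out exactly rather than by experiment. The sketch below computes the binomial probability of each head count for a fair coin and sets it beside the value of a normal curve with the same mean and standard deviation. The helper names are made up for this illustration. Strictly, the coin-toss counts follow a discrete binomial distribution; the normal curve is a continuous approximation to it, not the same thing.

```python
import math

def binomial_pmf(k, n, p=0.5):
    """Exact probability of k heads in n tosses with head-probability p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def normal_pdf(x, mean, sd):
    """Density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

n = 10
mean, sd = n * 0.5, math.sqrt(n * 0.25)  # binomial mean n*p, sd sqrt(n*p*(1-p))
table = [(k, binomial_pmf(k, n), normal_pdf(k, mean, sd)) for k in range(n + 1)]

for k, exact, approx in table:
    print(f"{k:2d} heads, {n - k:2d} tails: "
          f"binomial {exact:.4f}   normal curve {approx:.4f}")
```

The two columns track each other closely even at 10 tosses, with the peak at 5 heads and 5 tails, and the agreement improves as the number of tosses per trial grows.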

pgtips91

(i like em too- but i like hubei silver tip the most)

the contradictory proof of your conjecture is that you are here and there’s nothing more improbable in the universe.

but that’s the case for every single event or chain of events – a royal straight flush is just as likely as any other hand, i.e. equally improbable.

you have not stated any principle or valid causal relationship between flips and outcomes – you are simply adhering to a supposition. correlation yadda yadda. it is numerology with an academic title.

and you really don’t understand how the casino works, either. they are betting on stupid- that’s why they win.

free drinks at the tables and a hard coded microprocessor in every patchouli scented slot machine.

heh- the more coin flip trials, the more the results converge on any outcome whatsoever. every time they are not 50/50, that is what you must deny in order to persist in the numerology narrative.

and they are not 50/50 most of the time- but will that empirical fact matter to a fine established narrative that is the rationale for ever so many state sponsored witchdoctors? what are the odds of that?

but the underlying false premise is that this is not an ordered universe and that cause & effect do not apply – and that’s not how it works. nothing is random. there is always a cause for every effect.

pretending to be able to enumerate that which one does not know is the hallmark of a religion.

i ching, mon. statistics is the i ching of western witchdoctors.

pgtips91 and gnomish ==> Coin flipping, a random system with only two possible outcomes (assuming fair coin and fair toss) eventually does produce something approaching a normal distribution.

Non-random systems, with infinite or finite outcomes, do not necessarily produce normal distributions — in fact rarely do.

Physical dynamic systems in the real world are almost all non-linear to some extent (many totally at everyday ranges) and are extremely unlikely to produce normal distributions except accidentally.

Daily temperature at any one given weather station generally has a sine-wave-ish look to it, because of the rotation of the Earth exposing the location to varying amounts of insolation. If there were no other factors involved in air temperature, the graph would be wholly dependent on the Sun strength/angle. That is not the case so temperature is NOT normally distributed.

Kip, coin tossing produces a binomial distribution, not a normal distribution. They are very different, even though their shapes look similar. http://staweb.sta.cathedral.org/departments/math/mhansen/public_html/23stat/handouts/normbino.htm

hi Kip.

‘eventually does produce something approaching a normal distribution.’

is simply a restatement of the monte carlo fallacy.

the get.out.of.jail.free word is the ‘eventually’. it’s the no.true.scotsman fallacy.

it makes the proposition unfalsifiable – you know what that means

it’s also unprovable and i know it means the same.

btw- i do value your writings, thanks for all you do.

Well Ferd, whenever you compute the average of a group of numbers, there are only so many numbers you have in that group. And so long as they are finite real numbers, they have one unique exact sum. And the number of them is also a finite real number. It is even an integer.

so if you divide the sum, by the integral count of the numbers in the set, you ALWAYS get an exact real number; and it ALWAYS IS the EXACT average of those numbers. The algorithm NEVER yields an answer that is NOT the average of the numbers in the set; it cannot ever happen. And the average number for any set, may not even be a member of that set. The average of any set of integers, is not always going to even be an integer, but it will be the average for that set.

If you keep on adding new numbers to the set, you now have a different set, and it likely will have a different average; but that will be the exact average for that set.
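The point about exactness can be shown with exact rational arithmetic. The sketch below uses Python's `fractions.Fraction` and the integers 22 and 11 from the example earlier in this thread; the third value, 5, is an arbitrary number added here only to show the set changing. The helper name `exact_mean` is made up for this illustration.

```python
from fractions import Fraction

def exact_mean(values):
    """Exact average of a non-empty list of integers, as a rational number."""
    return Fraction(sum(values), len(values))

print(exact_mean([22]))         # 22
print(exact_mean([22, 11]))     # 33/2, i.e. 16.5, which is not in the set
print(exact_mean([22, 11, 5]))  # 38/3, a different set with a different average
```

Each finite set has exactly one average, and adding a number produces a new set with its own exact average. Whether a growing sequence of such averages approaches some fixed value is a separate question about the process that generates the numbers.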

G

The inevitable response by the CAGW types is: We can’t go back and redo the pre-digital data; we are doing the best we can with the data we have.

My response: Great, you get an “A” for effort. But this does not mean that it is fit for the purpose of analyzing global temperature trends over the last 150 years.

Paul Penrose ==> It is the fact that the Historic Temperature Record should be properly considered a Range — quite wide, at least several degrees F – because the data cannot inform us of anything more precise.

It has to be obvious that the problem (one of the problems at least) with Climate “Science” isn’t that statistical work is misunderstood. It is that the statistical work is deliberately misused. Michael Mann deliberately chose data points that were not representative before he “interpreted” them through his algorithm and then tacked on additional and deceptive information to produce his Hockey Stick. The entire thing was a fit for purpose fabrication of pseudo reality that was intended to fool, not to enlighten. We would be closer to the truth if these charlatans were less adept at statistics!

Kip,

I gather from your paper then that the only way to come up with a global average temperature that is meaningful is through satellites – using technology that tells us that Pluto is colder and Mercury warmer than Earth, to use Steven Mosher’s example.