…A Reminder of How Horribly Climate Models Simulate the Surface Temperatures of Earth’s Oceans.

Guest Post by Bob Tisdale

This post serves as the annual model-data comparison for sea surface temperatures.

INTRODUCTION

Oceans cover about 70% of the surface of the Earth, yet climate models are far from properly simulating the surface temperatures of those oceans on global, hemispheric and individual ocean-basin bases over the past 3 decades. In past annual model-data comparisons, we’ve used the multi-model mean of all of the climate models stored in the CMIP5 archive, but for this post we’re using only the average of the outputs of the 3 CMIP5-archived climate model simulations from the Goddard Institute for Space Studies (GISS) with the “Russell” ocean model. And in past model-data comparisons, we’ve used the NOAA’s original satellite-enhanced Reynolds OI.v2 sea surface temperature dataset, but for this one we’re using NOAA’s Extended Reconstructed Sea Surface Temperature version 4 (ERSST.v4) data.

Why the different models and data?

Both NASA GISS and NOAA NCEI use NOAA’s ERSST.v4 “pause buster” data for the ocean surface temperature components of their combined land-ocean surface temperature datasets, and, today, both agencies are holding a multi-agency press conference to announce their “warmest ever” 2016 global surface temperature findings. (Press conference starts at 11am Eastern.) And we’re using the GISS climate model outputs because GISS is part of that press conference and I suspect GISS is not going to expose how horribly their climate models simulate this critical global-warming metric.

PRELIMINARY INFORMATION

The sea surface temperature dataset being used in this post is NOAA’s Extended Reconstructed Sea Surface Temperature dataset, version 4 (ERSST.v4), a.k.a. their “pause buster” data. As noted above, the ERSST.v4 data make up the ocean portion of the NOAA and GISS global land+ocean surface temperature products. The data presented in this post are from the KNMI Climate Explorer, where they can be found on the Monthly observations webpage under the heading of “SST”.

The GISS climate models presented are those stored in the Coupled Model Intercomparison Project, Phase 5 (CMIP5) archive. They are one of the latest generations of climate models from GISS and they were used by the IPCC for their 5th Assessment Report (AR5). The GISS climate model outputs of sea surface temperature are available through the KNMI Climate Explorer, specifically through their Monthly CMIP5 scenario runs webpage, under the heading of Ocean, ice and upper air variables. Sea surface temperature is identified as “TOS” (temperature ocean surface). I’m presenting the average of the GISS models identified as GISS-E2-R p1, GISS-E2-R p2 and GISS-E2-R p3, where the “R” stands for Russell ocean. (I’ll present the GISS models with HYCOM ocean model in an upcoming post.) The Historic/RCP8.5 scenarios are being used. The RCP8.5 scenario is the worst-case scenario used by the IPCC for their 5th Assessment Report. And once again we’re using the model mean because it represents the forced component of the climate models; that is, if the forcings used by the climate models were what caused the surfaces of the oceans to warm, the model mean best represents how the ocean surfaces would warm in response to those forcings. For a further discussion, see the post On the Use of the Multi-Model Mean, which includes a quote from Dr. Gavin Schmidt of GISS on the model mean.
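The reasoning behind the model mean can be sketched with a toy example. This is only an illustration under stated assumptions, not the GISS output: the three "runs" below are synthetic, the forced trend and the size of the wiggles are hypothetical, and the wiggle phases are chosen so that they cancel exactly when averaged. Real ensemble members would cancel only partially.

```python
import math

YEARS = list(range(1987, 2017))   # the 30 years used in the post
FORCED_TREND = 0.02               # hypothetical forced warming, deg C per year
BASE_TEMP = 18.0                  # hypothetical starting temperature, deg C

def forced_signal(year):
    """The part of the response that is identical in every run."""
    return BASE_TEMP + FORCED_TREND * (year - YEARS[0])

def synthetic_run(phase):
    """One hypothetical run: forced signal plus a run-specific wiggle
    standing in for internal variability."""
    return [
        forced_signal(y) + 0.2 * math.sin(0.9 * (y - YEARS[0]) + phase)
        for y in YEARS
    ]

# Three runs whose wiggles are 120 degrees out of phase with one another.
runs = [synthetic_run(p) for p in (0.0, 2 * math.pi / 3, 4 * math.pi / 3)]

# Ensemble mean: average the runs year by year.
ensemble_mean = [sum(vals) / len(vals) for vals in zip(*runs)]

# How far the ensemble mean strays from the forced signal alone.
max_residual = max(abs(m - forced_signal(y)) for m, y in zip(ensemble_mean, YEARS))
print(f"largest gap between ensemble mean and forced signal: {max_residual:.2e} deg C")
```

In this idealized setup the averaging removes the run-specific wiggles and leaves the shared forced trend, which is the sense in which the mean "represents the forced component".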

The model-data comparisons are in absolute terms, not anomalies, so annual data are being presented. And the models and data stretch back in time for the past 30 years, 1987 to 2016.

The linear trends in the graphs are as calculated by EXCEL.
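For readers who want to reproduce a trend outside of a spreadsheet, here is a minimal sketch. It assumes the spreadsheet trend line is an ordinary least-squares fit of temperature against year. The function name and the annual values are hypothetical and are not taken from the KNMI Climate Explorer.

```python
def linear_trend_per_decade(years, values):
    """Ordinary least-squares slope of values against years, in units per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return 10.0 * sxy / sxx   # slope per year times 10 gives slope per decade

years = list(range(1987, 2017))
# Hypothetical annual means: 18.0 deg C in 1987, rising 0.011 deg C per year.
sst = [18.0 + 0.011 * (y - 1987) for y in years]

print(f"trend: {linear_trend_per_decade(years, sst):.2f} deg C/decade")
```

With real data, the two lists would simply be replaced by the downloaded years and annual mean temperatures.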

WHY ABSOLUTE SEA SURFACE TEMPERATURES INSTEAD OF ANOMALIES?

The climate science community tends to present their model-data comparisons using temperature anomalies, not absolute temperatures. Why? As Dr. Gavin Schmidt of GISS claims “…no particular absolute global temperature provides a risk to society, it is the change in temperature compared to what we’ve been used to that matters”. (See the post Interesting Post at RealClimate about Modeled Absolute Global Surface Temperatures.)

But as you’ll discover, globally, the GISS climate model simulations of sea surface temperatures are too warm. Now consider that the ocean surfaces are the primary sources of atmospheric water vapor, the most-prevalent natural greenhouse gas. If the models are properly simulating the relationship between sea surface temperatures and atmospheric water vapor, then the models have too much water vapor (natural greenhouse gas) in their atmospheres. That may help to explain why the GISS models simulate too much warming over the past 3 decades.

WHY THE PAST 3 DECADES?

The World Meteorological Organization’s (WMO) classic definition of climate is weather averaged over a 30-year period. On their Frequently Asked Questions webpage, the World Meteorological Organization asks and answers (My boldface):

What is Climate?

Climate, sometimes understood as the “average weather,” is defined as the measurement of the mean and variability of relevant quantities of certain variables (such as temperature, precipitation or wind) over a period of time, ranging from months to thousands or millions of years.

The classical period is 30 years, as defined by the World Meteorological Organization (WMO). Climate in a wider sense is the state, including a statistical description, of the climate system.

By presenting models and data for the past 3 decades, no one can claim I’ve cherry-picked the timeframe. We’re comparing models and data over the most recent climate-related period.

A NOTE ABOUT THE ABSOLUTE VALUES OF THE NOAA ERSST.v4 DATA

The revisions to NOAA’s long-term sea surface temperature datasets were presented in the Karl, et al. (2015) paper Possible artifacts of data biases in the recent global surface warming hiatus. There Tom Karl and others noted:

First, several studies have examined the differences between buoy- and ship-based data, noting that the ship data are systematically warmer than the buoy data (15–17). This is particularly important because much of the sea surface is now sampled by both observing systems, and surface-drifting and moored buoys have increased the overall global coverage by up to 15% (supplementary materials). These changes have resulted in a time-dependent bias in the global SST record, and various corrections have been developed to account for the bias (18). Recently, a new correction (13) was developed and applied in the Extended Reconstructed Sea Surface Temperature (ERSST) data set version 4, which we used in our analysis. In essence, the bias correction involved calculating the average difference between collocated buoy and ship SSTs. The average difference globally was −0.12°C, a correction that is applied to the buoy SSTs at every grid cell in ERSST version 4.

For model-data comparisons where anomalies are presented, shifting the more accurate buoy-based data up 0.12 deg C to match the ship-based data makes no difference to the comparison. This shortcut was a matter of convenience for NOAA since there are many more years of ship-based data than buoy-based data. However, when models and data are compared on an absolute basis, shifting the more accurate buoy-based data up 0.12 deg C to match the ship-based data makes a difference to the comparison. Because the model-simulated sea surface temperatures are too warm globally, this shortcut helps to better align the data with the models. And that sounds typical of climate science at NOAA.

In other words, the differences between models and data are likely greater than shown in all of the examples in this post where the modeled sea surface temperatures are warmer than observed…and vice versa when the models are too cool.
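The point about a constant offset can be illustrated with a small sketch. It makes a strong simplifying assumption that the whole series is buoy-based and that the same 0.12 deg C is added to every value. The real record mixes ship and buoy data in changing proportions, so this shows only the arithmetic of a uniform shift. The temperatures are hypothetical.

```python
OFFSET = 0.12  # deg C, the buoy adjustment quoted from Karl et al. (2015)

# Hypothetical annual buoy-only series, deg C.
buoy = [18.00, 18.02, 18.01, 18.05, 18.04, 18.07]
adjusted = [t + OFFSET for t in buoy]

def anomalies(series):
    """Departures of each value from the series' own mean."""
    base = sum(series) / len(series)
    return [t - base for t in series]

# Effect of the offset on the absolute mean and on the anomalies.
abs_shift = sum(adjusted) / len(adjusted) - sum(buoy) / len(buoy)
anom_shift = max(abs(a - b) for a, b in zip(anomalies(adjusted), anomalies(buoy)))

print(f"shift in absolute mean: {abs_shift:.2f} deg C")
print(f"largest change in any anomaly: {anom_shift:.2f} deg C")
```

The absolute mean moves by the full offset while the anomalies are unchanged, which is why the adjustment matters only for comparisons made in absolute terms.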

Enough of the preliminaries…

GLOBAL TIME-SERIES

Figure 1 presents two model-data comparisons for global sea surface temperatures, not anomalies, for the past 30 years. I’ve included a comparison for the global oceans (90S-90N) in the top graph and a comparison for the global oceans, excluding the polar oceans (60S-60N), in the bottom graph. Excluding the polar oceans doesn’t seem to make a significant difference. It’s obvious that global sea surfaces simulated by the GISS climate model were warmer than observed and that the GISS model warming rate is too high over the past 3 decades. The difference between modeled and observed warming rates is approximately 0.07 to 0.08 deg C/decade, which puts the modeled rate more than 60% above the observed rate. And in both cases the 30-year average sea surface temperature as simulated by the GISS models is too high by about 0.6 deg C.

Figure 1 – Global Oceans

TIME SERIES – TROPICAL AND EXTRATROPICAL SEA SURFACE TEMPERATURES

In June of 2013, Roy Spencer presented model-data comparisons of the warming of the tropical mid-troposphere prepared by John Christy. See Roy’s posts EPIC FAIL: 73 Climate Models vs. Observations for Tropical Tropospheric Temperature and STILL Epic Fail: 73 Climate Models vs. Measurements, Running 5-Year Means. The models grossly overestimated the warming rates of the mid-troposphere in the tropics. So I thought it would be worthwhile, since the tropical oceans (24S-24N) cover 76% of the tropics and about 46% of the global oceans, to confirm that the models also grossly overestimate the warming of sea surface temperatures of the tropical oceans.

It should come as no surprise that the models did overestimate the warming of the sea surface temperatures of the tropical oceans over the past 30 years. See Figure 2. In fact, the models overestimated the warming by a wide margin. The data indicate the sea surface temperatures of the tropical oceans warmed at a not-very-alarming rate of 0.11 deg C/decade, while the models indicate that, if the surfaces of the tropical oceans were warmed by manmade greenhouse gases, they should have warmed at almost 2 times that rate, at 0.22 deg C/decade. For 46% of the surface of the global oceans (about 33% of the surface of the planet), the models doubled the observed warming rate.

And of course, for the tropical oceans, the model-simulated ocean surface temperatures are too warm by about 0.9 deg C.

For the extratropical oceans of the Southern Hemisphere (90S-24S), Figure 3, the observed warming rate is also extremely low at 0.06 deg C/decade. On the other hand, the climate models indicate that if manmade greenhouse gases were responsible for the warming of sea surface temperatures in this region, the oceans should have warmed at a rate of 0.14 deg C/decade, more than doubling that observed trend. The extratropical oceans of the Southern Hemisphere cover about 33% of the surface of the global oceans (about 23% of the surface of the planet) and the models double the rate of warming.

Figure 3 – Extratropical Southern Hemisphere

The models are too warm in the extratropical oceans of the Southern Hemisphere, by roughly 0.6 deg C.

And the climate models seem to get the warming rate of sea surface temperatures just right for the smallest portion of the global oceans, the extratropical Northern Hemisphere (24N-90N). See Figure 4. The extratropical oceans of the Northern Hemisphere cover only about 21% of the surface of the global oceans (about 15% of the surface of the Earth).
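The area percentages quoted for the three latitude bands can be cross-checked with simple arithmetic. The ocean shares below are the ones stated in this post. The ocean fraction of the planet is an assumption (0.71, consistent with the "about 70%" in the introduction), so the results are approximate.

```python
OCEAN_FRACTION_OF_PLANET = 0.71  # assumption; the post says "about 70%"

# Share of the global ocean surface in each band, as stated in the post.
ocean_share = {
    "tropics (24S-24N)": 0.46,
    "extratropical SH (90S-24S)": 0.33,
    "extratropical NH (24N-90N)": 0.21,
}

# Convert each band's share of the oceans into a share of the whole planet.
planet_share = {
    name: share * OCEAN_FRACTION_OF_PLANET for name, share in ocean_share.items()
}

for name, share in planet_share.items():
    print(f"{name}: about {share:.0%} of the planet")
print(f"bands sum to {sum(ocean_share.values()):.0%} of the oceans")
```

Under that assumption the three bands work out to roughly 33%, 23% and 15% of the planet, matching the figures given in the text.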

Figure 4 – Extratropical Northern Hemisphere

Curiously, the model-simulated surface temperatures are too cool in the extratropical oceans of the Northern Hemisphere. That won’t help their simulations of sea ice.

TIME SERIES – OCEAN BASINS

Figures 5 to 11 provide comparisons of modeled and observed sea surface temperatures for the individual ocean basins…without commentary. I’d take the comparisons of the Arctic and Southern Oceans (Figures 10 and 11) with a grain of salt…because the models and data may account for sea ice differently.

Figure 5 – North Atlantic

# # #

Figure 6 – South Atlantic

# # #

Figure 7 – North Pacific

# # #

Figure 8 – South Pacific

# # #

Figure 9 – Indian

# # #

Figure 10 – Arctic

# # #

Figure 11 – Southern

CLOSING

It would be nice to know what planet the climate models from GISS are simulating. It certainly isn’t the Earth.

We live on an ocean-covered planet, yet somehow the whens, there wheres, and the extents of the warming of the surfaces of our oceans seem to have eluded the climate modelers at GISS. In this post, we presented sea surface temperatures, not anomalies, for the past 3 decades and this has pointed to other climate model failings, which further suggest that simulations of basic ocean circulation processes in the models are flawed.

Depending on the ocean basin, there are large differences between the modeled and observed ocean surface temperatures. The actual ocean surface temperatures, along with numerous other factors, dictate how much moisture is evaporated from the ocean surfaces, and, in turn, how much moisture there is in the atmosphere…which impacts the moisture available (1) for precipitation, (2) for water vapor-related greenhouse effect, and (3) for the negative feedbacks from cloud cover. In other words, failing to properly simulate sea surface temperatures impacts the atmospheric component of the fatally flawed coupled ocean-atmosphere models from GISS.

PLUG FOR A FREE EBOOK

I believe it’s been a while since I’ve promoted my most recent free ebook on global warming and climate change. On Global Warming and the Illusion of Control – Part 1 presents the basics and the illusions behind global warming and climate change. (Click here for a copy. 700+ Page, 25MB .pdf) Happy reading.

“It would be nice to know what planet the climate models from GISS are simulating. It certainly isn’t the Earth.”

It would take yuuge hunks of grant money to figure this out…

Planet Giss?

Planet Gore?

Planet Imaginarium?

Planet Nonexistium?

Planet Theiranus (rhymes with Uranus)?

Planet Headupassium?

Got to be one of those.

/grin

Planet Theiranus (rhymes with Uranus)?

Ha ha ha ha ha ha ha ha ha ha ha ha ha ha ha ha!

First chuckle of the day (-:

Planet sum nill plus constant times variable divided by sum best guess plus l really know!

“It would be nice to know what planet the climate models from GISS are simulating. It certainly isn’t the Earth.”

That’s easy. By applying well-known rules of physics, we can easily arrive at any one of a multitude of alternate-earth entities in any one of a multitude of alternate universes, where any temperature we might wish to apply in THIS universe is undeniably valid in one or more of those OTHER universes.

Come on people, this is basic multi-verse math !

It would be nice to know what planet the climate data from NOAA are measuring. !!

I predict once the adjusted, manipulated and hidden data has been investigated by honest scientists we will get a cooling effect on the warmist zeal – Coming soon to a taxpayer-financed institution!

It’s called the Trump effect!

So, is the Arctic Temperature increase related to sea ice decrease allowing the natural ambient ocean temps to be reflected in the data?

Ice is below 29F while the ocean underneath would be consistently several degrees warmer, about 34F. With a 34F ocean below, this would lead to a 5 deg average temp increase in the measurements for the Arctic area when in fact the ocean below hasn’t warmed. It was 34F below the ice and 34F without the ice.

“The classical period is 30 years”

Classical? What kind of science is that? What if I prefer jazz?

Climate science sucks. There’s no getting around it.

Andrew

30 years was a terrible choice for ‘averaging’ weather – there is a 70 year cycle present and that 30 years can be anywhere on that cycle. Rising, falling, at the top, at the bottom – yet it is completely meaningless, as is riding on a roller coaster and observing that you are going up or down. That is what it is supposed to do!!

That’s true, but if you use accepted Nyquist sampling techniques, you can only accurately measure data that is less than half of this frequency – < one cycle per 140 years. Then you need a sharp anti-aliasing filter about half of that – one cycle per 280 years. Given that you need forward and backward averaging, the latest date you could possibly accurately measure now would be 2017-280/2, which is 1877. This means that in 140 years, we will have accurate climate data for 2017 (assuming that there will be consistent thermometer data back to 1877).

Not quite. If we are measuring at 1 month intervals, Nyquist says we can only see cycles of down to two months period – 1 month up, 1 month down to complete the cycle. But, we can see longer periods.

The problem is that we have a reliable data window of maybe about 120 years. We can envisage this as the data over all time multiplied by a window of 120 years. Multiplication in the time domain becomes convolution in the frequency domain, so the frequency response is smoothed by the sinc response of the window, W(f) = sin(pi*f*T)/(pi*f*T), the effective width of which is about 1/T. That means we can only resolve frequency peaks of up to about 120 years in theory, but maybe about half that in practice.
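The window response quoted above is easy to evaluate numerically. The sketch below implements only the formula W(f) = sin(pi*f*T)/(pi*f*T) with T = 120 years as in the comment. The function and variable names are illustrative, and no real temperature data is involved.

```python
import math

def window_response(f, T):
    """Normalized response of a rectangular window of length T at frequency f:
    W(f) = sin(pi*f*T) / (pi*f*T), with W(0) = 1."""
    x = math.pi * f * T
    return 1.0 if x == 0 else math.sin(x) / x

T = 120.0             # years of data, the figure used in the comment
first_null = 1.0 / T  # cycles per year; the first zero of W(f)

# Evaluate the response at a few cycle periods (frequency = 1 / period).
for period in (240.0, 120.0, 60.0):
    print(f"period {period:5.0f} yr: W = {window_response(1.0 / period, T):+.3f}")
```

The response first falls to zero at f = 1/T, which is the sense in which the effective width of the smoothing is about 1/T.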

The cycle we are talking about is closer to 60 years than to 70, somewhere in the range of maybe 60-65 years. It is very regular, and is in evidence for almost two full cycles now, assuming the recent monster El Nino dissipates to the point where the temperature anomalies start tracking it again, which I expect it will do. We shall see. The next few months should be interesting.

http://i1136.photobucket.com/albums/n488/Bartemis/temps_zpsf4ek0h4a.jpg

This is entirely correct, at the least, the 61.6 year cycle has to be used as classical time span in order to compensate this cyclic nature ……

…. The 30-year time span is a completely unscientific, nonsensical period. Back in 1935, the weathermen had their first congress in Warsaw: “Folks, we need a time span to call it climate.” Let’s make it in round numbers. Let’s take the year 1900, wonderfully round, and today, 1935, is not so round… well… let’s take 1930 then, and we say the WMO takes 30 years as the WMO period…. This is our science, because of majority decision…… No joke.

At later dates in the ’50s, they did not want to fiddle with the date, and now we have the enormous WMO-achieved 30-year period for the climate……

The models look suspiciously like they have a CO2 warming dial set to a standard amount of additional heat based on gas properties determined in the lab, not in situ. And therein lies the mistake. Gas properties determined in a closed lab in minute amounts, on their own, may have no bearing whatsoever when mixed into an atmosphere with boat loads of other gases in an essentially infinite open container.

I also think they are set at different amounts of heat in each basin based on the previously determined averaged gloppy goopy drifts of CO2 measured by that AIRS satellite or similar.

+1!

Pamela Gray January 18, 2017 at 6:23 am

“when mixed into an atmosphere with boat loads of other gases in an essentially infinite open container”

WR: And when put in an environment with lots of water, water vapour, vegetation, fauna, clouds, changing weather systems etc. and all their interactions. The lab-measured CO2 effect is an INITIAL effect. We should first study what the Earth does (natural variation) when there is a mutation somewhere. When we know exactly ALL natural reactions to a certain ‘change’, we can make models that predict. We cannot predict before that moment. As is proven time after time, also in this post: thanks Bob!

I once read about the predicted rise in methane. Methane should go up nearly exponentially. But forgotten was the fact that there are lots of bacteria that eat methane. More methane > more bacteria > less methane. The following graph shows the present situation – no exponential rise: http://www.eea.europa.eu/data-and-maps/daviz/atmospheric-concentration-of-carbon-dioxide-2#tab-chart_2

But studying and understanding the natural environment was not the role the IPCC laid down for herself. Her role was about “(….) risk of human-induced climate change, its potential impacts and options for adaptation and mitigation.” Not about ‘First understanding the Earth’:

PRINCIPLES GOVERNING IPCC WORK

(….)

ROLE

2. The role of the IPCC is to assess on a comprehensive, objective, open and transparent basis the scientific, technical and socio-economic information relevant to understanding the scientific basis of risk of human-induced climate change, its potential impacts and options for adaptation and mitigation. IPCC reports should be neutral with respect to policy, although they may need to deal objectively with scientific, technical and socio-economic factors relevant to the application of particular policies

Source: https://www.ipcc.ch/pdf/ipcc-principles/ipcc-principles.pdf

As Wikipedia says:

“The aims of the IPCC are to assess scientific information relevant to:[6]

1. Human-induced climate change,

2. The impacts of human-induced climate change,

3. Options for adaptation and mitigation.”

Source: https://en.wikipedia.org/wiki/Intergovernmental_Panel_on_Climate_Change#cite_note-principles-6

Hey Wim, those charts look like methane tripled, 600 to 1800. While CO2 about a fifth, 350 to 400. Seems pretty exponential…

Macha January 19, 2017 at 4:37 am ” those charts look like”

Macha, the link above is for one chart, the methane chart. Methane is measured in parts per billion (ppb) and not per million like CO2, which might have confused you. Furthermore, the line representing the rise in methane, which seemed to be exponential until 1990, left the curve around that year and became (much) flatter. It is still rising a bit, but far from exponentially, which is a very important difference. And a strong indicator of ‘other factors at work’.

Amen sister. I’ve been saying this for some time now. They’re extrapolating from lab conditions to the open field and making vast conclusions from half vast data (JLA, 1975). This is compounded by failing to do a proper error analysis and propagation. They fail my chemistry lab.

This is no problem at all, rule one of climate ‘science’ states that ‘when models and reality differ in value, it is reality which is always in error’.

Having spent all that time and effort in making the data give the ‘right results’, how could it be otherwise.

Entirely off topic but i couldn’t help remarking WUWT has racked up 299,076,823 views. Big milestone in view.

Perhaps a little gift for the 300,000,000 th ?

I’m rooting for 299,792,458 (speed of light in m/s).

10:20AM 299,099,476 views

ALMOST THREE HUNDRED MILLION VIEWS, EVERYONE!

#(:))

At a rate of roughly 7,500 views/hour,

about 120 hours to go!

(Note: that rate/hour needs to be corrected down, for, 7,500 was the rate during peak viewing time, roughly, 10AM PST — 9PMPST, when the most WUWTers are viewing).

My guess: ~ 10 days.

You read it here, first! 🙂

Do NOT tell me that 10 doesn’t follow from 120 hours. I know. I did a highly sophisticated “adjustment” to arrive at the 10. 🙂

you obviously used climath janice 🙂

lol, Bit. 🙂 LOOKS like I did, however, my guess is genuine and based on reality. Climath is an intentional warping of reality.

References:

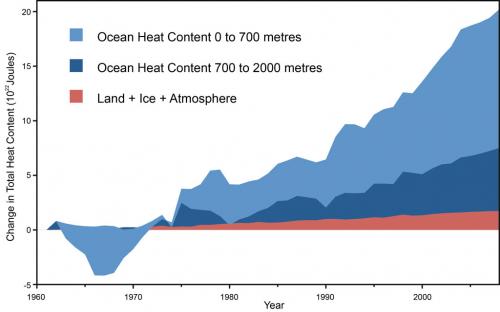

Trenberth et al 2011jcli24 Figure 10

This popular balance graphic and assorted variations are based on a power flux, W/m^2. A W is not energy, but energy over time, i.e. 3.4 Btu/eng h or 3.6 kJ/SI h. The 342 W/m^2 ISR is determined by spreading the average 1,368 W/m^2 solar irradiance/constant over the spherical ToA surface area. (1,368/4 =342) There is no consideration of the elliptical orbit (perihelion = 1,416 W/m^2 to aphelion = 1,323 W/m^2) or day or night or seasons or tropospheric thickness or energy diffusion due to oblique incidence, etc. This popular balance models the earth as a ball suspended in a hot fluid with heat/energy/power entering evenly over the entire ToA spherical surface. This is not even close to how the real earth energy balance works. Everybody uses it. Everybody should know better.
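The divide-by-four step and the unit conversions in the comment above can be checked in a few lines. This sketch only reproduces that arithmetic: sunlight intercepted by a disc of area pi*r^2 is spread over a sphere of area 4*pi*r^2. The function name is illustrative, and the Btu conversion factor (about 1055.06 J per Btu) is an assumption about which Btu definition is meant.

```python
import math

SOLAR_CONSTANT = 1368.0  # W/m^2, the value quoted in the comment

def mean_toa_flux(solar_constant, radius=1.0):
    """Sunlight intercepted by a disc (pi*r^2) spread evenly over a sphere (4*pi*r^2)."""
    intercepted = solar_constant * math.pi * radius ** 2
    sphere_area = 4.0 * math.pi * radius ** 2
    return intercepted / sphere_area

# 1 W = 1 J/s = 3600 J/h, i.e. 3.6 kJ/h; assuming 1 Btu is about 1055.06 J.
WATT_IN_KJ_PER_HOUR = 3600.0 / 1000.0
WATT_IN_BTU_PER_HOUR = 3600.0 / 1055.06

print(f"mean ToA flux: {mean_toa_flux(SOLAR_CONSTANT):.0f} W/m^2")
print(f"1 W = {WATT_IN_KJ_PER_HOUR:.1f} kJ/h = {WATT_IN_BTU_PER_HOUR:.2f} Btu/h")
```

The radius cancels out, so the factor of four is purely geometric and says nothing about day, night, seasons or orbit, which is the limitation the comment is pointing at.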

An example of a real heat balance based on Btu/h follows. Basically (Incoming Solar Radiation spread over the earth’s cross sectional area) = (U*A*dT et. al. leaving the lit side perpendicular to the spherical surface ToA) + (U*A*dT et. al. leaving the dark side perpendicular to spherical surface area ToA) The atmosphere is just a simple HVAC/heat flow/balance/insulation problem.

There are a number of issues that the American Statistical Association should be addressing as a group, as the way statistics are applied to climate data is not valid in a great many ways. One of the most egregious errors being made is averaging models to get a value that is said to represent generalized model output. Models are not data and there is no way to claim that there is a pool of model data from which a representative random sample can be drawn to use for calculation of an average value.

A point that should be made is that of the 102+ models that are available for use, all are based in one way or another on the exact same atmospheric physics yet they produce vastly different outputs. As they are not data and cannot be averaged, one cannot state that there is some generic model output for comparison to measured earth temperature (which in and of themselves are not statistically analyzed correctly).

All this discussion about models and data makes me think of the arguments in Medieval Times of how many angels can dance on the head of a pin when no one knew how big the head of a pin was. A totally pointless discussion. So we waste billions of taxpayers’ dollars on Pseudo-Science that will never produce results worth discussing.

I believe this is a topic that people with strong statistical backgrounds should be addressing.

From the Wikipedia article, “However the idea that such questions had a prominent place in medieval scholarship has been debated, and it has not been proved that this particular question was ever disputed.[7] One theory is that it is an early modern fabrication,[a] as used to discredit scholastic philosophy at a time when it still played a significant role in university education.”

If the question ever was asked, the point of it was probably practice on the distinction between having a location and occupying space at a location, which is actually very close to our modern distinction between intensive properties and extensive properties, which is to say that the frequent claim here that temperatures can’t be averaged (or models can’t be averaged) is closer to the question about angels than Dr Bob might be comfortable with.

Or to put it another way, mediaeval people might have been WRONG but they weren’t STUPID and any popular meme that says they were deserves close reinspection

I’d say the question is whether doing a mathematical operation for the purposes of statistical analysis is being done properly such that the operation has meaning. In a system with multiple properties, causes and effects, averaging certainly can be done but the calculated result may not only not mean what you think it means, it may mean nothing at all in describing reality. Existence exists and reality is what it is, embedded in existence.

v’, cdq:

Obviously these models are based on false assumptions.

Significant parameters are not considered.

Parameters of minor influence are overestimated.

Using these models is actually a risk.

Thank you Dr. Bob!

This reminds me of the recent election prediction fiasco a mere two months ago, when self-described “statisticians” formulated election prediction models based upon aggregating polling data from various sources and then by somehow mashing them altogether they were able to produce a result that said Hillary Clinton had a 98% probability of winning the election.

The oldest saying in the world of computer modeling is GIGO – garbage in, garbage out.

It seems that that is one of the immutable laws of nature.

Climate models aren’t data. Polling results aren’t data. Both are simply synthetic simulations of data. “Virtual data”, if you will.

Statistical calculations based upon either climate models or polling results still aren’t data-based. They’re all just simulations. None of them actually represents a representative random sample of an actual population.

Climate models are like Hollywood movies, they don’t make money for the group producing them because they are accurate, they make money for their group if they effectively scare you. In writing they call it embellishment. Al Gore understands this very well.

” “…no particular absolute global temperature provides a risk to society, it is the change in temperature compared to what we’ve been used to that matters”.

This Gavin Schmidt quotation is a bit bizarre. Taken to a conclusion it suggests that coming out of the last glacial maximum represented a risk to society. At a minimum it requires some justification, but the press are generally not capable of asking for such.

Then he forgot to consider being at the bottom of a stadial period when folks and crops in northern states tain’t thar no mo.

Here’s a particular absolute global temperature: 320K (= 47C). The idea that it would not provide a risk to society is crazy.

By that logic then no one should ever move from New York to Florida. The average yearly temperature in New York City is 55 F. The average yearly temperature in the Florida Keys is 77 F. That’s a 22 F difference millions of people have exposed themselves to by moving, and that has never been identified as a health hazard, has it? If an instantaneous change of 22 F is not harming people, how would 2 or 3 F over 30 to 50 years be harmful?

Florida is a good example:

– on the one hand ‘rising sealevels’

– on the other hand ‘population growth ‘ –

https://www.google.at/search?q=florida+population+growth+chart&oq=florida+population+growth+chart&aqs=chrome.

Should “.. there wheres…” actually be “…the wheres…”?

I’m still waiting for someone to explain why taking the average of “n” different, bad models yields a value we should care about.

Okay. Here’s an example to help.

— You are buying parts and stuff to make a rocket to send people to the moon.

— You need a guidance system.

— Vendor, AGW, Inc., gives you 73 different versions of its system.

— You test each version with the same set of parameters.

— All of AGW’s versions send your rocket to another planet (most, to Venus).

— You average AGW’s test results to be fair. That is, AGW isn’t likely as incompetent as its worst system, nor is it likely as competent as its best system.

— The average still ……….>———->”””””””@#!

sends your rocket to Venus.

Q: Did you need to average all those bad results?

A: No. You did it to make your argument for rejecting AGW more persuasive.

Q: Why would one NEED to be more persuasive, given those terrible results?

A: Going with Reality, Inc., is going to prevent some people from earning a lot of money. Your boss, an elected public official, is under a lot of pressure from AGW-friendly donors to his campaign (not right, but, that’s the way it is) to “just give them a chance — they have scientists saying their systems are very, very, close!”

So. Bottom line: The model results are averaged because human beings are not angels.

Absolutely love your analogy, Janice! A conspiracy of self interest is what they are engaged in. I’m pretty sure a lot of them know it. I am reading “The Hockey Stick Illusion” and I can’t believe Mann’s findings were due to incompetence. It’s just beyond that. For what they have done to human aspirations the world over, may they rot on Venus!

Thank you, SO MUCH, Mr. Harmsworth. 🙂 And, yes, unless MM is actually a robot, it was intentional.

Tide gauges worldwide show linear Sea Level rise, and they are biased towards areas experiencing subsidence, thus exaggerating reported rise. Satellite data is also linear. Trouble is: their directions (slopes) differ. Tectonically inert coastlines show a linear 1.1mm annual rise and are linear too. Guess which of those three you should rely upon!

Obviously, the coastline on which you live, as that is the only data from the area that will affect you.

Given the many, many, documented problems with ship based temperature readings, there is no excuse for using it at all.

I know that many warmists will whine that for most of our history, it’s the only data we have.

If so, that’s still no excuse for using it.

If no data exists, you aren’t allowed to just make up data.

Fixing bad data just takes already huge error bars, and makes them even bigger.

“…already huge error bars, and makes them even bigger.”

Just a pass through the error reduction filter, aaaand presto!

Wouldn’t competent scientists do a calibration check against the data on a few ships to establish whether there is any reasonable degree of accuracy to these measurements and what adjustment factor might be appropriate? Then again, what would incompetent scientists do? I guess we know that.

hysterical fact…Adjustments = Warmer

Bob,

You have chosen to compare absolute model temperatures with NOAA ERSST. But ERSST results are normally presented as anomalies, as is the more official NOAA SST. It’s not clear to me where you got the absolute data. Did you just add an offset to the anomaly average?

The question is important, because most of the discrepancies you are showing are in fact discrepancies of an offset, with a fairly constant difference between the curves.

Nick, as noted in the post, I used the KNMI Climate Explorer for data and model outputs. Both are presented in absolute form there.

Cheers

Bob,

So it sounds to me as if you used their grid data, as here, and integrated the absolute values. This is not exactly a NOAA official product. Simply integrating absolute values is not something they recommend.

Nick, as far as I know, with the exception of HADSST, every sea surface temperature dataset is prepared in absolute terms.

Bob,

“every sea surface temperature dataset is prepared in absolute terms”

Yes, it is, and has to be, in gridded form. But all the regular index makers average, not the absolute grid temperatures, but the anomalies. The reason is homogeneity. NOAA explains why that must be done. If you average absolutes, you get an answer that works only for the particular set of points you chose. Otherwise, your results may show an offset for no particular reason, and it seems to me that that is happening here.
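The homogeneity argument in the comment above can be shown with a toy case. This is a minimal sketch with made-up numbers, not ERSST.v4 data: two hypothetical sites with very different normals share one anomaly signal, and one site stops reporting in the last year. It says nothing about whether the effect is large on a regular, nearly complete SST grid, which is the point still in dispute in this thread.

```python
# Hypothetical two-site example: normals (long-term means) differ by site,
# but both sites share the same year-to-year anomaly. All values are made up.
normals = {"tropical": 28.0, "subpolar": 6.0}   # deg C
anomaly_by_year = [0.0, 0.1, 0.2, 0.3]           # shared signal, deg C


def mean(values):
    return sum(values) / len(values)


def average_absolute(year, sites):
    # Average the absolute temperatures of whichever sites reported.
    return mean([normals[s] + anomaly_by_year[year] for s in sites])


def average_anomaly(year, sites):
    # Subtract each site's own normal before averaging.
    return mean([(normals[s] + anomaly_by_year[year]) - normals[s] for s in sites])


all_sites = ["tropical", "subpolar"]
# In the final year the subpolar site stops reporting.
sites_by_year = [all_sites, all_sites, all_sites, ["tropical"]]

absolute_series = [average_absolute(y, s) for y, s in enumerate(sites_by_year)]
anomaly_series = [average_anomaly(y, s) for y, s in enumerate(sites_by_year)]

print("average of absolutes:", [round(v, 2) for v in absolute_series])
print("average of anomalies:", [round(v, 2) for v in anomaly_series])
```

In this sketch the average of absolutes jumps by about 11 deg C when the cold site drops out, while the average of anomalies keeps following the shared signal.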

Statistical concepts can be used here. With absolute data that might over or underestimate area wide actual absolute temperature, error bars would be helpful. Would this answer Nick’s objection?

If you are using anomalies you are assuming that you can decompose the absolute temperatures at time t and position p over an area into a linear combination of one part that is location specific and invariant in time over a defined time period (the base temp) and another that is time specific but invariant over the locations (the anomaly). Various ways can be used to estimate the weightings given each in scaling up to give an estimate of average temp across a region, the simplest being some form of weighting based on the extent each point is deemed to be representative of the region.

The temp series that gets reported is just the anomaly, but the absolute temp can still be recreated by adding back in a term that reflects the base temps across the area of interest. These will be known, but I’m not aware of them being published. These are Nick’s offsets, and depending on the area these will differ.

Now the models happily model absolute temps at the resolutions they work at. Anomalies are derived. So we can reasonably make the comparison between these and anomalies from the temp record. The trends should be the same (which they aren’t).

Moving to the offset/base temp issue, we do know that at a global level an absolute ave temp of 14C at some recent date is accepted, and therefore we know what the base temp is at the global level. It is unclear what adjustment is being applied at each sub-global level, but the above results don’t appear consistent between models and actuals.

HAS,

“we do know that at a global level an absolute ave temp of 14C at some recent date is accepted”

We don’t really. GISS says

That is a pretty unenthusiastic acceptance.

” These will be known, but I’m not aware of them being published.”

They shouldn’t be. Unfortunately, NOAA is not unwavering here. The point is that anomalies are homogeneous enough to be spatially averaged at the resolution available. Adequately spatially sampled. The normals are not, and so any average is only valid for that station set for that month. And it’s different every month. That’s why NOAA, in foolishly calculating an absolute average for USHCN (it was pushed), made sure all missing values were infilled before averaging.

But the bottom line here is that the NOAA ERSST figures quoted by Bob are numbers that NOAA did not calculate, and for good reason. They are numbers that Bob calculated.

Nick, IPCC AR5 WG1 seemed pretty comfortable with giving that value.

Nick, in response to your initial comment on this thread:

You wrote, “The question is important, because most of the discrepancies you are showing are in fact discrepancies of an offset, with a fairly constant difference between the curves.”

Apparently, you didn’t study the curves or their offsets very well or read the text of the post. There is no “fairly constant difference between the curves”. The differences between the modeled and observed 30-year average surface temperatures are listed below the x-axes on each graph. They vary from about +0.9 deg C (models warmer than data) for the tropical oceans, to +0.6 deg C for the extratropical Southern Hemisphere, to -0.5 deg C (models cooler than data) for the extratropical Northern Hemisphere. How is that “fairly constant difference between the curves”?

Then there are the individual ocean basins: the “offsets” for them vary from about +1.0 deg C for the South Atlantic to less than +0.1 deg C for the North Pacific. How is that “fairly constant difference between the curves”?

It looks to me as though your initial complaint is invalid, which undermines any value of the remainder of your replies to me on this thread.

Regardless, you later wrote, “So it sounds to me as if you used their grid data, as here, and integrated the absolute values. This is not exactly a NOAA official product. Simply integrating absolute values is not something they recommend.”

Not something they recommend? Any discussion on that webpage you linked…

https://www.ncdc.noaa.gov/monitoring-references/faq/anomalies.php

…regarding their preference for anomalies has to do with land surface, not sea surface, temperatures, which is why their land surface temperature data and consequently their combined land+ocean data are presented as anomalies. Yet their SST data ARE available in absolute terms.

Cheers.

PS: Yes, the SST data suppliers start with absolute values, convert to anomalies for their adjustments and then convert back to absolutes using a climatology.

Bob,

“Apparently, you didn’t study the curves or their offsets very well or read the text of the post.”

I didn’t mean constant between graphs; that would actually be manageable. I meant constant within graphs. In each there is a fairly constant offset between curves. And that is what you expect from direct integration. The temperature consists of normal+anomaly. The anomalies integrate properly, but the normals give an unpredictable outcome, which leads to apparently random offsets for the time period of the graph. That is why it isn’t done.

“It looks to me as though your initial complaint is invalid”

No, you misunderstood it. But the real complaint is that ‘Data: NOAA ERSSTv4 “Pause Buster”‘ that you have graphed consists not of NOAA numbers, but NOAA data that you have subjected to a procedure which I think is faulty. But at least it should be properly described.

“which is why their land surface temperature data and consequently their combined land+ocean data are presented as anomalies. Yet their SST data ARE available in absolute terms.”

The reason they give for using anomalies relates to spatial averaging generally; they use land examples as being more familiar. The land data corresponding to ERSST is GHCN, and that is given in absolute terms.

“Yes, the SST data suppliers start with absolute values, convert to anomalies for their adjustments and then convert back to absolutes using a climatology.”

I don’t understand that, and don’t think it is true. But the key thing is that anomaly formation is done for averaging, and that is when it matters. Until you average, you can always make or unmake the anomaly just by subtracting or adding the normal. There would be no benefit (or harm) in doing this for adjustments, and I don’t think they do.

“Until you average, you can always make or unmake the anomaly just by subtracting or adding the normal.”

And create an absolute value that corresponds to doing the same transformation as doing the anomalies.

Just to be clear, my earlier point is as Bob Tisdale wrote above. The relationship between the models and actuals doesn’t appear consistent across the globe. Not in trend, not in offset.

I should add that while GISS opine that in 99.9% of cases anomalies will be an adequate simplification, if you want, as here, to compare absolute model temps to actuals you are in the 0.1%.

HAS,

“you want, as here, to compare absolute model temps to actuals you are in the 0.1%”

The usual and logical way is to convert the model temps to anomalies. Or you can resort to GISS’s unenthusiastic thought. What you can’t do is average absolute temperatures. If you compute a valid numeric integral expression of something which is an adequately resolved integral, then it will not matter if, for example, you shuffle integration points (keeping adequate resolution). That works for anomalies. But if you don’t have adequate resolution, you don’t have that anchor. And that is true for the normals. The sum may change over time, as stations come and go. And it will probably not compare with a whole different lot of stations, as may be present in models.

Now it’s true that here grids are regular and missing values are unusual. But the grids would have to correspond, and of course they may not. That shouldn’t matter, but would. And there are more subtle issues like whatever is done about sea/land boundaries.

Nick, I’m sure you know that anomalies are just a technique for representing the absolute temps that make a number of simplifying assumptions. They happen to be a transformation that partials out the local variation from a base climate (ultimately derived from absolute measures and no doubt subject to change as stations come and go) and it therefore depends on a second hidden (but known) averaging process for its accuracy. The combination of these two factors allows you to also produce an absolute temp for the region, that is in fact a de facto weighting schema, that should be consistent with the actual absolute measures even as they come and go.

I should add that claiming to be able to add anomalies reliably is contingent, something that ex-cathedra claims from the temp series producers shouldn’t be allowed to conceal.

Turning to why one may well be interested in the absolute temp output from the models, this is an important variable because bits of the medium of interest (sea and air) are non-linear particularly when it comes to energy dynamics, and in the case of air particularly the models don’t resolve to this level of detail.

So of course it makes sense to test how well the models compare with absolute temp reconstructions adding back in the base climatology assumptions from the latter. If you find inconsistencies, as has been shown here, one needs to do more work to find out where the failures are. We do know that the various models run at different temperatures (not tested here) so they are unreliable on that score, but if the GISS model is internally consistent then this suggests something wrong with NOAA’s base hidden climatologies.

“media” not “medium”

HAS,

” They happen to be a transformation that partials out the local variation from a base climate “

Let me put that mathematically. I don’t see that second dependence. As you may know, I compute a monthly global index, TempLS. It works from a linear model

Tₘₙ=Lₘ + Gₙ + εₘₙ (1)

where T is temperature of a particular month, say June, m is site number, G is a global function over years n, and ε are residuals. There is an extra dof, because you could add a constant to L and subtract from G, so there is the base condition

ΣcₙGₙ=0, Σcₙ=1

where the c’s are some set of coefficients representing mean over perhaps some 30 year period.

I (and now BEST) fit this by some weighted least squares, solving jointly for L and G. I use area weighting, as if for spatial integration; BEST complicates with quality weighting. There is an implied assumption of iid ε, which isn’t right, but that doesn’t affect the coefficients too much (though it does the variance).

What the indices do is solve by:

1. Get normals; take Σcₙ of (1) to get

Nₘ = ΣcₙTₘₙ = ΣcₙLₘ+Σcₙεₘₙ ≃ Lₘ

2. Subtract for anomalies

Aₘₙ = Tₘₙ-Nₘ = Gₙ + εₘₙ (2)

solve this for G

In both cases the actual solution involved spatial integration of residuals that are approx iid. There is no integration of L (or N) and no claim that it is possible.
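Steps 1 and 2 above can be traced on a small synthetic case. This is a minimal sketch under stated assumptions, not TempLS or any index maker's code: the site offsets, the global signal and the base-period coefficients are made up, residuals ε are set to zero, and sites are weighted equally rather than by area.

```python
# Synthetic data: 3 sites (m) and 6 years (n). All values are made up.
L_true = [25.0, 15.0, 5.0]                  # site offsets L_m, deg C
G_raw = [0.0, 0.1, 0.3, 0.2, 0.4, 0.5]      # global signal before centering
n_years = len(G_raw)

# Base condition: sum(c_n * G_n) = 0 with sum(c_n) = 1.
# Here c_n is uniform over the first four years (an arbitrary base period).
c = [0.25, 0.25, 0.25, 0.25, 0.0, 0.0]
base_mean = sum(cn * g for cn, g in zip(c, G_raw))
G_true = [g - base_mean for g in G_raw]

# Equation (1) with the residuals set to zero for clarity: T_mn = L_m + G_n
T = [[L_m + g for g in G_true] for L_m in L_true]

# Step 1: normals N_m = sum_n c_n * T_mn
N = [sum(cn * t for cn, t in zip(c, row)) for row in T]

# Step 2: anomalies A_mn = T_mn - N_m, then average over sites to estimate G_n
# (equal site weights here; a real index would use area weights).
A = [[t - n_m for t in row] for row, n_m in zip(T, N)]
G_est = [sum(A[m][n] for m in range(len(T))) / len(T) for n in range(n_years)]

print("normals N_m:", [round(v, 3) for v in N])
print("estimated G_n:", [round(v, 3) for v in G_est])
```

With zero residuals the normals reproduce L and the averaged anomalies reproduce G exactly; at no point is L itself averaged across sites.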

I have here a post showing what goes wrong when you try to average absolutes (and how you can show it to be so, and why).

Yes, and having done so you can use the parameters to give the corresponding absolute for the region/globe based on actuals. The comparison of that with the output from the models is interesting as I have noted. Unfortunately any mismatch doesn’t tell you if it is the model that is out or the integration estimates, but they seem to be far enough apart to suggest a need for more investigation of both.

Nick,

measured values are absolute values.

Whenever you need anomalies you have to calculate it in time.

But ALWAYS leave the original data untouched !

Thanks for the discussion here on the topic presented by Bob Tisdale –

conclusion is:

– 30 years of ‘climate modelling’ is for the gutter

– even worse: instead of improving the SOPs (Standard Operating Procedures),

– installed models flawed from the beginning were ‘bettered’ to the point that

– some data points to positive feedback with aberration over time where it’s never been experienced in the real world in real-time.

JW,

“Whenever you need anomalies you have to calculate it in time.

But ALWAYS leave the original data untouched !”

Did you read it? Anomalies are calculated in step 2. Original data is unchanged.

Anomaly form is presented because of two reasons:

1. Anomaly is a scary word and right away suggests that there is something wrong with perfectly normal values.

2. Showing anomalies hides the sheer discrepancy between the predicted/munged data and reality.

A question about margins of error: when you have, say, a million data points and each has errors, does having such a large number of points lessen the margin of error? Or could the errors be compounding each other and making the margin of error much larger? Another way of asking: does having a larger number of data points automatically decrease the margin of error?

Since each of those data points is measuring something different, either in time, space or both, then no. Multiple data points do not reduce the margin of error.

This goes double since the sources of error don’t follow a Gaussian distribution.
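Whether more readings help depends on what kind of error they carry. The following is a minimal simulation with made-up numbers, contrasting independent zero-mean errors with an error shared by every reading. It assumes every reading targets the same true value and that the random part is Gaussian, both of which the reply above disputes for real temperature data, so treat it as an illustration of the distinction only.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

TRUE_VALUE = 15.0      # hypothetical quantity being measured, deg C
N_READINGS = 100_000   # made-up sample size
RANDOM_SIGMA = 1.0     # independent, zero-mean error per reading, deg C
SHARED_BIAS = 0.5      # systematic error common to every reading, deg C


def mean(values):
    return sum(values) / len(values)


# Case 1: errors are independent and zero-mean.
random_only = [TRUE_VALUE + random.gauss(0.0, RANDOM_SIGMA)
               for _ in range(N_READINGS)]

# Case 2: the same kind of random error plus a bias shared by all readings.
with_bias = [TRUE_VALUE + SHARED_BIAS + random.gauss(0.0, RANDOM_SIGMA)
             for _ in range(N_READINGS)]

error_random_only = mean(random_only) - TRUE_VALUE
error_with_bias = mean(with_bias) - TRUE_VALUE

print(f"random errors only: mean is off by {error_random_only:+.3f}")
print(f"with shared bias:   mean is off by {error_with_bias:+.3f}")
```

In the first case the error of the mean shrinks roughly as 1/sqrt(N); in the second it stays near the shared bias however many readings are added.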

ty, in another location i was told merely having more data points decreases the margin of error, and knew that was simply not true.

If the average error of each reading is say +/-1ºC (and that is probably being generous)…

… then that is the approximate error of the final result.

Applied to the surface data, where AW’s surface station investigations have shown that errors even in the USA can be as much as +/- 5C, with only a few stations being +/- 1C (going from memory).

Then surface data is probably only accurate to +/- a couple of degrees, historically even worse, especially after GHCN, NOAA, GISS have done their bit to “fix” it.

You are assuming a symmetrical error. From my recollection the vast majority of the possible error modes were positive. So instead of +/-5C, it’s more like -1C to +9C.

Since it is impossible to be 100% sure that your adjustments are complete and completely accurate, any “adjustment” to the data MUST also increase the error bars. If the error bars don’t increase, then the researcher doing the adjustment is by definition a fraud.

Beyond that there are two rules of thumb for adjustments.

1) If your adjustment is less than the error bars for your adjustment, don’t do the adjustment. IE +0.1C +/- 1.0C is not worth doing.

2) If the signal you claim to have found is smaller than the adjustments you have made, then you have in reality found nothing.

Yep, I am almost certainly underestimating.

I like that you have allowed for “adjustments™™” 🙂

Ty all for the responses. I summarize: the claims being made of hottest year ever by HUNDREDTHS of a degree are total BS, because the margin of error is at a bare minimum plus or minus 1 full degree. They are using statistical NOISE to make claims they can’t possibly back with science.

Of course Bill – statistically insignificant. What is statistically far more unusual is that there have been so many “hottest” years in a row. Like nine of the ten or is it 14 of the last 15.

30 years of above-average temperatures and the new normal is rising.

Tony, weather depends on an energy imbalance. Therefore any short term averaged temperature will most likely be above or below an overall longer term average. On the chance it may be the same, a reasonable educated person would not expect that to continue. Further, given the nature of oceanic atmospheric teleconnected regimes, some of which continue for many more decades than 3, there will be long periods now and then of short term averages being consistently above or below a longer term average. It seems then that the present warming would not be unusual in the natural state. Yes?

This NOAA and NASA fabrication is like the last outburst from an Xmas whoopee cushion before it’s put in the bin.

A.P.’s quote from Gavin Schmidt:

” . . only 12% due to el Nino . .”

Is he scientifically positive that it wasn’t 13.1%?

Clearly Schmidt has “lost his cool.” It may be record breaking for him, but not the U.S., nor the last 2000 years or last 8000 years. Significant climate change has a very long time-constant and that is not the 30 years that NASA’s Schmidt and the IPCC use. Over 80% of our global warming and cooling are driven by our sun, which is currently in the most significant “minima” in over 120 years. The time delay due to the global response is on the order of 10 years, so the real data shows that we have entered the cooling period.

It has been well documented that El Nino and La Nina are tied directly to the solar activity as well as our winds and the high-pressure / low-pressure systems. Our clouds and amount of moisture in our atmosphere are also tied closely to our sun.

It would certainly be interesting to see how Schmidt came up with his 12% number. The relation to burning of oil, coal and gas as related to CO2 is probably less than this 12% number. What causes the balance?

Heh.

Dom: “This one is huge. Compare what Trenberth says here : http://fortcollinsteaparty.com/index.php/2009/10/10/dr-william-gray-and-dr-kevin-trenberth-debate-global-warming/ …while exactly at the same moment he was writing:

From: Kevin Trenberth

To: Michael Mann

Subject: Re: BBC U-turn on climate

Date: Mon, 12 Oct 2009 08:57:37 -0600

Cc: Stephen H Schneider, Myles Allen, peter stott, “Philip D. Jones”, Benjamin Santer, Tom Wigley, Thomas R Karl, Gavin Schmidt, James Hansen, Michael Oppenheimer

i recall driving cross country long ago and seeing signs every few hundred miles saying how far it was to RENO and the burma shave thing on the signs.

http://3.bp.blogspot.com/-JP6EY9vSh-I/UvjdvWEug7I/AAAAAAAAdGg/d01OvnEils0/s1600/Screenshot+2014-02-10+at+09.08.33.png

#(:))

TY Janice. Being a young hillbilly driving from Ky. to Travis AFB in Calif., I very much enjoyed seeing those signs along the way. Right after I wrote it, I recalled there were, as you just showed, multiple signs showing the message, and I had confused the Reno signs: they were Harrah’s hotel signs interspersed along the way, having nothing to do with the Burma-Shave signs.

Bill Taylor,

Thank YOU for serving in the United States Armed Forces. We are so grateful, all of us who truly love America. That was quite an adventure for a Kentucky “hillbilly.” You may not have felt very brave. But, you were.

With gratitude and respect,

Janice

P.S. Thanks for chatting, here. Much appreciated.

Odd that there is absolutely NO CO2 warming signal at all in either satellite data set.

In the satellite data ALL warming has come from EL Nino events.

I’m guessing the “Warming™” that Gavin is talking about, and the reason he knows what percentage it is, is because he and his mates CREATED it.

In USHCN, the “Adjustments™” have an R² = 0.99 correlation with CO2 increase.

That doesn’t happen by accident.

Bob, Thank you for your research and compilation and analysis of observed data. I believe, largely from your presentations of the observed data and discussion, as well as having read thousands of other climate change articles and discussion, that it’s our planet’s oceans that are the biggest players in climate warming and cooling, especially on short timescales.

Absolutely hollybirtwistle, the atmosphere is the tail the ocean wags.

+1!!! So true. I must add: atmospheric pressure drives temperature. Winds move the heat from the ocean surfaces to various parts of the land surfaces (where we mostly live). Hardly anything global or average about any of that, especially when the southern hemisphere is vastly more water than land compared to the northern hemisphere. No mountains either.

An interesting find.. apparently Europeans can’t use thermometers, so readings need massive adjustment.

http://themigrantmind.blogspot.com.au/2009/11/giss-says-european-thermometers-dont.html

And that is only to 2008.. and we know Hansen, Karl, Petersen, Schmidt, Muller et al have gone rampant with their fabrication and adjustment since then.

What?! Actual TEMPERATURES on a temperature graph?!?!

Someone help me understand why they model temperatures to get the average ? Is this just to get the figure they want ? Or was my fifth grade math teacher wrong when she taught me that to work out an average temp for one month you add up all temps for the month then divide by number of days in the month !

Lack of measurements for much of the world.

Since when is climate just weather averaged over 30 years? Who made such a stupid statement? What a crock of horse patooties. Climate is the long term balance between energy in and energy out. There are so many buffering systems and state changes for water and ice that both consume and store energy, 30 years seems a painfully insignificant period with which to determine “climate.” I might go for 500s or even better, one thousand. But not 30. That’s bureaucratic nonsense.

That is what the term “climate” means: the average weather stats from the previous 30 years for a given area…the climate has no power, is not a force, and cant be called the “cause” of any weather event…….

Bill Taylor writes: “the climate has no power, is not a force, and cant be called the “cause” of any weather event…….”

In the short term sense, I agree with you, Bill. But there are root causes of climate shifts between glaciation and inter-glacial periods. Something has to turn ON “thousands of years of more snow falling on N. Hemisphere land during winter than can be melted during summer.” And something has to turn OFF “thousands of years of more snow falling on N. Hemisphere land during winter than can be melted during summer.” If someone thinks they can pin those changes on a hundred parts per million of CO2 in the atmosphere, 800 years too late, please bring it on. The burden of proof is on you, doomsday soothsayers. And not before you show me you understand the big picture, will I find any of your silly 30-year trends even slightly compelling. Let me help you with something. In statistics, you need a REPRESENTATIVE SAMPLE in order to make any meaningful comparisons. 30 years of anything in terms of climate simply will never be representative of the behavior of Earth’s climate.

But, on the remote chance any of you DOES manage to successfully explain the big shifts in climate, and your explanation stands the test of time, eventually one of you really should change that RealClimate.org page that tries to explain away the 1st 800 years of warming prior to CO2 increases in each end-of-glaciation cycle in the ice core record. Don’t forget that you need to worry about both sides of the curve, not just the warm-up side. If CO2 is at its peak when cool-down begins, well, that is a real bitch of a fact for your stupid CO2 drives climate change hypothesis, isn’t it?

OT, but Oz ABC is reporting Trump’s pick for the EPA job won’t touch the current carbon policy for now.

He is not confirmed yet. All speculation.

Past data has been molded by programmers who seem to be hockey fans.

Unfortunately, what else is new?

Molding it in “real time”?
