Guest essay by Eric Worrall

h/t Janice – This video dates back to July, so it might not be news for some viewers. But the video elucidates in clear and simple terms why climate model error is actually far worse than those pretty spreads provided by the IPCC. I thoroughly recommend watching the video to anyone keen to understand why climate models are so bad at prediction.

YAY!!!!!!

Oh, Eric, THANK YOU, so much, for posting this — Christmas started early (well, ho, ho, ho, with Trump’s win, it started very late November 8!).

Excellent lecture, Dr. Frank!

Best wishes getting it unstuck from that monster of a peer review situation!

Janice

#(:))

Janice – you need to write for this web space – I have your 10 year compendium so I know you can …

I have two or three pieces already and have vowed (I have outlines but little time) for several more this winter. The Green Blob will NOT go quietly; we must be loud and constant.

Best,

Jim

Thank you, Jim. That was so kind and GENEROUS of you to say that. If I have anything I think worthy of the attention of this truly distinguished group (the WUWTers), I will do that. Thank you for your encouragement!

And you, with your 2 or 3, GO FOR IT! 🙂

Green Mob

I’d like to second Bubba Cow’s sentiments. I always smile when I see your name in the Comments, and I know I will appreciate what you have submitted. Janice, you are one of the regulars here that I can count on to help me connect this or that group of dots. I can honestly say this is one of the more interesting campaign seasons I’ve seen in my decades, and the issue of politicized science–and Mr. Trump’s recognition of its importance–has just made the headlines, for once in my memory for the better. Fastening my seat belt for a bumpy ride; I can’t wait to see the next budget discussions unfold.

Anyway, cheers, and have a very Happy Thanksgiving!

Oh, Mr. Newkirk. Thank you, so much. That really boosted my spirits today. HAPPY THANKSGIVING to you, too. Janice 🙂

Gang-Green guys! Gangrene!

And BRILLIANT, Janice. AGAIN. You are on a roll! 😀

Janice Moore,

What about the Cubbies? When the Cubs won, I thought anything was possible.

Very happy about the Chicago Cubs’ win, too! (but, TRUMP trumped all, this year 🙂 )

Janice ==> Congrats, you have bullied Eric, at least, into posting the YouTube — which you had already included in yesterday’s comments.

I hope the moderator allows this here in reply, but — Thank-you Anthony for permitting my work here at WUWT. It does me honor.

Thank-you Eric for posting it, and Janice, thank-you Janice. Your support has been unflagging, good-hearted, and positive right from the start. Thank-you so much! 🙂

Thanks to everyone for your interest and comments. I’ll try to get to them all, but that’ll be mostly after work-hours. 🙂

Dr. Frank ==> It would be of great service to the readers here if you could supply a transcript of your lecture, with slides (which can be stills from the lecture video).

If a full transcript is not possible (I know I would not want to be asked to transcribe 42 minutes of lecture, even my own), maybe you can write out the main points as an essay with illustrations, or maybe you have a Powerpoint that accompanied your lecture, and you could combine that with commentary.

Most of the readers here are just that: readers rather than listeners or viewers.

I understand that you have had trouble getting papers published on this topic. If you have a paper you are willing to put into the public domain, it is quite possible that Dr. Judith Curry would post it — as a technical post – at Climate Etc. — which, frankly, is a more appropriate venue than WUWT for an in-depth technical discussion of the shortcoming of numeric climate models. Email me at my first name at the domain i4 decimal net and I’d be glad to send you Judith’s email address.

Moreover, if you are not a writer….not everyone is….I would be glad to whip whatever you can put down on [electronic] paper into a passable essay [which I have done for others in the past]. Same email address.

I will point out that you could have done this at any time in the past…Anthony has issued an open invitation to all for well-crafted essays not only on the topic of climate science, but on “News and commentary on puzzling things in life, nature, science, weather, climate change, technology, and recent news” (here).

You are so very welcome, Dr. Frank. Glad to do it. And I think Mr. Hansen is mistaken. Your lecture is exceptionally thorough, high-calibre, analysis. I doubt that anyone at WUWT needed to be “bullied” into publishing it. Reminded, yes. Forced, no. (as if I have the power…. wry smile) No, no forcings, lol, to post it just came naturally, I feel quite certain. 🙂

Hi Kip — thanks, you’ll find a presentation of the main findings of the model error analysis on WUWT here.

I also posted a discussion on WUWT of the truly incredible review comments I’ve received here.

The review comments post also discusses the problem that unique solutions are required for model expectation values to qualify as predictions. Climate models do not attain that standard.

Pat,

One thing that stuck out in the video was the remarkable improbability of the models aligning in the way they do, given the different inputs. I wonder though whether some viewers will appreciate the significance of this. It’s a bit like a dozen people leaving New York in different directions and randomly all turning up in Los Angeles exactly three days later. Either they had determined their destination and arrival time in advance or there was some incredible subliminal force drawing them to that conclusion.

Nick, do you mean how the model cloud errors so strongly correlate? If I understand you, then you’re right. The correlated error clearly shows a problem common to them all.

“Nick, do you mean how the model cloud errors so strongly correlate? If I understand you, then you’re right”

But the whole basis of your cloud error calculation is the differences between the models.

That isn’t correlated.

Nick writes

You can clearly see each model does its own thing with clouds. There is a systemic error that they all share, and it stems from the fact that clouds can’t be modeled because we don’t sufficiently understand them. I think it very likely that they all implement similar ad-hoc strategies to deal with them. Some have been tuned to be closer to “cloud reality” as far as overall coverage is concerned, some less so. The error, however, is shared.

I’m in agreement with your New York example, Nick. Though, my first mental image was of people from various New York City Environs, e.g. Bronx, Manhattan, Newark, Connecticut, Catskills, etc., leaving for their destination at the same time.

The chart of RMS errors appears to be misdirection.

In a 25 year retrodiction global average cloudiness calculation:

Is the RMS solely for the final calculated average or is it the sum of hourly, daily, weekly, monthly, annual averages of cloudiness?

Such a small standard error of deviation for global cloudiness averages over 25 years of calculations or measurements, appears rather disingenuous; for surely there must be days/weeks where the error is much higher, if not 100%.

A GCM 25 year run, that bases an error rate solely upon the final determination, implies we should all ignore 24.9 years of the GCM run.

As the actual GCM model runs demonstrate so well, errors accumulate; even when calculated in quadrature, the result is a sum. Every module, every formula run has its possibility of error and error summation that should be carried forward to the end. An end is not after 25 years, but every determination point reached by the program during processing; rainfall, temperature points, humidity, cloudiness, winds, fronts, SST, etc.

Nick Stokes, “But the whole basis of your cloud error calculation is the differences between the models.”

No. The basis of the cloud error calculation is the difference between the simulated cloud cover and the observed cloud cover.

“The basis of the cloud error calculation is the difference between the simulated cloud cover and the observed cloud cover.”

The models are on both sides of the observed, so the errors clearly aren’t correlated. But in fact, it’s the model differences that you actually know. The uncertainty in observed is much greater.

Lauer and Hamilton Table 4:

That is 10% in CA (cover), which means the uncertainty on observed is as large as the total range of the CMIP5 numbers. And the SCF/LCF, which is the actual TOA flux, has an uncertainty on observation of 5-10 W/m2. That’s more than the discrepancy of 4 W/m2 you are quoting for GCM “error”. The errors you are attributing to GCM’s are less than the uncertainty of the observations you are comparing with.

Nick Stokes, “The models are on both sides of the observed, so the errors clearly aren’t correlated.”

Not correct. Model cloud errors are correlated. The 20th slide shows the error correlation matrix. Of 66 inter-model pairs, 58 show correlation R≥0.5.

You’re right about the uncertainties in the observations, Nick. I didn’t include them because I wanted a focus on model error alone.

Including observational uncertainty doesn’t help your case at all, though. We’re interested in the uncertainty in a projection. The total uncertainty entering into any given projection would be the simulation uncertainty relative to observations added in quadrature to the observational uncertainty against which the model calibration simulations are measured.

So, taking the average of your 5-10 W/m^2 uncertainty in observations as representative, the total uncertainty in the tropospheric thermal energy flux in every simulation would be sqrt(4^2 + 7.5^2) = ±8.5 W/m^2. That value should then be propagated through a projection.
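For anyone who wants to check that arithmetic, here is a minimal Python sketch of the quadrature sum. The function name `combine_in_quadrature` is made up for illustration, and 7.5 W/m^2 is simply the midpoint of the 5-10 W/m^2 range quoted above.

```python
import math

def combine_in_quadrature(*uncertainties):
    """Root-sum-square of the given uncertainty magnitudes (hypothetical helper)."""
    return math.sqrt(sum(u * u for u in uncertainties))

simulation_u = 4.0    # W/m^2, model cloud forcing error quoted in the thread
observation_u = 7.5   # W/m^2, assumed midpoint of the quoted 5-10 W/m^2 range
total_u = combine_in_quadrature(simulation_u, observation_u)
print(f"total uncertainty: +/-{total_u:.1f} W/m^2")
```

Swapping in 5 or 10 W/m^2 for the observational term shows how sensitive the total is to which end of the quoted range is used.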

The uncertainty bars expand accordingly. You’ve made the situation worse for yourself.

These are only cloud uncertainties, of course. Total energy flux errors of GCMs are easily an order of magnitude greater. A full accounting of uncertainty propagated through a projection would produce sky-high uncertainty bars.

Pat,

“The total uncertainty entering into any given projection would be the simulation uncertainty relative to observations added in quadrature to the observational uncertainty against which the model calibration simulations are measured.”

No, that is completely backward. The fact that a result is hard to observe does not detract from a model; it’s often the reason the model was used in the first place, eg in astrophysics or seismic modelling to locate oil. Models quantify what you can’t observe well. If you could, you would have less need of a model.

That is what is wrong with the logic you have here. The fact that cloud cover differs from observed means nothing, because the difference is less than the uncertainty of the observation. The fact that models differ among themselves is more significant, but just says that it’s one of the things models don’t do very well. There is no reason why a model might not predict temperature well, as Hansen’s did, even with uncertainty about cloud cover. The thing is, you have created a toy model which makes it an input, which it isn’t in GCMs. And then you claim that the error propagates as in your toy model.

Nick, “No, that is completely backward.”

No, that’s exactly the way uncertainty is calculated. In the physical sciences, anyway. Physical magnitude uncertainty and modeled magnitude uncertainty combine in quadrature to give the total uncertainty in the model expectation value.

You wrote, “Models quantify what you can’t observe well. If you could, you would have less need of a model.”

First, in the physical sciences, physical models make explicit falsifiable predictions, regardless of whether the observable is obvious or not. Observables test the model. That well-verified models are used to make useful predictions is beside that point.

Second, strongly verified falsifiable physical models provide the meaning of observables; the why of why oil is found here and not there; of why stars of certain masses have certain temperatures; of why n-butyl lithium is instantly pyrophoric in air. None of it is due to angels or demons. Physical theory provides the causal meaning.

You wrote, “The fact that cloud cover differs from observed means nothing, because the difference is less than the uncertainty of the observation.”

But the observation is what the models are trying to simulate, Nick. Their systematic difference from the observation puts an uncertainty in the model expectation value. The uncertainty in the observation puts an uncertainty in the model target.

The total uncertainty in the model expectation value is the combination of the two.

The simulation uncertainty is a property of the physically incomplete and uncertainly parameterized models. It would almost not matter what the target cloud cover was. The models would produce a similar simulation uncertainty.

This is demonstrated, for example, in the similar systematic model uncertainty produced in cloud cover when the target was the previously accepted value of 58% global average cloudiness. See the SI (892 kb pdf) of my Skeptic article for this example.

You wrote, “There is no reason why a model might not predict temperature well, as Hansen’s did, even with uncertainty about cloud cover.”

So you’re saying that despite not knowing the annual tropospheric thermal energy flux to within 2 orders of magnitude of the CO2 perturbation, the consequent air temperature change can be accurately modeled. Earth to Nick, over . . . . 🙂

Hansen’s model did not predict air temperature in any physically meaningful way.

You wrote, “The thing is, you have created a toy model which makes it an input, which it isn’t in GCMs.”

It’s not a “toy model.” It’s a GCM air temperature emulator. And it’s demonstrated to do a great job. GCMs do indeed include cloud uncertainty as an input, Nick, whenever uncertain cloud parameters are inserted. What isn’t given is the output uncertainty following directly from the input uncertainty. Seriously negligent oversight, anyone? How competent is that?

You wrote, “And then you claim that the error propagates as in your toy model.”

No. I claim uncertainty propagates as the models simulate. And GCMs are demonstrated to simulate air temperature as a linear extrapolation of forcing. Linear propagation of error follows rigorously from that.

I’m an uneducated simpleton from the heartland.

I visit this site every day.

I am personally responsible for about 25 tons of steel and 100 gallons of high solids baking enamel paint, every day. I pass out paychecks every Friday to the people that make that happen.

Our paint line runs at 60fpm, proud of what we do.

We get inspected twice a year, not by the local guys, they come from Atlanta

thanks for your work – and vote

You caught the essence, Nashville. It’s all about professional competence and integrity.

Way to go, Nashville. You are the real American engine!

If I recall correctly, his graphs had error bars +/- 15 C. That’s unreasonable because the planet has never seen temperature changes that big in the last five million years (link). I think we are looking at a statistical exercise which is at least as far removed from physical reality as the models themselves.

I agree that the models are crap. I’m not sure this exercise actually proves that point.

Pat Frank is careful to distinguish the model error from what is physically possible – the +/- 15C is evidence the models are rubbish, not evidence that global warming could be +15C.

Bob, this is just saying that, given the methods used in the models, results of +15, -15 degrees and everything in between are equally likely to be true; that is all we can infer from them. The nice colored lines in the middle are not the only results.

I thought the error bars usually indicate the 2 sigma spread.

Hi, Bob (c.),

Watch the video around 16:50 and you will see that Dr. Frank shows that average cloud error (of models) is + or – 140%.

Then, from around 26:40 on, you will see that Dr. Frank is discussing the GCM annual thermal cloud cover uncertainty (error) propagation.

Try watching the whole video — it really is worth the time!

Janice

That just about describes the whole fiasco. The feedback, or in reality the damping, is far higher than they need to make the C in CAGW stick.

It proves mathematically that the models are useless. Period.

Not exactly; that applies to the models used by the IPCC to predict temperature evolution. If you want to understand how climate works from a physical point of view, models are needed.

Models are not useless, but you have to be very careful dealing with them, and at least test them the way this gentleman does. You should even torture them a bit.

No, as process models (tools to study weather/climate) they could very well be quite useful. That’s what they were originally designed for. But for the purpose of telling us what the climate could be in the future, they are indeed rubbish.

commieBob

If I can help, the errors that are being discussed are not those that might actually occur in nature. They are just telling us if you used this model to describe the world how far you could end up away from where the world was really at. It tells you how good your model is as a predictor.

I should add that it relies on the assumption that the simple linear model would still fit the GCMs with different theoretical assumptions about cloud behaviour, and that the error paths are each equally likely. These both seem unlikely given what we know about actual climate behaviour, as you point out.

HAS, the emulation model error does not say all paths are equally likely. It takes the average CMIP5 error and calculates an average uncertainty. Average error is not the same as “equally likely paths,” and does not constitute an assumption. It is representative, as are the uncertainty bars.

The uncertainty bars don’t tell us how far the GCM simulations could end up from reality. Instead, they show that CMIP5 simulations have no predictive content.

Finally, the model emulates how GCMs treat forcing. How GCMs treat net forcing would not depend on how models calculate clouds. Different theoretical assumptions about cloud behavior would just change the cloud error modulus of the GCMs. The structure and magnitude of their cloud error might change. This could impact the magnitude of the long wave cloud forcing error.

That, in turn, would affect the size of the uncertainty bars as the error is propagated step-wise through a simulation. One doubts, however, that the uncertainty would be much smaller or that there’d be any significant improvement in the predictive content.

CommieBob, your comment illustrates one of the very points Dr. Frank was making, which is that there is a very poor understanding of the difference between precision and accuracy. After many thousands of runs of a climate model you will probably get a nice distribution of variances from the mean forecast, but all you have is a pile of rubbish shaped like a normal distribution. He was very clear that the climate will not produce actual temperatures in the +/- 15 C range.

commieBob, the ±13 C in the head slide is an uncertainty statistic, not a physical temperature. It marks a kind of ignorance width.

The large uncertainty just says that after 100 years of model simulation error the accumulated ignorance is so large that the future simulated climate has no knowable correspondence with the true future physical climate.

Eric Worrall’s reply captures this idea. So does oeman50’s, although I wouldn’t want to defend that all temperatures between ±13 C are equally likely. 🙂 Better to say that the models give us no idea what the future temperature will be.

Mark W, believe it or not, those ±13 C uncertainty bars are 1 sigma. 🙂 They are the result of systematic error, of course, which means they’re not strictly statistically valid. But they do give a good indication of the reliability of the projection.

Listen carefully to the last part again. He specifically says that those error bars do not represent physically realizable temperatures, they represent a range that the truth must be within – somewhere. The whole point is that the error bars are so huge that the projected temperatures are nonsense.

Right on, Paul Blase. 🙂

I would assert that the error bars are so huge (and so far from reality) that the modeling approach is useless and hence nonsense. They should toss the current “model” because it fails the definition of “model”.

Agreed, Rocky. 🙂 Doesn’t mean they shouldn’t try to improve them. But that would require getting back to the hard gritty work of experimentally-driven, detail-oriented, reductionist science. No more video gaming.

I don’t really know what to make of this.

Parts of it I understood:

The reduction of these immense piles of moldy Fortran to close approximation by a simple linear function of GHG levels was lovely.

Also, he convinced me that the various Global Climate Models (GCMs) share major systematic errors w/r/t the modeling of clouds (which is completely unsurprising).

He was also persuasive in pointing out the critical difference between model precision and predictive accuracy.

However, it looked to me like he added the imprecisions of ten GCMs in quadrature to reach the conclusion that the ensemble projection was far less precise than any of the individual GCMs. It appeared that he neglected to divide by ten!

Surely not? What am I missing?

Ask Pat. I missed that, I’ll have to rewatch.

I understand what you are saying, if all the models share the same systemic error it’s difficult to see why the ensemble would be worse than individual models.

Be fascinating to see Pat’s explanation.

Well, if their errors were entirely independent, combining ten of them would yield a large improvement in precision, because you’d be adding 1/10 of each of their error margins in quadrature. (E.g., if each of the ten had an error margin of ±1 the ensemble error margin would be ±sqrt(10×(1/10)) = ±0.316.)

But if the errors were entirely systematic then combining them would yield no improvement in precision at all, just the simple average of the error margins of all ten.

Reality is certainly somewhere between those two extremes. My guess is that they’re more systematic than independent, but that’s just a guess.

Pat stated quite clearly (at least I thought it was clear) that the model errors were systemic. That was the point of the intra- and cross-model correlations and the probabilities of 10^-17 and 10^-5.

If the climate is modelled as the chaotic system that it is, then that doesn’t have to be true whether we are talking about accuracy or precision. The best we can do is look for attractors.

Taking the average works for linear time-invariant (LTI) systems. Time-invariant means the system behaves the same today as it did yesterday. Here’s an example of where that doesn’t work:

If I average the tests I do on Wednesday, the result might look accurate and precise. If I repeat the tests on Saturday and include those results in the data set, my supposed accuracy and precision have gone out the window.

commieBob, a central point is that I’m investigating climate model behavior, not the climate itself.

The emulation model shows that climate models merely linearly extrapolate forcing, to get air temperature. There’s no evident chaos in their temperature output. Therefore, direct linear propagation of error directly follows.

daveburton,

It is my understanding that when the same observer takes multiple readings with the same instrument, on the same measured parameter, the increase in precision is proportional to the square root of the number of readings. Violate any of those assumptions, and the relationship fails. That is, taking the average of a large quantity of random numbers does not justify reporting a mean with more precision than the number with the least number of significant figures. Furthermore, in an ensemble, logically there can only be one “best” result. Taking an average of it along with all the poor results should result in a less accurate representation of reality. The precision of an inaccurate value is of little value.

Just a nit, but GCM is an initialism for general circulation model, not global climate model.

Thanks, Mark. Sometimes I amaze myself with my own flubs. I managed that one despite having GCM in my own web site’s climate glossary. Impressive, eh?

Hehe.

Mark T,

Even Judith Curry makes that mistake.

Thank you, Clyde. You’ve just made me feel a lot better! 🙂

But I just noticed another flub. I had a typo in my 2nd comment.

±sqrt(10×(1/10))

should be

±sqrt(10×(1/10)²)

Sorry about that.
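A quick numerical check of the corrected expression, as a minimal Python sketch. The helper names are hypothetical, and the two functions cover only the two limiting cases described above: fully independent errors and fully shared (systematic) errors.

```python
import math

def ensemble_margin_independent(margins):
    """Error margin of the ensemble mean when member errors are independent."""
    n = len(margins)
    # Each member contributes 1/n of its margin, combined in quadrature.
    return math.sqrt(sum((m / n) ** 2 for m in margins))

def ensemble_margin_systematic(margins):
    """Error margin of the ensemble mean when member errors are fully shared."""
    # No cancellation: the result is the simple average of the margins.
    return sum(margins) / len(margins)

margins = [1.0] * 10  # ten models, each with an error margin of +/-1
independent = ensemble_margin_independent(margins)
systematic = ensemble_margin_systematic(margins)
print(f"independent: +/-{independent:.3f}")
print(f"systematic:  +/-{systematic:.3f}")
```

Anything between those two outputs corresponds to partially correlated errors, which this sketch does not attempt to model.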

He used the simple model and inserted the error into it to give himself a measure of how the GCMs would have performed across the theoretical error range. It saves on re-running the models adjusted for the theoretical error. It does, however, make some critical assumptions that I’ve noted above.

HAS, if by “theoretical error range” you mean a ‘perturbed physics’ study, or a ‘parameter sensitivity’ study, with variances relative to an ensemble mean, that’s not what the model shows. Those two sorts of studies only reveal model precision.

The uncertainty bars derived from cloud forcing error reflect the lack of predictive accuracy.

Dave ==> You see now one of the reasons that this “important” lecture, which was delivered to a conference of Doctors for Disaster Preparedness in July this year, did not make headlines in the climate skeptic blogosphere.

The blog meteoLCD did their homework and presented an essay with slides on it, and posted a corrected/revised version recently here.

The topic of the unreliability and uncertainty of climate models has been a major topic of discussion in the climate skeptic world, and recently covered several times, once by myself, at Judith Curry’s Climate Etc.

The “Climate Models Are NOT Simulating Earth’s Climate” series by Bob Tisdale and the Chaos and Climate series by myself, as well as more technical discussion of the details of climate models have taken place at Dr. Curry’s blog and here at WUWT.

In case you’ve missed it, it is common knowledge in the mathematics world, as well as fully acknowledged by the IPCC, that climate models cannot provide long-term prediction/projection of future climate states. They simply cannot.

One more lecture on the subject (or even one more blog essay, for that matter, even mine) is hardly earthshaking.

Kip, my study is the first ever to propagate error through an air temperature simulation, and to provide physically valid uncertainty limits. Bob’s presentation is acute, but he presents no error bars. Neither did you, in your essay.

There are a number of reasons why the seminar might “not make headlines in the climate skeptic blogosphere,” the simplest being that none of the principals knew of it. Other reasons might be less complimentary.

If it really is true that, “it is common knowledge in the mathematics world [and] fully acknowledged by the IPCC, that climate models cannot provide long-term prediction/projection of future climate states,” as you have it, then it must be that the IPCC is consciously lying about there being 95-100% probability that human GHG emissions are affecting the climate.

And that the IPCC has been playing that lying game ever since 1995, when Ben Santer injected his “discernible human influence” into the 1995 SAR. Is that what you’re implying?

We all know that the claim of a CO2 impact on climate relies 100% on the reliability of climate models. Mine is the first study to put a quantitative face on their unreliability.

It also puts the IPCC and its alarmist claims to bed as incompetent, and shows that every single air temperature modeling study since at least 1987 has been physically meaningless.

Is that “hardly earthshaking“?

Dr. Frank ==> Having read your two previous WUWT posts, and your comments here, I can see why you are having trouble getting your papers published and gaining an accepting audience with climate modelers.

Your approach seems to be that used by Sir Richard Francis Burton, English explorer of the 19th century — attack the living *bleep* out of anyone showing the slightest sign of opposition or disagreement. You don’t seem to be able even to get along with people who agree with you and offer their help. Note: This didn’t sit so well with his colleagues either.

You do seem to grasp the essence of the Climate Wars — both sides vehemently attacking the other, both blind to viewpoints of those who ought to be their colleagues — and when (unsurprisingly) these attacks don’t change the minds of their opponents, descend to name calling and denigration. You have already pointed out how well this has worked for you.

Good luck with your research and career.

Thanks, Kip. I’ll leave you to your opinion.

daveburton, the thermal cloud error is systematic, not random. Your second post picked up on that. The cloud error is heavily correlated across models, so it appears they’re making a common theory-bias error. That is, an error stemming from a mistake in the deployed theory common to all the tested models.

In the systematic error case, the step-wise errors across a given simulation combine into a root-sum-squared uncertainty.

The average of simulations then is subject to the root-mean-square of the uncertainties in the individual runs.

Randy Stubbings, you got it exactly right. 🙂

“In the systematic error case, the step-wise errors across a given simulation combine into a root-sum-squared uncertainty.”

There is so much bizarre wrong stuff here. Systematic errors just add, with sign. It is random errors, with cancellation, that accumulate with summed squares (variance).

We’re talking about the accumulated uncertainty, Nick, not accumulated error. Uncertainty accumulates as the rsse of each step in a simulation.

“We’re talking about the accumulated uncertainty, Nick, not accumulated error. “

Really bizarre. The statement says, again:

“In the systematic error case, the step-wise errors across a given simulation combine into a root-sum-squared uncertainty.”

So what measure of “uncertainty” makes it different? And what is the basis for sum-square accumulation?

Nick, the rss uncertainty follows from the fact that the projected temperatures are a linear sum.

The uncertainty is propagated using the CMIP5 average long wave cloud error statistic, ±4 W/m^2; entering it into the linear emulator as an uncertainty in the simulated tropospheric energy flux, of which CO2 forcing is a part.

” the rss uncertainty follows from the fact that the projected temperatures are a linear sum”

No. RSS would follow if the changes are random. Or possibly fluctuating in some other way with cancellation. But you say here:

“the thermal cloud error is systematic, not random.”

And that seems to be the theme of your talk.

The confusion is in your slide at 13:14. You do show the errors ε₂₀₀₁, ε₂₀₀₂ etc adding. But you say the year to year “uncertainty” adds via RSS. That makes no sense. If you have a systematic error in forcing, then yes, with your linear formula you can say that has maybe a 0.01°C effect in each year, additive. There is no associated “uncertainty” that adds differently. Even if you are uncertain about the 0.01, and maybe it should be 0.011, that doesn’t fluctuate year to year in a way that would justify RSS.
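The two accumulation rules being argued over can be put side by side in a minimal Python sketch. It assumes the same per-year magnitude every year (the 0.01°C figure used above as an example) and a 100-year run chosen only for illustration; the function names are hypothetical. It shows how the two rules differ in size, not which one applies to GCMs.

```python
import math

def accumulate_additive(per_step, steps):
    """Signed errors of constant size simply add: total = n * e."""
    return per_step * steps

def accumulate_rss(per_step, steps):
    """Root-sum-square of n equal terms: total = sqrt(n) * e."""
    return math.sqrt(steps * per_step ** 2)

per_year = 0.01  # deg C per year, the illustrative figure from the comment
years = 100      # assumed run length, for illustration only
additive_total = accumulate_additive(per_year, years)
rss_total = accumulate_rss(per_year, years)
print(f"additive: {additive_total:.2f} C, rss: {rss_total:.2f} C")
```

With equal steps the additive total grows in proportion to the number of years, while the root-sum-square total grows only with its square root.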

Nick writes

It does make sense. Ask yourself what it means in how the model works that there are demonstrable errors in clouds when compared to reality. And yet the model appears to behave…

Nick Stokes, “No. RSS would follow if the changes are random. Or possibly fluctuating in some other way with cancellation.”

RSS is how the uncertainty propagates through any sum, Nick. For z = a+b+c+…, then ±u_z = sqrt[(u_a)^2+(u_b)^2+ …], where “u” is ‘uncertainty in.’ GCM projected air temperatures are mere linear sums.

Nick, “The confusion is in your slide at 13:14. You do show the errors ε₂₀₀₁, ε₂₀₀₂ etc adding. But you say the year to year “uncertainty” adds via RSS. That makes no sense.”

It makes complete sense. Uncertainty is not error. Error adds with sign. Uncertainty adds as RSS. Uncertainty reflects the mistakes in the underlying physical model. Those mistakes in physics remain regardless of off-setting errors.

An answer of correct magnitude obtained because of off-setting errors tells you nothing about the physical system, and does not indicate that the physical model has predictive powers. Large uncertainty bars correctly inform that the result from off-setting errors has no physically meaningful content.
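The distinction drawn in this reply (error adds with sign, uncertainty adds as RSS, for z = a+b+c+…) can be sketched as follows. The error values are hypothetical, picked so that they offset exactly, and the helper names are illustrative only.

```python
import math

def signed_sum(errors):
    """Errors add with sign, so opposite errors can cancel."""
    return sum(errors)

def rss(uncertainties):
    """u_z = sqrt(u_a^2 + u_b^2 + ...) for z = a + b + ..."""
    return math.sqrt(sum(u ** 2 for u in uncertainties))

# Hypothetical off-setting errors, not values from the talk
errors = [0.3, -0.3, 0.4, -0.4]

net_error = signed_sum(errors)
u_total = rss(abs(e) for e in errors)
print(f"net error: {net_error:.3f}, RSS uncertainty: {u_total:.3f}")
```

In this made-up case the signed errors cancel to zero while the RSS figure stays well above zero, which is the behavior the reply is describing.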

He looked at one (!) source of error not accounted for by the models and demonstrated that the error produced by this one source alone (the failure to properly model cloud cover) is far greater than any predicted “greenhouse effect”, to the point where the models are useless. He also pointed out that for all of their complexity, the models boil down to one simple linear function, meaning that they do not and cannot actually be modeling the complex non-linear behavior of the real world.

“He also pointed out that for all of their complexity, the models boil down to one simple linear function, meaning that they do not and cannot actually be modeling the complex non-linear behavior of the real world.”

Well, that’s completely wrong. What he might have reasonably claimed is that the ensemble average surface temperature of a group of models progresses linearly. It’s clear from the graphs shown that that is not true for individual model runs.

But the notion that that is all they do is just absurd. They deal with the whole atmosphere. Winds, water vapor, radiation, heat transport. It isn’t just surface air temperature. And the fact that one derived ensemble average means “they do not and cannot actually be modeling the complex non-linear behavior of the real world” is just silly. I’ll show again, just one example of a GCM modelling lots of complex non-linear stuff and getting it right:

Nick writes

No. That video shows weather, not climate. Climate change is due to the gradual accumulation of energy by the earth. That’s what the GCMs must resolve. The video you keep presenting as evidence of them getting complexity right simply shows a whole lot of weather and has nothing to do with climate.

GCM energy-flux errors show that the models do not partition the energy correctly through the climate sub-systems, Nick. The video you posted shows impressively complex numerical modeling, but does not indicate any of the uncertainty in the result.

One of the hazards of modern science is that computer graphics make intuitively compelling displays. Your video is a case in point. How can something so pretty be wrong? And yet, the known large-scale flux errors of GCMs tell us that it must be so.

I recall Carl Wunsch pointing out that the ocean models do not converge. I doubt this problem has been resolved since then, and recall asking you on Steve McIntyre’s CA what the meaning was of the results from a non-converged model. I don’t believe you ever answered. The question applies equally well to your video.

Excellent presentation, well organized and easy to follow.

It saddens me that the climate community is so corrupt and are unwilling to consider other scientific concepts such as this.

Thanks, keep trying, there may be a new Sheriff in town to straighten things out, it will be a challenge though.

Thanks, Catcracking. We’ll see what happens.

video at 17:30 f “The average error in all of these climate models is 12.1 percent error in cloudiness…. The total excess greenhouse gas forcing since 1900 is 2.8 watts per square meter which means that the error in the thermal content of the atmosphere is larger than the total forcing of all of the greenhouse gases since 1900.”
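The comparison in that quote is simple arithmetic. As a rough sketch, taking the ±4 W/m^2 long-wave cloud forcing error cited elsewhere in this thread and the 2.8 W/m^2 forcing figure from the quote (variable names are illustrative):

```python
lwcf_error = 4.0   # W/m^2, CMIP5 long-wave cloud forcing error as cited in this thread
ghg_forcing = 2.8  # W/m^2, excess greenhouse gas forcing since 1900, from the quote

ratio = lwcf_error / ghg_forcing
print(f"error / forcing = {ratio:.2f}")
```

Whether those two quantities are directly comparable is the point argued elsewhere in this thread; the sketch only reproduces the ratio.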

Amazing, isn’t it, Mike? Strange, too, how the IPCC and the climate modelers somehow neglected to pass along this bit of information, isn’t it? 🙂

Pat

I remember your post about the temperature uncertainty and the real systematic uncertainty being in the order of degrees. Something that should be blindingly obvious to any technical person that if you don’t design your measurement equipment to measure to a certain resolution with uncertainty don’t expect it to suddenly read at a better resolution than that. And that if you aren’t maintaining what resolution and accuracy you do have with regular characterisation and calibration, don’t expect even that to be met.

I’ve had discussions on Bishop Hill about the SST adjustments and it’s the same story. Uncertainties are treated theoretically rather than realistically. My take on this is the same: I don’t mind science papers about this but I do mind if this is used to drive policy. Because once it is in the realm of policy then it needs to conform to safe use as do all other performance data sets. Or it comes with a massive disclaimer which makes its use moot.

mickyhcorbett75, I completely agree with your take on the matter of instrumental resolution, and the need for professional integrity in its application to policy. A lower limit of accuracy in the land-surface air temperature record is about ±0.5 C; all due to systematic measurement error.

Like you, I’ve looked at SST as well, and have found a couple of calibration studies that show even Argo floats show temperature errors also of about ±0.5 C. Sometimes more. The lab-calibrated accuracy of Argo sensors is at least an order of magnitude better than that, indicating a source of systematic error in the field (ocean surface) measurements.

I’ve also contacted researchers about this problem. They’re resistant to it, and they’ve expressed confidence that the Central Limit Theorem plus the Law of Large Numbers reduce all that error to zero. As with you, to me, that confidence is completely unwarranted.

The models (the GCMs) are junk.

They predict a tropospheric hot spot. Not found.

The cloud forcing errors prove the CO2 hypothesis is JUNK science.

All models are wrong. Some models are useful. The hotspot hypothesis is pure rubbish as it ignores reality of thermal mechanics.

Odd that a few of the loudest CAGW acolytes hold John Tyndall’s work on high yet have never read his full conclusion of water vapor being the dominant polyatomic gas species responsible for atmospheric insulation.

Climate (weather) 10,000,000- Climate modellers ( weather witchdoctors) No score!

Would really appreciate if Anthony (or Pat Frank or somebody) could please link to some of the more serious critiques of this work. I realize that Dr. Frank thinks the criticisms are rubbish, but would like to see them and a dialog about them if at all possible. Thanks for posting the very interesting video.

Hard to get serious criticism until he puts it in writing. There is no written account linked here – not even the slides.

Is a picture not worth a thousand words?

Facepalm. He has put it in writing. That is quite clear from the submissions to Journals that he stated he has made. As he currently has submissions in place, he probably does not want to self-publish. My understanding is journals would not accept his work if it was already splattered over the internet.

“Facepalm. He has put it in writing.”

Where? What use is that if we can’t read it? I’ll say it again – you can’t expect serious criticism unless it’s in writing. That means, unless people can read it.

Nick

There are 5 points in the final slide. You have not addressed any of them. They are written loud and clear. Answer those questions and global warming scam will be back on track.

Nick

Strawmen! Respond to his critique. You make yourself look utterly stupid with remarks such as “put it in writing”.

Pat makes several very concise statements no longer than the average english sentence which you could sensibly critique.

Cloud libido 4w/m error bars are systemic not real etc

“Cloud libido …”

See! You need it in writing.

Cloud libido?? Interesting concept. So rain is what happens when clouds make out?

I think you possibly mean Albedo.

A good place to publish this work regardless of pending peer review would be http://arxiv.org – many scientists post their pre-prints there ahead of or during submission for regular publication, and this practice is accepted by journal publishers.

Nick Stokes ==> You are right, of course, we quite simply don’t really know what he has to say. I [almost] never watch long videos, they go too slow for me.

If and when Dr. Frank puts his work out where we can see it, word for word, we can take a look.

I was deeply offended by Janice Moore’s actions yesterday in her [successful] attempt to bully WUWT into posting the video. Lazy lazy lazy….if she was that impressed, she could/should have done the work.

The blog meteoLCD did [at least some of] their homework and presented an essay with slides on it, and posted a corrected/revised version recently here, but it is so short that it is not helpful.

His promoters should help him (Frank) get something published/posted somewhere — even if just at Curry’s — if they are sure he is on to something that others have overlooked.

Stokes,

“Hard to get serious criticism until he puts it in writing.”

I think that is a cop-out. You are essentially saying that there is no point in having science conferences with slides because no one can critique it unless the presenter also hands out a transcript. If you watched the video, and nothing jumped out at you that appeared to be an egregious error, then I think that we can at least tentatively conclude that it is a thesis that deserves serious consideration. If someone has speech-to-text conversion software it is relatively easy to provide a “written account.” However, as someone remarked, the graphs carry more information than any English sentences could, and they deserve to be responded to by someone such as yourself. You could try your classic ad hominem that “There is so much wrong here that I don’t know where to start!”

Nick, it’s a pretty clear description. Intermodel correlated cloud error. Expectation values linear with forcing. Linear propagation of error. What’s so mysterious?

“Linear propagation of error. What’s so mysterious?”

Well, for a start, why you take sum of squares if it is supposed to be systematic error. And secondly, why you feed it in as a proportional change to your toy “model of models”. That toy is at best empirical, and doesn’t predict response to such a change outside what it was based on. And it’s not at all clear that the variation in cloud cover translates into a proportional forcing change. The paper of Lauer and Hamilton that you cite as authority says:

“The problem of compensating biases in simulated cloud properties is not new and has been reported in previous studies. For example, Zhang et al. (2005) find that many CMIP3 models underestimate cloud amount while overestimating optically thick clouds, explaining why models simulate the ToA cloud forcing reasonably well while showing large biases in simulated LWP (Weare 2004).”

IOW, the disagreement can be in the description of the clouds rather than the forcing.

Nick, rss follows from the linear sum of projection delta-T. The CMIP5 long wave cloud forcing error enters the model as an uncertainty in tropospheric thermal energy content.

Call the emulator a “toy model” all you like, but it has reproduced the air temperature projection of every single climate model I’ve tested.

Lauer and Hamilton calculated and reported the average cloud error I used. As noted prior, I’m not averaging error. I’m propagating the annual average error statistic through the air temperature projection.

Kip, I don’t have any “promoters.” Janice has taken an interest in my work of her own accord. She recommended it to Anthony. That all seems very standard.

I don’t see how anyone could be “deeply offended” just because she drew attention to the work, and Eric decided to publish it. I very much doubt that Janice pressured Eric unwillingly into it. Or would want to. Your description as bullying seems to unfairly dishonor both Janice and Eric.

My DDP presentation pretty much included all the analysis, except for details of the statistical approach to error propagation and a mean free path argument concerning CO2 forcing. Enough is there for anyone to mount a critique.

As noted before, I’ve published elements of this analysis here at WUWT already, here, here, and an earlier analysis here. Not to mention the Skeptic article, which also appeared on WUWT.

I don’t recall many comments from the skeptical blogosphere community at any of them. Just now searching, I didn’t find your name among any of the comments, either.

“Lauer and Hamilton calculated and reported the average cloud error I used.”

They reported differences in cloud cover. You used that as differences in forcing. But as they pointed out, that’s not the same thing at all, and models with different cover still get forcing right.

There is a reason for that. The fluid flow solution process of GCM’s can’t model individual clouds, and they have to have separate models for that, which aren’t always consistent. But what they can do is calculate the mass of water in the region, and the temperature. So they get the right amount of condensed water. Some will describe that as diffuse clouds with high cover. Some will have less cover, but higher optical density. What Lauer and Hamilton are saying, in the part I quoted, is that both can give about the same effect on forcing. It doesn’t amount to a proportional difference in forcing, as you assume.

Nick writes

There is extreme irony in this statement when you understand that the “complex” models do the same.

Besides, that’s not the point of the simple model, which is to evaluate the errors, not predict climate.

Nick Stokes, “They reported differences in cloud cover. You used that as differences in forcing.”

They reported the CMIP5 annual average long-wave cloud forcing error of ±4 W/m^2. I used that.

A good running discussion can be found at realclimate where Drs. Frank and Browning discuss this with Dr. Schmidt. The discussion is about how errors propagate in the mathematical sense, as Frank discusses above, and how modellers use such items as constant adiabatic or hyperviscosity to contain the exponential increase in error. The best takeaway is that despite the claims, these models are engineering models not physics models. Unless one reads the actual works, one would think modellers consider the models as physics models. They don’t. However, as engineering models, there are requirements of V&V that it appears have been avoided. To get an idea of what these models can and can’t do, and of tests that should be performed but at last reading had not been done, I would suggest reading Tebaldi and Knutti (T&K). In essence, it may well take up to 120 years or more to tell if a 100 year prediction is correct (T&K). This poses real problems for the verification. The claim by modellers is that their assumptions should be accepted; Dr. Frank’s work is about why one should take that with a big grain of salt. The bottom line is that mapping X:Y is not the same as Y:X. There is only one independent run of temperature. Additionally, since the constraints used are often physics, temperature is not what can be solved for; rather, mass and enthalpy are. There are additional assumptions necessary to convert to a global temperature that also would need V&V, an added chance for error and its propagation if the assumptions are not well defined or initialized.

Boy, John, you put your finger right on the central issue. Quoting you, “despite the claims, these models are engineering models not physics models.” And you’re right, climate modelers, the IPCC, and all the consensus AGW people treat them as physics models when they assign physical meaning to their projections.

You’re right there’s been no V&V, and as I recall modelers themselves have derided the idea they should submit to it. Engineering models are valid within their empirical bounds. Climate projections extrapolate far, far beyond them. There’s zero reason to think they’re reliable.

S. Geiger, I posted about reviewer criticisms on WUWT here. There’s plenty of commentary below.

Anthony also let me post about the analysis itself on WUWT here, some time before the DDP presentation.

I also published a thoroughly peer-reviewed earlier version of the analysis in Skeptic magazine here. Caution, though, the air temperature uncertainties are plotted as variances in this article, rather than as the SDs. To compare with the ones here, the square roots must be taken.
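A minimal sketch of that conversion, using made-up variance values rather than numbers from the Skeptic article (the function name is illustrative):

```python
import math

def variance_to_sd(variances):
    """Convert plotted variances to standard deviations by taking square roots."""
    return [math.sqrt(v) for v in variances]

# Hypothetical variances in (degrees C)^2, for illustration only
example_variances = [4.0, 25.0, 196.0]

sds = variance_to_sd(example_variances)
print(sds)
```

The resulting standard deviations are in degrees C and are the quantities to compare with uncertainties quoted as ± values.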

http://www.realclimate.org/index.php/archives/2008/05/what-the-ipcc-models-really-say/#comment-88393

This post is orthogonal to the matter in hand. It doesn’t address systemic bias in the models.

At 13′, my reading is that plus and minus error cancellation took place in the models, instead of taking the square root of the sum of the errors squared to produce the uncertainty. This method avoided that cancellation.

It seems obvious that plus and minus deviations are a journey either side of a mean, should be summed and definitely should not be subject to cancellation.

I believe Dr. Frank DID take the square root of the sum of the errors (around 13:35). He said that random errors will cancel out. Theory error will not. Thus, the error here is with the theory.

That’s my understanding too, square rooting the errors removes the negative sign and shows a correct uncertainty range.

I like the end comment …..This should have been sorted out 25 years ago, where were the physicists then ?

Exactly. The propagation of errors was in the first undergrad curriculum as well. It’s massive, and I’m surprised it amounted to no more than 14° or 114%

Keith, I’ve been wondering that, too. Where are the physicists? That question remains a huge conundrum to me.

Dr. Frank, regarding Keith’s comment: if you remember, G. Browning with H. O. Kreiss published “Problems with different time scales for non linear partial differential equations”, and, with a modeller (I can’t remember the citation), peer reviewed work that shows the exponential increase in errors in climate models. At realclimate this is where you, Dr. Browning, and others took the models to task for using constant adiabatics or hyperviscosity in order to keep small non linear changes from propagating and causing the models to crash or come up with ridiculous output. Gavin defended himself well, but proved the models were really engineering models, and no one can justify X:Y, rather than Y:X, since it is an assumption that fails when trying to justify its use by comparing it with the work done on airplanes and other boundary value and initial condition non linear partial differential equations. Browning’s work was basically ignored, with modellers maintaining the assumptions were justified without providing verification, as I stated above.

John, your point about Browning and Kreiss being ignored is well-taken. Even though modelers ignored the problem, I continue to wonder why physicists have let them get away with it.

‘It seems obvious that plus and minus deviations are a journey either side of a mean, should be summed and definitely should not be subject to cancellation’

In other words, once presumed systematic error has been minimised, by careful measurement of original, untampered data, one adds the errors for each run of a model.

Some notes by a non-technically trained person (for what it’s worth)

Notes on Dr. Pat Frank’s “No Certain Doom…” video

7:35 — No published study shows uncertainties or errors propagated through a GCM projection.

10:23 — “Ensemble Average” – add all ten together and divide by ten.

10:48 – A straight line equation (Frank’s “model-model”) mimics ensemble average closely,

10:58 — i.e., GCM’s merely linearly extrapolate GHG forcing,

12:45 — i.e., you could duplicate the GCMs, run on supercomputers, with a hand calculator.

13:00 — Discusses total error propagation of GCMs over time.

13:35 — Calculating uncertainty (formula) – yields a measure of predictive reliability.

13:47 — Q: Do climate models make a relevant thermal error? Answer: Yes.

14:10 — Cloud modeling is highly uncertain (discussion).

14:27 — 25 years of global cloudiness data (satellite).

16:25 — Average cloud error ±140% (discussion of cloud error estimation — “lag-1 error autocorrelations are all at least 95% or more”).

18:35 — Essentially, with that large an error, you can’t know anything about cloud effect on climate using the models.

19:00 — The cloud error is not random; it is structural (i.e., there is systematic data that models are not explaining).

19:35 — Worse, not only is there error, ALL the models are making the same kind of error.

20:00 — The errors are not random errors. Structural coherence of cloud error shows models share a common faulty theory.

21:00 — How ±4 W/m^2 average theory error propagates in step-wise model projection of future climate (conventional time-marching method) – stepping out into 100 years in future; error is propagated out, step by step – THEORY error does not cancel out (random error does).

24:30 — Having calculated average thermal error of models, enter that error factor into the linear “model-model” (from the start of lecture, the one which accurately mimics all the GCMs) and use it to make a temperature projection.

25:02 — Result: BIG GREEN MESS (the error bars for future projections go right off the page).

25:42 — Error after 100 years: ±14 degrees; Error is 114 times larger than the variable. This does NOT mean (as many modelers mistakenly think) that the temperature could GET 14 deg. higher or lower – it means that the errors are beyond any physical possibility. That is, these are uncertainties, not temperatures.

26:46 — The error bars are larger than the temperature projection even from the FIRST year. The error is 114 times larger than the variable from the GET GO. Climate models cannot project reliably even ONE year out.

28:20 — James Hansen example: 1) as presented in 1988; 2) with error propagation (off the chart)

(Note: 29:00 The modelers never present their projections with a physically valid uncertainty shown – never.)

30:00 — That is, Hansen’s projections were meaningless.

32:19 Conclusions: What do climate models reveal about future average global temperature? Nothing

… about a human GHG fingerprint on the terrestrial climate? Nothing.

… “Have the courage to do nothing.”
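The “ensemble average” and straight-line steps in the notes above (10:23 and 10:48) can be sketched roughly as below. The runs are made-up numbers, and the line is an ordinary least-squares fit against time; it is not Dr. Frank’s emulator, whose formula is not given in these notes. All names are illustrative.

```python
def ensemble_average(runs):
    """Average several equal-length model runs point by point."""
    n = len(runs)
    return [sum(values) / n for values in zip(*runs)]

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical temperature anomalies (degrees C) from two made-up runs
years = [0, 1, 2, 3, 4]
runs = [
    [0.0, 0.3, 0.3, 0.8, 0.8],
    [0.0, 0.1, 0.5, 0.4, 0.8],
]

mean_run = ensemble_average(runs)
slope, intercept = fit_line(years, mean_run)
print(mean_run, slope, intercept)
```

The individual made-up runs wobble, but their average sits on a straight line here, which is the kind of behavior the notes attribute to the ensemble average.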

************************************************************

{For those who want to see a corroborating opinion on the reliability of the GCMs}

Dr. Judith Curry:

(Source: https://judithcurry.com/2016/11/12/climate-models-for-lawyers/#more-22472 — emphases mine )

This is a well known issue in non-climate related technical models. You have to know and understand the fundamental underlying phenomena, how they work and how they variously interact, in order to have any confidence that the integrated code is producing useful results. If you don’t understand a phenomenon very well, you might be able to substitute a “conservative” model, that makes sure that your widget won’t fail because you could not predict all the stresses or material properties.

You cannot do this when you are trying to do a “best estimate” calculation and the results have to be correct, not conservative. Only engineers who are building something can use conservative assumptions.

The biological response of the planet to the increase in [CO2] is another phenomenon that is not well known, much less well understood, characterized, and modeled. The climate modelers use very crude approximations with lots of dials and switches. And, it is my understanding that the model runs are not made continuously throughout an epoch, but instead are stopped and restarted with new initial conditions based on data to accommodate for model drift. And what is this “hyperviscosity” thing that they are using? Have they discovered a new type of fluid?

This is absolute madness.

rxc, I can only laugh in appreciation of your eloquent description of being so entirely nonplussed by the whole business. 🙂

“The biological response of the planet to the increase in [CO2] is another phenomenon that is not well known, much less well understood, characterized, and modeled.”

Pardon Me? The biological response to increases in atmospheric CO2 is very well known and demonstrated by numerous real world experiments (not models) and agricultural practices: atmospheric enrichment of CO2 produces enhanced growth of the biosphere… always. CO2 availability is the rate limiting step at the bottom of the food chain. CO2 is the chemical feed stock for life. No model required.

Richard G.: the systemic response is not well modeled. This is one of the feedback loops that in reality makes the whole environment “non-linear and chaotic”.

https://wattsupwiththat.com/2016/10/22/chaos-climate-part-4-an-attractive-idea/

Good job Janice! You’ve provoked ( or invited) a brisk debate; and I think you’re winning! Nothing but sour grapes grumbling from Nick and his munchkins. Keep up the good work. We’ll bring you water and work your corner if that’s all we can do!

Aw, John, (smile), thank you. You are very kind and too generous — I’m just the ticket selling lady out front who kept walking back to the manager’s office and insistently knocking on the door. I got yelled at, but — it was worth it! Yay! 🙂 Glad the WUWT science giants are on the job, here!

Wow, Janice! Your summary is perfect! 🙂 You hit every major high note.

I’m really impressed with your effort. Shoot, I really owe you one. What would you like for Christmas? 🙂

Oh, Pat, you already gave me the wealth of that free lecture/education (and a generous compliment, too!). That is gift enough. THANK YOU, so much. (and, though I saw someone else offer to help you with any writing projects, I also offer to you my proofreading/editing/writing, gratis — it would be an honor — my e mail address is…… ask a mod, heh)

Also, I would like to be able to post my “Virtual Advent Calendar” again on WUWT, but, things like Veteran’s Day, Thanksgiving Day, etc., are no longer mentioned officially here. 🙁 Too many hens with ruffled feathers if I did, though, so, I understand. It was amazing, as you can see from the summary of WUWT I did, how many were angry even at Anthony rejoicing over the Fourth of July! No wonder he stopped. I wish, though, that he would start up again (and just BE HIMSELF, his wonderful, patriotic, faith-filled, self).

And

HAPPY THANKSGIVING! #(:))

While you’re there, Janice.. if indeed you ARE there… I would like to wish YOU a very Happy Thanksgiving – and to all our American cousins across the pond, too.

I came back, Luc. 🙂 Thank you! Hope the trips to the dentist are done for awhile, now. Hope it went well there last week. Have a lovely evening over there. Janice

Thanks, Janice. Your good heart is a light in the world. Happy Thanksgiving to you, too. 🙂

Trump needs to see this before he flips.

Actually it doesn’t matter. As we all know, the world is on track for 1.5C this century but on Monday in Melbourne it was 20C hotter than the day before and every living thing died…including me…er…

“30:00 — That is, Hansen’s projections were meaningless.”

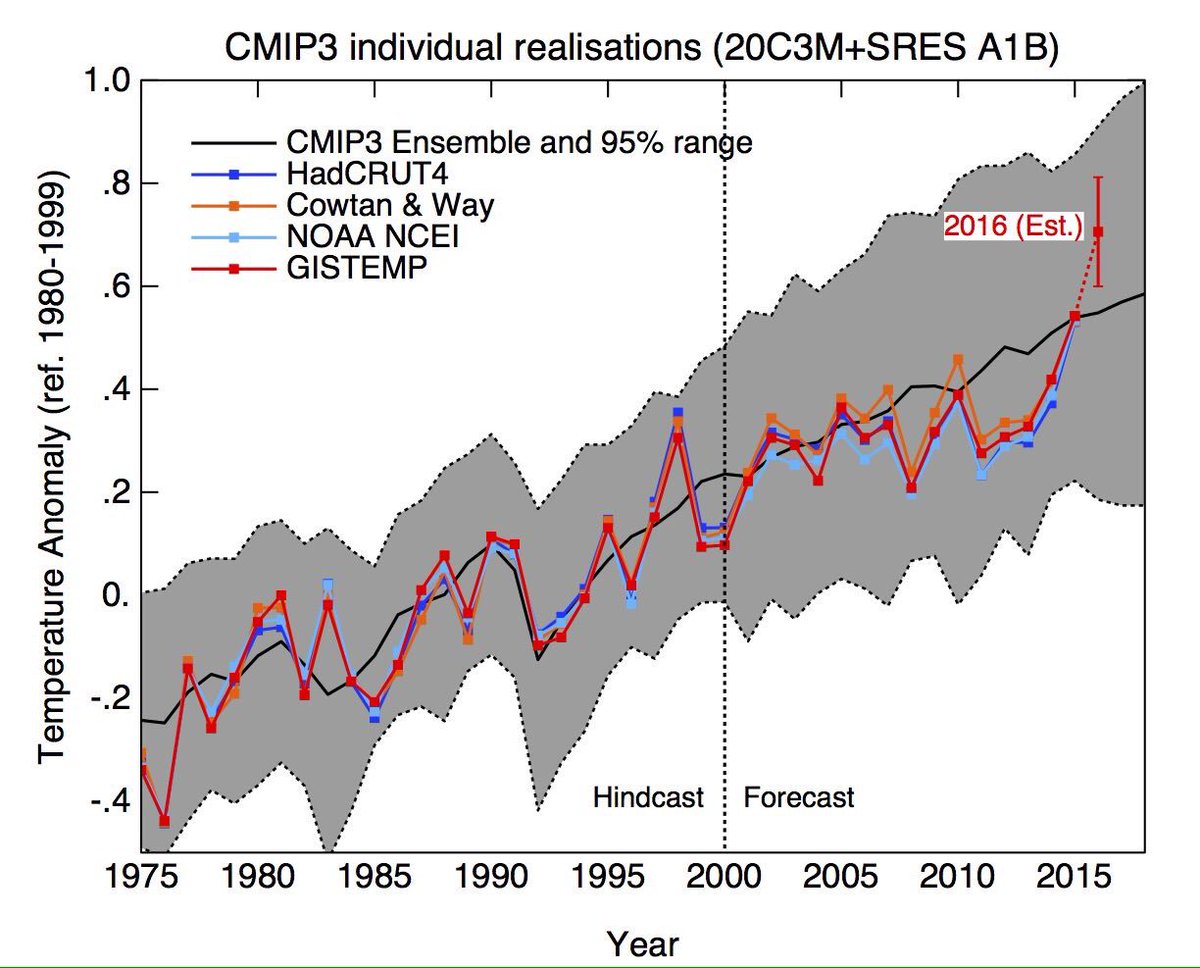

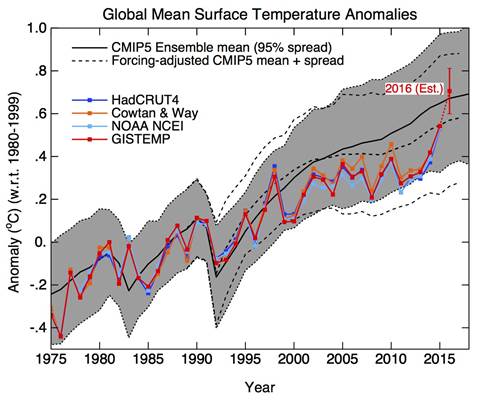

And that just isn’t true. I’ve updated the viewer here to show with latest data how Hansen’s predictions have stood up. I have superimposed in blue the index (Ts – met stations only) which is the one he was predicting. It is the index from Hansen and Lebedeff (1987). The scenarios A, B and C are showing. What has unfolded in GHG forcing is somewhere between B and C, closer to B. And GISS Ts has followed that closely. It lagged a bit around 2010, but has now overshot slightly, even exceeding Scenario A. I have shown the average of 2016 to end Oct; it will be a little lower by end year.

I have also shown the modern GISS version Ts+SST, IOW land/ocean, with SST, in brown. This has risen a little more slowly, and is now almost exactly on scen B. You can argue about whether it is tracking as closely as it should. But you certainly can’t say it is meaningless.

The ridiculously large errors claimed here are also refuted simply by the agreement of the various model projections. It isn’t perfect, but it isn’t the spread you would expect if the errors were 114 times the variable.

Nick writes

Then you don’t understand what the video is about.

OK, Tim, please do tell. I’m reading Janice:

“26:46 — The error bars are larger than the temperature projection even from the FIRST year. The error is 114 times larger than the variable from the GET GO. Climate models cannot project reliably even ONE year out.”

I didn’t measure it myself, but it did seem to be what was said (very hard to be sure if people won’t put stuff in writing).

Nick wonders

The analysis shows not that the models haven’t “projected” vaguely correctly, it can be argued that they have when you just look at the graphs…but it shows that they can’t have projected based on anything meaningful because the errors on clouds alone, when accumulated, wipe out any signal there may be.

So what’s left is a result of tuning. Which really means fitting against elements of climate that aren’t properly modeled. Clouds are an obvious one…

Nothing I can say will convince you of it, of course. But hey…one day Nick…

“but it shows that they can’t have projected based on anything meaningful”

No, the projection worked. It is meaningful. You’re saying that follows from tuning. If so, it’s still meaningful.

But it doesn’t follow from tuning. Frank’s error analysis is just wrong.

Nick writes

In what way? Where is his error?

(reposted – as I’d somehow put it below)

Oh and

You know better than that.

“You know better than that.”

Nonsense. It’s a simple proposition, and anyone can see. Hansen made a projection nearly 30 years ago, and it has stood up well. That is meaningful. It doesn’t matter if PF claims it’s impossible. It happened. And for my part, I think Hansen got it right for the right reasons.

I’ve responded to your other (duplicate) below.

Nick is making the undergrad error that Pat explains at the end. The difference between systemic errors and physical errors. Go back and listen to the questions Nick.

Nick writes below (but let’s bring it back up here)

But he showed the errors were systemic. There is no cancelling.

And

No. A fitted projection will vaguely work if we continue to warm but it has no actual meaning. An analogy might be that the stock market tends to overall increase in the long term perhaps due to more new and improving businesses but you can never project what it’ll be at any given point because whatever method of projection you use, it has no basis in reality.

Nick writes

Re-watch it. He said he takes the average error of the variances and uses that to calculate his +-4W.

You said: No, the projection worked. It is meaningful. [Tim is] saying that follows from tuning. If so, it’s still meaningful.

What I heard from that: “The numbers are close, so the GCMs must be meaningful; even if it takes tuning to do it, being close enough that tuning helps still makes them meaningful.”

I could say the same thing about “projections” of my local surface air temperature based on throwing at a dart board or rolling a 20-sided die, with “tuning” to account for seasonal variation, lat/long., etc. The fact that a large percentage of my “projections” & actual observed temperatures will be within a narrow distance of one another doesn’t mean I’ve found something meaningful about the relationship between dart-throwing and weather forecasts. It just means the two number sets happen to overlap — and that’s it.

Just so we’re clear, I’m NOT trying to compare the average GCM to a random d20 roll or throw of darts, per se, even facetiously; I AM trying to say that, based on Frank’s presentation, the current GCMs may be little more relevant than such ‘random’ number generators to the actual prediction/projection of future climate, despite their complexity. Judging from the presentation, one might even be persuaded to think it a strong probability at this point.

Put more simply, & in another way: creating a computer model which predicts that Alabama will beat Florida 61 – 48 (or vice versa; FL can win 70 to -2 for all I care) is all very well, and looks pretty impressive if the actual result ends up AL 59, FL 46… but what does it really mean if the two teams were actually playing basketball when the model programmers THOUGHT the two teams were playing football?

Stokes,

You said, “And for my part, I think Hansen got it right for the right reasons.” Your belief may be misguided. One of the greatest intellectual ‘sins’ is to be right for the wrong reason. It isn’t sufficient to just believe that Hansen got it right for the right reason. One might easily complain that Hansen’s prediction is no more than a spurious correlation. TTTM has suggested that Hansen’s predictions are the result of tuning, and not that the underlying physics are correct. I think that you have to demonstrate that TTTM is wrong and PF is wrong. So far, you haven’t presented what I would accept as a compelling argument for either.

Oh come on. Were the GCMs run in 1960? So amazingly they hindcast accurately when tuned to hindcast accurately. Pointless to show that part of the graph, and deliberately misleading. And a sharp spike probably due to a big El Nino now makes the GCMs accurate? If you can’t be honest about this, we have to assume the GCMs are not working. Otherwise why fudge and mislead?

“Were the GCMs run in 1960?”

No, Hansen’s GCM was run (for the 1988 paper) during years 1983 to 1987. But as usual, they ran for a long time on historic forcings to set the state for recent and present (1988).

There was a much criticised period from about 2000-2014 when the predictions seemed a bit warmer than observed. Now they are quite a bit cooler. That difference will reduce again. But the claim here is not that there are discrepancies, but that the Hansen projection is meaningless because of claimed huge errors. And that is clearly not so.

Exactly Nick:

Just the usual from the usual here.

“Post-truth” indeed, and if it wasn’t for you and Leif, and maybe me and a few others, we’d just have the fan-boys cheering on the “post-truthers” (viz. “non-technically trained person”) in the echo-chamber.

“(for what it’s worth)” . Worse than zero actually, when combined with ideological bias.

Toneb writes

And you didn’t understand the video either.

I do not know about the rest, but HadCRUT4 is only four years old (Morice et al., J. Geophys. Res. 2012). So it couldn’t have been used for hindcasting. And if HadCRUT4 was not used in hindcasting, it cannot be used to validate the model.

Funny how the hindcast predicts volcanic activity, yet it isn’t tuned to match the past.

Should probably use the actual base periods rather than recenter on 1990.

Nick writes

In what way? Where is his error?

As someone noted above, he adds variances but doesn’t divide by N. Actually I think the error there is more subtle. But he did a similar analysis here. That at least was in writing. It concerned surface indices, and again claimed that they were subject to huge errors. Basically he refused to acknowledge the effect of cancelling. But again, that flies in the face of common knowledge. SATs are not meaningless. People here are discussing them all the time. Different folks, including me, calculate them, and they agree. People here make all sorts of arguments about their meaning. Not always to good effect, but very few seem to think they have the kind of error overlay that Pat Frank asserts.

I’m certainly not going to give any credit to this very similar analysis until I see it in writing.

Nick, “As someone noted above, he adds variances but doesn’t divide by N.”

The propagated uncertainties are not averages, Nick. There’s no reason to divide by N.

Nick, “But he did a similar analysis here.” No, I did not. That was a different approach entirely, which in fact did involve taking averages. The problem for you there, is that the systematic error averages as rms, and does not diminish as 1/sqrtN.
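To make the distinction argued over in this exchange concrete, here is a minimal Python sketch. It is not taken from the talk or from either paper. It assumes the simplest possible form of systematic error, a fixed offset shared by every reading (`shared_offset`, a hypothetical name), on top of independent Gaussian noise, and compares how the error of an average behaves for N = 1 and N = 100.

```python
import random
import statistics


def rms_error_of_mean(n, sigma_random, shared_offset, trials=2000, seed=0):
    """RMS error of the mean of n readings, estimated over many simulated trials.

    Each reading is truth + shared_offset + independent Gaussian noise,
    with truth taken as 0. shared_offset is a hypothetical stand-in for a
    systematic error common to every reading (an assumption of this sketch).
    """
    rng = random.Random(seed)
    squared_errors = []
    for _ in range(trials):
        readings = [shared_offset + rng.gauss(0.0, sigma_random) for _ in range(n)]
        squared_errors.append(statistics.fmean(readings) ** 2)
    return statistics.fmean(squared_errors) ** 0.5


# Noise only (offset 0.0) versus noise plus a shared offset of 0.5.
random_only = {n: rms_error_of_mean(n, 1.0, 0.0) for n in (1, 100)}
with_offset = {n: rms_error_of_mean(n, 1.0, 0.5) for n in (1, 100)}

for n in (1, 100):
    print(n, round(random_only[n], 3), round(with_offset[n], 3))
```

Under these assumptions the noise-only column shrinks roughly as 1/sqrt(N), while the column with the shared offset levels off near the offset itself. Whether real sensor or model errors behave like a shared offset is exactly what is in dispute here, and the sketch does not settle that.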

“Basically he refused to acknowledge the effect of cancelling.” Wrong again, Nick. You completely failed to understand that limited instrumental resolution introduces errors into measurements. Those errors result in incorrect rounding. The measurement errors are then transmitted right into the average, proper rounding notwithstanding. It seems you never got that point.

You wrote, “Different folks, including me, calculate [SATs], and they agree.” Sure. They are the same data and all have the same systematic errors.

The “error overlay” I used has been reported in the literature from sensor calibration experiments, Nick. The systematic errors are known and known to be large. I’ve merely applied them to the global record, which no one else has seen fit to do.

You wrote, “I’m certainly not going to give any credit to this very similar analysis until I see it in writing.”

It’s not a similar analysis. It’s a different analysis.

Uncertainty in the SAT is rmse. Propagated uncertainty in air temperature projections is rsse. The analysis varies with the sort of data being assessed. They both deal with the large systematic errors contaminating the data. But that is beside the point you intend.
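For readers unfamiliar with the abbreviations, these are the textbook definitions, reading “rsse” as root-sum-square error (an assumption, since the comment does not spell it out). The symbols e_i (individual error terms) and N (their number) are introduced here only for illustration.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} e_i^{2}},
\qquad
\mathrm{RSSE} = \sqrt{\sum_{i=1}^{N} e_i^{2}} = \sqrt{N}\,\mathrm{RMSE}
```

If every term has the same magnitude u, the RMSE stays at u however large N is, while the root-sum-square grows as u√N.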

Nick, Hansen’s ‘prediction’ ignores the facts that disprove CAGW, that disprove AGW. If observations disprove a theory, that theory’s predictions are pointless.

Whether the planet will or will not warm or cool in the future has nothing to do with anthropogenic CO2 emission.

There is observational evidence that greenhouse gases cause no warming which is a paradox. A paradox is an observation that is not possible if a theory is correct.

There is a reason the cult of CAGW talks on and on and on about the warmest year in ‘recorded’ history. ‘Recorded’ history is the temperature in the last 150 years. There is a reason every CAGW ‘presentation’ is a monologue with restricted scope.

1) Latitudinal warming paradox (the corollary of the latitudinal warming paradox is the no tropical tropospheric hot spot paradox) i.e. Global warming is not global.

2) The earth has warmed and cooled in the past paradox

It is a fact that the earth cyclically warms and cools, with the majority of the warming occurring at high latitudes. It is also a fact that solar cycle changes correlate with past cyclic warming and cooling of the planet.

The warming that has occurred in the last 150 years is primarily high latitude warming.

As CO2 is evenly distributed in the atmosphere and as the greatest amount of long wave radiation emitted to space is at the equator, the greatest amount of ‘greenhouse’ gas warming should have occurred at the equator.

There is cyclic warming in the paleo record at both poles.

3) The lack of correlation in the paleo record

For example, there are periods of millions of years when atmospheric CO2 has been high and the planet is cold and vice versa. There is not even correlation in the paleo record.

http://wattsupwiththat.files.wordpress.com/2012/09/davis-and-taylor-wuwt-submission.pdf

I wonder what caused cyclic warming and cooling on the Greenland Ice sheet in the past? Curious that the periodicity (time between events: 1500 years with a beat of +/- 400 years) is the same for all warming and cooling events/cycles, including the massive ‘Heinrich’ events (same periodicity, same forcing function). It is also really weird that the warming and cooling periodicity is observed in both hemispheres.

Greenland ice temperature, last 11,000 years determined from ice core analysis, Richard Alley’s paper. William: As this graph indicates, the Greenland Ice data shows that there have been 9 warming and cooling periods in the last 11,000 years.

http://www.climate4you.com/images/GISP2%20TemperatureSince10700%20BP%20with%20CO2%20from%20EPICA%20DomeC.gif

oh my god, CO2 has a negative correlation with air temperature!!1!

I theorize that we will all freeze to death in 2100.

The entire scientific basis in the IPCC reports is incorrect. The IPCC reports are propaganda (see climategate emails). The IPCC reports for example did not include the long term paleo record (temperature last 11,000 years, which shows the planet has warmed and cooled cyclically, same high latitude warming, and the planet was roughly 1C to 1.5C warmer during this current interglacial).

1) Latitudinal temperature paradox (Strike 1)

The latitudinal temperature anomaly paradox is the fact that the latitudinal pattern of warming in the last 150 years does not match the pattern of warming that would occur if the recent increase in planetary temperature was caused by the CO2 mechanism.

The amount of CO2 gas forcing is theoretically logarithmically proportional to the increase in atmospheric CO2 times the amount of long wave radiation that is emitted to space prior to the increase. As gases are evenly distributed in the atmosphere the potential for warming due to the increase in atmospheric CO2 should be the same at all latitudes.

http://arxiv.org/ftp/arxiv/papers/0809/0809.0581.pdf

As the amount of warming is also proportional to amount of long wave radiation that is emitted to space prior to the increase in atmospheric CO2, the greatest amount of warming should have occurred at the equator.

There is in fact almost no warming in the tropical region of the planet. This observational fact supports the assertion that the majority of the warming in the last 50 years was not caused by the increase in atmospheric CO2.

http://www.eoearth.org/files/115701_115800/115741/620px-Radiation_balance.jpg

2) The planet cyclically warms and cools with the majority of the temperature change occurring at high latitudes. (See Greenland ice sheet temperature above last 11,000 years).

“2) The planet cyclically warms and cools with the majority of the temperature change occurring at high latitudes. (See Greenland ice sheet temperature above last 11,000 years).”

Err, it’s obviously escaped your notice, but Greenland is not “the planet”.

Neither is a high-latitude plateau with average temps of ~ -30C in any way a proxy for it.

And BTW: this is what it would look like updated with modern temps.

(The Alley graph ends in 1855 – before any modern warming began)

http://2.bp.blogspot.com/-hksiecM4u3Q/VLYC3ecYOKI/AAAAAAAAAP4/ZsJFpmrxgZo/s1600/GISP2%2BHADCRUT4CW%2BHolocene.png

“And BTW: this is what it would look like updated with modern temps …” (Toneb).

================================================

That is a ridiculous comment, the red trend line is at a much higher resolution than the Alley graph.

Besides, the peak around 1940 could not possibly be due to human CO2 emissions, which according to the US Carbon Dioxide Information Analysis Center (CDIAC) were relatively negligible prior to ~1950.

And besides, a highly smoothed time series graph should never be extended beyond its formal limits.

“That is a ridiculous comment, the red trend line is at a much higher resolution than the Alley graph.”

No, sorry the Alley graph does NOT show modern warming.

Anthony knows that, as it is in the “Disputed graphs” section here.

Now why didn’t you?

https://wattsupwiththat.com/2013/04/13/crowdsourcing-the-wuwt-paleoclimate-reference-page-disputed-graphs-alley-2000/

NB: That page is from April 2013, so add on the last 4 years of warming from GISS.

(~0.3C).

Christopher:

Could you please give me a solitary (as at a single geographic location) proxy that you would accept in the “hockey-stick”…… for the Globe.

Exactly.

This is a link to a graph that shows the amount of short wave radiation (sun light) that strikes the earth by latitude and the amount of long wave radiation that is emitted by the earth also by latitude.

The link I provided above to the same graph did not work.

http://www.physicalgeography.net/fundamentals/images/rad_balance_ERBE_1987.jpg

The correspondence doesn’t mean a physical thing, Nick. The underlying uncertainty is hidden from view. See the discussion of that point around Figure 1, here. None of those projections represent unique solutions (or tightly-bounded solution sets) to the problem of the climate energy-state.

You could also find the same correspondence using a family of polynomials. Would the one with the best correlation to the observations have physical meaning? That’s your analysis, isn’t it? Correlation = causation.

And in light of your certainty, what do you make of this statement of Hansen’s “Close agreement of observed temperature change with simulations for the most realistic climate forcing (scenario B) is accidental, given the large unforced variability in both model and real world.” in Hansen, et al., 2006 PNAS 103(39), 14288–14293; doi: 10.1073/pnas.0606291103

He seems to disagree with you.
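The point about a family of polynomials can be sketched in a few lines of Python. This is an illustration under stated assumptions, not anyone’s actual analysis: the “observations” are synthetic (a sine curve plus noise, chosen arbitrarily), and `polyfit`, `polyval` and `rss` are hypothetical helper names written for this example. It fits polynomials of degree 1 to 4 by least squares and records the in-sample residual sum of squares for each.

```python
import math
import random


def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients (lowest order first) via normal equations."""
    m = degree + 1
    # Augmented normal-equation matrix [A^T A | A^T y].
    aug = [
        [sum(x ** (i + j) for x in xs) for j in range(m)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(m)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, m):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, m + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution.
    coeffs = [0.0] * m
    for i in range(m - 1, -1, -1):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, m))
        coeffs[i] = (aug[i][m] - tail) / aug[i][i]
    return coeffs


def polyval(coeffs, x):
    """Evaluate a polynomial given coefficients in lowest-order-first form."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


def rss(coeffs, xs, ys):
    """Residual sum of squares of the fitted polynomial on the given points."""
    return sum((polyval(coeffs, x) - y) ** 2 for x, y in zip(xs, ys))


# Synthetic "observations": NOT climate data, just a smooth curve plus noise.
rng = random.Random(1)
xs = [i / 29 for i in range(30)]
ys = [math.sin(2 * x) + rng.gauss(0.0, 0.05) for x in xs]

in_sample = {d: rss(polyfit(xs, ys, d), xs, ys) for d in range(1, 5)}
for d, value in in_sample.items():
    print(d, round(value, 4))
```

The in-sample misfit can only go down as the degree goes up, whatever process actually generated the data, which is why agreement with past observations by itself says little about physical meaning. The sketch does not address agreement with data that arrived after a projection was made, which is the separate point raised in the reply below.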

“You could also find the same correspondence using a family of polynomials.”

You could, after the fact. But Hansen was predicting, not fitting polynomials. And he got it right.