Guest Post by Bob Tisdale

If you’ve read the first two posts in this series you might already believe you know the answer to the title question. Those two posts were:

- Do the Adjustments to Sea Surface Temperature Data Lower the Global Warming Rate? (WattsUpWithThat cross post is here.)

- UPDATED: Do the Adjustments to Land Surface Temperature Data Increase the Reported Global Warming Rate? (WattsUpWithThat cross post is here.)

In this post, we’ll compare “raw” global land+ocean surface temperature data and the end products available from Berkeley Earth, Cowtan and Way, NASA GISS, NOAA NCEI and UK Met Office.

END PRODUCTS

Berkeley Earth – This land+ocean dataset is made up of the infilled land surface air temperature data created by the Berkeley Earth team and their infilled version of the HADSST3 sea surface temperature product from the UK Met Office (UKMO). For their merged land+ocean product, Berkeley Earth also infills data missing from the polar oceans, anywhere sea ice exists. They accomplish this infilling two ways, creating separate datasets: First, using sea surface temperature data from adjacent ice-free oceans. Second, using land surface air temperature data from adjacent high-latitude land masses. For this post, we’re using the data with the land-based infilling of the polar oceans to agree with the Cowtan and Way and the GISS Land-Ocean Temperature Index, both of which rely on land-surface temperature data for infilling. The annual Berkeley Earth Land+Ocean data can be found here.

Cowtan and Way – The land+ocean surface temperature data from Cowtan and Way is an infilled version of the UKMO HADCRUT4 data. (Infilled by kriging.) As noted above, Cowtan and Way also infill areas of the polar oceans containing sea ice using land-based surface air temperature data. The annual Cowtan and Way data are here.

NASA GISS – The Land-Ocean Temperature Index (LOTI) from the Goddard Institute for Space Studies (GISS) is made up of GISS-adjusted GHCN data from NOAA for land surfaces and NOAA’s ERSST.v4 “pause buster” sea surface temperature data for the oceans, the latter of which has already been infilled by NOAA. GISS infills missing data for land surfaces by extending data up to 1200 km. GISS also masks sea surface temperature data in the polar oceans (anywhere sea ice has existed) and extends land surface air temperature data out over the polar oceans. The GISS LOTI data are here.

NOTES – For summaries of the oddities found in the new NOAA ERSST.v4 “pause-buster” sea surface temperature data see the posts:

- The Oddities in NOAA’s New “Pause-Buster” Sea Surface Temperature Product – An Overview of Past Posts

- On the Monumental Differences in Warming Rates between Global Sea Surface Temperature Datasets during the NOAA-Picked Global-Warming Hiatus Period of 2000 to 2014

Even though the changes to the ERSST reconstruction since 1998 cannot be justified by the night marine air temperature product that was used as a reference for bias adjustments (See comparison graph here), and even though NOAA appears to have manipulated the parameters (tuning knobs) in their sea surface temperature model to produce high warming rates (See the post here), GISS also switched to the new “pause-buster” NCEI ERSST.v4 sea surface temperature reconstruction with their July 2015 update. [End notes.]

NOAA NCEI – The NOAA Global (Land and Ocean) Surface Temperature Anomaly reconstruction is the product of the National Centers for Environmental Information (NCEI). NCEI merges their new “pause buster” Extended Reconstructed Sea Surface Temperature version 4 (ERSST.v4) (see notes above) with the new Global Historical Climatology Network-Monthly (GHCN-M) version 3.3.0 for land surface air temperatures. The ERSST.v4 sea surface temperature reconstruction infills grids without temperature samples in a given month. NCEI also infills land surface grids using statistical methods, but they do not infill over the polar oceans when sea ice exists. When sea ice exists, NCEI leaves a polar ocean grid blank. The source of the NCEI values is here. Click on the link to Anomalies and Index Data.

UK Met Office – The UK Met Office HADCRUT4 reconstruction merges CRUTEM4 land-surface air temperature product and the HadSST3 sea-surface temperature (SST) reconstruction. CRUTEM4 is the product of the combined efforts of the Met Office Hadley Centre and the Climatic Research Unit at the University of East Anglia. And HadSST3 is a product of the Hadley Centre. Unlike the other reconstructions, grids without temperature samples for a given month are not infilled in the HADCRUT4 product. That is, if a 5-deg latitude by 5-deg longitude grid does not have a temperature anomaly value in a given month, it is left blank. Blank grids are indirectly assigned the average values for their respective hemispheres before the hemispheric values are merged. The annual HADCRUT4 data are here, per the format here.
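As a rough illustration of what "blank grids are indirectly assigned the average values for their respective hemispheres" means in practice, here is a minimal Python sketch. It is not the Met Office's code. The function name hemispheric_merge, the use of NaN for blank grids and the cosine-of-latitude area weighting are all assumptions made for illustration.

```python
import numpy as np

def hemispheric_merge(anom, lats):
    """Global mean from a gridded anomaly field with blank (NaN) cells.

    anom: 2-D array (latitude, longitude) of anomalies, NaN where blank.
    lats: 1-D array of cell-centre latitudes in degrees.
    Hypothetical helper, written only to illustrate the idea in the text.
    """
    # Assumed area weighting: cell area is proportional to cos(latitude).
    weights = np.cos(np.deg2rad(lats))[:, None] * np.ones_like(anom)
    weights = np.where(np.isnan(anom), 0.0, weights)  # blank cells get no weight
    filled = np.nan_to_num(anom)                      # NaN -> 0, drops out of the sums

    def mean_for(rows):
        w = weights[rows]
        return (filled[rows] * w).sum() / w.sum()

    north = mean_for(lats > 0)
    south = mean_for(lats < 0)
    # Averaging the two hemispheric means is what implicitly gives each
    # blank cell the average of its own hemisphere.
    return 0.5 * (north + south)
```

With this approach, a hemisphere with many blank grids still counts for half of the global value, which is different from simply averaging all the non-blank grids on the globe.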

“RAW” DATA

For the “raw” global land+ocean surface temperature data, we’re using a weighted average of the global (90S-90N) ICOADS sea surface temperature data (71%) and the global “unadjusted” GHCN data from Zeke Hausfather (29%). (To confirm the percentage of Earth’s ocean area, see the NOAA webpage here.)
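The weighting described above can be sketched in a few lines of Python. The 71% and 29% weights come from this post; the function name blend_land_ocean and the array inputs are hypothetical, and the sketch assumes both series are already anomalies on the same time axis and base period.

```python
import numpy as np

OCEAN_FRACTION = 0.71  # weight applied to the ICOADS sea surface temperature data
LAND_FRACTION = 0.29   # weight applied to the "unadjusted" GHCN land data

def blend_land_ocean(sst_anom, land_anom):
    """Weighted average of ocean and land anomalies (hypothetical helper)."""
    sst = np.asarray(sst_anom, dtype=float)
    land = np.asarray(land_anom, dtype=float)
    return OCEAN_FRACTION * sst + LAND_FRACTION * land
```

For example, an ocean anomaly of 0.0 deg C and a land anomaly of 1.0 deg C in the same month would blend to 0.29 deg C.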

ICOADS – This is the source sea surface temperature data used by NOAA and UKMO for their sea surface temperature reconstructions. The source of the ICOADS data is the KNMI Climate Explorer. Also see the post Do the Adjustments to Sea Surface Temperature Data Lower the Global Warming Rate?

For the unadjusted land surface air temperature data, Zeke Hausfather (a member of the Berkeley Earth team) has graciously updated his monthly unadjusted global GHCN land surface temperature data through March 2016, using the current version of the GHCN data. (Thank you, Zeke.) See Zeke’s comment here on the cross post at WattsUpWithThat of the original land surface air temperature post. The link to that current version of the “raw” data is here. Also see the post UPDATED: Do the Adjustments to Land Surface Temperature Data Increase the Reported Global Warming Rate?

GENERAL NOTES

The WMO-preferred base years of 1981-2010 are used for anomalies for the ten comparison graphs.
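For readers who want to put a dataset on the same 1981-2010 base, a minimal Python sketch for annual data follows. The function name rebaseline is hypothetical. For monthly data one would normally subtract a separate 1981-2010 average for each calendar month, which this sketch does not do.

```python
import numpy as np

def rebaseline(years, values, start=1981, end=2010):
    """Shift annual anomalies so their mean over start..end is zero."""
    years = np.asarray(years)
    values = np.asarray(values, dtype=float)
    in_base = (years >= start) & (years <= end)
    # Subtracting a constant changes the reference level only, not the trend.
    return values - np.nanmean(values[in_base])
```

Because only a constant is subtracted, the choice of base years has no effect on the linear trends shown in the comparison graphs.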

We excluded the polar oceans in the post Do the Adjustments to Sea Surface Temperature Data Lower the Global Warming Rate? That is, we limited the latitudes to 60S-60N because the sea surface temperature reconstructions account for sea ice differently. We’re taking a different tack in this post. Because the suppliers of the end products handle sea ice differently (some infill data when and where sea ice exists, others don’t, and infilling is another form of adjustment), I’m including the polar oceans in the “raw” sea surface temperature product. That is, I’m using the data for the latitudes of 90S-90N and comparing that to the global end products.

For the “raw” data from ICOADS, if a 2.5-deg latitude by 2.5-deg longitude grid does not contain observations-based data, it is left blank. This means there are no temperature measurements in the polar oceans when sea ice exists…like the UKMO HADCRUT4 data and the NOAA/NCEI data.

In past posts I have mentioned one of the problems with infilling the temperature data for the polar oceans by extending land surface air temperature data out over sea ice. That problem: the method fails to consider that polar sea ice during the summer likely has a different albedo than surface station locations where snow has melted and exposed underlying land surfaces. That is, sea ice will tend to reflect sunlight while exposed land surfaces would absorb it. That problem is compounded in the Arctic Ocean when ice-free ocean exists between land and sea ice. The ice-free ocean has yet another albedo, which is not the same as the ice surface or the snow-free land mass. Those problems do not exist in winter when snow covers both sea ice and land surfaces and when the sea ice covers the Arctic Ocean to the shoreline, so the problem is seasonal.

Regardless of the season, any polar temperature data created by infilling is make-believe data.

Let’s start with the long-term data.

LONG-TERM TREND COMPARISON

This comparison starts in 1880 because the GISS and NOAA/NCEI data begin then. See Figure 1. Because the adjustments to the sea surface temperature data reduce the amount of warming during the early 20th Century, the “raw” data have the highest long-term warming rate. Or phrased differently, the adjustments have reduced the reported global warming since 1880.

Figure 1
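The warming rates quoted throughout this post are linear trends in deg C/decade. A minimal Python sketch of such a calculation follows, assuming an ordinary least-squares fit to annual anomalies. The fitting method is an assumption, and the function name trend_per_decade is hypothetical.

```python
import numpy as np

def trend_per_decade(years, anomalies, start, end):
    """Least-squares linear trend over start..end, in deg C per decade."""
    years = np.asarray(years, dtype=float)
    anomalies = np.asarray(anomalies, dtype=float)
    # Keep only the requested period and skip any missing values.
    keep = (years >= start) & (years <= end) & ~np.isnan(anomalies)
    slope_per_year = np.polyfit(years[keep], anomalies[keep], 1)[0]
    return 10.0 * slope_per_year
```

Calling trend_per_decade(years, anomalies, 1880, 2015) on each dataset would give numbers comparable to those listed on the long-term graph, with small differences possible if a different fitting method was used.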

Note: The excessive warming of the “raw” surface temperature data from the early 1900s to the mid-1940s (due to the sea surface temperature component) presented a problem for climate models. Most of the warming then was not caused by man-made greenhouse gases (according to the models), but the warming trend of the “raw” data from the early 1900s to the mid-1940s was much higher than their recent warming rates. For confirmation, see the graph of 30-year running trends here. The bias corrections for the data prior to 1940 reduced those problems for the models, but did not eliminate them. That is, the models still cannot explain the initial cooling of global sea surface temperatures from 1880 to about 1910, and, as a result, the models cannot explain the warming from about 1910 to the mid-1940s. [End note.]

TREND COMPARISONS FOR 1950 TO 2015

1950 was one of the mid-20th Century start points used by NOAA in their study Karl et al (2015) Possible artifacts of data biases in the recent global surface warming hiatus…the “pause buster” paper. As shown in Figure 2, for the period of 1950 to 2015, the GISS and NCEI data have noticeably higher warming rates than the other datasets. As you’ll recall, both GISS and NCEI use NOAA’s ERSST.v4 “pause-buster” sea surface temperature data, which have not been corrected for the 1945 discontinuity and trailing biases presented in Thompson et al. (2008) A large discontinuity in the mid-twentieth century in observed global-mean surface temperature. On the other hand, the other datasets (Berkeley Earth, Cowtan and Way, and HADCRUT4) use the UKMO HADSST3 data, which have been corrected for those mid-20th Century biases.

For a more-detailed discussion of NOAA’s failure to account for those biases with their ERSST.v4 “pause-buster” data, see the post Busting (or not) the mid-20th century global-warming hiatus, which was also cross posted at Judith Curry’s ClimateEtc here and at WattsUpWithThat here.

TREND COMPARISONS FOR 1975 TO 2015

1975 is a commonly used breakpoint for the transition between the mid-20th Century cooling or slowdown (depending on the dataset) and the recent warming period. Figure 3 compares the “raw” and “adjusted” global warming rates from 1975 to 2015. There is a 0.019 deg C/decade spread in the trends, with the Cowtan and Way data having the highest warming rate and the NOAA/NCEI data having the lowest…even lower than the “raw” data.

Figure 3

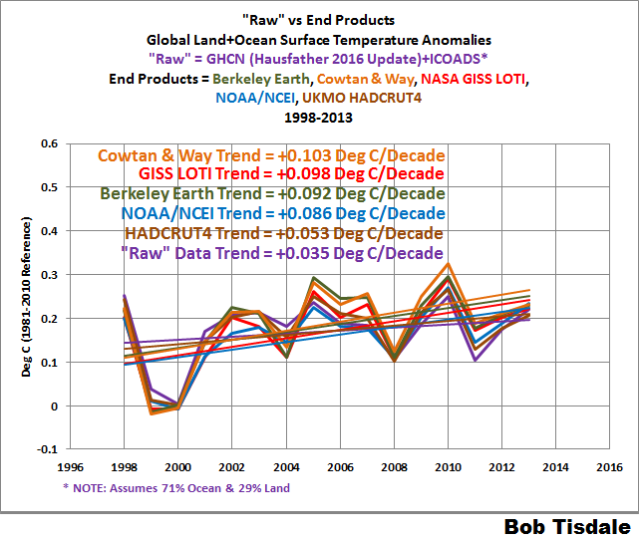

TREND COMPARISONS FOR 1998 TO 2015

Figure 4 compares the “raw” and “adjusted” global surface temperature anomalies starting in 1998, which is often used as the start year of the slowdown in global warming. The GISS LOTI and NOAA/NCEI data have the highest warming rate, a result of NOAA’s excessive tweaking of the parameters (tuning knobs) in the model that manufactures NOAA’s “pause buster” ERSST.v4 sea surface temperature data, creating a trend near the high end of the parametric uncertainty range. At the other end of the spectrum, the UKMO HADCRUT4 data has a trend that’s very similar to the “raw” data. Keep in mind that the HADSST3 sea surface temperature data (used in the HADCRUT4 combined land+ocean data) have been adjusted for ship-buoy biases.

Figure 4

Both the Berkeley Earth and the Cowtan and Way land+ocean data rely on and infill HADSST3 data for the ocean portion, yet the Cowtan and Way data have a noticeably higher warming rate than the Berkeley Earth data during this period. (Maybe Kevin Cowtan or Robert Way will stop by and explain that for us.)

As a reference, based on the model-mean of the climate models stored in the CMIP5 archive, which represents the consensus of the modeling groups, the expected warming rate during the period of 1998 to 2015 is 0.233 deg C/decade with the worst-case RCP8.5 scenario, and that’s about 0.1 deg C/decade to 0.13 deg C/decade higher than observed…thus my use of the term “slowdown”.

WAS THERE A “HIATUS” IN GLOBAL WARMING?

For this heading, I’m going to borrow and update the text from the post Do the Adjustments to Sea Surface Temperature Data Lower the Global Warming Rate?

Of course there was a hiatus, but the extent of the slowdown depends on the global land+ocean temperature dataset and the period to which the slowdown is compared. Figure 5 includes the “raw” and “adjusted” global land+ocean surface temperature anomalies for the period of 1998 to 2013. We ended the data in 2013, because:

- 2013 was an ENSO neutral year…that is, there was no El Niño or La Niña. (See NOAA’s Oceanic NINO Index here.)

- The Blob and the weak El Niño conditions were the primary causes of the naturally occurring uptick in global surface temperatures in 2014 and,

- The continuation of The Blob and the strong El Niño conditions were the primary causes of the naturally occurring uptick in global surface temperatures in 2015.

Figure 5

Note 1: To confirm the second and third bullet points, we discussed and illustrated the natural causes of the 2014 “record high” surface temperatures in General Discussion 2 of my free ebook On Global Warming and the Illusion of Control (25 MB). And we discussed the naturally caused reasons for the record highs in 2015 in General Discussion 3.

Note 2: Some may claim the start year of 1998 is cherry-picked because it’s an El Niño decay year. That’s easily countered by noting that the 1997/98 El Niño was followed by the 1998 to 2001 La Niña. (Once again, see NOAA’s Oceanic NINO Index here.) Also, 1998 was used as a start year by Karl et al. (2015) and the period of 1998 to 2013 is also one year longer than the period of 1998 to 2012 used by NOAA in that paper.

[End notes.]

Karl et al. (2015) also used a sleight of hand in their trend comparisons by using 1950 as the start year of the recent warming period. The IPCC did the same thing in their analyses of it in Chapter 9 of their Fifth Assessment Report (See their Box 9.2). Both groups referenced the hiatus warming rates to periods starting in 1950 or 1951. Why does that indicate they were using smoke and mirrors? The trends from those 1950 or 1951 start dates include the slowdown or cooling of global surfaces that occurred from the mid-1940s to about 1975. So let’s present the trends from the start of the recent warming period (1975) to the end of the 20th Century (1999). See Figure 6. As you’ll recall, the year 1999 was used by NOAA in Karl et al. (2015). (Again refer to Figure 1 from Karl et al. (2015).)

Figure 6

Only the UKMO’s HADCRUT4 data have a higher warming rate than the “raw” data during this period. Some readers might believe the other data suppliers have reduced the reported global warming during the period of 1975 to 1999 to suppress the extent of the slowdown that followed.

Now let’s compare the trends for the periods of 1975 to 1999 (Figure 6) and 1998 to 2013 (Figure 5). The slowdowns (1975-1999 trends minus 1998-2013 trends) are:

- HADCRUT4 slowdown = 0.137 deg C/decade (compared to +0.190 deg C/decade for 1975-1999)

- NOAA/NCEI slowdown = 0.087 deg C/decade (compared to +0.173 deg C/decade for 1975-1999)

- Berkeley Earth = 0.086 deg C/decade (compared to +0.178 deg C/decade for 1975-1999)

- GISS LOTI = 0.080 deg C/decade (compared to +0.178 deg C/decade for 1975-1999)

- Cowtan and Way = 0.079 deg C/decade (compared to +0.182 deg C/decade for 1975-1999)

Not too surprisingly, the “adjusted” dataset with no infilling (HADCRUT4) shows the greatest slowdown.

The “raw” data show a slowdown of about 0.148 deg C/decade (compared to +0.183 deg C/decade for 1975-1999), a slightly greater slowdown than the HADCRUT4 data.

And for those of you wondering about climate models, the model mean (the model consensus) of the CMIP5-archived models (with historic and RCP8.5 forcings) shows a higher warming rate (+0.223 deg C/decade) during 1998 to 2013 than during 1975 to 1999 (+0.154 deg C/decade). That is, according to the models, global warming should have accelerated in 1998 to 2013 compared to the period of 1975 to 1999. Instead, the data show a deceleration of global warming.
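Because each slowdown is defined as the 1975-1999 trend minus the 1998-2013 trend, the 1998-2013 trends can be recovered from the numbers quoted above. The short Python sketch below does that arithmetic. The input values are taken from this post; the "implied" trends are derived here rather than read from the graphs, so they are only as precise as the rounded inputs.

```python
# 1975-1999 trends and 1998-2013 slowdowns (deg C/decade), as quoted in the post.
trend_1975_1999 = {
    "HADCRUT4": 0.190, "NOAA/NCEI": 0.173, "Berkeley Earth": 0.178,
    "GISS LOTI": 0.178, "Cowtan and Way": 0.182, "raw": 0.183,
}
slowdown_1998_2013 = {
    "HADCRUT4": 0.137, "NOAA/NCEI": 0.087, "Berkeley Earth": 0.086,
    "GISS LOTI": 0.080, "Cowtan and Way": 0.079, "raw": 0.148,
}

# slowdown = early trend - later trend, so later trend = early trend - slowdown.
implied_trend_1998_2013 = {
    name: round(trend_1975_1999[name] - slowdown_1998_2013[name], 3)
    for name in trend_1975_1999
}

# Same definition applied to the CMIP5 model mean quoted above.
# A negative "slowdown" means the models show an acceleration.
model_slowdown = round(0.154 - 0.223, 3)

for name, value in implied_trend_1998_2013.items():
    print(f"{name}: {value:+.3f} deg C/decade")
print(f"CMIP5 model mean slowdown: {model_slowdown:+.3f} deg C/decade")
```

By this arithmetic the implied 1998-2013 trend is 0.035 deg C/decade for the “raw” data and 0.053 deg C/decade for HADCRUT4, while the model-mean "slowdown" is -0.069 deg C/decade, which is an acceleration.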

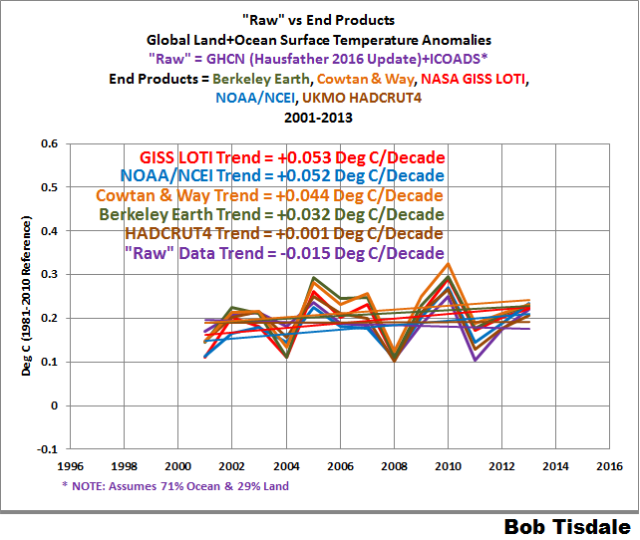

But other start years have been used for the recent “hiatus” in global warming. NCAR’s Kevin Trenberth used 2001 in his 2013 article Has Global Warming Stalled? for the Royal Meteorological Society. (My comments on Trenberth’s article are here.) Figure 7 compares the trends for the “raw” global land+ocean surface temperature data and the end products for the period of 2001 to 2013. The “raw” data show a slight cooling over this short time period. The trend of the UKMO HADCRUT4 data is basically flat at 0.001 deg C/decade. At the high end are the GISS LOTI and NOAA/NCEI data, presumably a result of NOAA’s excessive parameter tweaking.

Figure 7

The slowdowns (1975-1999 trends minus 2001-2013 trends) are:

- HADCRUT4 slowdown = 0.189 deg C/decade (compared to +0.190 deg C/decade for 1975-1999)

- Berkeley Earth = 0.146 deg C/decade (compared to +0.178 deg C/decade for 1975-1999)

- Cowtan and Way = 0.138 deg C/decade (compared to +0.182 deg C/decade for 1975-1999)

- GISS LOTI = 0.125 deg C/decade (compared to +0.178 deg C/decade for 1975-1999)

- NOAA/NCEI slowdown = 0.121 deg C/decade (compared to +0.173 deg C/decade for 1975-1999)

Because the “raw” data trend is negative over this timeframe, the slowdown is greater than the trend for 1975 to 1999.

And once again, the climate models show a higher warming rate (+0.184 deg C/decade) from 2001 to 2013 than for the period of 1975 to 1999 (+0.154 deg C/decade).

LET’S LOOK AT THE TRENDS FOR THE EARLY COOLING PERIOD, THE EARLY 20TH-CENTURY WARMING PERIOD AND THE MID 20TH-CENTURY SLOWDOWN/COOLING PERIOD

In past posts and in my book Climate Models Fail, I used the breakpoints of 1914, 1945 and 1975 when dividing the data prior to the recent warming period. The years 1914 and 1945 were determined through breakpoint analysis by Dr. Leif Svalgaard of a former GISS LOTI dataset (the version that used ERSST.v3b data). See his April 20, 2013 at 2:20 pm and April 20, 2013 at 4:21 pm comments on a WattsUpWithThat post here. And for 1975, I referred to the breakpoint analysis performed by statistician Tamino (a.k.a. Grant Foster). With the inclusion of the NOAA ERSST.v4 “pause-buster” sea surface temperature data in the GISS LOTI data, I suspect there may be new breakpoints for that dataset (and that the breakpoints may be slightly different for the other datasets), but I’ll continue to use 1914, 1945 and 1975 for consistency with past posts and that book.

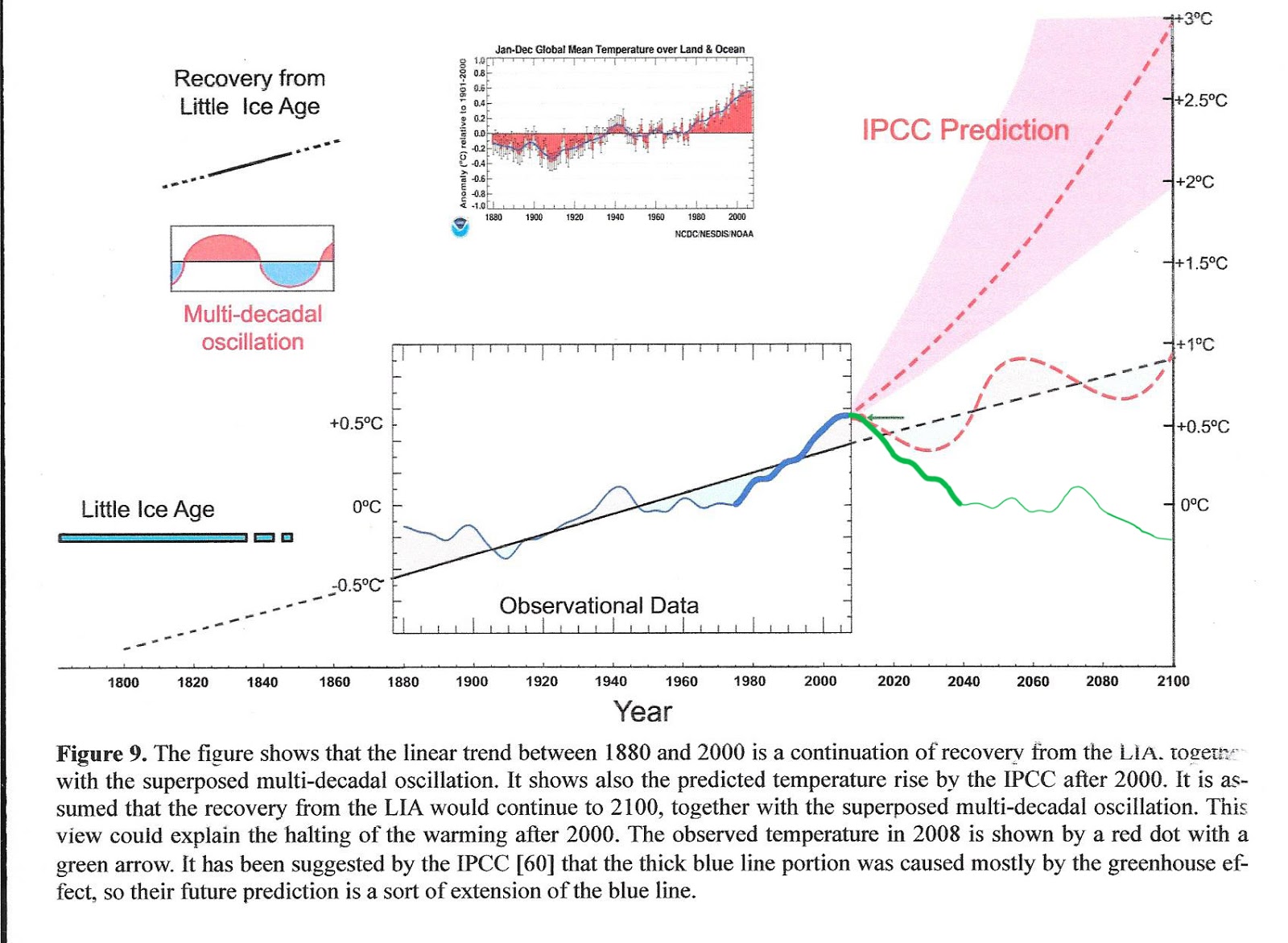

Specifically, 1880 to 1914 is used for the early cooling period (Figure 8), 1914 to 1945 is used for the early 20th-Century warming period (Figure 9), and the mid-20th Century slowdown/cooling period is captured in the years of 1945 to 1975 (Figure 10).

During the early cooling period of 1880 to 1914, Figure 8, most of the end products have cooling trends that are less negative than the cooling trend of the “raw” data. Curiously, the trend of the NOAA/NCEI data is the same as the “raw” data. The spread in the cooling rates of the end products is about 0.04 deg C/decade. As a reference, the model mean of the CMIP5-archived models (historic/RCP8.5) shows a slight warming trend (+0.032 deg C/decade) for this cooling period. Models wrong again.

Figure 8

In Figure 9, we can see that the “raw” data have the highest warming rate for the early 20th Century warming period of 1914 to 1945. The adjustments during this time period are primarily to the sea surface temperature data…an effort to account for biases resulting from the transition in temperature-sampling methods, from buckets to ship inlets. Regardless, the spread in the warming rates is about 0.02 deg C/decade for the end products.

Figure 9

Of course, since the models do not simulate the cooling from 1880 to 1914, they fail to properly simulate the warming from 1914 to 1945. The model consensus only shows a simulated warming rate of +0.057 deg C/decade during this period. Because the models can’t explain the extent of the warming that took place in the early part of the 20th Century, apparently natural variability is capable of warming Earth’s surfaces at a rate of 0.07 to 0.09 Deg C/decade above that hindcast by the models. That of course makes one wonder how much of the recent warming was caused naturally.

The mid-20th-Century slowdown/cooling period of 1945 to 1975 is last, Figure 10. The “raw” data and those datasets that are based on the HADSST3 sea surface temperature data (Berkeley Earth, Cowtan and Way, UKMO HADCRUT4) show slight cooling trends during this period. Once again, they have been adjusted for the 1945 discontinuity and trailing biases that were determined in Thompson et al. (2008). On the other hand, the two datasets that rely on NOAA’s very odd ERSST.v4 “pause buster” sea surface temperature data (GISS LOTI and NOAA/NCEI) show a slight warming trend for 1945 to 1975. And once again, the reason those two differ from the others is that the ERSST.v4 “pause-buster” data were not corrected for the 1945 discontinuity and trailing biases.

Figure 10

How awkward is NOAA’s failure to correct for the 1945 discontinuity and trailing biases? Even the consensus of the climate models (CMIP5 model mean with historic and RCP8.5 forcings) shows a cooling trend (-0.014 deg C/decade, slightly more than observed) during the mid-20th-Century period of 1945 to 1975.

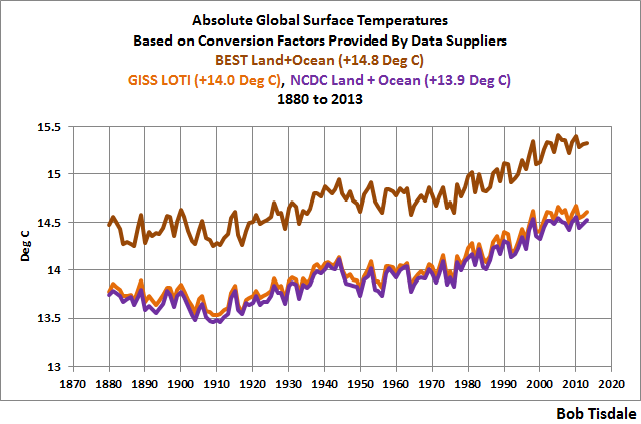

THE SPREADS BETWEEN ANNUAL AND MONTHLY GLOBAL LAND+OCEAN SURFACE TEMPERATURE END PRODUCTS

Since we’re discussing global temperature products, I thought I’d illustrate something else…the extents of the disagreements between annual and monthly global surface temperature anomalies.

Figure 11 (annual) and Figure 12 (monthly) show the spreads between the 5 global land+ocean surface temperature end-products. (Please note the differences in the scales of the y-axis.) The anomalies are all referenced to the full term of the data (1880 to 2015) so as not to bias the results. The minimum and maximum values for the 5 datasets were first determined. Then the spread was calculated by subtracting the minimums from the maximums.
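The spread calculation described above can be sketched in Python as follows. The function name spread_between_datasets is hypothetical, and the sketch assumes every series covers the same period (1880 to 2015) with no gaps other than NaN values inside a series.

```python
import numpy as np

def spread_between_datasets(series_by_dataset):
    """Max-minus-min spread across datasets at each time step.

    series_by_dataset: dict mapping a dataset name to a sequence of
    anomalies, all on the same time axis (an assumption of this sketch).
    """
    rows = []
    for values in series_by_dataset.values():
        values = np.asarray(values, dtype=float)
        # Reference each dataset to the full term of its data first.
        rows.append(values - np.nanmean(values))
    stacked = np.vstack(rows)
    # Spread = maximum minus minimum of the datasets at each time step.
    return stacked.max(axis=0) - stacked.min(axis=0)
```

The same function works for annual or monthly series. Only the length of the input arrays changes.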

Figure 11

# # #

Figure 12

Curiously, referring to the annual data because it’s easier to see, the spread in the early 1900s is less than the spread for much of the mid-20th Century. Again, the anomalies are all referenced to the full term of the data (1880 to 2015) so as not to bias the results.

CLOSING

We often hear people state that the adjustments to global land+ocean surface temperature data have decreased the global warming rate. That’s very true for the long-term data (1880 to 2015) but not necessarily true for the periods after the mid-1940s.

For the post-1998 or post-2001 slowdown in global warming, the adjustments have increased the global warming rates in all datasets, with the UKMO HADCRUT4 adjustments having the least impacts.

NOAA’s failure to correct for the 1945-discontinuity and trailing biases causes the GISS LOTI and NOAA/NCEI data to have relatively high warming rates for the period of 1950 to 2015. That failure on NOAA’s part also shows up during the mid-20th-Century period of 1945 to 1975…the GISS LOTI and NOAA/NCEI data show a slight warming during this period, while the datasets that have been corrected for the 1945-discontinuity and trailing biases show a slight cooling.

Some persons believe the adjustments to the global temperature record are unjustified, while others believe the seemingly continuous changes are not only justified, they’re signs of advances in our understanding. What are your thoughts?

Does this exercise contribute to science in real terms, or does it merely pass the time by filling pages? Some time back, USA raw and adjusted data series were presented, in which the earlier part of the series was lowered and the later part was raised. That means the trend of the adjusted data is far higher than that of the raw data series, which clearly presented a 60-year cyclic pattern.

Recently, when the media were talking about heat waves, the IMD Hyderabad office reported that the rise in Hyderabad temperature was only 0.01 °C in 100 years. In some parts of the city with a heat-island effect, the temperature rose by 3 to 5 °C.

Dr. S. Jeevananda Reddy

This exercise combats misinformation about adjustments to the global temperature record. The US temperature adjustments are a totally different topic.

Sorry Bob Tisdale, in all your exercises you have not taken into account the 60-year cycle factor. In fact, the sine curve reached its peak by 2016, along with El Nino. The same goes for the sea temperature cycles over different parts of the oceans. Simple linear regression will not serve the science.

If you really want to help science and educate everyone from the common man to the scientific community, do something like this with raw data over different parts of the globe, including the oceans.

Dr. S. Jeevananda Reddy

Dr. S. Jeevananda Reddy

What equation are you using for that assumed 60 year short cycle? (Obviously: the zero point, the period, the assumed amplitude. Anything else?)

How do you account for the semi-sine wave of the 1000 year long cycle rising since 1650’s minimum? Should that curve not be added to the short cycle?

Also, the adjustments’ effect on the trend will be seen realistically only when we use the full data series [downward & upward adjusted]; a trend from a truncated data series has no meaning, as the natural variability component automatically changes the trend and does not reflect the real scenario.

Dr. S. Jeevananda Reddy

This global warming debate has been ongoing for 20-30 years or more and yet as a novice and non-specialist the debate still seems to be bogged down at the level of arguing the equivalent of how many angels can sit on the point of a needle and even that contaminated by ya boo inputs. There is not even any agreement on the basic data supposedly available, let alone what mechanisms are in place, what changes will occur and over what time frame!

The UN is a global organisation, with all countries being members. The IPCC is a UN based organisation with disproportionate influence and control of this debate. If this topic is so critical for mankind’s future why haven’t our leaders insisted that the UN set up a firm and agreed basis for obtaining and maintaining the raw data needed for the required scientific assessments world-wide – to some agreed frequency, by some agreed standard method/instrumentation and with measuring stations having the same “independent” environment not affected by local external influences other than natural causes, mainly solar inputs?

If this quite obvious and sensible action had been taken from the outset, at least the world would by now have had an ongoing agreed raw temperature, raw CO2 data record which various agencies around the world could use as the basis of a proper scientific investigation and research project designed to determine what, if any problems exist and how best they can be managed/accommodated. This would be to the benefit of all, except possibly or even probably, those who have jumped on the academic CAGW gravy train or have entered the renewable energy market.

£billions of unaffordable money has been wasted and much needed scientific resources have been diverted away from real problems affecting the world, and yet we seem no closer to any properly agreed and ratified outcome/policy on this matter. If man-made CO2 is the driver of some near future apocalypse then practically, nothing is in place to manage and/or avoid it; effectively 75% of the world’s countries are ignoring it, and will be so doing for many years, following their own separate agendas!

The UN, yet again, is only conspicuous by its own ineffectiveness and incompetence! When, as still now, politics gets in the way of proper and adequate science and technology then the scientists and engineers of this world should have been kicking in doors and organising protests and not, as too often is now the case, simply jumping with avaricious politicians onto the passing CAGW gravy train!

Dr. S. Jeevananda Reddy says, “Sorry Bob Tisdale, in all your exercises you have not taken into account the 60-year cycle factor…”

This was a post about adjustments to the global land+ocean temperature record, not about any cycles it might contain.

I leave posts about “cycles” to others. Feel free to write one.

Bob Tisdale – You rarely like to respond positively to comments. When we raise cyclic variation, you tell us to write a separate article, but this is not the correct approach to comments. Let me present two practical examples. On the global temperature anomaly in 2015, the US Academy of Sciences & British Royal Society jointly brought out a report wherein they presented 10-, 30- and 60-year moving averages of this data. The 60-year moving average pattern showed the trend. That means that in this data series, if we use a truncated series, the trend differs depending upon whether the series falls on the decreasing arm or the increasing arm of the sine curve [through these columns sometime back somebody presented a figure to explain this]. This is obvious.

[1] In the case of Indian Southwest Monsoon data series from 1871, IITM/Pune Scientists [they are part of IPCC reports] briefed the Central minister [which was later briefed the members of parliament in parliament session in 2013/2014] that the precipitation is decreasing. They have chosen the data of descending arm of the sine curve. This has dangerous consequences on water resources and agriculture. This I brought to the notice of ministry of environment & forestry.

[2] In the case of Krishna River water sharing among the riparian states, central government appointed a tribunal [retired judges] to decide on this in 1970s. The tribunal used the data available to them at that time on year-wise water availability [1894 to 1971] for 78 years and then computed 75% probability value for distribution among the riparian states. Probability values were derived from graphical estimates [lowest to highest] and using the incomplete gamma model or other models nor tested for its normality.

Now, the central government appointed a new tribunal [three retired judges] to look in to the past award and give their award on this issue. Though this tribunal has the data for 114 years, chosen a period of 47 years [1961 to 2007] and decided the distribution. The mean of the 47 years series is higher than 47 years series by 185 TMC. This 47 years series positively skewed and far from normality. The 114 years data series showed normality [mean is at 48% probability which is very close to 50% probability] and as well the precipitation data series showed a 132 year cycle.

Prior to 1935 the series presented 24 years of drought conditions and 12 years of flood conditions; from 1935 to 2000 the data series presented 12 years of drought conditions and 24 years of flood conditions; since 2001, drought conditions similar to those prior to 1935 have been seen in the majority of years. With the new tribunal award, the downstream riparian state is the major casualty. I called this a “technical fraud” to favour upstream states. I brought this to the notice of the Chief Justice of the Supreme Court, the Respected President of India and the Respected Prime Minister of India, but they did little, as per the constitution the powers of the tribunal are beyond question even if they commit fraud. Now the downstream state has approached the Supreme Court. There the discussion is about legality, not technicality.

In science we should not make the same mistake.

Dr. S. Jeevananda Reddy

Thanks Bob Tisdale, after having read this in a first, inevitably “ultradiagonal” pass, I think this is a carefully written guest post, very informative, and above all free of unnecessary, destructive polemics. Great job.

Bob, as you’ve studied this issue in several blog posts and have the ‘raw data’, would you be able to make your own corrections? It might be more useful to compare those with the existing temperature datasets.

Comparing temperature datasets to the raw data over different time periods is a bit repetitive and doesn’t really answer the question ‘are the corrections valid’ and ‘if not what should they be’. Do you think there is a possibility to do some sort of crowd sourced project and come up with a set of corrections you are happy with?

John asks, ‘are the corrections valid’ and ‘if not what should they be’?

Those are very valid questions, but beyond my capabilities. Weren’t those a few of the underlying questions behind the Berkeley Earth project?

Cheers.

I believe our SST data (anything about ocean temps) is too short, too widely spread, and too subject to position changes we couldn’t track to be of use.

If we limit our concern to terra firma we can go back to about 1880 in the US and 1700 in the Central England. Other places have credible records covering decades. From all these data sets we can reconstruct trends. We can integrate the trends into a knowledge base that will give us a pretty good answer to the existence (or not) of a CO2 effect.

Ice cores are great but we just don’t know what changes occur in air bubbles. We can date snowfall/melting balances, volcanism depositions and isotope ratios. They just don’t tell us much about temps. If we KNEW what the trapped air means cores would answer our questions but we DON’T.

Alcohol and mercury thermometers are terrible. They are still better than anything ELSE. People, some drunks, some liars, some lazy read them and wrote down numbers. They are STILL the best we have.

“Note 2: Some may claim the start year of 1998 is cherry-picked because it’s an El Niño decay year.”

Yes, indeed. Inevitably here, because you also said:

“We ended the data in 2013, because:…”

The reasons given for this cutoff are that subsequent warming was El Niño (and Blob) related. You say that 1998 is different, because it was followed by a La Nina. But that is hand-waving without the arithmetic. What happens if you don’t start with the El Niño peak of 1998? As it is, you’ve explicitly excluded an El Niño peak at one end, and included it at the other.

If you are not going to use the 1998 start point, you start from a La Nina which is the same thing.

If you are going to start after the La Nina then the second half of 2015 and start of 2016 should also be left out, Nick.

You claim cherry pick and offer another cherry pick :p

I’m sure the value of Nick’s contribution to this discussion will be discussed in replies to his comment.

My reply focuses on Nick’s comment as it illustrates the “settled science” aspect of warming: in addition to disagreement about the actual temp data, it’s pretty obvious there’s no general agreement about how to analyze the data.

How ya call that science (let alone settled science) is beyond me.

http://www.esrl.noaa.gov/psd/enso/mei/ts.gif

If you start after 1998, Nick, it looks nice, doesn’t it, if you want to see warming.

But if you want to take out 1998, you can’t leave 2015/16 in, can you, if you want to be honest.

Take out the 1998 hot spike and 2015/16, and there is really no trend in ENSO warming, but there is a trend in ENSO cooling whether you take out the La Niña cooling after ’98 or leave it in. Same result.

The really shameful thing from NOAA was trying to separate land surface temps from El Niño effects.

Really shameful dishonesty, political garbage, almost as bad as Schmidt’s record temp in 2014 that was an order of magnitude smaller than the margin of error.

Nick Stokes complained about Fig. 5, “you’ve explicitly excluded an El Niño peak at one end [the right], and included it at the other” [the left].

That’s incorrect. By starting with January 1, 1998, Bob has included only about 3/4 of the 1997-1998 El Niño, but all of the subsequent 1999-2000 La Niña, as you can see here:

http://ggweather.com/enso/oni.jpg

That gives a modest cool bias to the left end of the graphs which start with 1998, and thus somewhat exaggerates the warming during “the pause.”

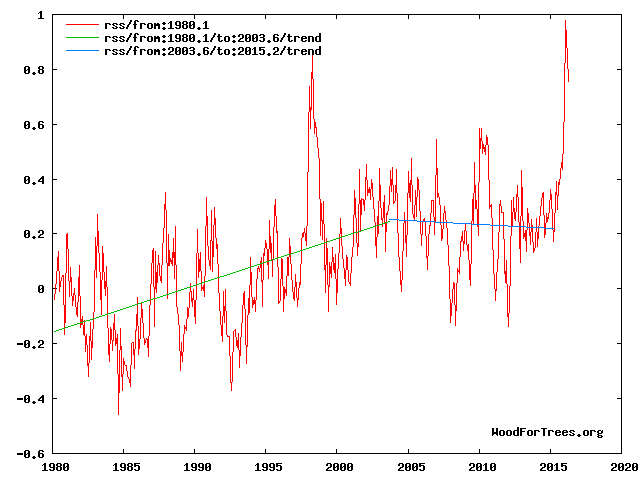

Here’s a wood-for-trees graph of several temperature indices, starting four months earlier (8/1/1997), to pick up the full 1997-1998 El Niño:

http://www.woodfortrees.org/plot/hadcrut3vgl/from:1997.6667/plot/hadcrut4gl/from:1997.6667/offset:0.4/plot/rss/from:1997.6667/offset:1.2/plot/uah/from:1997.6667/offset:1.9/plot/hadcrut4gl/from:1997.6667/offset:0.4/trend/plot/hadcrut3vgl/from:1997.6667/offset/trend/plot/rss/from:1997.6667/offset:1.2/trend/plot/uah/from:1997.6667/offset:1.9/trend

Over the last 50 years, strong El Niños have always been followed by strong La Niñas. They come in pairs: a strong El Niño, then a La Niña of similar overall magnitude. (El Niño tends to be shorter and sharper, La Niña tends to be longer but not as sharp.)

Bob’s graph of 2001 to 2013 is most instructive, because it excludes both El Niño/La Niña pairs: the 1997-2000 pair at the left end, and the 2014-2018 pair at the right end.

Climate activists usually do the opposite. They like to start their graphs with 1999, so they can pick up the 1999-2000 La Niña at the left end, without the preceding 1997-98 El Niño, thus cool-biasing the start of the graph, to exaggerate the warming trend.

Likewise, they currently prefer to end their graphs right now, because the current El Niño is ending right now.

Over the next 2-3 years, as the commencing La Niña progresses, many climate activists will be tardier and tardier updating the data used in their analyses, conveniently holding onto that warm bias at the right end of their graphs for as long as they can.

If you “subtract out” the effects of ENSO (El Niño / La Niña) and the biggest volcanoes, you find that “The Pause” is now well over two decades long. A 2014 paper by Lawrence Livermore’s Ben Santer (with many co-authors, including NASA’s Gavin Schmidt) did that interesting exercise. They tried to subtract out the effects of ENSO (El Niño / La Niña) and the Pinatubo (1991) and El Chichón (1982) volcanic aerosols, from measured (satellite) temperature data, to find the underlying temperature trends. Here’s their paper:

http://dspace.mit.edu/handle/1721.1/89054

This graph is from that paper:

http://www.sealevel.info/Santor_2014-02_fig2_graphC.png

Two things stand out:

1. The models run hot. The CMIP5 models (the black line) show a lot more warming than the satellites. The models show about 0.65°C warming over the 35-year period, and the satellites show about half that. And,

2. The “pause” began around 1993. The measured warming is all in the first 14 years (1979-1993). Their graph (with corrections to compensate for both ENSO and volcanic forcings) shows no noticeable warming since then.

Note, too, that although the Santer graph still shows an average of almost 0.1°C/decade of warming, that’s partially because it starts in 1979. The late 1970s were the frigid end of an extended cooling period in the northern hemisphere. Here’s a graph of U.S. temperatures, from a 1999 Hansen/NASA paper:

http://www.sealevel.info/fig1x_1999_highres_fig6_from_paper4_27pct_1979circled.png

The fact that when volcanic aerosols & ENSO are accounted for the models run hot by about a factor of two is more evidence that the IPCC’s estimates of climate sensitivity are high by about a factor of two, suggesting that about half the warming since the mid-1800s was natural, rather than anthropogenic.

Oops, I used an obsolete (.jpg) link for that GGWeather ENSO graph. The current (.png) link is:

http://ggweather.com/enso/oni.png

I would add that there is a 3rd element that stands out. That would be that the shifts in the ENSO regions are major players in the climate patterns of Earth. It always puzzled me how the IPCC and similar minded types thought that the ENSO regions could be disregarded as non important to the global warming story.

Thanks daveburton for the link to Santer & alii (Volcanic Contribution to Decadal Changes in Tropospheric Temperatures, 2014).

That paper I didn’t know about. But years ago I read a paper written by Grant Foster and Stefan Rahmstorf, in which the two operated in a similar manner:

http://iopscience.iop.org/article/10.1088/1748-9326/6/4/044022/meta

The paper was the target of some criticism, but I found it was at that time an excellent contribution to the discussion.

Bob Tisdale, for example, did not accept their idea of treating ENSO as an exogenous factor that might simply be removed from a temperature record.

daveburton, very good comment, and I was not aware of the paper removing ENSO effects. Curious how that paper is not promoted by alarmists. One suggestion, instead of using the US chart show this one, https://stevengoddard.wordpress.com/1970s-ice-age-scare/#comment-235495

You will find that the 1979 to 1995 warming almost precisely recovers from the .3 plus of global cooling in the 1945 to 1975 period.

Nick asked, “What happens if you don’t start with the El Niño peak of 1998?”

Compare the trends of Figure 5…

…and Figure 7.

Cheers

Easy refutation. Thanks Bob. Nick strikes out. Again.

OUCH! That’s gonna leave a mark. I’m sure Nick Stokes now wishes he hadn’t asked.

2010 was also a strong El Nino. The proper comparison will be where we end up in La Nada years after we go to a negative AMO and PDO.

According to the ENSO index it was average but La Nina cooling was significant afterwards, this balances the 1998 significant spike followed by a smaller dip into cooling thereafter.

Starting at 1998 is valid if you are going to use the data through to 2016. OR you exclude both and La Nina post 1998 if you want to see the slowdown in what is absolutely ENSO driven warming. (not CO2)

Mark, what was average? The AMO is just coming down from the peak, yet still quite high. 1979 to 2000 was positive PDO, and dominant El Nino’s to go with it, positive AMO and a very high correlation just from that to GMT, matching RSS almost perfectly.

From Steven Goddard, amo / rss graphic.

http://realclimatescience.com/wp-content/uploads/2016/05/2016-05-08110456.png

We have not had the oceans in sync in a comparable downward cycle yet, but that looks to be coming. The step up after 1998 MAY have been due to all up cycles working in harmony and, as Bob T’s detailed posts illustrate, the aftereffects of those warm waters moving from the equator region.

Remember the 1940s blip they wanted to remove? Briffa’s trees also showed the decline to the late 1970s, as did information on rapid arctic sea ice growth, which was also verified by the early satellite record prior to 1979.

Bob:

Figure 10 above shows 2010 (as 1998 was earlier) as El Nino years. There is a very evident spike in temperature when averaged over all 12 months. 2015-2016 is, obviously, also an El Nino year. But while El Nino’s are associated with warmer waters off of South America at Christmas, they are not really a “yearly thing” with explicit start and stop dates that are tied to any human’s calendar.

Nevertheless, El Nino’s (and La Nina’s) do begin and end.

If I am looking for correlations with other information (measurements of various values taken over months, not just yearly averages), what are considered “El Nino” times since 1980?

For example, 2010 was an El Nino year.

Would the value of Antarctic sea ice in January 2011 still be considered within the 2010 El Nino, or had it “returned to normal” by January 2011? Would a June 2011 value be considered inside the 2010 El Nino?

Today is early May 2016. Is the 2015 El Nino considered to have begun Sept 2015 and lasted until March 2016?

RACookPE1978, I typically refer to NOAA’s Oceanic NINO Index to avoid arguments:

http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ensoyears.shtml

RACookPE1978 asked, “Is the 2015 El Nino considered to have begun Sept 2015 and lasted until March 2016?”

I think you mean Sept 2014, not 2015, for the beginning date.

It’s not clear whether or not it has quite ended, yet. But if not yet, then probably June or July.

daveburton

My question was phrased that way (assuming a Sept 2015 start) exactly because I did not have access to the ENSO table Bob Tisdale linked above.

For one project, for example, I clearly want to use his “black months” (months where the ENSO index is between -0.5 and +0.5) and specifically exclude both very high (red) and very low (blue) months if I want to look at ice under “normal” ENSO conditions. That would drop a few months in early 2012, and most of 2015. But the rest of 2012, all of 2013, 2014, and the first months of 2015 might be informative. Or might not be – We don’t know yet.
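As a rough illustration of that month selection, here is a minimal Python sketch. It assumes “neutral” means an index value strictly between -0.5 and +0.5, as described above. The function name `neutral_months` and the index values are made-up placeholders, not actual ONI numbers.

```python
NEUTRAL_LIMIT = 0.5  # assumed threshold, per the "black months" description


def neutral_months(index_by_month, limit=NEUTRAL_LIMIT):
    """Return the month labels whose ENSO index lies strictly inside (-limit, +limit)."""
    return [month for month, value in index_by_month.items()
            if -limit < value < limit]


# Placeholder values only; substitute the real table before drawing conclusions.
example_index = {
    "m01": -0.8,  # would count as a cool (blue) month
    "m02": -0.3,  # neutral
    "m03": 0.1,   # neutral
    "m04": 0.5,   # at the threshold, so excluded here
    "m05": 1.9,   # would count as a warm (red) month
}

print(neutral_months(example_index))
```

Whether the threshold itself belongs in the neutral set is a choice; this sketch excludes it.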

Sept 2015 through Feb 2016 are the ONLY recent months since early 2012 that the Antarctic sea ice anomaly has been negative.

Since 1992, Antarctic sea ice extents have been increasing, and have lately been at substantial, record-breaking maximums.

Do the dips and changes between 1992 and early 2011 also correspond to the ENSO peaks and dips?

Don’t know yet.

Do the rapid but irregular peaks and dips in Antarctic sea ice satellite record since 1979 lead, coincide, or lag the ENSO index?

Don’t know yet.

But it is a very good track in recent years.

Has to be from neutral to neutral, peak to peak or trough to trough. Never sure why anyone on any side of the debate would do anything else.

This is oh so obvious. But cherry picking is the “mode du jour” at both sides.

UAH shows a +0.1°K increase between the peak of the 1997-98 El Niño and the peak of the 2015-16 El Niño

http://www.drroyspencer.com/wp-content/uploads/UAH_LT_1979_thru_April_2016_v6.png

This simple approximation suggests a warming of 0.1°K for 18 years, or about 0.056°K/decade for the pause. This value is lower than what everybody is showing for the period in Bob’s figure 4.

Notice that by comparison, from the El Niño peak of 1987 to the El Niño peak of 1998 the warming was +0.35°K, or 0.32°K/decade. The warming was over 5 times higher.
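The arithmetic behind those two rates can be checked in a few lines of Python. This is only a sketch of the peak-to-peak approximation described above, using the temperature differences and year spans quoted in the comment; the function name `rate_per_decade` is illustrative.

```python
def rate_per_decade(delta_k, years):
    """Convert a temperature change over a span of years to a per-decade rate."""
    return delta_k / years * 10.0


# Values quoted above: +0.1 K from the 1998 peak to the 2016 peak (18 years),
# and +0.35 K from the 1987 peak to the 1998 peak (11 years).
pause_rate = rate_per_decade(0.1, 18)
earlier_rate = rate_per_decade(0.35, 11)

print(f"1998-2016: {pause_rate:.3f} K/decade")
print(f"1987-1998: {earlier_rate:.3f} K/decade")
print(f"ratio: {earlier_rate / pause_rate:.1f}")
```

A peak-to-peak difference uses only two points, so it is sensitive to how strong each El Niño happened to be.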

Javier, good comments, except I do not think Bob D cherry picked.

Define neutral. Are you including the PDO and the AMO?

David S wrote, “Has to be from neutral to neutral, peak to peak or trough to trough.”

Yes, but even that isn’t necessarily sufficient to avoid biasing the trend. If you measure peak-to-peak, or trough-to-trough then you need to choose peaks or troughs of similar magnitude. Or, if you measure from neutral-to-neutral, it matters very much which neutral points you choose.

Over the last half-century, major ENSO cycles have generally begun with a very strong El Niño, followed by a prolonged, strong La Niña (often with a double peak), not the other way around. So if you do a linear regression which starts and ends at the brief neutral periods between very strong El Niños and the subsequent long, strong La Niñas, e.g., 1999 to 2017, it amounts to a perfect pair of cherry-picks to maximally exaggerate the trend. Your graph will start with a couple of years of La Niña (while excluding the adjacent El Niño), and end with a year or more of El Niño (while excluding the adjacent La Niña).

So to avoid biasing the trend, you need endpoints in the neutral period either preceding a strong El Niño / La Niña pair, or following it. You should not use endpoints during the brief

[oops, accidentally truncated, sorry]

…neutral period in the middle of an El Niño / La Niña pair.
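The endpoint effect described here can be sketched with a toy series in Python. Everything below is synthetic: a flat baseline with two made-up El Niño / La Niña pairs (a one-year warm spike followed by two cooler years). The helper `ols_slope` and the chosen magnitudes are assumptions for illustration, not real temperature data.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


years = list(range(20))
anomaly = [0.0] * 20            # flat baseline: no underlying trend
for start in (1, 16):           # two synthetic El Niño / La Niña pairs
    anomaly[start] = 0.4        # one warm year
    anomaly[start + 1] = -0.2   # two cool years of equal total size
    anomaly[start + 2] = -0.2

# Window covering both pairs in full.
full_trend = ols_slope(years, anomaly)

# Window starting inside the first pair (La Niña only) and
# ending inside the second pair (El Niño only).
split_trend = ols_slope(years[2:17], anomaly[2:17])

print(f"both pairs included: {full_trend * 10:+.3f} per decade")
print(f"pairs split at ends: {split_trend * 10:+.3f} per decade")
```

With the pairs split at the ends, the fitted trend is clearly positive even though the baseline is flat, while the window that includes both pairs in full stays close to zero.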

daveb, you have to include the AMO and the PDO at a minimum.

You’re right, David A., in principle. But the AMO & PDO are 50-60 years duration, which makes it very difficult, if not impossible, to distinguish those cyclical factors from the effects of GHG forcings.

You can only do what you can do. ENSO is short enough that one can easily pick endpoints for trend analysis which minimize (or maximize) ENSO bias. Unfortunately, that isn’t true for PDO & AMO.

BTW, there seems to be some problem with Dr. Spencer’s server at the moment, so that graph that Javier posted isn’t showing up. But The Wayback Machine has a copy of it, here:

http://web.archive.org/web/20160502230728/http://www.drroyspencer.com/wp-content/uploads/UAH_LT_1979_thru_April_2016_v6.png

DaveB, how does this look?

http://realclimatescience.com/wp-content/uploads/2016/05/2016-05-08110456.png

It is compelling, David A.

OTOH, http://www.sealevel.info/resources.html#secretofclimate

I used to think the same thing, but it isn’t valid. As daveburton mentioned above we generally have strong El Nino events paired with following La Nina events. If you go peak-peak you include the La Nina on the front side but not on the back side. Same for trough-trough as you miss the El Nino on the front side.

What this means is you need to make sure you get both pairs in any trend (or neither). If you don’t, you always get a warm bias. In fact, it is almost impossible for skeptics to get a cold bias due to this feature of ENSO.

David S, the debate on start and end dates for trend analysis has been covered many times before on this site. The following article shows a global warming contour map that shows the results of every possible trend interval.

https://wattsupwiththat.com/2016/03/12/investigating-global-warming-using-a-new-graph-style/

You will note in the comments that several readers pointed out their own versions of an “all trend” approach.
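For anyone who wants to try an “all trend” calculation themselves, here is a minimal Python sketch. It computes a least-squares trend for every start/end pair of at least a chosen length. The names `linear_trend` and `all_trends`, the `min_len` parameter, and the sample series are illustrative assumptions and are not taken from the linked article.

```python
def linear_trend(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def all_trends(years, values, min_len=5):
    """Map every (start_year, end_year) window of at least min_len points to its trend."""
    trends = {}
    for i in range(len(years)):
        for j in range(i + min_len - 1, len(years)):
            trends[(years[i], years[j])] = linear_trend(years[i:j + 1],
                                                        values[i:j + 1])
    return trends


# Synthetic series with a constant 0.02 per year rise, for illustration only.
demo_years = list(range(2000, 2010))
demo_values = [0.02 * (y - 2000) for y in demo_years]
demo_trends = all_trends(demo_years, demo_values)

print(len(demo_trends), "windows")
print(min(demo_trends.values()), max(demo_trends.values()))
```

On a real series the spread between the smallest and largest window trends shows how much the answer depends on the chosen endpoints.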

But that is not the point of Bob’s article here. He is showing the amount of variance in the temperature reconstructions that are made from supposedly the same raw data. Which makes me wonder why we still call them data sets.

In 2001 the IPCC made a prediction of at least 1.4C warming from 1990 to 2100 (in the then current metrics).

As such I would recommend using 2001 as the start year for any future comparisons. And any trend less than 0.14/decade is a “pause”. This means all datasets show a “pause” – or warming less than the lowest predicted.

To attempt to ‘measure’ Global Average Temperature is folly.

To argue over whether it is fluctuating by 0.1˚C, 1/10th of ONE degree, is laughable.

We seem to be playing the Warmist game by ‘their’ rules.

+1

-1, charles nelson…

Simply because plus minus a tenth of a degree means, accumulated over a century, a huge quantity of energy which the planet will be able to evacuate to space – or won’t.

Yes: the Earth did the like 1,000 or 2,000 or 3,000 years ago.

But in those times there were not 7.5 billion humans, no infrastructures, no industry, no trade, no stock exchanges, no (re)insurances.

So feel free to speak about Warmists if you love to do… but you won’t see the essentials: lots of people want to know what’s really going on, and other people try to create tools making their answers more and more accurate. Some underestimate, some overestimate.

Bob Tisdale gives us here a pretty good view on all that stuff.

Check the ‘adjustments’ being made to the data you are so carefully considering.

And there were 30 million buffalo trampling and eating plants as well across N. America. How much methane did that produce?

These Malthusian self-aggrandizing views of humans’ role on the planet are tiresome. The entire human population would fit in Texas. 90% of the earth is either water or uninhabitable.

Man can’t heat the ocean, and the ocean (see arguments on El Niño) drives the climate. As soon as you recognize how unimportant man is, your concerns will fade away.

FTOP_T “As soon as you recognize how unimportant man is, your concerns will fade away.”

BRILLIANT. Every alarmist should have that on a plaque over their bed.

FTOP-T your comparison between buffalo and people would get you slapped down with the crowd I debate with. If comparing buffalo and people farts, okay, but they would bring up the issue of people created infrastructure.

FTOP_T,

Totally agree. While I find this subject interesting I am certainly not worried in the least about future warming, sea level rise, lower ocean alkalinity, coral bleaching, sea ice conditions, polar bear extinction, glacier retreat, and the list goes on.

What I try to do is understand the mechanisms that cause cooling events such as the little ice age. Cooling events that have the potential to cause famine especially when coupled with major volcanic eruptions are very concerning to me. History tells us prosperity occurs during warm periods, famine occurs during cold periods. I would like the intelligence to stock up on dry beans and rice for times of famine that the bitterly cold brings.

@nc

Agreed that man has built roads and dams and cities and re routed rivers, but we are also erased abruptly by significant natural events like the current fires in Ft. McMurray. One wave wiped out Eastern Japan and a few years earlier Thailand. One quake can change the West coast. Mt. Vesuvius erased an entire city.

Nature dwarfs our influence on the planet. Archeology validates that man’s footprint is tertiary. You can watch nature devour houses in Detroit in 20 short years.

This in no way minimizes the ingenuity of the human race, but we are like a gnat on an elephant and nature’s tail can swat us away as casually as we brush a few grains of sand off our hands.

This is why we must develop our fossil fuels and leverage highly dense energy sources like coal. If she (nature) decides to blow a frigid wind across the Northern Hemisphere for a few decades, we must fend for ourselves. Nature is not uncaring, just completely unconcerned.

Aye, we are arguing over statistical artifacts made from very uncertain data, and besides, Global average is a residue, NOT a metric of anything. It is no more use than measuring an average attendance of a football game and trying to use the data to tell you why each person went to the game and what they did.

Okay Mark, pick up your toys, and go home.

This here website is for scientific debates on 0.1 C. degree changes in a VERY IMPORTANT statistic called average temperature, a temperature that not one person on Earth lives in.

“statistical artifacts” ?

“very uncertain data” ?

“a residue” ?

Are you mocking SCIENCE ?

How can you so casually dismiss these average temperature data, which NASA reports to the nearest one hundredth of a degree C. ?

NASA has sent men to the moon.

Have you?

NASA hires scientists with advanced degrees.

Do you have a PhD ?

NASA has really BIG computers.

Do you have a really BIG computer?

So, if you haven’t sent a man to the moon, don’t have a PhD, and don’t have a really BIG computer, what could YOU possibly know about the climate?

This morning I went outside at 9am, as I always do, to check the temperature, and it was 0.5 degrees C. warmer than Monday 9am one week ago.

This is proof we are moving toward summer, and that will make almost everyone in Michigan, where I live, happy.

I called three local TV channels, and they would not report this good news.

A global warmunist scientist also says the temperature has increased 0.5 degrees C.,

but he claims that’s proof life on Earth will end as we know it,

and NYC subways will be filled with water and submarines in the future.

He called the local TV channels and all three sent reporters and cameramen to his office.

If you want a happy life and want to enjoy the best climate in at least 500 years, you have to be logical about climate data — the climate in 2016 is GREAT for people and animals, and the plants are happy about more CO2 !

If you want to be miserable, fear the future, and not appreciate our wonderful climate, be a warmunist.

In my opinion, the only good news about getting old — that affects everyone — is the climate keeps getting better!

The leftists keep telling us the climate is getting worse.

So who are you going to believe?

Climate blog for non-scientists

No ads. No money for me.

A public service.

http://www.elOnionBloggle.Blogspot.com

But I don’t need to check them, charles nelson.

Because I did quite a while ago. Did you ever?

Did you ever download any of these datasets for a sound comparison?

RACookPE1978 May 7, 2016 at 2:23 am

Here is a comparison plot for 3 El Niños since 1980 (anomalies from: GISS / UAH6.0beta5 / RSS3.3):

http://fs5.directupload.net/images/160507/yu22yeo6.jpg

Bottommost is 1982/83, in the middle 1997/98 and topmost the current one.

I think we can conclude that

– 1997/98 was, as far as the lower troposphere is concerned, the heaviest event of that kind in a long time;

– 2015/16 might well have shot its last bullets in February: look at the UAH/RSS anomalies for April 1998 in comparison with this April (GISS April data not published yet).

Ooops! I forgot to mention that these three event anomaly records each start with the first year’s january and end with the second year’s april. Mea culpa.

Baseline: 1981-2010.

Well, you asked.

Besides the obvious observation that the people who think continuous ~~cheating~~ changes are “advances in our understanding” are always government paid propagandists, one wonders why modern climate “scientists” can not determine what temperature it was on a given day in the 1930s but must change that temperature every day. Mindless heifer dust!

If the globe were truly “the hottest it has ever been” we would not need all the confirmation bias and cheating out of the government paid shills to ~~scare the public~~ show it. And we would be able to model the planet as a 3 dimensional sphere with day and night conditions. Oh, and blatant disregard for the laws of physics and thermodynamics would not be needed either.

By all indications, we are close to a new ice age. The interglacial is nearly over and all warming periods in this interglacial have been cooler than what came before. I wish that the warmists were right and CO2 had some magical warming property — then we could burn the heck out of carbon fuels and perhaps delay the coming ice. But the magic of CO2 is only in their propaganda.

~ Mark

Too many people confuse science the institution with science the method Mark

“But the magic of CO2 is only in their propaganda.”

The Magic of CO2 is that it greens the planet, with no known costs.

I favor a 2,000 PPM CO2 level, with the goal of growing enough food (plants) to eliminate malnutrition and starvation on our planet.

The warmunists want to deny fossil fuels, and more food from more CO2 in the air, to the poorest people on our planet.

Bob another good overall post, and IMV shows much of the current manipulation, and refutes (perhaps properly defines would be better) claims about the raw producing less warming.

I am however curious about how GMT graphics used to be produced in about 1980, as the “raw” data used then is clearly not the same “raw” data used now. Those graphics showed a .6 degree drop in NH from about 1945 to 1978 or so, and a .3 plus degree drop in global over the same period. Over that time there were rapid increases in NH sea ice, supportive of the graphics at the time, and of course the well documented ice-age scare. As large as the spread is in your 2001 to 2013 chart (.013 raw vs. .053 GISS), it pales in comparison to the removal of the blip, and other evidence and time frames from the global charts of the 1980s.

Do you know about the composition or formation of the 1980 time frame GMT graphics, and the difference in “raw” then, vs “raw” now?

Is it true that the current IPCC models cannot produce a GMT anywhere close to what we think it to be, and this is also part of the reason to use anomalies? I understand, but have not confirmed, that the models produce a GMT that is far lower than what is observed?

Thanks again for all your hard work.

Over the longest records..Over the entire stretch of the record..The raw shows more warming.

Why is this important?

1. We care about the long trends…not the short trends.

2. ECS can only be calculated over long periods.

So. From day one we told you to look at long trends.

From day one we told you the most important variable

Is ECS..and that can only be calculated over long periods.

So. We care most about the long records and the long trends.

Yet some people continue to think that there is some sort of fraud going on. That is hilarious.

People clamor for raw data. Raw is warmer.

A smart skeptic will drop the stupid arguments and look for better ones.

Only use raw data. Stupid

Never adjust data. Stupid.

The adjustments are a fraud. Stupid.

Global temperature has no meaning. Stupid.

Infilling Is bad. Stupid.

Do you see what all those stupid arguments share?

Now look at all the charts Bob did

Those charts represent a tiny fraction of all the various attempts to estimate the temperature of the planet. All those other variants (urban only, rural only, only coastal stations, different methods, etc.) fall in the same space.

In other words the structural uncertainty in surface temperature estimates isn’t that great.

If you believe in an LIA you believe that areal estimates of temperature have meaning. When we say Florida is warmer than Michigan that has meaning. When we say the planet was colder in the LIA that has meaning. How much warming has there been since the LIA? Or how much since 1850? Good question. Here is a clue. Adjustments are not a big part of those most important questions.

Are there good skeptical arguments left?

On the temperature record? No. There are technical issues like uhi and microsite. But no skeptical argument can vanish the warming since 1850 or vanish the warming since the LIA ended. The world is warming .

Are there good arguments for skeptics left?

Ya.

1. How much of the warming is natural

2. How much will it warm in the future

3. Is warming bad.

1. All of it.

2. Don’t know. Trends points to cooling.

3. No.

Mosher, there is nothing “wrong” with the work you guys do. What is irritating is the silence from you guys when the results of these analyses are misused. Misrepresented.

This is the same across the board, silence when Obama talks absolute garbage, which is primarily based off of the data sets you types produce.

There are plenty of good skeptical arguments, the foremost being there is no actual data that confirms AGW is a valid theory, data now, not magic.

It appears ye are all quite content to sit by and watch bogus science and misuse of scientific studies and statistical analysis hype up this nonsense.

My argument is you don’t know AGW is valid, and neither does anyone else.

All else is irrelevant as are these “products” you produce because temperature residuals are collected after the climatic horse has left the barn, you are measuring a residue and a global average has no meaning whatsoever.

Because rising temperatures have more than one explanation, no one explanation can cite it as evidence exclusively supporting a theory.

When I see you types shooting down the hysteria, I will believe you have integrity. Otherwise you are just parasites on the global warming gravy train and any defense that is not empirical science is questionable.

The fact you refer to “skeptics” says you “believe” in AGW, belief… funny that, and you have to believe because you have no evidence.

You can’t step outside of your own concept of what is true, hence some of the awful arguments you put forward that are easily deconstructed

“Over the longest records..Over the entire stretch of the record..The raw shows more warming.”

^^ is meaningless.

It actually points out the fallacy of a global average, it tells you nothing of individual values, and ^^ tells you nothing of the overall manipulation of data.

Lack of integrity leads to much skepticism and do you think that is because “exxon” caused this lack of faith? Do you really think that? If you do, then there is no hope for you

OK, let’s look at the long term trend.

Since there is no difference between what’s observed now and what was observed before CO2 began to rise, the climate Null Hypothesis is not falsified.

That means what we’re observing now is natural, not man-made.

ECS vs precision. The most precise study in the survey was less than 1 and not shown. The top of confidence interval for the most precise measurement is around 0.5.

This graph should be a pyramid. The shape of the graph would indicate several thumbs on the scales for low precision ECS estimates.

This is bad news for global warming claims. If CO2 ECS is low, we are discussing the wrong warming cause.

Steven Mosher wrote: “Over the longest records..Over the entire stretch of the record..The raw shows more warming.”

Really?

http://www.sealevel.info/fig1x_1999_highres_fig6_from_paper4_27pct_1979circled.png

Looks like cooling to me from the 1930’s to the present. A Hansen chart, that goes into the satellite era, and shows the 1930’s as hotter than 1998, which is hotter than any subsequent year except for Feb. 2016.

We are in a cooling trend currently. We happen to be right at a point where this “longterm” cooling trend could be broken, and was broken in Feb. 2016, but now the temperature has dropped back down to the trend line.

You might get a little crowing in, if the trendline is broken in the near future, but not yet because it may very well go lower.

In the meantime, we have been in a “longterm” cooling trend since at least the 1930’s. We have not been getting hotter.

The “hotter” years of the 21st century are all within the margin of error, so they are really a flatline, not a trend.

Well, yeah. The Obama era adjustments (and just the Obama era adjustments):

The effect of the adjustments is to roll about 1/4 C of warming from the pre-1950s to the post 1975 period.

This takes what was a relatively equal pre-CO2, post-CO2 warming increase and makes it look mostly due to CO2.

If you back out the adjustments as indicated to the “Pre-Obama” state:

Now there are two relatively equal warming periods separated by a cooling period. Post 1975 there was a little more warming than in the pre-1940 period, but the weak, less-than-1C ECS explains that.

Steven;

Raw shows more warming in the early part of the last century, adjusted shows later in the century, got it. That couldn’t possibly affect CAGW theory, right, so it’s meaningless? Thanks for your help.

1. all of it ?

2. will it warm or cool in the future ?

3. no

They say it is global. Look at winter CET, then try and convince people billions of taxpayer dollars have been spent wisely.

Steven Mosher, perhaps you missed the evidence presented here. To take your argument about trends over the longest record, those trends show 0.065-0.078degC rise per decade, if you start at 1880. Every data set presented here falls within that range.

The IPCC and all the supporters of CAGW make the point that temperature increases due to atmospheric CO2 levels increasing will result in 2-4degC rise per century. However, there is no evidence that such temperature increases are going to occur. As Bob’s charts show, the warmest trend comes from HADCRUT4 from 1975 to 2000, and that managed to reach 0.19degC per decade.
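The comparison above mixes per-decade and per-century figures, so a quick unit conversion may help. The sketch below is only illustrative: it assumes a trend scales linearly from a decade to a century (an assumption for the arithmetic, not a forecast), it uses only the numbers quoted in this comment, and the names `per_century` and `reaches_projection` are made up for the example.

```python
# Trend figures as quoted in the comment, in degC per decade.
cited_trends = {
    "long-record trend, low end": 0.065,
    "long-record trend, high end": 0.078,
    "HADCRUT4 1975 to 2000": 0.19,
}

DECADES_PER_CENTURY = 10


def per_century(trend_per_decade):
    """Scale a degC/decade trend to degC/century (assumes a constant linear trend)."""
    return trend_per_decade * DECADES_PER_CENTURY


def reaches_projection(trend_per_decade, projected_low=2.0):
    """True if the scaled trend reaches the low end of the cited 2-4 degC/century range."""
    return per_century(trend_per_decade) >= projected_low


for label, trend in cited_trends.items():
    print(f"{label}: {per_century(trend):.2f} degC per century, "
          f"reaches 2 degC per century: {reaches_projection(trend)}")
```

On these figures, even the warmest cited trend scales to just under the low end of the 2-4degC per century range, which is the point being made in the paragraph above.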

As the skeptics keep pointing out, the “science” behind CAGW is flawed. And the fundamental scientific flaw is the IPCC’s presumption that atmospheric CO2 levels have a causal relationship to surface temperature. Once that one piece of bad science is removed from the discussion, climate science reverts to a study of the many complex natural factors that influence temperature.

The problem is that climate science has spent 25 years chasing a chimera, with very few scientists studying those natural factors. The primary result is that we have no good models of how climate works on our planet, and so we have no valid method of predicting where our planet’s climate will go. The secondary result is that continual generation of bad science by IPCC supporting climate scientists brings disrepute to the entire field of climate science.

You are pointing the finger at the wrong people, because skeptics don’t have a problem with good science.

Self contradiction, Steven?

Yeah, every one of those arguments is a data engineer’s worst nightmare for how data is collected, kept, manipulated and presented.

Only in climate anti-science is ‘data’ adjusted without explicit meta data for exactly why the datum required adjustment.

That adjustment is direct evidence of a minimal error range and should be tracked as such.

Only in climate anti-science is ‘data’ collected from unverified likely uncertified unique individual instrumentation and circumstances and treated as equal in cause, meaning and quality.

Only in climate anti-science is ‘data’ collected at a pitiful number of illogically located and often worst installation sites; simply summed and averaged, then presented as a ‘global’ representation, without true error ranges for the data and result.

Only in climate anti-science is ‘data’ ‘in-filled’ and the in-filled data is treated as actual observational data! Which goes a long way to explaining why the climateers love their non-performing models.

In-filling is a sloppy lazy method to ease an extremely gross approximation calculation that is far less accurate and representative than using data without in-fills.

Raw data is historically accurate for each implementation and temperature data collection operation.

Raw may be warmer long term, in pitiful and very tepid temperature increases. Raw may also be cooler for many locations.

What raw data at unique individual temperature data collection implementations does indicate is a near complete lack of direct CO2 temperature effects.

Everything from Anthony’s Surface Stations Project through the various Temperature Data Quality discussions held here on WUWT makes obvious the terrible quality and maintenance of temperature data.

What is perhaps worse, there is no apparent intention among climateers to correct the multiple deficiencies in temperature data, nor to truly determine realistic documented error ranges.

A smart warmist, luke or otherwise, would wring every bit of useful information from actual raw data rather than maul, adjust, overwrite and disguise the pitiful few temperature datums they do have.

S MOSHER WROTE:

“Over the longest records..Over the entire stretch of the record..The raw shows more warming.

Why is this important.

1. We care about the long trends…not the short trends.”

MY COMMENT:

You appear clueless about what “long trends” are.

– On a planet that is 4.5 billion years old, a few hundred years are likely to be meaningless short-term random variations.

You appear clueless about normal climate change.

– On a planet where the climate is always changing, a change of a degree over one or two hundred years is a short-term trend, and can not be extrapolated into a long-term trend.

You appear clueless about climate scaremongering.

– Since the warmunist propaganda is to focus on tiny temperature anomalies — in tenths of a degree C. — and many skeptics foolishly join that focus — tiny “adjustments” become much more important than they should be.

You appear clueless about science.

– A good scientist must be skeptical about everything, and not only what YOU say they should be skeptical of.

They should be skeptical about “adjustments”, skeptical about “infilling”, skeptical about the usefulness of the global average temperature statistic when 99.999% of historical data are unknown.

They should be more skeptical because the predictors of the future climate, also own the “actuals”, and consider spewing character attacks, ridicule, and bellowing “the science is settled”, as their primary form of “debate”.

And most important, scientists (and everyone else) must be extremely skeptical about predictions of the future — the future climate, or the future anything else, since predictions of the future are usually wrong … as almost all climate predictions have been in the past 40 years.

YOU WROTE:

“The world is warming”

MY COMMENT:

So what ? The world is always warming or cooling.

Many scientists believe the “world” has been warmer than it is today.

With a different starting point, they would say the world is cooling.

YOU WROTE:

“But no skeptical argument can vanish the warming since 1850”

MY COMMENT:

– Would you have us believe sailors with buckets could measure 70% of the planet accurately?

– Would you have us believe 1800s thermometers were accurate, when those that survived tend to read low ?

– Would you have us believe in the 1800s and early 1900s people could measure average sea temperature with an accuracy better than +/- 1 degree C. ?

If you claim the average temperature has increased about +1 degree C. since 1850, using very rough non-global data, and apply a very conservative +/- 1 degree C. margin of error, it’s possible the average temperature at the end of 2015 was about the same as the average temperature in 1850.
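The arithmetic behind that last point can be written out as a small sketch. It takes the comment's own figures at face value (a change of about +1 degree C. with a +/- 1 degree C. margin) and treats the margin as a simple symmetric bound on the change itself, which is an assumption of this illustration. The helper names `change_interval` and `includes_zero` are hypothetical.

```python
def change_interval(estimate, margin):
    """Return (low, high) bounds for a change given a symmetric margin of error."""
    return (estimate - margin, estimate + margin)


def includes_zero(interval):
    """True if 'no change at all' lies inside the interval."""
    low, high = interval
    return low <= 0.0 <= high


# Figures as stated in the comment: about +1 degree C. since 1850, +/- 1 degree C.
interval = change_interval(1.0, 1.0)
print("Possible change since 1850 (degrees C.):", interval)
print("Consistent with no change:", includes_zero(interval))
```

Under those stated assumptions the lower bound is zero, which is what "about the same as the average temperature in 1850" amounts to. A smaller margin, for example 0.5, would give bounds that exclude zero.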

YOU WROTE:

“Are there good arguments for skeptics left?

Ya.

1. How much of the warming is natural

2. How much will it warm in the future

3. Is warming bad.”

MY COMMENT:

The answer to 1 is not known, and there is no indication it will ever be known.

The answer to 2 is not known, and there is no indication it will ever be known.

The answer to 3 depends on the starting point:

— Let’s say we start with the coldest point in the late 1600s during the Maunder Minimum, and end in February 2016, during the current El Nino peak — I’d say the average temperature had increased at least +2 degree C. between those two points — perhaps even +3 degrees. Do you think that warming was bad?

I think it was great news.

YOU WROTE:

“Yet some people. .Continue to think that there is some sort of fraud going on. That is hilarious”

MY COMMENT:

Al Gore’s movie was not fraud?

Mann’s Hockey Stick was not fraud?

The ClimateGate e-mails did not show that politics had infected government employee scientists?

The entire Coming Climate Change Catastrophe prediction is a fraud !

No one knows what the future climate will be.

No one has any scientific evidence that after 4.5 billion years of natural climate change, CO2 suddenly took over as the “climate controller” after World War II.

There is no scientific evidence that the change in average temperature since 1880, even if you assume the data are 100% accurate, is anything abnormal for our planet, or even bad news.

You used the word “stupid” quite a few times in your meandering comment, intending to insult climate science skeptics for not going after the “right” things (and exactly who appointed you the Grand High Exalted Mystic Ruler of Skeptics?)

After reading your misguided comment, I will use the word stupid one more time.

Steven Mosher. Stupid (on the subject of climate change skepticism).

Steve M, first of all, you are incorrect: as far as CAGW is concerned, only about 1950 onwards counts, per the IPCC. (For this period your claim is incorrect.)

Also, I am however curious about how GMT graphics used to be produced in about 1980, as the “raw” data used then, is clearly not the same “raw” data used now. Those graphics showed a .6 degree drop in NH from about 1945 to 1978 or so, and a .3 plus degree drop in global over the same period. Over that time there were rapid increases in NH sea ice, supportive of the graphics at the time, and of course the well documented ice-age scare. As large as the spread is in your 2001 to 2013 chart (.013 raw vs. .053 GISS), it pales in comparison to the removal of the blip, and other evidence and time frames from the global charts of the 1980s.

Do you know about the composition or formation of the 1980 time frame GMT graphics, and the difference in “raw” then, vs “raw” now?

“Also, I am however curious about how GMT graphics used to be produced in about 1980, as the “raw” data used then, is clearly not the same “raw” data used now.”

No, it isn’t. It’s a very different set of stations. There was a huge effort in late ’70s and ’80s to digitise the records, which before that were only in print (or hand-writing). That was gathered in the GHCN compilation of early ’90s. Before then, you either needed access to the GISS or Phil Jones collections, from mid 80’s on. In 1981 when Hansen plotted climate averages, he used a collection of “several hundred stations” made by Jenne. And most significantly, none of the global averages published before the ’90s combined land and SST.

Nick S, thank you for your reply. The fact that it was not in digital form means little to me, as that simply means it took more time to compile the data. Data that did not exist then essentially does not exist now.

Nick, you also stated, “It is a very different set of stations.” Why? What was excluded before, but included later, and vice versa, and WHY, and WHO made those decisions WHEN??? Also, yes, I know Phil Jones lost some of the original records. Were those ever recovered?

Nick you stated this, “And most significantly, none of the global averages published before the ’90s combined land and SST.” Why not? Are the SSTs from prior to 1980 too sparse to be meaningful? How were they then LATER determined, and where is the HISTORY of those changes??? Do not land Ts follow ocean Ts quite consistently? Are the ocean events not the dog that wags the tail of the land trends? Excluding UHI of course? (You know they are looking at correlations to ENSO) Did the relationship of land to ocean T change somehow?

Bob T, thank you for your reply, and I am on my way to read them now. (-;