John McLean writes relative to our previous story on this issue: Friday Funny: more upside down data

A few hours ago I received an email from John Kennedy at the Hadley Centre to say that HadSST3 data had been corrected.

A message now appears at the top of http://www.metoffice.gov.uk/hadobs/hadsst3/ , saying:

“08/04/2016: An error in the format of some of the ascii files was brought to our attention by John McLean. Maps of numbers of observations and measurement and sampling uncertainties provided in ascii format ran from south to north rather than north to south as described in the data format. This has now been fixed. In some cases, the number of observations in a grid cell exceeded 9999 and were replaced by a series of asterisks in the ascii files. This too has been fixed with numbers of observations now represented as integers between 0, indicating no data, and 9,999,999, indicating lots of data. “

“9,999,999 indicating lots of data”? Why not just say an 8 digit integer field? On the bright side, the data is now integer form and overflows have been corrected.

This correction joins the early corrections of HadSST3-nh.dat and HadSST3-sh.dat made by the CRU, with the note on https://crudata.uea.ac.uk/cru/data/temperature/ saying

Correction issued 30 March 2016. The HadSST3 NH and SH files have been replaced. The temperature anomalies were correct but the values for the percent coverage of the hemispheres were previously incorrect. The global-mean file was correct, as were all the HadCRUT4 and CRUTEM4 files. If you downloaded the HadSST3 NH or SH files before 30 March 2016, please download them again. “

Note that neither makes any reference to how long the problems have been there!

Note that neither makes any reference to how long the problems have been there!

Moon walking!

Garbage In – Climate Policy Out???

Surely this is quite important, and it is equally important to find out the effect this might have, say if the data was used as initial data in GCMs, or used to ‘tune’ model output?

Perhaps, since the order of the data of the data was directly opposite to reality, if the inputs are corrected to the GCM, will they show the opposite predictions to now and we are all in deadly danger of dangerous global cooling? Is this why they switched from another ice-age scare in the 70s to global warming in the 90s?

quite right, Paul. And, surely, what’s equally important is, how many other datasets might have been compromised/are still compromised, or have been quietly corrected without anyone outside the bubble knowing? I also note, from the post, that there was no mention of an apology to the academics who must have been innocently (?) using the data. Nor from the legions of commenters on the original thread who were so quick to dis JMc.

As Osborne says, the temperatures were at all stages correct. The issue were the coverage numbers, given in an inconsistent latitude order convention. I can’t imagine anyone who might have been seriously misled by that. As John McLean said in his original email

“The HadSST3 observation count problems won’t be used by many people, maybe I’m even the first if no-one else has hit the problems.”

You still missed the problem Nick, are your eyes broken or are you not all that? 😀

Nick Stokes: Your comments just remind me of the Morecambe and Wise sketch where Morecambe was playing (!) Grieg’s piano concerto very badly, much to the annoyance of Andre Previn. Morcambe says to Previn, “I’m playing all the right notes, mate, just not necessarily on the right order”.

Quoting Nick S. “Yes, I can’t see any problem with NH and SH. HadSST3-nh.dat (and -sh) is just a file of monthly averages. The numbers in the file correspond to the familiar graphs shown. NH (-nh) temperatures are higher, as expected. Eg the 2015 average for NH was 0.737; for SH was 0.425. The files were last updated 8 March, so I don’t think there is a recent change. It looks to me as if John Maclean may have been reading the netCDF gridded file wrongly.” So once again, the apologist gets it wrong and can’t reconcile the effects this like this mean in his head.

When data is published and available mistakes get found. This shows how important transparency is. And thoughtful analysis instead of knee jerk defensive responses are important. I see to remember Zeke fell on this side as well claiming their is no issue.

Timo Soren,

“So once again, the apologist gets it wrong”

Where? Each of the statements you have quoted is correct. Except the last – apparently he wasn’t using the netCDF file. But what JM said was that the hadsst3-nh.dat and hadsst3-sh.dat files had been interchanged, and in your quote I am going through the process of checking that out. That is thoughtful analysis, as requested; Zeke did it too, but who else? I’m checking the data (SST), and no, it’s all in the right place. The files haven’t been interchanged. Much later, JM tells us that he didn’t mean that. He meant that the metadata (coverage) had been exchanged, not the data. And yes, it had. But how are we to know that is what he meant? It’s quite a different proposition.

One wonders who is minding the store in these government funded organizations. Does anybody check the “garbage” they put out. Why does it take a skeptic to find the errors?

“Why does it take a skeptic to find the errors?”

Skeptics look.

Must be part of their DNA, or maybe being lied to by devoted parents.

A pal of mine phoned the Met Office recently to ask why they don’t mention GCRs in their section on space weather. . It says on the contact page that ‘trained staff will help you find the info you need.’ The ‘trained’ member of staff had never heard of GCRs, and actually said to my friend ‘sorry, I thought it might mean Great Central Railway’. Now I know that the people answering the phone aren’t qualified in meteorology, but you’d think they’d be a bit more knowledgeable than that! What kind of ‘training’ do they get? Bet they can wax lyrical on AGW.

“Skeptics look.”

Isn’t that why climate scientists like Phil Jones refuse to give their data to skeptics?

With an attitude like that, it’s not surprising that it takes other eyes to find their errors. That’s because they’re not even looking. It saddens me to think there are prominent scientists out there who don’t want their errors to be found. Einstein invited other scientists to find errors in his theories. He actually wanted to improve his work so it might be useful to science and stand the test of time. Apparently, some scientists today are either ignorant of the scientific method, or they simply don’t like it. Instead of inviting the discovery of errors as a way to improve their work, they see it as a personal attack on them, which hurts their pride. Can anyone see Michael Mann inviting others to examine his data and uncover errors so he might improve his hockey-stick graph? You’re more likely to see him sue someone who tried that. When ego is more important than science, it is science that suffers.

…‘trained staff will help you find the info you need.’

Ahhh, but what they are trained in is not specifically mentioned, so… (there’s that semantics thing again)

Seems like a lot of CAGW people and politicians have advanced degrees in obfuscation.

Quality procedures are an alien concept to UK Government funded organisations.

I often wonder how forgetful Phil Jones and his hapless crew of CRU acolytes managed to do all their HADCRUx data adjustments and never provide an audit trail……..

“Quality procedures are an alien concept to UK Government funded organisations.”

Yes, agreed. But it is the same with all Government Funded Operations. (GFOs) NASA of the USA may be the worst GFO on “climate change” by far.

I hate to say it, but USA is number 1. USA, USA, USA!!! — in climate data fudging of course.

In the specific case of the CRU, they perfected the lack of quality procedures to some kind of art.

If back in 2009 you didn’t read the climategate HARRY_READ_ME.txt file, read it now. If you read it read it again. It tells you all about climate data reliability.

http://www.yourvoicematters.org/cru/documents/HARRY_READ_ME.txt

Example:

“19. Here is a little puzzle. If the latest precipitation database file

contained a fatal data error (see 17. above), then surely it has been

altered since Tim last used it to produce the precipitation grids? But

if that’s the case, why is it dated so early? “

Catcracking

“…One wonders who is minding the store in these government funded organizations…”.

You’re kidding, right?

Because when you tell government employees pot is heroin, they’ll bankrupt a nation’s morality to push that (other) chemistry scam into place, and have you peeing in a cup to see if you dare think different than government employees, or the parasites feeding off pretending you’re a criminal.

Government employ is where people go when they can’t make it in the real world. Again and again this is found to be the case.

You’re assuming they were errors….I’m cynical enough to think it is intentional! 😉

well, at least they fixed it. Good job John McLean!

Cheers

Ben

Yep, show they sort things if a mistake has been made. Well done to both sides on this one.

Agree with Simon. They acknowledged the error, fixed it and gave public credit to discoverer of that error. They deserve a little appreciation and encouragement here!

Hear Hear indeed!

Exactly, but the zealots rather than see this went into denial mode “there is no problem”.

I was trying to find humble pie but I think Mosher Nich ATTP and Zeke ate it all.

Hi Mark. At the time I was a bit p*ed with Zeke for claiming there was no problem when he’d looked at the temperature but not the coverage, both being in the hemisphere files. Then I realised that I hadn’t made it clear that coverage was the problem so the blame is a bit 50:50. Don’t feel too bad about missing out on your piece of humble pie.

I added a note that the problem was the coverage to either this blog or Bishop Hill where it first appeared (or maybe to both) and the CRU got the message, or maybe it just checked both anyway.

The end result is the same – the data has been corrected and a wide audience is aware of it. Individuals will draw their own conclusions about it.

John McLean on April 12, 2016 at 3:34 am

Did you ever get an answer for how a given gridcell in a given month could have greater than 10,000 counts in the Number-of-Observations file (or even greater than 5000)?

No, OldUnixHead, I received no explanation of that from John Kennedy who seems to head the HadSST team. Presumably they think that it’s worth having that number of observations per month, although perosnally I can’t see it. A count of 9999 is 1 every 4 minutes or thereabouts. Surely 1 every 30 minutes would be more than ample still, we have the technology …

In contrast to SST’s, the temperatures from automatic observation stations are recorded at 30 minute intervals and even then it’s only the minimum and maximum temperatures that matter when calculating the mean. (The recording convention is that today’s minimum is from 9am yesterday to 9am today, but today’s maximum is from 9am today to 9am tomorrow. Okay, so no-one wants to get up at midnight to record the temperatures for the last 24 hours, but what we have doesn’t overlap.) The average monthly minimum temperature and average monthly maximum temperatures are calculated and the month’s mean temperature is the average of those two.

So we have 1 recording every 4 minutes for some – but far fom all – SST recordings and we have about 60 recordings for the entire month (average of 1 every 12 hours) for land-based data.

Much amusement was had by all.

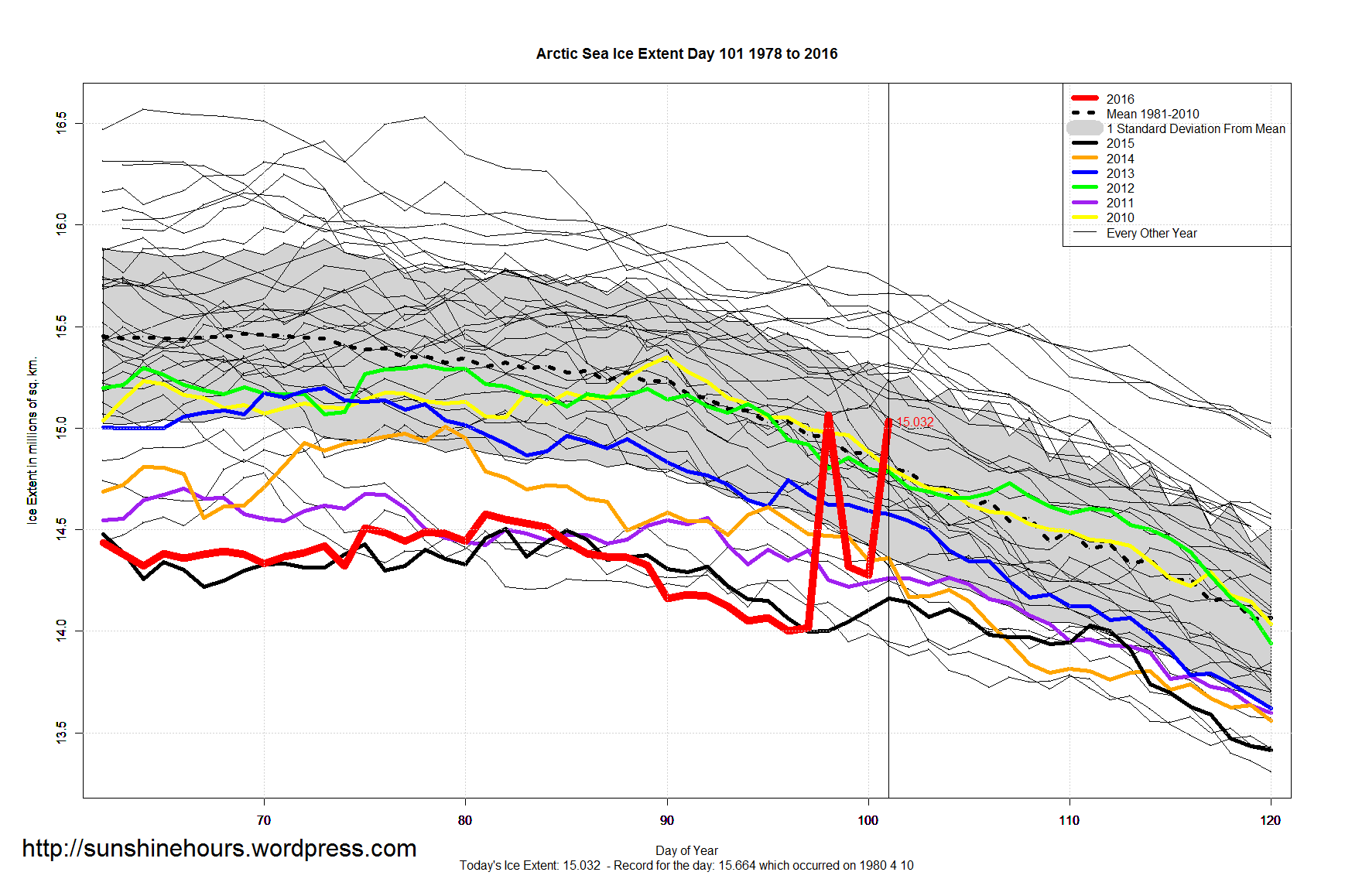

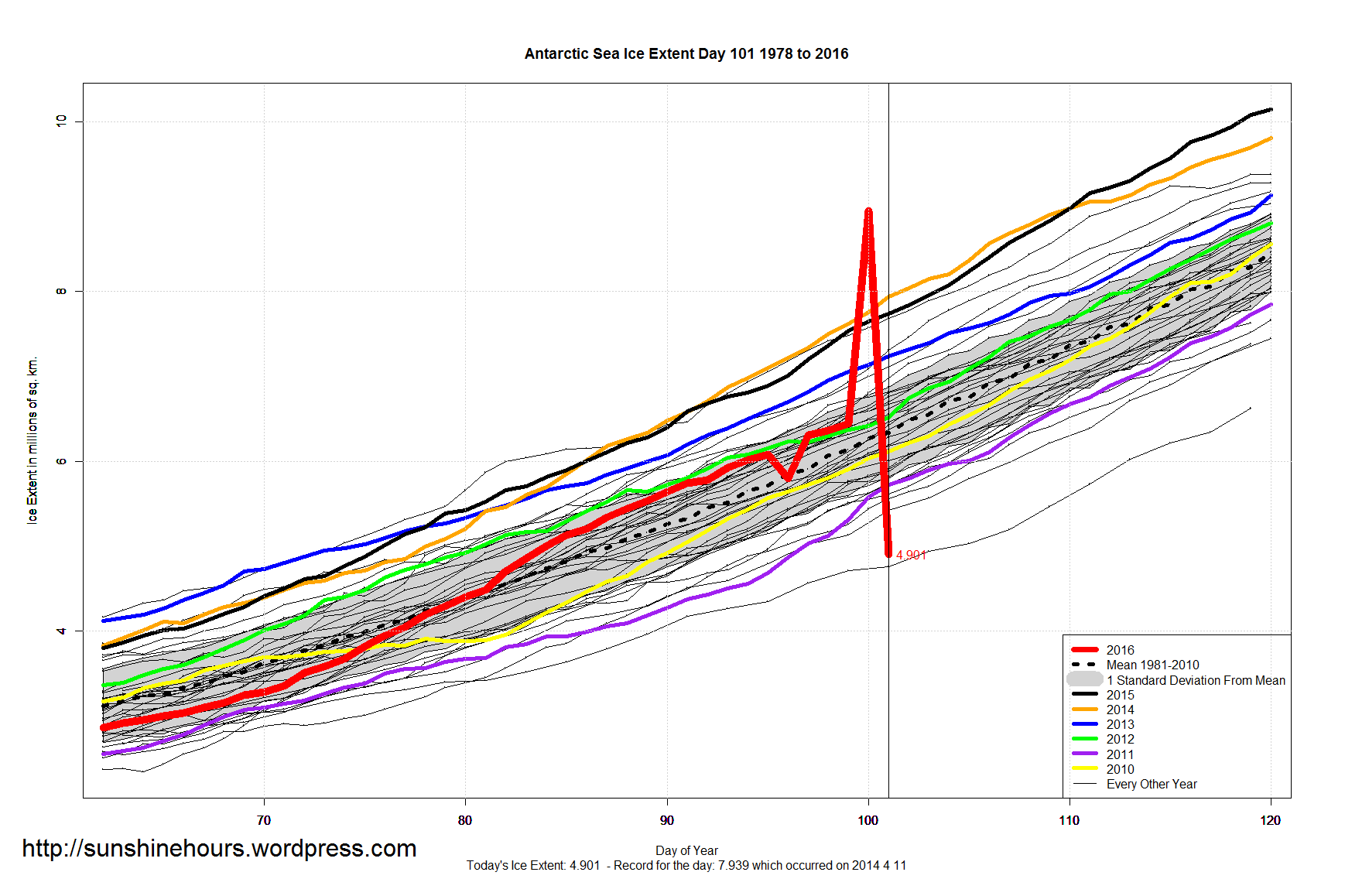

This is misplaced. It belongs in https://wattsupwiththat.com/2016/04/11/more-satellite-problems-with-arctic-sea-ice-measurement/

Why Ric? It’s not an Arctic sea-ice measurement issue. It’s a global sea surface temperature issue.

Aphan April 11, 2016 at 10:02 pm

Why Ric? It’s not an Arctic sea-ice measurement issue. It’s a global sea surface temperature issue.

Aphan I think Ric means “ironicman April 11, 2016 at 8:56 pm” contribution. Those graphs are also found in the earlier Post Ric references. Which is about Satellite malfunctions. different problems.

michael

I wonder how long it would have taken if it were from internal review only.

The reason is that it is *not* an 8 digit integer field, it *is* a nine character ASCII field.

This is a classic example of where data file format bugs come from. This kind of misunderstanding is exactly what bugs are made of. It also is a perfect example of why I HATE ASCII files. I detest reading them, and will only write them under duress of extreme torture and bodily harm.

yes 9, but 9.999.999 is only 7 integers so why not 8 if 7 is ok?

I haven’t checked the data yet but I think you’re wrong, Tony, and so am I. (That will teach me to just glance at the figure in an email before I use it!) I believe the correct answer is 7 digits, without any commas. The presence of a comma would cause an error if read with Fortran’s I7 format and if read in free format, as READ (1,*) we’d end up with three values … 9, then 999, then 999 again.

@jdmcl;

I saw that but did not mention it. When you see commas used as thousands separators, you pray to the gods above that the file is semicolon delimited, or some such. Even still is would be a huge PITA to parse them out before doing the numeric conversion.

**************************************

If there were actually commas used as thousands separators in a comma delimited file, it would be a bit of programming ineptitude of galactic proportions. Thank mercy nobody did that.

**************************************

Yes I know, too many or too few field separators, and your data reads lose correspondence with your variable arrays from that point on. And that leads to a crash, an unexpected EOF, or a totally unintelligible data set, as you know.

Suppress the read error, reset to the beginning of the next line, carry on, and you get a mostly coherent data set with random blown station records sent on for further processing, and nobody is aware that something is amiss.

Pity me, then: I worked with ASCII files that were CREATED in Excel then CONVERTED to ASCII. I then had to either re-convert the ASCII to Excel, or beg for the original Excel.

Can you guess that this was government work?

So lets see if Nick and Zeke would like to apologize and say they were wrong

I am backing the original comment in the original discussion

kim April 1, 2016 at 11:46 am: Zeke and Nick not likely to be heard from again on this matter

…… crickets

“So lets see if Nick and Zeke would like to apologize and say they were wrong”

No, we were not wrong. The original claim of John McLean, on which Josh’s cartoon was based, was:

“1 – Files HadSST3-nh.dat and HadSST3-sh.dat are the wrong way around.

About 35% down web page https://crudata.uea.ac.uk/cru/data/temperature/ there’s a section for HadSST3. Click on the ‘NH’ label and you go to https://crudata.uea.ac.uk/cru/data/temperature/HadSST3-nh.dat, which has ‘nh’ in the file name. But based on the complete gridded dataset that data file is for the Southern Hemisphere, not the Northern. The two sets are swapped. The links to named files are correct but the content of those files is wrong, likely due to errors in the program that created these summary files from the SST3 gridded data.”

We both checked it. That claim was wrong. The two sets were not swapped. The contents of

https://crudata.uea.ac.uk/cru/data/temperature/HadSST3-nh.dat

and the SH version were correct, and have not been changed. The key link is

“But based on the complete gridded dataset…”

JM did a calc based on the ascii gridded set, interpreting the format as per the guide, got something different, and asserted that the .dat files were wrong. But they aren’t. His calc went wrong, not through his fault. The latitudes were listed in the opposite order to what was stated in the guide. HAD has corrected what made it go wrong.

The thing is that people commenting here mostly not only did not check themselves, but don’t know what the files being talked about are. The ascii grid output files which contained the error are obsolete. Hadley now produce a netCDF version which do not have these format issues, and are what serious researchers use. So JM’s claim

” the content of those files is wrong, likely due to errors in the program that created these summary files from the SST3 gridded data”

is wrong on two counts. The file content was not wrong, and there was no way that it would have been generated by someone reading the ascii files.

The issues (2 and 3) on observation counts (order and format overflow) were not disputed at any stage, and Hadley has corrected them. As JM said:

“The HadSST3 observation count problems won’t be used by many people, maybe I’m even the first if no-one else has hit the problems.”

“His calc went wrong, not through his fault. The latitudes were listed in the opposite order to what was stated in the guide.”

To clarify that, the grid of temperatures was at all stages in the correct order, as were anomaly listings. The error was in the latitude ordering of uncertainties and observation numbers.

“The contents of HadSST3-nh.dat and the SH version were correct, and have not been changed.”

Again, a clarification. The temperature data have not been changed. But based on Osborne’s comments, it seems that the percent coverage numbers on the CRU files may have been exchanged.

This is denial ^^

Nick said there was no problem, that John was wrong.

John was asking if there was a problem and asked others to check, Nick checked and seen nothing wrong, Hadley did see something wrong, ergo Nick should stay away from climate science

“John was asking if there was a problem and asked others to check, Nick checked “

And who else? Well, Vuk drew a graph of temperature. And then John McLean explained a small omission:

“Try looking at the coverage from (a) the HadSST3-nh.dat file and (b) calculated from the gridded data. (I didn’t make it clear enough that coverage was the issue when I emailed Bishop Hill.)”

Yup.

Nick, I said nothing about the temperature in those files being wrong. I could be as pig-headed as you and say that you only checked 50% of the file – the temperature part – and ASSUMED that the other part was correct.

Aren’t you lucky that I’m not pig-headed but will admit that I could have made my statement clearer. Of course if you’d come back to the blog post and checked for follow-up you would have seen that.

But in your position I might not have either.

I don’t think you have a lot to apologise for … other than being too hasty and failing to check COMPLETELY.

John,

“Nick, I said nothing about the temperature in those files being wrong”

Well, your first point was simply headed:

“Files HadSST3-nh.dat and HadSST3-sh.dat are the wrong way around.”

And they are SST files.

Nick, your excuse is weak and you know it. You would have seen that the files included coverage when accessed them to compare their temperature data with yours.

Watching (well, reading about) Nick jump thru the aft end of his alimentary canal with regards to “data integrity” is amusing, in fact, highly amusing to this retired CFO.

It’s only been corrected for 2015 onwards.

Cargo Cult Science. I thought WUWT readers might enjoy this from renown physicist by Richard P. Feynman — http://calteches.library.caltech.edu/51/2/CargoCult.pdf

Good read. “If you are doing an experiment you should report anything that you think might make it invalid”

Can someone list the times climate science has tried to invalidate itself.

Same can be said for Astrophysics, no attempts are made to invalidate experiments, I could give numerous examples.

The more expensive the research the less likely a negative result will be reported. Never mind that no one can afford to repeat the research, so scientists know no one else can afford to redo their work, like at the large Hadron Collider, the HIGGs Boson, never seen or detected gets a Nobel. All because of 2 extra photons detected and interpreted as a positive result. But their methods and such is off limits, CERN dont share.

So this work was never validated and verified by someone else and Nobels get handed out. Particle physics is also in a mess. When I seen the whole crowd all cheering the success, it was a disturbing image, there was no skepticism in that room.

You’re dead right! I would go so far as to observe that ALL the inexact branches of science are characterised by their domination by elites of ‘gurus’ or ‘doyens’ whose Olympian pronouncements are unchallengeable by mere mortals. Climate science is just the most prominent example.

The engineering sciences, being strictly empirical and frequently constrained by legal liability, are not fertile ground for dodgy ideas.

Even Einstein’s famous quip that one experiment could prove him wrong reveals him as either disingenuous or naive. Once a powerful Establishment has appeared, whose credibility depends on the maintenance of a certain paradigm, whole rafts of damaging experiments can be ignored, swept under the carpet, arbitrarily declared invalid, etc. Planck’s observation that science advances one funeral at a time is more accurate.

It must be remembered that Ideal Science is only conducted by ideal people. Unfortunately, scientists are real people, with real human failings.

I notice that the weekly update for 3.4 NINO was not done on Monday, as is usually the protocol….

Perhaps they’re “fixing” this data, too…..

http://www.bom.gov.au/climate/enso/monitoring/nino3_4.png

The Arctic and Antarctic ice data has also not been updated for the last few days… Perhaps more “fixing” is being done…

Who are these guys?

No wonder they work in public sector… They’d be fired for incompetence if they worked in the private sector.

It has been but for some reason doesn’t show on the page sometimes (caching?). For example, if I go to the ENSO page here I see the graph for last week showing a +1.25 anomaly. But if I click the graph which takes me to http://www.bom.gov.au/climate/enso/monitoring/nino3_4.png then I see the +1.15 anomaly more recent graph.

The file format remains the same from month to month, same fields, same process. Why — or more accurately, how — do these scientists invert entire gridded fields? I understand humans are fallible but good gosh almighty, the data comes in the same every month and simply needs to be compiled to get a grid result. Inversion seems an involved process to have happen.

The data for each month is just a lat-lon array, with one row (line) per latitude every 5°. You can order them with S Pole at the top, or N Pole. For the obs counts, they were using a different convention to temps.

Inversion will occur if they write the array out in the reverse order. The coverage for the wrong hemisphere probably appeared because of a subscript error. Easy mistakes to make in IT, just a few characters in the software.

– John McLean

..But why were they ” rewriting ” the software ?

..Attempted “Adjustments” Gone Wild ??

Marcus, the CRU software to create HadSST3-nh.dat and -sh.dat could have been modified when HadSST2 was replaced by HadSST3, but even that’s unlikely if the data format didn’t change. My suspicion is that the error was in the software for years. Maybe someone with more time than me can use the Wayback Machine to find old versions and test my hypothesis.

I think the Hadley Centre software is a different matter because (IIRC) the data for the number of observations has only been published since HadSST3 was released. Well, yes, that was a couple of years ago now, which the software bugs have been there for a while .. and no-one noticed either within Hadley or outside.

psst.

Nobody uses the *.dat files.

we all use the netcdf…. you know the STANDARD.

As an engineer I do a bid of ‘expert’ witness work, typically in civil litigation regarding insurance matters. One thing the lawyers have educated me in is the need to be credible as a witness and so make sure of your facts and do not over reach in your narrative. Getting stuff just plain wrong and having to ‘correct’ it with a second report or, heaven forbid, in the the witness box under cross examination is just a disaster and a ‘free kick’ to ‘the other side’.

I just roll my eyes when I hear about these suposedly top notch research centres such as CRU, Hadley, NOAA, GISS etc have to ‘adjust’ their data every now and again and often for the most basic , asswipe type of mistakes like what this one appears to be.

As I said, whither their credibility as witnesses. These turkeys would not want to be put into a witness box you would think.

“…suposedly top notch research centres…”

They are top notch in facilities, computer hardware, location, benefits, pay, perks, PR representation…what am I missing?

“…what am I missing?” Agenda-driven data is always better data, especially when the audience is composed mainly of people who are cheering the agenda on. I haven’t seen a data “correction” yet that doesn’t improve the “warming” conclusion. Yet more convergence towards the collectivist goal.

…pensions…

And that is EXACTLY why they repeatedly refuse to engage in debate.

That’s unfair Perry, at least in this instance. The CRU fixed their code a couple of days after my information appeared here and on Bishop Hill blog. The Hadley Centre took a while longer due, I believe, to the team leader, John Kennedy, being out of the office. Kennedy’s email to me, which I regard as private correspondence, was very civil and not at all defensive.

Nick ATTP and co are in denial mode lol. How ironic.

There was a problem with the data, Nick looked Zeke looked and seen no issue.

John did, Hadley did.

So the bottom line is Nick Zeke and ATTP didn’t know what they were looking at, this is obvious, as they should have spotted upside down observational data, instead a denier rant appeared about McClean.

Food for thought folks, Nick aint all that.. ATTP is a clueless regurgitation pretending to be a scientist. Activism and science are a poor mix, ideology and science are a poor mix. Facts, they are poor a poor mix.

“Nick ATTP and co”

Flunkies.

Andrew

Ahh the damage limitation by NIck and ATTP is funny, Nick you missed the issue, -cred mate. Now you are shedding your skin to avoid accepting you failed to see the issue when you looked, food for thought, not all your cracked up to be mate.

Ideology is a poor bedfellow for science, are you listening ATTP?

The sheer number of Nick’s posts show he is manic and trying desperately to limit damage. Too late Nick.

When I say sheer number I mean the posts on other blogs as well as this one. He’s been scurrying around since hadley thanked McClean

“sheer number”

You’ve almost caught up.

“to avoid accepting you failed to see the issue”

Would you like to explain the issue I failed to see?

Yep Nick I am trying to match your number of posts 😀

Cheer up, we are all wrong mate, I just like to point out that you were a bit bent on not seeing any errors because that is your disposition, the rest, is merely yanking your chain mate.

Next time try to be objective and you might see the problem. We’re all guilty of being a little shortsighted at times

I shall put my wooden spoon away so.

There is too much actual nastiness in this whole mess. Most of what I say is tongue in cheek, probably because I am clueless when it comes to climate science 😛

Mark, not to pick on you in particular but to illustrate how easy it is to make a mistake when typing … What’s the spelling of my surname?

– John M

Apologies John McLean. I think my brain someone how got fuddled between Mc and Mac and so but a C before L.

Duly noted.

John was great in those Die Hard movies.

Now if only he would perform the scientific rendition of dropping [pruned] off of a skyscraper, we’d be advancing science according to that sicko [pruned] of the UK green party.

well done to both parties concerned in sorting out this issue . the people at the hadley centre are certainly doing their best to restore reputation after the severe battering uk climatologists took due to phil jones attitude to data sharing.

unfortunately i believe it is much ado about nothing. all these “data” sets are nothing of the sort. they are estimated products . the anomaly map at the top of the page is a long way from reality .

well worth having a read of these to get an understanding of how these historical “data sets” are compiled.

http://www.metoffice.gov.uk/hadobs/hadisst/HadISST_paper.pdf

http://www.metoffice.gov.uk/hadobs/hadsst3/

I agree that the datasets are not accurate but there’s no reason why you can’t have a dataset of “best estimates” and make that fact clear. This isn’t made clear about CRUTEM4, HadSST3 and HadCRUT4, nor about any of the other temperatures datasets, from the likes of GISS.

You can have a dataset of data. You can have an estimate set of estimates. You cannot have a dataset of estimates.

The data are not accurate, though they could be, if those assigned to collect and analyze them really cared.

While I’m not singling out this particular problem or dataset, it is symptomatic of government run, funded and/or influenced datasets. When we first set out to deceive, oh the tangled web we weave. You can see this by the continuous adjustments that have to be made.

I don’t trust any of the climate datasets to reflect real world conditions. They are now simply political tools being used as a means to an end. It is a shame as some hard working individuals originally designed these datasets and they were quite useful at one time.

Richard , it’s not that simple. Someone once said if it’s a choice of a conspiracy or a stuff-up (i.e. error) most of the time it’s the latter. The real problem is that the software used by Hadley and the CRU to create these files was never verified and in the case of the observation counts, no-one looked at the data.

Temperature data adjustments are another issue entirely. A thermometer always measure the temperature of the surrounding micro-environment. If an observation station is moved then it’s regarded as desirable to adjust the old data to the values that theoretically at least would have been measured at the new location. This is the only way to create a long-term record for that location. Several different methods are used for adjustment but whether any of them is 100% accurate is very uncertain.

jdmcl

Not quite. If, as you say, you can “adjust the old data to the values that theoretically at least would have been measured at the new location”, why do you need to “measure” in the first place? Wouldn’t a “model” be easier, better, faster, more accurate, less embarrassing, predictable, more explainable, better at predicting the future?

Some time ya just gotta admit stuff changed and you don’t have a complete record. It becomes part of the risk (aka error bars).

The problem is government wants confirmation of CAGW. Money is available for those who would help them reach this goal. The work that is produced is not widely available for scrutiny to look for errors to improve upon the work.

The rally cry seems to be “why should I show you my work when your just going to try to find something wrong with it”. When the government only pays for a desired result, that is the result you will get.

HadSST3 now updated to March 2016: http://www.metoffice.gov.uk/hadobs/hadsst3/

It was the warmest March on record for HadSST3 and up slightly from February. The only change I can find from the previous global HadSST3 data set released last month is a slight adjustment to the February 2016 figure; up from 0.604 to 0.611.

I find it interesting that they talk about corrections Reassessing bias corrections since 1850. There is no data back to 1850, well almost none, it’s all made up for the SH. How can you correct for bias with no data for most of your record for much of the oceans

hmmm

http://www.metoffice.gov.uk/hadobs/hadsst3/part_1_figinline.pdf

“How can you correct for bias with no data for most of your record for much of the oceans”

Well, they don’t use ALL the unicorn farts for renewable energy. They obviously have some left over for magic calculations.

Mark,

There were certainly fewer samples and spatial coverage was less, but data go back to 1850 in both the southern and northern hemisphere. This is stated in the COBE SST2 which states that it contains SST and sea ice data from 1850/51 for 89.5N – 89.5S, 0.5E – 359.5E: http://www.esrl.noaa.gov/psd/data/gridded/data.cobe2.html#spat

As the link you provided explains, fewer samples and coverage in the early part of the record result in this period having wider error margins than the more modern records.

Climate science is a special case, in which it is not possible to rerun the experiment. Yesterday happened, never to be repeated. Today is happening, never to be repeated. Tomorrow will happen, never to be repeated. If the data taken yesterday were inaccurate, or some data were missing, those data are forever inaccurate or missing. That is also true for today’s data. It need not be true, however, for tomorrow’s data.

In cases in which the experiment cannot be repeated when measurement issues are detected, data accuracy and data integrity are crucial. Data “adjustment” results in estimates of what the data might have been, but not in good data; and, not necessarily in good estimates. In current climate science, we do not have measurements of temperature anomalies, but rather estimates of those anomalies presented to two or three decimal place pseudo-“precision”.

“The first principle is that you must not fool yourself and you are the easiest person to fool.”

Richard P. Feynman

Yes, adjusting 1850 or 1880 several times between 2001 and 2015 is not scientific.

There appears to be a case of revisionism, making past records and data match the models

Models could not produce warming of MWP – A paper was solicited and published, disappearing the MWP (trying to) but failed, there is plenty of supporting data from the NH and little to none for the SH and tropics, meaning “we cant say it was global” but a certain hockeystick paper said it was not at all, without foundation, given those who wanted to believe assessed the statistics as useless beyond 400 years, and obviously not reliable up to 400 years but they used a get out clause “but he could be right”, an unsubstantiated comment, with no place in a scientific debate!

Models could not deal with the warming and cooling in the mid 20th century, and if you look at NASA’s current data, those events have been wiped out on the record.

For example take Iceland in the 70s, they had a difficult time, recorded in records, there to read, if was so cold, NASA changed that history, apparently reality is irrelevant to NASA alterations to data for countries.

So Iceland NO! you didn’t suffer in the 70s because Hansen revised your temp record therefor any claim that the period was bad for Iceland is denial.

As you say, if yesterday’s data is not good enough, you cant go back and change it to make it good, yet NASA NOAA and a host of other revisionists have done just that.

Oh and lets not forget the fudging of aerosols on past data too to make models do what the temperature did.

You can get models to do what you want all you need to do is invent reasons for the adjustments. Given it is the climate science “vague past” no one can prove them wrong really, unless someone goes back in time, more shot to nothing science.

Mark,

Where a known bias, whether warming or cooling, in a temperature data series is identified, how would you suggest it be dealt with?

DWR54

Depends on the meaning of “bias”

Example 1: an instrument is known to drift by x/unit time. Solution: Publish both the legacy & “adjusted” data, referring to “adjusted” data as pro forma (aka may be even more biased).

Example 2: interpretations are always based toward a warmer world. Solution: admit you really don’t have data.

When are the cAGW models going to be corrected , they don’t match measured data ?

…or, even “adjusted” data.

The self-appointed Brightest People in the Room ™ who are The Guardians of the Planet ™ also see themselves as our moral betters. But they consistently seem to be unable to use common sense when it comes to storing and publishing data that is the basis of their demands that the west must surrender to their collectivist demands of bigger government, less freedom and less prosperity. Because climate change!

For the record

Zeke Hausfather March 25, 2016 at 8:55 am I’m not finding any mix up between NH and SH data

Nick Stokes March 25, 2016 at 1:48 pm Yes, I can’t see any problem with NH and SH.

Nick Stokes March 25, 2016 at 4:52 pm m So who’s checking?

Steven Mosher March 25, 2016 at 12:39 pm Lets see the code the guy used to download the data.

Steven Mosher Mar 26, 2016 at 6:39 AM Some guy says he read the data and it was upside down..I am skeptical of his claim. I want to see his code.

kim April 1, 2016 at 11:56 am Hi moshe; there’s an update from Tim Osborn thanking McLean and correcting the record

John McLean March 31, 2016 at 3:59 am The CRU updated its files on March 30. just after the table and about 30% down the page, we find …“Correction issued 30 March 2016. The HadSST3 NH and SH files have been replaced. The temperature anomalies were correct but the values for the percent coverage of the hemispheres were previously incorrect. No correction yet from Hadley Centre, team leader John Kennedy is out of the office until April 6th.

Nick Stokes April 12, 2016 at 2:15 am the temperatures were at all stages correct. The issue were the coverage numbers, given in an inconsistent latitude order convention. I can’t imagine anyone who might have been seriously misled by that.

An apology to Nick,

He meant he is Australian like I am and all those numbers are upside down to us down here so they look normal.

“Steven Mosher Mar 26, 2016 at 6:39 AM Some guy says he read the data and it was upside down..I am skeptical of his claim. I want to see his code.

kim April 1, 2016 at 11:56 am Hi moshe; there’s an update from Tim Osborn thanking McLean and correcting the record”

The claim was the data was upside down.

I was skeptical ( for a reason .. none of you guys figured out the meaning behind the code I posted)

Turns out…. The data was not upside down.

Note..

nobody uses the .dat files.

unless they dont know shit about programming.

[Mr. Mosher, let me say this just once. You have become a condescending jerk. You post code, with no explanation as to what it is or why, then blast people when they don’t rise to your expectations. I’m done with you and your shenanigans – Anthony]

Steven, the ASCII version of the observation count file had its monthly gridded data written 90S to 90N instead of 90N to 90S. The temperature anomaly gridded data file is witten 90N to 90S. It’s a bit simplisitic to say that the data was upside down, which is why I describe it as I do.

You must be correct about people who use the .dat files knowing shit about programming. I only have 40 years of programming experience, but hey, what would I know? Personally I find NetCDF files very cumbersome and I find that some sites that provide climate data have easy-to-modify Fortran code. In the case of HadSST3 data the extra precision of NetCDF means two-fifths of nothing.

It might be worth clarifying that the -nh.dat etc files are actually the files of hemisphere averages. The grid files, which would normally be accessed via netCDF, in ascii form have names like HadSST.3.1.1.0.median.txt.

I think the merit of netCDF is not so much the precision, but the built-in structure and documentation, which should avoid problems like this discrepancy.

I wish everything would be plotted with 95% confidence intervals. Then I can see how often those error bars are exceeded, which would remind us that this stuff doesn’t follow “normal” statistics.

The bottom line is John noticed the issue, never claimed it had significance relating to any scientific findings ect. He openly said he thinks he has seen an issue, and asked others to look.

This was entirely normal in the world of science, yet, all of a sudden Nick and others became skeptics, if only they would don that cloak 24×7 😀

Instead Nick fueled the “denier” screamers delusions as did the others like ATTP, who I cant take seriously because he uses the word denier, I cant take anyone serious when they utter that term, it’s 2015 for god’s sake

Nice to see Nick Stokes on form https://wattsupwiththat.com/2014/03/03/monday-mirthiness-the-stokes-defense/

I love it, Josh. I must admit that I wasn’t familiar with Stokes until he stepped into this issue about flaws in the data.

“inconsistent latitude order convention”

How are the adjustments that are done to the data impacted by such?

No adjustments were required to observation counts, which was the file with two problems. No adjustment was required to the coverage data in HadSST3-nh.dat and .sh.dat because coverage is determined according to whether the temperature anomaly for that grid cell is present (i.e. not ‘missing data’). It’s only temperature recordings that are adjusted.

I’m continually amazed at the number of reports/studies/etc that have been shown to use the initial data incorrectly, yet the result isn’t changed when the data is used correctly.

How is that possible?

Just see what happens when they make the data available? Recall: Why should I make the data available to you, when your aim is to try and find something wrong with it.

lol anomaly difference (C) from 1961-90. The chart average is based on a 29 year cherry pick? Which in reality time wise is too short of period to decide what average means let alone to make policy from.

DWR54@April 12, 2016 at 9:11 am

“Mark,

Where a known bias, whether warming or cooling, in a temperature data series is identified, how would you suggest it be dealt with?”

How is the bias known?

When did it begin?

Was it a step change of progressive?

What is the nature of the bias: instrument choice; instrument calibration; enclosure; siting, etc?

Simple answer: Eliminate the bias going forward. Remove the biased data from the analysis over the period of the bias, if that can be determined. Otherwise, completely eliminate that data from the analysis..

“How is the bias known?

When did it begin?”

A classic case is the ship-buoy bias, which has got Lamar Smith so upwrought. Surface measurements of SST have long been from ships. But over the last thirty years, drifting buoys have been used to an increasing extent, with gains in coverage and consistency of instrumentation. But do they give the same results? You can tell that when a ship and a buoy take readings in nearly the same place and time. With the first few years data, there was no statistically significant difference. Not enough matches. But by the time of Kennedy’s paper in 2011, there were many millions of data points, and a small but undeniable discrepancy of about 0.1°C (some spatial variation) between ship and buoy. By the time of Karl 2015, there was even more data. So there is a mixture of ship and buoy data, with this slight difference. What to do? You have to adjust. Otherwise the estimate of average temperature varies with the percentage of buoys in the mix.

Like the adjustments to the Argo data?

SST data has been collected via water sampling in wooden, canvas and rubber buckets, hull-mounted sensors, thermometers on engine water intakes and via drifting buoys, fixed buoys and recently Argo buoys. For the first five methods no data was recorded to say which method was used, so there’s a bunch of assumptions and estimates that get used.. Further, there’s adjustments for the height of the side of the ship to be taken into account (and usually cooling is assumed despite the possibility that the air might be warmer than the ocean). Then there’s the unanswerable question of whether the bucket was placed in the shade on deck or in the sun. There’s even the question of whether the sampling of the water was made at night and the light or candle used to read the thermometer caused warming either directly or the delay to get into the light altered the temperature indicated on the thermometer.

Adjusting for all this is a black art and I doubt very much that it’s all accurate.

A significance of 0.1. 😉

Perhaps, but since the buoys are purpose-built and, presumably, calibrated before deployment, one would think that the adjustment should have been toward the buoy data.

Can’t wait to see NOAA’s fudge mix or is it too sensitive to provide ELECTED officials ? .

I can`t see why this is such a big deal. This is science in action.

Issue identified, Issue resolved, all in the public domain with reasons etc. To me as an engineer this is just good science / QA in action.

Arguably, “QA in action” would have caught the error before publication. Relying on an unassociated third party to catch the error and bring it to your attention is hardly QA on your part.