By Larry Kummer. From the Fabius Maximus website.

Summary: This post looks at an often-asked question about climate science: how accurate are its findings? That is a key factor when we make decisions involving trillions of dollars and affecting billions of people. Specifically, it examines the oceans’ heat content, a vital metric since the oceans absorb 90%+ of global warming. How accurate are those numbers? The error bars look oddly small, especially compared to those of sea surface temperatures. This also shows how work from the frontiers of climate science can provide problematic evidence for policy action. Different fields have different standards of evidence.

“The spatial pattern of ocean heat content change is the appropriate metric to assess climate system heat changes including global warming.”

Climate scientist Roger Pielke Sr. (source).

Warming of the World Ocean

NOAA website’s current graph of Yearly Vertically Averaged Temperature Anomaly 0-2000 meters with error bars (±2*S.E.). Very tiny error bars. Reference period is 1955–2006.


Posts at the FM website report the findings of the peer-reviewed literature and major climate agencies, and compare them with what we get from journalists and activists (of Left and Right). This post does something different. It looks at some research on the frontiers of climate science, and its error bars. The subject is “World ocean heat content and thermosteric sea level change (0–2000 m), 1955–2010” by Sydney Levitus et al, Geophysical Research Letters, 28 May 2012. Also see his presentation. The bottom line: from 1955-2010 the upper 700 meters of the World Ocean warmed (volume mean warming) by 0.18°C (Abraham 2013 says that it warmed by ~0.2°C during 1970-2012). The upper 2,000m warmed by 0.09°C, which “accounts for approximately 93% of the warming of the earth system that has occurred since 1955.”

Levitus 2012 puts that in perspective by giving two illustrations. First…

“If all the heat stored in the world ocean since 1955 was instantly transferred to the lowest 10 km (5 miles) of the atmosphere, this part of the atmosphere would warm by ~65°F. This of course will not happen {it’s just an illustration}.”

World ocean heat content (10^22 Joules) for the 0–2000 m (red) and 700–2000 m (black) layers based on running pentadal (five-year) analyses. Reference period is 1955–2006. Each estimate is the midpoint of the period. The vertical bars represent ±2*S.E.

Second, they show this graph to put that 93% of total warming in perspective with the other 7%. …

A large question about confidence

These are impressive graphs of compelling data. How accurate are these numbers? Uncertainty is a complex subject because there are many kinds of errors. Descriptions of errors in studies are seldom explicit about the factors included in their calculation.

Levitus says the warming in the top 2,000 meters of the world ocean during 1955-2010 was 0.09°C ±0.007°C (±2 S.E.). That translates to 24.0 ±1.9 x 10^22 Joules (±2 S.E.). That margin of error is reassuring: an order of magnitude smaller than the temperature change. But is that plausible for measurements of such a large area over 55 years?
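As a quick consistency check on those figures, here is a minimal Python sketch. It uses only the four numbers quoted above; the variable names and the "implied heat capacity" (the ratio of the two central values) are illustrative assumptions, not something taken from the paper.

```python
# Figures quoted above from Levitus et al. (2012), 0-2000 m layer, 1955-2010.
warming_c = 0.09        # volume-mean warming, deg C
warming_err_c = 0.007   # quoted +/- 2 S.E., deg C
heat_j = 24.0e22        # heat content change, Joules
heat_err_j = 1.9e22     # quoted +/- 2 S.E., Joules

# Relative size of each error bar compared with its central value.
rel_temp = warming_err_c / warming_c
rel_heat = heat_err_j / heat_j

# ASSUMPTION (illustrative): a single effective heat capacity links the two
# central values, so the temperature error can be converted to Joules.
implied_capacity = heat_j / warming_c            # Joules per deg C
converted_err_j = warming_err_c * implied_capacity

print(f"relative error, temperature: {rel_temp:.1%}")
print(f"relative error, heat content: {rel_heat:.1%}")
print(f"0.007 C expressed in Joules: {converted_err_j:.2e}")
```

Both error bars come out at just under 8% of the quantity they describe, so the temperature and Joule figures are at least consistent with each other. Whether an 8% error is realistic is the separate question this post asks.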

Abraham 2013 lists the sources of error in detail. It’s a long list, including the range of technology used (the ARGO data became reliable only in 2005), the vast area of the ocean (in three dimensions), and its complex spatial distribution of warming both vertically and horizontally (e.g., the warming in the various oceans ranges from 0.04 to 0.19°C).

We can compare these error bars with those for the sea surface temperature (SST) of the Nino3.4 region of the Pacific, which covers only two dimensions of a smaller area (6.2 million sq km, 2% of the world ocean’s area). The uncertainty is ±0.3°C (see the next section for details). That’s roughly 40 times (between one and two orders of magnitude) greater than the margin of error given for the warming of the top 2,000 meters of the world ocean: ±0.007°C (±2 S.E.). Hence the tiny error bars in the graph at the top of this post.

If the margin of error is of the same magnitude as that given below for NINO3.4 SST, then it is more than three times larger than the ocean temperature change of 1955-2010 for the upper 2,000 m. How do climate scientists explain this? I cannot find anything in the literature. The published margin seems unlikely to realistically describe the uncertainty in these estimates.
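To put the comparison in numbers, the short Python sketch below divides the quoted Nino3.4 uncertainty by the quoted ocean-heat-content error bar and by the reported warming. Only the three quoted figures are used; the variable names are illustrative.

```python
import math

nino34_err_c = 0.3      # quoted typical uncertainty of Nino3.4 SST, deg C
ohc_err_c = 0.007       # quoted +/- 2 S.E. for 0-2000 m warming, deg C
ohc_warming_c = 0.09    # quoted 0-2000 m warming, 1955-2010, deg C

# How much larger is the SST uncertainty than the ocean-warming error bar?
ratio_of_errors = nino34_err_c / ohc_err_c
orders_of_magnitude = math.log10(ratio_of_errors)

# How large would a 0.3 C uncertainty be relative to the reported warming?
ratio_to_signal = nino34_err_c / ohc_warming_c

print(f"SST error / OHC error: {ratio_of_errors:.0f}x "
      f"({orders_of_magnitude:.1f} orders of magnitude)")
print(f"SST error / reported warming: {ratio_to_signal:.1f}x")
```

The two quantities are not strictly like-for-like (a regional surface average versus a global volume mean), so the ratios describe the size of the gap, not its cause.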

From Australia’s Bureau of Meteorology.

Compare with the uncertainty of SST in the Niño3.4 region

Here NOAA’s Anthony Barnston explains the measurement uncertainty of the sea surface temperature (SST) of the Pacific’s Nino3.4 region. This is a comment to their “December El Niño update”. Barnston is Chief Forecaster of Climate and ENSO Forecasting at Columbia’s International Research Institute for Climate and Society. He does not say if the ±0.3C accuracy is for current or historic data (NOAA’s record of the Oceanic Niño Index (based on the Niño3.4 region SST) goes back to 1950). Above I conservatively assumed it is for historic data (i.e., current data has smaller errors). Red emphasis added.

“The accuracy for a single SST-measuring thermometer is on the order of 0.1C. … We’re trying to measure the Nino3.4 region, which extends over an enormous area. There are vast portions of that area where no measurements are taken directly (called in-situ). The uncertainty comes about because of these holes in coverage.

“Satellite measurements help tremendously with this problem. But they are not as reliable as in-situ measurements, because they are indirect (remote sensed) measurements. We’ve come a long way with them, but there are still biases that vary in space and from one day to another, and are partially unpredictable. These can cause errors of over a full degree in some cases. We hope that these errors cancel one another out, but it’s not always the case, because they are sometimes non-random, and large areas have the same direction of error (no cancellation).

“Because of this problem of having large portions of the Nino3.4 area not measured directly, and relying on very helpful but far-from-perfect satellite measurements, the SST in the Nino3.4 region has a typical uncertainty of 0.3C or even more sometimes.

“That’s part of why the ERSSv4 and the OISSTv2 SST data sets, the two most commonly used ones in this country, can disagree by several tenths of a degree. So, while the accuracy of a single thermometer may be a tenth or a hundredth of a degree, the accuracy of our estimates of the entire Nino3.4 region is only about plus or minus 0.3C.”
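Barnston’s point about errors that fail to cancel can be illustrated with a standard textbook formula: the standard error of the mean of n measurements whose errors share a common pairwise correlation. The Python sketch below is only an illustration. The per-measurement error of 1.0°C and the correlation of 0.1 are hypothetical values chosen for the example, not figures from NOAA.

```python
import math

def std_error_of_mean(sigma, n, rho):
    """Standard error of the mean of n measurements, each with error
    standard deviation sigma and a common pairwise error correlation rho
    (assumed 0 <= rho <= 1)."""
    return sigma * math.sqrt((1.0 + (n - 1) * rho) / n)

sigma = 1.0  # HYPOTHETICAL per-measurement error, deg C
for n in (10, 100, 1000, 10000):
    independent = std_error_of_mean(sigma, n, rho=0.0)  # errors cancel
    correlated = std_error_of_mean(sigma, n, rho=0.1)   # partial cancellation
    print(f"n={n:>6}: independent {independent:.3f} C, "
          f"correlated {correlated:.3f} C")
```

With independent errors the uncertainty of the average keeps shrinking as 1/sqrt(n). With even a modest shared bias it levels off near sigma*sqrt(rho), no matter how many measurements are added.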

Examples showing careful treatment of uncertainties by scientists

The above does not imply that this is a pervasive problem. Climate scientists often provide clear statements of uncertainty for their conclusions, such as in these four examples.

(1) Explicit statements about their level of confidence

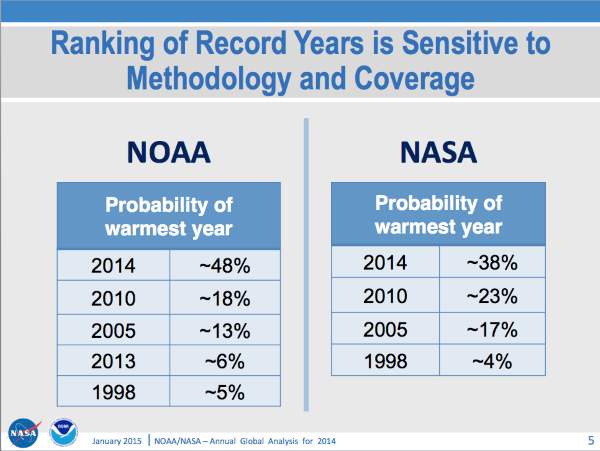

Activists — and their journalist fans — usually report the findings of climate science as certainties. Scientists usually speak in more nuanced terms. NOAA, NASA, and the IPCC routinely qualify their confidence. For example, the IPCC’s confidence statements are quite modest.

NOAA 2014 State of the Climate

(2) Was 2014 the hottest year on record?

NOAA calculated the margin for error of the 2014 average surface atmosphere temperature: +0.69°C ± 0.09 (+1.24°F ± 0.16). The increase over the previous record (0.04°C) is less than the margin of error (±0.09°C). That gives 2014 a probability of 48% of being the warmest of the 135 years on record, and 90.4% of being among the five warmest years. NASA came to similar conclusions. This is not a finding from a frontier of climate science, but among the most publicized.
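A rough way to see why a 0.04°C lead does not settle the question is to compare just two years under simple assumptions. The Python sketch below is not NOAA’s method. It assumes the ±0.09°C margin is a 95% interval of a Gaussian error, that both years have the same error, and that the two errors are independent.

```python
import math

def normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

margin_c = 0.09   # quoted margin of error, deg C
lead_c = 0.04     # quoted lead of 2014 over the previous record, deg C

# ASSUMPTION: the margin is a 95% interval, so sigma = margin / 1.96.
sigma = margin_c / 1.96
# ASSUMPTION: both years have independent errors with the same sigma.
sigma_diff = math.sqrt(2.0) * sigma

# Probability that 2014 was truly warmer than the single previous record year.
p_warmer = normal_cdf(lead_c / sigma_diff)
print(f"P(2014 warmer than previous record year) = {p_warmer:.2f}")
```

Under these assumptions the two-year comparison gives about 0.73. The 48% quoted above is lower, presumably because 2014 has to beat every other year on record, several of which lie within the margin of error.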

(3) The warmest decades of the past millennium

Scientists use proxies to estimate the weather before the instrument era. Tree rings are a rich source of information: aka dendrochronology (see Wikipedia and this website by Prof Grissino-Mayer at U TN). The latest study is “Last millennium northern hemisphere summer temperatures from tree rings: Part I: The long term context” by Rob Wilson et al in Quaternary Science Reviews, in press.

“1161-1170 is the 3rd warmest decade in the reconstruction followed by 1946-1955 (2nd) and 1994-2003 (1st). It should be noted that these three decades cannot be statistically distinguished when uncertainty estimates are taken into account. Following 2003, 1168 is the 2nd warmest year, although caution is advised regarding the inter-annual fidelity of the reconstruction…”

(4) Finding anthropogenic signals in extreme weather statistics

“Need for Caution in Interpreting Extreme Weather Statistics” by Prashant D. Sardeshmukh et al, Journal of Climate, December 2015 — Abstract…

“Given the reality of anthropogenic global warming, it is tempting to seek an anthropogenic component in any recent change in the statistics of extreme weather. This paper cautions that such efforts may, however, lead to wrong conclusions if the distinctively skewed and heavy-tailed aspects of the probability distributions of daily weather anomalies are ignored or misrepresented. Departures of several standard deviations from the mean, although rare, are far more common in such a distinctively non-Gaussian world than they are in a Gaussian world. This further complicates the problem of detecting changes in tail probabilities from historical records of limited length and accuracy. …”
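The abstract’s claim about large departures can be made concrete with a small comparison. The Python sketch below compares a Gaussian with a Laplace (double-exponential) distribution of the same variance. The Laplace distribution is only a convenient stand-in with heavier tails and a closed-form tail probability. It is an assumption for illustration, not the distribution used in the paper.

```python
import math

def gaussian_two_sided_tail(k):
    """P(|X| > k standard deviations) for a Gaussian."""
    return math.erfc(k / math.sqrt(2.0))

def laplace_two_sided_tail(k):
    """P(|X| > k standard deviations) for a Laplace distribution.
    With unit variance the scale is b = 1/sqrt(2), and
    P(|X| > k) = exp(-k / b)."""
    return math.exp(-math.sqrt(2.0) * k)

for k in (3, 4, 5):
    g = gaussian_two_sided_tail(k)
    lap = laplace_two_sided_tail(k)
    print(f"{k} sigma: Gaussian {g:.2e}, Laplace {lap:.2e}, ratio {lap / g:.0f}x")
```

Even with this mild departure from Gaussian behavior, multi-sigma events become many times more frequent, which is why short records make changes in tail probabilities hard to detect.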

For More Information

For more information about this vital issue see The keys to understanding climate change and My posts about climate change. Also here are some papers about warming of the oceans…

- “The annual variation in the global heat balance of the Earth”, Ellis et al, Journal of Geophysical Research, 20 April 1978.

- “Heat storage within the Earth system”, R.A. Pielke Sr., BAMS, March 2003.

- “On the accuracy of North Atlantic temperature and heat storage fields from Argo”, R. E. Hadfield et al, Journal of Geophysical Research, January 2007.

- “A broader view of the role of humans in the climate system”, R.A. Pielke Sr., Physics Today, November 2008.

- “World ocean heat content and thermosteric sea level change (0–2000 m), 1955–2010” by Sydney Levitus et al, Geophysical Research Letters, 28 May 2012.

- “A review of global ocean temperature observations: Implications for ocean heat content estimates and climate change”, J.P. Abraham et al, Reviews of Geophysics, September 2013.

- “An apparent hiatus in global warming?”, Kevin E. Trenberth and John T. Fasullo, Earth’s Future, 5 December 2013. Also see Trenberth’s “Has Global Warming Stalled” at The Conversation, 23 May 2014.

- “Sixteen years into the mysterious ‘global-warming hiatus’, scientists are piecing together an explanation.”, Jeff Tollefson, Nature, 15 January 2014. Well-written news feature; not research.

A new study about global warming, mentioned at WUWT this morning: “Industrial-era global ocean heat uptake doubles in recent decades” by Peter Gleckler et al, Nature Climate Change, Jan 2016. Again, little attention is paid to the uncertainty of the data. It’s a fascinating study, with several aspects worth discussing.

Also see “Study: Man-Made Heat Put in Oceans Has Doubled Since 1997” by AP’s Seth Borenstein.

There is a well known bias in science for positive results. If a scientist spends years measuring ocean temperature and then states that the error bars are greater than any change in a person’s lifetime then people will ask “what the hell are you wasting your time for.” There is a real motivation to reduce the error estimates so a positive statement about trend can be made. And even more of a motivation to show a positive trend so the scientist will receive accolades from the AGW establishment.

Nice job, having skimmed the article I come away with a wine induced snicker.

To think we knew the temperature of the ocean or its heat content accurate to 0.1 C in 1955.

Twain comes to mind, something about what amazing results one can imagine from so little information.

However you have touched upon the idiocy of Climatology.

Such certainty of accuracy, precision without foundation.

Worshipping the noise of the ethers.

The overview of earth systems and what changes may have occurred is about what we can infer from polar ice extent.

A guess.

But whatever the Team IPCC ™ guess might be this week, one can be sure it is “accurate ” to two decimal places and unprecedented in the chosen record.

Lies, damn lies and statistics

Of course clearly stating the error bars and uncertainties would have prevented Climate Castrology from ever mining a single government grant

John,

I hate to think that was the motive of all the scientists doing research in ocean heat content.

On the other hand, they record the data using thermometers — but also never show it in degrees. The changes in degrees are small, which would raise questions about the significance of this finding (especially before Argo, circa 2005). Perhaps that’s a smoking gun.

The good news is that to understand this work we can ignore the mental states of the scientists involved, which is always a good idea. Motives don’t matter (e.g., some excellent surgeons enjoy cutting people).

As a layman, who nonetheless reads enough sometimes to get the gist of things, if not the details, I have a problem – how to reconcile this statement by Noaa’s Barnston, taken from the article:

with this statement by Dr. Roy Spencer, taken from an article by Paul Homewood ( originally in a post by Spencer, October, 2014), “Roy Spencer On Satellite v Surface Temperature Data”, August 30, 2015:

https://notalotofpeopleknowthat.wordpress.com/2015/08/30/roy-spencer-on-satellite-v-surface-temperature-data/

Hopefully someone can help me out. I have ideas, but I’d rather hear from those with a lot more expertise than I have.

Ken,

People tend to focus on the instruments used to measure climate, rather than the often far larger and more complex issues affecting data accuracy. There are many of those for satellites, giving us data from varying sets of moving objects in ever-changing orbits high above the Earth.

Thank you for your response and, of course, your article. Perhaps those two statements are about Apples and oranges to a degree, the way you put it, but aren’t the adjustments to the UAH data set, as an example, for things like drift, more manageable an issue for physics and math, than adjustments for thermometers located in a corrupted environment, unlike space, and for the huge areas of the globe that have no thermometers at all? Maybe Dr. Spencer’s statement that I posted, does not directly address your particular “adjustment” context, but I’m certain he would challenge Barnston’s assessment of the relative accuracy of surface and satellite measurements.

Ken,

Here Steven Mosher (of the BEST project) lists the steps to process the data from satellite MSU’s to produce the RSS and UAH lower troposphere temperature data.

http://wattsupwiththat.com/2016/01/19/20-false-representations-in-one-10-minute-video/comment-page-1/#comment-2124078

Editor:

Steve Mosher’s list of satellite process is inaccurate with many of his allusions pulled from other posts, out of context.

Steve lists radiosonde data as being bad because prior attempts to mash all radiosonde data together failed. Comparing mashed radiosonde data to their own foolish concepts of modeled radiosonde data is not usable information.

The honest takeaway is that not all radiosonde data is well collected or accurate; yet some is.

Within those various links Steve provides are admissions that subsets of radiosonde data are accurate.

Steve also states a comparison Mears did that identified UAH as in error, forgetting to mention that UAH corrected the algorithm and both RSS and UAH are in agreement now.

Perhaps, if you really want to know how satellites are used to provide temperature reconstructions you could ask Mears, Christy or Spencer? Taking bulls___ from Steve Mosher as if it is truly in the interest of good science is just wrong.

Steve knows his stuff, and perhaps could give a reasonably accurate description of satellite processing, but Steve is all about the land based temperature record

– completely ignoring the rampant activist data adjustments,

– missing data recreated from stations 1200Km (745.7 miles) away,

– poorly sited and maintained temperature stations,

– adjustments of multiple degrees, not tenths, or hundredths,

– the fact that many land based stations are thermistor driven, not thermometers, and require similar algorithms as the satellite!

ATheoK,

We will know more about the sat data when the RSS or UAH group speaks for themselves — which I suspect they will soon.

As for the surface atmosphere temperature data, I think there are far larger issues than those you mention.

(1) There is no Global SurfaceStations project. Focusing on the 7% of the world that is the USA misses the bigger point. How many stations in poor nations are CRN cat 4 (error > 2 degrees) or cat 5 (> 5 degrees)? How many leaders of poor nations care about that? I wouldn’t. If we want good data, we should pay for it.

(2) The marine air data. It is often described in the literature as of lower quality than the land data. Holes in it are filled by proxies, such as satellite and SST data. Which is fine, but that probably does not give 4 significant digit data with good accuracy.

My understanding is that SST measurements from satellites are taken in the infrared, whereas what Spencer is talking about is taken from the radiation of oxygen atoms, in a different wavelength. Sea Surface: https://podaac.jpl.nasa.gov/SeaSurfaceTemperature

Troposphere: https://en.wikipedia.org/wiki/Satellite_temperature_measurements.

I read Mr. Mosher’s comments, and others’ on the page, and appreciate what Quinn pointed out – excellent clarification on something I wondered about – thanks.

There are complex adjustments that need to be made in Satellite data, I get that, but, at the risk of appearing to be a difficult student stubbornly questioning his professor( which I admit to having done at times in the distant past of my college days), it seems to me that a combination of a pristine environment from which those measurements are made, state of the art instruments, and far superior coverage would make that data , even if imperfect, intrinsically superior to any surface data as an indicator of global trends. And the adjustments seem to be free from a discernible bias. Dr. Spencer wrote in his blog:

http://www.drroyspencer.com/2015/03/even-though-warming-has-stopped-it-keeps-getting-worse/

Many thanks for the input. If I ended up seeming argumentative, nonetheless you have given me, and others like me, additional perspective from which to view the subject.

There is [no] way, even with the Argo network, to have any precision of less than + or - 1 C. The variables will overwhelm any measurement system. They take measurements of the ocean at random times and random places and somehow claim accuracy and precision that daily calibrated precision thermometers can’t achieve.

Here is what a reference thermometer can do “An example of a reference thermometer used to check others to industrial standards would be a platinum resistance thermometer with a digital display to 0.1 °C (its precision) which has been calibrated at 5 points against national standards (−18, 0, 40, 70, 100 °C) and which is certified to an accuracy of ±0.2 °C.”

Maybe climate scientists need to read this high school lesson. http://www.digipac.ca/chemical/sigfigs/accuracy_and_precision.htm

Having worked in electronics and computers for a lifetime, I find that anyone claiming great precision from equipment that is not maintained or calibrated regularly, and who to top that off uses measurements that are not taken under very controlled conditions, generally ends up with a bucket of spit. Argo is better than bucket or intake measurements, but it will take decades of such measurements to make sense, and a whole lot more fixed measurements, to have the slightest clue as to what the true heat content of the oceans might be.

Then they wonder why their so called alarm is not listen to, why on God greens earth would anyone listen to those educated idiots, other than con men and politician who want to extract money from our pockets for no other reason than they want our money, after all as Bill Clinton put the people are to stupid to know how to spend their money. OH I forgot it the con men and politicians who hired them and is paying their salaries, the unfortunate part is they are using our money for this junk.

Currently, “God greens” God’s green Earth with CO2, despite cooling.

Levitus apparently claims an uncertainty of ±0.007°C for the estimated warming from 1955 to 2010 of 0.09°C.

I can’t see how such accuracy is possible.

Sure, with instruments that have been used on ARGO floats the temperature sensor has an accuracy of ±0.002°C. But that is not the measurement error.

The temperature sensor on the float is above sea level when the float is at the surface, so the surface temperature must be inferred from the temperature measurements at depth as the float approaches the surface. This means that the temperature measurement accuracy is dependent on the accuracy of the pressure sensor to estimate depth (at best ±2.4 metre) and on the temperature lapse rate at the depth of the measurement.

At best the surface temperature measurement error is ±0.05 C for the surface temperature and could be more than ±0.3 C at the depths of greatest lapse rate.

Moreover the sensitivity and the offset error of the pressure sensor vary over time, implying even greater measurement error for temperature at depth readings closer to 2000m depths.

A more uncertainty-realistic Levitus estimate of ocean warming may be 0.09°C ±0.5°C.

Here is my idea to test Argo network precision.

Take an Olympic diving pool, turn off all pumps and lights and heating. Wait 48 hours so the temperature is uniform thru the pool. Now take 2 Argo floats and sink into the water. After 8 hours, get the temperature for each. Pull each out of the water for 8 hours, then sink again for 8 hours. Get the temperature again. The precision would be at least the high/low difference. Any bets that all readings are within ±0.007°C?

http://images.remss.com/papers/rsspubs/gentemann_jgr_2014.pdf

Three way validation of MODIS and AMSR-E sea surface temperatures – Lists the many problems with the accuracy of Ocean surface temperatures and just what is being measured.

Biases were found to be -0.05C and -0.13C and standard deviations to be 0.48C and 0.58C for AMSR-E and MODIS, respectively. Using a three-way error analysis, individual standard deviations were determined to be 0.20C (in situ), 0.28C (AMSR-E), and 0.38C (MODIS).

Agreed Mark, well stated:

Except a quibble;

There is nothing wrong with that statement, except for some understanding of the issues, which are part of what you’re describing.

Temperature:

100°C & 212°F are based on water boiling at sea level.

0°C and 32°F are based on water freezing at sea level.

0°K is considered the temperature of absolute zero; a concept that hasn’t been easy to test.

Thermometers marked in Celsius and Fahrenheit require a viewer to ‘estimate’ temperature. Even the most meticulous near sighted temperature recorder with their nose touching the thermometer and level with the liquid level at best interpolates to one or two decimal places.

Thermometers showing a temperature reading level with the markings is still inaccurate; is the temperature 27.9°C or 28.1°C?

Someone, at some later time, ‘adjusting’ temperature records because of this issue is akin to pedophilia.

Thermometers and electronic devices are calibrated to this concept; exactly calibrating is the acceptance of alternative measure; e.g. electrical resistance of a platinum wire.

as you point out so well Mark, all of these different methods for measuring temperature require accurate calibration when installed and tested.

Anyone who uses a gas pump or elevator can view the inspection sheet for calibration and certification. Casual inspections are not valid certification process.

When properly certified, the accuracy of the equipment is given; e.g. .004°C, which represent the maximum accuracy of that component under ideal conditions; laboratory.

Just because an instrument is capable of recording at .004° accuracy does not mean that it ever does.

Ideally, a device is tested extensively under all conditions it may operate under and usually far worse.

e.g. Paint manufacturers paint multiple shingles with a paint sample. Some of the shingles are put outside, in the weather in full sun; other samples get to have researchers try many extremes on the sample.

On an individual device basis:

Each and every temperature reading device out there is subject to loss of accuracy. If one argo buoy temperature device drops in accuracy from .004°C to .01°C, then it is possible that all of the buoys should be assumed to be .01° accuracy.

Just because the electronics are capable of .004°C accuracy, it is very unlikely that a buoy splashing round in the ocean, collecting seaweed, bird dung or walrus scratchings and growing colonies of algae will ever read temperatures at that level of accuracy. Especially if the buoy is languishing at depth in a fresh eddy of whale urine.

For the aggregate:

Add the temperature readings from a device operating at .01°C to other devices, even those operating at .004°C accuracy, and the maximum resulting accuracy is not above .01°C for any results.

This is before tracking the data recording path and any possible errors in those handling processes; e.g. when data is transmitted, are digits rounded up or down, or are they truncated? All three negatively affect the accuracy of the result.

Errors resulting from seaweed, dung accumulations, single cell life colonies must also be part of the data record.

Odd that I’ve never read any recognition of those little issues.

NOAA is free to recognize that all kinds of life like to colonize their thermometer hovels, but nowhere have I spotted where the errors caused by that colonization are included in the temperature record.

NOAA and other land temperature worshipers are quick to play games with the data, point to a 0.1°C anomaly and scream ‘global warming’; yet the land temperature record is full of temperature recording devices with greater errors and potentially much greater.

I’ve yet to hear of any NOAA thermometer station getting certified and verified? Or any substantial testing and recording of temperatures prior to replacement.

I can’t imagine any self respecting engineer failing to conduct prior and subsequent tests to ensure proper installation and data recording.

In the real world, errors accumulate and even multiply. A thermometer that reads from -10.0°C to +40.0°C has a fifty degree range.

A half degree thermometer range error starts off with 1% error.

A half degree reading error represents another 1% error potential.

Recording that temperature adds in another opportunity for error.

Transmitting or transcribing that error is another opportunity for error.

At least when people were/are recording temperatures every day, they keep the stations relatively bug, bird and rodent free. Even the so called ‘ideal’ surface stations fail to meet this simple metric

And before data abuse. yeah, all bow before the deity of land based abused temperatures…

I don’t understand why anyone would expect to be authoritatively guided through ocean heat content uncertainties and other climate-related questions by a financial advisor, but to each his (or her) own.

Why struggle through a rambling collection of cut-and-pasted ocean heat content quotes when over at Climate Etc. you can read Judith Curry’s authoritative take on the subject:

http://judithcurry.com/2014/01/21/ocean-heat-content-uncertainties/

I notice ‘The Editor’ also manages to smuggle in a couple of referrals to articles, one by Trenberth, on how the Global Warming Monster is poised to spring up from the ocean depths and go BOO!

Incidentally when I try to follow the link to “The keys to understanding climate change” I get a message to the effect that the site fabiusmaximus.com is not to be trusted.

Fabius Maximus of course was famous for the Fabian strategy of avoiding head-on up-front conflict and wearing the enemy down by attrition.

The only question here is who The Editor regards as the enemy.

Chris,

(1) You cite Prof Curry. She liked this post, and included it in her Science Week in Review list of readings: http://judithcurry.com/2016/01/15/week-in-review-science-edition-30/

(2) “manages to smuggle in a couple of referrals to articles, one by Trenberth, on how the Global Warming Monster is poised to spring up from the ocean depths and go BOO!”

That’s quite a mad sentence, weird first to last. BTW, Trenberth is a major climate scientist; he works at NCAR, and has an extraordinary H-Index of 94.

(3) “message to the effect that the site fabiusmaximus.com is not to be trusted.”

Can you provide more info? What service were you using? To silence opposing views, activists falsely report websites for sexual content and malware. It’s happened twice to the FM website. Easy to fix, but annoying.

(4) “The only question here is who The Editor regards as the enemy.”

Wow. No reply needed.

“… the Global Warming Monster is poised to spring up from the ocean depths and go BOO! …”.

That was just a bit of rhetoric Mr Editor.

Trenberth’s hidden heat is reminiscent of Carl Sagan’s metaphor of the ’dragon in the garage’ when explaining ad hoc hypothesising which is exactly what the hidden heat hypothesis is.

Chris,

“Incidentally when I try to follow the link to “The keys to understanding climate change” I get a message to the effect that the site fabiusmaximus.com is not to be trusted.”

I checked the major services and found we have a problem with Webroot. Thanks for flagging this (although it would have been nice if you had said what service you used).

So that takes care of points #2 and #3 that you raised. I’m interested to hear what you say about #1.

It is simply impossible for the observed increase in downward LWIR flux from a 120 ppm increase in atmospheric CO2 concentration to heat the oceans. This presumed LWIR induced ocean warming is one of the major errors in the global warming scam. The increase in flux from CO2 is nominally 2 W.m^-2 or 0.17 MJ.m^-2 per day. The oceans are heated by the sun – up to 25 MJ m^-2 per day for full tropical or summer sun. About half of this solar heat is absorbed in the first 1 m layer of the ocean and 90% is absorbed in the first 10 m layer. The heat is removed by a combination of wind driven evaporation from the surface and LWIR emission from the first 100 micron layer. That’s about the width of a human hair. In round numbers, about 50 W.m^-2 is removed from the ocean surface by the LWIR flux and the balance comes from the wind driven evaporation. The heat capacity of the cooled layer at the surface is quite small – 4.2 kJ.m^-2 per degree for a 1 mm layer. This reacts quite rapidly to any changes in the cooling flux, and the heat transfer from the bulk ocean below and the evaporation rate change accordingly. The cooler water produced at the surface then sinks and cools the bulk ocean layer below. This is not just a diffusion process, but convection in which the cooler water sinks and warmer rises in a complex circulating flow pattern (Rayleigh-Benard convection). This couples the surface momentum (wind shear) to lower depths and drives the ocean currents. At higher latitudes the surface area of a sphere decreases and this drives the currents to lower depths.

In round numbers, the temperature increase produced by a 2 W.m^-2 increase in LWIR flux from CO2 is overwhelmed by a 50 ± 50 W.m^-2 flux of cold water and a 0 to 1000 W.m^-2 solar heating flux.

Over the tropical warm pool the wind driven cooling rate is about 40 W.m^-2.m.s^-1 (40 Watts per square meter for each 1 m/sec change in wind speed). This means that a change in wind speed of 20 cm.s^-1 is equivalent to the global warming heat flux. (20 centimeters per second).

There is a lot of useful information on ocean surface evaporation on the Woods Hole website http://oaflux.whoi.edu/data.html

The heat content of the first 700 m layer of the ocean is of little concern in climate studies. It is the first 100 to 200 m depth that matters. About half of the increase in heat content occurs in the first 100 m layer. This is shown in Figure 2 of the 2012 Levitus paper.

The ocean warming fraud goes back to the early global warming models. In their 1967 paper, Manabe and Wetherald used a ‘blackbody surface’ with ‘zero heat capacity’. They created the global warming scam as a mathematical artifact of their modeling assumptions. These propagated into the Charney Report in 1979. Then an ‘ocean layer’ was added to the model. The layer had thermal properties such as heat capacity and thermal diffusion, but the CO2 flux increase had to magically heat the oceans. This is computational climate fiction. Any computer model that predicts ocean warming from CO2 is by definition fraudulent. The fraud can be found in Hansen’s 1981 Science paper and has continued ever since.

Hansen, J.; D. Johnson, A. Lacis, S. Lebedeff, P. Lee, D. Rind and G. Russell Science 213 957-966 (1981), ‘Climate impact of increasing atmospheric carbon dioxide’ http://pubs.giss.nasa.gov/abs/ha04600x.html

For a more detailed discussion see:

Clark, R., 2013a, Energy and Environment 24(3, 4) 319-340 (2013) ‘A dynamic coupled thermal reservoir approach to atmospheric energy transfer Part I: Concepts’

Clark, R., 2013b, Energy and Environment 24(3, 4) 341-359 (2013) ‘A dynamic coupled thermal reservoir approach to atmospheric energy transfer Part II: Applications’

http://venturaphotonics.com/GlobalWarming.html

Thanks for highlighting the fact that no plausible mechanism has been identified by which downwelling long wave radiation can increase ocean heat content. This really is the Achilles heal of alarmism, especially as we are talking about seventy percent of the earth’s surface.

The only mechanism that I know of that has been postulated is that it warms the thin film surface layer, increasing the temperature gradient across it and thus reducing conductive heat loss from the body of water beneath it. In other words, downwelling radiation acts as an insulant that reduces loss of ocean heat, which is primarily gained from insolation. I am not aware of any paper that quantifies the influence on ocean heat content that such a mechanism would have, but it will surely be only a fraction of the figure derived by multiplying the theoretical forcing by the oceans’ area.

Oh dear! Achilles HEEL in my post above.

Is R.Clark documenting a very large negative feedback?

“Over the tropical warm pool the wind driven cooling rate is about 40 W.m^-2.m.s^-1 (40 Watts per square meter for each 1 m/sec change in wind speed). This means that a change in wind speed of 20 cm.s^-1 is equivalent to the global warming heat flux. (20 centimeters per second).”

If warming increases wind speeds, increased wind speeds increase cooling greatly.

Job done.

So your argument is that because his model would predict negative feedback, which is in direct contradiction to data, this proves something?

+bizagizazillionmillion

[No Pamela. The bizagizazillionmillion is the business of zig-zagging around to collect all the new profits from the zillionmillion equations needed to draw a straight line through past data points into the future. .mod]

LOL! I’ve done a least squares linear best line of fit the old fashioned way, by hand. And an ANOVA too. Took a while. And missed the part about getting a profit from doing it! Damn!

“Took a while. And missed the part about getting a profit from doing it!”

I can tell that you enjoy self torture too. I made a pact with myself a couple of years ago to do the same. After fiddling with graph paper, various selections of scales, and searching for data on the web, the reality of watered-down data made me stop and think of a new way of doing this stuff. That is when I set up my studio to produce my own data.

I went looking for the best way to present my “plots” so they could be used in the future (by me and anyone else that wanted to interpret nature). I bit off a chunk.

But after the rigorous two years of this, I have finally come to the point that I must start looking at all the constant data from 7 instruments taken 24/7/52. And well, I am hooked. I cannot stop recording now even though I have 21 TB of video recordings of the past to be looked at.

I chose my instruments for repeatability and not accuracy. Even then problems with the gathering process popped up (mostly caused by too much beer before I hit the save button).

I have noticed that nothing has been done to improve local barometric pressure readings. At best I found only one location that puts out readings 30 minutes apart, and none produce a product that is at least 10x the accuracy that is needed to see the data clearly.

And of course the data itself may or may not be accurate. So, I set up a studio with a barometer that reads to the nearest .001″ Hg. For the last year I have been using this instrument as my INDEX to the rest of the instruments since it sees very minute changes in atmospheric pressures. When compared to all the various products used for CLIMATE such as SWEPAM, Sun and Moon rise and set times, and those that measure the magnetic field changes, I am still having problems seeing correlations between each. But there is a very strong correlation when all the instruments are compared with my SUNSHINE monitors.

So Pamela, good luck. And, now what do you plan to do with the plots? I find that even tho mine are very detailed with notes and simple references on them, they are useless to the scientists that are comfortable using the prefab data put out by NASA & NOAA. I have also come to the conclusion that state-of-the-art research is not using daily readings but averages of the “at-best hourly” readings. Even tho data from the 1 minute products are considered accurate, they provide other products with 5 minute cadences that are just averages (or some sort of statistical conglomeration).

Again, good luck. And when you are able to communicate these plots into the research field, let me know. I have YET to be able to have my friends understand what a graph is and how useful they are, especially when it is on paper and plotted with a pencil. And worse, when they see me coming with my papers, they find an excuse to leave quickly. This is probably typical for the general public.

BTW, here at my little ranch, the outside temperature on a clear morning, begins to rise when the sun appears each morning. (I have an instrument to tell me when first light appears) That alone flies in the face of a whole bunch of research of the past. Especially the settled science energy budgets.

When the microscope is placed on their data, it looks different, as the posts here at WUWT show.

LeeO

Hello. I suggest trying to publish in respected peer reviewed journals seeing as Energy and Environment has had some controversy. Your work will receive more valuable scrutiny than when preaching to the choir, so to speak.

Dr. Clark, your argument makes no sense to me. You seem to be saying that the temperature increase produced by CO2 radiative forcing is “overwhelmed by” contributions from evaporation and solar heating flux. How is this not begging the question? I don’t see where you justified that CO2 radiative forcing is negligible. You seem to have merely assumed it outright.

Uncertainty at these scales amounts to unacknowledged ignorance.

The last person to explore the deepest hydrosphere, albeit without much intent, was a director of cheesy movies. It’s hard to get a climate scientist to even open a window, let alone step outside (where he might be confronted by some of those confusing cloud thingies).

I know they say one can always learn remotely, what with all the latest doo-dads. And heaven knows if we’d ever want Chris Turney anywhere near a boat again.

But I’m reminded of the learned scholars walking along the Seville docks discoursing on the futility of Magellan’s expedition…

Then the Victoria came sailing up the Guadalquivir.

DMI did an Arctic Sea Ice Extent 30% coverage of the Northern Hemisphere Sea Ice for 11 years.

As of the start of 2016 they have dropped this link.

The 30% coverage had shown above normal sea ice extent for over 2 months and it was at an all time high for that time of year on the 1/8/2016.

No reasons given.

Obviously inconvenient truth bites the warmists dust.

Have you inquired at DMI as to the reason? I have received relatively prompt responses from DMI in the past.

They have two links. Which one did they drop?

http://ocean.dmi.dk/arctic/old_icecover.uk.php

and

http://ocean.dmi.dk/arctic/icecover.uk.php

Lucia’s blog has discussion on aspects of this on her 1/8/2016 latest blog.

A commentator asked

“Why do ocean currents run in ten-thousand-year (or more) cycles?”

an answer was

“I’m pretty sure the problem is the viscosity, density and heat capacity of water compared to air. Flows are much slower so mixing times are longer.”

“Because the ocean heat capacity is large and changes slow, it generally takes about 10,000 model years to spin up a coupled climate model to equilibrium, although acceleration techniques are available to speed the process. The model must be run at least 1,000 years, starting from near this equilibrium state, to diagnose its climatology and variability. Only after these diagnoses are performed is the climate model ready for use.”

See “Responding to Climate Modeling Requirements | Improving …

http://www.nap.edu/read/10087/chapter/7”

Fantastic overview of computer speeds and operation times for supercomputers with Climate models.

More to the point, if it takes 1000 years to reach an equilibrium state [which it cannot reach due to other inputs] it means that any changes in ocean temperature must only be 1/1000th per year on average. Which makes looking at changes to the ocean heat content for climate clues laughable, since the changes are vanishingly small.

@angech,

That chapter “Responding to Climate Modeling Requirements” was for the year 2001, and you’re right, it’s a fantastic clear overview. Do you know if anyone has updated it?

Anyone claiming an accuracy of ocean temperatures in tenths of a degree or less is delusional!

Dishonest is the correct word.

I spent a lot of years as a test engineer in the nuclear industry. Measuring temperature is a lot more complicated than just looking at the calibrated accuracy in the shop.

I came into the control room the day after a shut down and asked what the temperature of the reactor was. My reply to the puzzled look was that I was getting a cup of coffee. I came back and was told an answer. I looked at the panel and said I needed more coffee. When I came back the decay heat removal pump was running. Temperature jumped 10 degrees. The accuracy of the temperature instrument depended on forced circulation.

That is a simple system, but the uncertainty calculation is backed up by validating the model.

Josh Willis, prior to 2008, wrote several papers on how the Argo buoys showed the oceans to be cooling, particularly the Pacific. In 2010 he started adjusting the Argo data to show the oceans warming. The basis for the adjustments relies on estimates of ice melt, guesses of TOA radiation imbalance, models of GIA that assume global effect while Morner has shown it to be regional, guesses about the proportion of sea level rise due to thermal expansion, etc.

It also used the highly adjusted sea level rise from the satellites, where raw data shows 1mm/year rise (or 0.33 for Envisat) being adjusted to 3.2mm/year by many of the same factors mentioned above.

In other words you take accurate temperature measurements at given locations and depths and adjust them using a whole lot of questionable assumptions and then present it to the world as reality – you have to be joking.

The adjustments are discussed here http://earthobservatory.nasa.gov/Features/OceanCooling/page1.php

The article ends “Models are not perfect,” says Syd Levitus. “Data are not perfect. Theory isn’t perfect. We shouldn’t expect them to be. It’s the combination of models, data, and theory that lead to improvements in our science, in our understanding of phenomena.”

In other words: look at this, now the data agrees with the models.

Why is it that some people spend so much time analysing data that has been adjusted by people who refuse to provide justification or computer code for the changes they make?

Such data has no scrutiny by the scientific community and should be treated with the contempt it deserves.

Peter Foster January 20, 2016 at 1:01 am

…

The adjustments are discussed here http://earthobservatory.nasa.gov/Features/OceanCooling/page1.php

Yes, Josh Willis and “Correcting Ocean Cooling” a prime example of adjusting the data to fit the hypothesis.

Then there’s this opening statement from Chapter 5 in the IPCC’s AR4 report:

“The oceans are warming. Over the period 1961 to 2003, global ocean temperature has risen by 0.10°C from the surface to a depth of 700 m.”

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch5s5-es.html

Really? Over 42 years and the entire ocean they can detect a 0.10°C rise? Not 0.1, but 0.10. And why the 1961 starting point? Boggles the mind.

I fail to see how anyone can derive an error bar on a non-random sample size of one. Did they have replicate measuring devices within sites? Nope. Did they randomly distribute the sampling sites where the (non)replicated samples were to be taken? Nope. Did they test any of the assumptions about the data, normal distribution, etc. before using the statistics that required such tests? Nope.

So exactly how is it possible to derive a measure of variance to hundredths of a degree? Beats me.

Why do we even discuss such pseudo-science? They can at least have the grace to find out how many replicate samples would actually be required for each within-site variance at the required error range.

BioBob,

The problem is that these error bars are correctly constructed — but reflect only a part of the various uncertainties. So they are useful so long as readers understand that the actual uncertainties are far larger.

That’s the problem with not reporting the actual ocean temperature reading. Readers lose touch with the actual measurements, and so tend to have no intuitive understanding of their accuracy.

Biobob

This is a product of inductivitis and motivated reasoning. The human mind is capable of convincing itself of anything with enough rationalization. The only cure will be for all the oscillations and natural variability to play out until all the observational data cannot be ignored. That may be awhile though.

“0.09°C ±0.007°C (±2 S.E.)”

Sounds like the old error of CRU’s Phil Jones, in assuming temperature data for the world’s oceans is homogeneous. It’s not.

So, the Oceans are boiling. Which begs the question as to why I still have to fry my fish?? Also, how can warming water become so acidic as to be fatal for my pre-cook Flake?? 1+1=3???

regards

It would be interesting to read (again) about the actual techniques used to measure Ocean Temperature prior to the year 2000.

As I remember, these techniques were awful approximations (versus accurate, precise measurements). Then the question: how were these “very inaccurate” raw temperatures “homogenized” to give a nice neat graph and low error bars?

Allen,

Here is an excellent review of the history of measuring ocean temperatures: “A review of global ocean temperature observations: Implications for ocean heat content estimates and climate change“, J.P. Abraham et al, Reviews of Geophysics, September 2013.

Thank you. I’ve begun to read it. About a third of the way through at this point.

The top graph implies that we knew the ocean temperatures at a huge number of places throughout a 2km water column to hundredth degree accuracy in 1960.

I honestly cannot believe that.

Since I cannot believe that, I cannot believe any part of the graph. I come away as ignorant as I arrived: “Myself when young did eagerly frequent doctor and sage, and heard great argument … but evermore came out by the same door as in I went.”

Here is a question. Suppose we measure at n randomly chosen places in the top 2km of the world ocean and report the average of those samples as the average ocean temperature. How big should n be so that we have only a 5% chance of being wrong by as much as 0.1 degrees?

I decided to do some simulation in an over-simplified model. If we have problems in this model, we’ll have worse problems in the real world.

Let us consider a cube with unit sides.

The temperature at the bottom is 5 degrees. The temperature at the top varies linearly from 5 degrees at the (polar) left to 25 degrees at the (tropical) right, and doesn’t vary front to back. The temperature varies linearly with depth from the bottom to the top. This is a very very idealised model where by construction there *is* a well defined average temperature: 10 degrees.

We can simulate measuring by taking x, y, and z to be independent uniform random variables, taking T(x,y,z) = 5+20yz, and averaging these results. In R,

oe <- function (n) mean(runif(n)*runif(n)*20+5)

To find the probability of being wrong by simulation, we do this 1000 times, count the number of times we're wrong, and divide that by 1000.

oepe <- function (n) sum(replicate(1000, abs(oe(n) - 10) >= 0.1))/1000

I then called this for a bunch of n values until I repeatedly got answers about 0.05.

The answer is “about 7400”.

What if we want to be confident of being within a hundredth of a degree? Then we need about 800000 samples.

Now I *know* this can be figured out precisely with suitable theory, but I wanted something easy for everyone to understand where there was no question of a possible mistake in unfamiliar mathematics.
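For readers who do want the theory, here is a minimal sketch of the calculation, written in Python rather than the R used above. It assumes the toy model exactly as described (T = 5 + 20yz with y and z independent and uniform on 0 to 1) and a normal approximation for the sample mean; the function name is illustrative.

```python
import math
from statistics import NormalDist

# Toy model from the comment: T = 5 + 20*y*z, y and z independent Uniform(0, 1).
# E[yz] = 1/4 and E[(yz)^2] = 1/9, so Var(yz) = 1/9 - 1/16 = 7/144.
TEMP_VARIANCE = 400 * (1 / 9 - 1 / 16)  # variance of a single temperature sample


def required_samples(tolerance: float, miss_probability: float = 0.05) -> int:
    """Samples needed so that P(|sample mean - 10| >= tolerance) is about
    miss_probability, using the normal approximation for the sample mean."""
    z = NormalDist().inv_cdf(1 - miss_probability / 2)  # about 1.96 for 5%
    return math.ceil(z * z * TEMP_VARIANCE / tolerance ** 2)


if __name__ == "__main__":
    for tol in (0.1, 0.01):
        print(f"tolerance {tol}: about {required_samples(tol)} samples")
```

The result lands a little under 7,500 samples for 0.1 degrees and about a hundred times that for 0.01 degrees, in line with the simulated figures; the required n scales with the inverse square of the tolerance.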

I also understand very well that the real ocean (and for that matter the real atmosphere) are a lot more complicated. That’s why we’d need *more* samples for the real world.

If someone tells you they know the global temperature to within 1 degree, they might be wrong but they’re not completely crazy. Satellites don’t really help much because they don’t see 2km under water.

There is no mystery about these accuracy claims, they derive from statistics rather than measurement. Basically, they rely upon

SD(sample mean)=SD(sample)/sqrt(sample size), SD=standard deviation

Large sample size means much reduced SE=SD(mean) for the sample mean.

Where climate wizards are probably going wrong is not accounting for a great deal of correlation in their samples. The result above relies upon effectively independent sampling. But, to be fair, there must still be independence between samples taken thousands of miles apart. Even making allowance for correlation will still leave a big effective sample size for ocean samples and reduce error accordingly. But most claimed SE’s are probably much too low.
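To illustrate how correlation erodes the benefit of a large sample, here is a rough sketch. It assumes every pair of samples shares the same correlation rho, which is a textbook simplification and not a description of how any published ocean estimate was computed; the function names are illustrative.

```python
import math


def effective_sample_size(n: int, rho: float) -> float:
    """Effective number of independent samples when all n samples share
    the same pairwise correlation rho (equicorrelation assumption)."""
    return n / (1 + (n - 1) * rho)


def standard_error(sd: float, n: int, rho: float = 0.0) -> float:
    """Standard error of the mean: sd / sqrt(n_eff). With rho = 0 this
    reduces to the familiar sd / sqrt(n)."""
    return sd / math.sqrt(effective_sample_size(n, rho))


if __name__ == "__main__":
    sd, n = 4.0, 10_000
    for rho in (0.0, 0.001, 0.01, 0.1):
        print(f"rho={rho}: n_eff={effective_sample_size(n, rho):.1f}, "
              f"SE={standard_error(sd, n, rho):.3f}")
```

Even a small shared correlation cuts the effective sample size sharply when n is large, so the standard error stops shrinking as 1/sqrt(n).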

PS This impacts satellite error bars, since presumably these involve a handful of instruments on a handful of satellites. No large sample size statistical benefits from such highly non-independent measurements. Much wider error bars one assumes.

Basic Stats,

TadChem posted this comment at the FM website:

A similar issue was raised by Ross McKitrick regarding the pause-busting Karl (2015) paper. As I understood it, he felt it improper to highlight the tiny standard error (of the mean) while downplaying the much larger sample variance (standard deviation).

So standard deviation tells us how widely dispersed values are. Tell me how that provides any information about the accuracy of that data. Sure we can claim an improvement in PRECISION of a set of simultaneously collected data values but that does not relate to accuracy.

OK. Here is what I believe to be some accurate, well illustrated, science based information. (Hat tip to the wuwt.com researchers!) As a student of common sense, with an associate’s degree, I am ready to make the following challenge: To the first TEN individuals that opine that the global ecosystem is being affected, IN ANY MANNER, by human activity, and can present UN-ADJUSTED data in a persuasive forum, I will pay each of them $1000. I have the cash and will place it in escrow as soon as the following conditions are met:

1. ALL ASSERTIONS MUST BE SUPPORTED.

2. ALL DATA MUST BE PURE.

3. ALL GOVERNMENT POSITIONS CANNOT BE USED AS DATA, PERIOD.

4. ONLY DATA CAN BE USED AS DATA.

Simple.

To qualify, send me a link to your argument. If I am moved toward your position, the money is yours.

Before you discount and discredit me and my money understand that I HAVE NO POSITION on this matter. I am willing to spend my own money to educate myself. This will be interesting! GO!

Steven Mosher reminds us of an important point in this comment at WUWT (Mosher is with the BEST project): “There is only one dataset that is actually tied to SI standards. ”

He points to this excerpt from “A quantification of uncertainties in historical tropical tropospheric temperature trends from radiosondes” by Peter Thorne et al, JGR-Atmospheres, 27 June 2011:

Here it is folks, the Achilles tendon. Those of us that have sufficient training and interest are happy reading through the mountain of literature on the subject of global warming. Unfortunately, it appears as though only a percentage of these have either the motivation or the nous to acknowledge the importance of probability. It has the potential to bring back some sanity to an issue that reeks of injustice. More on that later.

35 years ago I visited Germany. The people I stayed with were all wearing a simple cheap badge. It read: “Nuclear Energy? Nein danke”. These had been distributed around the country. I had no feelings on the matter but noted that it was a very powerful initiative. It was not offensive yet projected a very clear message that anyone could relate to.

The general public are understandably confused, and researchers are riding roughshod over one of the founding principles of the scientific method. They must be brought to heel. This cannot be achieved through just counter-evidence that sometimes contains the same degree of unacknowledged uncertainty. The key is to relentlessly pressure analysis and prediction authors to demonstrate their calculated margins of error. This then can be subject to independent peer review. Imagine (for example) the impact should all skeptics at conferences wear a simple badge: “Global warming? Margin of error?”

“Levitus says the uncertainty in estimates of warming in the top 2,000 meters of the world ocean during 1955-2010 is 0.09°C ±0.007°C” – now there are plenty of statisticians that will like to get their teeth into that. It starts with, “How was the data recorded and collected?”

As for injustice: The New Government have already created climate change departments and ministers. Local bodies are employing surveyors to measure elevations of private properties to create zones at risk from sea level rise. This is already influencing property values and insurance companies are avidly following the process. This is ironical as insurance companies target the best statisticians they can find to employ.

A good barrister quickly identifies the weakness in a case and concentrates on that. This is what we must do in regards to maximum impact. Keep it simple and don’t give up.

As I see it

The uncertainty or measurement error of a temperature difference between 1955 and 2010 cannot be smaller than the uncertainty of the 1955 data. These people are lying.

So-called “backradiation” can’t affect the simple fact that Earth must be in thermal equilibrium, else the excess heat would simply radiate into outer space. Can we fire all the climate scientists now?

The oceans are, on average, 6,400 meters deep.

This begs the question: What’s going on in the 70% of the ocean we didn’t measure?

So… we think we know that the top 30% has gone up 9/100th of a degree.

I have heard, need reference tho, that the temperature of the deep ocean (2,000-6,400m) has remained unchanged.

Therefore, on average, the entire ocean has only heated up by about 3/100th of a degree.

Boiling indeed. Time to increase taxes on the middle class.

A missing slide in this year’s presentation about the warmest year

It was in last year’s “NOAA/NASA Annual Global Analysis for 2014”, although (embarrassingly) not mentioned until highlighted by skeptics. It’s not in the Annual Global Analysis for 2015.

I just wrote a post about the careful statements about probability and error estimates by NASA & NOAA, specifically mentioning this slide from last year. Gavin and Karl are making me look bad.

Here it is from last year’s slideshow. It should be in this year’s, even if the numbers were 100% for 2015.

The warming of the oceans may very well be the swan song of a modern warm period. Meaning that heat is not being added to the oceans; what was stored is layering out onto the surface and spreading like an oil slick. A diminution of winds would be teleconnected with this. In fact any wind that counteracts the normal winds set up by the Coriolis effect would serve to let the oceans layer up and its warm surface spread out.

We are losing heat, not gaining it.

Pamela Gray

So. Is the loss of Antarctic sea ice between late August and today (averaging about 0.6 Mkm^2 lower than its slowly increasing yearly average levels) due to the strong El Nino conditions in the east Pacific sub-tropics as it spreads out around the usual antarctic circular winds and southern currents?

Last year’s northwest Pacific “blob” of warm weather can be clearly timed to the singular loss of southern Arctic sea ice in the Sea of Okhotsk north of Japan.

” _Jim

January 19, 2016 at 2:52 pm

to: ECB

Meanwhile, progress continues on *other* energy fronts, such as detailed here:

https://youtu.be/IQ3S3YMH96s Nota Bena: 1 hr plus video (and ignore the refs to GW)”

I stayed with this video for 29 minutes, and watched the closed captions as well (among the worst I’ve ever seen, BTW), and found the explanation for the unanimous rejection by academics, government, and investors quite inadequate.

Assuming the original experiment was honestly performed and reported, governments and private investors with deep pockets should have been all over it. The fact that they were not leaves me with only two possible explanations:

1. the results were NOT as reported, or

2. there was a global conspiracy among academics, government agencies, and investors to discredit the experiment for social, financial, and military reasons.

Lest anyone dismiss #2 as “conspiracy theory” rearing its ugly head, please consider that this forum provides regular documentation of just such a conspiracy in the realm of “climate change”, and consider your objection noted and answered – far more egregious and much more easily confounded misinformation conspiracies have been successfully perpetrated, including the attribution to the German Army for more than 40 years of the Katyn Wood massacre of the Polish Officer corps, and the reporting of the death toll for the Tang Shan earthquake of 1976 for decades at a mere 10,000.

Historians and journalists re-attributed the Katyn Wood massacre, carried out by Soviet troops in plain view of the local villagers (who were NOT transported or imprisoned to keep them quiet), only a few years ago. The Polish President, accompanied by his closest advisers and the chief of the Polish defense staff, flew to Russia in part to remonstrate about the massacre shortly after this “revelation”, and his plane crashed on Russian soil, killing everyone aboard.

The Tang Shan death toll was tacitly revised upward to some 250,000 by the Chinese government censors when they allowed a recent mainland movie about the earthquake to display this figure. The more likely figure, given the report of a French delegation visiting the city of 1 million people at that time, that every second building was reduced to rubble in the middle of the night, is half a million dead.

There isn’t much discussion in our mass media about the role of energy distribution systems in social control, perhaps because we have become so accustomed to this role as a given.

Providing small communities, families, or individuals with enough locally stored energy to assure a scaleable and uninterruptible supply of electricity for years would reduce the power of central government over the individual dramatically throughout the world. And in many regions, it could well cause or fan conflicts more destructive than the breakup of Yugoslavia.

hmm. posting problem. hit “post comment”, post disappears, nothing showing in comments. Try again… please remove duplicate if any.

Hmmm repost impossible, this time “post comment” button doesn’t respond. try once more…nope

closed the forum link. Closed my email account, logged back in to e-mail, clicked on the Jan. 19 WUWT news and this new item. And find that, for the first time ever, my e-mail address and username are automatically displayed in the Post comment identification form.

Most disturbing. I did NOT choose to log in via any social media links, but my subscription to WUWT is via a gmail account. Not happy about this breach of security.

What do we know about the temperature south of about 45S before 1979? Very little or nothing.

The good news is that to understand this work we can ignore the mental states of the scientists involved — which is always a good idea. Motives don’t matter.

____

Sure. With some obesity or negligent posture a corset helps.

The mental equivalent for a corset is discipline – and that can be passed on in the form of dogma. So the helpers too remain permanently stable.

Motives are handed over simultaneously, no understanding needed.

The only prerequisites for the commanding encoder are charisma and the promise of success.

But that’s banal – same as it ever was.

Regards – Hans