Guest Post by Professor Robert Brown from Duke University and Werner Brozek, Edited by Just The Facts:

The above graphic shows RSS having a slope of zero from both January 1997 and March 2000. As well, GISS shows a positive slope of 0.012/year from both January 1997 and March 2000. This should put to rest the notion that the strong El Nino of 1998 had any lasting effect on anything. Why is there such a difference between GISS and RSS? That question will be explored further below.

The previous post had many gems in the comments. I would like to thank firetoice2014 for their comment that inspired the title of this article.

I would also like to thank sergeiMK for very good comments and questions here. Part of their comment is excerpted below:

“@rgb

So you are basically stating that all major providers of temperature series of either

1 being incompetent

2 purposefully changing the data to match their belief.”

Finally, I would like to thank Professor Brown for his response. With some changes and deletions, it is reproduced below and ends with rgb.

“rgbatduke

August 14, 2015 at 12:06 pm

Note well that all corrections used by USHCN boil down to (apparently biased) thermometric errors, errors that can be compared to the recently discovered failure to correctly correct for thermal coupling between the actual measuring apparatus in intake valves in ocean vessels and the incoming seawater that just happened to raise global temperatures enough to eliminate the unsightly and embarrassing global anomaly “Pause” in the latest round of corrections to the major global anomalies; they are errors introduced by changing the kind of thermometric sensors used, errors introduced by moving observation sites around, errors introduced by changes in the time of day observations are made, and so on. In general one would expect measurement errors in any given thermometric time series, especially when they are from highly diverse causes, to be as likely to cool the past relative to the present as warm it, but somehow, that never happens. Indeed, one would usually expect them to be random, unbiased over all causes, and hence best ignored in statistical analysis of the time series.

Note well that the total correction is huge. The range is almost the entire warming reported in the form of an anomaly from 1850 to the present.

Would we expect the sum of all corrections to any good-faith dataset (not just the thermometric record, but say, the Dow Jones Industrial Average “DJIA”) to be correlated, with, say, the height of my grandson (who is growing fast at age 3)? No, because there is no reasonable causal connection between my grandson’s height and an error in thermometry. However, correlation is not causality, so both of them could be correlated with time. My grandson has a monotonic growth over time. So does (on average, over a long enough time) the Dow Jones Industrial Average. So does carbon dioxide. So does the temperature anomaly. So does (obviously) the USHCN correction to the temperature anomaly. We would then observe a similar correlation between carbon dioxide in the atmosphere and my grandson’s height that wouldn’t necessarily mean that increasing CO2 causes growth of children. We would observe a correlation between CO2 in the atmosphere and the DJIA that very likely would be at least partly causal in nature, as CO2 production produces energy as a side effect and energy produces economic prosperity and economic prosperity causes, among other things, a rise in the DJIA.

In Nassim Nicholas Taleb’s book The Black Swan, he describes the analysis of an unlikely set of coin flips by a naive statistician and Joe the Cab Driver. A coin is flipped some large number of times, and it always comes up heads. The statistician starts with a strong Bayesian prior that a coin, when flipped, should produce heads and tails in roughly equal numbers. When in a game of chance played with a friendly stranger he flips the coin (say) ten times and it turns up heads every time (so that he loses) he says “Gee, the odds of that were only one in a thousand (or so). How unusual!” and continues to bet on tails as if the coin is an unbiased coin, because sooner or later the law of averages will kick in, tails will occur as often as heads or more so, and things will balance out.

Joe the Cab Driver stopped at the fifth or sixth head. His analysis: “It’s a mug’s game. This joker slipped in a two headed coin, or a coin that is weighted to nearly always land heads”. He stops betting, looks very carefully at the coin in question, and takes “measures” to recover his money if he was betting tails all along. Or perhaps (if the game has many players) he quietly starts to bet on heads to take money from the rest of the suckers, including the naive statistician.

An alternative would be to do what any business would do when faced with an apparent linear correlation between the increasing monthly balance in the company president’s personal account and unexplained increasing shortfalls in total revenue. Sure, the latter have many possible causes (shoplifting, accounting errors, the fact that they changed accountants back in 1990 and changed accounting software back in 2005, theft on the manufacturing floor, inventory errors), but many of those changes (e.g. accounting or inventory) should be widely scattered and random, and while others might increase in time, an increase that matches the growth of the president’s personal account, when the president’s actual salary plus bonuses went up and down according to how good a year the company had, seems unlikely.

So what do you do when you see this, and can no longer trust even the accountants and accounting that failed to observe the correlation? You bring in an outside auditor, one that is employed to be professionally skeptical of this amazing coincidence. They then check the books with a fine-toothed comb and determine if there is evidence sufficient to fire and prosecute (smoking gun of provable embezzlement), fire only (probably embezzled, but can’t prove it beyond all doubt in a court of law), continue observing (probably embezzled, but there is enough doubt to give him the benefit of the doubt, for now), or exonerate him completely (all income can be accounted for and is disconnected from the shortfalls, which really were coincidentally correlated with the president’s total net worth).

Until this is done, I have to side with Joe the Cab Driver. Up until the latest SST correction I was managing to convince myself of the general good faith of the keepers of the major anomalies. This correction, right before the November meeting, right when The Pause was becoming a major political embarrassment, was the straw that broke the p-value’s back. I no longer consider it remotely possible to accept the null hypothesis that the climate record has not been tampered with to increase the warming of the present and cooling of the past and thereby exaggerate warming into a deliberate better fit with the theory instead of letting the data speak for itself and hence be of some use to check the theory.

The bias doesn’t even have to be deliberate in the sense of people going “Mwahahahaha, I’m going to fool the world with this deliberate misrepresentation of the data”. Sadly, there is overwhelming evidence that confirmation bias doesn’t require anything like deliberate dishonesty. All it requires is a failure in applying double blind, placebo controlled reasoning in measurements. Ask any physician or medical researcher. It is almost impossible for the human mind not to select data in ways that confirm our biases if we don’t actively defeat it. It is as difficult as it is for humans to write down a random number sequence that is at all like an actual random number sequence (go on, try it, you’ll fail). There are a thousand small ways to make it so. Simply considering ten adjustments, trying out all of them on small subsets of the data, and consistently rejecting corrections that produce a change “with the wrong sign” compared to what you expect is enough. You can justify all six of the corrections you kept, but you couldn’t really justify not keeping the ones you reject. That will do it. In fact, if you truly believe that past temperatures are cooler than present ones, you will only look for hypotheses to test that lead to past cooling and won’t even try to think of those that might produce past warming (relative to the present).

Why was NCDC even looking at ocean intake temperatures? Because the global temperature wasn’t doing what it was supposed to do! Why did Cowtan and Way look at Arctic anomalies? Because temperatures there weren’t doing what they were supposed to be doing! Is anyone looking into the possibility that phenomena like “The Blob” that are raising SSTs and hence global temperatures, and that apparently have occurred before in past times, might make estimates of the temperature back in the 19th century too cold compared to the present, as the existence of a hot spot covering much of the Pacific would be almost impossible to infer from measurements made at the time? No, because that correction would have the wrong sign.

So even when, as in the excellent discussion on Curry’s blog, each individual change made by USHCN can be justified in some way or another, and even though that discussion pointed out (correctly, I believe) that the adjustments were made in a kind of good faith, that is not sufficient evidence that they were made without bias towards a specific conclusion, a bias that might end up with correction error greater than the total error that would be made with no correction at all. One of the whole points about error analysis is that one expects a priori error from all sources to be random, not biased. One source of error might not be random, but another source of error might not be random as well, in the opposite direction. All it takes to introduce bias is to correct for all of the errors that are systematic in one direction, and not even notice sources of error that might work the other way. It is why correcting data before applying statistics to it, especially data correction by people who expect the data to point to some conclusion, is a place that angels rightfully fear to tread. Humans are greedy pattern matching engines, and it only takes one discovery of a four leaf clover correlated with winning the lottery to overwhelm all of the billions of four leaf clovers that exist but somehow don’t affect lottery odds in the minds of many individuals. We see fluffy sheep in the clouds, and Jesus on a burned piece of toast.

But they aren’t really there.

rgb”
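The coin-flip argument in the comment above can be put into numbers. The following is only a sketch and not anything Professor Brown wrote: it assumes that the sole alternative to a fair coin is a two-headed one, and the 99% prior in favor of a fair coin is a made-up starting point chosen for illustration.

```python
from fractions import Fraction


def prob_all_heads(n_flips, p_heads=Fraction(1, 2)):
    """Probability that n_flips independent flips all land heads."""
    return p_heads ** n_flips


def posterior_fair(n_heads, prior_fair):
    """Posterior probability that the coin is fair after n_heads straight heads.

    Assumption (hypothetical setup): the only alternative to a fair coin
    is a two-headed coin, which lands heads every time.
    """
    like_fair = prob_all_heads(n_heads)   # (1/2)^n for a fair coin
    like_rigged = Fraction(1)             # a two-headed coin always shows heads
    numerator = prior_fair * like_fair
    return numerator / (numerator + (1 - prior_fair) * like_rigged)


# The statistician's "one in a thousand (or so)" for ten straight heads:
print(prob_all_heads(10))  # 1/1024

# Hypothetical prior: 99% sure the coin is fair before the game starts.
for n in (5, 6, 10):
    print(n, float(posterior_fair(n, Fraction(99, 100))))
```

Under these assumptions, even a 99% prior for a fair coin falls to roughly 0.76 after five heads and to under 0.09 after ten, which is the sense in which Joe the Cab Driver is updating and the naive statistician is not.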

In the sections below, as in previous posts, we will present you with the latest facts. The information will be presented in three sections and an appendix. The first section will show for how long there has been no warming on some data sets. At the moment, only the satellite data have flat periods of longer than a year. The second section will show for how long there has been no statistically significant warming on several data sets. The third section will show how 2015 so far compares with 2014 and the warmest years and months on record so far. For three of the data sets, 2014 also happens to be the warmest year. The appendix will illustrate sections 1 and 2 in a different way. Graphs and a table will be used to illustrate the data.

Section 1

This analysis uses the latest month for which data is available on WoodForTrees.com (WFT). All of the data on WFT is also available at the specific sources as outlined below. We start with the present date and go to the furthest month in the past where the slope is at least slightly negative on at least one calculation. So if the slope from September is 4 x 10^-4 but it is -4 x 10^-4 from October, we give the time from October so no one can accuse us of being less than honest if we say the slope is flat from a certain month.
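For readers who want to see the search described above spelled out, here is a minimal sketch. It is not the WFT code: the function names are hypothetical, the slope is an ordinary least-squares fit against the month index, and the series is made-up data rather than any real anomaly record. The 12-month minimum mirrors the rule used later in this post that periods of under a year are not counted.

```python
def ols_slope(values):
    """Least-squares slope of values against their index (units per month)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx


def earliest_flat_start(anomalies, min_months=12):
    """Index of the earliest start month whose trend to the end is negative.

    Returns None when no start month at least min_months before the end
    gives a negative slope.
    """
    for start in range(len(anomalies) - min_months + 1):
        if ols_slope(anomalies[start:]) < 0:
            return start
    return None


# Made-up series: 24 months of warming followed by 36 months of slight cooling.
series = [0.02 * i for i in range(24)] + [0.5 - 0.001 * k for k in range(36)]
start = earliest_flat_start(series)
print(start, ols_slope(series[start:]))
```

Scanning from the oldest month forward and stopping at the first negative slope gives the longest period that can honestly be called flat under this rule.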

1. For GISS, the slope is not flat for any period that is worth mentioning.

2. For Hadcrut4, the slope is not flat for any period that is worth mentioning.

3. For Hadsst3, the slope is not flat for any period that is worth mentioning.

4. For UAH, the slope is flat since April 1997 or 18 years and 4 months. (goes to July using version 6.0)

5. For RSS, the slope is flat since January 1997 or 18 years and 7 months. (goes to July)

The next graph shows just the lines to illustrate the above. Think of it as a sideways bar graph where the lengths of the lines indicate the relative times where the slope is 0. In addition, the upward sloping blue line at the top indicates that CO2 has steadily increased over this period.

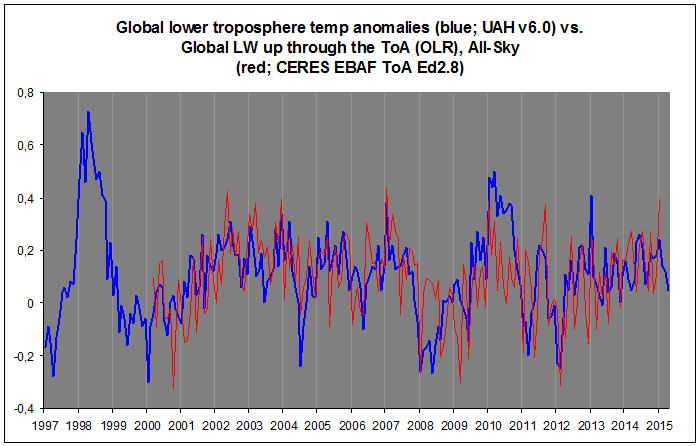

When two things are plotted as I have done, the left only shows a temperature anomaly.

The actual numbers are meaningless since the two slopes are essentially zero. No numbers are given for CO2. Some have asked that the log of the concentration of CO2 be plotted. However WFT does not give this option. The upward sloping CO2 line only shows that while CO2 has been going up over the last 18 years, the temperatures have been flat for varying periods on the two sets.

Section 2

For this analysis, data was retrieved from Nick Stokes’ Trendviewer, available on his website at http://moyhu.blogspot.com.au/p/temperature-trend-viewer.html. This analysis indicates for how long there has not been statistically significant warming according to Nick’s criteria. Data go to their latest update for each set. In every case, note that the lower error bar is negative so a slope of 0 cannot be ruled out from the month indicated.

On several different data sets, there has been no statistically significant warming for between 11 and 22 years according to Nick’s criteria. Cl stands for the confidence limits at the 95% level.
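The test being applied here is whether the lower confidence limit on the slope is below zero. The sketch below shows the idea with a plain least-squares fit and a 1.96 standard error interval. It is an assumption-laden illustration only: it ignores autocorrelation in the monthly data, so it does not reproduce whatever adjustment Nick’s Trendviewer makes, and both the function names and the series are hypothetical.

```python
import math


def slope_with_ci(values, z=1.96):
    """OLS slope per time step with an approximate 95% confidence interval.

    Assumption: residuals are treated as independent, which real monthly
    temperature anomalies are not, so this interval is too narrow for them.
    """
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    slope = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values)) / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((v - (intercept + slope * i)) ** 2 for i, v in enumerate(values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope, slope - z * std_err, slope + z * std_err


def warming_is_significant(values):
    """True only when the lower confidence limit on the slope is above zero."""
    _, lower, _ = slope_with_ci(values)
    return lower > 0


# Made-up data: a small upward trend buried in large month-to-month swings.
noisy = [0.0005 * i + 0.2 * (-1) ** i for i in range(120)]
print(slope_with_ci(noisy), warming_is_significant(noisy))
```

When the lower limit is negative, as in every case listed in this section, a slope of 0 falls inside the interval and cannot be ruled out.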

The details for several sets are below.

For UAH6.0: Since November 1992: Cl from -0.007 to 1.723

This is 22 years and 9 months.

For RSS: Since February 1993: Cl from -0.023 to 1.630

This is 22 years and 6 months.

For Hadcrut4.4: Since November 2000: Cl from -0.008 to 1.360

This is 14 years and 9 months.

For Hadsst3: Since September 1995: Cl from -0.006 to 1.842

This is 19 years and 11 months.

For GISS: Since August 2004: Cl from -0.118 to 1.966

This is exactly 11 years.
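The lengths quoted above can be checked with a few lines of arithmetic. This sketch assumes that every data set ends in July 2015 and that the starting month is counted as part of the period; the helper name is hypothetical.

```python
def span_years_months(start_year, start_month, end_year, end_month):
    """Length of a period counted inclusively from the start month to the end month."""
    total_months = (end_year - start_year) * 12 + (end_month - start_month) + 1
    return divmod(total_months, 12)  # (years, months)


# Start months from this section; the end month is assumed to be July 2015.
starts = {
    "UAH6.0": (1992, 11),
    "RSS": (1993, 2),
    "Hadcrut4.4": (2000, 11),
    "Hadsst3": (1995, 9),
    "GISS": (2004, 8),
}
for name, (year, month) in starts.items():
    years, months = span_years_months(year, month, 2015, 7)
    print(f"{name}: {years} years and {months} months")
```

With those assumptions the helper returns 22/9, 22/6, 14/9, 19/11 and 11/0, matching the figures given for each data set.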

Section 3

This section shows data about 2015 and other information in the form of a table. The table shows the five data sources along the top and other places so they should be visible at all times. The sources are UAH, RSS, Hadcrut4, Hadsst3, and GISS.

Down the column are the following:

1. 14ra: This is the final ranking for 2014 on each data set.

2. 14a: Here I give the average anomaly for 2014.

3. year: This indicates the warmest year on record so far for that particular data set. Note that the satellite data sets have 1998 as the warmest year and the others have 2014 as the warmest year.

4. ano: This is the average of the monthly anomalies of the warmest year just above.

5. mon: This is the month where that particular data set showed the highest anomaly. The months are identified by the first three letters of the month and the last two numbers of the year.

6. ano: This is the anomaly of the month just above.

7. y/m: This is the longest period of time where the slope is not positive given in years/months. So 16/2 means that for 16 years and 2 months the slope is essentially 0. Periods of under a year are not counted and are shown as “0”.

8. sig: This is the first month for which warming is not statistically significant according to Nick’s criteria. The first three letters of the month are followed by the last two numbers of the year.

9. sy/m: This is the years and months for row 8. Depending on when the update was last done, the months may be off by one month.

10. Jan: This is the January 2015 anomaly for that particular data set.

11. Feb: This is the February 2015 anomaly for that particular data set, etc.

17. ave: This is the average anomaly of all months to date taken by adding all numbers and dividing by the number of months.

18. rnk: This is the rank that each particular data set would have for 2015 without regard to error bars and assuming no changes. Think of it as an update 35 minutes into a game.

| Source | UAH | RSS | Had4 | Sst3 | GISS |
|---|---|---|---|---|---|
| 1. 14ra | 6th | 6th | 1st | 1st | 1st |
| 2. 14a | 0.170 | 0.255 | 0.564 | 0.479 | 0.74 |
| 3. year | 1998 | 1998 | 2014 | 2014 | 2014 |
| 4. ano | 0.482 | 0.55 | 0.564 | 0.479 | 0.74 |
| 5. mon | Apr98 | Apr98 | Jan07 | Aug14 | Jan07 |
| 6. ano | 0.742 | 0.857 | 0.832 | 0.644 | 0.96 |
| 7. y/m | 18/4 | 18/7 | 0 | 0 | 0 |
| 8. sig | Nov92 | Feb93 | Nov00 | Sep95 | Aug04 |
| 9. sy/m | 22/9 | 22/6 | 14/9 | 19/11 | 11/0 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 10. Jan | 0.277 | 0.367 | 0.688 | 0.440 | 0.81 |
| 11. Feb | 0.175 | 0.327 | 0.660 | 0.406 | 0.87 |
| 12. Mar | 0.165 | 0.255 | 0.681 | 0.424 | 0.90 |
| 13. Apr | 0.087 | 0.174 | 0.656 | 0.557 | 0.73 |
| 14. May | 0.285 | 0.309 | 0.696 | 0.593 | 0.77 |
| 15. Jun | 0.333 | 0.391 | 0.728 | 0.575 | 0.79 |
| 16. Jul | 0.183 | 0.289 | 0.691 | 0.636 | 0.75 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 17. ave | 0.215 | 0.302 | 0.686 | 0.519 | 0.80 |
| 18. rnk | 3rd | 6th | 1st | 1st | 1st |
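Row 17 can be reproduced directly from rows 10 to 16. The short sketch below copies the January to July 2015 anomalies from the table and averages them; the variable and function names are just illustrative, and GISS is rounded to two decimals because that is how it is reported here.

```python
# January to July 2015 anomalies, copied from rows 10-16 of the table.
monthly_2015 = {
    "UAH": [0.277, 0.175, 0.165, 0.087, 0.285, 0.333, 0.183],
    "RSS": [0.367, 0.327, 0.255, 0.174, 0.309, 0.391, 0.289],
    "Had4": [0.688, 0.660, 0.681, 0.656, 0.696, 0.728, 0.691],
    "Sst3": [0.440, 0.406, 0.424, 0.557, 0.593, 0.575, 0.636],
    "GISS": [0.81, 0.87, 0.90, 0.73, 0.77, 0.79, 0.75],
}


def mean_to_date(values, digits=3):
    """Average of all months so far: sum of the anomalies divided by their count."""
    return round(sum(values) / len(values), digits)


averages = {
    name: mean_to_date(values, digits=2 if name == "GISS" else 3)
    for name, values in monthly_2015.items()
}
for name, average in averages.items():
    print(name, average)
```

The results agree with the "ave" row to the precision shown in the table.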

If you wish to verify all of the latest anomalies, go to the following:

For UAH, version 6.0beta3 was used. Note that WFT uses version 5.6. So to verify the length of the pause on version 6.0, you need to use Nick’s program.

http://vortex.nsstc.uah.edu/data/msu/v6.0beta/tlt/tltglhmam_6.0beta3.txt

For RSS, see: ftp://ftp.ssmi.com/msu/monthly_time_series/rss_monthly_msu_amsu_channel_tlt_anomalies_land_and_ocean_v03_3.txt

For Hadcrut4, see: http://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/time_series/HadCRUT.4.4.0.0.monthly_ns_avg.txt

For Hadsst3, see: http://www.cru.uea.ac.uk/cru/data/temperature/HadSST3-gl.dat

For GISS, see: http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts+dSST.txt

To see all points since January 2015 in the form of a graph, see the WFT graph below. Note that UAH version 5.6 is shown. WFT does not show version 6.0 yet. Also note that Hadcrut4.3 is shown and not Hadcrut4.4, which is why the last few months are missing for Hadcrut.

As you can see, all lines have been offset so they all start at the same place in January 2015. This makes it easy to compare January 2015 with the latest anomaly.

Appendix

In this part, we are summarizing data for each set separately.

RSS

The slope is flat since January 1997 or 18 years, 7 months. (goes to July)

For RSS: There is no statistically significant warming since February 1993: Cl from -0.023 to 1.630.

The RSS average anomaly so far for 2015 is 0.302. This would rank it as 6th place. 1998 was the warmest at 0.55. The highest ever monthly anomaly was in April of 1998 when it reached 0.857. The anomaly in 2014 was 0.255 and it was ranked 6th.

UAH6.0beta3

The slope is flat since April 1997 or 18 years and 4 months. (goes to July using version 6.0beta3)

For UAH: There is no statistically significant warming since November 1992: Cl from -0.007 to 1.723. (This is using version 6.0 according to Nick’s program.)

The UAH average anomaly so far for 2015 is 0.215. This would rank it as 3rd place, but just barely. 1998 was the warmest at 0.483. The highest ever monthly anomaly was in April of 1998 when it reached 0.742. The anomaly in 2014 was 0.170 and it was ranked 6th.

Hadcrut4.4

The slope is not flat for any period that is worth mentioning.

For Hadcrut4: There is no statistically significant warming since November 2000: Cl from -0.008 to 1.360.

The Hadcrut4 average anomaly so far for 2015 is 0.686. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.832. The anomaly in 2014 was 0.564 and this set a new record.

Hadsst3

The slope is not flat for any period that is worth mentioning.

For Hadsst3: There is no statistically significant warming since September 1995: Cl from -0.006 to 1.842.

The Hadsst3 average anomaly so far for 2015 is 0.519. This would set a new record if it stayed this way. The highest ever monthly anomaly was in August of 2014 when it reached 0.644. The anomaly in 2014 was 0.479 and this set a new record.

GISS

The slope is not flat for any period that is worth mentioning.

For GISS: There is no statistically significant warming since August 2004: Cl from -0.118 to 1.966.

The GISS average anomaly so far for 2015 is 0.80. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.96. The anomaly in 2014 was 0.74 and it set a new record.

Conclusion

There might be compelling reasons why each new version of a data set shows more warming than cooling over the most recent 15 years. But after so many of these instances, who can blame us if we are skeptical?

Fraud, often seen on Wall Street over the years, is apparently not limited to the financial world. This is a sad, dark period for science.

One alternative is that the satellite and surface data sets represent reality. Assuming this, I hope that some clever climate scientists are busy figuring out how to modify the current understanding of heat flow in the atmosphere to explain why, for almost two decades, the surface warms while the troposphere does not.

These sorts of ‘ultraviolet catastrophe’ moments in science can lead to tremendous new understanding of our world.

When there are constant adjustments every year, I think the explanation lies elsewhere.

“Who controls the past controls the future. Who controls the present controls the past.”

― George Orwell, 1984

The Urban Heat Island Effect could be a possible explanation of warmer surface temperatures couldn’t it?

Cal.

“The Urban Heat Island Effect could be a possible explanation of warmer surface temperatures couldn’t it?”

The UHI is not an explanation for the rise in the global average temperature.

There has to be more than ideology behind this. Those who are making money out of this or who intend to make money out of it should be named and shamed.

One has to have a conscience for shaming to be effective.

Don’t underestimate the power of ideology. It’s quite sufficient on its own for True Believers.

I’d put the point a different way. Ideological choices and choices for making money are both influenced by the existing incentive structure.

In the public space, moral preening, boasting of good intentions, and “praying in public” are strongly incentivized. Skillful praying in public is closely correlated with increased status and increased personal power. That has always been true, right back to biblical times.

It is true that the specific ideology used to make the point of personal “good intentions” changes over time. Nonetheless there are common elements throughout history. The key one is a strong claim of altruistic intent. “I deserve status, because I’m dis-interested. If you disagree with me, it must be because you are not well intended. Thus you should be on the moral defensive and silent in my presence while my ideas, and I, take precedence.”

Liberalism and environmentalism are extremely powerful tools for this sort of praying in public, despite being highly defective guides to workable policies. Hence the dominance of liberalism/environmentalism in the media, academia, and the arts.

The people at the very top want an excuse to tax us.

Agreed, but it’s not just those at the top; a great many citizens want to pay a carbon tax.

In my lifetime I never thought I’d see regular folks actively campaigning for higher taxes, it is entirely counterintuitive, but this is what we are seeing.

It’s astonishing and deeply troubling to me.

Klem October 1, 2015 at 6:49 am

“Agreed, but it’s not just those at the top; a great many citizens want to pay a carbon tax.”

And that highlights their fraud. Any person can voluntarily pay extra taxes any time they wish.

Klem, remember that the Spanish-American War was based on a set of fables generated by a NYC newspaper and repeated across the nation. Eventually real bullets flew and real people died for reasons fabricated from whole cloth. There was literally nothing behind it.

More recent encounters may have had a similar provenance.

The war on carbon doesn’t have to have a sinister mind looking for fame and other people’s money. Sometimes people do things for baseless or indiscernible or frivolous reasons. Some things were done ‘because they could’.

And a tax for what? A tax that has nothing to actually show for it is money that very easily finds its way into the hands of the “ideologists.”

Oh n-e-v-e-r !

FOUR BILLION DOLLARS PER DAY……buys a lot of ideology.

Buys a lot of naive folks too, apparently ; )

And explains why there are so many pigs at the trough.

Many of them believe that they are saving the world, and this justifies their behavior.

10 years ago that might have been true, but today they just have their snouts so deep in the trough of public money that they cannot see anything beyond the dosh at the end of their snout.

“There has to be more than ideology behind this. Those who are making money out of this or who intend to make money out of it should be named and shamed.”

First, why does there need to be more than ideology behind this? Ideology is a powerful force. See all the true-believing Chicken Littles running around denigrating realists. They’re not making money on it. But they do feel they are “superior” and living on a higher moral plane than all of us mouth-breathing “deniers.”

Second, you should subscribe to Tony Heller’s blog: Real Climate Science. He calls out the scammers by name every day.

Tony has been naming and shaming loudly and clearly for years. WUWT is sheepishly pretending that Tony does not exist.

He does, and he has the scammers by the tail. He’s not interested in being accepted by them, nor in having a peer reviewed paper published. Tony is the climate data fraud whistleblower responsible for this entire discussion.

http://realclimatescience.com/

Tony is falsifying flawed ideas and false claims in brilliant ways, at a tremendous rate – that is science.

The internet never forgets.

The “it’s the money” explanation should itself be examined. There seems to be an intrinsic appeal to explanations of this sort as opposed to ‘force-of-belief’ explanations. But only the latter explains the near-suicidal activities of the current political and intellectual elites. (Among other references, check out the Roosters of the Apocalypse book – that wasn’t done for the money.)

Kent,

“First, why does there need to be more than ideology behind this?”

An odd way to put the question, to my mind. Why not; Why must there be little more than ideology behind this? There are more forces in the world than ideology (and I don’t doubt some of them shape ideologies).

This hardly looks like the work of a few climate modelers, that’s one force that is not exactly overpowering. I see no reason to mock or belittle those who smell a bigger rat than you . . it’s not like such things don’t happen on this planet, ya know? ; )

John,

““First, why does there need to be more than ideology behind this?”

An odd way to put the question, to my mind. Why not; Why must there be little more than ideology behind this? There are more forces in the world than ideology (and I don’t doubt some of them shape ideologies).

This hardly looks like the work of a few climate modelers, that’s one force that is not exactly overpowering. I see no reason to mock or belittle those who smell a bigger rat than you . . it’s not like such things don’t happen on this planet, ya know? ; )”

I apologize for the lack of clarity.

What I meant was: The entire scam is based on ideology!

The ideology is Politically Correct Progressivism.

This ideology is based on a hatred of Normal-America. See here for a brief explanation of its belief system:

http://intelctweekly.blogspot.com/2014/07/politically-correct-progressive-belief.html

While the scammers have figured out how to divert money into their pockets, 99% of true believers make not a penny from the scam. Their reward is social–they feel better than you. They feel superior. They are members of a support network of fellow hate-mongers, reaping the benefits of being the “in-crowd.”

It is pure, 100% ideological.

Those in the realist group who constantly harp, “It’s all about the money.” are missing the power of the anti-civilization PC-Progressive ideology.

Thanks for the thoughtful reply, Kent, and perhaps I should have been clearer myself. Though these days language itself is treacherous it seems, and virtually bound to lead to confusion.

“The entire scam is based on ideology!

The ideology is Politically Correct Progressivism.”

I don’t doubt that many people have some sort of faith in something along the lines of what you named; it’s that I think it’s pretty much a scam too, which the perps and their front-men are hiding behind. A rubbery conceptual hodgepodge that stretches and shifts to suit the propaganda of the day, not a true ideology.

To my mind, you are essentially saying the same thing when you say something like this:

“While the scammers have figured out how to divert money into their pockets, 99% of true believers make not a penny from the scam. Their reward is social–they feel better than you. They feel superior. They are members of a support network of fellow hate-mongers, reaping the benefits of being the “in-crowd.””

Perhaps ‘feeliology’ is a better word for such a “movement” ; )

John,

The short description of the PC-Prog ideology is a summary of my extensive research and analysis of the origins of this belief system. I’ve written a book on it, in fact.

For full details, see this short video:

http://willingaccomplices.com/willing_accomplices/videos

Suffice it to say that you’re on the right track (“feelology”), but are missing the historical background of this ideology of hate, destruction, and devastation.

kentclizbe,

Thanks, for the video and your loyal service, past and present.

I agree by and large with your research and its implications, and with the significant impact that what you’ve exposed has had on our society, though I believe this “movement” began long before the Soviet Union existed, and indeed was responsible to no small extent for its establishment.

Have you looked into Albert Pike, Blavatsky/theosophy/luciferianism and so on? If not I suggest you do so, for I think this toxic dark cloud on our world can be traced way back to . . well, I’m a follower of certain Jesus, so, a certain garden long ago.

John,

Sorry, all the Theosophists, occultists, and other whacky cults are but pimples on a gnat compared to the Comintern.

The Comintern ran a massive, global covert operation designed to destabilize, denigrate, disorganize, and destroy its enemies. The Number One Enemy, and the greatest obstacle to its domination of the world was the exceptionalism of America and America’s rugged individualism based in capitalism, freedom and liberty.

The operations run by the Comintern had many different objectives, but the most successful, echoing through the decades through the mouths of Willing Accomplices like Gavin Schmidt, Michael Mann, and their allies, was the covert influence operation designed to denigrate American capitalism.

Its objective was to make Americans hate the system that created our greatness.

The key to the operation was Willi Muenzenberg’s popular front approach. He built, using Comintern money, front organizations across the US. These organizations, which are very much like today’s Greenpeace, PETA, and others made their members feel special, smart, cool, better than the mouth-breathers.

That was the genius in the operations–this “coolness” is what gave this anti-Normal-America attitude a life of its own. This attitude continues till today. It is reflected in the beliefs of PC-Progressives. It is our President’s belief system. It is the belief system of the Democrat Party.

Kent Clizbe,

I think this guy reported exactly what’s going on.

(1-page transcript.)

DBS,

“Bezmenov”

Unfortunately Bezmenov is in the category of “fake but accurate.”

He was a project of the John Birch Society–who meant well, but were easily fooled, and played very loosely with the truth and facts.

My extensive research into the KGB ops, including interviews with as many living KGB operators as possible, confirmed my assessment of Bezmenov.

He was actually a journalist for TASS. He was NOT a KGB operator. As KGB used TASS extensively for cover, the journalists saw the KGB ops, and were frequently co-opted for specific uses. But they were not privy to what was happening in the espionage operations.

Interestingly, because of compartmentation of operations, most KGB operators had no understanding of the overall ops and goals of Soviet espionage. Even an actual operator would not have discussed the strategic goals and implications of their covert actions. They were focused on the operational level–recruiting an agent, inserting a piece of misinformation in a paper, at a low level.

Bezmenov, like a trained monkey, just told the story that John Birch wanted told. He was playing a role, not totally out of character, but not who he was. And the script he read, while very close to reality, was also not the truth.

I discuss these issues in detail in my book: Willing Accomplices: How KGB Covert Influence Operations created Political Correctness.

http://www.willingaccomplices.com

kentclizbe,

I’m not denying in any way that the things you speak of have happened, but rather, am suggesting that there aren’t really many communists among the movers and shakers of this world. Instead, the goal is elitism, with a tiny group of “elites” ruling over an essentially captive humanity. Communism is just a cover, a sort of cloak which allows some hyper-wealthy psychopaths to avoid detection.

These folks will use any and all ideologies in whatever way suits their purposes, because once you’ve got total control, it really doesn’t matter what you promised anyone. What they have in mind is no more communism than a slave plantation is, even if all the slaves got treated roughly the same, I suggest.

PS~ The current reigning political cloak is socialism, it seems clear to me, and what some call the “social justice” meme in particular, of which the CAGW shake-down is a fine example.

PPS~ From the article you linked to,

“Yuri Bezmenov: The immediate thing that comes to my mind, of course, there must be an immediate, very strong national effort to educate people in the spirit of real patriotism, number one. Number two, explain the real danger of socialist, Communist, whatever welfare state, big brother government. If people will fail to grasp the impending danger of their development, nothing ever can help United States. You might kiss goodbye to your freedoms, including freedoms to homosexuals, prison inmates, all this freedom will vanish, evaporate in five seconds, including your precious life.”

He sees what I see, it seems to me.

John,

Sorry to be redundant, but, regardless of the content of Bezmenov’s comments, he is fake.

His commentary is almost exactly like Dan Rather’s case against George W. Bush: “Fake but accurate.”

Bezmenov is NOT what he claims to be. His commentary is just a regurgitation of the John Birch point of view. His story is actually sort of pitiful. All defectors are pitiful. They usually have minimal actual useful information. Bezmenov clearly had virtually NO useful information, and therefore was sent out to pasture pretty much immediately.

The John Birchers picked him up after he was pumped dry by the USG. And he began reading the script they wanted him to read. His accent gave him credibility.

What you’re seeing as accurate is accurate, to an extent. Exactly like Rather’s info on Bush’s National Guard experience was accurate–but fake.

I’d be happy to send you a copy of Willing Accomplices so you can read the whole story. The context of KGB officers’ access, knowledge, and experience with their own agency’s legacy operations is important: Even actual KGB officers do NOT have knowledge of strategic goals, and historic operations.

Bezmenov was NOT KGB. His “insights” are just regurgitated John Birch talking points.

kentclizbe,

To me, it’s as though we were discussing a snake oil salesman, and you were telling me the guy really believes the stuff in the bottles will cure all sorts of diseases. We agree that stuff won’t cure squat, but I believe the guy knows that, and is not himself a true believer in the miracle of snake oil . . he’s a true believer in lying to get what he wants, I say.

Or we were discussing the drive to ban guns, and you were telling me those behind it were overly concerned about the dangers guns pose to innocent children, and I was trying to explain that’s just a cover, they are faking that concern to get the citizenry disarmed for other reasons.

Kent C,

I agree with your point of view, and I really would like to be 100% on board. But when you write:

…regardless of the content of Bezmenov’s comments, he is fake.

That’s an ad hominem logical fallacy. It doesn’t matter who said it, what matters is if it is factual or not. And he isn’t the only one saying the same thing. Further, it detracts from anything we can do to counter the threat.

Bezmenov’s interviews were made in the 1980’s. They have been amazingly prescient. I don’t give a hoot if he was KGB, or merely was acquainted with the KGB. The things he said would happen have now taken place.

Do you dispute that the country has been ‘demoralized’? Do you dispute that ‘demoralized’ means that the country has lost its moral compass? Just look at all the incessant lionizing of the LGBT&etc. groups, the demonizing of the Boy Scouts, which was built based on morality, the vicious tribalism, promoted even by the President, the government’s flagrant ignoring of our immigration laws, the ‘politically correct’ movement, the attacks on the 1st and 2nd Amendments and the Constitution itself, the intolerance of free speech on campus, and I could go on for about six more paragraphs. You get the point, I’m sure.

Bezmenov accurately outlined the KGB’s plan, in detail. When the Soviets realized they could not defeat the West militarily, they switched tactics and targeted what they called the ‘organs’ of society: the media, the education complex, churches, and now the scientific establishment.

Their plan has been amazingly successful. What they were incapable of doing with force, they have done relatively easily by infiltrating the ‘organs of society’.

Prof Richard Lindzen has written about the corruption of science (see Sec. 2). All it takes is one or two activists on a Board to sharply re-direct the organization. I have been on such boards, and I know how easy it is to plan out a change in direction. Everyone on the Board wants something, so votes are easy to trade. Eventually, a majority of the Board (typically six individuals) agrees to take a public position on ‘climate change’.

Isn’t it amazing that several dozen national professional organizations have all used almost identical language warning of “dangerous climate change” in their position statements? What are the odds of that? Organizations are like people: no two are exactly alike. Certainly a good fraction of those organizations should be voicing the position of scientific skeptics of the ‘dangerous AGW’ alarm. They should at least be saying, “More study is needed.” Or, “The evidence shows that the rise in CO2 is caused by rising temperatures, not vice-versa”. Or, “Global warming stopped many years ago, so those who put forth the man-made global warming hypothesis must reconsider”. Or any number of skeptical positions.

But, no. They all say exactly the same thing: that human emissions are causing climate change. That gives the average person pause: ‘If all the professional organizations are warning us about “carbon”, maybe we should listen.’ Even organizations that have little or nothing to do with science, or with the question of AGW have issued official opinions, and those opinions are all the same.

But human nature being what it is, there will always be disputes, among scientists in particular. A rational person does not accept that because there are still no measurements quantifying what they all insist must be happening, that there are simply no dissenting organizations skeptical of the CAGW narrative.

That is not reasonable. The total agreement on something that lacks evidence, and which many eminent scientists strongly dispute, indicates that the unanimous chorus of professional organizations’ statements must have an external cause. Only credulous, naive people would believe that they all agree on something for which there is no measurable scientific evidence whatever, and which has been flatly contradicted by the absence of any global warming for nearly twenty years.

You can call me a ‘conspiracy theorist’ if you like. But to me it just does not make sense that everything observed in this regard is only a coincidence. Always ask yourself: “Cui bono?” The answer will be pretty clear.

DBS,

“Accurate but fake” won’t cut it.

I’m saying that as an expert on Comintern/KGB covert influence operations designed to destroy America’s culture.

In fact, I wrote a book on this subject.

Here’s a brief overview, in a short video:

http://willingaccomplices.com/willing_accomplices/videos

Bezmenov read John Birch scripts. Their scripts were pointing in the right direction, but could be inaccurate, and based on much fakery. Their bad faith gave the movement to counter-act the destruction a bad name. With very bad results.

My book covers these issues, providing the who/what/where/when/why/how of the ACTUAL operations run by the Comintern.

Would be happy to send you a copy. It’s on Amazon.

Kent,

I have the feeling that my point didn’t register. It doesn’t matter who said what. That only makes the discussion an ad-hom digression.

What matters is: was the prediction accurate? It appears to me that it was very accurate. Morality is under attack from every angle, thus: demoralization. A very effective tactic in a democracy like ours.

I don’t care if Pee Wee Herman said it, the question is, what do we do about it? Any answers?

“I have the feeling that my point didn’t register. It doesn’t matter who said what. That only makes the discussion an ad-hom digression.”

DBS,

The first rule of vetting, sourcing, intelligence collection and reporting, researching is always: Consider your source. That’s not what ad hominem is. A fraud is a fraud. A bad source is a bad source. I’d be happy to provide some lessons on this. I did it for a living.

If your source is a fraud, it does not matter how achingly attractive his sweet words are. He is a bad source, and is making it up.

However much it pains you, you must reject that source.

I’d highly recommend you read my book, Willing Accomplices. It is the true story of the Comintern covert action operations against American culture.

It’s the real who/why/what/where/when/how of the operations.

Suffice it to say that Bezmenov’s imaginings were on the right track, but reality is always stranger than fiction.

http://www.willingaccomplices.com

Kent,

We’re talking about two different things. You’re trying to trash someone, and I’m saying that when someone makes accurate predictions, he has credibility. Rather than argue about it, I’ll just wish you well selling your book.

DBS,

This is not about selling my book. I’ll GIVE you a copy. Send me an email with your address, and you’ll have one asap. kent@kentclizbe.com

This is about sourcing. Proper sourcing. It’s crucial in research. Just because you found a video on the internet, and a guy with a cool accent says stuff you agree with does not make it accurate, or true.

You talk about logical fallacies. You are caught in one yourself. It’s the Dan Rather, “fake but accurate” syndrome.

Wanting it to be so does not make it so.

“Defenders of CBS claimed that while the memos themselves were fakes, the story was nonetheless still true, a laughingstock of an argument that came to be known as “fake but accurate,” and sometimes as “truthiness.” This impulse to dismiss fakery in the service of larger truths…”

http://hotair.com/archives/2012/03/22/the-return-of-fake-but-accurate/

“Bezmenov himself is a fake, but the story he tells is nonetheless still true….”

It’s an easy trap to fall into. And one which should always be avoided, should one wish to remain on the side of truth and rightness.

As Ed Morrissey said above, that’s “a laughingstock of an argument.” Don’t be that guy!

Drop me a note. Happy to send you one.

All the best.

It would consume less space to list the names of those that are not deliberately doing this for the money. But it would ruin their chances of getting funded.

Is the raw data being saved? That is the most important thing.

I wonder why – if we have the raw data – we never see a raw data graph…?

Certainly wasn’t in office moves at the UEA CRU during the 1990’s. Only adjusted data exists now.

Surely raw data exists in the original reports from Met stations? A particular UEA collection may have been lost, but it would be hard to lose ALL the original data. Wouldn’t it?

Apparently not as it, still to this day, cannot be presented as the raw data.

The two MMs have been after the CRU station data for years. If they ever hear there is a Freedom of Information Act now in the UK, I think I’ll delete the file rather than send to anyone.

from: Phil Jones

subject: Re: For your eyes only

to: “Michael E. Mann”

All the raw data still exists.

If you use raw data then the global warming is worse.

The net effect of all adjustments to all records is to COOL the record

The two authors of this post don’t get it.

Adjustments COOL the record..

Cool the record. Period. [snip]

Mosher. You keep saying that. Bring data.

In God we trust, all others bring data

– W. Edwards Deming

See previous posts here:

http://wattsupwiththat.com/2014/11/05/hadcrut4-adjustments-discovering-missing-data-or-reinterpreting-existing-data-now-includes-september-data/

and

http://wattsupwiththat.com/2013/05/12/met-office-hadley-centre-and-climatic-research-unit-hadcrut4-and-crutem4-temperature-data-sets-adjustedcorrectedupdated-can-you-guess-the-impact/

Whether the adjustments cool or warm the overall record is not what this post is about. What is very clear is that the latest 16 years always seem to show warmer anomalies with each revision.

Mosher,

Dave in Canmore, below, has the perfect response to you: “Zeke’s “excellent discussion on Curry’s blog” is most interesting. To highlight the need for adjustments to the original observations, Zeke describes at length what poor quality the data is due to station moves and all manners of problems. What amazes me is why the discussion doesn’t just end there?

The discussion amounts to “of course we have to adjust the data- look how awful it is!”

That they have convinced themselves they can convert bad data to good is the real problem.”

Is that not what we should expect, Mosh? If I were going to try and fool someone with temp. data I would flatten and cool almost the entire record. The only part that needs a little warm bias (or less cold bias) is maybe the last 50 years but certainly the last 15. As today’s data aged it would be cooled and flattened. This not only achieves the desired result but has the added bonus of allowing me to say things like “Cool the record. Period.” Unfair? Probably. But, I consider your post little more than misdirection as is. Maybe all the supporting points were in the snip.

No, the [snip] on Mosher was an insult. I saved him from himself and his poor choice of words.

Mr. Mosher’s comment seems to be the literary equivalent of the pretty girl, scantily clad, in a magic act.

* raises arms fetchingly…look over here, boys…look over here.*

Patrick [October 1, 2015 at 3:30 am] says that only adjusted data exists now. And then comes Steven Mosher [October 1, 2015 at 7:06 am] and says that “All the raw data still exists.” Who is right?

I certainly would like to know because the biggest temperature theft of all took place in the eighties and nineties when an entire hiatus (warming pause) was covered up by introducing a fake warming into official temperature curves. I discovered this in 2008 while doing research for my book “What Warming?…” There was a no-warming period that lasted from 1979 to 1997, an eighteen-year stretch. The raw data still exist in UAH and RSS satellite databases. But when I went to cross check with official temperature records they were gone and in their place was a so-called “late twentieth century warming.” I traced the origin of this false warming to HadCRUT3 and even put a warning about it into the preface of my book when it came out. But absolutely nothing happened. They have been using that fake temperature record with impunity since 1997, the end year of the hiatus. It makes the warming of the eighties and nineties look a lot more formidable than it actually is. Since then I have also determined that GISS and NCDC were co-conspirators with HadCRUT3 in this coverup. All three had their databases adjusted by the same computer and the computer left its footprints on all three publicly available temperature curves, in exactly the same places. This is an actual scientific crime, data falsification to give the wrong impression of global temperature in the eighties and nineties. Recently we heard that twenty pseudo-scientists have written a letter to the President asking him to use the RICO law to prosecute those whose science does not agree with theirs. That is an outrage as well as a stupidity because they obviously do not know (or pretend not to know) how science works. But having brought up the RICO law, I think there really is something to apply it to. It should be used to investigate how the fake warming in the eighties and nineties was created, who authorized it, who did it, and why they took no action to desist when I exposed their criminal activity.
Needless to say, it should be under the criminal and not the civil section of the law and appropriate penalties should be applied.

Mosher, even setting aside the credentials of rgb, after observing the writings of both of you for a few years I would always put my money on him “getting it” before you. Just like instinctively knowing who one would rather share a foxhole with, there is enough evidence to trust rgb implicitly on any of the science he presents.

When you are outclassed Steven, it is best to simply accept it.

“The net effect of all adjustments to all records is to COOL the record” – Steven Mosher

That’s because the past is longer than the present. If you cool the past and warm the present, then of course the “net effect” will be to COOL the record. If that’s not what you mean, you can demonstrate it by showing a side-by-side graph of raw vs adjusted temperature data for recent years. If the raw data for this century is warmer than the adjusted data, you will be proven right. But if not, you are deliberately trying to deceive us.
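A toy illustration of the arithmetic behind that argument, for anyone who wants to check it. The adjustment values and the 100-year/20-year split below are invented purely for illustration and are not taken from any temperature product; the sketch only shows that a negative mean adjustment and a positive added trend can coexist.

```python
# Hypothetical adjustments (adjusted minus raw, in degC) for a 120-year record.
# These numbers are made up for illustration; they are not real data.
years = list(range(1895, 2015))
adjustment = [-0.20 if y < 1995 else 0.10 for y in years]

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

# Mean of all adjustments over the whole record.
mean_adjustment = sum(adjustment) / len(adjustment)

# Because least squares is linear, the slope of the adjustments is exactly
# the amount the adjustments add to the trend of the raw series.
trend_added = ols_slope(years, adjustment)

print(f"mean adjustment: {mean_adjustment:+.3f} degC")
print(f"trend added by adjustments: {trend_added * 100:+.3f} degC/century")
```

Whether real adjustments look anything like this is exactly what a side-by-side raw vs adjusted graph would settle.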

“To highlight the need for adjustments to the original observations, Zeke describes at length what poor quality the data is due to station moves and all manners of problems. What amazes me is why the discussion doesn’t just end there?”

The discussion doesnt end there because your knowledge doesnt end there.

Take TOBS.. easy to detect, easy to correct. Same as correcting for inflation or stock splits.

“Is that not what we should expect, Mosh? If I were going to try and fool someone with temp. data I would flatten and cool almost the entire record. The only part that needs a little warm bias (or less cold bias) is maybe the last 50 years but certainly the last 15. As today’s data aged it would be cooled and flattened. This not only achieves the desired result but has the added bonus of allowing me to say things like “Cool the record. Period.” Unfair? Probably. But, I consider your post little more than misdirection as is. Maybe all the supporting points were in the snip.”

First you argue that the adjustments warm the record

When you find out they actually cool the record,,

pretty funny.

Different groups, different methods, different data sources.

raw data is warmer than adjusted data.

adjustments cool the record..

Unless you cherry pick the 2% of the world with the worst practice ( US ).

but if you look at 100% of the data— adjustments cool the record.

doesnt take a phd to see that

Very fetching Mosh.

Hubba hubba.

To a limited extent, these .PDF files are probably unmolested; the collection is USDA, held at Cornell.

This is one PDF http://usda.mannlib.cornell.edu/usda/waob/weather_weekly//2010s/2012/weather_weekly-12-27-2012.pdf

“That’s because the past is longer than the present. If you cool the past and warm the present, then of course the “net effect” will be to COOL the record. If that’s not what you mean, you can demonstrate it by showing a side-by-side graph of raw vs adjusted temperature data for recent years. If the raw data for this century is warmer than the adjusted data, you will be proven right. But if not, you are deliberately trying to deceive us.”

The past isnt cooled.

https://twitter.com/hausfath/status/564921572096348160

Here is 70% of the data.

See Part 2, Figure 4

red line is raw

black line is adjusted

The past is WARMED!!!

That gives you a lower slope

http://www.metoffice.gov.uk/hadobs/hadsst3/diagrams.html

So

CRU, WARM the past for 70% of the data.

with the land record ( 30%) of the data

The past is cooled

and the NET EFFECT of both is to WARM THE PAST see Zeke figures

Land data

https://twitter.com/hausfath/status/564921572096348160

ocean data

https://twitter.com/hausfath/status/564921572096348160

The ocean which is 70% of the globe has the past warmed by adjustments

The land which is 30% of the globe has the past cooled by adjustments.

The NET of all adjustments is to warm the past… a lower slope
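The weighting being described can be written out as a small sketch. The 70/30 area split is from the comment; the two adjustment values are placeholders chosen for illustration, not figures from HadSST3, CRUTEM, or any other product.

```python
# Area fractions as stated in the comment above.
OCEAN_FRACTION = 0.70
LAND_FRACTION = 0.30

def net_adjustment(ocean_adj, land_adj):
    """Area-weighted global adjustment (degC) from ocean and land adjustments."""
    return OCEAN_FRACTION * ocean_adj + LAND_FRACTION * land_adj

# Placeholder adjustments to an early part of the record (adjusted minus raw).
# Positive = past warmed, negative = past cooled. Values are hypothetical.
ocean_past = 0.30
land_past = -0.20

net_past = net_adjustment(ocean_past, land_past)
print(f"net adjustment to the past: {net_past:+.2f} degC")
```

With these placeholder values the net comes out positive (past warmed), but the sign depends entirely on the relative magnitudes, so the real figures are what matter.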

Now, all skeptics got is a cherry pick of the US, USHCN, which we dont even USE.!!!!!

So guess what. If you take a distribution of adjustments you will find that they run from negative to positive

The Mean adjustment COOLS the record.

But because there is a distribution of adjustments, people with Phds can look at one tiny slice

and show “positive” adjustments. almost Mannian

Can you point to a specific newer version of GISS or HadCrut that showed a lower global warming trend than the previous version?

Steven Mosher October 2, 2015 at 10:35 am finally shows us graphs of temperature corrections. Good idea. It might have eliminated some nitpicking in the past. I find that now I can agree with Zeke Hausfather (5:59 PM – 9 Feb 2015) whom Mosher quotes saying that:

“… global temperature adjustments actually reduce the long-term warming trend, mostly due to oceans.”

This does not mean that I am all for corrections. Michael Crichton (that Jurassic guy) gave a talk to the Congress in which he was strongly opposed to correcting any original data. You should read his comments on how this is handled in the bio-medical field. My chief interest at the present time is that the corrections that are huge in the early twentieth century drop to practically nothing after 1980. It so happens that 1980 is the beginning of the hiatus I referred to in my previous comment. I smell a connection, not a coincidence. In the real world the temperature curve turns right at that point and becomes horizontal until the beginning of 1997, the start of the super El Nino of 1998. But the temperature on his graph turns upward instead and by 1997 the temperature rise has reached 0.3 to 0.4 degrees Celsius. This rise is fictional as explained in my previous comment. There are also five El Nino peaks on that interval but they are all wiped out by using his 5-year smoothing factor. Supposedly smoothing is meant to get rid of noise but El Nino is not noise, it is an integral part of global temperature change. Its natural frequency is five years, nicely gotten rid of by 5-year smoothing. To show El Nino properly you should create a temperature curve with one week intervals. At such high resolution the cloudiness variable becomes visible and makes it necessary to use a magic marker to show the global trend. Only that way will you know what the temperature is really doing. And that is what you will find in my book. Looking at other graphs where they did not wipe out the El Ninos, such as HadCRUT, I have already seen that they raised up the entire curve including all the El Ninos to create the temperature rise in the eighties and nineties. In eighteen years since the fake warming was completed there has been time for this fake warming to contaminate all the so-called “official” temperature records.
I have spoken of this forgery periodically for five years but got no response until a week ago. One of my readers has just unearthed a NASA document proving that they knew about the lack of warming in 1997. By a coincidence, the boss at NASA in 1997 was James Hansen. Hansen at one time opined that the real temperature should follow the high points of the observed temperature curve.
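The smoothing point in the comment above can be checked in the idealized case. This is only a sketch: it assumes a perfectly regular 5-year cycle, as the comment does, and uses a synthetic sinusoid rather than any real temperature series. Real ENSO events are irregular, so a real record would not cancel this cleanly.

```python
import math

PERIOD_MONTHS = 60   # assumed 5-year cycle, per the comment above
WINDOW_MONTHS = 60   # 5-year running mean

# Synthetic signal: a pure 5-year sinusoid of amplitude 0.2 degC (illustrative only).
signal = [0.2 * math.sin(2 * math.pi * m / PERIOD_MONTHS) for m in range(240)]

def running_mean(values, window):
    """Boxcar mean over each run of `window` consecutive points."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

smoothed = running_mean(signal, WINDOW_MONTHS)

# A window equal to one full period averages the cycle away almost exactly.
print(f"max |raw|      = {max(abs(v) for v in signal):.3f}")
print(f"max |smoothed| = {max(abs(v) for v in smoothed):.3f}")
```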

“Surely raw data exists in the original reports from Met stations? A particular UEA collection may have been lost, but it would be hard to lose ALL the original data. Wouldn’t it?”

Yes Jones says as much in the mails.

he lost SOME of his copies of the 5% of the data that didnt come from GHCN

he lost his LOCAL COPIES of NWS data.

tin foil hats here are getting annoying

Steven Mosher

Which temperature data product has produced the curves you are showing?

Are these curves available any other place and followed by more text than the 140 character comment by Zeke at Twitter?

Not sure if the raw is available, but their reports are. I have been updating my list after a H/T from Nick at WUWT for the find. http://wattsupwiththat.com/2015/02/09/warming-stays-on-the-great-shelf/#comment-1856325

Now with August.

August 2015 – The combined average temperature over global land and ocean surfaces for August 2015 was 0.88°C (1.58°F) above the 20th century average of 15.6°C (60.1°F) => 0.88°C (1.58°F) + 15.6°C (60.1°F) = 16.48°C (61.68°F)

http://www.ncdc.noaa.gov/sotc/global/201508

July 2015 – The combined average temperature over global land and ocean surfaces for July 2015 was the highest for July in the 136-year period of record, at 0.81°C (1.46°F) above the 20th century average of 15.8°C (60.4°F), surpassing the previous record set in 1998 by 0.08°C (0.14°F).

=> 0.81°C + 15.8°C = 16.61°C or 1.46°F + 60.4°F = 61.86°F

http://www.ncdc.noaa.gov/sotc/global/201507

May 2015 – The combined average temperature over global land and ocean surfaces for May 2015 was the highest for May in the 136-year period of record, at 0.87°C (1.57°F) above the 20th century average of 14.8°C (58.6°F),

=> 0.87°C + 14.8°C = 15.67°C or 1.57°F + 58.6°F = 60.17°F

(1) The Climate of 1997 – Annual Global Temperature Index “The global average temperature of 62.45 degrees Fahrenheit for 1997” = 16.92°C.

http://www.ncdc.noaa.gov/sotc/global/1997/13

(2) 2014 annual global land and ocean surfaces temperature “The annually-averaged temperature was 0.69°C (1.24°F) above the 20th century average of 13.9°C (57.0°F)” = 0.69°C above 13.9°C => 0.69°C + 13.9°C = 14.59°C

http://www.ncdc.noaa.gov/sotc/global/2014/13

16.48°C >> 16.61 >> 15.67 >> 16.92 << 14.59
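The sums above can be reproduced with a few lines, for anyone who wants to check them. The anomalies and baselines are the ones quoted in the list; note that each baseline is the 20th century average quoted for that particular month or year, so the results are not all measured against the same reference.

```python
def f_to_c(deg_f):
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0

# (label, anomaly in degC, quoted 20th-century baseline in degC), as listed above.
reports = [
    ("Aug 2015", 0.88, 15.6),
    ("Jul 2015", 0.81, 15.8),
    ("May 2015", 0.87, 14.8),
    ("2014 annual", 0.69, 13.9),
]

# Absolute temperature = anomaly + baseline for the same period.
absolute = {label: round(anom + base, 2) for label, anom, base in reports}

# The 1997 figure was quoted directly as an absolute value in Fahrenheit.
absolute["1997 annual"] = round(f_to_c(62.45), 2)

for label, temp in absolute.items():
    print(f"{label}: {temp:.2f} degC")
```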

Note to rgb on your “average anomaly” report: they cannot even keep the 20th century average (15.6°C or 15.8°C or 14.8°C or 13.9°C) the same 15 years after it was over?

Some fudging you may want to also look into?

Since 1997 was not even the peak year, which number do you think NCDC/NOAA thinks is the record high. Failure at 3rd grade math or failure to scrub all the past. (See the ‘Ministry of Truth’ 1984).

Those are averages and anomalies for those particular months…

The cooling I have noted is the cooling of the temperature spike in the late 30’s-early 40’s, bringing it under the current temp. The second effect is to change the slope of the trend from about 1920 to 1940. This shows a new reader that there was no ‘faster temperature rise’ in the 20’s than there has been in the 1976-1996 period.

Mosher claims that the TOB and station movements have to be corrected for. Well, OK as long as the raw data is available for people to try other corrective measurements. Were there so many changes between 1920 and 1940 that it requires these adjustments?

I train lab staff to process raw data. They are not allowed to delete or modify raw data, but have to show their work on a copy file so reviewers can render their own version from the raw data to see how the overall result is modified, if at all. Judgement is frequently required. That is the real world. But there is no good support for the climate catastrophism that pervades the media. The temperature record sucks, the models suck, the ad hominem sucks, the profligate waste sucks, the subsidy farming sucks, the destruction of the environment by wasteful green projects sucks. There is nothing about the CAGW movement that makes sense to anyone who has reviewed the data with a knowing eye.

Vuc’s chart showing the modification of temperatures over the past 15 years shows clearly that a steady or tapering-off trend was turned into an accelerating surface temperature trend. That is simply too ‘convenient’ to be believed. Colour me skeptical. Recent claims for the ‘hottest year evah’ are simply too small a margin to be believed. Colour me unconvinced. The surface temperature record is of such poor quality that nothing substantial can be based upon its numbers or trends. Why are so many people dicking around pretending that it can?

Quite right.

The idea that there must be a nefarious plot for these adjustments to always make the record scarier and scarier… it’s ridiculous.

All that’s needed is the kind of thing that proper science avoids with double-blind trials.

The Greens want to avoid such safety precautions. And that is where we must ask why?

It’s good enough to avoid MMR fiascos so why not to avoid AGW fiascos?

“The idea that there must be a nefarious plot for these adjustments to always make the record scarier and scarier… it’s ridiculous.”

Well when considering that anyone in that sector that disagrees (sometimes even a little) is smartly “tarred and feathered” sometimes to the point of leaving the field, just why would anyone NOT think otherwise, especially considering the huge losses to income that would occur if they said otherwise.

Dr Brown.

Thank you for this interesting post. You state:

“This should put to rest the notion that the strong El Nino of 1998 had any lasting affect on anything”

///////////////

I do not understand that statement. One needs to look at the satellite data for the period 1979 (inception) through to the run up to the 1998 Super El Nino. There is no trend, the temperatures are essentially flat between these periods.

http://woodfortrees.org/plot/rss/from:1979/to:1996

It will be noted that the trend is essentially a flat line at around the 0degC level.

From the end of the 1998 Super El Nino to date, once again we see essentially a no-trend flat line, but this time lying at about the +0.23degC level;

http://woodfortrees.org/plot/rss/from:1999/to:2014

It seems to me that the correct interpretation of the satellite data is that temperatures were flat as from launch in 1979 through to the run up to the Super El Nino of 1998, and have once again been flat as from the end of that Super El Nino to date.

However, to say that “the strong El Nino of 1998” has not had a lasting effect seems to fly in the face of the data, which show that there has been a step change in temperature of about 0.23degC coinciding with the 1998 El Nino.

Is that step change caused by the El Nino? Well, I don’t know, because a temperature data set cannot answer questions of cause (it can only show what has happened to temperature, not why there has been any change to temperature). But one thing is sure: there is zero first order correlation between rising levels of CO2 and the satellite data set, which shows a one-off and isolated warming coincident with the 1998 Super El Nino, which El Nino would appear to be an entirely natural event.

Your further views would be appreciated.

Agree, a step change took place during 1997/1998.

” that there has been a step change in the temperature of about 0.23degC coinciding with the 1998 El Nino. ”

I make it 0.26ºC, and that was the only warming in the whole of the RSS data.

The slight positive trend before has been cancelled by the slight negative trend since.

http://woodfortrees.org/plot/rss/from:1979/plot/rss/from:2001.2/trend/plot/rss/from:1979/to:1996/trend/plot/rss/from:2001.2/trend/offset:-.26
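
A minimal sketch of how a step of this kind could be estimated, assuming the step is simply the difference between the mean anomaly after the El Nino and the mean anomaly before it. The function name, the cut-off years and the synthetic series are all illustrative assumptions; the numbers below are NOT the RSS data.

```python
import numpy as np

def step_change(times, anomalies, before_end, after_start):
    """Mean anomaly from after_start onward minus mean anomaly before before_end."""
    times = np.asarray(times, dtype=float)
    anomalies = np.asarray(anomalies, dtype=float)
    before = anomalies[times < before_end]    # pre-El Nino segment
    after = anomalies[times >= after_start]   # post-El Nino segment
    return after.mean() - before.mean()

# Synthetic monthly series (made up for illustration, not real data):
# flat at 0.0 before 1998, flat at 0.25 afterwards.
years = np.arange(1979, 2015, 1 / 12)
synthetic = np.where(years < 1998, 0.0, 0.25)

shift = step_change(years, synthetic, before_end=1997.0, after_start=1999.0)
print(f"estimated step: {shift:.2f} C")
```

With a real series, the answer depends on where the two windows are cut, which is probably why one commenter gets 0.23 and another 0.26.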

I am responsible for that statement. I agree that you do have a point about the step change. My perspective was on the fact that so many people criticize us for cherry picking times just prior to the 1998 El Nino to get a pause. I was merely trying to show that the pause exists right after the El Nino just as it did before.

On RSS, the slope is 0 from January 1997, but it is also 0 from April of 2000 now.

And for GISS, it is the same, only we have the same positive slope starting before as well as after the 1998 El Nino.
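
The "slope from a given start date" idea can be sketched as an ordinary least-squares fit over everything from that date onward. This is only a sketch under that assumption; WoodForTrees may compute its trend lines differently, and the function name and test series here are hypothetical.

```python
import numpy as np

def trend_from(times, anomalies, start):
    """Least-squares slope (units per year) of the data with times >= start."""
    times = np.asarray(times, dtype=float)
    anomalies = np.asarray(anomalies, dtype=float)
    mask = times >= start
    t = times[mask] - times[mask].mean()      # centre the times for numerical stability
    slope, _intercept = np.polyfit(t, anomalies[mask], 1)
    return slope

# Illustrative series only (not RSS or GISS data): a perfectly flat line
# and a line rising at 0.012 per year.
years = np.arange(1997, 2015.75, 1 / 12)
flat = np.zeros_like(years)
rising = 0.012 * (years - 1997)

for start in (1997.0, 2000.25):
    print(start, trend_from(years, flat, start), trend_from(years, rising, start))
```

Comparing the slope for several start dates, before and after 1998, is the robustness check being described.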

A) You will note that my words are quoted in the article. The specific phrase used was one due to Werner. So in some sense, I can’t reply to your question as it does not necessarily reflect my own view.

B) I think what Werner was referring to is that people who wish to “deny” the pause that had an entire box devoted to it in AR5 (so that the IPCC lead authors of chapter 9 clearly thought that the pause/hiatus was real) often accuse people like Monckton of cherrypicking endpoints to show a flat interval. Werner was showing that the zero slope obtained in UAH/RSS is rather robust. He isn’t addressing the rise on the left side associated with the ENSO event that lifted temperatures to the current near-plateau.

C) As for temperatures before 2000. It is not all that clear that the 1997/1998 ENSO was “the” proximate cause of the comparatively strong warming from 1983 to 1998. For one thing, the warming stretched from 1983 to 1998, a fifteen year period, and one could at least imagine that it started five years earlier in 1978, which is a reasonable end point for the near-neutral “hiatus” that stretched from roughly 1945 through at least 1975 (a period across which CO2 rose by over 10%, from around 310 ppm to around 350 ppm). Obviously causes have to precede effects, so attributing all of the warming in this stretch to ENSO is hardly reasonable. Note:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1943/to:2013/plot/hadcrut4gl/from:1943/to:1983/trend/plot/hadcrut4gl/from:1975/to:2003/trend/plot/hadcrut4gl/from:2002/to:2015/trend

In this plot I deliberately chose overlapping ranges where the character of the data trend is apparently quite different. From 1943-ish through the mid 1980’s, the “linear trend” (whatever that word means, which IMO is “not much”) is clearly flat to slightly negative, depending (obviously) on just where you pick your endpoints. The point of this is qualitative — if you eyeball the data, your eyes will agree with the green line — there is little evidence of warming across this period, and if you couldn’t see the sudden rise in the next fifteen years — if this were the year 1983, for example — you would have no reason whatsoever to think that temperatures were about to spike up based on examination of the data itself. On the contrary, you’d expect them to continue flat, because the weather tomorrow is likely to be like the weather today — one of the most elementary forms of weather prediction and still highly accurate today (asserting the autocorrelation of weather out to around 3 days).

In the next range, if one were only presented with the data from 1975 through anywhere in the early 2000’s, you’d go “Holy S**T! We’re gonna roast!” HadCRUT4 rose by ballpark 0.6 C in no more than 30 years, a rate of at least 0.2 C/decade, 2 C per century. The blue line is still at most marginally “catastrophic” — it certainly caused zero catastrophes, worldwide, to have global temperatures rise by 0.6C across this time frame, and if the media weren’t hammering humanity to make sure they knew about it nobody would have even noticed it as this sort of change is indistinguishable from the “climate shift” associated with driving thirty or forty miles away from your house in any direction.
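
The rate arithmetic above can be checked in a couple of lines. The helper name is an assumption made for illustration; the inputs are the ballpark figures quoted in the paragraph.

```python
def warming_rate(rise_c, span_years):
    """Return (C per decade, C per century) for a rise of rise_c over span_years."""
    per_year = rise_c / span_years
    return per_year * 10, per_year * 100

# Ballpark figures from the text: 0.6 C over no more than 30 years.
per_decade, per_century = warming_rate(0.6, 30)
print(f"{per_decade:.2f} C/decade, {per_century:.1f} C/century")
```

A shorter span for the same rise gives a higher rate, hence "at least" 0.2 C/decade.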

Then the next range shows that even after all of the late stage adjustments there is a solid range of HadCRUT4 where the temperatures are pretty much flat. I’m not playing the “pick the endpoint” game, again, I’m just noting that the eye can see that temperatures haven’t changed much from somewhere between 1996 and 2003 to the present, in the specific sense that if you were given the data only from 1996 to the present and then asked to predict what the temperatures looked like in the 1980’s or 1950’s, you would never ever guess at the 0.6 rise per 25 years or the complete lack of noticeable change over the 40 years before that.

There are plenty of ways we can try to “explain” this data. For example, CO2 was trying to cause global warming during the green part but our industrial activity was producing smoggy pollution as fast as it was producing CO2 and the aerosols canceled the warming, until, during the 1970’s, we cleaned up pollution, which paradoxically unmasked the CO2 forcing so temperatures rose double time to catch up. Or (to be charitable to all hypotheses) there is a 67 or so year natural cycle of temperatures and during the green period we were in the down cycle, which canceled pretty much all of the CO2 driven warming, but in the blue period we were in the up cycle and the two heterodyned to produce the double-time rise. We can even be ecumenical and embrace both at the same time: come, brothers, into my church, if the farmer and the cowman can be friends, why not the denier and the believer in global catastrophe due to CO2?
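
The natural-cycle version of this story can be sketched as a toy model: a steady linear rise plus a sinusoid with a 67 year period. Everything except the 67 year period is an assumption chosen for illustration (the background rate, the amplitude, the phase); nothing here is fitted to HadCRUT4 or any other data set.

```python
import math

PERIOD = 67.0                          # years, the cycle length mentioned above
BACKGROUND = 0.01                      # C/year, hypothetical steady warming rate
AMPLITUDE = BACKGROUND * PERIOD / 4    # chosen so the down phase exactly cancels the rise

def toy_temperature(t):
    """Toy anomaly at t years after a cycle peak: linear rise plus cosine cycle."""
    return BACKGROUND * t + AMPLITUDE * math.cos(2 * math.pi * t / PERIOD)

# Down phase (peak to trough): the cycle falls as fast as the background rises.
down_change = toy_temperature(PERIOD / 2) - toy_temperature(0.0)
# Up phase (trough to next peak): the cycle and the background add together.
up_change = toy_temperature(PERIOD) - toy_temperature(PERIOD / 2)

print(f"down phase change: {down_change:+.3f} C")
print(f"up phase change:   {up_change:+.3f} C")
```

In this toy model the net change over the down half-cycle is zero, and the change over the up half-cycle is twice what the background rate alone would give, which is the "double-time rise" described above.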

Of the two, the natural cycle explanation fits a bit better with the purple “hiatus” trend — it is right out there at the 67 year period that I find best fits the data around a CO2-only hypothesis — but OTOH China is polluting like crazy right now so who knows, maybe we have heterodyning again of multiple causes?

The actual science suggests that aerosols have a much smaller effect than the CMIP5 models have used parametrically to cancel the CO2 warming up to the rapid warming trend, which is one reason they are overshooting now — since aerosols haven’t changed and they attributed all of the cancellation to aerosols, we should be warming rapidly. If there are omitted causes, or if aerosols are in fact much less of a factor, one has to adjust CO2 sensitivity down and this is exactly what is happening, in many papers. It seems likely enough to come down to roughly half of the estimates in AR1-AR5, somewhere between 1 and 2 C per doubling, but of course this depends on the future as in any event there is so very little data available, especially reliable, precise data, to try to prognosticate with. The science has yet to address the possibility of non-atmospheric-chemistry cycles associated with e.g. the multidecadal oscillations, dissipation efficiency, ocean-atmosphere-solar coupling, modulation of albedo, and more. Again, there are hypotheses but the data is far, far away from where we can properly support or reject them on the basis of successful predictions of futures that are unlikely according to the competing hypotheses.

rgb

It is my understanding that the variations of ENSO do not create or destroy heat. The heat “released” by ENSO might shift joules from the ocean to the atmosphere, or the reverse. Therefore, it seems prudent to say that the strong El Niño of 1998 didn’t have any lasting effect on anything.

Now let’s try that top fig with UAH vs GISS

http://www.moyhu.org.s3.amazonaws.com/2015/10/WFTuah.png

The troposphere measure is now rising faster than GISS. Of course, WFT still has UAH5.6. Ver 6 would look like RSS. But one should be slow to say that GISS is wrong on the basis of UAH changing its mind. And remember the advice of RSS’ Mears that the surface indices are more reliable than the satellite.

And then there is the issue that the surface and troposphere are very different places. If you do see a difference, it doesn’t have to mean that one is wrong. It’s just a different place.

The physics of the theory of CAGW demand that the troposphere rise considerably faster than the surface.

Instead the surface T is rising faster. Indeed, you include the old UAH and still this clearly shows no warming in the troposphere since 1997 and certainly since 2003. (your picked time line includes the one time bump up in the troposphere) From there the surface record rapidly rises and the divergence increases. Whatever is causing the surface warming, confirmation bias or not, it is not CO2 per the physics of how CO2 warming would manifest.

“The physics of the theory of CAGW demand that the troposphere rise considerably faster than the surface.”

Links? References?

There is theory that warming of any origin should produce a tropical hotspot over some time. But the global average?

“Links? References?”

http://pubs.giss.nasa.gov/docs/2005/2005_Santer_etal_1.pdf

“There is theory that warming of any origin should produce a tropical hotspot over some time.”

No, not “any”.

https://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch9s9-2-2.html

Your Santer link is about tropical warming. It doesn’t say what you’d expect for the global average. And it says that while models predict tropical amplification, there is not much observed. To explain this, they say:

“These results suggest either that different physical mechanisms control amplification processes on monthly and decadal time scales, and models fail to capture such behavior; or (more plausibly) that residual errors in several observational data sets used here affect their representation of long-term trends.”

Mears of RSS was one of the authors.

Also http://www.ipcc.ch/publications_and_data/ar4/wg1/en/faq-3-1.html.

“For global observations since the late 1950s, the most recent versions of all available data sets show that the troposphere has warmed at a slightly greater rate than the surface, while the stratosphere has cooled markedly since 1979. This is in accord with physical expectations and most model results, which demonstrate the role of increasing greenhouse gases in tropospheric warming and stratospheric cooling; ozone depletion also contributes substantially to stratospheric cooling.”

Nick,

I am aware of what it says. The comment you wanted reference for was “The physics of the theory of CAGW demand that the troposphere rise considerably faster than the surface” and this study and many others show that to be the case. The troposphere should be warming faster than the surface, and this is not controversial. If you want to change the question about global average then I suggest you do your own searching. It’s not hard to find.

WRT their 2005 conclusion, the divergence has only gotten considerably larger since then, especially after surface adjustments.

Nick Stokes

“Mears of RSS was one of the authors”

Carl Mears is Vice President / Senior Research Scientist at RSS

Here is a quote by Carl Mears:

“(The denialists really like to fit trends starting in 1997, so that the huge 1997-98 ENSO event is at the start of their time series, resulting in a linear fit with the smallest possible slope.)”

It is remarkable that he uses the term “denialists”, a term which can be regarded as nothing else than name calling.

http://www.remss.com/blog/recent-slowing-rise-global-temperatures

Wikipedia: “Name calling is abusive or insulting language referring to a person or group, a verbal abuse. This phenomenon is studied by a variety of academic disciplines from anthropology, to child psychology, to politics. It is also studied by rhetoricians, and a variety of other disciplines that study propaganda techniques and their causes and effects. The technique is most frequently employed within political discourse and school systems, in an attempt to negatively impact their opponent.”

Whoever is careless with the truth in small matters cannot be trusted with important matters.

– Albert Einstein

Carl Mears is involved in this current project:

“Improved and Extended Atmospheric Temperature Measurements from Microwave Sounders. The purpose of this project is to completely redo the current MSU and AMSU atmospheric data records using more advanced and consistent methods. This project is funded by the NASA Earth Sciences Directorate.”

As a Vice President, I imagine that Carl Mears is quite influential in that project.

My guess is that we will soon see dramatic changes in the RSS temperature data series.

I will be greatly surprised if these changes will show a tendency of more cooling.

Now that UAH has found their errors and basically agrees with RSS, I would be very surprised to see any real change in either direction. If there is, then they need an explanation, one that Dr. Spencer would agree with, of why the new UAH is wrong.