Guest Post by Bob Tisdale

In his post Schmidt and Sherwood on climate models, Andrew Montford of BishopHill commented on the new paper by Schmidt and Sherwood A practical philosophy of complex climate modelling (preprint). I haven’t yet studied the Schmidt and Sherwood paper in any detail, but in scanning it, a few things stood out. Those of you who have studied the paper will surely have additional comments.

DO CLIMATE MODELS SIMULATE GLOBAL SURFACE TEMPERATURES BETTER THAN A LINEAR TREND?

The abstract of Schmidt and Sherwood reads (my boldface):

We give an overview of the practice of developing and using complex climate models, as seen from experiences in a major climate modelling center and through participation in the Coupled Model Intercomparison Project (CMIP). We discuss the construction and calibration of models; their evaluation, especially through use of out-of-sample tests; and their exploitation in multi-model ensembles to identify biases and make predictions. We stress that adequacy or utility of climate models is best assessed via their skill against more naïve predictions. The framework we use for making inferences about reality using simulations is naturally Bayesian (in an informal sense), and has many points of contact with more familiar examples of scientific epistemology. While the use of complex simulations in science is a development that changes much in how science is done in practice, we argue that the concepts being applied fit very much into traditional practices of the scientific method, albeit those more often associated with laboratory work.

The boldfaced sentence caught my attention. A straight line based on a linear trend should be considered a more naïve method of prediction. A linear trend is a statistical model and it is definitely a whole lot simpler than all of those climate models used by the IPCC. So I thought it would be interesting to see if, when and by how much the CMIP5 climate models simulated global surface temperatures better than a simple straight line…a linear trend line based on global surface temperature data.

Do climate models simulate global surface temperatures better than a linear trend? Over the long-term, of course they do, because many of the models are tuned to reproduce global surface temperature anomalies. But the models do not always simulate surface temperatures better than a straight line, and currently, due to the slowdown in surface warming, the models perform no better than a trend line.

Figure 1 compares the modeled and observed annual December-to-November (Meteorological Annual Mean) global surface temperature anomalies. The data (off-green curve) are represented by the GISS Land-Ocean Temperature Index. The models (red curve) are represented by the multi-model ensemble mean of the models stored in the CMIP5 archive. The models are forced with historic forcings through 2005 (later for some models) and the worst-case scenario (RCP8.5) from then to 2014. Also shown is the linear trend (blue line) as determined from the data by EXCEL. The data and models are referenced to the full term (1881 to 2013) so not to skew the results.

Figure 1

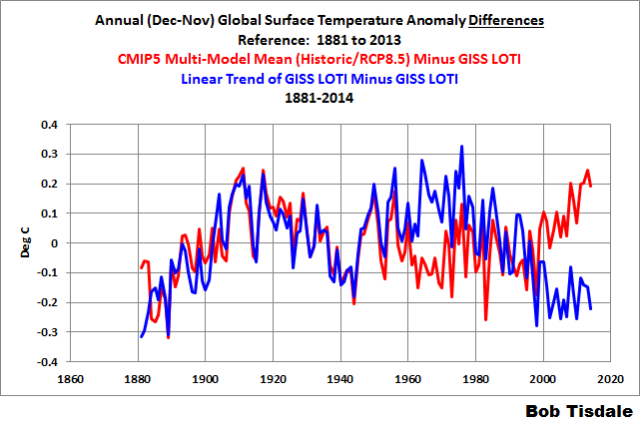

Over the past decade or so, the difference between the models and the data and the difference between the trend and the data appear to be of similar magnitude but of opposite signs. So let’s look at those differences, where the data are subtracted from both the model outputs and the values of the linear trend. See Figure 2. I’ve smoothed the differences with 5-year running-mean filters to remove much of the volatility associated with ENSO and volcanic eruptions.

Figure 2

Not surprisingly, in recent years, the difference between the models and the data and the difference between the trend line and the data are in fact of similar magnitudes. In other words, recently, a straight line (a linear trend) performs about as well at modeling global surface temperatures as the average of the multimillion dollar climate models used by the IPCC for their 5th Assessment Report. From about 1950 to the early 1980s, the models perform better than the straight line. Now notice the period between 1881 and 1950. A linear trend line, once again, performs about as well at simulating global surface temperatures as the average of the dozens of multimillion dollar climate models.

Obviously, the differences between the trend line and the data are caused by the multidecadal variability in the data. On the other hand, differences between the models and the data are caused by poor modeling of global surface temperatures.

For those interested, Figure 3 presents the results shown in Figure 2 but without the smoothing.

Figure 3

SCHMIDT AND SHERWOOD ON SWANSON (2013)

The other thing that caught my eye was the comment by Schmidt and Sherwood about the findings of Swanson (2013) “Emerging Selection Bias in Large-scale Climate Change Simulations.” The preprint version of the paper is here. In the Introduction, Swanson writes (my boldface):

Here we suggest the possibility that a selection bias based upon warming rate is emerging in the enterprise of large-scale climate change simulation. Instead of involving a choice of whether to keep or discard an observation based upon a prior expectation, we hypothesize that this selection bias involves the ‘survival’ of climate models from generation to generation, based upon their warming rate. One plausible explanation suggests this bias originates in the desirable goal to more accurately capture the most spectacular observed manifestation of recent warming, namely the ongoing Arctic amplification of warming and accompanying collapse in Arctic sea ice. However, fidelity to the observed Arctic warming is not equivalent to fidelity in capturing the overall pattern of climate warming. As a result, the current generation (CMIP5) model ensemble mean performs worse at capturing the observed latitudinal structure of warming than the earlier generation (CMIP3) model ensemble. This is despite a marked reduction in the inter-ensemble spread going from CMIP3 to CMIP5, which by itself indicates higher confidence in the consensus solution. In other words, CMIP5 simulations viewed in aggregate appear to provide a more precise, but less accurate picture of actual climate warming compared to CMIP3.

In other words, the current generation of climate models (CMIP5) agrees better among themselves than the prior generation (CMIP3), i.e., there is less of a spread between climate model outputs, because they are converging on the same results. Overall, however, the CMIP5 models perform worse than the CMIP3 models at simulating global temperatures. “[M]ore precise, but less accurate.” Swanson blamed this on the modelers trying to better simulate the warming in the Arctic.

Back to Schmidt and Sherwood: The last paragraph under the heading of Climate model development in Schmidt and Sherwood reads (my boldface):

Arctic sea ice trends provide an instructive example. The hindcast estimates of recent trends were much improved in CMIP5 compared to CMIP3 (Stroeve et al 2012). This is very likely because the observation/model mismatch in trends in CMIP3 (Stroeve et al 2007) lead developers to re-examine the physics and code related to Arctic sea ice to identify missing processes or numerical problems (for instance, as described in Schmidt et al (2014b)). An alternate suggestion that model groups specifically tuned for trends in Arctic sea ice at the expense of global mean temperatures (Swanson 2013) is not in accord with the practice of any of the modelling groups with which we are familiar, and would be unlikely to work as discussed above.

Note that Schmidt and Sherwood did not dispute the fact that the CMIP5 models performed worse than the earlier generation CMIP3 models at simulating global surface temperatures outside of the Arctic over recent decades. Schmidt and Sherwood simply commented on the practices of modeling groups. Regardless of the practices, in recent decades, the CMIP5 models perform better (but still bad) in the Arctic but worse outside the Arctic than the earlier generation models.

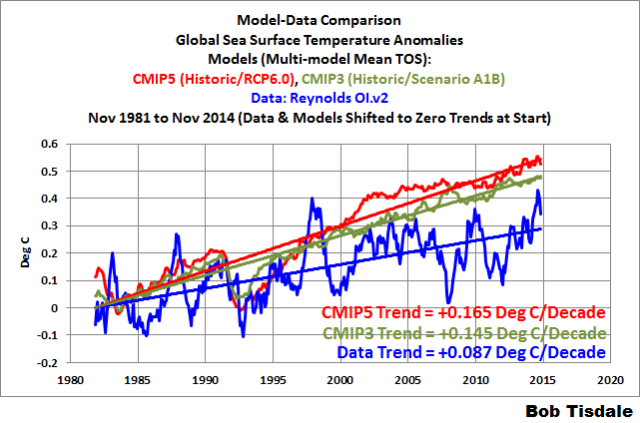

As a result, the CMIP3 models perform better at simulating global surface temperatures over the past 3+ decades than their newer generation counterparts. Refer to Figure 4. That fact stands out quite plainly in a satellite-era sea surface temperature model-data comparison.

Figure 4

CLOSING

Those are the things that caught my eye in the new Schmidt and Sherwood paper. What caught yours?

There is no reason wharever to think that global surface temperature, which is the result of a combination of effects from ackowledged non-linear dynamic systems, can be scientifically and accurately representedby a “straight line — a linear trend”.

There have been papers discussing this recently — how to model a “trend” of a non-linear system.

The ONLY reasons to attempt to use linear trend lines to represent global temperatures are all propagandistic . Up, Down, Flat – all propaganda.

I’m guessing you didn’t actually read the article. The only purpose for the linear model was to compare the CMIP5 ensemble against it.

That is, if they can’t beat even a linear model, then they are pretty pathetic.

Talking about model checking and comparison, is there any reason whatsoever the model code is not open sourced? I am a software professional now ( migrated from Physics ) and the open source approach has revolutionised software engineering. Indeed, open source projects are some of the highest quality code around. That’s because the code gets checked and tested by many mnay sets of eyes – peer review if you like.

So why don’t the modellers open source their code? It might actually help produce better models.

My point exactly — the linear model does not represent anything at all physical — but an idea that intentionally misrepresents the real world in order to shoot down another system that (intentionally or otherwise) misrepresents the real world.

Intentionally using misrepresentations to attempt to convince others of a viewpoint or opinion is the definition (mol) of propaganda. It certainly isn’t science.

Opinions on this will vary, mostly depending on one’s position in the Climate Wars.

Kip Hansen: Intentionally using misrepresentations to attempt to convince others of a viewpoint or opinion is the definition (mol) of propaganda. It certainly isn’t science.

It looks to me like you missed the point of both articles: Schmidt and Sherwood and Tisdale. Schmidt and Sherwood proposed that the test of modeling is how much the model results improve upon the naive expectations. Tisdale took them at their word and suggest a particular naive model for comparison, and using their standard showed that the CMIP5 models do not measure up to the naive model, and that the CMIP5 models do not measure up to the naive model as well as the CMIP3 models did. There was no “misrepresentation” of Schmidt and Sherwood, merely a particular instantiation of their standard, which was left kind of vague: “naive”. The only thing “more naive than a straight line” is a flat line, which even the most naive of readers already knows is false for this problem. “Less naive than a straight line” would be polynomials of higher order, straight lines with break points, splines, and Fourier analysis and harmonic regression.

Can you proffer a better example of “naive”, one that does not “misrepresent” Schmidt and Sherwood?

Reply to Marler ==> I was not and am not writing about either the Schmidt paper or the Tisdale response — just making a general observation.

Both S&S and Tisdale are attempting to do science with what I label, rather cavalierly, propaganda. One has to take several giant steps back to see what they are doing — using sciencey language and words to try to score points in the Climate Wars has a long long history.

There is no science gain– no new knowledge produced — no new understanding produced — by this “Schmidt and Sherwood proposed that the test of modeling is how much the model results improve upon the naive expectations” — in which the true scientific expectation is “we don’t really know how this system works”. In this case, there is NO TRUE NAIVE model to compare to — they are trying to make their model compare to a known incorrect model ==> temps == CO2.

Tisdale makes a new known incorrect model — a simple straight line — to prove them wrong.

Both are — bluntly — full of it — simply propaganda that is.

Kip Hansen: I was not and am not writing about either the Schmidt paper or the Tisdale response — just making a general observation.

ok

Both S&S and Tisdale are attempting to do science with what I label, rather cavalierly, propaganda.

Make up your mind.

Paul Mackey,

Many codes aren’t open-sourced, but quite a few are. This list is a bit dated (2009, CMIP3) but the major CMIP5 players are still well-represented and it gives a pretty good rundown of what can be freely downloaded and compiled and what can’t: http://www.easterbrook.ca/steve/2009/06/getting-the-source-code-for-climate-models/

RealClimate has a short list of GCMs for which source is freely available: http://www.realclimate.org/index.php/data-sources/#sthash.y0npnezM.dpuf

Model codes (GCMs)

Downloadable codes for some of the GCMs.

GISS ModelE (AR4 version, current snapshot)

NCAR CCSM(Version 3.0, CCM3 (older vintage))

EdGCM Windows based version of an older GISS model.

Uni. Hamburg (SAM, PUMA and PLASIM)

NEMO Ocean Model

GFDL Models

MIT GCM

Paul Mackey, PS: EdGCM now requires a paid license which probably means the code is no longer open. This also somewhat dates RealClimate’s list. Google is your friend, however.

The log of the atmospheric CO2 concentrations is going up steadily in a linear fashion. Fitting a straight line is about seeing if the overall trend of temperatures is responding to this, assuming that other factors are only short term.

Fitting a sin t+t^2 function to the data is naive, I suppose.

http://postimg.org/image/jlsuu32hv/

The rate of change of temperature from HadCRUT4 shows how good the fit is. I have no physical explanation for why the fit is so good, just saying that any modelling should be equally as good for it to be taken seriously.

http://postimg.org/image/b0b87m737/

65 years is the apparent base period of the AMO in contemporary times. That term drives the periodicity of the fitted curve. post hoc selection. But your linear term in the equation is simply the post hoc average warming rate coming out of LIA from the mid-19th Century. There is no reason to believe that term is linear over longer terms (millenia) and it likely isn’t. That implicit linearity is such that it will drive a huge divergence with reality if used as a predictive tool. The climate has millenial scale cycles that are not predictably periodic.

“But your linear term in the equation is simply the post hoc average warming rate coming out of LIA from the mid-19th Century.”

+ getting rid of that 40s blip.

I think it’s fair to say, though, that if you have a highschooler with a ruler and graph paper make a forecast, the skill of that forecast ought to be a starting point for evaluation of the skill of a climate model and supercomputer modeling the same data. The 20th century trend is up. I could do “up” reasonably accurately with ruler and graph paper. The model ought to do better and it better be statistically significant or it is unnecessarily complex. A computer model that just says more of the same and maybe a bit more is ultimately pretty uninteresting because there’s not much to distinguish it from the boy and his ruler.

…no reason whatever…

Obvious comment – Footnote 1:

So,

A) How often is the purpose (the “certain task”) described in the papers that use the climate models?

B) Are all climate models made with the same purpose? If so why do they not all agree? If not, how can they be “averaged”.

C) In policy making and in the Summary for Policy Makers is the intended “certain task” of the models described? And is that “certain task” to assist in policy making.

I think the aim of the paper is admirable. Not so sure of the achievement though. It neglects to even consider the possibility that the models may be of negligible usefulness at all.

“Are all climate models made with the same purpose? If so why do they not all agree? If not, how can they be “averaged”.”

All climate models are made with the purpose of modelling the atmosphere/ocean for long enough that climate can be determined. As is well known, weather is chaotic, and as determined from initial conditions is effectively random. That’s why models don’t agree. But they have a common climate signal determined by forcings etc. The way to get an underlying signal obscured by random noise is to average a large number of instances.

Average of random noise=true signal?

Is this what climate scientists do?

Is the noise random? Has that been firmly established? Isn’t it more likely the noise is 1/f?

Given that paleo data appears to be scale invariate – a fractal distribution – isn’t it most likely that cliamte is not bound by the Central Limit Theorem, with all the statistical implications that result?

No, average of instances of signal with random noise = signal with less noise (cancellation).

mpainter: Average of random noise=true signal?

No. We can assume that the ‘true signal’ itself is not random, but just buried in random noise. Using the Central Limit Theorem, we know that the arithmetic mean of a sufficiently large number of random noise variables is approximately normal. Further, the expected value (mean) of additive white Gaussian noise is zero.

This has long been known as a simple but effective means of noise reduction. If multiple samples are taken of the same quantity with a different (and uncorrelated) random noise added to each sample, then averaging N samples reduces the noise variance (or noise power) by a factor of 1/N”

What climate scientists do:

Treat the product of the GCM’s as actual observations and vaporize about “noise” and “signal”.

When one pretends that invented data is real, you twist yourselves into a pretzel trying to explain away actual data, as in the “pause” (57 explanations so far for the “pause” so far)

Nick: I can get the average of the last 20 years of lottery numbers in the UK. They are meant to be random (RGBatDuke would have something to say about that) so, if I had the average, what use would it be? Would I become a millionaire from it? I mean, Mann and a few others of his ilk have from their random averaging….

That doesn’t make any sense. You could initiate all climate models to the same initial conditions and they should not deviate because of chaotic weather.

Nick Stokes December 29, 2014 at 2:17 pm

“That’s why models don’t agree.”

Nick, you know better than that (particularly in the absolute).

Er, no.

That only works for a stationary system, chaotic systems average is no more predictable that the raw data. To accept that you can average weather to climate one must accept that weather data is stationary, that is, it even has a stable average… and this has NOT been shown.

The average of weather data varies markedly depending on what subset you select.

“Nick: I can get the average of the last 20 years of lottery numbers in the UK. “

Lottery managers make very sure that there is no signal there, else you could make money. But averaging to get a common feature is ancient, common, and not invented by climate scientists.

Swedes taller than Greeks? Maybe. But no use checking one Swede and one Greek. Average 1000 heights of each and if there is a tallness signal there, you may be able to quantify it. Nothing new here.

“You could initiate all climate models to the same initial conditions and they should not deviate because of chaotic weather”

They certainly will. That is the defining characteristic of chaos. The tiniest difference grows until the weather diverges.

“Is the noise random?”

Probably not. The essential requirement is that averaging produces cancellation of noise relative to signal. Unsynchronized cycles will cancel too.

HAS

“Nick, you know better than that (particularly in the absolute).”

Well, OK, the indeterminacy of weather is one big reason why models don’t agree, on a decadal scale, with each other or with Earth.

average of instances of signal with random noise = signal with less noise

==================

not true. that only works to cancel random (white) noise. pink cannot be cancelled by averaging, because there is always a frequency with a longer time period than your sample.

The way to get an underlying signal obscured by random noise is to average a large number of instances.

============

that only works for noise of the form 1/(f^n), where n=0.

no matter how many samples you average, 1/f noise is not expected to converge on the true average, because you cannot eliminate the noise component with a larger period than your sample, and that is on average the largest portion of the noise. All you are doing is averaging out the small high frequency error wiggles and leaving the much larger undulating error wiggles untouched.

“Is the noise random?” Probably not.

========

agreed

The difference Nick is that comparing heights is comparing real data. Comparing modeled results is comparing imaginary data.

Doing something to imaginary data doesn’t make it more real.

“not true. that only works to cancel random (white) noise. pink cannot be cancelled by averaging, because there is always a frequency”

No, that’s overstated. White and pink noise both have all frequencies present. And averaging a finite number of samples can’t remove all noise at any frequency. But it does enhance the signal relative to the noise, of whatever color.

Here is the “underlying signal obscured by random noise”.

10 years later the ‘science’ moves on. New model discoveries are made and mankind is grateful.

Now you just wait until we get a trend warmer winters – “THE MODELS PREDICTED IT!” Of course they did darling. It’s called a rightwrong precipitation model.

Good night.

“As is well known, weather is chaotic, and as determined from initial conditions is effectively random. That’s

why models don’t agree. But they have a common climate signal determined by forcings etc. The way to get an underlying signal obscured by random noise is to average a large number of instances.”

All his usual spin and BS. See:

http://www.ig.utexas.edu/research/projects/climate/GCM_uncertainty/wpe6.gif

A substantial number of models go off and do their own thing, which has nothing to do with weather noise. There is no point debating the nuances of Stoke’s statements when they are not even true.

Nick Stokes December 29, 2014 at 4:46 pm

” … the indeterminacy of weather is one big reason why models don’t agree, on a decadal scale, with each other or with Earth.”

Internal variability (the stuff that averages out) is a minor reason why models don’t agree, on a decadal scale, with each other or with Earth. Structural uncertainty probably swamps all others when comparing with earth (but models share the same structure and so artificially hide this) and differing parameter estimations probably exceed the impact on internal variability.

You might get a common signal out of different model runs, but what it is telling you about future climate and its relationship to forcings etc is moot.

Unless of course you define future climates to be what the models predicted once they have been averaged out.

Using the Central Limit Theorem, we know that the arithmetic mean of a sufficiently large number of random noise variables is approximately normal.

====================

that only applies to certain classes of problems. for 1/f noise and a great many dynamic systems in nature this does not hold true.

the problem with climate models is that they have made a large number of simplifying assumptions without establishing that the assumptions are correct.

one of the major flaws remains the assumption that averaging reduces noise and what is left over is signal. but this is complete nonsense.

you cannot remove 1/f noise with a period of 1000 or 10,000 or 100,000 years by averaging 150 years worth of data. Yet the amplitude is much greater than 10 or 100 year noise. So you are simply fooling yourself that you are removing the noise.

Will Nitschke December 29, 2014 at 6:15 pm

To really push the point home perhaps show a graph of the increase in the modeled absolute temperature from a common starting absolute temperature.

how [do] we know that the 60 year quasi cycle seen in ocean currents isn’t simply 1/f noise with a period of about 60 years? Because the frequency is approaching zero 1/f means that the magnitude of the noise will be very big in comparison to higher frequency noise.

How can you determine if the 60 year ocean cycles are indeed cycles or simply long period, high magnitude noise? So, if one was to use a 60 year running average for example to remove the quasi cycle, would you be removing noise or signal? Thus the danger of averaging, and why you don’t perform statistics on averages. You use the underlying data to avoid generating spurious correlations and trends.

“A substantial number of models go off and do their own thing”

Again, I wish people would give their sources. That plot is from Fig 9.6, Cubasch et al, 2001. Things have changed. But yes, as I said above in response to HAS, weather noise is not the only source of deviation. But on a decadal scale, it is a big one.

Nick Stokes wrote: “As is well known, weather is chaotic, and as determined from initial conditions is effectively random.” That is true. But then he wrote: “That’s why models don’t agree”, which is false.

In doing a single long model run to determine the climate sensitivity of the model, most of the random noise should cancel. The resulting sensitivity is then a property of the physics assumed in the model. Look at the graph Nitschke posted; the trends are much larger than the noise, so slightly different noise won’t change the trends. If you run a model with one set of initial conditions, then do a second run with randomly varied initial conditions, the trend will not be very different.

The various models give very different sensitivities due to different assumed physics, mostly to do with how they parametrize clouds. You can not average out errors in physics. Averaging a wrong result with a right result gives you a wrong result.

Mike M.

“But then he wrote: “That’s why models don’t agree”, which is false.”

It was incomplete, as I’ve agreed. I’ll repeat my response to HAS above

“Well, OK, the indeterminacy of weather is one big reason why models don’t agree, on a decadal scale, with each other or with Earth.”

“Look at the graph Nitschke posted”

As I said above, that is from Cubasch et al, 2001. Those are fairly early days for AOGCM’s.

“You can not average out errors in physics.”

Averaging reduces noise, and gives a more accurate version of signal. If models are returning different signals, you don’t get a further improvement in the same way. The ambiguity of sensitivity etc remains. That is what people have been working on since 2001, and will continue to do.

ferdberple December 29, 2014 at 6:34 pm

You are 100% correct as regards time averaging. I think Nick is thinking more of ensemble averaging. What he is claiming isn’t generally true, though, except when the system is linear and dissipative.

Stokes is still full of it. Standard excuse, yes the models of 2001 were wrong, but the models used in 2014 are now fine. Since it’s trivial to disprove this excuse, why make it? In the hopes nobody will check that you’re lying, of course.

You can readily identify a consistent 1.5C spread between models, even the current generation, like shown here –

http://wattsupwiththat.files.wordpress.com/2013/10/cmip5-90-models-global-tsfc-vs-obs1.jpg?w=640&h=576

Or you can consult figure 9.8 in the IPCC, Model Evaluation Chapter 9 AR5. (Except this diagram does its best to try make the information difficult to interpret using the old fashioned spaghetti vomit technique.)

Will Nitschke December 29, 2014 at 8:53 pm

” … you can consult figure 9.8 in the IPCC, Model Evaluation Chapter 9 AR5.”

As I noted above, Fig 9.08 also shows that the models have a range of absolute temperatures of 2.8 C for their 1961 – 1990 mean temp.

Which climate were they converging to Nick?

And just what is the freezing point of water on that planet?

It’s called structural uncertainty etc, not weather variability – and these runs are over the period the models were tuned to and have the same forcings as our planet.

What happens when we move outside their comfort zone?

Will Nitschke,

If you’re into accusations of lying, perhaps you’d like to explain why you showed a plot from 2001 with no indication of source?

But

“You can readily identify a consistent 1.5C spread between models, even the current generation”

In what way consistent? Your CMIP5 plot shows the much greater role now of weather noise vs secular differences, even after 5-yr smoothing. No-one has claimed that models do not still have systematic differences. What I have said is that model runs have differences due to weather noise, and averaging will reduce this.

Nick Stokes December 29, 2014 at 10:19 pm

“No-one has claimed that models do not still have systematic differences. What I have said is that model runs have differences due to weather noise, and averaging will reduce this.”

Nick Stokes December 29, 2014 at 2:17 pm

“As is well known, weather is chaotic, and as determined from initial conditions is effectively random. That’s why models don’t agree. ”

If you are going to agree you over-egged the pudding at 2.17 pm, why not acknowledge this rather than re-write history at 10.19 pm.

The reason I’m being picky about this is that Schmidt and Sherwood are applying lipstick to the pig rather than helping to get things back onto a more scientific basis, and you are singing in the chorus using the same type of song sheet.

Anyone who has looked at climate model runs can see *obvious* structural differences. Many models run consistently hot. A few bump along the bottom of the spread. The rest are all over the map.

And I accuse Stokes of lying because he lies through his teeth. Even a cursory look at the model runs of 2001 (forecast up to 2030) show a spread of 0.5C. But the CMIP5 model runs over the same range show a spread of around 1C. The CMIP5 models are LESS structurally consistent with each other, although that is likely an artifact of comparing 90 models in one chart to 9 in the other.

There are really only two possibilities here. Stokes is a professional BS artist or he is ignorant. I don’t think the guy is ignorant.

Chaotic – in other words recursive and the result is highly sensitive to very small changes to the starting conditions. So if you run it enough times with very slightly different inputs you will eventually get a result you like. Or another way to put it is that the results are not computable, as you never know the starting conditions in sufficient detail.

Lorenz, who discovered this branch of mathematics, was a meterologist, and came across this whilst trying to predict the weather.

So I don’t agree with Nick Stokes when he says they have a common clmate signal. Not if the system is truly chaotic. Unless of course, that common signal has been assumed and programmed in. So let them open source the model code and we can all see for ourselves!

Nick: the unexplained variance in climate data isn’t necessarily “random” its merely unexplained. Theres a big difference, and to assume that the unexplained variance is white noise is perhaps unwarranted. Just because we can’t attribute the variance to a known cause does not make it random.

Mods: this is a repost because my first attempt vanished.

Nick Stokes

You assert

NO! Absolutely not! And you know you have presented falsehood!

The chaotic nature of weather is NOT why the models don’t agree.

The models don’t agree because each model is of a different and unique climate system.

And you know that because I have repeatedly explained it to you.

For the benefit of those who do not know, I again explain the matter.

None of the models – not one of them – could match the change in mean global temperature over the past century if it did not utilise a unique value of assumed cooling from aerosols. So, inputting actual values of the cooling effect (such as the determination by Penner et al.

http://www.pnas.org/content/early/2011/07/25/1018526108.full.pdf?with-ds=yes )

would make every climate model provide a mismatch of the global warming it hindcasts and the observed global warming for the twentieth century.

This mismatch would occur because all the global climate models and energy balance models are known to provide indications which are based on

1.

the assumed degree of forcings resulting from human activity that produce warming

and

2.

the assumed degree of anthropogenic aerosol cooling input to each model as a ‘fiddle factor’ to obtain agreement between past average global temperature and the model’s indications of average global temperature.

More than a decade ago I published a peer-reviewed paper that showed the UK’s Hadley Centre general circulation model (GCM) could not model climate and only obtained agreement between past average global temperature and the model’s indications of average global temperature by forcing the agreement with an input of assumed anthropogenic aerosol cooling.

The input of assumed anthropogenic aerosol cooling is needed because the model ‘ran hot’; i.e. it showed an amount and a rate of global warming which was greater than was observed over the twentieth century. This failure of the model was compensated by the input of assumed anthropogenic aerosol cooling.

And my paper demonstrated that the assumption of aerosol effects being responsible for the model’s failure was incorrect.

(ref. Courtney RS An assessment of validation experiments conducted on computer models of global climate using the general circulation model of the UK’s Hadley Centre Energy & Environment, Volume 10, Number 5, pp. 491-502, September 1999).

More recently, in 2007, Kiehle published a paper that assessed 9 GCMs and two energy balance models.

(ref. Kiehl JT,Twentieth century climate model response and climate sensitivity. GRL vol.. 34, L22710, doi:10.1029/2007GL031383, 2007).

Kiehl found the same as my paper except that each model he assessed used a different aerosol ‘fix’ from every other model. This is because they all ‘run hot’ but they each ‘run hot’ to a different degree.

He says in his paper:

And, importantly, Kiehl’s paper says:

And the “magnitude of applied anthropogenic total forcing” is fixed in each model by the input value of aerosol forcing.

Kiehl’s Figure 2 can be seen here.

Please note that the Figure is for 9 GCMs and 2 energy balance models, and its title is:

It shows that

(a) each model uses a different value for “Total anthropogenic forcing” that is in the range 0.80 W/m^2 to 2.02 W/m^2

but

(b) each model is forced to agree with the rate of past warming by using a different value for “Aerosol forcing” that is in the range -1.42 W/m^2 to -0.60 W/m^2.

In other words the models use values of “Total anthropogenic forcing” that differ by a factor of more than 2.5 and they are ‘adjusted’ by using values of assumed “Aerosol forcing” that differ by a factor of 2.4.

So, each climate model emulates a different climate system. Hence, at most only one of them emulates the climate system of the real Earth because there is only one Earth. And the fact that they each ‘run hot’ unless fiddled by use of a completely arbitrary ‘aerosol cooling’ strongly suggests that none of them emulates the climate system of the real Earth.

And it cannot be right to average the model outputs because average wrong is wrong.

Richard

Mods:

I have twice tried to make a post in reply to N Stokes but both attempts have vanished.

Please see if the attempts are in the ‘bin’ and if so then retrieve one of them and post it. Alternatively, please let me know they are lost so I can make a third attempt.

Richard

Nick writes “As is well known, weather is chaotic, and as determined from initial conditions is effectively random. That’s why models disagree.”

It’s common belief among AGW enthusiasts that the earth cannot maintain different energy levels resulting in global temperature changes far beyond decadal scales. That’s the basis for the “its CO2 wot done it cause we can’t think of anything else” line of reasoning.

If you’re right then the whole “it must be because of the CO2” argument falls apart.

Paul Mackey

“So I don’t agree with Nick Stokes when he says they have a common climate signal. Not if the system is truly chaotic. Unless of course, that common signal has been assumed and programmed in. So let them open source the model code and we can all see for ourselves!”

Weather is truly chaotic. And we do have a climate. Chicago is different to Miami.

There is open source code, and documented too. Eg

GISS

CCSM 4.0

davideisenstadt

Nick: the unexplained variance in climate data isn’t necessarily “random”

I agree. As I said above, the essential feature is that it is subject to cancellation on averaging. Maybe not complete, but enough for climate information to emerge.

Reply to Stokes ==> Please reference the peer-reviewed paper that clearly demonstrates that the climate signal found in common amongst models is in actuality a climate signal and not simply a result of common parameterization.

The IPCC is of the opinion that CLIMATE is a bounded non-linear dynamic system — not just weather. Thus climate is also highly sensitive to initial conditions and sensitive to the tweaking of parameters in the models. Not so?

Johanus: Using the Central Limit Theorem, we know that the arithmetic mean of a sufficiently large number of random noise variables is approximately normal.

that does assume that they are an iid sequence from a population with a finite variance (actually, it assumes slightly more than that, but this is enough for now), neither of which is known for the climate simulations.

For practical purposes, the further assumption is that the mean to which the sample means converge is close to the actual “signal” or mean of the population that you hope you are sampling from — something else not known or demonstrated for the climate simulations. The divergence of the sample mean from the subsequent data suggests indeed that the mean of the population from which the simulations are a sample is different from the true climate trajectory. That is the point that the Schmidt and Sherwood article is attempting to discredit: that the divergence shows the model underlying all simulations is wrong.

Nick Stokes: But they have a common climate signal determined by forcings etc. The way to get an underlying signal obscured by random noise is to average a large number of instances.

As I wrote in response to Johanus, that is only true if the function to which the mean of the samples is converging is in fact the true signal. In the case of the climate simulations, the (growing) disparity between the actual climate and the simulation mean indicates that the “common climate signal” assumed by all the models is not the true climate signal.

Put differently, the means of a large biased sample converge almost surely to a function that is not the signal. A question much addressed by critics of the modeling, ignored by Schmidt and Sherwood, is whether the disparity displayed so far (between climate and model means) is enough evidence that the models are useless for planning purposes.

Nick Stokes: As I said above, the essential feature is that it is subject to cancellation on averaging. Maybe not complete, but enough for climate information to emerge.

I agree. With respect to the climate models, the “cancellation” to date shows that the mean does not in fact lead to climate “information”. The “essential feature” is not present in climate models.

Since Climate is a non-linear system, there is no underlying signal in the noise, what looks like noise is actually the signal, how would you average this?

The ideas of “signal” and “noise” are appropriate in relation to the problem of communication but inappropriate in relation to the problem of control. In the latter case the signal and noise come from the future thus having to travel at superluminal speed. This, however, is impossible under relativity theory.

Well point B has led to some interesting challenges and discussion. Me happy.

But what about Points A and B?

Especially how Point B can be a point at all if peer review is functioning correctly?

I should like to make a request that might appear off topic. Both at University and in industry I was taught to never rely solely on the color of the lines on a graph to differentiate the data. Two reasons were given – they don’t reproduce well in black and white (probably no longer an issue), and there are color-blind people in the community. I was taught to use color and different line types / line weights. Possibly those submitting to WUWT might consider this in future submitals.

Please do not consider this a criticism of this post, or any others. Just a suggestion.

Thanks, and may we all have a happy 2015, with another totally ineffective CoP in Paris next December.

Jim

I second Jim’s suggestion. Different line types is an excellent policy to follow.

My first experience of Excel graphs was as part of a presentation to the Finance Director. We had utilised all the colours for the charts. We went through the presentation only to discover at the first chart that he was colour blind. A lesson that was taken on board for all future presentations.

Your advice is excellent for all bloggers.

A good discussion of this subject here: http://www.climate-lab-book.ac.uk/2014/end-of-the-rainbow/

@retired Engineer – very good point well made.

Thank you Jim.

I am red/green color blind and have an extremely hard time differentiating fine gradient lines of those colors.

PS – I don’t sort socks either…

What we really need, in order to say the models are no better than a line, are hindcast comparisons. For example, fit the line to data from 1880 to 1920, and then see how well it predicts 1921-1940. Repeat for other segments of the record. The analysis shown here is just fun with graphs.

Barry

You assert

That is not true if the purpose of the models is to forecast the future.

Ability to hindcast is NOT an indicator of ability to forecast

The reason for this is that

(a) there are an infinite number of ways to adjust a model to agree with the single known past

but

(c) there is only one way the unknown future will evolve from among the infinite number of possible futures.

Richard

Hi

I commented a couple of times over at Bishop Hill on this, particularly contrasting the the breezy confidence exuded by Gavin Schmidt and Steven Sherwood that all is well with climate models with Leonard Smith’s of CATS at LSE view that “.. climate models were not up to the standards in relation to other computational fluid dynamics groups in terms of validation, verification, uncertainty quantification, and transparency …”, and that “the demonstrated value of weather/climate forecasts as a function of lead time:

– Medium-range: Significant, well into week two +

– Seasonal: Yes, in some months and regions

– Decadal: Not much (global and regional)”

[I’m quoting a summary of a Smith workshop presentation http://www.eas.gatech.edu/sites/default/files/SmithTalkGT.pdf from a Judith Curry post on the workshop in question http://judithcurry.com/2014/02/18/uk-us-workshop-part-iv-limits-of-climate-models-for-adaptation-decision-making/ ]

Smith’s point is that climate modelers need to build greater trust (as other CFD modellers have done) by not concealing the problems. Schmidt and Sherwood are very much in the latter camp i.e. part of the problem rather than part of the solution.

One of the sub plots here is that the current preoccupation with GCMs might be counterproductive on a decadal scale, and we should be investigating other models that would better meet the policy maker users’ needs. The argument is that what GCMs model is not what we need to know, and what we need to know GCMs can’t deliver.

On that score Mr Tisdale is there some accessible literature on using the larger scale atmospheric oscillations as the basis for modelling on a decadal scale?

I was wondering if anybody ever tried training a neural network to predict the global surface temperature??? The program could be fed all sorts of data, including the CO2 and other concentrations, the solar flux, magnetic field strength, forest cover, amount of dirty coal being burned, volcanoes, you name it. I bet the neural network would do a better job.

The advantage of a well-trained neural network is that we don’t really understand how it works – we get a huge matrix of coefficients, and that’s all. A great fit for climatology.

Reply to Fernando ==> CG makes a valid point — since we do not understand (on a deep level) how the climate system as a whole works and since we do know that it is some type of a coupled, bounded, multi-faceted , non-linear dynamical system(s), a neural network might be good a describing the system, but would not be capable of telling us how it works nor predicting its outcomes.

They need to separate policy from science, politicians from scientists, oracles from forecasters. They routinely and intentionally leave the scientific domain and venture into universal (i.e. space) and even extra-universal (i.e. time and space) domains. Likely for the same reasons that science was exploited and corrupted hundreds and thousands of years earlier.

The system is incompletely characterized and unwieldy, or chaotic. Statistical methods are only valid within an indefinite period of time and space. The scientific method is a framework designed to reject philosophical inference and necessarily limit scientific inquiry through deduction.

That’s one step forward for man. Two steps backward for mankind.

The climate models are built without regard to the natural 60 and more importantly 1000 year periodicities so obvious in the temperature record. The modelers approach is simply a scientific disaster and lacks even average commonsense .It is exactly like taking the temperature trend from say Feb – July and projecting it ahead linearly for 20 years or so. They back tune their models for less than 100 years when the relevant time scale is millennial. The whole exercise is a joke.

The entire UNFCCC -IPCC circus is a total farce- based, as it is, on the CAGW scenarios of the IPCC models which do not have even heuristic value. The earth is entering a cooling trend which will possibly last for 600 years or so.

For estimates of the timing and extent of the coming cooling based on the natural 60 and 1000 year periodicities in the temperature data and using the 10Be and neutron monitor data as the most useful proxy for solar “activity” check the series of posts at

http://climatesense-norpag.blogspot.com

The post at

http://climatesense-norpag.blogspot.com/2014/07/climate-forecasting-methods-and-cooling.html

is a good place to start. One of the first things impressed upon me in tutorials as an undergraduate in Geology at Oxford was the importance of considering multiple working hypotheses when dealing with scientific problems. With regard to climate this would be a proper use of the precautionary principle .-

The worst scientific error of the alarmist climate establishment is their unshakeable faith in their meaningless model outputs and their refusal to estimate the possible impacts of a cooling rather than a warming world and then consider what strategies might best be used in adapting to the eventuality that cooling actually develops.

Do these long-term periodicities exist? Aren’t we better off by assuming an auto-correlated random number sequence? Do we really know enough – in 2014?

Curious George – Yes they clearly exist see

http://climatesense-norpag.blogspot.com/2014/07/climate-forecasting-methods-and-cooling.html

Dr. Norman Page wrote: “the importance of considering multiple working hypotheses when dealing with scientific problems”.

This is indeed important and does seem to be a problem with the climate modellers. They downplay possibilities like natural oscillations, random walks in a potential well, and climate being primarily an ocean phenomenon rather than an atmospheric one. Then they all move in a herd.

That seems to be an increasing problem in science in general, not just climate science. With an ultra-competitive environment for funding and increasing reliance on all sorts of performance metrics, woe be to researcher who does not move with the herd. He will be trampled, or left behind to be picked off by the wolves.

Mike M.,

No. Virtually everything you know about oscillating internal climate variabilities comes from the fact that those things are very much not ignored, and are rather studied in extensive detail.

An experimental protocol:

1) Select a penny at random from your spare change bin.

2) Flip it 10,000 times.

3) For each outcome of heads record a 1 in your lab notebook. -1 for each outcome tails.

4) Sum the results.

5) Repeat 2-4 100 times.

Perform whatever statistical test of your choice on the results. If you find that you get a statistically significant non-zero result across your 100 trials, it’s a safe bet that your penny is biased, and no one sane will call you crazy for ingoring random walks.

Oceans are a dominant factor, but they do not create energy, nor destroy it; they only soak it up and dole it back out and have a far greater capacity for doing so than anything else in the climate system. However, energy still has to come in through the atmosphere and get back out through it. Ignore radiative molecules in the atmosphere at the peril of doing very schlocky science.

Brandon Gates wrote: “climate variabilities comes from the fact that those things are very much not ignored, and are rather studied in extensive detail”. Yes but so far as I am aware the models do not reproduce anything with more than a vague resemblance of the decadal oceanic oscillations. If you can cite references to the contrary, I would be very interested in them.

“An experimental protocol:

1) Select a penny at random from your spare change bin.

2) Flip it 10,000 times.

3) For each outcome of heads record a 1 in your lab notebook. -1 for each outcome tails.

4) Sum the results.”

About two-thirds of the time, this will produce a sum between -100 and +100, 1/6 of the time lower, 1/6 of the time higher. The root-mean-square month-to-month variation in global temperature is about 0.1 K.There are about 1800 months in the instrumental T record. So repeat your experiment flipping the penny 1800 times and summing values of -0.1 and +0.1. About 1/6 of the time the sum will be less than -4.5 and 1/6 of the time greater than 4.5. That is much larger than the observed T range. Including negative feedback will narrow the range, which is why I said “random walks in a potential well”.

” Ignore radiative molecules in the atmosphere at the peril of doing very schlocky science.” Absolutely true. Increasing atmospheric CO2 will warm the climate. the question is how much. Treating the ocean as little more than a source of water vapor and a sink for heat, as many models do, also puts one at peril of doing poor science.

Mike M.,

Well you’re in luck, here’s a 2014 open access paper that is CMIP5-specific: http://iopscience.iop.org/1748-9326/9/3/034016/article

Abstract

Abstract

We analyse a large number of multi-century pre-industrial control simulations from the fifth phase of the Coupled Model Intercomparison Project (CMIP5) to investigate relationships between: net top-of-atmosphere radiation (TOA), globally averaged surface temperature (GST), and globally integrated ocean heat content (OHC) on decadal timescales. Consistent with previous studies, we find that large trends (~0.3 K dec−1) in GST can arise from internal climate variability and that these trends are generally an unreliable indicator of TOA over the same period. In contrast, trends in total OHC explain 95% or more of the variance in TOA for two-thirds of the models analysed; emphasizing the oceans’ role as Earth’s primary energy store. Correlation of trends in total system energy (TE ≡ time integrated TOA) against trends in OHC suggests that for most models the ocean becomes the dominant term in the planetary energy budget on a timescale of about 12 months. In the context of the recent pause in global surface temperature rise, we investigate the potential importance of internal climate variability in both TOA and ocean heat rearrangement. The model simulations suggest that both factors can account for O (0.1 W m−2) on decadal timescales and may play an important role in the recently observed trends in GST and 0–700 m (and 0–1800 m) ocean heat uptake.

That last sentence caught my eye in particular because my own dirt simple regression analyses of observational data only suggest that AMO alone can contribute on the order of +/-0.2 W/m^2 of variability throughout its entire ~60 yr cycle.

If you’re looking for a model that nails the timing of ENSO, PDO, AMO, NAO, AO, etc., I got nuthin’. AFAIK it wasn’t attempted by CMIP5 models for AR5, but CMIP6/AR6 and the emerging decadal forecast concept may contain the beginnings of trying to do such a thing.

Yet for an unbiased coin, one would expect 100 seperate series of 10,000 flips to show no clear pattern, yes? I.e., a distribution test ought to come back with “probably normal” if the coin isn’t biased.

Eyah, monthly data are pretty noisy. I ‘spect you’re looking at anomaly data. For HADCRUT4GL absolute, I come up with an RMS month-to-month variation of 0.64 K (tavg = 13.87, tmin = 11.06, tmax = 16.54, trange = 5.47, n=1975). It’s kind of remarkable how well constrained this random walk of yours is, innit.

Tut. Seems a tad hasty to make any conclusions about sloppy researchers doing crappy models in absence of any specifics … especially with things like the ARGO program ever being expanded with more and better buoys, paper after paper based on their data, etc.

Brandon Gates,

Thanks for the reference, I will read it with interest.

“If you’re looking for a model that nails the timing of ENSO, PDO, AMO, NAO, AO, etc., I got nuthin’.” I would not expect a model to do that, it would be like asking to forecast the formation dates of hurricanes. I’d be impressed by a model that can get the statistical properties (duration, amplitude. etc.) of those things right.

Yes, I used anomaly data. The 0.64 K variation you found would be almost entirely seasonal changes. I don’t see why you would say that a 95% confidence of +/-9 K is well constrained.

My complaint with the models is not simply with whether they are crappy. It is with the way the modellers seem to assume they have things right in the absence of adequate attempts at falsification. That includes both inadequate consideration of alternate hypotheses and an insufficient willingness to say “such-and-such a model is wrong because …”. Some models don’t even conserve energy to better than a few W/m^2. But they seem to be deemed just as good as any other model in constructing ensemble averages.

The thing that really got be questioning the quality of science in climate modelling is that 35 years ago the range of estimates in equilibrium sensitivity was 1.5 to 4.5 K and today it is 1.5 to 4.5 K. Not much progress for all the money and effort. But in spite of the lack of progress in narrowing the range, the certainty of the predictions has increased enormously.=, as if they really had pinned things down.

Mike B.,

You’re quite welcome for the reference. I look forward to any response once you’ve read it.

I see my own prejudices bite me — so many here don’t make that latter distinction, and assiduously avoid confronting it when those are pointed out as the most reasonable metrics.

We may be talking past each other, but as you note the largest portion of variability from the comes from those seasonal fluctuations. Even anomalized, the monthly data still retain a lot of fuzz which I usually tamp down with a moving 12 month average if I absolutely want to see things at that resolution.

The main thing here is I have a much different approach to visualizing relative stability, hence predictability — I make some kind of prediction and throw a confidence interval around that based on the standard deviation of the residual. The 95% CI is on the order of +/- 0.2 K for a regression of 2xCO2 alone against temperature, with a clear secular trend. The Hiatus is comfortably inside of that envelope, even when I treat post-2000 temperature data as out of sample.

IOW, the coin is biased. See also: coin-tosses are independent events. Pretty sure you take that into account by suggesting month-to-month variabilities, but I do want to make sure it’s explicitly understood that causal chains matter in climate whereas in pure chance gambling they do not.

If you’re saying that CO2’s radiative function in the atmosphere is an unwarranted assumption, you may as well tell me so is the Big Bang. I don’t think you are, so maybe you should give me a specific or two. In the meantime, go read Schmidt and Sherwood’s paper referenced in this article if you haven’t already — I think it takes fairly unvarnished look at the state of what is amiss in AOGCMs, why those things are difficult to address, and some of what’s being done to fix them. Also referenced here, Swanson (2013) is even more pleasingly pointy-tipped. I’ve got a third paper littering my hard drive from a year or two back that’s got several eye-openers.

Aaack! Like what hypotheses specifically? Speculations are a dime a dozen, hunches two for a dollar. I heard rumors of a special this week: four SWAGs for 99 cents, get ’em while they’re hot. There literally isn’t much new under the Sun that hasn’t already been hashed out in primary literature since the late ’50s. If you tell me a hypothesis you think isn’t getting its due consideration, chances are I can cough up a reference from a decade or three ago where it was considered in earnest.

Harumph. That depends on who you read and when. It’s also quite a matter of personal taste, therefore opinion. I myself prefer, “this is where the thing is b0rked, but this is how it’s still useful,” because all models are always going to be crappy and the trick is to know how best to heed their guidance. Which is very subjective else we wouldn’t be having this debate.

It does suck when something is well and truly busted and nobody realizes it.

Dangit, you made me dredge up that other paper I mentioned above: Mauritsen et al. (2012), Tuning the climate of a global model http://onlinelibrary.wiley.com/doi/10.1029/2012MS000154/full

Open access. Yes, some GCMs leak energy. Oi vey.

They say there are two things you don’t want to see being made if you like them: laws and sausages. GCMs may be a close third. Thing you need to keep in mind is that ALL models probably have some problem you’d find just as glaring and pretty soon there would be no ensemble at all. Which is not an uncommon refrain on the contrarian side of the fence. World I live in, we do the best with what we have, constantly improving as we have time, resources and creativity to do so.

Oh I don’t know, I think it rather speaks to the integrity of those involved in the effort that after 35 years they have the honesty to report the full, unchanged range of uncertainty. Consider the possiblity that figuring out how to simulate an entire planet isn’t exactly rocket science, and update your metaphors and expectations accordingly.

Ah, well that may be mashing two things together which don’t belong. The all but settled part of the science is that our activities are having a noticeable effect. The forward looking portion of things is still considered largely uncertain, which I think is quite appropriate.

“Those are the things that caught my eye… …What caught yours?”

What caught my eye is that the word “performs better” should be replaced by “doesn’t perform as poorly” for this article.

They need a supermodel.

Preferably, one that is low-maintenance.

Do they exist?

What stood out for me was that section 5 (Practical climate model philosophies) was (as far as I’ve been able to determine so far) this and nothing else: an effort to dodge the idea that since the models suck and show little skill, therefore it doesn’t make sense to pay much attention to them.

Hooey I say.

Mark Bofill: What stood out for me was that section 5 (Practical climate model philosophies) was (as far as I’ve been able to determine so far) this and nothing else: an effort to dodge the idea that since the models suck and show little skill, therefore it doesn’t make sense to pay much attention to them.

I agree with you. Model results to date have no demonstrated utility, and Schmidt and Sherwood have written an intellectual exercise of a sort to avoid addressing the issue of how accurate model results should be in order to be considered useful.

Thanks Matthew.

Serious scientists should stop discussing climate models as if these represented serious science.

mpainter – I agree entirely we should be discussing the forecasts made using the natural cycles as illustrated at http://climatesense-norpag.blogspot.com/2014/07/climate-forecasting-methods-and-cooling.html

The problem is that Arctic sea ice is above normal and the only “spectacular” event is the wholesale manipulation of the GISS data. The models are never going to track if they keep diddling with the temperature data. (image from https://stevengoddard.wordpress.com/2014/12/18/arctic-warmed-six-degrees-from-1900-to-1940/ )

“…While the use of complex simulations in science is a development that changes much in how science is done in practice, we argue that the concepts being applied fit very much into traditional practices of the scientific method, albeit those more often associated with laboratory work…”

Sounds like model output as “Data”. Utter Cr*p.

I have a different objection to this business of averaging model outputs. Nick wrote well above that averaging the results of multiple models gives a more precise answer and that it removes noise – do I have that correctly?

That is wrong in a sneaky way. Averaging the runs of One climate model can do that at least in part, assuming there are no big omissions from its representations of physical systems.

However it is quite a different thing if the answers being averaged are from different methods of calculation. The ‘many runs’ averaging has to be for one model, not the averaging of the outputs of many models. They do not work in the same way. It is trying to remove different sets of background noise from multiple signals which are themselves not the same.

Averaging 100 runs of a model that can’t produce the temperature record doesn’t give a ‘better answer’. Averaging the averages of model 1 with the average outputs of model 2 which is worse at producing the temperature record does NOT increase the precision of anything and certainly doesn’t predict future temperatures

This is nonsense. Averaging a warm model with a hot model is done to produce an alarming forecast. They should eliminate all models that can’t reasonably reproduce measured temperatures.

Crispin in Waterloo December 29, 2014 at 9:26 pm

Yes – the models have different structures and parametrisation and so does the earth. There are systemic rather than random errors between models and the earth (I’ve talked about structural uncertainty above). I’ve also just commented above that even when the model families are run using the same forcings and across the period they have been tuned to the 1961- 1990, global absolute temperatures range over 2.8 C. The problem is that a number of really important things in the atmosphere depend on absolute temps – I use the example of when ice freezes – we are talking about models that are reproducing the physics in quite different ways.

Bob

It’s funny you should take this approach in almost “tongue-in-cheek” article. A friend of mine used to work for a hedge-fund and for all the Oxbridge math graduates creating complex models in that company, the methods that were most useful were projections from running averages – non-parametric statistical models.

@CD – Long Term Capital Management – they made the same mistake with models as I recall….

So the deep philosophical conundrum is to determine whether climate models are better than the equation:

N = N + X

Whether climate models are, or are not, better than N = N + X, is apparently not a straightforward question to answer.

I think the problem and not expanded upon in the article is the use of the different methods as a means to testing a hypothesis. I think the best method of doing this as we have three hypotheses – the two methods and the null (no change) – is to use a Bayesian approach from the out-of-sample set. This should at least give us a “score” of suitability (or rather conditional probability of being correct; conditioned on the observations). All this playing around with plots is a bit of arm-waving on everyones’ part.

I once tested whether an autocorrelation-based estimator could produce useful forecasts for a time-series containing several decades of measurements. Call the measurement data d(i) at time ‘i’.

I took a contiguous subset of the measurements from d(1) to some d(n), keeping in-hand some measurements after ‘n’. I calculated autocorrelation parameters using only the data up to d(n), and used these in a moving-average-type estimator to produce forecasts ‘f’. f(n+j) would be the forecast of d(n+j) for a forecasting horizon ‘j’.

As I had only used a subset of the data, I had data in hand to immediately calculate forecast errors for the points immediately following n.

I needed a benchmark forecast whose performance could be compared with the more sophisticated method. The ‘no-brainer’ forecast simply used the last data point d(n) as the forecast f(n+j) for any forecast horizon ‘j’. (My data had no definite trend, but de-trending could be used if there had been issues.)

What would a similar test say about climate models? We have GAST time series. We could take a contiguous subset ending at GAST(n), leaving some already-known data after n. The no-brainer forecast would be f(n+j) = GAST(n), for horizon ‘j’. If a GCM is calibrated using only data up to ‘n’ and initialised at point ‘n’, could it produce better forecasts of GAST(n+j) compared to the no-brainer?

“What caught yours?”

THIS:

“If we had observations of the future, we obviously would trust them more than models, but unfortunately observations of the future are not available at this time. (Knutson and Tuleya 2005)”

Also, a climate paper with no figs? What is the world coming to?

It’s too bad they won’t trust CURRENT observations more than models.

A few things.

(Knutson and Tuleya 2005):

If we had observations of the future, we obviously

would trust them more than models, but

unfortunately observations of the future are not

available at this time.

It has been 10 years now, so they do have observations of the future. Models still not close.

This multi-model ensemble (MME) of opportunity provides an immense resource for climate model evaluation. There are opportunities to assess the structural uncertainty of model predictions, to identify interesting and potentially informative patterns of behaviour across models, to tie future projections to past skill in out-of-sample paleo-climate tests with the same models, and to assess the impact of specific model characteristics (e.g., scope, grid size) on specific aspects of behaviour and fidelity. Many hundreds of papers looking at the CMIP5 results have already been published.

However, the MME was not designed with a particular focus, and consequently the variations in structure

are somewhat haphazard (following the preferences of individual groups rather than any pre-determined

plan) (Knutti et al 2010). The histogram of any particular prediction or result from this set of models should therefore not be interpreted as any kind of probability density function (van Oldenborgh et al 2013; Allen et al 2013), although its breadth offers qualitative guidance if interpreted carefully. Curiously, the multi-model mean is often a more skillful predictor on average across a suite of quantities than is any individual model (Reichler and Kim 2008), though the effect soon saturates (Tebaldi and Knutti 2007). Systematic biases across all models are clearly present that cannot be removed via averaging

So hundreds of papers published all based on particular predictions with no kind of probability density function.

More generally, in response to these challenges and as outlined by Betz (2013), the increasingly dominant way to convey this information (for instance in the Fifth Assessment report from the Intergovernmental Panel on Climate Change (IPCC AR5) (Stocker et al 2013)) has been to supplement key conclusions or predictions with a confidence statement reflecting the expert judgment of how strong is the evidence that underlies it (Cubasch et al 2013). Simulation results, for instance, related to tropical cyclone intensity changes, are given as numerical indications, but with low levels of confidence, reflecting the lack of supporting evidence for skill in modelling changes for this metric in this class of model. In our view, this is effectively an ’informal’ application of the concepts of Bayesian updating but with no actual calculation of the likelihoods or posterior probability3.

Honestly, we are experts with judgment and all that Bayesian math is really hard to do.

Bayesian math is really hard to do

If you’re being serious here then you are wrong. It may seem convoluted when brought up on the frequentist approach. Where we have multiple and competing approaches it is the obvious, and although I’d admit, most attempts to explain it are awful it really is very simple and incredibly eloquent.

On a more technical level, the only problem is that you have to make assumptions to begin with and only with enough time and a recursive approach are the posterior and prior likely to converge to something akin to the “correct answer”. But then how long is long-enough.

cd – if it is only a technical problem, then why don’t they do it, instead of taking the easy, “we’re experts and know all” approach. I always got down marks for not showing my work on math problems.

DD

I guess cause they’re academics they can arm-wave cause in the end academics don’t have to actually produce something that is tested for the real world. They can drop the bomb and walk away unscathed because when they walk into the arena of the real world it’s just for fun.

Listen to one of your greatest living Americans.

My second comment, is: using GISTEMP Global at Wood for Trees, linear trends from 1970-2000, 1980-2000, 1970-2010, and 1980-2010 hit the middle of the temperature bracket from the last 3 months.

Interesting. What happened to the great pause?

(Knutson and Tuleya 2005):

If we had observations of the future, we obviously

would trust them more than models, but

unfortunately observations of the future are not

available at this time.

We do not even have measurements for most of the planet in the past. In 1881, we certainly had no measurements from the interior of Antarctica, and very few ocean temperatures. The temperatures collected are essentially from places people lived. This creates a geographical bias. People did not collect temperatures from places where it was undesirable to live! It looks like all of these computer models are attempting to model fictitious data in the past.

Even observations of the present and recent past require “adjustment”, suggesting that the climate science community does not trust them either.

Excellent point. Climate Scientists never seem to be concerned about the quality, quantity, accuracy or precision of the past data they spout as gospel — a telling sign that the data matters far less than their preconceived foregone conclusions.

I’m guessing you’re correct. But then BEST have at least made an attempt to address these issues using kriging. They’ve never produced any kriging variance maps yet though. And they’ve never produced any maps of locally varying mean (e.g. trend models of altitude and latitude etc.). But on that very issue I’d also expect these to change with time as the effect of urbanistaion, agriculture, deforestation etc. is certainty likely to have resulted in changes in these structural models (used for detrending) with time. They aint been forthcoming with these either. They also may be making the mistake of dealing with the land and ocean as single statistical domains – in my line of work that’s sloppy or naive geostats. Furthermore…oh I’ll stop there.

If data are important enough to analyze, they should be important enough to collect.

What has been proven thus far is no one has shown any capability to forecast seasonal climate trends let along the climate going forward on any semblance of consistency.

They all have one thing in common they do not know what makes the climate system work and reacts the way it does. They prove this each and every time.

Worse they all will come up with excuses to show why they were not really wrong.

I have my own climate forecast which is easily falsified or verified which I have put forth on this site many times. I am not in wait and see mode and will be monitoring the climate.

In other words, “CMIP5 simulations are more consistent in their inaccuracy”.

I guess there is something to be said for consistency, for example career criminals are pretty consistent.

No what it means is that they’re wrong but are right by virtue that they aren’t as wrong. It’s a bit like the idiot who drank a poisonous liquid (that wasn’t water) rather than ate a watermelon because it looked more like water. But then that’s the intellectual capacity and scientific illiteracy in this field.

…than the melon that is

Nick Stokes;

The way to get an underlying signal obscured by random noise is to average a large number of instances.

That is nonsense, and demonstrably so.

If we were to take the 20% of models closest to observations, we’d get a different result than averaging all of the models. We could even cherry pick a small group of models (say 5) that gave the best average of any other group of 5 models. We’d have a different result still. We could run all of these forward and, they would diverge. The “best” 20% and the “best” 5 models would be unlikely to be the same ten years hence. We could even cherry pick model results that are wildly wrong in one direction with model results that are wildly wrong in the other direction, and get a “better” result out of the average of those than we do out of average of all, or 20%, or best 5. We’d have a “more” accurate average based on the least accurate models!

You just can’t take a whole bunch of models with known problems and use them to cancel each other’s errors out. If 500 new models showed up tomorrow, all even worse than the ones we have now, would you add them too? Even if they made the average worse?

Averaging wrong answers in the hopes of getting closer to the right answer is just nonsense. All it does is give a purpose to one set of completely wrong models to off set the answers from another set of completely wrong answers as an excuse for maintaining both sets.

david, I think Nick was saying they didn’t average enough of them together to get a flat line…../snark

Yeah but if your lively hood depends on it, you gotta do what you gotta do.

DavidMHoffer:

“Nick Stokes;

The way to get an underlying signal obscured by random noise is to average a large number of instances.

That is nonsense, and demonstrably so.”

No, read it again. What you are talking about is something different – models which may have different signals. Then averaging still reduces noise, but does not remove the effects of the inconsistency. It is still worth doing.

Nick, DavidM is correct.

It you have to use demonstrably wrong model outputs to get the error – compensated truth, you only know the answer for periods for which you have measurements. Looking forward, there is no way to know which erroneous models to average in order to get the future temperature until they have been measured.

This is not even sleight of hand. It is just increasing the error bar height at vast expense. An ensemble mean is a wrong answer with larger error bars than the components that make it up. That’s an arithmetical fact.

C-i-W

No, my original statement was correct. It said nothing about demonstrably wrong models. It is a simple proposition that a signal obscured by random noise can be revealed by taking an ensemble average.

What you and DMH are talking about is the situation where you can’t get a sufficient ensemble of instances; where the ensemble has members that are not identically distributed. Then indeed you get an approximate answer.