CMIP5 (IPCC AR5) Climate Models: Modeled Relationship between Marine Air Temperature and Sea Surface Temperature Is Wrong

Guest post by Bob Tisdale

UPDATE 2 (February 27, 2013): A problem was discovered with how the KNMI Climate Explorer had processed the MOHMAT data used originally in this post. KNMI has now corrected the bug.

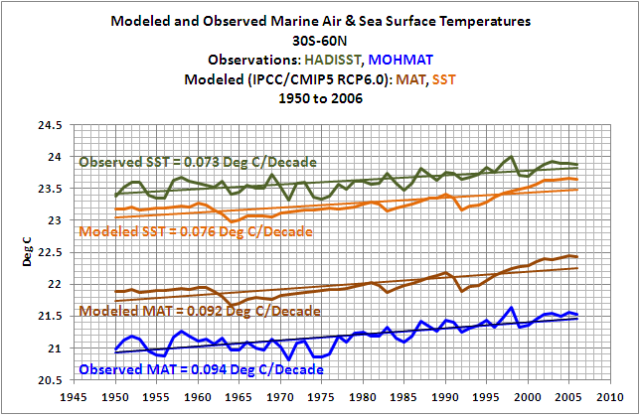

In turn, the modeled relationship between marine air temperature and sea surface temperature now agrees better with the observations. See Revised Figure 4. When looking at the difference between the modeled and observed marine air temperatures, keep in mind that the MOHMAT data include only nighttime readings, while the model outputs do not.

Many thanks to blogger lgl, who found the problem with the KNMI version of the MOHMAT data while reviewing the original cross-post at WattsUpWithThat. And many thanks to Dr. Geert Jan van Oldenborgh at KNMI for his immediate investigation and corrections.

Revised Figure 3

I’ve changed the title of the post, and most of the text has been given a strikethrough. I’ve also deleted the original erroneous graphs so as not to cause confusion when researchers use search engines in the future. Climate models have enough problems without my wrongly adding to them.

Regards

Bob

UPDATE February 26, 2013: We’re looking into a possible data problem at UKMO or the KNMI Climate Explorer.

INTRODUCTION

This post is part of a series of model-data comparisons that illustrate the failings of the climate models prepared for the upcoming 5th Assessment Report (AR5) from the Intergovernmental Panel on Climate Change (IPCC). The poor performance of the CMIP3 models prepared for the IPCC’s AR4 has been shown in numerous other posts.

In this post, we’ll compare the Marine Air Temperature (MOHMAT4.3) data from the UK Met Office to two of their Sea Surface Temperature datasets, HADISST and HADSST3. The source of the data is the KNMI Climate Explorer. We’ll also present the multi-model ensemble mean (the average of all of the climate model runs) of the simulated marine air and sea surface temperatures from the climate models stored in the CMIP5 archive, which were prepared for the IPCC’s AR5. They’re also available through the Monthly CMIP5 Scenario Runs webpage at the KNMI Climate Explorer. For the modeled marine air temperature, I’ve used the “Ocean Only” surface air temperature (TAS) outputs. In other words, the land surface air temperature outputs have been masked. Modeled sea surface temperatures are listed as TOS at the KNMI Climate Explorer.

As you will see, observations indicate the sea surface temperatures warm at a faster rate than marine air temperatures, while the models have the relationship backwards. Not too surprising, since the models simulate very little properly.

ABOUT THE TIME PERIOD AND LATITUDES

The MOHMAT4.3 marine air temperature data are available through May 2007 at the KNMI Climate Explorer. The dataset is no longer updated by the UKMO, so we’ll end the comparisons then.

Animation 1 is a series of maps at 10-year intervals. They illustrate the grids (in purple) where the marine air temperature data is available. As you can see, there’s very little data prior to 1950. There’s also very little data south of 30S and north of 60N, so we’ll also limit the comparisons to the data between those latitudes.

Animation 1

In summary, the data and model outputs presented in this post are for the time period of January 1950 through May 2007 and for the latitudes of 30S-60N. The base period used for anomalies is 1971-2000.
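The two processing steps just described, restricting the data to 30S-60N and converting to anomalies against a 1971-2000 base period, can be sketched as follows. This is a minimal illustration assuming a simple, hypothetical data layout (a list of (latitude, value) grid cells, and a dict keyed by (year, month)); it is not how the KNMI Climate Explorer implements these steps, and the function names are made up for the example. Weighting by the cosine of latitude is the usual area approximation for an equal-angle grid, and cells without observations are simply skipped.

```python
import math


def band_mean(cells, lat_min=-30.0, lat_max=60.0):
    """Cosine-of-latitude weighted mean of grid cells inside [lat_min, lat_max].

    `cells` is a list of (latitude_deg, value) pairs; value is None where
    the cell has no observation. Returns None if no valid cell is found.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for lat, value in cells:
        if value is None or not (lat_min <= lat <= lat_max):
            continue  # skip missing data and cells outside the band
        w = math.cos(math.radians(lat))
        weighted_sum += w * value
        weight_total += w
    return weighted_sum / weight_total if weight_total > 0 else None


def monthly_anomalies(series, base_start=1971, base_end=2000):
    """Subtract each calendar month's base-period mean from a monthly series.

    `series` maps (year, month) -> temperature. A month with no values in
    the base period raises ValueError instead of producing a bogus anomaly.
    """
    climatology = {}
    for month in range(1, 13):
        base = [v for (y, m), v in series.items()
                if m == month and base_start <= y <= base_end]
        if not base:
            raise ValueError(f"no base-period data for month {month}")
        climatology[month] = sum(base) / len(base)
    return {(y, m): v - climatology[m] for (y, m), v in series.items()}


# Made-up numbers: a fixed seasonal cycle plus a 1.0 deg C step in 2001.
series = {(y, m): 15.0 + m + (1.0 if y == 2001 else 0.0)
          for y in range(1971, 2002) for m in range(1, 13)}
anoms = monthly_anomalies(series)
print(anoms[(1985, 6)], anoms[(2001, 6)])  # prints 0.0 1.0
```

Removing a per-calendar-month climatology, rather than a single annual mean, is what strips out the seasonal cycle, so the anomalies show only departures from the 1971-2000 norm for that month.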

TEMPERATURE ANOMALY COMPARISONS

Figure 1 compares anomalies of the two sea surface temperature datasets (HADISST and HADSST3) to those of the marine air temperature data (MOHMAT4.3). The data are presented on a monthly basis. The two sea surface temperature datasets exhibit very similar warming trends over this period. On the other hand, the marine air temperature anomalies warm at a significantly slower rate, about 60% of the rate of the sea surface temperature datasets. Or to look at it the other way, sea surface temperatures are warming about 1.7 times faster than marine air temperatures.

Figure 1
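The “60%” and “1.7 times” figures above are the same statement read in opposite directions, since 1/0.6 ≈ 1.67. The sketch below shows that arithmetic, assuming ordinary least-squares linear trends (the post does not say which trend method the graphs use) and two purely synthetic series, not the HADISST, HADSST3 or MOHMAT data.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx


# Decimal-year time axis for January 1950 through May 2007 (689 months).
months = [(1950 + i // 12, i % 12 + 1) for i in range((2007 - 1950) * 12 + 5)]
t = [year + (month - 0.5) / 12 for year, month in months]

# Synthetic anomaly series (hypothetical values, NOT the real datasets):
# "SST" rises 0.10 deg C/decade, "MAT" rises 0.06 deg C/decade.
sst = [0.010 * (ti - 1950) for ti in t]
mat = [0.006 * (ti - 1950) for ti in t]

sst_trend = ols_slope(t, sst) * 10  # deg C per decade
mat_trend = ols_slope(t, mat) * 10
ratio = mat_trend / sst_trend
print(f"MAT/SST = {ratio:.2f}, SST/MAT = {1 / ratio:.2f}")  # prints MAT/SST = 0.60, SST/MAT = 1.67
```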

As noted in the introduction, the climate models get the relationship backwards. See Figure 2. Modeled marine air temperature anomalies warmed at a rate that’s about 1.2 times faster than the modeled sea surface temperature anomalies.

Figure 2

TEMPERATURE (NOT ANOMALY) COMPARISONS

HADSST3 is only presented as anomalies, so it cannot be included in this comparison.

Figure 3 compares modeled and observed sea surface temperatures and marine air temperatures. As you’ll note, I’ve switched to annual data. The modeled and observed sea surface temperatures, and their trends, are reasonably close to one another; the models are slightly cooler than the observations. Then there’s marine air temperature. The modeled marine air temperature is too warm, and it warms at a rate about 2.2 times faster than observed.

Figure 3

Last is Figure 4. It compares the modeled and observed temperature differences between the sea surface temperatures and marine air temperatures, where the marine air temperatures are subtracted from the sea surface temperatures. The observed temperature difference (SST Minus MAT) averages about 2.9 deg C, and the difference has been increasing. But the modeled difference averages about 1.25 deg C and has been decreasing.

Figure 4
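Figure 4 and the trend comparisons are two views of the same thing. Because a least-squares slope is linear in the data, the trend of (SST minus MAT) equals the SST trend minus the MAT trend. So an increasing observed difference is equivalent to SST warming faster than MAT, and a decreasing modeled difference is equivalent to modeled MAT warming faster than modeled SST. The sketch below checks that identity on synthetic annual series (hypothetical values, not the real data), again assuming OLS trends.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx


rng = random.Random(1)
years = list(range(1950, 2007))
# Synthetic annual absolute temperatures in deg C (hypothetical, with noise).
sst = [20.0 + 0.010 * (y - 1950) + rng.gauss(0.0, 0.1) for y in years]
mat = [17.1 + 0.006 * (y - 1950) + rng.gauss(0.0, 0.1) for y in years]
diff = [s - m for s, m in zip(sst, mat)]  # SST minus MAT, as in Figure 4

mean_diff = sum(diff) / len(diff)
diff_trend = ols_slope(years, diff)
print(f"mean difference {mean_diff:.2f} deg C, trend {diff_trend * 10:+.3f} deg C/decade")
```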

CLOSING

As illustrated above, climate models cannot properly simulate the relationship between marine air temperature and sea surface temperature. That shouldn’t surprise anyone who has been following the model-data comparison posts.

Climate models can’t simulate satellite-era sea surface temperatures, as illustrated and discussed in the posts here, here and here. In another post here, we showed that climate models simulated a 0.4 deg C warming of the sea surface temperatures for the Pacific Ocean over the past 19 years, but the observations show Pacific Ocean sea surface temperatures haven’t warmed in that period. As shown in the post here, when the models are compared to data regionally, they do a poor job of simulating land surface air temperatures in some regions and a good job in others. As shown in the post here, models can’t simulate the polar amplified cooling during the mid-20th Century cooling period, they fail to capture the polar amplified warming during the early warming period of the 20th Century, and they fall short of simulating the polar amplification during the recent warming period. Climate models cannot simulate global surface temperatures since 1900 when the data and model outputs are broken down into the two warming periods and the two flat (slightly cooling) periods, as illustrated in the posts here and here. That is, they cannot simulate the rates at which temperatures cooled during the early 20th Century (1901-1917) and mid-20th Century (1944-1976) cooling periods, and they definitely can’t simulate the early 20th Century warming period (1917-1944). And they definitely miss the boat when trying to simulate global precipitation; refer to the post here. Satellite-era precipitation data indicate global precipitation has decreased since 1979, but climate model simulations say it should have increased.

Further, sea surface temperature data during the satellite era and ocean heat content data do not support the hypothesis of manmade greenhouse gas-driven global warming. Worded another way, satellite-era sea surface temperature data and ocean heat content data indicate the oceans warmed naturally. This was illustrated and discussed in detail in my essay titled “The Manmade Global Warming Challenge”. The introductory blog post is here, and it can be downloaded here (42MB). This was also presented in my 2-part YouTube video series titled “The Natural Warming of the Global Oceans”. YouTube links: Part 1 and Part 2. And it was illustrated and discussed, in minute detail, in my ebook Who Turned on the Heat?, which was introduced in the blog post “Everything You Ever Wanted to Know about El Niño and La Niña”. It’s available for sale only in pdf form here. Price US$8.00. Note: There’s no need to open a PayPal account. Simply scroll down to the “Don’t Have a PayPal Account” purchase option.

ON THE USE OF THE MODEL MEAN

We’ve published numerous posts that include model-data comparisons. If history repeats itself, proponents of manmade global warming will complain in comments that I’ve only presented the model mean in the above graphs and not the full ensemble. In an effort to suppress their need to complain once again, I’ve borrowed parts of the discussion from the post Blog Memo to John Hockenberry Regarding PBS Report “Climate of Doubt”.

The model mean provides the best representation of the manmade greenhouse gas-driven scenario—not the individual model runs, which contain noise created by the models. For this, I’ll provide two references:

The first is a comment made by Gavin Schmidt (climatologist and climate modeler at the NASA Goddard Institute for Space Studies—GISS). He is one of the contributors to the website RealClimate. The following quotes are from the thread of the RealClimate post Decadal predictions. At comment 49, dated 30 Sep 2009 at 6:18 AM, a blogger posed this question:

If a single simulation is not a good predictor of reality how can the average of many simulations, each of which is a poor predictor of reality, be a better predictor, or indeed claim to have any residual of reality?

Gavin Schmidt replied:

Any single realisation can be thought of as being made up of two components – a forced signal and a random realisation of the internal variability (‘noise’). By definition the random component will [be] uncorrelated across different realisations and when you average together many examples you get the forced component (i.e. the ensemble mean).

To paraphrase Gavin Schmidt, we’re not interested in the random component (noise) inherent in the individual simulations; we’re interested in the forced component, which represents the modeler’s best guess of the effects of manmade greenhouse gases on the variable being simulated.
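Schmidt’s argument can be illustrated numerically. If each run is a forced signal plus noise that is independent from run to run, the noise in an average of N runs shrinks by roughly 1/sqrt(N), leaving the forced component. The sketch below assumes a hypothetical linear forced signal and Gaussian noise; real model noise is neither Gaussian nor perfectly independent, so treat this only as a picture of the averaging step.

```python
import math
import random


def ensemble_mean(runs):
    """Average several equal-length runs time step by time step."""
    return [sum(values) / len(values) for values in zip(*runs)]


def rms_difference(a, b):
    """Root-mean-square difference between two equal-length series."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


rng = random.Random(42)
n_steps, n_runs, noise_sd = 100, 25, 0.2

# Hypothetical forced signal: a steady rise of 0.015 deg C per time step.
forced = [0.015 * step for step in range(n_steps)]
# Each run = forced signal + independent Gaussian "internal variability".
runs = [[f + rng.gauss(0.0, noise_sd) for f in forced] for _ in range(n_runs)]

single_err = rms_difference(runs[0], forced)
mean_err = rms_difference(ensemble_mean(runs), forced)
print(f"one run: {single_err:.3f}, ensemble mean of {n_runs}: {mean_err:.3f}")
```

With 25 runs the ensemble mean sits about five times closer to the forced signal than a single run does. Note that the averaging only isolates the models’ forced response; whether that response matches the observations is a separate question, which is what the model-data comparisons above test.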

The second reference is a similar statement by the National Center for Atmospheric Research (NCAR). I’ve quoted the following in numerous blog posts and in my recently published ebook. Sometime over the past few months, NCAR elected to remove that educational webpage from its website. Luckily the Wayback Machine has a copy. NCAR wrote on that FAQ webpage that had been part of an introductory discussion about climate models (my boldface):

Averaging over a multi-member ensemble of model climate runs gives a measure of the average model response to the forcings imposed on the model. Unless you are interested in a particular ensemble member where the initial conditions make a difference in your work, averaging of several ensemble members will give you best representation of a scenario.

In summary, we are not interested in the models’ internally created noise, and we are not interested in the results of individual responses of ensemble members to initial conditions. So, in the graphs, we exclude the visual noise of the individual ensemble members and present only the model mean, because the model mean is the best representation of how the models are programmed and tuned to respond to manmade greenhouse gases.

It would be helpful if you could supply some possible reasons for the gap between model and observation. Perhaps it is the surface boundary layer problem Trenberth pointed out? Or perhaps the missing low cloud/precipitation problem that results in oversensitivity?

Does anyone know what the CO2 release rate from the oceans would be simply from the SST changes?

If we went back to 1820 and 280 ppmv, what would the current pCO2 be because of only degassing?

Thanks, Anthony!

Bob.

“If a single simulation is not a good predictor of reality how can the average of many simulations, each of which is a poor predictor of reality, be a better predictor, or indeed claim to have any residual of reality?”

gavin’s response to this question is about PREDICTION, not matching to past observations.

You are looking at hindcast. So, the objection that you are looking at the model mean and not the full ensemble still holds. Sorry. If you are properly skeptical, you need to make the distinction. If you are a propagandist, then of course you are free to disregard the exact point folks like me have made.

The MOHMAT data description says it is night-time data only – does that make a difference? http://www.metoffice.gov.uk/hadobs/mohmat/

I told NCAR almost a year ago that their CAM 5 (Community Atmosphere Model) uses an incorrect value for the latent heat of vaporization of water. Their value is only correct at the freezing point. In tropical seas, where most evaporation happens, it is off by 2.5% to 3% (about 1% per 10 deg C).

I take the liberty of interpreting their reaction as “don’t bother us, we have more important things to do”.
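The commenter’s percentages can be checked with the widely used linear approximation L_v(T) ≈ 2.501×10^6 − 2370·T J/kg (T in deg C). The sketch below computes how far a value fixed at 0 deg C would overestimate L_v at warmer sea surface temperatures. Whether CAM 5 actually uses a fixed 0 deg C value is the commenter’s claim and is not verified here; the function names are illustrative only.

```python
def latent_heat_vaporization(t_celsius):
    """Approximate latent heat of vaporization of water in J/kg.

    Common linear fit near typical surface temperatures:
    L_v(T) = 2.501e6 - 2370 * T, with T in deg C.
    """
    return 2.501e6 - 2370.0 * t_celsius


def overestimate_if_constant(t_celsius):
    """Relative error from using the 0 deg C value at temperature t."""
    lv_0 = latent_heat_vaporization(0.0)
    lv_t = latent_heat_vaporization(t_celsius)
    return (lv_0 - lv_t) / lv_t


for sst in (10, 20, 28, 30):
    print(f"{sst:>2} deg C: {overestimate_if_constant(sst):.1%}")
```

The slope of 2370 J/kg per deg C is about 0.95% of the 0 deg C value per 10 deg C, consistent with the “about 1% per 10 degrees C” rule of thumb, and the error reaches roughly 2.7% to 2.9% at 28 to 30 deg C.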

http://principia-scientific.org/supportnews/latest-news/135-atmospheric-co2-not-linked-to-humans-says-global-and-planetary-journal.html

“They found that changes in global atmospheric CO2 follow 11-12 months behind sea level surface temperature and 9-10 months behind global surface temperature.”
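A lag such as “11-12 months behind” is typically found by correlating one series against the other shifted by a range of lags and picking the lag with the strongest correlation. The sketch below does that with plain Pearson correlation on a synthetic pair of series in which the response is, by construction, the driver delayed by 11 steps; it is not the method or data of the study being quoted. With real data, shared long-term trends are usually removed first (for example by differencing), since a common trend inflates the correlation at every lag.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def best_lag(driver, response, max_lag):
    """Return (lag, r) maximizing corr(driver[t], response[t + lag])."""
    best = None
    for lag in range(max_lag + 1):
        a = driver[:len(driver) - lag]
        b = response[lag:]
        r = pearson(a, b)
        if best is None or r > best[1]:
            best = (lag, r)
    return best


# Synthetic monthly "driver" (hypothetical) and a response delayed by 11 months.
driver = [math.sin(2 * math.pi * t / 50) + 0.3 * math.sin(2 * math.pi * t / 7.3)
          for t in range(300)]
response = [0.0] * 11 + driver[:-11]

lag, r = best_lag(driver, response, max_lag=24)
print(f"best lag {lag} months, r = {r:.3f}")
```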

Slight subsurface temperature changes cause surface heating and then surface air heating. Sea floor heat builds until it reaches a Nino/Nina tipping point, raising surface temperatures. Then the entrained CO2 is released from its saturated, colder water levels. Undersea vents discharge a continuous (non-constant) mix of elemental gases including COx, NOx, and SOx, which are all potential acids but are moderated by the vast carbonate basin. The base-level changes in Earth fission heat and fission-produced elemental atoms (and molecules) are minor….amplified and buffered by our other climate systems. What a beautiful, complex eco-system….completely independent of a measly 28 gigatons (less than 3 cubic miles) of human-produced carbon nano-dust that we free from below and release into the atmosphere.

Thanks Bob

Doug Proctor says:

February 25, 2013 at 7:45 am

“If we went back to 1820 and 280 ppmv, what would the current pCO2 be because of only degassing?”

Doug, you should know better than asking such questions…….

Thanks Bob. Nice article. One comment:

“Averaging over a multi-member ensemble of model climate runs gives a measure of the average model response to the forcings imposed on the model. Unless you are interested in a particular ensemble member where the initial conditions make a difference in your work, averaging of several ensemble members will give you best representation of a scenario.”

This is quite true, especially since climate models are solved as non-linear initial value problems, wherein initial conditions are important in determining the evolution of the time-dependent solution. The non-linearity and coupling of the differential equations that govern the dynamics of the atmosphere and ocean ensure that a stochastic component of the solution will emerge, hence the use of ensembles. I do wonder if averaging across different models is warranted given the vast range of quality of the models (e.g. a good, well-documented model from NCAR versus poorly documented code from the controversial NASA/GISS).

Steve: gavin’s response to this question is about PREDICTION, not matching to past observations.

You are looking at hindcast. So, the objection that you are looking at the model mean and not the full ensemble still holds. Sorry. If you are properly skeptical, you need to make the distinction. If you are a propagandist, then of course you are free to disregard the exact point folks like me have made.

The problem with this objection is that we are all encouraged to use the model mean to gauge the success of the models. Right now, we know that the model mean is not very good and the individual members of the ensemble are not very good, but there is no way to assess the deficiencies scientifically. It is an interesting thought experiment to try and figure out which precise parameterizations of the various models could be falsified under *any* circumstances.

We know that some of the parameters are wrong in *all* of the models but we don’t have the first clue of which ones are wrong in any of them.

Cheers, 🙂

Rud Istvan @ February 25, 2013 at 7:35 am

“It would be helpful if you could supply some possible reasons for the gap between model and observation.”

That question should be asked of the modellers, not the observers.

The flippant answer is that a model is just that, and nothing more.

I hope one day all climate models will work and predict as accurately as Dr. Roy Spencer’s…

Steven Mosher says:

February 25, 2013 at 8:00 am

The only correct answer we know is what has actually happened. Even though our knowledge of that is imperfect, the only way to evaluate a model is to compare it with what we know about that one correct answer. If the model cannot reproduce what we know about the only correct answer, there is no reason to believe that its predictions have any more value than a linear projection.

If a good model is averaged with bad models, all that has been accomplished is to hide what the good model says.

This failure of the models would seem to be related to their failed prediction of a mid to upper tropospheric “hot spot.” In CO2 driven models warming starts in the predicted hot spot, where the extra CO2 does its heat trapping work, and propagates down from there to create lower tropospheric warming and surface warming, so the upper troposphere warms more than the lower, which warms more than the surface. If this anomaly gradient is not present then the CO2-warming theory is wrong, which seems to be the case.

In contrast, if warming is from more solar radiation reaching the surface (either more solar radiation reaching the planet, or less radiation being blocked by cloud cover from reaching the surface) then warming would begin with the oceans, which absorb the extra sunlight. Here the warmth propagates upwards so the oceans would warm more than the air, and this is what the observations show.

A question on the dataset displayed in the figures above: why does it end in mid-2007? Is this when the model simulations were done? With all the billions the “consensus” gets for climate research it seems there should be something more recent available.

Bob’s December post shows CMIP5 simulations vs. observations for Pacific SSTs up through late 2012:

http://bobtisdale.wordpress.com/2012/12/06/model-data-comparison-pacific-ocean-satellite-era-sea-surface-temperature-anomalies/

This comparison seems to show the mismatch between models and observations still growing. Is AR5 maybe trying to hide this?

@Steve Mosher,

You have a habit of doing drive-by posts attacking people and/or their content, then riding off into the sunset when someone else challenges you. You’ll likely do the same here.

Did you ever gather the evidence requested by Don Monfort in this post?

http://judithcurry.com/2012/05/17/cmip5-decadal-hindcasts/#comment-203159

“Mosher,

Why you always fighting with Willis?

Willis may be wrong on the ERL. Is it correct that even if all else is not equal, that increased GHG will result in the ERL moving higher? That the ERL is solely dependent on the concentration of GHGs? If the answer is yes, can you cite the evidence that the ERL has moved measurably higher, since let’s say 1950?”

“I do wonder if averaging across different models is warranted given the vast range of quality of the models (e.g. a good, well-documented model from NCAR versus poorly documented code from the controversial NASA/GISS)”

Personally I don’t wonder but leave it all up to Big Climate to come up with a consensus as to which model is the best one for me to get all excited about. I must confess watching them picking the best one out of the hat or throwing darts at model names on the pinboard would be amusing if it were entirely voluntary on their part rather than consuming my taxes, but therein lies the limit of my tolerance and patience. By all means form a voluntary association for the purpose and spend endless hours on the name of their new club or society as they wish. Then there’s where to hold dinner…

The average of a Messerschmitt is still a Messerschmitt.

;->

I think this comment from a couple of days ago (here) by Olaf Dahlsveen is relevant. An excerpt:

What we now are faced with is a «gang» of sub-scientists who do «blatantly» disregard data and instead regard CO2 as the «main greenhouse gas» which therefore is responsible for what is called «The Greenhouse Effect» – They (the gang) are «Model Makers» and as long as they put CO2 into their models as being responsible for any earthly temperature rise, then it is quite correct for them to say that «All our models show that CO2 is responsible for the recent warming». Well, that was back in the ’80s and ’90s. But then the warming stopped…

Using a model mean is good, but it needs to be compared with the mean of several realisations of the observations which you obviously don’t have.

Model mean + prediction interval would actually be useful. Only if the observed data lie outside the prediction interval might there be anything to get excited about.
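One simple version of the suggested “model mean + prediction interval” check, assuming the ensemble members at a given time are roughly normally distributed: the interval for a new draw is mean ± z·s·sqrt(1 + 1/n), where s is the sample standard deviation of the n members. The z = 1.96 default approximates 95% coverage; for small ensembles a Student-t quantile would be more exact. The member values below are hypothetical.

```python
import math
import statistics


def prediction_interval(members, z=1.96):
    """Approximate interval for a new value drawn like the ensemble members."""
    mean = statistics.mean(members)
    sd = statistics.stdev(members)  # sample standard deviation (n - 1)
    half_width = z * sd * math.sqrt(1 + 1 / len(members))
    return mean - half_width, mean + half_width


def outside_interval(observation, members, z=1.96):
    """True if the observation falls outside the ensemble prediction interval."""
    lo, hi = prediction_interval(members, z)
    return observation < lo or observation > hi


# Hypothetical ensemble values (deg C anomaly) for one year.
members = [0.40, 0.50, 0.60, 0.45, 0.55]
print(prediction_interval(members))
print(outside_interval(0.20, members), outside_interval(0.50, members))
```

Applied year by year, this flags only the observations that the spread of the ensemble itself cannot account for, which is the commenter’s point about when a model-data gap becomes interesting.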

The models cannot replicate the past, and by Schmidt’s own words this is of no concern. They are only interested in their own fantasy land of made-up crap…

That should sum up the AGW or Climate Change meme pretty well.

It always fascinates me to see the amount of technical knowledge and, yes, “science” that is displayed in these articles, as well as most of the comments. With so much ammunition, it would seem the “war on AGW” would have long since been over. It is most unfortunate that the “war on humanity through AGW” is strictly political, and will never be swayed by “scientific proof.” Their catch phrase was, is, and always will be “don’t bother me with facts, I’ve already made up my mind.” But at least we will know we are right as “they” freeze us to death by destroying our ability to generate reliable energy, or starve us to death by converting acreage from food production to biomass for alternative fuels.

Climate models are computer programs. The models are written to show that increased atmospheric CO2 leads to warming. The models work that way because that’s the way they are built to work.

This one tells a different story.

https://ams.confex.com/ams/93Annual/webprogram/Handout/Paper218894/poster_NMAT_AMS13.pdf

I have asked KNMI why

“If we went back to 1820 and 280 ppmv, what would the current pCO2 be because of only degassing?”

The average atmospheric CO2 for the 19th century was significantly higher than the cherry-picked 280 ppm. And atmospheric CO2 was increasing even before the Industrial Revolution.

Fred says: “The MOHMAT data description says it is night-time data only – does that make a difference…”

I’ve seen comparisons of NMAT and SST in the past. An example is the one lgl linked, which presents the data globally:

https://ams.confex.com/ams/93Annual/webprogram/Handout/Paper218894/poster_NMAT_AMS13.pdf

I didn’t present the data globally because there was so little data in the Southern Hemisphere, south of 30S.

moshe mumbles about ‘propaganda’, lost in a forest of it, spots a dogwood, and calls the cross a hex sign.

==========================

lgl says: “This one tells a different story.”

Hard to say because they are not for the same latitudes or the same time periods.

No probs with their computer models. Just a wee bit of a problem interpreting them-

http://blogs.news.com.au/heraldsun/andrewbolt/index.php/heraldsun/comments/more_of_those_predictions_to_snow_us/

http://blogs.news.com.au/heraldsun/andrewbolt/index.php/heraldsun/comments/why_is_flannery_still_climate_commissioner/

Bob,

This .txt data from the metoffice also looks much like the SST data

http://www.metoffice.gov.uk/hadobs/indicators/data/MAT_mohmat.txt

Steven Mosher says: “gavin’s response to this question is about PREDICTION, not matching to past observations.”

Did you read Gavin’s reply? It is applicable to hindcasts and predictions. It’s a generic discussion of model simulations.

Additionally, refer to the NCADAC report. See their figure 1 on page 23:

http://i47.tinypic.com/2r4309w.jpg

And their Figure 2.3 on page 35:

http://i49.tinypic.com/2rp7dxd.jpg

As you can see, the NCADAC also does not provide the full ensemble in their model-data presentation, Steven. Looks like they’re also disregarding the points you have tried to make.

Here’s the link to the full NCADAC report (147MB):

http://ncadac.globalchange.gov/download/NCAJan11-2013-publicreviewdraft-fulldraft.pdf

I’m curious. Do any particular models (as opposed to the average) do well in hindcasting this phenomenon, and if so, what characteristics cause this to be so (or do we not know)?

Rud Istvan says:

February 25, 2013 at 7:35 am

It would be helpful if you could supply some possible reasons for the gap between model and observation.

=======

What is overlooked is that the earth itself is a model. If you ran an identical copy of the earth twice, you would over time get two completely different futures, even if all the variables were exactly the same.

Thus, while the earth has a forced component, it also has a random component. So when the model builders train a model to hindcast, they are also training it to mimic the random component. This introduces randomness into their models going forward, which we recognize as error in the forecast.

The basic problem is that two identical earths, starting out identical from today forward in all respects, will have two different futures. There is no way for the models to know ahead of time which earth we are on, so there is no way for them to predict which future we will arrive at.

If the models are accurate, they might be able to say “this future is more likely”, but that still doesn’t mean our future must be the most probable one. If you roll a pair of dice, 7 may be the most likely roll, but that doesn’t mean you will roll a 7.

What the model builders are trying to do is say “your future will be a 7”. But reality says it can be anything between 2 and 12.
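The dice analogy can be made exact by enumerating all 36 equally likely rolls of two fair dice. Seven is the single most likely total, yet it comes up only one time in six, so five rolls in six land somewhere else in the 2-12 range.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count every ordered outcome of two fair six-sided dice.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))
probs = {total: Fraction(n, 36) for total, n in sorted(counts.items())}

most_likely = max(probs, key=probs.get)
print(most_likely, probs[most_likely], 1 - probs[most_likely])  # prints 7 1/6 5/6
```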

These guys would screw up a plastic airplane model, never mind a climate one.

Fig. 15 here http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.172.4502&rep=rep1&type=pdf

Global, NH and SH.

KNMI must have messed this up.

Nothing wrong with the model, it’s reality which is in error; see rule one of climate ‘science’

Steven Mosher says:

February 25, 2013 at 8:00 am

“…gavin’s response to this question is about PREDICTION, not matching to past observations.”

Don’t they hindcast to “validate” the models? Surely, if not, then the models can’t replicate what has happened over the past 50 years. What good is that? To me I think you have to have a hindcast fit and if it doesn’t bear much similarity to what then comes to pass, then you have to find another hindcast fit by changing the parameters – hopefully, if you have included all the important variables, you should get a model iteration that has at least a modicum of skill. The problem that seems to have been looming over the past half dozen years is that keeping CO2 as the primary parameter – temperature control knob if you will, even though the physics seems to say it has a role, appears to be putting models further out of the game. It becomes more like an agenda if the models become worse and worse. Has anybody plugged in a climate sensitivity of half or less than the control knob sensitivity and reversed the cloud feedback to net negative and re-run them to see if the projections can at least put observations inside the envelope? When does one look for a more important control knob? I would like to see a half a dozen hindcasts with different weights (and possibly signs) of the parameters and turn them loose. I wish I could do this.

lgl says: “This .txt data from the metoffice also looks much like the SST data

http://www.metoffice.gov.uk/hadobs/indicators/data/MAT_mohmat.txt”

Thanks for the link lgl. The UKMO is presenting a global dataset. I’ve presented an abridged look at the data using latitudes and a time period where they have better coverage. Refer to the gif animation at the beginning of the post.

Regards

Bob

That’s not the problem. It seems KNMI have used the NMAT junk from UAH. More tomorrow.

Re horse racing: If your model gives points for the most winning horse, and points for the most winning jockey, trainer, owner, you will go to the payout window maybe once every 5 to 6 races. If you calculate the fastest time for the last quarter of the race among the field, you find a horse with some reserve energy – that’s worth some points. If a horse has a “did not finish” in a record of a recent race, you remove a point or two or may strike him out. If you end up with two horses that have the same number of points and you see one have a big piddle or poo, choose this one – a one pound handicap weight is equivalent to a length or approximately 1/5 of a second. Mark a couple of points for a mudder when the track is muddy or the forecast is for heavy rain. All this work may get you to the pay window about every third to fourth race. The touts add a layer of betting strategy that, of course, the climate modellers don’t do. Actually, if climate modellers did bet on their performance, they would probably put out better models and be less married to political climate.

This is no better than homeopathy. The final result contains zero molecules of reality.

I really like the way Bob Tisdale gets into the discussion after his article(s) have been published. Thanks Bob for staying on top of it.

lgl: There’s a significant difference between the UKMO MOHMAT marine air temperature data available from the UKMO link you provided and the same data available via the Climate Explorer:

http://i49.tinypic.com/2dukt4y.jpg

I’ve advised KNMI. We’ll see what happens.

Regards

lgl: Looking at the difference in trends…

http://i49.tinypic.com/2dukt4y.jpg

…it may be a land-masking problem. That is, the difference is close to the ratio of ocean surface area to land surface area.

Regards

Yes, but if those GHGs do not do what is believed, the problem will not improve, because GHGs are part of the model, which is then probably used as proof that the GHG theory is true.

Bob

Thanks for the graphs. So, roughly, multiply by 2.25 to get from one to the other. Weird.

I got this reply from Oldenborgh:

“MOHMAT is a gridded field, but the handout of the poster you linked to gives a global mean value, which I guess was computed by the people who made the poster. In order to compute a global mean value you have to do something with areas without observations”

which is similar to what you said but still does not make sense. ‘Do something’ can’t possibly mean multiply by 2. I think I’ll try to get the Met Office to explain the mystery.

Bob

Interesting development. More from Oldenborgh:

“Looking at it more closely now I discovered an error in my conversion from the Met Office text files into netcdf … This error has been fixed now on my laptop, I am propagating it to the server”

I think you can rerun your analysis now. And I expect you will put an update at the top of this article 🙂

Bob, compare MOHMAT4.3 and ICOADS v2.5 Tair. Some interesting differences and some interesting similarities.

For example, since 1979 (after the switch from ‘cool’ to ‘warm’ phase in the Pacific was finally completed), the two follow each other pretty well. ICOADS has larger amplitudes, but the trends are quite the same (MOHMAT’s actually slightly steeper). Before 1979, however, across the Pacific Climate Shift, ICOADS boasts a much bigger rise than MOHMAT.

lgl, I received a similar email. The two datasets agree much better now, but there’s still a difference over the long term:

http://i55.tinypic.com/ivfn1j.jpg

Over the period we’re dealing with (1950 to 2006), they’re very close:

http://i53.tinypic.com/24nl8jr.jpg

One of the problems: I have no idea which version of the MOHMAT data the UKMO is providing here:

http://www.metoffice.gov.uk/hadobs/indicators/data/MAT_mohmat.txt

We can assume it’s 4.3, but…

I’ll give KNMI another day to see if they can determine the reason for the remaining difference, then I’ll provide an update.

I wonder whether the UKMO deals with missing data differently than KNMI does (I used a zero in the “demand at least” field in Climate Explorer, assuming that would get me all of the data), because there’s a lot of missing data before the 1950s.

Thanks for your help. I wouldn’t want to bad-mouth the models undeservedly, because there are so many other problems where they do deserve to be bad-mouthed.

lgl: Yup, that would correct the problem. See preliminary correction to Figure 3:

http://i54.tinypic.com/261j11c.jpg

I’ll give KNMI another day to see if they can find anything else, then I’ll provide the update.

Again, thanks.

@Mosher: models only express hypotheses; they are NOT experiments. All you’re doing with model(s) is expressing different hypotheses based on the code and the parametrization. You are not doing science.

lgl, you say: “It seems KNMI have used the NMAT junk from UAH.”

I agree. It seems they’ve used UAH-NMAT instead of MOHMAT4.3.

But I wonder, why do you consider the UAH product junk …?

Kristian says: “I agree. It seems they’ve used UAH-NMAT instead of MOHMAT4.3.”

Nope. KNMI used the right dataset. They just had a bug in the way they processed the MOHMAT data.

Bob, you say: “Nope. KNMI used the right dataset. They just had a bug in the way they processed the MOHMAT data.”

I see. Peculiar. I could’ve sworn the ‘old’ KNMI MOHMAT and the UAH NMAT curves were one and the same …

Also, I wonder about the ICOADS v2.5 Tair data set. Since the late 70s it agrees much more closely with the UAH NMAT (and the ‘old’ KNMI MOHMAT4.3). It seems to include both NMAT and DMAT. Do you 1) know whether it is corrected for known daytime biases (if those biases affect anomaly trends at all), such as those suggested by Berry et al. 2004:

http://journals.ametsoc.org/doi/full/10.1175/1520-0426(2004)021%3C1198%3AAAMOHE%3E2.0.CO%3B2

and do you 2) have any thoughts on which compares better with SST: a MAT data set with only nighttime data, or one with both day and night included? (What time of day is SST measured? Is there a norm?) We want to compare apples with apples here, I guess …

Kristian: I have not studied NMAT datasets to any great extent, so I can’t answer your questions. Sorry.

I have issued an update/correction to this post:

http://bobtisdale.wordpress.com/2013/02/23/cmip5-ipcc-ar5-climate-models-modeled-relationship-between-marine-air-temperature-and-sea-surface-temperature-is-backwards/

Bob, very good, thanks

Kristian, junk because

– Almost no trend since 1915

– post 2000 unrealistic

– When there is a high trend in SST and lower troposphere, there can’t be half that trend in a layer in between.

But junk is not the right word to use since this is just my personal opinion.

Bob Tisdale says:

February 27, 2013 at 6:05 am

I have issued an update/correction to this post:

http://bobtisdale.wordpress.com/2013/02/23/cmip5-ipcc-ar5-climate-models-modeled-relationship-between-marine-air-temperature-and-sea-surface-temperature-is-backwards/

===================

So now that you’ve corrected your site, how do all of the corrections get replicated over here? The incorrect information and graphs are on full display at WUWT, and potential readers would have to delve deep into the comments to realize it’s wrong.

“Modeled sea surface temperature are listed as TOS at the KNMI Climate Explorer.”

How appropriate. That’s also the way I list most of the climate models too 😉

Bob, now that the graph is corrected, the one thing that stands out to me is how the data gives the lie to the supposed volcanic cooling impact.

El Chichón 1982: something dips a bit later, but probably too late to attribute to the eruption. The model seems to pre-empt the effect by two years, so it does not add up. If the dip was due to the eruption, it was compensated for shortly afterwards; there is no permanent offset.

Mt Pinatubo 1991: another massive drop in modelled expectations that just does not happen in the data. Nothing more than the usual ups and downs.

Mount Agung 1963 eruption: the biggest impact in the models and, honestly, NOTHING in the data, air or SST.

This is important since the assumed cooling effect allows for an exaggerated CO2 forcing. The fact that there has been no significant volcanic activity since Mt P has brought this to the fore. The lack of warming predicted by exaggerated AGW ‘compensated’ by fictitious volcanic cooling can only work if both continue. Luckily volcanoes have been quiet allowing this false attribution to become evident.

If you remove this unwarranted cooling and put in the real, physically based CO2 effect without amplifying it with rigged cloud parameters, the whole thing may start working a bit better.