Guest Post by Willis Eschenbach

Anthony Watts, Lucia Liljegren, and Michael Tobis have all done a good job blogging about Jeff Masters’ egregious math error. His error was that he claimed that a run of high US temperatures had only a chance of 1 in 1.6 million of being a natural occurrence. Here’s his claim:

U.S. heat over the past 13 months: a one in 1.6 million event

Each of the 13 months from June 2011 through June 2012 ranked among the warmest third of their historical distribution for the first time in the 1895 – present record. According to NCDC, the odds of this occurring randomly during any particular month are 1 in 1,594,323. Thus, we should only see one more 13-month period so warm between now and 124,652 AD–assuming the climate is staying the same as it did during the past 118 years. These are ridiculously long odds, and it is highly unlikely that the extremity of the heat during the past 13 months could have occurred without a warming climate.
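The NCDC figure in the quote is exactly 3^13. It is the chance that 13 months all land in their warmest third if each month is treated as an independent trial with probability 1/3. A quick check of that arithmetic follows. The independence assumption is the part the rest of this post questions.

```python
from fractions import Fraction

# Each month is ASSUMED to be an independent trial with a 1-in-3 chance
# of landing in the warmest third of its historical distribution.
p_single = Fraction(1, 3)
p_all_13 = p_single ** 13   # all 13 months in the warmest third

print(p_all_13)   # 1/1594323
print(3 ** 13)    # 1594323
```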

All of the other commenters pointed out reasons why he was wrong … but they didn’t get to what is right.

Let me propose a different way of analyzing the situation … the old-fashioned way, by actually looking at the observations themselves. There are a couple of oddities to be found there. To analyze this, I calculated, for each year of the record, how many of the months from June to June inclusive were in the top third of the historical record. Figure 1 shows the histogram of that data, that is to say, it shows how many June-to-June periods had one month in the top third, two months in the top third, and so on.
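Here is a minimal sketch of that counting step. It assumes a hypothetical layout, `temps[y][m]`, which holds the temperature for year `y` and calendar month `m` (0 = January). It defines "top third" by ranking each month against the same calendar month in every year of the record. That is an assumption about how the terciles are set, not NCDC's documented procedure.

```python
def top_tercile_flags(temps):
    """Return flags[y][m] = True if temps[y][m] ranks in the warmest third
    of all years for calendar month m.

    ASSUMPTION (hypothetical layout): temps is a list of years, each a list of
    12 monthly values (index 0 = January). Ties are broken by year order.
    """
    n_years = len(temps)
    cutoff = n_years // 3  # number of years counted as "top third" per month
    flags = [[False] * 12 for _ in range(n_years)]
    for m in range(12):
        # Year indices sorted from warmest to coolest for this calendar month.
        order = sorted(range(n_years), key=lambda y: temps[y][m], reverse=True)
        for y in order[:cutoff]:
            flags[y][m] = True
    return flags


def june_to_june_counts(flags):
    """Count top-third months in each June(y)..June(y+1) span (13 months)."""
    counts = []
    for y in range(len(flags) - 1):
        months = flags[y][5:] + flags[y + 1][:6]  # Jun..Dec of y, Jan..Jun of y+1
        counts.append(sum(months))
    return counts
```

A histogram of `june_to_june_counts(top_tercile_flags(temps))` is the kind of tally shown in Figure 1.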

Figure 1. Histogram of the number of June-to-June months with temperatures in the top third (tercile) of the historical record, for each of the past 116 years. Red line shows the expected number if they have a Poisson distribution with lambda = 5.206, and N (number of 13-month intervals) = 116. The value of lambda has been fit to give the best results. Photo Source.


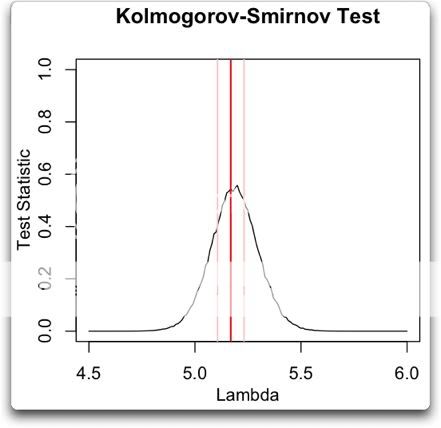

The first thing I noticed when I plotted the histogram is that it looked like a Poisson distribution. This is a very common distribution for data which represents discrete occurrences, as in this case. Poisson distributions cover things like how many people you’ll find in line in a bank at any given instant, for example. So I overlaid the data with a Poisson distribution, and I got a good match.
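Below is a minimal sketch of that kind of fit. It assumes the histogram is a list `hist` where `hist[k]` is the number of 13-month periods with k top-third months. It picks lambda by minimising the RMS difference between observed and expected counts, which is roughly what an iterative spreadsheet solver would do. The exact objective used for the figures is not stated, so treat that as an assumption. (The maximum-likelihood estimate of a Poisson lambda is simply the sample mean, and a least-squares fit to the histogram need not land on the same value.)

```python
import math


def poisson_pmf(k, lam):
    """Poisson probability of exactly k events with mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def rms_error(hist, lam):
    """RMS difference between observed counts and N * Poisson(k; lam)."""
    n = sum(hist)  # N = number of 13-month periods
    sq = [(obs - n * poisson_pmf(k, lam)) ** 2 for k, obs in enumerate(hist)]
    return math.sqrt(sum(sq) / len(hist))


def fit_lambda(hist, lo=0.1, hi=13.0, iters=100):
    """Golden-section search for the lambda that minimises rms_error.

    Assumes the error has a single minimum in [lo, hi].
    """
    ratio = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    for _ in range(iters):
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if rms_error(hist, c) < rms_error(hist, d):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
    return (a + b) / 2
```

As a sanity check, feeding in counts generated from a known Poisson, such as `[1000 * poisson_pmf(k, 5.2) for k in range(14)]`, returns a value very close to 5.2. The real Figure 1 counts are not reproduced here.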

Now, looking at that histogram, the finding of one period in which all thirteen were in the warmest third doesn’t seem so unusual. In fact, with the number of years that we are investigating, the Poisson distribution gives an expected value of 0.2 occurrences. In this case, we find one occurrence where all thirteen were in the warmest third, so that’s not unusual at all.

Once I did that analysis, though, I thought “Wait a minute. Why June to June? Why not August to August, or April to April?” I realized I wasn’t looking at the full universe from which we were selecting the 13-month periods. I needed to look at all of the 13-month periods, from January-to-January to December-to-December.

So I took a second look, and this time I looked at all of the possible contiguous 13-month periods in the historical data. Figure 2 shows a histogram of all of the results, along with the corresponding Poisson distribution.
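All contiguous 13-month windows can be counted in one pass with a running sum. This sketch assumes the per-month flags have been flattened into one chronological list of booleans, for example `[f for year in flags for f in year]` from a per-year layout. The function and parameter names are illustrative. A record of M months yields M - 12 windows.

```python
def all_window_counts(monthly_flags, width=13):
    """Number of top-third months in every contiguous `width`-month window.

    monthly_flags: flat chronological list of booleans, one per month.
    """
    if len(monthly_flags) < width:
        return []
    running = sum(monthly_flags[:width])
    counts = [running]
    for i in range(width, len(monthly_flags)):
        # Slide the window one month: add the new month, drop the oldest.
        running += monthly_flags[i] - monthly_flags[i - width]
        counts.append(running)
    return counts


def histogram(counts, max_k=13):
    """hist[k] = number of windows with exactly k top-third months."""
    hist = [0] * (max_k + 1)
    for c in counts:
        hist[c] += 1
    return hist
```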

Figure 2. Histogram of the number of months with temperatures in the top third (tercile) of the historical record for all possible contiguous 13-month periods. Red line shows the expected number if they have a Poisson distribution with lambda = 5.213, and N (number of 13-month intervals) = 1374. Once again, the value of lambda has been fit to give the best results. Photo Source


Note that the total number of periods is much larger (1374 instead of 116) because we are looking, not just at June-to-June, but at all possible 13-month periods. Note also that the fit to the theoretical Poisson distribution is better, with Figure 2 showing only about 2/3 of the RMS error of the first dataset.

The most interesting thing to me is that in both cases, I used an iterative fit (Excel solver) to calculate the value for lambda. And despite there being 12 times as much data in the second analysis, the values of the two lambdas agreed to two decimal places. I see this as strong confirmation that indeed we are looking at a Poisson distribution.

Finally, the sting in the end of the tale. With 1374 contiguous 13-month periods and a Poisson distribution, the number of periods with 13 winners that we would expect to find is 2.6 … so in fact, far from Jeff Masters’ claim that finding 13 in the top third is a one in a million chance, my results show finding only one case with all thirteen in the top third is actually below the number that we would expect given the size and the nature of the dataset …
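The expected number of all-13 periods is just N times the Poisson probability of exactly 13. This sketch plugs in the lambda and N values from the two figure captions. It gives about 0.21 for the June-to-June case and about 2.5 for all windows. The 2.6 quoted above presumably reflects slightly different rounding or fitting details.

```python
import math


def expected_count(k, lam, n_windows):
    """Expected number of windows with exactly k top-third months under Poisson(lam)."""
    return n_windows * math.exp(-lam) * lam ** k / math.factorial(k)


june_only = expected_count(13, 5.206, 116)     # Figure 1 parameters
all_windows = expected_count(13, 5.213, 1374)  # Figure 2 parameters
print(f"{june_only:.2f} {all_windows:.2f}")
```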

w.

Data Source, NOAA US Temperatures, thanks to Lucia for the link.

I’m impressed at how nicely your 13 month samples fit the Poisson distribution. Nice review.

Lucia has an update based on the low serial autocorrelation in the data for the 48 states. Apparently it’s a lot closer to white noise than she previously thought.

As always, astounding reasoning, Willis. I find your conclusion flawless. I find that it also supports something that I have long suspected: few people are actually qualified to work with statistics or make statistical pronouncements. From what I recall, Jeff was only quoting some ass at NOAA, so perhaps it isn’t his fault. However, you really should communicate your reasoning to him. I think that there is absolutely no question that you have demonstrated that it is a Poisson process with significant autocorrelation (indeed, that is evident from the histogram, exactly as one would expect), and that, as you say, if anything it suggests that there have (probably) been other thirteen-month stretches. It is also interesting to note that the distribution peaks at 5 months. That is, the most likely number of months in a year to be in the top 1/3 is between 1/3 and 1/2 of them! They seem to think that every month is an independent trial or something.


Yet according to the reasoning of the unknown statistician at NOAA, the odds of having any interval of 5 months in the top third are

Sigh.

rgb

1 in 2.6 is close enough to 1 in 1.6 million for the average climate alarmist; what’s your beef? Nothing that a little data adjustment won’t fix.

Willis, can I please borrow your brain for a few days? I could make a gazillion dollars with all that extra smarts and speed of thought. I can’t even comprehend the amount of work and effort it took to come up with the line of analysis, let alone sit down with the data. But then, I still don’t have your brain. But then, unfortunately, not many scientists do either.

Thank you, Sir.

Say you investigate a sample of teenage boys. You will find that each has a height. Do the measurements. Run the numbers.

Now investigate a sample of time intervals with lightning strikes. Not every time interval has a strike. There’s the rub!

Your example (people lined up at a bank teller’s window) is the same idea. Somewhere (long ago), I believe I heard or read that this is exactly why the Poisson distribution was invented. Not that many folks make it that far in their education.

Willis, I think you are one of the most clever people I have ever read……very well done, and keep up the good work.

Robert

This whole 1 in 1.6 million issue has been great entertainment. It’s also been an eye opener, to see so many climate scientists struggling with a fairly basic statistical concept.

Nice work, Willis – I can’t see a flaw in your work, but it’s been a long day, so I’ll look again in the morning for the sake of due diligence. As I head off to bed, the data has Poisson written all over it, and that doesn’t leave a lot of wiggle room.

I’m not getting the controversy. We’re not dealing with a stationary process here. The guy said: “These are ridiculously long odds, and it is highly unlikely that the extremity of the heat during the past 13 months could have occurred without a warming climate.” No kidding. And, we are currently at a plateau in temperature which is the result of combining the steady warming since the LIA with the peak of the ~60 year temperature cycle. So, what’s the gripe? The globe has been warming. Everyone knows it’s been warming. The disagreement is over the cause.

It would appear that inadvertently Mr. Masters, or whoever provided him with his numbers, has arrived at a ratio that is quite correct, the only problem being the ratio is applied to the wrong query. If you ask “what are the odds of a story about a human caused plague of horrendous heatwaves, which appears in any Lamestream Media source, NOT being complete BS?” the ratio of 1 in 1.6 million appears, to my eye at least, to be just about spot on.

Brilliant work, Willis.

Bart: The point is that the claimed 1 in 1.6 million chance of this June-to-June “event” is BS, and regardless of whether there has been warming or not, this “event” is indistinguishable from random.

as in the song:

You may have it warm in the US. Here in Europe we have a rather cold and wet early summer.

Is there also a 1 in 2.6 probability of experiencing a run of 13 consecutive months among the coldest within a 116-year period? The wettest? The windiest? The cloudiest? The …?

Anthropogenic warming seems to be more frequent in Northern America than elsewhere (or there are more blogs telling this there than elsewhere).

Robert Brown says:

July 10, 2012 at 10:24 pm

Thanks as always for your comments, Robert. I actually feel sorry for Jeff Masters, because I’ve made foolish public errors myself. It’s not easy, it’s painful, and it is the risk we all take when we blog. So I’ll pass on contacting him, it would look like kicking a man when he’s down.

I suspect he will hear about my analysis in any case. Most people who are truly interested in climate science read WUWT, regardless of their position on the AGW supporter/skeptic continuum, if only to find out what fools these mortals be today.

However, I doubt that in general I am “actually qualified to work with statistics”, as my knowledge of statistics (as with many things) is quite wide but is only deep in some places. However, I am well served by my habit of starting (and perhaps even finishing) by using my Mark One Eyeballs. I get as much data as I can, stretching back as far as I can, and then I put it up on the silver screen and I just think about it. I give it the smell test. I re-plot it from some other angle; I’m a graphical thinker. I give it the laugh test. I use color to give it another dimension. If necessary, I look at and consider each and every case or station record or proxy of the data individually. The work is often very boring, but also has flashes of fire and insight.

I look at the data first, before theorizing about the data, or calculating the statistics of the data, or analyzing the situation using pseudo data or red noise, or doing a Monte Carlo analysis. I just look at the data in as many graphical representations as I can dream up. Then I look at it again.

I look because at the core, I’m trying to understand the data, not to measure its waistline. Oh, I may get around to that, but before I pull out the cloth tape and start taking the measurements, I want to know the habits and the relationships and the linkages and the interactions and ultimately the very form and meaning of the data.

Because at the end of the day, statistics are a model of reality. As a result, picking the appropriate model for the situation is the central, crucial, indispensable, and often overlooked first step of any statistical analysis. If you don’t start out with the correct understanding of what’s going on, all the statistics in the world won’t help you. And for me, the only way to get that understanding is to look at the longest dataset I can find, from as many angles as I can, and to think about the data in as many ways as I can.

All the best to you,

w.

Damn, a Poisson distribution. I always knew these climatologists were hiding something fishy!

Very good analysis Willis. It’s a shame that some of these cargo cult scientists are not capable of applying appropriate maths.

Doesn’t this analysis basically point out that the warming trend is far smaller than the magnitude of variations? The fact that it peaks between 4 and 5 months tells us that the dominant variation is of that timescale. This is what we commonly call seasons.

None of this is surprising, but it still does a very good job of pointing out how ridiculous and inappropriate Masters’ comment was.

Maybe he should have thought about it before using it. The fact he apparently got it from someone at NOAA does not excuse his need to think whether it makes sense before using it himself.

AGW: a chance of 1 in 1.6 million of being close to a correct analysis …

Six months of the recent record heat wave was a once-in-800,000-year event????

There have been 372,989 correctly recorded daily high temperature records in the US since 1895. 84% of them were set when CO2 was below 350ppm.

http://stevengoddard.wordpress.com/2012/07/08/heatwaves-were-much-worse-through-most-of-us-history/

http://stevengoddard.wordpress.com/2012/07/08/ushcn-thermometer-data-shows-no-warming-since-1900/

Lots of things are very rare….

http://stevengoddard.wordpress.com/2012/07/11/1970s-global-cooling-was-a-one-in-525000-event/

http://stevengoddard.wordpress.com/2012/07/11/last-ice-age-was-a-one-in-a-google-event/

sheesh!

Poisson is by far the most important and least known mathematician for all aspects of real (non-relativistic) life.

Bart says:

July 10, 2012 at 11:32 pm

The controversy is that the “ridiculously long odds” he refers to are wildly incorrect …

w.

Robert Brown

I think you make a very good point when you say that few people are qualified to talk about statistics. That is so even on quite simple statistics, but at the sort of level of tree ring analysis and many other facets of climate science I think the maths is quite beyond most scientists as it is a special separate field that they are unlikely to have learnt in detail.

It would be interesting to know who is actually qualified to interpret statistics arising from their work or whether they get in genuine experts to check it through. I suspect the number that do is very small indeed.

tonyb

Willis

I have to be a pedant here but what you plotted is a bar chart not a histogram 😉

I think the main problem with pushing out the trite case of probability with a time series is that you have no base. For example, where, and over what length of time, would you determine a norm? He assumes that the time series is not second-order stationary (why?) and hence that you can get a change in the distribution with time (drift), which is implicit in his (paraphrasing) “…you can only get this if you’re in a warming world…”. This assumption is only the result of incomplete information, as it only appears as such when choosing a small portion of the time series. So it is absolute nonsense; in my view there is no need for any more statistics, as it is completely flawed because of incomplete data, although you make a more appropriate case here.

Since, as you say, “one case with all thirteen in the top third is actually below the number that we would expect given the size and the nature of the dataset …” it follows that warming climate reduces the likelihood of severe weather. Which is what the “heat gradient flattening” POV (mine) predicts.

What Willis missed is that Jeff sold his website to the Weather Channel for some beaucoup buxes, and he needs to deliver this sort of bs in a technical fashion so they can feel they are getting a good deal. Model that in your Poisson distribution, big guy!

Just pulling your leg, Willis.

Willis

Nice work. As Phil Jones is unable to use a spreadsheet, I doubt if his high-profile work is statistically sound. Don’t know about others like Mann, as there is so much sound and light surrounding his work. I see him as the linchpin, so his expertise in statistics and analysis is obviously highly relevant.

tonyb

Mr Masters is an intelligent weatherman, so he must already know that the US heatwave is primarily due to blocking, as was the Moscow heatwave a couple years ago.

Therefore the real question is “does CO2 cause an increase in blocking events?”.

Mr Masters may be able to tell me otherwise, but I’ve seen no hint that this is the case from the literature. But I’ve seen many times that low solar activity is linked with increased jet stream blocking.

I would therefore put the onus on Mr Masters to show that the null hypothesis is false: ie this event is related to solar activity, given the Ap index recently hit its lowest value for over 150 years.

It surely looks like a Poisson process and the 1 in 1.6 million figure is absolutely bollocks, but isn’t the interesting question how / if the lambda has changed over the years? Let’s say calculated from the data of a 30-year-or-so sliding period?
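One way to look at whether lambda drifts is sketched below. Because the maximum-likelihood estimate of a Poisson lambda is the sample mean, this takes the mean window count over a sliding block of roughly 30 years of window start dates. The input list and names are hypothetical. Overlapping 13-month windows are strongly correlated, so neighbouring estimates are not independent, and naive confidence intervals on them would be too narrow.

```python
def rolling_lambda(window_counts, span=360, step=12):
    """Poisson lambda estimates over a sliding block of windows.

    window_counts: chronological top-third counts, one per 13-month window
    (hypothetical input). The maximum-likelihood estimate of a Poisson lambda
    is the sample mean, computed here over `span` consecutive windows
    (360 monthly start dates, about 30 years), advancing `step` windows at a time.
    """
    estimates = []
    for start in range(0, len(window_counts) - span + 1, step):
        block = window_counts[start:start + span]
        estimates.append((start, sum(block) / len(block)))
    return estimates
```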

Unless you are certain of the underlying distribution, curve fitting and reading off the tails may lead to large errors. For example, you might try fitting a Burr distribution. It would be interesting to see if you get a similar result.

2.6 times in 1374 trials is a 1 in 528 chance.

Curiously, I happen to turn 44 years old this year which is also 528 months. (12 * 44)

cd_uk says:

July 11, 2012 at 1:45 am

Naw, you don’t have to be a pedant, cd_uk, it’s a choice, and one I’d advise you to give a miss.

But if you are going to be a pedant, you should at least be a good one. Mathworld says a histogram is:

Since that is exactly what I’ve done, it is indeed a histogram. Take a look at the Mathworld example, looks like mine.

w.

PS—By the way, what I believe you are talking about is generally called a “column chart”. In a “bar chart” the bars run horizontally, while in a column chart they run vertically.

The original mis-use of statistics is the sort that landed an innocent woman in prison for child abuse. Though eventually exonerated and released, she died by suicide. That’s how dangerous these people are!

It looks like a very nice analysis, thanks for it.

I’d just like to see a somewhat more solid proof that the Poisson distribution is the correct one to use in this case than “The first thing I noticed when I plotted the histogram is that it looked like a Poisson distribution.”. Both the number of extreme records and the number of their streaks are going to decline over time on normal data, but I suspect streaks are going to decline way faster, because we’re working with a fixed interval rather than a portion of the record. Is the Poisson distribution invariant to that?

Reading Willis’ response to rgb at 12:02 above puts me in mind of Richard Feynman talking about what his wife said with regard to Feynman joining the team to find out what happened to bring Challenger down….something along the lines of “You better do it…you won’t let go….you’ll keep circling around, looking at it from a different perspective than others…and it needs doing.” Ah, Willis, what a treasure you are….and in such good company.

I do agree with the general feeling that the “ridiculously long odds” are on very shaky ground, but I don’t agree with Willis’ statistical model. It may be that for n distinctly smaller than 13 the distribution of “n months in top third out of 13” is roughly approximated by a Poisson distribution, but it’s a leap of faith that the approximation is valid for n equal to 13. There are bound to be edge effects.

For instance, the model predicts that “14 months out of 13 in the top third” happens about once in 1374 tries. On the other hand, we can be absolutely sure that this won’t happen until we get two Mondays in a week. This is an edge effect. The model breaks down for completely trivial reasons as soon as n exceeds 13, so we should not trust it too much for n equal to 13.

Great analysis. What really saddens me is that so many, after years of study, appear not to have done so well at basic statistics, which is part of most courses where some analysis is likely to be required. Maybe it is just that many climatologists can’t do statistics. I don’t know; I’m just looking for some rational explanation for the outrageous claims of so many over the last few years.

Willis – Beautiful work simply and elegantly explained. Entirely as we’ve come to expect from you.

Such calculations as p^N are based on independent random events, which these are not.

With 20- and 60-year oscillations, high events will occur roughly 60 years apart, and the chance of one being higher than the next is 1 / [the number of such events].

Over the last “118 years” there have been 3 (maybe even only 2) such events, placing the odds of 13 months of such significant highs occurring (without warming) sometime over this short time period, at the height of the harmonic cycle, at close to 1 in 3.

With a small natural warming, such as has been going on since the LIA, the chance is very close to 100%.

So if there is a claim that 13 months of conjoined, not independent, warm weather at the peak of this 60-year cycle is proof of a small natural warming trend (since 1895 or even the LIA), the chance of that is approximately 66%.

To be fair, though, such limited datasets are usually rated against [N-1] events, making even that an overstatement of the chance: more like 50%.

Statistics is for those who understand its intricacies, and I do not. It appears to me that extreme events may happen (but not necessarily) if certain principal conditions are satisfied. From my (non-climate-related) experience, this is most likely to happen with cyclical events when one of two extreme plateaux (plural?) is reached. If there is a 65-year cycle in climate events (AMO at peak, etc.), then it looks as if the conditions are right for such events to be more frequent than usual.

Just a speculation of an idle mind.

So just to clarify: the actual odds of a running 13-month “month in top third” streak, based on historical observational data, are 2.6 out of 1374, or 1:528 (ish)?

Maybe I should wait on Jeff opening a betting shop.

Willis, you say that picking the appropriate model for the situation is the central, crucial, indispensable, and often overlooked first step of any statistical analysis.

But the Poisson distribution is unbounded at the upper end. So the distribution that you fitted to your histogram also suggests that we should expect to find one instance (0.939) of a 13-month period in which 14 of the temperatures are in the top third. And it wouldn’t be that surprising to find a 13-month period with 15 (expected frequency 0.326) or 16 (expected frequency 0.106) of the individual months in the top third.
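The comment's numbers can be checked directly from the fitted Poisson in Figure 2 (lambda = 5.213, 1374 windows). The sketch below reproduces the expected counts of about 0.939, 0.326 and 0.106 for 14, 15 and 16 months. It also shows that roughly 1.4 windows' worth of the fitted probability sits on outcomes that are impossible for a 13-month window.

```python
import math

LAM = 5.213       # fitted lambda from Figure 2
N_WINDOWS = 1374  # number of contiguous 13-month windows


def expected(k):
    """Expected number of windows with exactly k top-third months under Poisson(LAM)."""
    return N_WINDOWS * math.exp(-LAM) * LAM ** k / math.factorial(k)


for k in (13, 14, 15, 16):
    print(k, round(expected(k), 3))

# Expected number of windows with MORE than 13 top-third months,
# which is impossible for a 13-month window.
impossible = N_WINDOWS - sum(expected(k) for k in range(14))
print(round(impossible, 2))
```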

Does this really sound like the appropriate model?

Also, lambda in the Poisson distribution is the expected value of the mean of the data. So if you fit a Poisson distribution, you are determining that the mean number of months falling in their top third in a 13-month period is 5.213. (Note: the fact that you arrive at 5.213 seems odd to me, as I’d expect only 4.333 months out of every 13 on average to be in the top third. Am I missing something here?). However, it seems to me that your discovery that the mean of your distribution (5.213) remains the same when you oversample the same dataset is unsurprising. And it doesn’t really endorse the choice of Poisson as a distribution.
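There is a simple reason why the mean barely moves when every 13-month window is used. Each interior month falls inside exactly 13 of the overlapping windows, so the mean count over all windows is close to 13 times the fraction of months that are flagged. Only the few months near either end of the record are counted fewer times. If about a third of all months are flagged, as they would be when the terciles are defined over the same record, the sample mean sits near 13/3 ≈ 4.33. A least-squares fit to the histogram is a different estimator and can land elsewhere. Here is a quick check, assuming a hypothetical flat list of monthly flags:

```python
def window_mean_vs_fraction(monthly_flags, width=13):
    """Return (mean count over all contiguous windows, width * flagged fraction).

    monthly_flags: chronological list of booleans, one per month (hypothetical input).
    """
    counts = [sum(monthly_flags[i:i + width])
              for i in range(len(monthly_flags) - width + 1)]
    window_mean = sum(counts) / len(counts)
    fraction = sum(monthly_flags) / len(monthly_flags)
    return window_mean, width * fraction
```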

Nice work Willis. I like your approach.

So in over 1300 13 month periods, there has been just one where every month is in the top 3rd.

Yes, this was the right way to do the math (critic of earlier posts).

Have to look at the details more, like the reasoning for using 13 months, and for using the warmest third instead of, say, the warmest 10 percent, but at least the tools are good.

Willis, are your data and his raw, or after being mangled by Quality Control algorithms?

This sort of statistical mistake is reminiscent of certain other problems that do not necessarily follow simple intuition about stochastic events. The famous ‘birthday’ problem comes to mind: in a group of 50 people, there is about a 97% probability of two having the same birthday. In the case of the birthday problem, it is the difference between the probability of two particular people having the same birthday compared with any two people in a larger group. It raises the interesting question: if you included all possible combinations of six months out of a sequence of thirteen (rather than sequential months), would you arrive at an even higher probability? I suspect so, despite the fact that you would be reducing the correlations by spreading out the sample.
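The birthday figure follows from multiplying the chances that each successive person misses all earlier birthdays. This sketch assumes 365 equally likely days and ignores leap years. It gives about 51% for 23 people and about 97% for 50.

```python
def prob_shared_birthday(n_people, days=365):
    """Probability that at least two of n_people share a birthday.

    Assumes `days` equally likely birthdays and ignores leap years.
    """
    p_all_distinct = 1.0
    for i in range(n_people):
        # Person i must avoid the i birthdays already taken.
        p_all_distinct *= (days - i) / days
    return 1.0 - p_all_distinct


print(round(prob_shared_birthday(23), 3))
print(round(prob_shared_birthday(50), 3))
```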

Major cities might almost have been designed to set higher max and higher min temperatures. Paint them black and carbon dioxide could be awarded another record-breaker’s medal – by dumb judges.

Willis Eschenbach says:

“I look because at the core, I’m trying to understand the data”

And that makes all the difference! It seems to me that the climate change action advocates are not trying to understand the data but are trying to understand how to use the data to promote the cause.

Nice work debunking this “wolf cry”.

Also, even if he were right about the stats, evidence for a warming climate is neither proof that it’s man-made nor a measure of how dangerous it might be.

Good job Willis.

The same weather pattern has been in place in the south for at least 30 years.

I recall my wife getting her citizenship on the steps of Tom Jefferson’s house in 1977 in 105 degree heat. Happens every year just a little more intense this year. Shade is strategic terrain.

Willis

I can see your conversational skills are about as inept as your stats.

Histograms deal in bins, not categories: i.e. ranges 0-1, 1-2, 2-3; hence the x-axis labels should be at each tick, not between ticks. You are plotting categories (0, 1, 2, 3, 4), so where’s the bin range? As for Mathworld, it should know better. If you don’t believe me, look at even elementary statistical packages such as Excel: histogram vs bar chart functionality. Note they are not the same.

This analysis seems useful, but needs to be improved. Presumably the data include temperatures from the last 50 years, which should show a signal from increasing global warming. It seems to me the appropriate analysis would be to fit the Poisson distribution to an unbiased sample, early in the 20th century, and use that fit to predict how often you would expect a 13 month consecutive warm period. I would imagine the odds would be small.

BTW Willis, according to Mathworld the bar charts look a lot like yours too; oh yeah, and they are plotted both vertically and horizontally.

http://mathworld.wolfram.com/BarChart.html

REPLY: And their histograms look like Willis’ too: http://mathworld.wolfram.com/Histogram.html

The grouping of data into bins (spaced apart by the so-called class interval) plotting the number of members in each bin versus the bin number. The above histogram shows the number of variates in bins with class interval 1 for a sample of 100 real variates with a uniform distribution from 0 and 10. Therefore, bin 1 gives the number of variates in the range 0-1, bin 2 gives the number of variates in the range 1-2, etc. Histograms are implemented in Mathematica as Histogram[data].

See also: Frequency curve http://mathworld.wolfram.com/FrequencyCurve.html

A smooth curve which corresponds to the limiting case of a histogram computed for a frequency distribution of a continuous distribution as the number of data points becomes very large.

Care to continue making a fool of yourself? – Anthony

Thomas

I think we’re both arguing the same point.

Standard stats and probabilities – derived therefrom – are often carried out on time series with little regard given to the degree of stationarity, drift and/or periodicity. To suggest that an event is somehow more probable in one part of the series than another would suggest that it is not second-order stationary (the pdf changes along time). But this can only be ascertained if one has enough data to identify drift or periodicity. We don’t, so these types of stats aren’t really appropriate, because we can’t really work out the frequency of the events. Willis has given this a go, and it does look as if one could question the whole argument.

“However, it seems to me that your discovery that the mean of your distribution (5.213) remains the same when you oversample the same dataset is unsurprising. And it doesn’t really endorse the choice of Poisson as a distribution.”

A Poisson is close enough for the task, and oversampling is fine for the task. The task is rhetorical: to illustrate that when you shop through a dozen possible 13-month spans, there will be one of the 12 that happens to stray in the direction of your pet theory, as well as a few that stray in the opposite direction. You pick the one of 12 that best fits your pet theory, then run with it. If the news report had come out with calendar years, Dec-Dec, and said the Dec-Dec years were trending toward global warming, most of us would accept a Dec-Dec span as not eyebrow-raising.

June-June sounds OK because we are thinking “summer.”

If the analysis had said “Nov-Nov,” it would be a bit more odd, and we would be thinking, “why examine a Nov-Nov year? Why not Dec-Dec, which is nearly the same span just more natural-sounding, or why not Jan-Jan?”

When you go the extra mental step and think, “what is the justification for Jun-Jun?”, then it should occur to you that, among other reasons for selecting Jun-Jun, it might be cherry-picking.

Then, to test this “cherry-picking” hypothesis, all you have to do is take one or two alternative analyses and see whether the data remain similar or stray away from the pet theory.

You can then either calculate a few other 13-month spans, or run all 13-month spans.

Either way serves the purpose of seeing whether Jun-Jun is the most favorable 13-month span, out of the 12 available, to select in order to cherry-pick, or whether the Jun-Jun finding happens to be robust.

So, the RMSEA could be calculated for all possible 13-month spans, and compared to the Jun-Jun.

Also, it would be nice to see the Poisson parameters and RMSEA for each of the 12 13-month spans, and to run a chi-square test for significant differences between a few match-ups.
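A minimal sketch of the per-span comparison follows. It groups every 13-month window by its starting calendar month and reports the mean count for each group, which is the maximum-likelihood Poisson lambda for that start month. It assumes a hypothetical flat, chronological list of monthly flags. The RMSEA and chi-square comparisons suggested above would be further steps and are not shown.

```python
def lambda_by_start_month(monthly_flags, first_month=0, width=13):
    """Mean top-third count (Poisson MLE of lambda) for windows grouped by start month.

    ASSUMPTION: monthly_flags is chronological, and first_month is the calendar
    index (0 = January) of monthly_flags[0]. Index 5 is June, i.e. June-to-June.
    """
    groups = {m: [] for m in range(12)}
    for i in range(len(monthly_flags) - width + 1):
        start = (first_month + i) % 12
        groups[start].append(sum(monthly_flags[i:i + width]))
    return {m: sum(c) / len(c) for m, c in groups.items() if c}
```

Comparing `lambda_by_start_month(flags)[5]` (June-to-June) with the other eleven values shows whether June-to-June stands out.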

For the purposes of testing whether there is a warming trend, either way is sufficient to test Jun-Jun as either a fluke on the high end or as actually representative. Choice of Poisson is also not mission-critical for this.

The truth is that there may be distributions that fit the data better, and even theoretically match better, such as having an upper bound. But the reality is that nature follows her own course, and is not bound by the limits of any of our mathematical models. We will always have an error of approximation when developing a model to maximally account for distributions of data from nature. We bring our models in to help proxy nature. Nature does not “follow” our models, as much as we might get seduced into believing this. Not even fractals.

The Eastern US makes up about 1% of earth’s surface, and about 3% of earth’s land surface. The real problem to be tackled was: what is the probability that an arbitrary area making up 3% of earth’s land surface will have a period of 13 months (why not 12 months or 17 months?) with all temperatures in the top 1/3 (not 1/6 or 2/5) of temperatures for that month over the period 1895 to 2012 (why not 1860 to 2012?)? Jeff Masters’ computation was total data snooping.

As I sadly learned many years ago, when I was single and had money to blow on horse racing, it’s easy to get estimated probabilities of 1 in 10,000 or one in a million by looking for all imaginable correlations in PAST data; the calculations are futile unless they can reliably predict FUTURE data.

Thanks Anthony

Yip, that’s right, that was a response to Willis’ citing of MathWorld. So tell me, did you get your stats training from a website as well?

Here’s a few:

http://en.wikipedia.org/wiki/Histogram

Tell me: because they don’t look like Willis’, is Willis wrong?

Your other sets of graphs are histograms; your curve is a probability distribution function, which is computed from the standard deviation and the mean (go look it up), and you don’t need a histogram to plot it. But what is plotted above is not a histogram. Try using the histogram tool in Excel and see what the plot looks like: not like the ones above.

Otherwise don’t take my word for it ask another statistician.

REPLY: Well I think you are being pedantic. Some comic relief might help:

Replying to Nigel Harris:

Also, lambda in the Poisson distribution is the expected value of the mean of the data. So if you fit a Poisson distribution, you are determining that the mean number of months falling in their top third in a 13-month period is 5.213. (Note: the fact that you arrive at 5.213 seems odd to me, as I’d expect only 4.333 months out of every 13 on average to be in the top third. Am I missing something here?)

Willis has this whole thing upside down. He’s fitting lambda to the data, rather than comparing the data to the known lambda (lambda is simply the probability of success times the number of events, and thus has by definition to be 13/3). That means his conclusion is exactly backwards.

Reasoning correctly, we know that if there is no autocorrelation between hot months, then we should get a Poisson distribution with lambda = 4.3333. We don’t, instead we have a significant excess of hot streaks. All this proves that it’s a non-Poisson process, i.e. that there is some autocorrelation, and the temperature in a given month is not independent of the temperature of the surrounding months! Having thus proved it’s non-Poisson, you can’t then draw further conclusions using the Poisson distribution.

It doesn’t prove anything about global warming one way or the other.

Put another way, if he does the same for cold streaks, he’ll find the same thing – an apparent lambda > 4.333, indicating autocorrelation in the data set. And that won’t say anything about global warming either.

If, on the other hand, he were to do some further analysis (say, take the dates of all cold streaks >9 months and all hot streaks >10 months, and see if there’s a consistent trend towards more hot streaks than cold streaks), that would be an actual result.

Willis,

I’m still puzzled why your distribution has such a high mean value. You haven’t provided a link to your data, but the first chart is easy enough to parse: there was one 13-month period with no top-tercile months, six with one month in the top tercile, nine with two top-tercile months, and so on. By my calculations, you show a total of 597 months in the top tercile. And that’s out of 116 13-month periods = 1508 months in total. But this implies that 39.6% of months were in the top tercile, which surely cannot be so.

Nigel
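Nigel’s arithmetic can be checked in a few lines. This is a sketch: the 597 total is his reading of Figure 1 rather than a number from Willis’ data file, and the variable names are illustrative.

```python
# Check Nigel's reading of Figure 1 (597 top-tercile months is his count).
total_top = 597            # top-tercile months summed over all windows
windows = 116              # June-to-June 13-month windows
slots = windows * 13       # month-slots examined across all windows

share = total_top / slots              # fraction of slots in the top tercile
mean_per_window = total_top / windows  # implied mean per 13-month window
expected_mean = 13 / 3                 # mean if exactly 1 slot in 3 is top-tercile

print(slots, round(100 * share, 1), round(mean_per_window, 2), round(expected_mean, 2))
```

The implied mean of roughly 5.15 months per window sits close to the fitted λ in Figure 1 and well above 13/3 ≈ 4.33, which is exactly the gap Nigel is asking about.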

We should remember we are dealing with the “Adjusted” data here.

The adjustments have moved the 1930s temperatures down by about 0.49C in the most recent analysis of how this varies over time.

So here is what the Raw and Adjusted US temperatures look like over time (using an 11-month moving average, given how variable the monthly records are):

http://img692.imageshack.us/img692/6251/usmonadjcjune2012i.png

And then the Adjusted version, monthly and as an 11-month moving average. The monthly temperature anomaly for the US can be +/- 4.0C. June 2012 was only +1.03C, so much less than the historic level of variability.

[But it was warm over the last year, although the Raw temperatures were just as warm in 1934, close in 1921, 1931, 1953 and 1998].

http://img535.imageshack.us/img535/8739/usmoncjune2012.png

“What Willis missed is that Jeff sold his website to the weather channel for some beaucoup buxes and he needs to deliver this sort of bs in a technical fashion so they can feel they are getting a good deal. Model that in your Poisson distribution, big guy!”

🙂 Alas, some things even Willis can’t calculate!

Willis,

Ah, I see my error: every June is counted twice. So if 94 out of 116 June months were all in the top tercile, then that would explain how you could have 597 top-tercile months.

But that seems to imply that you have analysed a slightly different issue to the one the ridiculous Jeff Masters quote was about.

What Jeff Masters actually said was: *Each* of the 13 months from June 2011 through June 2012 ranked among the warmest third of *their* historical distribution for the first time in the 1895 – present record.

In other words, June 2011 was in the top tercile of all Junes, July 2011 was in the top tercile of all Julys and so on.

You seem to have looked at the probability of finding a 13-month period in which all 13 months are in the top tercile of all historical monthly temperatures (which most Junes will be), rather than each month being in the top tercile of its own monthly history.

I don’t need the data to say what the result would be. If we define the problem as finding the probability of a 13-month period with all 13 months in their respective top terciles, then the best fit Poisson distribution will (by definition) have lambda of 13/3 or 4.333, which means the expected number of 13-month periods with N=116 is not 0.2 but 0.05. And with N=1374 it is 0.55.
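The expected counts in that paragraph follow directly from the Poisson probability mass function, P(k) = e^(−λ) λ^k / k!, multiplied by the number of intervals N. A minimal sketch, using λ = 13/3 from the definition of a tercile and λ = 5.206 from the fit in Figure 1; the function names are hypothetical.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """P(exactly k events) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def expected_intervals(k: int, lam: float, n_intervals: int) -> float:
    """Expected number of intervals, out of n_intervals, showing exactly k events."""
    return n_intervals * poisson_pmf(k, lam)


# lambda fixed by definition: 13 months, each with a 1/3 chance of being top-tercile
by_definition_116 = expected_intervals(13, 13 / 3, 116)    # June-to-June windows only
by_definition_1374 = expected_intervals(13, 13 / 3, 1374)  # every 13-month window
# lambda fitted to the June-to-June histogram (Figure 1)
fitted_116 = expected_intervals(13, 5.206, 116)

print(f"{by_definition_116:.3f} {by_definition_1374:.3f} {fitted_116:.3f}")
```

With λ = 13/3 this gives about 0.05 for N = 116 and about 0.55 for N = 1374; the fitted λ = 5.206 gives about 0.2 for N = 116. As the next paragraph notes, the Poisson model also puts a tiny probability on impossible outcomes (k > 13).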

Although the Poisson distribution is clearly not a “correct” model, as it allows for logically impossible results such as 14 top-tercile months out of 13, it does describe the data pretty well as far as it goes. (The cumulative probability of all the logically impossible outcomes happens to be very small indeed).

But in the end, all this analysis says is that an event which happens to have occurred just once in this particular historical dataset is statistically expected to have occurred about once, based on the characteristics of this particular historical dataset.

Lucia on the other hand, has attempted to answer the correct question, and although she seems to have gotten into something of a muddle, she seems to now be of the opinion that the likelihood of 13 top-tercile months in a row (each in the top tercile of their own distributions, that is), in a dataset with the same general characteristics as the US lower 48 temperature record, but in the *absence of a forced trend*, is perhaps around 1 in 134,000. So Masters was indeed ludicrously wrong, but not perhaps by nearly as much as you suggest.

This is a keeper. Please add this to the Climate Fail List in the main menu.

Okay on second thought…

In this analysis, each “record” month is added to 13 different consecutive intervals. For instance, if there is an isolated streak of three consecutive record months, it will add to the statistic:

– two 13-month intervals with 1 record month

– two 13-month intervals with 2 record months

– eleven 13-month intervals with 3 record months
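The tally for an isolated streak can be checked by brute force: slide a 13-month window over a series and count how many record months each window contains. A sketch over a hypothetical series holding a single three-month streak, padded so every window fits; the function name and series are illustrative, not Willis’ code.

```python
from collections import Counter


def window_counts(record: list[bool], width: int = 13) -> Counter:
    """For every window of `width` consecutive months, count the record months
    it contains; return how many windows have each nonzero count."""
    tally = Counter()
    for start in range(len(record) - width + 1):
        k = sum(record[start:start + width])
        if k:
            tally[k] += 1
    return tally


# Hypothetical series: one isolated streak of 3 record months,
# with 20 ordinary months on each side.
series = [False] * 20 + [True] * 3 + [False] * 20
print(window_counts(series))
```

The streak touches 15 windows in all (13 + 3 − 1): two with one record month, two with two, and eleven with all three.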

Each streak is therefore added to the statistic with all of its “heads” and “tails”, which definitely affects the shape of the resulting histogram. This does not mean your approach is wrong, but I definitely think that it deserves a solid mathematical proof that a Poisson distribution is appropriate here.

In my personal opinion, the analysis should concentrate on streaks of “record” months and evaluate their relative frequency, i.e. “if there are N streaks of M consecutive record months, then we can expect X streaks of (M+1) consecutive record months.” And of course it would still deserve a solid mathematical proof for whatever function is used for the approximation.

Next step should probably be to evaluate the evolution of these proportions over increasing length of the record. But that may actually lead you to conclusions you don’t want to see, such as that the chance to see a 13-month streak early in the record is in fact much higher than to see the same streak late in the record. Or, we can say, the chance to see a 13-month streak late in the record is way lower than the chance to see it anywhere in the record.
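The streak-based approach can be sketched as follows: collapse a monthly true/false “record” series into maximal runs, then ask what share of runs reaching length M go on to reach M + 1. The function names and toy series are hypothetical. If months were independent 1-in-3 events, each ratio would hover near 1/3 (apart from end-of-record effects); month-to-month persistence would push it higher.

```python
from collections import Counter
from itertools import groupby


def streak_lengths(record: list[bool]) -> Counter:
    """Count maximal runs of consecutive record months, keyed by run length."""
    return Counter(
        sum(1 for _ in group) for is_record, group in groupby(record) if is_record
    )


def continuation_ratio(streaks: Counter, m: int) -> float:
    """Share of streaks reaching length m that go on to reach length m + 1."""
    at_least_m = sum(n for length, n in streaks.items() if length >= m)
    at_least_next = sum(n for length, n in streaks.items() if length >= m + 1)
    return at_least_next / at_least_m if at_least_m else float("nan")


# Toy series (hypothetical): runs of length 2, 1 and 3.
demo = [True, True, False, True, False, True, True, True, False]
streaks = streak_lengths(demo)
print(streaks, continuation_ratio(streaks, 1), continuation_ratio(streaks, 2))
```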

And I won’t even mention the hell you could get to if you tried to perform the same analysis with record lows and compared these distributions and evolutions with each other.

Returning to the original claim, I think the problem lies in the statement “assuming the climate is staying the same as it did during the past 118 years”. What does “staying the same” mean? Does it mean temperature will continue to rise at the same speed? Or does it mean it will stay within the same boundaries? Depending on which interpretation of this confusing statement you use, you can get very similar or very different results.

Steve R says:

Bart: The point is that the claimed 1 in 1.6 million chance for this June-to-June “event” is BS, and regardless of whether there has been warming or not, this “event” is indistinguishable from random.

Whatever that means, that is not what Willis has shown.

To demonstrate that this event is indistinguishable from randomness about a non-warming trend, one would have to demonstrate that such an event is likely, given a random dataset with zero trend. The question then arises as to what version of a random dataset is appropriate to use to estimate the “random” likelihood from. Masters used white noise with zero trend. Lucia initially favored something redder, with a high degree of autocorrelation, still with zero trend. These lead to very different estimates of the probability of such an event being the result of randomness about a zero trend. Lucia has since decided that a more pinkish hue is probably more in tune with the assumptions of the problem, leading to an estimate that is closer to Masters’ original.

Apart from their quibble over how much autocorrelation to use, both of those analyses are incorrect formulations of the question. They both compare the probability of the current event against the assumption of zero change in climate, none whatsoever, over the last 118 years. The only people that claim that there has been no change whatsoever in global temp over 118 years live in the vivid imaginations of the alarmist profiteers. Zero change is not on the table, so drawing comparison to the probability of an event from a zero change model is … well … pointless. Unless your point is to make alarmist propaganda aimed at people who don’t understand statistics. In that case it is effective, just grossly dishonest.

That was what Masters was doing. In an attempt to win a minor battle over geeky stats territory, Lucia’s acceptance of Masters’ comparison to an absolutely unchanging climate ceded him victory in the propaganda war. Turns out, she mostly lost the geeky stats battle, too. The better choice would have been to demonstrate the pointless nature of his assumptions, rather than accepting them for the sake of a losing argument. But at least her approach was to pick a model of randomness associated with a claim about how climate works, and calc the odds of that particular randomness producing the observed result. That is how “distinguishing observed events from randomness” is properly done.

Masters got that part right, too. His fault is that he modeled a claim that no one is making – zero climate change whatsoever. The claim he modeled holds that there is no trend in surface temp, nor even any non-random variation in temp (like a cycle), for the last 118 years. It is a strawman claim. He then proceeds to compound his offense by pretending that:

1. “has warmed in the past” means “is warming now” and

2. ruling out “no climate change whatsoever” means “catastrophic man made global warming we are all going to die if we don’t follow what Glorious Leader wants us to do”.

Whereas Lucia addressed Masters’ argument ineffectively, Willis simply ignores it altogether. He didn’t analyze a model of a claim about how climate works, to determine if the observed event was consistent with that claim. He just fit a curve to the observed events, and found that one of the observed events is near to the curve that he fit to the observed events. Doesn’t say anything whatsoever about the validity of Masters’ claim.

w.-

Have you seen http://dotearth.blogs.nytimes.com/2012/07/10/cool-pacific-pattern-shaped-2011-weather-extremes-heat-dominates-u-s-in-2012/ which contains these quotes from the National Climate Extremes Committee’s latest report?

“La Niña-related heat waves, like that experienced in Texas in 2011, are now 20 times more likely to occur during La Niña years today than La Niña years fifty years ago.

– The UK experienced a very warm November 2011 and a very cold December 2010. In analyzing these two very different events, UK scientists uncovered interesting changes in the odds. Cold Decembers are now half as likely to occur now versus fifty years ago, whereas warm Novembers are now 62 times more likely.”

This sets off my BS alarm — clanging! I don’t think it is even statistically possible to develop a method to arrive at such conclusions, certainly not ‘predictive odds’.

Can you comment or clarify?

After reading this, I cannot get over the idea that Jeff Masters is the Maxwell Smart of Climate Science…

Another nice job by Willis, but I am not sure saying he is smarter than a climate scientist is high praise. 🙂

One man’s meat is another man’s Poisson.

A Poisson distribution is likely only if the occurrences are near to random. Random is not a thing that the “consensus” would entertain for warming periods.

Without having much of a stats background, but some measure of common sense, I give 4 stars (out of 5) to Nigel’s 3:42 am post for explaining the obvious problem with calling the distribution a Poisson distribution, and 5 stars to pjie2’s post at 6:21 am for his confirmation of Nigel’s post and his further elaboration of the consequences.

With but limited stats knowledge, clearly if you assign a 1/3 probability to Outcome A and test the event 13 times, you will get 1/3 x 13 = 4.33 occurrences of Outcome A on average over time. That is, on average, every three events will yield one Outcome A. Nigel made the point that the data therefore does not fit a Poisson distribution, and he also pointed out the problem with the endpoints.

pjie2 clarified Nigel’s point about it not being a Poisson distribution and then went on to explain the implication, i.e., that there must be autocorrelation between the months, since (I presume from his explanation) if there were not, the distribution would indeed show a better fit to a Poisson distribution if the data were in fact random. That is, the mean would approximate the expected 4.333.

As for the original analysis by NCDC, that I do understand. Assuming randomness, there’s a 1/3 chance of a month falling in the top third of all events, by definition, and the chance of 13 consecutive positive outcomes (defined here as a Success) is 1/3 to the 13th power, or .0000006272. This is 6.272 Successes in 10 million, or 10,000,000 divided by 6.272 = 1 Success in 1,594,400 attempts. (NCDC’s calculator obviously goes to more decimal places than mine.)

Since another attempt is made each month, there are 12 attempts per year and one Success will be expected to occur every 132,860 years, assuming randomness. So Jeff Masters’ 124,652 AD should be 134,872 AD, and he was being conservative (because he divided by 13 instead of 12 to reach his result, but there are only 12 new data points per additional year).
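The arithmetic in these two paragraphs can be reproduced exactly with integers. A sketch, assuming 2012 as the year to count forward from (the year of the NCDC statement); dividing by 13 recovers Masters’ 124,652 AD, while dividing by 12 gives one attempt per month.

```python
# Arithmetic behind NCDC's odds and the two "next occurrence" dates.
p_month = 1 / 3                  # chance a month lands in its own top tercile
odds = 3 ** 13                   # 1 / p_month**13, exact as an integer
print(f"(1/3)^13 = {p_month ** 13:.10f} = 1 in {odds:,}")

base_year = 2012                       # assumption: counting forward from 2012
masters_year = base_year + odds / 13   # 13 attempts per year reproduces Masters' date
monthly_year = base_year + odds / 12   # one attempt per month, 12 per year
print(round(masters_year), round(monthly_year))
```

Using the exact 1,594,323 rather than a rounded figure gives a waiting time of about 132,860 years and a date of 134,872 AD at the once-a-month rate.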

That said, if, per pjie2, it’s not a random distribution, all bets are off and we’re playing with a loaded die. Furthermore, since we skeptics have (most of us anyway) always been willing to concede that warming has occurred, and even that it is to some extent likely to be manmade, why would we expect the die to be anything but loaded when performing such an analysis?

If the earth now begins to cool, we will not have to wait thousands of years before we get 13 consecutive months in the coolest third either, I’d wager. Given the relatively short time span of 116 years (or 118?) in this situation, it might easily occur in the first or second decade of a significant cooling if monthly temps drop fairly rapidly and end up running consistently below the 116-year average.

Willis is a ‘conversational skill’?

Who woulda thunk it … (Perhaps you meant “I can see your conversational skills …” yes, I know pedantic, particularly when it comes to your and you’re [literally: “you are”])


Lucia’s update:

“Update Wow! I didn’t realize the US temperatures had such low serial auto-correlation! I obtained data for the lower 48 states here:

http://www7.ncdc.noaa.gov/CDO/CDODivisionalSelect.jsp

Based on this, the lag 1 autocorrelation is R=.150, which is much lower than R=0.936. So ‘white noise’ isn’t such a bad model. I am getting a probability less than 1 in 100,000. I have to run the script longer to get the correct value! ”
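For reference, one common estimator of the lag-1 autocorrelation quoted in that update is sketched below. The update does not say exactly how R was computed, so applying it to monthly anomalies (seasonal cycle removed) is an assumption; on raw monthly temperatures the seasonal cycle would dominate the result. The function name is hypothetical.

```python
def lag1_autocorrelation(x: list[float]) -> float:
    """Sample lag-1 autocorrelation: sum of products of consecutive deviations
    from the overall mean, divided by the sum of squared deviations."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    num = sum(dev[t] * dev[t + 1] for t in range(n - 1))
    den = sum(d * d for d in dev)
    return num / den


# Toy inputs: an alternating series is negatively correlated with itself,
# a steady ramp positively.
print(lag1_autocorrelation([1.0, -1.0, 1.0, -1.0]))
print(lag1_autocorrelation([1.0, 2.0, 3.0, 4.0, 5.0]))
```

A value near 0.15, as reported, means one month says little about the next, which is why white noise becomes a tolerable first approximation.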

Eschenbach’s method doesn’t appear to differentiate what we would expect without warming from what we would expect with warming. It might be slightly more meaningful if he did similar calculations for the first and second halves of the record and then compared the two. But it’s still a weird way to look at the issue. Lucia’s makes more sense.

Clearly 1/3^13 is incorrect because a month being in the top 1/3 warmest is not an independent event – it is much more likely to occur if the entire year in question is a warm year for instance.

What would be more meaningful, but I lack the maths to be able to do it, is to look at how likely a run of 13 months in the top 1/3 is using conditional probability, i.e. how likely is it that a month is in the top 1/3 given that the previous month was also in the top 1/3? And then extrapolate this to 13 in a row.
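The conditional-probability idea can be written down as a two-state Markov chain: each month is “top third” or not, the long-run share of top months is p, and the chance that a top month is followed by another is q. The probability that a given 13-month window is entirely top-tercile is then p · q^12. This is a sketch; q is a hypothetical persistence parameter that would have to be estimated from the data (for example, as the fraction of top-tercile months followed by another). With q = p = 1/3 it reduces to the independent case, (1/3)^13 = 1 in 1,594,323.

```python
def prob_all_top(run: int = 13, p: float = 1 / 3, q: float = 1 / 3) -> float:
    """Probability that `run` specified consecutive months are all top-tercile
    under a stationary two-state Markov chain.

    p: long-run share of top-tercile months (1/3 by construction).
    q: P(top this month | top last month), a hypothetical persistence value.
    """
    # The first month is top with probability p; each later month
    # extends the run with probability q.
    return p * q ** (run - 1)


independent = prob_all_top()        # q = p: months independent
persistent = prob_all_top(q=0.5)    # illustrative q = 0.5, not an estimate
print(round(1 / independent), round(1 / persistent))
```

Even modest persistence changes the answer by orders of magnitude: with the illustrative q = 0.5 the odds shrink to 1 in 12,288.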

The NY Times just published an article in their science section linked on their main page:

http://www.nytimes.com/2012/07/11/science/earth/global-warming-makes-heat-waves-more-likely-study-finds.html?hpw

Some of the weather extremes bedeviling people around the world have become far more likely because of human-induced global warming, researchers reported on Tuesday. Yet they ruled it out as a cause of last year’s devastating floods in Thailand, one of the most striking weather events of recent years.

A new study found that global warming made the severe heat wave that afflicted Texas last year 20 times as likely as it would have been in the 1960s. The extremely warm temperatures in Britain last November were 62 times as likely because of global warming, it said.

The findings, especially the specific numbers attached to some extreme events, represent an increased effort by scientists to respond to a public clamor for information about what is happening to the earth’s climate. Studies seeking to discern any human influence on weather extremes have usually taken years, but in this case, researchers around the world managed to study six events from 2011 and publish the results in six months.

Some of the researchers acknowledged that given the haste of the work, the conclusions must be regarded as tentative.

“This is hot new science,” said Philip W. Mote, director of the Climate Change Research Institute at Oregon State University, who led the research on the Texas heat wave and drought. “It’s controversial. People are trying different methods of figuring out how much the odds may have shifted because of what we have put into the atmosphere.”

The general conclusion of the new research is that many of the extremes being witnessed worldwide are consistent with what scientists expect on a warming planet. Heat waves, in particular, are probably being worsened by global warming, the scientists said. They also cited an intensification of the water cycle, reflected in an increase in both droughts and heavy downpours.

The study on extreme weather was released along with a broader report on the state of the world’s climate. Both are to be published soon in the Bulletin of the American Meteorological Society. The broad report found no surcease of the climate trends that have led to widespread concern about the future.

The Arctic continued to warm more rapidly than the planet as a whole in 2011, scientists reported, and sea ice in the Arctic was at its second-lowest level in the historical record. In 2010, rains were so heavy that the sea level actually dropped as storms moved billions of gallons of water onto land, they said, but by late 2011 the water had returned to the sea, which resumed a relentless long-term rise.

So far this year in the United States, fewer weather disasters seem to be unfolding than in 2011. But it is still turning out to be a remarkable year, with wildfires, floods, storms that knocked out electrical power for millions and sizzling heat waves in March and June.

Globally, the new research makes clear that some of the recent weather damage resulted not from an increased likelihood of extremes, but from changes in human exposure and vulnerability. The 2011 floods in Thailand are a prime example.

An analysis by Dutch and British scientists found that the amount of rain falling in Thailand last year, while heavy, was not particularly unusual by historical standards, and that “climate change cannot be shown to have played any role in this event.”

More important, the researchers said, was rapid development in parts of Thailand. Farm fields have given way to factories in the floodplains of major rivers, helping to set the stage for the disaster.

In the new report, researchers in Oregon and Britain found that natural climate variability played a big role in setting the stage for the heat wave in Texas. The weather in 2011 was heavily influenced by a weather pattern called La Niña, which has effects worldwide, including making drought in the American Southwest more likely.

But even taking that into account, the researchers found, the overall warming of the planet since the 1960s made it about 20 times as likely that such a heat wave would occur in Texas in a La Niña year.

Martin P. Hoerling, a meteorologist with the National Oceanic and Atmospheric Administration who was not involved in the new study but is conducting his own research on the Texas disaster, agreed that human-induced global warming had probably made the odds of record-setting heat somewhat more likely. But he said his research showed that the rainfall deficits were unrelated to global warming.

He said he was skeptical about several aspects of the new paper, including the claim of a 20-fold increase in likelihood.

More broadly, he said he was worried that the newly published studies had been done so hastily that the conclusions may not stand the test of time. “We need to think carefully about what kind of questions we can credibly pursue with this sort of rapid turnaround,” Dr. Hoerling said.

The post on Lucia’s blog which I found most accessible in demolishing Masters’ statistical simplemindedness (or ignorance) is by climatebeagle. It demonstrates the mental & math error underlying the 1:1.6 million assertion so ably that a complete statistical stupe could grasp it:

climatebeagle (Comment #99257)

July 10th, 2012 at 2:54 pm

Using the same logic as Jeff Masters and looking at the US data:

5 consecutive months in top-third should occur every 20 years but have occurred 18 times in 116 years.

6 consecutive months in top-third should occur every 60 years but have occurred 11 times in 116 years.

7 consecutive months in top-third should occur every 182 years but have occurred 4 times in 116 years.

8 consecutive months in top-third should occur every 546 years but have occurred 3 times in 116 years.
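The “should occur every N years” figures in that list are 3^m months converted to years, i.e. the same independence assumption Masters used, applied to shorter runs. A sketch that reproduces them; the list truncates to whole years, so integer division is used, and the function name is hypothetical.

```python
def naive_years_between_runs(m: int) -> int:
    """Years between runs of m top-tercile months if each month were an
    independent 1-in-3 event: 3**m months, truncated to whole years."""
    return 3 ** m // 12


for m in range(5, 9):
    print(m, naive_years_between_runs(m))
```

Set against the 18, 11, 4 and 3 runs actually observed in 116 years, the mismatch is the point of climatebeagle’s comment.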

@Nigel Harris, you said “What Jeff Masters actually said was: *Each* of the 13 months from June 2011 through June 2012 ranked among the warmest third of *their* historical distribution for the first time in the 1895 – present record.”

No, Jeff Masters was looking at a thirteen month period, not individual months compared to the same months over a period. To quote from the original – “Thus, we should only see one more 13-month period so warm between now and 124,652 AD”.

I take this as a reasonable person to mean a period of 13 consecutive months.

Willis Eschenbach says:

July 11, 2012 at 12:51 am

“The controversy is that the “ridiculously long odds” he refers to are wildly incorrect …”

Yes, well, that much is obvious. But, I don’t think yours is necessarily far better, as it is still treating the data as if it were stationary random data. Lots of distributions look a lot like the Poisson distribution. My point is, this isn’t a fight worth fighting. All he is saying is that temperatures have risen. They have, though they no longer are. It says nothing about attribution.

JJ says:

July 11, 2012 at 7:39 am

“They both compare the probability of the current event against the assumption of zero change in climate, none whatsoever, over the last 118 years. “

Exactly.

AGW heatwave downed by a poisson pen.

I work in the private sector, and actually get paid to do things like apply Poisson and Negative Binomial distributions correctly.

I would hire pjie2 to do this work with me. Willis, not so much.

In this case, lambda is known by definition, and the data are not independent.

Willis’s analysis demonstrates nothing other than this. In his own words: “picking the appropriate model for the situation is the central, crucial, indispensable, and often overlooked first step of any statistical analysis”. As Willis demonstrates here, by picking the wrong model.

As much as I despair about errors such as these, I despair more about those people who eat it up, uncritically.

John West said: “It seems to me that the climate change action advocates are not trying to understand the data but are trying to understand how to use the data to promote the cause.”

Pot, meet the kettle.

I mean, even if we take Willis’ argument at face value, the odds are still 2.6 in 1374, or about 0.2%. Those are still long odds. Does that in any way lend credence to the argument that observed warming of the globe in the latter third of the 20th century can be blamed on humans? Not in the slightest.

Willis,

Any comment on Lucia’s updated estimate, and the estimate calculated by Tamino?

http://tamino.wordpress.com/2012/07/11/thirteen/#more-5309

BCC says:

July 11, 2012 at 9:50 am

“I work in the private sector, and actually get paid to do things like apply Poisson and Negative Binomial distributions correctly. I would hire pjie2 to do this work with me. Willis, not so much.”

This is why I enjoy this site so much. Eventually someone shows up who understands the issue. It doesn’t ever seem to matter what the issue is either, as they range from logging, to fire suppression, to nuclear reactors, to tsunamis, and here, to statistical analysis.

As for all those kudos extended to Willis for his analysis, I’ve always found that it’s better to wait until the Nigels, pjie2s, and BCCs show up before jumping into unknown waters too quickly. Regardless, this site is, I believe, unequaled for its ability to draw out explanations from those intimately involved whatever issue is being discussed. The comments are nearly always as valuable a read as the original article, and often more so.

(To BigBadBear at 8:55 am: The 1/3 to the 13th power is correct assuming randomness, which was my assumption. I was just explaining the derivation of the NCDC calculation. Their assumption of randomness was incorrect, however.)

Willis,

You have a good start on the analysis, but you need to take an extra step: you need to de-trend the data. Find the best linear fit to your data set (temperature vs. time). Then subtract that trend from each month’s temperatures; this is the data that you need to analyze for the expected frequency of top thirds. The odds you calculated, 2.6 / 1374, are the odds given whatever linear trend exists in the data; your number may or may not be influenced by the trend.
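A sketch of the de-trending step, assuming the data are a plain list of monthly values, the calendar month is the list position modulo 12, and the trend is an ordinary least-squares line against month index; all three are assumptions rather than a prescribed method. After de-trending, each month is flagged if it ranks in the top third of its own calendar month’s history, which is the definition used in the NCDC statement. Function names are hypothetical.

```python
def detrend(values: list[float]) -> list[float]:
    """Remove the ordinary least-squares linear trend of value vs. month index."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    slope = sxy / sxx
    # Residual = value minus the fitted line through (t_mean, v_mean).
    return [v - v_mean - slope * (t - t_mean) for t, v in enumerate(values)]


def top_tercile_flags(values: list[float]) -> list[bool]:
    """Flag each month that ranks in the top third of its own calendar month
    (every January against every January, and so on). Calendar month is
    assumed to be the list position modulo 12."""
    flags = [False] * len(values)
    for cal in range(12):
        idx = list(range(cal, len(values), 12))
        ranked = sorted(idx, key=lambda i: values[i], reverse=True)
        for i in ranked[: len(idx) // 3]:  # top third, rounded down
            flags[i] = True
    return flags


# Usage: flags = top_tercile_flags(detrend(monthly_temps)), where
# monthly_temps is a hypothetical list of US monthly mean temperatures.
```

The resulting flags can then be fed to the same 13-month window count used for the raw data, so the two histograms compare like with like.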

A much more useful exercise would be to split the data into two periods – the first half and the second half. For each half, figure out how many months in each contiguous year are in the top third of the whole data set. Figure out if the odds of seeing a contiguous year in the top third has changed from the first ~58 years to the second ~58 years. So, for example, if the first half yields 2.6 and the second half gives the same number, then the probability of setting this record hasn’t changed.

For those worrying why lambda = 5.2+ and not *exactly* 4.333, I want to remind you that this is not continuous data. This study involves discrete data, in which monthly readings fall into ‘buckets’ that do not permit fractions. For example, given the set of four numbers [1,2,2,3], one finds that there are three data points in the top *half* of the distribution, because the #2 bucket cannot be subdivided. For the same reason a ‘tie’ to an old temperature record counts as a new temperature record.

@Bart: Correct. Masters’ point is this:

These are ridiculously long odds, and it is highly unlikely that the extremity of the heat during the past 13 months could have occurred without a warming climate.

Where “a warming climate” means a climate that has warmed recently (at least, that’s what it means in terms of the math we’re using to analyze it).

We don’t see many people still claiming that the US isn’t warming (or, hasn’t warmed), but if you do: well, here’s another piece of evidence which would indicate that they’re wrong.

I haven’t had time to read all the comments and I really need to re-read/digest the article, but I was under the impression that a Poisson distribution applies only when the event in question occurs at a known average rate and independently of the time since the previous event (the degree of randomness?). From a brief read-through, I’m not sure this validly applies to Willis’ argument.

I think it was really the NCDC that did the original 1,594,323 calculation (and it is for 13 months, not 12 months). They wrote:

“Warmest 12-month consecutive periods for the CONUS

These are the warmest 12-month periods on record for the contiguous United States. During the June 2011-June 2012 period, each of the 13 months ranked among the warmest third of their historical distribution for the first time in the 1895-present record. The odds of this occurring randomly is 1 in 1,594,323. The July 2011-June 2012 12-month period surpassed the June 2011-May 2012 period as the warmest consecutive 12-months that the contiguous U.S. has experienced.”

Here: click on “Warmest 12-month consecutive periods for the CONUS”

http://www.ncdc.noaa.gov/sotc/national/2012/6/supplemental

In the raw records, of course, 1934 would tie the current 13 month average.

Bart says:

I mean, even if we take Willis’ argument at face value, the odds are still 2.6 in 1374, or about 0.2%. Those are still long odds.

In order to take Willis’ argument at face value, one has to understand what Willis’ argument is. I don’t think most here understand it, and that includes Willis.

The gist of the argument presented is that if you find a Poisson distribution that approximates some observations, then those observations are likely to fall near that Poisson distribution. That isn’t a particularly interesting argument, being a tautology. It says nothing about the climate, nor about the claims made by Jeff Masters. The odds claimed by Masters and the odds claimed by Willis are irrelevant to each other. Comparing them is meaningless, and neither of them is individually of any interest to the question at hand.

JJ
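Bart's "2.6 in 1374" reads as an expected count: the number of 13-month windows times the Poisson probability of exactly 13 hot months. A sketch of that arithmetic, assuming 1374 is the number of windows and using the fitted lambda of 5.206 from the head post. Bart does not say which lambda he used, so the computed count need not come out at exactly 2.6:

```python
import math

def poisson_pmf(k: int, lam: float) -> float:
    """P(K = k) for K ~ Poisson(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def expected_count(n_windows: int, k: int, lam: float) -> float:
    """Expected number of windows, out of n_windows, with exactly k hot months."""
    return n_windows * poisson_pmf(k, lam)

# Bart's ratio as a percentage
print(f"{2.6 / 1374:.3%}")  # 0.189%
# Expected 13-of-13 windows under the assumed lambda and window count
print(round(expected_count(1374, 13, 5.206), 2))
```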

mb says:

July 11, 2012 at 3:21 am

Thanks, mb. The model is not predicting how many months will come up out of 13. It is predicting how many months will come up. You are correct that there will be an “edge effect”, since we are only looking at 13-month intervals. But since it only affects ~ one case in 1400, the effect will be trivially small.

Suppose that in fact there is one run of 14 in the data. Since we are counting in 13-month intervals, in the first case (June to June only) it will be counted as a run of 13. And in the second case (all 13 month intervals) it will be counted as two runs of 13 … but in neither case does that materially affect the results shown above.

So in practice, the edge effect slightly increases the odds of finding a run of 13.

w.
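The edge effect Willis describes can be checked directly. A minimal sketch with a hypothetical hot/not-hot series containing a single 14-month hot run: sliding 13-month windows count it twice, while windows that start only every 12 months (June to June) count it once, provided one of them starts at the run. The function names and the toy series are illustrative only:

```python
def sliding_all_hot(flags: list[bool], width: int = 13) -> int:
    """Count every window of `width` consecutive months that is entirely hot."""
    return sum(all(flags[i:i + width]) for i in range(len(flags) - width + 1))

def stepped_all_hot(flags: list[bool], start: int, width: int = 13, step: int = 12) -> int:
    """Count all-hot windows beginning at start, start + step, ... (e.g. every June)."""
    return sum(all(flags[i:i + width]) for i in range(start, len(flags) - width + 1, step))

# Toy series: 5 ordinary months, a 14-month hot run, 5 ordinary months
flags = [False] * 5 + [True] * 14 + [False] * 5

print(sliding_all_hot(flags))           # 2: the 14-run yields two 13-month windows
print(stepped_all_hot(flags, start=5))  # 1: aligned windows see it once
```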

Nigel Harris says:

July 11, 2012 at 3:42 am

See my response above. Your objection is real but makes no practical difference.

w.

cd_uk says:

July 11, 2012 at 5:41 am

OK, great, you’re right. And Mathworld is wrong …

Keep believing that, cd_uk, hold tight to that, it seems important to you. Meanwhile, in the real world, such trivial differences as you point out are roundly ignored.

w.

Is this not like the game of the extremely high probability of finding two people born on the same day of the month (not the same month), i.e. any two people born on the same date, e.g. the 13th, in a room of more than 15 people?
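A quick sketch of the day-of-month version of the birthday problem, assuming all 31 days are equally likely (they are not quite, since not every month has a 29th, 30th or 31st):

```python
from math import prod

def p_shared_day_of_month(n_people: int, days: int = 31) -> float:
    """Probability that at least two of n people share a day of the month,
    assuming each of the `days` days is equally likely (an approximation)."""
    if n_people > days:
        return 1.0  # pigeonhole: a match is certain
    p_all_distinct = prod((days - i) / days for i in range(n_people))
    return 1.0 - p_all_distinct

print(round(p_shared_day_of_month(16), 3))  # about 0.99 for a room of 16
```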

Maybe he punched in seconds; close enough for government work.

I note that cd_uk’s use of punctuation is as sloppy as his reasoning.

Nigel Harris says:

July 11, 2012 at 6:39 am

I have assumed all along that it has a high mean value because the data is autocorrelated. This pushes the distribution to be “fat-tailed”, increasing the probability that we will find larger groups and decreasing the probability of smaller groups.

pjie2 says:

July 11, 2012 at 6:21 am

I disagree. We have not shown it is not a Poisson distribution. We have shown that it is a special kind of Poisson distribution, a “fat-tailed” Poisson distribution where all results are shifted to somewhat higher values.

Can we draw conclusions from that? Because of the agreement of the calculated “lambda” in the smaller and larger datasets, along with the smaller RMS error in the larger datasets, I say that we can, because the distribution actually represents and accurately describes the data.

And since the distribution and the data agree, since the distribution accurately describes the data, it doesn’t matter how we arrived at that distribution, or how it is calculated.

My thanks to both of you,

w.

PS: Upon reflection, I see that you are right, that I shouldn’t have fit the value of lambda. I should have used the mean of the actual data … and in fact, the mean of the first analysis (June to June) is 5.15, while the mean of the second dataset is 5.17. By fitting, I had gotten a value of 5.21 for both, a trivial difference … which shows definitively that it is indeed a Poisson process, and so your objections in both cases do not apply. I’ve added an update to the head post acknowledging my error, and thanking you both for pointing it out.

Nigel Harris says:

July 11, 2012 at 7:21 am

No, that’s not what I looked at at all. I looked to see whether June of year X was in the top third of Junes, July of year X was in the top third of Julys, and so on. That’s why I got the same answer that Jeff Masters got, that June 2011 to June 2012 was the only interval with 13 months all in the warmest third. If I’d done what you claim above, I wouldn’t have found that.

w.
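The per-calendar-month comparison Willis describes can be sketched as follows. This is a minimal illustration on made-up data, not his actual code; it assumes the warmest third is the top len // 3 values of each calendar month over the whole record, which is one of the two readings of "top third" discussed in this thread:

```python
from collections import defaultdict

def top_third_flags(temps):
    """temps maps (year, month) -> temperature.
    Returns (year, month) -> True if the value is among the warmest third of all
    values for the same calendar month (June vs. Junes, July vs. Julys, ...)."""
    by_month = defaultdict(list)
    for (year, month), t in temps.items():
        by_month[month].append((t, year))
    flags = {}
    for month, values in by_month.items():
        values.sort(reverse=True)   # warmest first
        n_top = len(values) // 3    # size of the warmest third (rounded down)
        for rank, (_, year) in enumerate(values):
            flags[(year, month)] = rank < n_top
    return flags

def june_to_june_count(flags, start_year):
    """Top-third months from June of start_year through June of start_year + 1."""
    months = [(start_year, m) for m in range(6, 13)]
    months += [(start_year + 1, m) for m in range(1, 7)]
    return sum(flags[ym] for ym in months)

# Hypothetical data: every month simply gets warmer each year, 1895-2012
temps = {(y, m): float(y) for y in range(1895, 2013) for m in range(1, 13)}
flags = top_third_flags(temps)
print(june_to_june_count(flags, 2011))  # 13
print(june_to_june_count(flags, 1950))  # 0
```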

Rod Everson says:

July 11, 2012 at 8:26 am

Sorry, but your common sense has failed you, and your lack of a stats background is showing. See my post above.

w.

verbal1 says:

July 11, 2012 at 8:35 am

Why would I want to differentiate between “what we would expect without warming from what we would expect with warming”? I’m just looking at the data, and seeing from the data what the distribution is. Yes, the distribution would be different if the globe hadn’t been warming for centuries … so what? I’m looking simply at the odds of finding 13 out of 13 months in the warmest third.

w.

BCC says:

July 11, 2012 at 9:50 am

Sorry, BCC, but in fact I have shown above that this is the right model. How have I shown it? Because the value that I got from iteratively fitting lambda is almost exactly that of the theoretical lambda, which as you point out is “known by definition” in both cases. I have added an update to the head post discussing this.

You would have known this, BCC, if you had bothered to download the data and do the math yourself before uncapping your electronic pen … me, I wouldn’t hire you to do any work with me; you give opinions without first doing your homework.

w.

John@EF says:

July 11, 2012 at 9:54 am

Nope. I’ll leave them to their methods. In Tamino’s case, I’ve been banned from his blog for years for asking inconvenient questions, so he can rot for all I care, I wouldn’t increase his page view count by one.

w.

Mark from Los Alamos says:

July 11, 2012 at 10:32 am

Absolutely not. I’m not looking to find what the odds are of finding 13 in some imaginary detrended world. I’m interested in finding the odds in this world, the real world.

w.

To assess the probability of noting a 13-month period of high temperatures, you also have to consider that a continuous period of warmth in the USA is actually no more surprising than one in any other 8,000,000 square km of land area. Masters is using sampling bias with his post-hoc choice of the USA as his area of study.

The sheer number of data points we track means that, statistically, records will be broken more frequently than the average person would guess. Is your town having its hottest ever day? What about the next town over, or another in the area? Your county? Your region / state? Your country? What about the hottest week? Hottest month? Hottest year? What about the coldest? Wettest? Driest? Windiest? Sunniest? Cloudiest? That list gives 112 statistics that could apply just to you, at this time.
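The look-elsewhere point in the last two paragraphs comes down to one line of arithmetic: if each of m statistics independently has probability p of setting a record in a given period, the chance that at least one does is 1 - (1 - p)^m. A sketch; the 1% per-statistic chance is purely hypothetical, and real records are not independent of one another:

```python
def p_at_least_one(p_each: float, n_stats: int) -> float:
    """Chance that at least one of n_stats statistics sets a record, assuming
    each does so independently with probability p_each (an idealization)."""
    return 1.0 - (1.0 - p_each) ** n_stats

# 112 statistics, each with a hypothetical 1% chance of a record this period
print(round(p_at_least_one(0.01, 112), 2))  # roughly two chances in three
```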

Willis E says:

I don’t follow. A Poisson distribution is (by definition) for independent events, right? And we know that the temperature series is auto-correlated, so, not independent?

So how can an auto-correlated series follow a Poisson distribution?

(Sure, you might approximate a lightly auto-correlated series by a Poisson distribution, but that approximation is going to give you some healthy-sized errors on the fringes).
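The independence question can be explored with a simulation. A sketch, assuming hot/not-hot months follow a two-state Markov chain whose long-run share of hot months is 1/3; the persistence values (0.6 and 0.2) and the seed are hypothetical, chosen only to give noticeable autocorrelation. With persistence, the mean count per 13-month window stays near 13/3 while its variance grows, i.e. the tails fatten:

```python
import random
import statistics

def markov_hot_months(n, p_stay_hot, p_become_hot, rng):
    """Binary 'hot month' series from a two-state Markov chain,
    started from its stationary distribution."""
    pi = p_become_hot / (1 - p_stay_hot + p_become_hot)  # long-run P(hot)
    hot = rng.random() < pi
    out = []
    for _ in range(n):
        out.append(hot)
        hot = rng.random() < (p_stay_hot if hot else p_become_hot)
    return out

def window_counts(flags, width=13):
    """Hot-month counts in consecutive, non-overlapping windows."""
    return [sum(flags[i:i + width]) for i in range(0, len(flags) - width + 1, width)]

rng = random.Random(1)
n = 13 * 5000
indep = window_counts(markov_hot_months(n, 1 / 3, 1 / 3, rng))  # no persistence
sticky = window_counts(markov_hot_months(n, 0.6, 0.2, rng))     # hypothetical persistence

for name, counts in (("independent", indep), ("persistent", sticky)):
    print(name, round(statistics.mean(counts), 2), round(statistics.pvariance(counts), 2))

# Exact chance of 13 hot months in a row for the persistent chain: P(hot) * 0.6**12
p_13_sticky = (1 / 3) * 0.6 ** 12
print(f"1 in {1 / p_13_sticky:,.0f}")
```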

>Willis Eschenbach:

>

>Thanks, mb. The model is not predicting how many months will come up out of 13. It is predicting how many months will come up. You are correct that there will be an “edge effect”, since we are only looking at 13-month intervals. But since it only affects ~ one case in 1400, the effect will be trivially small.

I don’t agree. The data you want to describe, and which you have graphed, is, for each n, “the number of 13-month intervals with n months in the top third”. This gives a number C(n), which you label as “count” in your graph. C(n) is certainly zero if n is greater than or equal to 14. You claim that C(n) is approximated by a Poisson distribution P(n), and finally use this to estimate the expected frequency C(13) by P(13). (Strictly, you estimate the inverse of C(13) by the inverse of P(13).) You do not graph or estimate how many months will come up.

The edge is the number 13. My argument is that it is obviously not a good idea to estimate the frequency C(14) by P(14). So even if C(n) is approximated well by P(n) for n less than, say, 7, I doubt that it’s a good idea to approximate C(13) by P(13).
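This point can be made numerically. A sketch, assuming lambda = 5.15 (the June-to-June mean quoted in the thread) and, as a swapped-in comparison that neither commenter proposed, a binomial model with 13 trials and the same mean. The Poisson model puts probability on counts of 14 or more, which cannot occur, and its P(13) differs greatly from the bounded model's:

```python
import math

def poisson_pmf(k: int, lam: float) -> float:
    return math.exp(-lam) * lam**k / math.factorial(k)

def binomial_pmf(k: int, n: int, p: float) -> float:
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

lam = 5.15  # mean count per June-to-June window quoted in the thread
n = 13      # months per window: a count above 13 is impossible

# Probability the Poisson model assigns to impossible counts (14 or more)
poisson_impossible = 1.0 - sum(poisson_pmf(k, lam) for k in range(n + 1))
# A binomial with the same mean (p = lam / n) keeps all its mass on 0..13
binomial_total = sum(binomial_pmf(k, n, lam / n) for k in range(n + 1))

print(f"Poisson mass on k >= 14: {poisson_impossible:.5f}")
print(f"Binomial mass on 0..13:  {binomial_total:.5f}")
print(f"P(13): Poisson {poisson_pmf(13, lam):.2e}, binomial {binomial_pmf(13, n, lam / n):.2e}")
```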

>Suppose that in fact there is one run of 14 in the data. Since we are counting in 13-month intervals, in the first case (June to June only) it will be counted as a run of 13. And in the second case (all 13 month intervals) it will be counted as two runs of 13 … but in neither case does that materially affect the results shown above.

>So in practice, the edge effect slightly increases the odds of finding a run of 13.

I agree, but it is irrelevant to my argument. The point is not that some 14 month sequences show up as pairs of 13 month sequences. The point is that the model definitely breaks down for n equal to 14, so why should we believe it for 13, 13 being so close to 14.

>w.

Willis,

Thanks for your responses.

I still can’t see how, if you’ve done what you say you’ve done, you can have 597 out of 1508 months that fall into the top tercile. Your sample set consists of essentially all 116 years of data, barring possibly a handful of months at the start of the series. Surely by definition 1/3 of all months will fall into the top tercile for their month. The fact that all the Junes are counted twice shouldn’t matter. And no matter how they’re distributed across the groups of 13 months, I think the mean should be close to 4.33, not 5.15.

Am I being thick?

Nigel
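Nigel's arithmetic, spelled out with the figures he quotes (597 top-tercile months out of 116 × 13 = 1508). If exactly a third of the months were in the top tercile, the mean per window would be 13/3:

```python
years = 116             # June-to-June windows
months_per_window = 13
hot_months = 597        # top-tercile months, as quoted above

observed_mean = hot_months / years                       # about 5.15 per window
expected_mean = months_per_window / 3                    # about 4.33 if exactly a third are hot
hot_fraction = hot_months / (years * months_per_window)  # about 0.396 rather than 0.333

print(f"{observed_mean:.2f} vs {expected_mean:.2f}; fraction hot = {hot_fraction:.3f}")
```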

Anthony

The point regarding the histogram was a rather lighthearted throwaway, hence the smiley, but Willis seems to have thrown his toys out of his pram at this.

As for the clip, how did you know I worked with dirt people [SNIP: Right in one, but let’s not be giving away our trade secrets, Dr. D. I, for one, am very impressed with the caliber of our commenters. -REP]. Anyway, I’ll take it on the chin, but as a geophysicist aren’t you running the risk of being tarred with the same brush? 😉

So, if I understood correctly, ‘One man’s mean, is another man’s Poisson?’

Windchaser – wrong – according to NOAA the continental US has cooled over the last decade, in all zones but one, I seem to remember. That the world is warmer this decade than last is not surprising, as we are still probably recovering from the Little Ice Age and will be until we are not. No controversy therefore, and no surprise that “extreme events” are happening.

If you believe that trees are thermometers, the recent paper (“This is what global cooling really looks like – new tree ring study shows 2000 years of cooling – previous studies underestimated temperatures of Roman and Medieval Warm Periods”, as seen on WUWT) suggests that the medieval and Roman warm events were more “extreme” than our present warm period. But why trust proxies when we have lots of evidence that these warm periods were real and global? Unfortunately we had no idiot MSM to record those events, but if you read Roman accounts of the time, they also had ridiculous and unscientific beliefs about what was driving their weather. Plus ça change…

Jim

Fair enough, a bit sloppy – but then it is a blog.

Upon reflection, I see that you are right, that I shouldn’t have fit the value of lambda. I should have used the mean of the actual data

No, you should have used the known probability of any given month being in the top 1/3, i.e. 33.3%, times the number of events (13), i.e. a lambda of 4.3333. A Poisson distribution is only appropriate if the probabilities for non-overlapping time intervals are independent.
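With independent months, the count out of 13 is exactly binomial, and a Poisson with lambda = 13/3 only approximates it. A short sketch comparing the two at k = 13; the binomial comparison is added here for illustration and is not something either commenter computed:

```python
import math
from fractions import Fraction

n = 13
p = Fraction(1, 3)      # chance a given month is in its warmest third
lam = float(n * p)      # 13/3, the lambda proposed above

# Exact probability of 13 out of 13 if months are independent (binomial)
p13_binomial = p ** n
# Poisson approximation with the same mean
p13_poisson = math.exp(-lam) * lam**13 / math.factorial(13)

print(f"binomial: 1 in {p13_binomial.denominator:,}")
print(f"Poisson:  1 in {1 / p13_poisson:,.0f}")
```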

In fact, your analysis is even weaker than I thought, since all you’ve shown is that Poisson is the wrong model, i.e. that hot months “clump” together more than would be expected by random chance. That could be for several different reasons, most notably (1) if there is a trend over time, or (2) if there is autocorrelation between successive months. Note that those are independent – you could have a non-stationary dataset without autocorrelation, or a data set with autocorrelation but no net trend.

In point of fact, for temperature data both (1) and (2) are already known to be true, so a Poisson model is doubly wrong. The fact that the combination of an upward trend and a certain degree of autocorrelation has resulted in something that looks a bit like a different Poisson distribution with a larger mean is irrelevant.
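Point (1), that a trend alone can make hot months clump, is easy to illustrate. A sketch on synthetic data with no autocorrelation at all: adding a hypothetical linear trend leaves the mean count per 13-month window at about 13/3 (the top-third cutoff is fixed over the whole record) but widens the spread of counts well beyond what independent months would give. The trend size, the seed and the whole-series cutoff are assumptions for illustration:

```python
import random
import statistics

def window_counts(series, width=13):
    """Hot-month counts in consecutive, non-overlapping windows of `width`.
    'Hot' means in the top third of the whole series (a simplification:
    no per-calendar-month comparison)."""
    cutoff = sorted(series)[len(series) * 2 // 3]
    hot = [x >= cutoff for x in series]
    return [sum(hot[i:i + width]) for i in range(0, len(hot) - width + 1, width)]

rng = random.Random(42)
n = 116 * 13
# Independent monthly noise: no autocorrelation in either series
flat = [rng.gauss(0, 1) for _ in range(n)]
# Same kind of noise plus a hypothetical linear trend (0.002 sd per month)
trend = [0.002 * i + rng.gauss(0, 1) for i in range(n)]

for name, series in (("flat", flat), ("trend", trend)):
    counts = window_counts(series)
    print(name, round(statistics.mean(counts), 2), round(statistics.pvariance(counts), 2))
# For reference, a binomial(13, 1/3) count has variance 13 * (1/3) * (2/3), about 2.89
```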

@Nick in Vancouver:

Even if the US has cooled over the last decade (which it hasn’t, or at least I doubt it after the last 13 months), it could still be significantly warmer than average for the period we’re looking at (the last 110 years). And even last year, when the US was “cooling”, this was the case. Obviously, we have a much greater chance of hitting hot records after the temperature has gone up than not.

As a side note, if your cooling or warming trend is weak enough that a couple hot or cold years can completely upset it, then it’s not really very useful. So I’m kind of skeptical of things like 10-year plots that show we’re cooling, and then the next year we’re warming, and then the year after that, we’re cooling again. That’s noise, not a real, statistically-significant trend.

So looking at longer-term trends: Yes, the US is warmer than average, and has been for at least the last decade. Moreover, the warming is big enough that we see things happening that we wouldn’t expect to see if the US had not been warming.

Nigel Harris says:

July 11, 2012 at 1:17 pm

My understanding is that the record is counted if it is in the top third of all records up to that date, not if it is in the top third of all historical records for all time. It doesn’t make sense any other way, to me at least.

w.
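Which cutoff is used matters a great deal once there is a trend, and it may bear on Nigel's 5.15-versus-4.33 puzzle. A sketch on synthetic data comparing a whole-record top third with a running "top third of all values up to that date" rule; the data, trend size and seed are hypothetical, and whether NCDC actually uses the running rule is not settled in this thread:

```python
import random

def full_record_flags(series):
    """Hot if in the top third of the entire series."""
    cutoff = sorted(series)[len(series) * 2 // 3]
    return [x >= cutoff for x in series]

def running_flags(series):
    """Hot if in the top third of all values up to and including that month."""
    flags = []
    for i, x in enumerate(series):
        beaten_by = sum(1 for y in series[:i] if y > x)  # earlier months warmer than x
        flags.append(beaten_by < (i + 1) / 3)
    return flags

def fraction_hot(flags):
    return sum(flags) / len(flags)

rng = random.Random(7)
n = 116 * 13
no_trend = [rng.gauss(0, 1) for _ in range(n)]
with_trend = [0.002 * i + rng.gauss(0, 1) for i in range(n)]  # hypothetical trend

print("full record, trend:", round(fraction_hot(full_record_flags(with_trend)), 3))
print("running,  no trend:", round(fraction_hot(running_flags(no_trend)), 3))
print("running,     trend:", round(fraction_hot(running_flags(with_trend)), 3))
```

Under the whole-record rule the share of hot months is pinned at about a third whatever the data do; under the running rule a trend pushes it well above a third, and with it the mean count per 13-month window.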

The maximum likelihood estimator for lambda for a Poisson population is:

lambda(MLE) = (1/n) * sum[i=1 to n](k_i), i.e. the sample mean of the observed counts k_i (http://en.wikipedia.org/wiki/Poisson_distribution#Maximum_likelihood)
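In code, the closed-form estimator is just the mean of the counts, which is the value Willis switches to in his PS above (5.15 rather than the fitted 5.21). A minimal sketch on hypothetical counts (not the NCDC data), checked against a brute-force grid search of the Poisson log-likelihood:

```python
import math

def poisson_log_likelihood(lam, counts):
    """Sum of log P(K = k) over the observed counts, for K ~ Poisson(lam)."""
    return sum(k * math.log(lam) - lam - math.lgamma(k + 1) for k in counts)

def poisson_mle(counts):
    """Closed-form maximum likelihood estimate of lambda: the sample mean."""
    return sum(counts) / len(counts)

counts = [3, 5, 6, 4, 7, 5, 8, 4]  # hypothetical window counts
lam_hat = poisson_mle(counts)       # 42 / 8 = 5.25

# A grid search over lambda in [1, 10] lands on the same value
grid = [i / 1000 for i in range(1000, 10001)]
lam_grid = max(grid, key=lambda lam: poisson_log_likelihood(lam, counts))
print(lam_hat, lam_grid)
```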