Guest Post by Willis Eschenbach

Although it sounds like the title of an adventure movie like the “Bourne Identity”, the Bern Model is actually a model of the sequestration (removal from the atmosphere) of carbon by natural processes. It allegedly measures how fast CO2 is removed from the atmosphere. The Bern Model is used by the IPCC in their “scenarios” of future CO2 levels. I got to thinking about the Bern Model again after the recent publication of a paper called “Carbon sequestration in wetland dominated coastal systems — a global sink of rapidly diminishing magnitude” (paywalled here ).

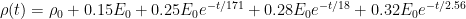

Figure 1. Tidal wetlands. Image Source

In the paper they claim that a) wetlands are a large and significant sink for carbon, and b) they are “rapidly diminishing”.

So what does the Bern model say about that?

Y’know, it’s hard to figure out what the Bern model says about anything. This is because, as far as I can see, the Bern model proposes an impossibility. It says that the CO2 in the air is somehow partitioned, and that the different partitions are sequestered at different rates. The details of the model are given here.

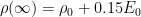

For example, in the IPCC Second Assessment Report (SAR), the atmospheric CO2 was divided into six partitions, containing respectively 14%, 13%, 19%, 25%, 21%, and 8% of the atmospheric CO2.

Each of these partitions is said to decay at different rates given by a characteristic time constant “tau” in years. (See Appendix for definitions). The first partition is said to be sequestered immediately. For the SAR, the “tau” time constant values for the five other partitions were taken to be 371.6 years, 55.7 years, 17.01 years, 4.16 years, and 1.33 years respectively.
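To make the partition scheme concrete, here is a minimal sketch that sums the five decaying partitions using only the fractions and time constants quoted above. The function name is made up for illustration, and the first partition (14%) is left out because the text gives no time constant for it, so this is not a full implementation of the Bern Model.

```python
import math

# Partition fractions and time constants (years) as quoted above for the SAR.
# The first partition (14%) has no time constant in the text, so it is omitted;
# only the five decaying partitions are summed here.
FRACTIONS = [0.13, 0.19, 0.25, 0.21, 0.08]
TAUS = [371.6, 55.7, 17.01, 4.16, 1.33]


def decaying_partitions_remaining(t_years):
    """Fraction of a CO2 pulse still airborne in the five decaying partitions."""
    return sum(a * math.exp(-t_years / tau) for a, tau in zip(FRACTIONS, TAUS))


if __name__ == "__main__":
    for t in (0, 1, 10, 50, 100, 371.6):
        print(f"t = {t:>6} yr: {decaying_partitions_remaining(t):.3f}")
```

At long times the sum is dominated by the 371.6-year term, which is the behaviour questioned in the rest of this post.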

Now let me stop here to discuss, not the numbers, but the underlying concept. The part of the Bern model that I’ve never understood is, what is the physical mechanism that is partitioning the CO2 so that some of it is sequestered quickly, and some is sequestered slowly?

I don’t get how that is supposed to work. The reference given above says:

CO2 concentration approximation

The CO2 concentration is approximated by a sum of exponentially decaying functions, one for each fraction of the additional concentrations, which should reflect the time scales of different sinks.

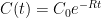

So theoretically, the different time constants (ranging from 371.6 years down to 1.33 years) are supposed to represent the different sinks. Here’s a graphic showing those sinks, along with approximations of the storage in each of the sinks as well as the fluxes in and out of the sinks:

Now, I understand that some of those sinks will operate quite quickly, and some will operate much more slowly.

But the Bern model reminds me of the old joke about the thermos bottle (Dewar flask), that poses this question:

The thermos bottle keeps cold things cold, and hot things hot … but how does it know the difference?

So my question is, how do the sinks know the difference? Why don’t the fast-acting sinks just soak up the excess CO2, leaving nothing for the long-term, slow-acting sinks? I mean, if some 13% of the CO2 excess is supposed to hang around in the atmosphere for 371.6 years … how do the fast-acting sinks know to not just absorb it before the slow sinks get to it?

Anyhow, that’s my problem with the Bern model—I can’t figure out how it is supposed to work physically.

Finally, note that there is no experimental evidence that will allow us to distinguish between plain old exponential decay (which is what I would expect) and the complexities of the Bern model. We simply don’t have enough years of accurate data to distinguish between the two.

Nor do we have any kind of evidence to distinguish between the various sets of parameters used in the Bern Model. As I mentioned above, in the IPCC SAR they used five time constants ranging from 1.33 years to 371.6 years (gotta love the accuracy, to six-tenths of a year).

But in the IPCC Third Assessment Report (TAR), they used only three constants, and those ranged from 2.57 years to 171 years.

However, there is nothing that I know of that allows us to establish any of those numbers. Once again, it seems to me that the authors are just picking parameters.

So … does anyone understand how 13% of the atmospheric CO2 is supposed to hang around for 371.6 years without being sequestered by the faster sinks?

All ideas welcome, I have no answers at all for this one. I’ll return to the observational evidence regarding the question of whether the global CO2 sinks are “rapidly diminishing”, and how I calculate the e-folding time of CO2 in a future post.

Best to all,

w.

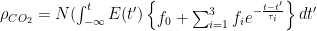

APPENDIX: Many people confuse two ideas, the residence time of CO2, and the “e-folding time” of a pulse of CO2 emitted to the atmosphere.

The residence time is how long a typical CO2 molecule stays in the atmosphere. We can get an approximate answer from Figure 2. If the atmosphere contains 750 gigatonnes of carbon (GtC), and about 220 GtC are added each year (and removed each year), then the average residence time of a molecule of carbon is something on the order of four years. Of course those numbers are only approximations, but that’s the order of magnitude.
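The residence-time arithmetic is just the size of the reservoir divided by the yearly exchange. A small sketch using the two approximate numbers above (the function name is only illustrative):

```python
def residence_time_years(stock_gtc, annual_flux_gtc):
    """Average residence time = amount in the reservoir / amount exchanged per year."""
    return stock_gtc / annual_flux_gtc


# 750 GtC in the atmosphere, about 220 GtC exchanged each year (figures from the text)
print(round(residence_time_years(750, 220), 1))  # 3.4
```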

The “e-folding time” of a pulse, on the other hand, which they call “tau” or the time constant, is how long it would take for the atmospheric CO2 levels to drop to 1/e (37%) of the atmospheric CO2 level after the addition of a pulse of CO2. It’s like the “half-life”, the time it takes for something radioactive to decay to half its original value. The e-folding time is what the Bern Model is supposed to calculate. The IPCC, using the Bern Model, says that the e-folding time ranges from 50 to 200 years.

On the other hand, assuming normal exponential decay, I calculate the e-folding time to be about 35 years or so based on the evolution of the atmospheric concentration given the known rates of emission of CO2. Again, this is perforce an approximation because few of the numbers involved in the calculation are known to high accuracy. However, my calculations are generally confirmed by those of Mark Jacobson as published here in the Journal of Geophysical Research.
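For readers who want to see how an e-folding time relates to a half-life, here is a minimal sketch assuming plain single-exponential decay. The helper names are illustrative, and the time constants are simply the figures mentioned above (about 35 years, and the 50 to 200 year range).

```python
import math


def fraction_remaining(t_years, tau_years):
    """Fraction of a pulse left after t years under simple exponential decay."""
    return math.exp(-t_years / tau_years)


def half_life_years(tau_years):
    """Half-life that corresponds to an e-folding time tau."""
    return tau_years * math.log(2)


if __name__ == "__main__":
    for tau in (35.0, 50.0, 200.0):
        print(
            f"tau = {tau:5.0f} yr: "
            f"left after one tau = {fraction_remaining(tau, tau):.3f}, "
            f"half-life = {half_life_years(tau):.1f} yr"
        )
```

Whatever the value of tau, the fraction left after one e-folding time is 1/e, and the half-life is shorter than tau by a factor of ln 2.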

There’s a massive CO2 sink that resides over Siberia during winter, it is rapidly ‘taken up’ by foliage during Spring and Summer.

We affect the partition in many different ways, on what we plant and harvest and what we do with the harvest. Numerous other biological systems do as well and none are fully understood.

excellent thought post Willis……..

I still can’t figure out how CO2 levels rose to the thousands ppm….

….and crashed to limiting levels

Without man’s help……….

The categories are fixed so that you can see net effects.

Their graph is made in a manner which would make readers not realize how much biomass growth occurs from human CO2 emissions.

Human emissions averaged around 27 billion tons a year of CO2 during the decade of 1999-2009 (on average 7 billion tons annually of carbon), which amounted to about 270 billion tons of CO2 added to the atmosphere. Meanwhile there was a measured increase in atmospheric CO2 levels of 19.4 ppm by volume, 155 billion tons by mass, which is only about 57% of the amount emitted.

If one looks at where the other 115 billion tons went, it was a mix of uptake by the oceans and it going into increased growth of biomass (carbon fertilization from higher CO2 levels) / soil.

Approximately 18% (49 billion tons CO2, 13 billion tons carbon) went into accelerated growth of biomass / soil, and about 25% went into the oceans.
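The bookkeeping in this comment can be checked with a few lines. This is only a sketch of the arithmetic using the figures quoted above; treating the ocean uptake as whatever is left after the biomass/soil share is an assumption made here for illustration.

```python
def decade_budget(emitted=270.0, airborne=155.0, biomass=49.0):
    """Split a decade of CO2 emissions (billion tons CO2) into shares.

    Assumption: everything removed from the air that did not go into
    biomass/soil is counted as ocean uptake.
    """
    removed = emitted - airborne
    ocean = removed - biomass
    return {
        "removed": removed,
        "airborne_share": airborne / emitted,
        "biomass_share": biomass / emitted,
        "ocean_share": ocean / emitted,
    }


if __name__ == "__main__":
    for name, value in decade_budget().items():
        print(f"{name}: {value:.3f}")
```

With these inputs the ocean share comes out a little under the quoted "about 25%", which is within the rounding of the other figures.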

To quote TsuBiMo: a biosphere model of the CO2-fertilization effect:

“The observed increase in the CO2 concentration in the atmosphere is lower than the difference between CO2 emission and CO2 dissolution in the ocean. This imbalance, earlier named the ‘missing sink’, comprises up to 1.1 Pg C yr–1, after taking land-use changes into account.” “The simplest explanation for the ‘missing sink’ is CO2 fertilization.”

http://www.int-res.com/articles/cr2002/19/c019p265.pdf

In fact, global net primary productivity as measured by satellites increased by 5% over the past three decades. And, for example, estimated carbon in global vegetation increased from approximately 740 billion tons in 1910 to 780 billion tons in 1990:

http://cdiac.esd.ornl.gov/pns/doers/doer34/doer34.htm

Other observations include those discussed at http://www.co2science.org/subject/f/summaries/forests.php

The categories are fixed so that one can import a sense of order and predictability to a collection of processes that lack both. I can just as easily categorize the residence time of foodstuffs in my refrigerator as beverage, pre-packaged, and leftovers. That doesn’t mean I can predict whether the salsa will become empty within three months or stick around to generate new life forms. It presumes a level of understanding of the “carbon budget” that doesn’t exist. But with such a model I can calculate how much milk will be in my fridge in 2050. Regardless of that result, the dried clump of strawberry jam on the third shelf won’t be inconsistent with my projection.

Turtles, indeed.

Ah! A warmist recently told me that the residence time of CO2 is 2 to 500 years. I replied that that is quite the error bar. He probably had looked up that Bern model and got his information from there. But it really doesn’t make any sense and I would file it under make-work schemes or epicycles. A product of the Warmist Works Progress Administration.

“what is the physical mechanism that is partitioning the CO2 so that some of it is sequestered quickly, and some is sequestered slowly?”

“PV=nRT” basically dashes such a fantastic model on the rocks. A classic example of the modeller’s propensity to assume something corny in order to make the model do their bidding. One school assumes “well mixed” while its class clown decides to countermand the principles behind partial pressures. Hocus-pocus, tiddly-okus, next it’ll be a plague of locusts.

“Calculating” to 4.16 or 1.33 years indicates a remarkable “accuracy” of 3.65 days of residence time for “some” CO2. The oceans do NOT absorb CO2 from the atmosphere. The oceans have “vast pools of liquid CO2 on the ocean floors” which keep constant supply of dissolved CO2 and other elemental gases in the water column ready to disperse. See Timothy Casey’s excellent “Volcanic CO2” posted at http://geologist-1011.net. While there, visit the “Tyndall 1861” actual experiment with Casey’s endnotes and the original translation of Fourier on greenhouse effect. Reality is different than the forced orthodoxy.

The average time molecules remain in the atmosphere as a gas is probably a matter of hours. Think how fast a power plant plume vanishes to a global background level. Think about how clouds absorb and transport CO2. It is the different lengths of reservoir changing cycles that is changing the amount of gaseous CO2 we measure in the atmosphere. I have evidence that most of anthropogenic emissions cycle through the environment in about ten years and, at present, contribute less than 10% to atmospheric levels. Click on my name for details.

I’m way out of my depth here, but The Hockey Schtick wrote about The Bern Model with advice from “Dr Tom V. Segalstad, PhD, Associate Professor of Resource and Environmental Geology, The University of Oslo, Norway, who is a world expert on this matter.”

http://hockeyschtick.blogspot.co.uk/2010/03/co2-lifetime-which-do-you-believe.html

Imagine you have three tanks full of water: A, B, and C. A and B are connected with a large pipe. A and C are connected with a narrow pipe. The water levels start off equal.

We dump a load of water into tank A. Quite quickly, the levels in tanks A and B equalise, A falling and B rising. But the level in tank C rises only very slowly. Tank A drops quickly to match B, but then continues to drop far more slowly until it matches C.

Dumping an extra load in A while this is going on would again lead to another fast drop while it matched B again. It ‘knows’ which is which because of the amount in tank B.

I gather the BERN model is more complicated, and the parameters listed are an ansatz obtained by curve-fitting a sum of exponentials to the results of simulation. But I think the choice of a sum of exponentials to represent it is based on the intuition of multiple buffers, like the tanks.
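The three-tank picture can be tried out numerically. The sketch below is a toy under stated assumptions, not the Bern Model: the tanks have equal cross-sections, flow through each pipe is proportional to the level difference, and the pipe constants and pulse size are arbitrary illustrative values.

```python
def simulate_tanks(t_end, dt=0.01, k_large=1.0, k_narrow=0.01, pulse=3.0):
    """Euler integration of three connected tanks.

    Levels are measured relative to the common starting level, so the pulse
    dumped into tank A is the only non-zero starting value.
    k_large: A<->B pipe constant, k_narrow: A<->C pipe constant (both assumed).
    """
    a, b, c = pulse, 0.0, 0.0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        flow_ab = k_large * (a - b) * dt   # water moved from A to B this step
        flow_ac = k_narrow * (a - c) * dt  # water moved from A to C this step
        a -= flow_ab + flow_ac
        b += flow_ab
        c += flow_ac
    return a, b, c


if __name__ == "__main__":
    for t in (0, 1, 5, 50, 200, 1000):
        a, b, c = simulate_tanks(t)
        print(f"t = {t:>4}: A = {a:.3f}  B = {b:.3f}  C = {c:.3f}")
```

Tank A drops quickly until it matches B, then both drift slowly towards C, so the level in A looks like a fast exponential plus a slow one.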

John Daly wrote some stuff about this – I haven’t worked my way through it, so I can’t comment on validity, but I thought you might be interested.

http://www.john-daly.com/dietze/cmodcalc.htm

“Partitioning” is trivial

The simple case of a single exponent corresponds to a first-order linear equation, but this does not describe the complex nature.

CO2 evolves according to a higher-order linear equation (or a system of first-order linear equations, which is the same thing). Very reasonable. That is where the “partitioning” comes from.

You write down these equations and look for eigenmodes. These are the exponents. The IPCC effectively claims there are 6 first-order equations or a single 6th-order linear equation. OK, no objection, although there can be even more eigenmodes, but let us assume these are the major 6.

Now, the general solution is a sum of these exponents with ARBITRARY pre-factors at each exponent. How to define these pre-factors?

The pre-factors are defined by the initial conditions: the particular CO2-level and 5 (6-1) derivatives (!). IPCC claims, these are 14%, 13%, 19%, 25%, 21%, and 8%. What does it mean?

Effectively IPCC claims to know the 5th derivative of CO2 population down to 1% accuracy level!!!

Sorry, as a physicist I cannot buy such accuracy in derivatives of an experimental value.
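The eigenmode argument is easiest to see in the smallest possible case. The sketch below is a hypothetical two-reservoir toy (equal-sized boxes A and B exchanging at rate k), chosen here only because its modes can be written down by hand; it is not the system the IPCC uses. The sum A + B is conserved (a mode with exponent zero) and the difference A - B decays at rate 2k, so the pre-factors follow directly from the initial conditions.

```python
import math


def two_box_level(t, a0, b0, k):
    """Analytic solution for box A of dA/dt = -k(A - B), dB/dt = +k(A - B).

    The pre-factors of the two eigenmodes are fixed by the initial values:
      constant mode  (exponent 0):    (a0 + b0) / 2
      decaying mode  (exponent -2k):  (a0 - b0) / 2
    """
    constant_prefactor = (a0 + b0) / 2.0
    decaying_prefactor = (a0 - b0) / 2.0
    return constant_prefactor + decaying_prefactor * math.exp(-2.0 * k * t)


if __name__ == "__main__":
    # Illustrative numbers only: a pulse of 1.0 in box A, nothing in box B.
    for t in (0, 1, 5, 20):
        print(f"t = {t:>2}: A = {two_box_level(t, 1.0, 0.0, 0.1):.3f}")
```

With more reservoirs there are more modes and more pre-factors, and each pre-factor needs its own piece of information about the starting state, which is the point the comment makes about derivatives.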

Two words… Occam’s Razor

CO2 is determined by climatic factors. Temperature-independent CO2 fluxes into/out of the atmosphere (especially minor ones like the human input) are compensated by the system (oceans).

If we could magically remove 100 ppm of the CO2 from the atmosphere in one day, what would be the transient system response (after 10 days, 1 month, a year…)?

Forgot to add something.

The IPCC claims to use the Bern model to find these “prefactors” in simulations with a “CO2-impulse”.

The point is, the linear exponential solutions are valid only in the vicinity of an equilibrium. This means the IPCC must use an infinitesimal CO2-impulse to define the system response.

What does the IPCC do instead?

They assume the “CO2-impulse” to be an instantaneous combustion of ALL POSSIBLE FOSSIL FUELS.

The response is in no way linear then and the results are just crap.

They even introduce some model for “temperature increase” due to higher CO2. The higher temperature means there is less absorption of CO2 by oceans etc…

This is not a science, but a clear misuse of it.

The idea CO2 is partitioned in any way sounds like complete bull to me – CO2 is quickly well mixed in the atmosphere. But it’s possible some CO2 sinks could be diminishing, and that might help explain some of the increase in atmospheric concentration (plus, as others have pointed out, higher temperatures mean less absorption by the oceans).

I don’t think any discussion of the carbon cycle should be without mention of Salby’s work, if you haven’t seen it:

http://youtu.be/YrI03ts–9I

[REPLY: It was discussed here on April 19. -REP]

The basic assumption of this model seems to be that there is some “perfect” amount of CO2 that the earth tries to return to. Otherwise, if adding CO2 causes it to slowly go away, we should have no CO2 now, right? Thus, they must believe that it goes down to this “perfect” amount and just stays there. Why does it stop diminishing? What mechanism could cause it to do so? For that matter, what mechanism would cause it to try and return to some “perfect” amount?

If CO2 goes down to this “perfect” amount and just stays there, CO2 over time should mostly be at that level all throughout history. Is it? In fact, it goes up and down all the time. Why is that? In fact, even in recent history it has gone up and down. That being the case, how can we even vaguely estimate how fast it will do so, much less to the accuracy they claim here? In fact, since we know that CO2 goes up and down, if it does go down over time, we should be able to tell over a long enough time period how fast it goes down on average. Since we do have records of CO2 in the past, we should be able to compare this idea to the real world, and if what they are saying is true, that it goes down steadily over time (despite the actual records that say it does not), should we not be able to check this real world record against this model? Has it been checked? If it has not been checked, is this science? If it has not been checked, this model is fiction.

Also, their idea is that if CO2 increases, it then decreases. Well, where does it go? The only place it can go is into the ground as oil, coal, natural gas, etc. Thus if we burn these, we are merely returning to the atmosphere what came from the atmosphere. This should return to the atmosphere what they claim here is being steadily removed. We need to keep doing this, otherwise we will run completely out of CO2, right? If this is not true, they need to demonstrate that CO2 will go down to this mythical “perfect” amount and just stay there.

Also, if CO2 is decreasing all the time, as they claim, yet it goes up and down over time (and note that the world does not end when it does), then something must be adding it. What, and is it enough to keep us from running out completely? Since we know that in the distant past there was far more CO2 (yet life flourished, go figure), yet now it is near to the level where all life on earth will die, we cannot rely on whatever natural processes add CO2 to bring it back up, since it obviously is not working; CO2 is dangerously low. We need to invent a way to return the CO2 back to the atmosphere. According to the IPCC, we have, and now they are trying to stop us from doing what this model claims we must do to survive.

Once you understand the logical underlying assumption of this, that there must be a “perfect” level of CO2 that the earth tries to return to, the actual logic is:

The history of the earth shows that there is no perfect amount of CO2 that the earth tries to return to.

We the IPCC however, say that there is.

We say that because we wish it to be so.

We wish it to be so because if it is true, we can tax you and regulate you if it is not perfect.

We are the only authority on when it is perfect.

Ignore that real world behind the curtain!

Peter Huber used to make the controversial claim that North America is a carbon sink, based on a 1998 article in Science. This was based on prevailing winds blowing from West to East, with higher concentrations of CO2 found on the West coast than the East. Later papers doing carbon inventories have disputed this. Huber responded that there were plenty of ways to miss inventory. Whoever is right, Huber makes a good case that the US does a better job than the rest of the world of replacing farmland with trees.

Isn’t it just like a bunch of resistors in parallel? 1/R = 1/r1 + 1/r2 + 1/r3 …

FTA: “Anyhow, that’s my problem with the Bern model—I can’t figure out how it is supposed to work physically.”

It is because the process of CO2 sequestration is not solved by an ordinary differential equation in time, but by a partial derivative diffusion equation. It has to do with the frequency of CO2 molecules coming into contact with absorbing reservoirs (a.k.a. sinks). If the atmospheric concentration is large, then molecules are snatched from the air frequently. If it is smaller, then it is more likely for an individual molecule to just bob and weave around in the atmosphere for a long time without coming into contact with the surface.

This gives rise to a so-called “fat-tail” response. Such a fat-tail response can be approximated as a sum of exponential responses with discrete time constants.

I am not, of course, advocating the Bern model parameters. The modeling process is reasonable and justifiable, but the parameterization is basically pulled out of a hat.

What we actually see in the data is that CO2 rate of change is effectively modulated by the difference in global temperatures relative to a particular baseline. What is more likely? That CO2 rate of accumulation responds to temperatures, or that temperatures respond to the rate of change of CO2? The latter would require that temperatures be independent of the actual level of CO2, which is clearly not correct. Hence, we must conclude that CO2 is responding to temperature, and not the other way around.

In case anyone misses the point, let me spell the implications out clearly: fat tail or no, the response time for sequestering the majority of anthropogenic CO2 emissions is relatively short, and the system is having no trouble handling it. CO2 levels are being dictated by temperatures, by nature, not by humans.

Interesting, Willis. The problem reminds me of pharmacokinetics, where the fate of drugs/toxins in the body is studied. I wish I knew enough about pk to be more specific; unfortunately it was only ancillary to my field of study. Any toxicologists around?

Thanks. I thought I was the only one that didn’t believe this fallacy.

The fast processes will finish with their CO2, then go after the next batch!

The alarmists just want an excuse to say CO2 remains in the atmosphere for 100 years, which it can’t possibly do.

The different timings are only relevant for the first 371.6 years of the model; after that they are totally irrelevant, as all sinks will be working. In fact, in the real world they will be totally irrelevant, as all the sinks will be working all of the time. It’s just padding for the report to make it look more technical.

CO2 works like resistors in parallel.

1/RT=1/R1 + 1/R2+1/R3

So the total resistance can’t be more than the smallest resistor.

for CO2 the 1/2 life of the total can’t be greater than the 1/2 life which is shortest.
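The parallel-resistor analogy can be put into numbers. The sketch below assumes, as this comment does, that every sink acts on the whole pool at once, so the rates (1/tau) add; it uses the five time constants quoted in the post for the SAR. This is the commenter's picture, not how the Bern Model itself combines them.

```python
# Time constants (years) quoted in the post for the SAR.
TAUS = [371.6, 55.7, 17.01, 4.16, 1.33]


def combined_time_constant(taus):
    """Time constant when all sinks drain one shared pool: rates 1/tau add."""
    return 1.0 / sum(1.0 / tau for tau in taus)


if __name__ == "__main__":
    print(f"combined tau = {combined_time_constant(TAUS):.2f} years")
    print(f"shortest individual tau = {min(TAUS):.2f} years")
```

Under that assumption the combined time constant comes out a little under one year, shorter than even the fastest individual sink, just as the total resistance of parallel resistors is below the smallest one.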

The Bern Model needs to be introduced to the Law of Entropy (diffusion of any element or compound within a gas or liquid to equal distribution densities). And it should also be introduced to osmosis and other biological mechanisms for absorbing elements and compounds across membranes.

In fact, it seems to need a serious dose of reality

Bart says:

May 6, 2012 at 11:51 am

I want to repeat this part of my post, because people may miss it in with the other stuff, and I think it is important.

What we actually see in the data is that CO2 rate of change is effectively modulated by the difference in global temperatures relative to a particular baseline.

What is more likely? That CO2 rate of accumulation responds to temperatures, or that temperatures respond to the rate of change of CO2? The latter would require that temperatures be independent of the actual level of CO2, which is clearly not correct. Hence, we must conclude that CO2 is responding to temperature, and not the other way around.

In case anyone misses the point, let me spell the implications out clearly: fat tail or no, the response time for sequestering the majority of anthropogenic CO2 emissions is relatively short, and the system is having no trouble handling it. CO2 levels are being dictated by temperatures, by nature, not by humans.

Bart says:

May 6, 2012 at 11:51 am

Thanks, Bart. That all sounds reasonable, but I still don’t understand the physics of it. What you have described is the normal process of exponential decay, where the amount of the decay is proportional to the amount of the imbalance.

What I don’t get is what causes the fast sequestration processes to stop sequestering, and to not sequester anything for the majority of the 371.6 years … and your explanation doesn’t explain that.

w.

Surely they don’t seriously use the sum of five or six exponentials, Willis. Nobody could be that dumb. The correct ordinary differential equation for CO_2 concentration $C$, one that assumes no sources and that the sinks are simple linear sinks that will continue to scavenge CO_2 until it is all gone (so that the “equilibrium concentration” in the absence of sources is zero; neither is true, but it is pretty easy to write a better ODE), is:

$$\frac{dC}{dt} = -\sum_i R_i C$$

Interpretation: Since CO_2 doesn’t come with a label, EACH process of removal is independent and stochastic and depends only on the net atmospheric CO_2 concentration. Suppose $R_1$ is the rate at which the ocean takes up CO_2. Left to its own devices and with only an oceanic sink, we would have:

$$C(t) = C_0 e^{-R_1 t}$$

where $C_0$ is the constant of integration. I mean, this is first year calculus. I do this in my sleep. The inverse of $R_1$ is the exponential decay constant, the time required for the original CO_2 level to decay to $1/e$ of its original value (for any original value $C_0$). If there are two processes running in parallel, the rate for each is independent: if (say) trees remove CO_2 at rate $R_2$, that process doesn’t know anything about the existence of oceans and vice versa, and both remove CO_2 at a rate proportional to the concentration in the actual atmosphere that runs over the sea surface or leaf surface respectively. The same diffusion that causes CO_2 to have the same concentration from the top of the atmosphere to the bottom causes it to have the same concentration over the oceans or over the forests, certainly to within a hair. So both running together result in:

$$C(t) = C_0 e^{-(R_1 + R_2) t}$$

If (say) trees and the ocean both remove CO_2 at the same independent rate, the two together remove it at twice the rate of either alone, so that the exponential time constant is 1/2 what it would have been for either alone. If there are five such independent sinks (where by independent I mean independent chemical processes), all with equal rate constants $R$, the exponential time constant is 1/5 of what it would be for one of them alone. This is not rocket science.

This is completely, horribly different from what you describe above. To put it bluntly:

$$C_0 e^{-(R_1 + R_2) t} \neq C_0 \left( a_1 e^{-R_1 t} + a_2 e^{-R_2 t} \right), \qquad a_1 + a_2 = 1$$

Compare this when $R_1 = R_2 = R$ and $a_1 = a_2 = 1/2$:

$$C(t) = C_0 e^{-2Rt}$$

(correct) versus

$$C(t) = C_0 \left( \tfrac{1}{2} e^{-Rt} + \tfrac{1}{2} e^{-Rt} \right) = C_0 e^{-Rt}$$

(incorrect). The latter has exactly twice the correct decay time, and makes no physical sense whatsoever given a global pool of CO_2 without a label. The person that put together such a model for CO_2, if your description is correct, is a complete and total idiot.

Note that this would not be the case if one were looking at two different processes that operated on two different molecular species. If one had one process that removed CO_2 and one that removed O_3, then the rate at which one lowered the “total concentration of CO_2 + O_3” would be a sum of independent exponentials, because each would act only on the partial pressure/concentration of the one species. However, using a sum of exponentials for independent chemical pathways depleting a shared common resource is simply wrong. Wrong in a way that makes me very seriously doubt the mathematical competence of whoever wrote it. Really, really wrong. Failing introductory calculus wrong. Wrong, wrong, wrong.
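The contrast drawn in this comment, rates adding inside one exponential versus a sum of separate exponentials, can be checked numerically. This is a minimal sketch with made-up function names and arbitrary illustrative rates; the equal split of the pool in the second function is an assumption used only to make the two cases comparable.

```python
import math


def shared_pool(t, c0, rates):
    """All sinks draw on one unlabeled pool: the rates add in the exponent."""
    return c0 * math.exp(-sum(rates) * t)


def partitioned_sum(t, c0, fractions, rates):
    """Each fraction of the pool decays only through its own sink."""
    return c0 * sum(f * math.exp(-r * t) for f, r in zip(fractions, rates))


if __name__ == "__main__":
    R = 0.1  # illustrative rate, per year
    for t in (0, 5, 10, 20):
        shared = shared_pool(t, 1.0, [R, R])
        split = partitioned_sum(t, 1.0, [0.5, 0.5], [R, R])
        print(f"t = {t:>2}: shared pool = {shared:.3f}, partitioned = {split:.3f}")
```

With two equal rates the shared pool decays as exp(-2Rt) while the partitioned sum decays as exp(-Rt), so the partitioned version takes twice as long to reach any given level.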

(Dear Anthony or moderator — I PRAY that I got all of the latex above right, but it is impossible to change if I didn’t. Please try to fix it for me if it looks bizarre.)

rgb

Nullius in Verba says:

May 6, 2012 at 11:19 am

My thanks for your explanation. That was my first thought too, Nullius. But for it to work that way, we have to assume that the sinks become “full”, just like your tank “B” gets full, and thus everything must go to tank “C”.

However, since the various CO2 sinks have continued to operate year after year, and they show no sign of becoming saturated, that’s clearly not the case.

So what we have is more like a tank “A” full of water. It has two pipes coming out the bottom, a large pipe and a narrow pipe.

Now, the flow out of the pipe is a function of the depth of water in the tank, so we get exponential decay, just as with CO2.

But what they are claiming is that not all of the water runs out of the big pipe, only a certain percentage. And after that percentage has run out, the remaining percentage only drains out of the small pipe, over a very long time … and that is the part that seems physically impossible to me.

I’ve searched high and low for the answer to this question, and have found nothing.

w.

What about rain water, which, in its passage through the air dissolves many of the soluble gases e.g. CO2 present in the atmosphere, and which as part of ‘river waters’ eventually makes its way into the oceans?

River and Rain Chemistry

Book: “Biogeochemistry of Inland Waters” – Dissolved Gases

“The IPCC, using the Bern Model, says that the e-folding time ranges from 50 to 200 years.”

**********************

Strikes me as a pretty wide ranging estimate. More like a ‘WAG’.

I file this Bern Model under “more BAF (Bovine Academic Flatulence)”.

The physiology of scuba diving divides body tissues into different categories, with different “half-lives”, or nitrogen absorption rates. Some tissues absorb and release nitrogen rapidly, others more slowly; they are given different diffusion coefficients.

Nitrogen absorbed in your tissues in diving is the cause of the bends.

Maybe the Bern Conspiracy is thinking that some absorption mechanisms operate at different rates than others. How fast do forests absorb CO2 compared to oceans? etc. Perhaps that is what they are thinking.

mfo says:

May 6, 2012 at 11:19 am

mfo, take a re-read of my appendix above. The author of the hockeyschtick article is conflating the residence time and the e-folding time. As a result, he sees one person saying four years or so for residence time, and another person saying 50 to 200 years for e-folding time, and thinks that there is a contradiction. In fact, they are talking about two totally separate and distinct measurements, residence time and e-folding time.

w.

Willis Eschenbach says:

May 6, 2012 at 12:07 pm

“What I don’t get is what causes the fast sequestration processes to stop sequestering, and to not sequester anything for the majority of the 371.6 years … and your explanation doesn’t explain that.”

The best I can tell you is what I stated: “It has to do with the frequency of CO2 molecules coming into contact with absorbing reservoirs (a.k.a. sinks). If the atmospheric concentration is large, then molecules are snatched from the air frequently. If it is smaller, then it is more likely for an individual molecule to just bob and weave around in the atmosphere for a long time without coming into contact with the surface.” The link I gave explains it from a mathematical viewpoint.

rgbatduke says:

May 6, 2012 at 12:12 pm

“The correct ordinary differential equation…”

It’s a PDE, not an ODE. See comment at May 6, 2012 at 11:51 am.

About 1/2 of the annual human emissions are absorbed each year. If they weren’t, the growth in CO2 as a % of total would be growing, which it isn’t.

Assuming an exponential rate of uptake, we have a series something like this:

1/2 = 1/4 + 1/8 + 1/16 + 1/32 ….

With each year absorbing 1/2 of the residual of the previous, to match the rate of the total.

ie: R = R^2 + R^3 + R^4 … R^n , where 0 < R infinity.

What this means is that tau is 2 years. 1/4 + 1/8 = 0.25 + 0.125 = 0.375 = approx 1/e
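A quick numeric check of the series argument can help here. This is only a sketch, assuming (as the comment does) that a fixed half of whatever remains is removed every year; the function names are made up for illustration.

```python
import math

def remaining_fraction(years, yearly_uptake=0.5):
    """Fraction of an initial pulse still airborne after `years` whole years,
    assuming a fixed share of whatever remains is removed every year."""
    return (1.0 - yearly_uptake) ** years

def e_folding_time(yearly_uptake=0.5):
    """Years needed for the remainder to fall to 1/e under the same assumption."""
    return -1.0 / math.log(1.0 - yearly_uptake)

# Partial sum of 1/4 + 1/8 + 1/16 + ... (the series in the comment).
partial_sum = sum(0.5 ** n for n in range(2, 40))

print(partial_sum)
print([remaining_fraction(n) for n in range(4)])
print(e_folding_time())
```

Under that assumption the series sums to 1/2, the remainder after two years is 1/4, and the e-folding time works out to 1/ln 2, roughly 1.44 years.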

The mathematical and physical ability of climate scientists appears to be very poor.

The worst case is the assumption by Houghton in 1986 that a gas in Local Thermodynamic Equilibrium is a black body. This in turn implies that the Earth’s surface, in radiative equilibrium, is also a black body, hence the 2009 Trenberth et al. energy budget claiming 396 W/m^2 IR radiation from the earth when the reality is presumably 63 of which 23 is absorbed by the atmosphere.

The source of this humongous mistake is here: http://books.google.co.uk/books?id=K9wGHim2DXwC&pg=PA11&lpg=PA11&dq=houghton+schwarzschild&source=bl&ots=uf0NxopE_H&sig=8vlpyQINiMyH-IpQrWJF1w21LQU&hl=en&sa=X&ei=6Z2mT7XyO-Od0AWX3LGTBA&ved=0CGMQ6AEwBA#v=onepage&q&f=false

Here is the [good] Wiki write-up: http://en.wikipedia.org/wiki/Thermodynamic_equilibrium

‘In a radiating gas, the photons being emitted and absorbed by the gas need not be in thermodynamic equilibrium with each other or with the massive particles of the gas in order for LTE to exist….. If energies of the molecules located near a given point are observed, they will be distributed according to the Maxwell-Boltzmann distribution for a certain temperature.’

So, the IR absorption in the atmosphere has been exaggerated by 15.5 times. The carbon sequestration part is no surprise; these people are totally out of their depth so haven’t fixed the 4 major scientific errors in the models.

And then they argue that because they measure ‘back radiation’ by pyrgeometers, it’s real. They have even cocked this up: a radiometer has a shield behind the detector to stop radiation from the other direction hitting the sensor assembly. So, assuming zero temperature gradient, the signal they measure is an artefact of the instrument because in real life it’s zero. What it measures is temperature convolved with emissivity, and so long as the radiometer points down the temperature gradient, that imaginary radiation cannot do thermodynamic work!

This subject is really the limit of cooperative failure to do science properly. Even the Nobel prize winner has made a Big Mistake!

I’m not sure of the significance of the e-folding time. I presume it must be related to the rate at which a particular sink absorbs CO2, in which case why not use the absorption time? As for the partitions, I just don’t get it. Surely there must be a logical explanation in the text for the various percentages listed.

ferd berple says:

May 6, 2012 at 12:25 pm

“About 1/2 of the annual human emission are absorbed each year.”

In the IPCC framework, that 1/2 dissolves rapidly into the oceans. So, if you include both the oceans and the atmosphere in your modeling, there is no rapid net sequestration.

I agree with the IPCC on the former. But, I do not agree with them that the collective oceans and atmosphere take a long time to send the CO2 to at least semi-permanent sinks.

Bart says:

May 6, 2012 at 12:21 pm

No, the link you gave explains simple exponential decay from a mathematical viewpoint, which tells us nothing about the Bern model.

w.

Willis said: What I don’t get is what causes the fast sequestration processes to stop sequestering, and to not sequester anything for the majority of the 371.6 years … and your explanation doesn’t explain that.

================================

Either there are five different types of CO2…..or CO2 is not well mixed at all……or each “tau” has a low threshold cut off point

The only things that can have a low threshold cutoff point are biology

Cos the other sinks are fully saturated, or already absorbing all they can, so they leave the rest behind for the other longer sinks – then they can claim the natural sinks are saturated and we are evil despite it making no sense. It’s all based on the assumption that before 1850 CO2 levels were constant and everything lived in a perfect state of equilibrium, right at the limit of the system’s absorption capacity. Ahhh the world of climate science!

I must step away, so apologies if anyone has a question or challenge to anything I have written. Will check the thread later.

All I’ve got time for today is: Here we go again …

Welcome to the ‘troop’ which denies the measurable EM-nature of bipolar gaseous molecules, e.g. is studied in the field of IR Spectroscopy.

.

The rate of each individual sink is meaningless. What is important is that the total increase each year remains approximately 1/2 of annual emissions. Everything else is simply the good looking girls the magician uses to distract the audience from the sleight of hand.

As Willis points out, the thermos cannot know if the contents are hot or cold. Similarly, the sinks cannot know how long the CO2 has been in the atmosphere, so you cannot have differential rates depending on the age of the CO2 in the atmosphere.

1/2 the increased CO2 is absorbed each year. therefore 1/2 the residue must also be absorbed year to year. The sinks cannot tell if it is new CO2 or old CO2.

What are the mechanisms for removing CO2 from the atmosphere?

1. Absorption at the surface of seas and lakes

2. Absorption by plants through their leaves

3. Washed out by rain.

Any others?

What is the split in magnitude between these methods because I’d expect some sort of equilibrium for each of 1 & 2 whereas 3 seems to be one way.

Every year this lady named Mother Nature adds a whole lot of CO2 to the atmosphere, and every year she takes out a whole lot. The amount she adds in a given year is only loosely correlated with the amount she takes out, if at all. Year after year we add a little more CO2 to the atmosphere, still only around 4% of the average amount MN does. There is no basis for contending that the amount we add is responsible for what may or may not be an increased concentration with respect to recent history. All we know is that CO2 frozen in ice averages around 280 ppm, but this is definitely an average value as the ice can take hundreds of years to seal off. The only numbers in this entire discussion that have a basis in fact are 220 gT in and out, and an average four year residence time. All else is speculation/conjecture/WAG.

Occam’s Razor rules as always.

Bart says:

May 6, 2012 at 12:32 pm

In the IPCC framework, that 1/2 dissolves rapidly into the oceans.

Nonsense. The oceans cannot tell if that 1/2 comes from this year or last year. If the oceans rapidly absorb 1/2 of the CO2 produced this year, then they must also rapidly absorb 1/2 the remaining CO2 from last year in this year. And so on and so on, for each of the past years.

The ocean cannot tell when the CO2 was produced, so it cannot have a different rate for this years CO2 as compared to CO2 remaining from any other year.

rgbatduke says:

May 6, 2012 at 12:12 pm

Thanks, Robert, your contributions are always welcome. Unfortunately, that is exactly what they do, with the additional (and to my mind completely non-physical) restriction that each of the exponential decays only applies to a certain percentage of the atmospheric CO2. Take a look at the link I gave above, it lays out the math.

Your derivation above is the same one that I use for the normal addition of exponential decays. I collapse them all into the equivalent single decay with the appropriate time constant tau.

But they say that’s not happening. They say each decay operates only and solely on a given percentage of the CO2 … that’s the part that I can’t understand, the part that seems physically impossible.

w.
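The difference between the two readings can be put in numbers. The sketch below uses the SAR fractions and time constants quoted in the post; the function names are illustrative, and the 14% partition that the post describes as sequestered immediately is left out of both calculations (an assumption made here to keep the comparison like for like).

```python
import math

# SAR values as quoted in the post: (fraction of the pulse, tau in years).
# The 14% partition described as sequestered immediately is omitted.
SAR_PARTITIONS = [
    (0.13, 371.6),
    (0.19, 55.7),
    (0.25, 17.01),
    (0.21, 4.16),
    (0.08, 1.33),
]

def partitioned_remaining(t):
    """Bern-style sum: each sink acts only on its own fraction of the pulse."""
    return sum(a * math.exp(-t / tau) for a, tau in SAR_PARTITIONS)

def combined_tau():
    """Single time constant if every sink acts on the whole pool at once
    (the rates 1/tau simply add)."""
    return 1.0 / sum(1.0 / tau for _, tau in SAR_PARTITIONS)

def combined_remaining(t):
    """Remaining share of the same pool under the single combined decay."""
    total = sum(a for a, _ in SAR_PARTITIONS)
    return total * math.exp(-t / combined_tau())

for year in (0, 1, 10, 100):
    print(year, partitioned_remaining(year), combined_remaining(year))
```

If the five rates are simply added, the combined time constant comes out a little under one year, dominated by the fastest sink, whereas the partitioned sum keeps a long tail set by the 371.6 year term.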

_Jim says:

May 6, 2012 at 12:35 pm

_Jim and Mydogs, please, this thread is about CO2 sequestration and the Bern Model. Please take the blackbody discussion to some other more appropriate thread.

Thanks,

w.

ferd berple says:

May 6, 2012 at 12:39 pm

Bart says:

May 6, 2012 at 12:32 pm

In the IPCC framework, that 1/2 dissolves rapidly into the oceans.

ps: when I said “nonsense” I was referring only to the IPCC framework or any other mechanism that suggests different absorption rates based on the age of the CO2 in the atmosphere.

Willis Eschenbach says:

May 6, 2012 at 12:33 pm

“No, the link you gave explains simple exponential decay from a mathematical viewpoint, which tells us nothing about the Bern model.”

No, that’s not what it explains at all. It is a statistical model in which the probability distribution is exponential, to be used in finding a solution of the Fokker-Planck equation. The “decay” he shows is actually 1/(1+a*sqrt(t)), the reciprocal of 1 plus a constant times the square root of time.

Sorry I cannot explain it better right now. Must go.
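To see how differently the two functional forms behave, here is a small comparison of a plain exponential with the 1/(1+a*sqrt(t)) form quoted above. It is only a sketch: the values of `tau` and `a` are arbitrary placeholders, not figures taken from the linked derivation.

```python
import math

def exponential_decay(t, tau=1.0):
    """Simple exponential decay with time constant tau."""
    return math.exp(-t / tau)

def sqrt_law_decay(t, a=1.0):
    """The form quoted in the comment: 1 / (1 + a * sqrt(t))."""
    return 1.0 / (1.0 + a * math.sqrt(t))

for t in (0, 1, 10, 100):
    print(t, exponential_decay(t), sqrt_law_decay(t))
```

Both start at 1, but the square-root form falls off far more slowly at long times than any single exponential does.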

son of mulder asks:

“Any others?”

There is overwhelming evidence that the biosphere is expanding due to the increase in CO2. There is no doubt about that. Therefore, it is not in ‘equilibrium’. As ferd berple points out, more of the increase is absorbed every year.

In addition, the oceans contain an enormous quantity of calcium, which is utilized by biological processes to form protective shells for organisms. Those organisms require CO2. With more CO2 available, those organisms rapidly proliferate. When they die, they sink to the ocean floor, thus permanently removing CO2 from the atmosphere.

The planet is greening due to the added CO2, which is completely harmless at current and future concentrations. If CO2 increases from 0.00039 of the atmosphere to 0.00056 of the atmosphere, it is still a very minor trace gas. At such low concentrations plants are the only thing that will notice the change. And any incidental warming will be minor, and welcome.

I am particularly intrigued by the 17.01 year figure, I had no idea climate science was so precise.

son of mulder says:

May 6, 2012 at 12:36 pm

Any others?

================

bacteria…..the entire planet is one big biological filter

They are the most abundant…………..or we wouldn’t be here

Willis Eschenbach – “However, there is nothing that I know of that allows us to establish any of those numbers. Once again, it seems to me that the authors are just picking parameters.”

I would point out a very important part of the link you referenced (http://unfccc.int/resource/brazil/carbon.html):

“All IRFs are obtained by running the Bern model (HILDA and 4-box biosphere) as used in SAR or the Bern CC model (HILDA and LPJ-DGVM) as used in the TAR.” – (IRF’s -> impulse response functions, time factors, and final percentages)

The percentages you quoted are the resulting partial absorptions of various climate compartments resulting from running the Bern model, which is described in Siegenthaler and Joos 1992 (http://tellusb.net/index.php/tellusb/article/viewFile/15441/17291). In short, those percentages are results, not the inputs, of running the Bern model – presented by Joos et al for use by other investigators if they wish to apply the Bern model to their calculations.

Also note the statement that “Parties are free to use a more elaborate carbon cycle model if they choose.” Again – the results of the Bern model were offered as an available computational tool for further work.

I hate to say this, but you give the impression you did not fully read the UN reference (with percentages) that you opened the discussion with…

“My thanks for your explanation. That was my first thought too, Nullius. But for it to work that way, we have to assume that the sinks become “full”, just like your tank “B” gets full, and thus everything must go to tank “C”.”

That’s where I was going with the following paragraph. The buffer ‘tank B’ doesn’t stop absorbing because it’s full, it stops absorbing because the levels equalise. If you keep pouring water into tank A continuously, the water level keeps going up in B continuously. The tanks have infinite capacity, but the ratios of their capacities are much smaller.

The partitioning is the equivalent of the ratio of surface areas in each tank. If A and B are of equal size, then half the water in A flows into B and half stays where it is. If B is a lot bigger than A, then the level in A drops more and the level in B only rises a tiny amount heightwise, although the changes in volume are the same. The atmospheric analogy to surface area is the derivative of buffer content with respect to concentration.
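The tank picture is easy to simulate. The sketch below assumes two open tanks joined by a pipe, with flow proportional to the difference in water level; the pipe constant `k`, the time step and the function name are all hypothetical choices for illustration.

```python
def equalize(area_a, area_b, level_a, level_b=0.0, k=0.1, dt=0.01, steps=20_000):
    """Two open tanks joined by a pipe; flow is proportional to the level difference.
    Returns the final water levels in A and B."""
    for _ in range(steps):
        flow = k * (level_a - level_b) * dt   # volume moved from A to B this step
        level_a -= flow / area_a
        level_b += flow / area_b
    return level_a, level_b

# Equal surface areas: half of the water added to A ends up in B.
print(equalize(area_a=1.0, area_b=1.0, level_a=1.0))

# B nine times larger than A: the common level settles at one tenth.
print(equalize(area_a=1.0, area_b=9.0, level_a=1.0))
```

In both cases the flow stops because the levels equalise, not because B is full, and the share left in A is set by the ratio of the surface areas.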

I just went through that post with Salby video (didn’t have time before). Amazing that people still misunderstand the natural CO2-rise argument (like by Salby). Again:

The rise in the atmospheric CO2 is caused by warming climatic factors. The source is anthropogenic CO2, because it’s available in the atmosphere, but the cause is the warmth. Without anthropogenic CO2, oceans would have to release the necessary CO2 to achieve the climatically driven atmospheric CO2.

rgbatduke says:

May 6, 2012 at 12:12 pm

Robert, if I understand you correctly, I can’t tell you how happy I am to see you write this. That was my first response when I read the linked description of the Bern Model, it made my head explode. Unfortunately, I didn’t (and don’t) have your familiarity with the underlying math, so I had to set up some separate sample exponential decay streams in Excel, combine them, and then calculate iteratively the joint time constant … all of which very clumsily led me to the conclusion that you established with a few lines of math …

Since I did that, which was maybe five years ago, I’ve questioned it and people have given vague handwaving explanations for something that I, like you, consider to be “wrong, wrong, wrong” and without any physical justification.

w.

Willis, with all due respect, that is ALL I had (and have) time for; I have to ‘be somewhere’ shortly. Thanks. I ‘capeesh’/capisce/’savvy’ the expressed desire to stick-to-the-issue-presently-being-debated, too. Good luck with your present efforts, and with that I gotta run … 73’s

.

CO2 started increasing about 1750. Human emissions of CO2 more-or-less started at that time as well. Here is a chart of Human Emissions in CO2 ppm versus the amount of CO2 that actually stayed in the air each year (the airborne fraction – about 50%) since 1750.

http://img163.imageshack.us/img163/9917/co2emissandcon1750.png

Global CO2 levels only increased 1.94 ppm last year (to 390.45 ppm – a little lower than expected) while human emissions continued increasing to about 9.8 billion tonnes Carbon (about 4.6 ppm of CO2).

The natural sinks of CO2 have been increasing gradually over time so that they are now over 224 billion tons Carbon versus 220 billion tons in 1750. (the actual natural sinks and sources level might be closer to 260 billion tons going by some recent estimates of plant take-up but none-the-less).

http://img233.imageshack.us/img233/1323/carbonnatsinks1750.png

The amount that the natural sinks absorb each year seems to be directly related to the concentration in the atmosphere. There is an equilibrium level of CO2 at about 275 ppm in non-ice-age conditions (this is the level it has been at for the past 24 million years).

So the natural sinks and sources are in equilibrium (give or take) when the CO2 level is 275 ppm or the Carbon level in the atmosphere is 569 billion tonnes.

The rise of the natural sinks over the past 250 years indicate the sinks will absorb down or sequester about 1.0% per year of the excess over this 569 billion tons or 275 ppm.

The last 65 years have been very close to the 1.0% level. It doesn’t matter how much we add each year. The plants and oceans and soils will respond to how much is in the air, not how much we add. And it is about 1.0% of the excess Carbon in the atmosphere each year – Bern model or no.

http://img580.imageshack.us/img580/521/co2absor17502011.png

It will take about 150 years to draw down CO2 to the equilibrium of 275 ppm if we stop adding to the atmosphere each year. Alternatively, we can stabilize the level just by cutting our emissions by 50%.
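The drawdown rule described in this comment can be stepped forward year by year. This is a minimal sketch, assuming emissions stop entirely and the sinks remove a fixed 1.0% of the excess over 275 ppm each year; the function name and defaults are illustrative, with the starting level taken from the 390.45 ppm figure quoted above.

```python
def excess_after(years, start_ppm=390.45, equilibrium_ppm=275.0, uptake=0.01):
    """Excess CO2 (ppm above equilibrium) left after `years` with no new emissions,
    assuming the sinks remove a fixed share of the excess every year."""
    excess = start_ppm - equilibrium_ppm
    for _ in range(years):
        excess -= uptake * excess
    return excess

for years in (0, 50, 100, 150):
    print(years, round(excess_after(years), 2))
```

Changing `uptake` shows how sensitive the drawdown time is to the assumed percentage.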

To attempt to clarify what I wrote in my previous post (http://wattsupwiththat.com/2012/05/06/the-bern-model-puzzle/#comment-978032):

The exponentials, percentages, and time factors in the link Willis Eschenbach provided are approximations that reproduce the results of running the Bern model – much as 3.7 W/m^2 direct forcing per doubling of CO2 is the approximation of running radiative code such as MODTRAN, allowing quick calculations without having to run the model over and over again. I.e., the percentages and time factors are shorthand for the model.

As Joos stated in that link (http://unfccc.int/resource/brazil/carbon.html), the Bern model approximations were offered as a tool for use by others, and “Parties are free to use a more elaborate carbon cycle model if they choose.”

OK, I’ve looked at the model details via the provided link. They are frigging insane. I mean seriously, one should just take the article’s provided advice and ‘use a more complex model’ if we like. I like. Here is a very simple linear model. Still too simple, but at least I can justify its structure:

$$\frac{dC}{dt} = E - (R_o + R_b)\,C$$

Interpretation: We make CO_2 at some rate, $E$, that is completely independent of the concentration $C$. Because the atmosphere is vast, the percentage of CO_2 in the atmosphere can be considered to be the amount of CO_2 added divided by the total, where the latter basically does not vary, hence I don’t need to work harder and write an ODE that saturates at 100% CO_2. We are in the linear growth regime of a saturating exponential and I can assume that the concentration increases linearly at a constant rate independent of how much is already there (true until a significant fraction of the atmosphere is CO_2, utterly true when 400 ppm is CO_2).

However, CO_2 is removed from the atmosphere by processes that literally have a probability of removing a CO_2 molecule per unit time, given the presence of a molecule to remove. They are all proportional to the concentration. If I double the concentration, I present twice as many molecules per second to the e.g. surface of the sea as candidates for adsorption or to the stoma of a leaf as candidates for respiration and conversion into cellulose or sugar or whatever. They are all independent; if some particular wave removes a molecule of CO_2 at 11:37 today, a leaf on a tree in my back yard doesn’t know about it. The removed CO_2 has no label, and the jostling of molecules in the well-mixed warm air guarantees that one cannot even meaningfully deplete the local concentration of CO_2 by this sort of process, so both remain proportional to the same total concentration $C$. $R_o$ and $R_b$ are themselves directly proportional to (or more generally dependent on) other sensible quantities. We might expect the former to be proportional to the total surface area of the ocean for example, or to be related to some function of its area, its local temperature, and the concentration of CO_2 in the water already (which MIGHT vary appreciably geographically, as seawater is not well-mixed and it has its own sources and sinks). We might expect the latter to be dependent on the total surface area of CO_2 scavenging tree leaves, or more simply on total acreage of trees, again leaving open a more complex model that couples in the further modulation by water availability, hours of sunlight, and so on. Still, averaging over these latter probably makes this simple model already pretty reasonable.

The nice thing about this is that it is a well-known linear first order inhomogeneous ordinary differential equation, and can be directly integrated just as simply as the previous one. The result is (non-calculus people can take my word for it):

$$C(t) = C_\infty + A\,e^{-Rt}$$

where $R = R_o + R_b$ and where $C_\infty = E/R$ is the steady state concentration one arrives at eventually from any starting concentration, as long as $C_\infty \ll 1$ (see linearization requirement above). $A$ is a constant of integration used to set the initial conditions. If you started from no CO_2 in the air at all, you would make $A = -C_\infty$ so that $C(0) = 0$. We don’t start from zero, so we have to choose it such that $C(0)$ comes out right. At the steady state concentration, the sinks remove CO_2 at the rate $R\,C_\infty = E$, balancing the sources.

This simple linear response model shows precisely how one expects the eventual atmospheric concentration of CO_2 to saturate as long as saturation is achieved at low net concentrations of the total atmosphere such that the total relative fractions of N_2 and O_2 were the same and are still much larger than CO_2 taken together. And it is a well known and easily understood one. Equilibrium is $C_\infty = E/R$ and one approaches it exponentially with time constant $\tau = 1/R$. You can’t get much simpler than that. In fact, if you know $E$ and can measure $R$ one is done, no need for complex integrals over sums of exponential sinks times a source rate (what the hell does that even MEAN).

Now as models go this one sucks. It is arguably TOO simple, but it is easy to fix. For example, the ODE is the same if one has a source rate that isn’t constant but is itself a function of time: $E(t) = E_0 + a\,t$ for example, describing source production that is increasing linearly in time, or $E(t) = E_{max}\,(1 - e^{-t/\tau_E})$, a model that assumes source production is itself increasing towards an eventual peak at some rate with exponential time constant $\tau_E$. The former suffers from the flaw that it increases without bound. The latter is probably not terrible, but I’m guessing CO_2 sources are bursty and that this equation is a pretty crude approximation of the industrial revolution and eventual saturation of production/sources. Both suffer from the fact that CO_2 production might depend on the concentration $C$, although these production mechanisms can probably be handled with negative $R_i$.

A bigger problem is that for the ocean $R_o$ depends on the temperature! A warming ocean can be a CO_2 source (or a heavily reduced sink as its uptake is reduced). A cooling ocean can sequester more CO_2, faster. But even this is too simple because part of the eventual sequestration involves chemistry and biology that depend on temperature, sunlight, animal activity, ocean currents and nutrients… so it is with all of the rates. They themselves might be (indeed, almost certainly are) functions of time!

However, even if we put far more complex differential forms into this ODE, it remains pretty easy to solve without making any sort of formal approximation or decomposition. Matlab lets one program it in and solve it in a matter of minutes, and graph or otherwise present the results at the same time. Writing a parametric form and then fitting the parameters to past data in hope of predicting the future is also possible, although it is a bit dicey as soon as you have a handful of nonlinear parameters because then one is trying to optimize a possibly non-monotonic function on a multidimensional manifold, which is the literal definition of “complex systems” in the Santa Fe institute sense.
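As a rough illustration of how little code such a model needs, here is a Python sketch (swapped in for Matlab) that integrates a linear source-and-sink equation of the form dC/dt = E(t) - R(t) C and checks it against the closed-form solution for constant E and R. The symbols, the function names and every number below are assumptions made up for the example, not values from the comment or from any dataset.

```python
import math

def analytic(t, c0, source, rate):
    """Closed-form solution of dC/dt = source - rate * C with C(0) = c0."""
    c_inf = source / rate                     # steady-state concentration
    return c_inf + (c0 - c_inf) * math.exp(-rate * t)

def integrate(c0, source_fn, rate_fn, t_end, dt=0.01):
    """Fixed-step RK4 for dC/dt = source_fn(t) - rate_fn(t) * C."""
    def dcdt(t, c):
        return source_fn(t) - rate_fn(t) * c
    t, c = 0.0, c0
    for _ in range(round(t_end / dt)):
        k1 = dcdt(t, c)
        k2 = dcdt(t + dt / 2, c + dt * k1 / 2)
        k3 = dcdt(t + dt / 2, c + dt * k2 / 2)
        k4 = dcdt(t + dt, c + dt * k3)
        c += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += dt
    return c

# Made-up numbers, purely for illustration.
SOURCE, RATE_OCEAN, RATE_BIO, C0 = 2.0, 0.03, 0.02, 10.0
numeric = integrate(C0, lambda t: SOURCE, lambda t: RATE_OCEAN + RATE_BIO, t_end=50.0)
exact = analytic(50.0, C0, SOURCE, RATE_OCEAN + RATE_BIO)
print(numeric, exact)
```

Because `source_fn` and `rate_fn` take the time as an argument, a time-varying source or a temperature-dependent sink rate can be dropped in without changing the integrator.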

Unless you know what you are doing (and few people do) you are likely to start the optimization process out with some set of assumptions and optimize with e.g. a gradient search to find an optimum that “confirms” those assumptions, ignoring the fact that a far better fit is available but is nowhere particularly near your initial guess. In a rough landscape, there might always be local maxima near at hand to get trapped on, and even finding the right neighborhood of the optimal fit can be challenging. Imagine an ant searching for the highest point on the surface of the earth by going up from wherever you drop it. Nearly every point it gets dropped on will take it to the top of a grain of sand or a small hill. Only a teensy fraction of the Earth’s surface is mountains, a smaller fraction big mountains, and a handful of mountains the highest peaks in a range; only one range is the right range, one mountain the right mountain, and one small area of slopes the right slopes, the ones that go straight on up to the top without being trapped.
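The ant picture can be reproduced with a toy one-dimensional landscape. This is only a sketch: the curve, the step size and the function names are invented for illustration, and the greedy search stands in for a gradient search.

```python
def landscape(x):
    """Toy fitness curve with a lower peak near x = -1 and a higher one near x = +1."""
    return -(x * x - 1.0) ** 2 + 0.3 * x

def hill_climb(x, step=0.001, max_steps=100_000):
    """Greedy local search: keep stepping uphill until neither neighbour is higher."""
    for _ in range(max_steps):
        if landscape(x + step) > landscape(x):
            x += step
        elif landscape(x - step) > landscape(x):
            x -= step
        else:
            break
    return x

left_peak = hill_climb(-1.5)    # start on the left: trapped on the lower peak
right_peak = hill_climb(0.5)    # start on the right: reaches the higher peak
print(left_peak, landscape(left_peak))
print(right_peak, landscape(right_peak))
```

The search started at -1.5 stops on the lower peak and never sees the higher one, which is the trap described above.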

There may be some way to formally justify the Bern model. Offhand I can’t see it — integrating E(t) is fine, but integrating it while multiplying it by that bizarre sum of exponential terms? It doesn’t even look like it has the right asymptotic form, implicit saturation. In other words, although it uses time constants, the time constants aren’t the time constants of a presumed exponential sequestration process that removes CO_2 at a rate proportional to its concentration, they are more like “relaxation times”. The expression looks like an unbounded integral growth in concentration modulated by temporal relaxation times that have nothing to do with concentration but rather describe something else entirely.

Just what is an interesting question, but this is not a sensible sequestration model, which is necessarily at least proportional to concentration. At higher concentrations, plants take up more of it and grow faster. The ocean absorbs more of it (at constant temperature) because more molecules hit the surface per unit time. More of the CO_2 that makes it into the ocean is taken up by algae and bound up, eventually to rain down onto the sea floor, removed from the game for a few hundred million years.

I cannot believe that there isn’t somebody out there in climate-ville who has worked all this out in a believable model of some sort, something that is a perturbation of the first order linear model I wrote out above. If not, shame on them.

rgb

In relation to sources and sinks, can Willis, or anyone else explain this image of global CO2 concentrations ?

Why don’t warm tropical oceans give high CO2 ?

Why is there a band of high CO2 around the 35S ?

How is the distribution over Africa and S America explained ?

Why does Antarctic ice appear to be such a strong absorber in parts and why such strong striation?

http://www.seos-project.eu/modules/world-of-images/world-of-images-c01-p05.html

Seems this is a mental image issue – like putting the cart in front of the mule. It isn’t the carbon dioxide in the atmosphere that controls the timing. You can buy paints, some are fast-drying. Some dry slowly. Eventually, they all dry. CO2 sinks (hundreds, not 6 or 3) do their thing in their own way, so other things equal (constant CO2 levels), a very slow process might take 371.6 years to sequester a unit of gas, a very fast process might take 1.33 years to do the same. Thus, the numbers (wherever they came from) might have meaning – just not that described. So, one should really call it the Bourne Model insofar as the identity of the processes is a mystery and no one is sure just what is going on.

Well, whatever the Bern model does, it must be correct. After all, once you match the results of 9 GCM models (except one outlier), you have matched them all. CO2 sensitivity was assumed to be 2.5 K to 4.5 K in the models.

” After 80 years, the increase in global average surface temperature is 1.6 K and 2.4 K, respectively. This compares well with the results of nine A/OGCMs (excluding one outlier) which are in the range of 1.5 to 2.7 K”

KR says:

May 6, 2012 at 12:58 pm

Say what?

Look, KR, they used the box model to calculate the IRFs. The part you seem to be missing is that once they have used the box model to calculate the IRFs, they are then claiming that the IRFs represent actual physical processes.

Next, you say the IRFs are the “resulting partial absorptions of various climate compartments”. This is similar to what the citation says, that they “reflect the time scales of different sinks”. Both of you are claiming that the IRFs reflect real-world processes… so could you explain just how it is that the atmosphere is divided into “various climate compartments”, some of which decay quickly and some slowly?

You say ” the results of the Bern model were offered as an available computational tool for further work” … I understand that. What I don’t understand is the physical basis for what they are claiming, which is that e.g. 13% of the airborne CO2 hangs around with an e-folding time of 371.6 years, but is not touched during that time by any of the other sequestration mechanisms.

Perhaps you could explain to us how that works, that certain CO2 molecules are sequestered but some are immune to sequestration for hundreds of years.

I also note that there are no less than four different (and in fact very different) IRFs that have been used by the UN … hardly what one would expect if they actually are the “resulting partial absorptions of various climate compartments”. How are we supposed to pick one of them?

The SAR used five IRFs reflecting, according to you, five “climate compartments”, while the TAR used only three. What happened to two of the “climate compartments” between the SAR and the TAR … the IRFs just disappeared, what happened to the “climate compartments” that you claim they represent? Did they go out of business?

More to the point, why should we believe the model at all? The conclusion of your citation says:

The model calculates CFC-11 well, but it misses on CO2. In other words, the model doesn’t work very well, and even the authors don’t understand why their model doesn’t work … not encouraging.

w.

Fascinating stuff, cheers Willis. So instead of trying to capture CO2 underground why not just dump into a high speed sink?

KR says:

May 6, 2012 at 1:19 pm

Both you and the citation I gave have said that the IRFs represent actual physical processes. You said the IRFs represent the “resulting partial absorptions of various climate compartments”. My citation says that the IRFs “reflect the time scales of different sinks”.

Now you want to claim that this is just a way to approximate the model. Come back when you make up your mind and can explain why the citation is wrong.

w.

PS—See also Robert Brown’s comments above (rgbatduke) …

So … does anyone understand how 13% of the atmospheric CO2 is supposed to hang around for 371.6 years without being sequestered by the faster sinks?

Perhaps you need to look at this the other way around. We have often heard about the 800 year time lag between high temperatures and CO2 concentrations. Is it a coincidence that 371.6 is about half of 800? Is it possible that if the CO2 concentration were to suddenly drop, then various processes would act to raise the CO2? And that the part of the CO2 that is in the deep oceans may take 371.6 years to reach the atmosphere and add 13% to the overall increase in CO2 concentration?

RGB at duke says:

May 6, 2012 at 1:21 pm

…Please try to fix it for me if it looks bizarre.

Here is something bizarre that no one can do much about, let alone fix it.

http://www.vukcevic.talktalk.net/TMC.htm

Dr Burns says:

May 6, 2012 at 1:30 pm

Why does Antarctic ice appear to be such a strong absorber…

Antarctic is simply bizarre, see the link above

They may be discussing on-rate constants only. The concept might be equilibrium and saturation in different reservoirs. The on-rate for a given reservoir is the atmospheric concentration times the rate constant. The off rate is the reservoir concentration times the off-rate constant. If the on-rate equals the off-rate, then the reservoir is at equilibrium. No net uptake would occur.

rate(on) = k(on) * [conc(air)]

rate(off) = k(off) * [conc(res)]

No net uptake occurs when rate(on) = rate(off), that’s equilibrium.

In the real case, you also have a loss rate for CO2 via other routes, e.g. diatom skeletons in the ocean, and leaves or grasses on land. Which means the reservoirs don’t necessarily saturate, and they can continue to take up more CO2. In some cases, the on-rate may depend on the rate of dropout loss in that reservoir since near equilibrium limits the net uptake.

d(CO2 air)/dt = k(off) * [CO2(res)] – k(on) * [CO2(air)]

d(CO2 res)/dt = k(on) * [CO2(air)] – k(off) * [CO2(res)] – k(dropout) * [CO2(res)]

If the reservoir is in dynamic equilibrium, then to a reasonable approximation the reservoir concentration doesn’t change, so d(CO2 res)/dt = 0. Then

k(on) * [CO2(air)] – k(off) * [CO2(res)] = k(dropout) * [CO2(res)] ,

which means both sides of the equation are constant, since CO2(res) doesn’t change.
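The two rate equations above can be integrated numerically to see how the air and reservoir behave. This is only a sketch: the function name, the forward-Euler scheme and the rate constants are my own illustrative assumptions, not fitted to any data.

```python
def simulate(k_on, k_off, k_dropout, air0, res0, years, dt=0.01):
    """Forward-Euler integration of the two rate equations above."""
    air, res = air0, res0
    for _ in range(int(round(years / dt))):
        # d(CO2 air)/dt = k(off)*[CO2(res)] - k(on)*[CO2(air)]
        d_air = k_off * res - k_on * air
        # d(CO2 res)/dt = k(on)*[CO2(air)] - k(off)*[CO2(res)] - k(dropout)*[CO2(res)]
        d_res = k_on * air - k_off * res - k_dropout * res
        air += d_air * dt
        res += d_res * dt
    return air, res


# Illustrative rate constants (per year), chosen only for the demonstration.
air, res = simulate(k_on=0.1, k_off=0.05, k_dropout=0.0,
                    air0=100.0, res0=0.0, years=200)
print(air, res)  # with no dropout, on-rate and off-rate end up balanced

air2, res2 = simulate(k_on=0.1, k_off=0.05, k_dropout=0.02,
                      air0=100.0, res0=0.0, years=50)
print(air2 + res2)  # with dropout, the combined total keeps falling
```

With no dropout the system settles where k(on) * air equals k(off) * res and net uptake stops. With a dropout term it keeps draining unless something returns carbon to the air.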

Since the dropout material doesn’t readily cycle back to the air via the reservoir, it only makes sense when the dropout material can be returned to the atmosphere via another route, e.g. biological digestion, fire, volcanoes, or burning fossil fuel (coal). Returning the dropout material to the atmosphere creates the Carbon cycle we think about.

Partitions in the atmosphere itself make no sense, unless you are silly enough to count flying birds (%P) <- that's an emoticon.

From 1976 to 1997, atmospheric 14CO2 was measured. The levels had spiked due to atmospheric testing of nuclear weapons, which ended in the 1960s. These data show the 14CO2 half-life is about 11.4 years (see raw data here: http://cdiac.ornl.gov/trends/co2/cent-scha.html). In the first approximation, uptake mechanisms won't know the difference between carbon isotopes. It is quite safe to say half of the CO2 in the atmosphere is turned over in about 11.4 years. Five half-lives is about 57 years. That means only about 3% of the CO2 present in the atmosphere 57 years ago is still in the air.
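The half-life arithmetic is easy to check. A small sketch, taking the 11.4-year figure above as given (the function names are mine):

```python
import math


def fraction_remaining(years, half_life):
    """Fraction of the original molecules left after simple exponential turnover."""
    return 0.5 ** (years / half_life)


def e_folding_time(half_life):
    """Convert a half-life to the equivalent e-folding time (tau)."""
    return half_life / math.log(2)


print(fraction_remaining(57, 11.4))  # about 0.031, i.e. roughly 3%
print(e_folding_time(11.4))          # about 16.4 years
```

The second function is there only to put the half-life on the same footing as the "tau" values quoted in the post.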

It seems we have an idea of what the dropout rate for CO2 must be, and thus what the replenishment rate must be to keep the atmosphere in roughly steady state. In this simple model, a sudden change in atmospheric CO2 concentration could shift the equilibrium concentration in the reservoirs, and then establish a new constant uptake rate somewhat higher than the old one. If you think about it, perhaps that does make some sense in some cases, such as faster plant growth, or more alkalinity in the ocean (sorry catastrophists, biological action converts carbonic acid to bicarbonate, e.g. by nitrogen fixation).

Yes, models can be misused. It's up to you to decide when they are appropriate.

http://www.seos-project.eu/modules/world-of-images/world-of-images-c01-p05.html

That’s July, two months after annual peak.

Why don’t warm tropical oceans give high CO2 ?

Maybe they do, but you can’t evaluate “vertical” fluxes only on the basis of concentrations in a month (average). The horizontal transport of CO2 in the atmosphere is spatially and temporally very dynamic (seasonal). It could also be rain (CO2 scrubbing) in the tropics…

Why is there a band of high CO2 around the 35S ?

High summer in NH?

How is the distribution over Africa and S America explained ?

Why does Antarctic ice appear to be such a strong absorber in parts and why such strong striation?

Others should speculate. Seasons, moisture, snow, sst, surface altitude, energy budget, mass budget…

Since you like differential equations…

Start with three variables A, B, and C. The volume of flow from A to B is k_AB (A-B), and the volume of flow from A to C is k_AC (A-C).

So

dA/dt = k_AB (B-A) + k_AC (C-A) + E

dB/dt = k_AB (A-B)

dC/dt = k_AC (A-C)

where E is the rate of emission.

Treat L = (A, B, C) as a vector, ignore E for the moment to get dL/dt = ML where M is a matrix of constants. Diagonalise the matrix to get two independent differential equations (the rows of M are not linearly independent), each giving a separate exponential decay with a different time constant. Transforming back to the original variables gives a sum of exponentials.

(I think the two time constants are -k_AB-k_AC +/- Sqrt(k_AB^2-k_AB k_AC +k_AC^2) but I did it quickly.)
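The closed form above can be checked without a linear algebra library. The sketch below (function names and k values are my own, purely illustrative) plugs the two expressions into the characteristic polynomial det(M - lambda*I) of the A, B, C system. Strictly speaking the expressions are the eigenvalues, i.e. negative decay rates, so the time constants are their negative reciprocals.

```python
import math


def decay_rates(k_ab, k_ac):
    """Non-zero eigenvalues of M from the closed form quoted above."""
    root = math.sqrt(k_ab**2 - k_ab * k_ac + k_ac**2)
    return (-(k_ab + k_ac) + root, -(k_ab + k_ac) - root)


def char_poly(lam, k_ab, k_ac):
    """det(M - lam*I) for the 3x3 matrix M of the A, B, C system (E ignored)."""
    m = [[-(k_ab + k_ac) - lam, k_ab, k_ac],
         [k_ab, -k_ab - lam, 0.0],
         [k_ac, 0.0, -k_ac - lam]]
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


k_ab, k_ac = 0.5, 0.02  # illustrative exchange constants, per year
for lam in decay_rates(k_ab, k_ac):
    # eigenvalue, corresponding time constant, residual of the determinant
    print(lam, -1.0 / lam, char_poly(lam, k_ab, k_ac))
```

The third eigenvalue is zero, reflecting the fact that with E ignored the total A + B + C is conserved; that is the "rows are not linearly independent" remark.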

Hi _Jim: I did not state that there is no absorption of IR by GHGs. What I add though is that the present IR physics which claims 100% direct thermalisation is wrong and thermalisation is probably indirect at heterogeneous interfaces.

The reason for this is kinetic. Climate Science imagines that the extra quantum of vibrational resonance energy in an excited GHG molecule will decay by dribs and drabs to O2 and N2 over ~1000 collisions so it isn’t re-emitted. This cannot happen: exchange is to another GHG molecule and the principle of Indistinguishability takes over. [See ‘Gibbs’ Paradox’]

This is yet more scientific delusion in that all it needs is the near simultaneous emission of the same energy photon from an already thermally excited molecule thus restoring LTE. This happens throughout the atmosphere at the speed of light so the GHGs are an energy transfer medium. The conversion to heat probably takes place mainly at clouds.

Frankly I am annoyed because this is the second time through ignorance you have called me out this way. It’s because I have 40 years’ post PhD experience in applied physics I can show how climate science has been run by amateurs who have completely cocked the subject up. Nothing is right. The modellers are fine though because Manabe and Wetherald were OK, but once Hansen, Trenberth and Houghton took control, the big mistakes happened. It looks deliberate.

Following on from an earlier poster’s comments, the science of decompression diving uses a similar approach to that of the Bern model, to understand the movement of nitrogen into and out of a diver’s body tissues. The approach was pioneered by JS Haldane in 1907. He came up with the idea of using tissue compartments which exchanged nitrogen at different rates. Tissues like blood and nerves were fast, and were able to quickly equilibrate with any changes in the partial pressure of nitrogen. Tissues like muscle and fat were of intermediate speed, while bone was extremely slow. What Haldane did was to come up with a crude multi-compartment (tissue) model, and then carry out very extensive tests to tweak the model so that divers (goats in his initial experiments) did not get decompression sickness (gas bubbles forming in tissue). Over a hundred years on, Haldane-type tissue models are used in most divers’ decompression computers, and they work extremely well.
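To make the compartment idea concrete, here is a minimal sketch of a Haldane-type calculation, in which each tissue approaches the ambient nitrogen pressure exponentially with its own half-time. The half-times and pressures are illustrative assumptions only; this is not a dive table and must not be used as one.

```python
import math


def tissue_pressure(p_start, p_ambient, half_time, minutes):
    """Exponential approach of one compartment to the ambient partial pressure."""
    k = math.log(2) / half_time
    return p_ambient + (p_start - p_ambient) * math.exp(-k * minutes)


# Illustrative half-times in minutes, fast to slow (assumed values).
HALF_TIMES = [5, 10, 20, 40, 75]

# Nitrogen partial pressure in bar: at the surface, then after a step to 4 bar total.
p_surface = 0.79
p_depth = 0.79 * 4.0

for half_time in HALF_TIMES:
    print(half_time, round(tissue_pressure(p_surface, p_depth, half_time, 20), 3))
```

After 20 minutes the fast compartments are nearly saturated while the slow ones have barely moved. Here the compartments are physically separate tissues, each seeing the same ambient pressure.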

Some important points about diving science: 1. Haldane, and those who followed, constantly tested and refined their models against experimental data – a process which continues today – over a hundred years on. 2. The models are a crude approximation of a complex system (the human body), but at least the physics is reasonably well understood e.g. the local temperature and pressure gradients and the kinetics of the physical processes – solubility, perfusion and diffusion. 3. In decompression models, only one of the tissue compartments usually controls the behaviour of the model (rate of ascent/decompression stop timing). Therefore, small errors or uncertainties in each compartment are not a major problem.

The application of this approach to climate science is, in my view, highly problematic because: 1. There simply has not been enough time/effort to refine these models against experimental data – a process which can take many decades. 2. The models are a profoundly crude approximation of a bewilderingly complex system (global carbon cycle), about which most of physics/biology/geology/chemistry/vulcanology etc, etc are not well understood. 3. In climate models all of the compartments contribute to the behaviour of the model (CO2 sequestration rate) and so errors and uncertainties in each compartment are cumulative.

Bill Illis says:

May 6, 2012 at 1:16 pm

So the natural sinks and sources are in equilibrium (give or take) when the CO2 level is 275 ppm or the Carbon level in the atmosphere is 569 billion tonnes

====================================

Bill, this is biology…..what you’re calling equilibrium is exactly what happens when a nutrient becomes limiting……..

Bill Illis says:

May 6, 2012 at 1:16 pm

Very interesting charts as always, Bill. Thanks.

[note: the 3rd one has the wrong starting year].

Willis Eschenbach – “You say “the results of the Bern model were offered as an available computational tool for further work” … I understand that. What I don’t understand is the physical basis for what they are claiming, which is that e.g. 13% of the airborne CO2 hangs around with an e-folding time of 371.6 years, but is not touched during that time by any of the other sequestration mechanisms.”

Then, Willis, I suggest you read the original papers on the Bern model, such as Siegenthaler and Joos 1992.

The percentages you listed are the results of running the Bern model, and as such are a convenient shorthand. The actual physical processes include mixed layer oceanic absorption, eddy currents and thermohaline circulation, etc. The very link you provided states that:

(emphasis added)

Your claim that these percentages and time constants are the direct processes is a strawman argument – Joos certainly did not make that claim, he stated that these were a useful approximation.

I have to say I find your claims otherwise, and in fact your original post, to be quite disingenuous.

I have been wondering whether there is any reason to expect the rate of carbon exchange between the atmosphere and the ocean (and other sinks) to be different for different isotopes of C (of CO2, for that matter). In other words, might it be possible to infer something about the uptake rate of the sinks from the data (accessible at the CDIAC website) for the atmospheric dC14 content and the spike caused in it by open-air nuclear bomb testing in the last century? I believe I have somewhere seen a statement claiming that the “atmospheric half-life” calculated from this “experiment” is 5.5 – 6 for the C14 isotope.

It is called a box model. Box models were discarded in the late 70’s, early 80’s, because they cannot describe complex systems.

There is an input into the system, the release of carbon from geologic sources (volcanism) and fossil fuels; the influx of carbon into the biosphere.

There is an output from the system, mineralization of carbon into muds, which will become rock; the efflux from the system.

At steady state, influx = efflux. In the previous 800,000 years of pre-industrial times CO2 was between 180 and 330 ppm. So either we were VERY lucky that influx = efflux due to chance, or the rate of efflux is coupled to the rate of influx. Thus, when CO2 is high, marine animals do well, the ocean biota grows, more particulate organic matter sinks to the bottom of the ocean, more carbon is trapped in mud, and there is more mineralization.

Basic control mechanisms in fact.