NEW UPDATES POSTED BELOW

As regular readers know, I have more photographs and charts of weather stations on my computer than I have pictures of my family. A sad commentary to be sure, but necessary for what I do here.

Steve Goddard points out this NASA GISS graph of the Annual Mean Temperature data at Godthab Nuuk Lufthavn (Nuuk Airport) in Greenland. It has an odd discontinuity:

Source data is here

The interesting thing about that end discontinuity is that it is an artifact of incomplete data. In the source data linked above, GISS provides the Annual Mean Temperature (metANN) for 2010 before the year 2010 is even complete:

Yet, GISS plots it here and displays it to the public anyway. You can’t plot an annual value before the year is finished. This is flat wrong.
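As a minimal sketch of the completeness rule argued for here, an annual mean can simply be withheld until all twelve monthly values exist. This is not how GISS computes metANN; it only illustrates the stricter rule. The `annual_mean` name is hypothetical, and the 999.9 missing-month sentinel is an assumption about the station file format.

```python
from typing import Optional, Sequence

MISSING = 999.9  # assumed missing-value sentinel; check the actual file format

def annual_mean(monthly: Sequence[float], missing: float = MISSING) -> Optional[float]:
    """Return the mean of 12 monthly values, or None if any month is missing.

    A year still in progress (or one with gaps) yields None instead of a
    partial-year value presented as an annual mean.
    """
    if len(monthly) != 12:
        return None
    if any(abs(v - missing) < 1e-6 for v in monthly):
        return None
    return sum(monthly) / 12.0

# A year with October-December not yet reported produces no annual value.
print(annual_mean([-7.0] * 9 + [MISSING] * 3))
```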

But even more interesting is what you get when you plot and compare the GISS “raw” and “homogenized” data sets for Nuuk. My plot is below:

Looking at the raw data from 1900 to 2008, where there are no missing years of data, we see no trend whatsoever. When we plot the homogenized data, we see a positive artificial trend of 0.74°C from 1900 to 2007, about 0.7°C per century.
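The two figures are consistent: 0.74°C spread over the 107 years from 1900 to 2007 works out to about 0.69°C per century. The sketch below does that conversion and also shows an ordinary least-squares slope, the usual way a “trend” is fitted to annual values. The post does not say which method produced the 0.74°C figure, so treat `ols_slope` as an illustrative assumption, not the method used for the plot.

```python
from typing import Sequence

def ols_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs (units of y per unit of x)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

def per_century(total_change: float, start_year: int, end_year: int) -> float:
    """Convert a total change over a span of years into a rate per 100 years."""
    return total_change / (end_year - start_year) * 100.0

print(round(per_century(0.74, 1900, 2007), 2))  # 0.69
```

With annual means in hand, `ols_slope(years, temps) * 100` gives the fitted trend in °C per century.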

When you look at the GISS map of trends with 250 km smoothing, using that homogenized data and the GISS standard 1951-1980 baseline, you can see that Nuuk is assigned an orange block, a trend of 0.5 to 1°C.

Source for map here

So it seems clear that, at least for Nuuk, Greenland, the GISS-assigned temperature trend is artificial. Given that Nuuk is at an airport and has gone through steady growth, the adjustment applied by GISS is, in my opinion, inverted.

The Wikipedia entry for Nuuk states:

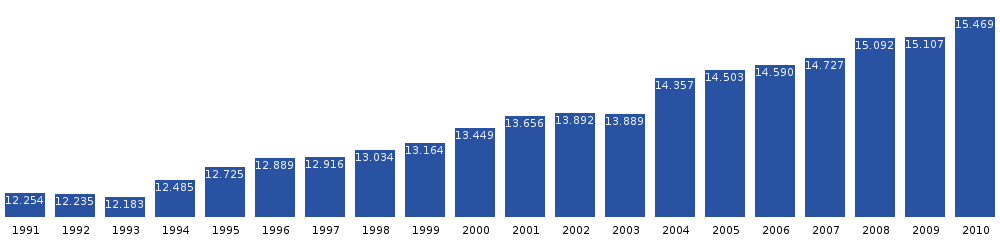

With 15,469 inhabitants as of 2010, Nuuk is the fastest-growing town in Greenland, with migrants from the smaller towns and settlements reinforcing the trend. Together with Tasiilaq, it is the only town in the Sermersooq municipality exhibiting stable growth patterns over the last two decades. The population increased by over a quarter relative to the 1990 levels, and by nearly 16 percent relative to the 2000 levels.

Nuuk population growth dynamics in the last two decades. Source: Statistics Greenland

Instead of adjusting the past downwards, as we see GISS do with this station, the population increase would suggest that if adjustments must be applied, they logically should cool the present. After all, with the addition of modern aviation and a growing population, energy use in the region increases and more of the natural surface cover is altered.

The Nuuk airport is small but modern; here’s a view on approach:

Closer views reveal what very well could be the Stevenson Screen of the GHCN weather station:

Here’s another view:

The Stevenson Screen appears to be elevated so that it does not get covered with snow, which of course is a big problem in places like this. I’m hoping readers can help crowdsource additional photos and/or verification of the weather station placement.

[UPDATE: Crowdsourcing worked, and the weather station placement is confirmed. This is clearly a Stevenson Screen in the photo below:

Thanks to WUWT reader “DD More” for finding this photo that definitively places the weather station. ]

Back to the data. One of the curiosities I noted in the GISS record here was that, in recent times, a fair number of months of data are missing.

I picked one month, January 2008, and looked it up at Weather Underground to see whether it had data. I was surprised to find just a short patch of data graphed around January 20th, 2008:

Even more surprising, when I looked at the data for that period, all the other data for the month (wind speed, wind direction, and barometric pressure) were intact and plotted for the entire month:

I checked the next missing month on WU, February 2008, and noticed a similar curiosity: a speck of temperature and dew point data for one day:

But as in January 2008, the values from the other sensors were intact and plotted for the whole month:

This is odd, especially for an airport where aviation safety is of prime importance. I just couldn’t imagine they’d leave a faulty sensor in place for two months.

When I switched the Weather Underground page to display days, rather than the month summary, I was surprised to find that there was apparently no faulty temperature sensor at all, and that the temperature data and METAR reports were fully intact. Here’s January 2nd, 2008 from Weather Underground, which showed up as having missing temperature in the monthly WU report for January, but as you can see there’s daily data:

But like we saw on the monthly presentation, the temperature data was not plotted for that day, but the other sensors were:

I did spot checks of other dates in January and February of 2008 and found the same thing: the daily METAR reports were there, but the data was not plotted on graphs in Weather Underground. The Nuuk data and plots for the next few months at Weather Underground have similar problems, as you can see here:

But they gradually get better. Strange. It acts like a sensor malfunction, but the METAR data is there for those months and seems reasonably correct.

Since WU makes these web page reports “on the fly” from stored METAR reports in a database, to me this implies some sort of data formatting problem that prevents the graph from plotting the temperatures. It also prevents the WU monthly summary from displaying the correct monthly high, low, and average temperatures. Clearly what they have for January 2008 is wrong: I found many temperatures lower than the monthly minimum of 19°F they report for January 2008, for example 8°F on January 17th, 2008.

So what’s going on here?

- There’s no sensor failure.

- We have intact hourly METAR reports (the World Meteorological Organization standard for reporting hourly weather data) for January and February 2008.

- We have erroneous or incomplete presentations of monthly data on both Weather Underground and NASA GISS for the two months I examined, January and February 2008.

What could be the cause?

WUWT readers may recall these stories where I examined the impacts of failures in the METAR reporting system:

GISS & METAR – dial “M” for missing minus signs: it’s worse than we thought

Dial “M” for mangled – Wikipedia and Environment Canada caught with temperature data errors.

These had to do with missing “M”s (for minus temperatures) in the coded reports, causing cold temperatures like -25°C to become warm temperatures of +25°C, which can really screw up a monthly average temperature with one single bad report.
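How far one dropped “M” moves a monthly figure depends on how many values are being averaged. The sketch below takes the simplest possible case, N identical readings of -25°C with one decoded as +25°C, so the shift is 50/N degrees. Real pipelines (hourly reports, daily max/min, CLIMAT means) are more complicated; the `sign_flip_shift` helper and the numbers are illustrative only.

```python
from statistics import mean

def sign_flip_shift(n_values: int, true_temp: float = -25.0) -> float:
    """Change in a simple mean when one of n identical readings loses its minus sign.

    Assumes all n readings equal true_temp, so the answer is 2*|true_temp|/n.
    """
    good = [true_temp] * n_values
    bad = good.copy()
    bad[0] = abs(true_temp)  # the 'M' was dropped: -25 is decoded as +25
    return mean(bad) - mean(good)

# One of 31 daily values vs. one of roughly 744 hourly reports (31 x 24).
print(round(sign_flip_shift(31), 2))   # 1.61
print(round(sign_flip_shift(744), 3))  # 0.067
```

If the corrupted value ends up as one of a month’s 31 daily values, the monthly mean moves by about 1.6°C; buried among every hourly report it moves far less.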

Following my hunch that I’m seeing another variation of the same METAR coding problem, I decided to have a look at that patch of graphed data that appeared on WU on January 19th-20th 2008 to see what was different about it compared to the rest of the month.

I looked at the METAR data for formatting issues, and ran samples of the data from the times it plotted correctly on WU graphs, versus the times it did not. I ran the METAR reports through two different online METAR decoders:

http://www.wx-now.com/Weather/MetarDecode.aspx

http://heras-gilsanz.com/manuel/METAR-Decoder.html

Nothing stood out from the decoder tests I did. The only thing I can see is that some of the METAR reports seem to have extra characters, like /// or 9999. Of these two samples from January 19th, the first did not plot data on WU, but the second, an hour later, did:

METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989

METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988
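For readers who want to see what decoding the temperature group involves, here is a minimal sketch that pulls the temperature/dew point group out of the two sample reports and applies the “M” means minus convention. The regular expression covers only the common forms (including a missing or slashed-out dew point); it is an illustrative assumption, not the full WMO specification and not the code Weather Underground uses.

```python
import re
from typing import Optional, Tuple

# Temperature/dew point group: two digits each, 'M' prefix = minus.
# The dew point may be absent ("M01/") or slashed out ("M01///").
TEMP_GROUP = re.compile(r"^(M?\d{2})/(M?\d{2}|//)?$")

def decode_signed(field: str) -> int:
    """'M04' -> -4, '12' -> 12. 'M00' decodes to 0 (a value just below zero)."""
    return -int(field[1:]) if field.startswith("M") else int(field)

def temp_dewpoint(report: str) -> Optional[Tuple[int, Optional[int]]]:
    """Return (temperature, dew point) in whole degrees C, or None if no group is found."""
    for token in report.split():
        m = TEMP_GROUP.match(token)
        if m:
            dew = m.group(2)
            dew_val = None if dew in (None, "//") else decode_signed(dew)
            return decode_signed(m.group(1)), dew_val
    return None

r1 = "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989"
r2 = "METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988"
print(temp_dewpoint(r1))  # (-1, -4)
print(temp_dewpoint(r2))  # (0, -6)
```

Note that “M00” collapses to 0 as an integer, losing the “just below freezing” information; a careful decoder would keep the sign separately.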

I ran both of these (and many others from other days in Jan/Feb) through decoders, and they decoded correctly. However, that still leaves the question of why Weather Underground’s METAR decoder for graph plotting isn’t decoding them correctly for most of Jan/Feb 2008, and leaves the broader question of why GISS data is missing for these months too.

Now here’s the really interesting part.

We have missing data in Weather Underground and in GISS for January and February of 2008, but in the case of GISS, they use the CLIMAT reports (yes, no “e”) to gather GHCN data for inclusion into GISS, and final collation into their data set for adjustment and public dissemination.

The last time I raised this issue with GISS, I was told that the METAR reports didn’t affect GISS at all because they never got numerically connected to the CLIMAT reports. I beg to differ this time.

Here’s where we can look up the CLIMAT reports, at OGIMET:

http://www.ogimet.com/gclimat.phtml.en

Here’s what the one for January 2008 looks like:

Note the Nuuk airport is not listed in January 2008

Here’s the decoded report for the same month, also missing Nuuk airport:

Here’s February 2008, also missing Nuuk, but now with another airport added, Mittarfik:

And finally March 2008, where Nuuk appears, highlighted in yellow:

The -8.1°C value in the CLIMAT report agrees with the Weather Underground report. All the METAR reports are there for March, but the WU plotting program still can’t resolve the METAR report data except on certain days.

I can’t say for certain why two months of CLIMAT data are missing from OGIMET, why the same two months of data are missing from GISS, or why Weather Underground can only graph a few hours of data in each of those months, but I have a pretty good idea of what might be going on. I think the WMO-created METAR reporting format itself is at fault.

What is METAR you ask? Well in my opinion, a government invented mess.

When I was a private pilot (which I had to give up due to worsening hearing loss – tower controllers talk like auctioneers on the radio and one day I got the active runway backwards and found myself head-on to traffic. I decided then I was a danger to myself and others.) I learned to read SA reports from airports all over the country. SA reports were manually coded teletype reports sent hourly worldwide so that pilots could know what the weather was in airport destinations. They were also used by the NWS to plot synoptic weather maps. Some readers may remember Alden Weatherfax maps hung up at FAA Flight service stations which were filled with hundreds of plotted airport station SA (surface aviation) reports.

The SA reports were easy to visually decode right off the teletype printout:

From page 115 of the book “Weather” By Paul E. Lehr, R. Will Burnett, Herbert S. Zim, Harry McNaught – click for source image

Note that in the example above, temperature and dewpoint are clearly delineated by slashes. Also, when a minus temperature occurs, such as -10 degrees Fahrenheit, it was reported as “-10”, not with an “M”.

The SA method originated with airmen and teletype machines in the 1920s and lasted well into the 1990s. But like anything these days, government stepped in and decided it could do it better. You can thank the United Nations, the French, and the World Meteorological Organization (WMO) for this one. SA reports were finally replaced by METAR in 1996, well into the computer age, even though METAR was designed in 1968.

From Wikipedia’s section on METAR

METAR reports typically come from airports or permanent weather observation stations. Reports are typically generated once an hour; if conditions change significantly, however, they can be updated in special reports called SPECIs. Some reports are encoded by automated airport weather stations located at airports, military bases, and other sites. Some locations still use augmented observations, which are recorded by digital sensors, encoded via software, and then reviewed by certified weather observers or forecasters prior to being transmitted. Observations may also be taken by trained observers or forecasters who manually observe and encode their observations prior to transmission.

History

The METAR format was introduced 1 January 1968 internationally and has been modified a number of times since. North American countries continued to use a Surface Aviation Observation (SAO) for current weather conditions until 1 June 1996, when this report was replaced with an approved variant of the METAR agreed upon in a 1989 Geneva agreement. The World Meteorological Organization’s (WMO) publication No. 782 “Aerodrome Reports and Forecasts” contains the base METAR code as adopted by the WMO member countries.[1]

Naming

The name METAR is commonly believed to have its origins in the French phrase message d’observation météorologique pour l’aviation régulière (“Aviation routine weather observation message” or “report”) and would therefore be a contraction of MÉTéorologique Aviation Régulière. The United States Federal Aviation Administration (FAA) lays down the definition in its publication the Aeronautical Information Manual as aviation routine weather report[2] while the international authority for the code form, the WMO, holds the definition to be aerodrome routine meteorological report. The National Oceanic and Atmospheric Administration (part of the United States Department of Commerce) and the United Kingdom’s Met Office both employ the definition used by the FAA. METAR is also known as Meteorological Terminal Aviation Routine Weather Report or Meteorological Aviation Report.

I’ve always thought METAR coding was a step backwards.

Here is what I think is happening

METAR was designed at a time when teletype machines ran newsrooms and airport control towers worldwide. At that time, the NOAA weatherwire used 5-bit Baudot code (rather than 7-bit ASCII), transmitting at 56.8 bits per second on a current loop circuit. METAR was designed with one thing in mind: economy of data transmission.

The variable format worked well at reducing the number of characters sent over a teletype, but what was a strength for that technology is a weakness for today’s.

The major weakness of METAR these days is its variable length and the variable positioning of its fields. If data is missing, the sending operator can simply leave out the data field altogether. Humans trained in METAR decoding can interpret this; computers, however, are only as good as the programming they are endowed with.
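The variable positioning is easy to demonstrate with the two BGGH reports from January 19th quoted earlier in this post. Optional groups (two present-weather groups in the first report, a variable wind direction group “050V190” in the second) shift the temperature group from the 11th token to the 10th. The sketch contrasts a hypothetical positional decoder with one that recognizes the group by its shape; neither is claimed to be Weather Underground’s actual code.

```python
import re

REPORTS = [
    "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989",
    "METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988",
]

TEMP_GROUP = re.compile(r"^M?\d{2}/(M?\d{2}|//)?$")

def temp_by_position(report: str, index: int = 10) -> str:
    """Fragile (hypothetical): assumes the temperature group is always token N."""
    tokens = report.split()
    return tokens[index] if index < len(tokens) else ""

def temp_by_shape(report: str) -> str:
    """Sturdier: returns the first token that looks like a temperature group."""
    for token in report.split():
        if TEMP_GROUP.match(token):
            return token
    return ""

for r in REPORTS:
    print(temp_by_position(r), temp_by_shape(r))
# M01/M04 M01/M04
# Q0988 M00/M06
```

The positional version silently hands back the pressure group for the second report; nothing crashes, the data is simply wrong, which is exactly the kind of failure that goes unnoticed for years.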

Characters that change position or type, fields that shift or that may be there one transmission and not the next, combined with human errors in coding can make for a pretty complex decoding problem. The problem can be so complex, based on permutations of the coding, that it takes some pretty intensive coding to handle all the possibilities.

Of course, in 1968, missed characters or fields on a teletype paper report did little more than aggravate somebody trying to read it. In today’s technological world, METAR reports never make it to paper; they get transmitted from computer to computer. Decoding on one computer system can easily differ from another, mainly because METAR decoding has been a task for individual programmers rather than a well-tested, off-the-shelf, universally accepted software package. I’ve seen all sorts of METAR decoding programs, from ancient COBOL to FORTRAN, LISP, BASIC, PASCAL, and C. Each program was done differently, each was written to a spec designed in 1968 for teletype transmission, and each may run on a different OS, in a different language, written by a different programmer. Making all that work with the nuances of human-coded reports, which may contain all sorts of variances and errors, with fields that shift in presence and placement, is a tall order.

That being said, NOAA made a free METAR decoder package available many years ago at this URL:

http://www.nws.noaa.gov/oso/metardcd.shtml

That has disappeared now, but a private company is distributing the same package here:

This caveat on the limulus web page should be a red flag to any programmer:

Known Issues

- Horrible function naming.

- Will probably break your program.

- Horrible variable naming.

I’ve never used either package, but I can say this: errors have a way of staying around for years. If there was an error in this code that originated at NWS, it may or may not be fixed in the various custom applications that are based on it.

Clearly Weather Underground has issues with some portion of its code that is supposed to plot METAR data. Coincidentally, many of the same months where their plotting routine fails are also months with missing CLIMAT reports.

And on this page at the National Center for Atmospheric Research (NCAR/UCAR), we have reports of the METAR package failing in odd ways, discarding reports:

On Tue, 2 Mar 2004, David Larson wrote:

> Robb,
>
> I've been chasing down a problem that seems to cause perfectly good reports to be discarded by the perl metar decoder. There is a comment in the 2.4.4 decoder that reads "reports appended together wrongly", the code in this area takes the first line as the report to process, and discards the next line.
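The message describes a decoder that treats the first line as the report and throws the next line away. A more forgiving approach is to split incoming text wherever a new report begins (the METAR or SPECI keyword) instead of at line breaks. The sketch below is an assumed approach, not the Perl decoder’s logic; it needs Python 3.7 or later, where `re.split` accepts zero-width patterns. The input is the pair of BGGH reports from January 19th, deliberately run together.

```python
import re
from typing import List

# Zero-width split point just before each METAR or SPECI keyword.
REPORT_START = re.compile(r"\b(?=(?:METAR|SPECI)\b)")

def split_reports(text: str) -> List[str]:
    """Split bulletin text that may contain several reports run together."""
    # Collapse whitespace first so a report wrapped across lines is rejoined
    # instead of being cut in half.
    flat = " ".join(text.split())
    return [part.strip() for part in REPORT_START.split(flat) if part.strip()]

bulletin = ("METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989 "
            "METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988")
for report in split_reports(bulletin):
    print(report)
```

Both reports survive, rather than the second one being discarded.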

Here’s another:

On Fri, 12 Sep 2003, Unidata Support wrote:

> ------- Forwarded Message
>
> To: support-decoders@xxxxxxxxxxxxxxxx
> From: David Larson <davidl@xxxxxxxxxxxxxxxxxx>
> Subject: perl metar decoder -- parsing visibility / altimeter wrong
> Organization: UCAR/Unidata
> Keywords: 200309122047.h8CKldLd027998
>
> The decoder seems to mistake the altimeter value for visibility in many non-US METARs.
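We don’t know exactly how the Perl decoder went wrong, but it is easy to see how this confusion can arise. US reports give visibility in statute miles (“10SM”) and the altimeter in inches of mercury (“A2992”), while most other countries give visibility as four digits in metres (“9999” means 10 km or more) and pressure in hectopascals (“Q0989”). A decoder written around one convention can grab the wrong four-digit group in the other. The sketch below recognizes each group by its full shape; the patterns are simplified assumptions, and the KSFO report is a made-up example.

```python
import re
from typing import Optional, Tuple

# Each group is recognized by its exact shape, never by "any 4 digits".
VIS_METRES = re.compile(r"^\d{4}$")          # 2000, 9999 (metres; 9999 = 10 km or more)
VIS_SM = re.compile(r"^\d+(?:/\d+)?SM$")     # 10SM, 1/2SM (simplified US form)
QNH_HPA = re.compile(r"^Q(\d{4})$")          # Q0989 -> 989 hPa
ALT_INHG = re.compile(r"^A(\d{4})$")         # A2992 -> 29.92 inHg

INHG_TO_HPA = 33.8639

def visibility_and_pressure(report: str) -> Tuple[Optional[str], Optional[float]]:
    """Return (first visibility group, pressure in hPa) from a METAR string."""
    vis: Optional[str] = None
    hpa: Optional[float] = None
    for token in report.split():
        q = QNH_HPA.match(token)
        a = ALT_INHG.match(token)
        if q and hpa is None:
            hpa = float(q.group(1))
        elif a and hpa is None:
            hpa = int(a.group(1)) / 100.0 * INHG_TO_HPA
        elif vis is None and (VIS_METRES.match(token) or VIS_SM.match(token)):
            vis = token  # first match only; later trend groups (e.g. TEMPO 3000) are ignored
    return vis, hpa

print(visibility_and_pressure("METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989"))
print(visibility_and_pressure("METAR KSFO 011756Z 28012KT 10SM FEW008 18/12 A2992"))
```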

So my point is this: METAR is fragile. Even at the world’s premier climate research center, the decoders have problems that suggest they don’t handle worldwide reports well, yet METAR appears to be the backbone for all airport-based temperature reports in the world, which get collated into GHCN for climate purposes. I think the people who handle these systems need to reevaluate and test their METAR code.

Even better, let’s dump METAR in favor of a more modern format that doesn’t have variable fields and variable field placements, which require the code to decode not only the numbers and letters but also the configuration of the report itself.

In today’s high speed data age, saving a few characters from the holdover of teletype days is a wasted effort.

The broader point is this: our reporting system for climate data is a mess of entropy on so many levels.

UPDATE:

The missing data can be found at the homepage of DMI, the Danish Meteorological Institute http://www.dmi.dk/dmi/index/gronland/vejrarkiv-gl.htm “vælg by” means “choose city”; choose Nuuk to get the monthly numbers back to January 2000.

Thanks for that. The January 2008 data is available, and plotted below at DMI’s web page.

So now the question is, if the data is available, and a prestigious organization like DMI can decode it, plot it, and create a monthly average for it, why can’t NCDC’s Peterson (who is the curator of GHCN) decode it and present it? Why can’t Gavin at GISS do some QC to find and fix missing data?

Is the answer simply “they don’t care enough?” It sure seems so.

UPDATE: 10/4/10 Dr. Jeff Masters of Weather Underground weighs in. He’s fixed the problem on his end:

Hello Anthony, thanks for bringing this up; this was indeed an error in our METAR parsing, which was based on old NOAA code:

/* Organization: W/OSO242 – GRAPHICS AND DISPLAY SECTION */

/* Date: 14 Sep 1994 */

/* Language: C/370 */

What was happening was our graphing software was using an older version of this code, which apparently had a bug in its handling of temperatures below freezing. The graphs for all of our METAR stations were thus refusing to plot any temperatures below freezing. We were using a newer version of the code, which does not have the bug, to generate our hourly obs tables, which had the correct below freezing temperatures. Other organizations using the old buggy METAR decoding software may also be having problems correctly handling below freezing temperatures at METAR stations. This would not be the case for stations sending data using the synoptic code; our data plots for Nuuk using the WMO ID 04250 data, instead of the BGGH METAR data, were fine. In any case, we’ve fixed the graphing bug, thanks for the help.

REPLY: Hello Dr. Masters.

It is my pleasure to help, and thank you for responding here. One wonders if similar problems exist in parsing METAR for generation of CLIMAT reports. – Anthony
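Dr. Masters doesn’t describe the bug itself, so the sketch below is purely hypothetical: one simple way a decoder could “refuse to plot any temperatures below freezing” is to accept only plain digits and reject anything carrying the “M” prefix. Rejected values never reach the graph, so a cold month shows only its above-freezing hours and its monthly minimum comes out far too warm. The hourly values are made up, not Nuuk data.

```python
from typing import Callable, List, Optional

def parse_temp_buggy(field: str) -> Optional[int]:
    """HYPOTHETICAL bug: accepts only plain digits, so 'M05' is rejected as invalid."""
    return int(field) if field.isdigit() else None

def parse_temp_fixed(field: str) -> Optional[int]:
    """Handles the METAR 'M' (minus) prefix."""
    if field.startswith("M") and field[1:].isdigit():
        return -int(field[1:])
    return int(field) if field.isdigit() else None

def plottable(fields: List[str], parse: Callable[[str], Optional[int]]) -> List[int]:
    """Values that would reach a graph: anything the parser rejects is silently dropped."""
    return [v for v in (parse(f) for f in fields) if v is not None]

# Made-up hourly temperature fields for one cold day (not real Nuuk data).
hours = ["02", "01", "00", "M00", "M03", "M08", "M13", "M05"]
print(plottable(hours, parse_temp_buggy))       # [2, 1, 0]
print(min(plottable(hours, parse_temp_fixed)))  # -13
```

The dangerous part is the silence: nothing errors out, and a summary built from the surviving values looks perfectly plausible.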

If your goal is to manipulate the data in untraceable ways to suit your political agenda, why would you want to change the current reporting system?

REPLY: I don’t think there’s active manipulation going on here, only simple incompetence, magnified over years. It’s like California’s recent inability to change the payroll system for state workers to do what the governor wanted, minimum wage for all until the budget was passed. Still no fix for the old payroll system, and the budget is 93 days overdue. – Anthony

Yep, the good old SA Reports were pretty easy to read, posted on the bulletin board at any semi-decent-size airfield.

I stopped flying before METARs came in, and haven’t bothered looking at them too much, to be honest, but what a shambles when I do take the time!!!!

What next? Encrypted to stop “the wrong people” reading them?

I think “Nuuk Godthab Luvthavn” should read “Nuuk Godthaab Lufthavn”. Godthaab comes from the Danish godt haab, meaning “good hope”. Lufthavn is Danish for airport. The Danish name Godthaab was changed to Nuuk.

REPLY: fixed, thanks – Anthony

GISS is completely screwed up; it always skews positive with each “fix” and “error”, and Hansen, who runs it, is completely untrustworthy as a scientist, as he gets arrested regularly during climate change protests. This goes to the credibility of the public science community inside NASA and the NOAA. Hansen needs to be moved to a new position, and the data and the corrections for GISS need to be revisited. The U.S. government is risking a Climategate by leaving these guys in charge, and we will have lost a generation if climate change is real, or wasted a lot of money fixing a false problem if it is not.

Credibility and full attention to every detail is required for great science, GISS does not have it. Climategate II is coming, and NASA should know it.

Fascinating.

I used to have the impression that data like these were collected and collated by dedicated individuals, usually wearing white lab coats, who would diligently record the information and relay it accurately to others.

The system you describe is more SNAFU than one would expect, especially with the importance of how the data are interpreted and used to bolster far reaching policy decisions.

An overhaul is long overdue.

Correction needed?

Should be “little.” Tough error to spot.

REPLY: Thanks, all that work I put in and all people can do is point out typos 😉 fixed -A

What a fascinating and thorough account. Is this problem the same worldwide (with the increased numbers of airports used by GHCN) or is a missed autotranslation of the METAR reports more likely at locations where there is great variability in weather conditions?

REPLY: Thanks. I think American collection systems (NCDC does the whole GHCN which GISS then uses) have trouble getting reports from other countries correctly formatted (as alluded to at NCAR/UCAR). This leads to a lot of dropped data.

The missing “M” problem shows up worst at cold, high latitudes, because an M25/M27 that gets coded as 25/M27 turns -25C into plus 25C… a huge difference, and only one error like that in one month is enough to raise the monthly average a degree or two. When you are looking for less than a degree of AGW signal, that stands out with confirmation bias… just look at all the red, yellow, and orange above 60N in the GISS map – Anthony

Anthony-

I agree there may not be active manipulation in the missing data; what is being actively manipulated is the rise caused by missing data, wherein they increase the numbers via manipulation of missing data, and there is manipulation in the correction. Your recent article caught that:

http://wattsupwiththat.com/2010/09/30/amopdo-temperature-variation-one-graph-says-it-all/

“Note this data plot started in 1905 because the PDO was only available from 1900. The divergence 2000 and after was either (1) greenhouse warming finally kicking in or (2) an issue with the new USHCN version 2 data.”

Their mere mention of possibility 2) above is as close as you will ever see to one scientist calling another a liar in a peer reviewed paper. This stuff sneaking in bodes very badly for GISS over the next year or two. The tide is turning, the real scientists are taking back over.

As I’ve mentioned before, we tend to think that days gone by were not very different from today. We forget how revolutionary things were in their time that are today so commonplace that we don’t need to think about them. MP3s are recent. DVDs and CDs are recent. Cell phones are recent. ASCII as an overwhelming standard is recent. Radial tires are recent. Jet engines are recent. Before all of those things there was something else that we used for the same or similar function, and in those days those things were the standard. In 100 years almost all of our technology and lives will be completely different, since things will change in ways that are impossible to predict.

It is completely pointless to compare weather records from ANY previous time with today, because the records were kept for different purposes than they are now. 100 years ago an airport required a windsock… period. 50 years ago they needed the temperature, RH, wind speed and direction. Today we need as much information as possible, as accurately as possible, because who is going to try operating a $200M plane when they aren’t absolutely certain?

As a Canadian, I know that the only really important temperature is 0C. You need to know when it’s above or below freezing. Other than that range, there’s colder, and warmer. The difference between 25C and 27C is essentially meaningless, however the same difference between -1C and 1C is extremely important.

Any time I see a “spliced” temperature record I know that it is completely valueless. You can’t compare a visually daily read mercury-in-glass thermometer measurement with a 5 minute sampled thermistor record.

Oops – was ranting. Anyway, the problem with throwing out METAR is that none of the past records will then have any value, and we would essentially be starting at zero. The real issue is that some people put far too much value on older records, and unless you continue keeping records in the exact same format you can’t compare to older records.

Once again, what jumps out is the need to take just a small, even tiny, proportion of the worldwide stations available, and do real quality work with each. By hand. With love. With citizen scientists on board. At a fraction of the current price. Quantify the local station issues, moves, instrumentation, etc., and the UHI, using McKitrick’s work, the work here, and the Russian work shown at Heartland as preliminary standards for UHI.

It’s so obvious, with a bit of patient digging, that there really has been no serious or unnatural temperature rise. And now, thanks Anthony for this superb analysis to highlight likely causes for the recent funny figures and funny trends, that should put the professionals to shame. How do you manage it? And why are the professionals so slow to say Thank You???

“Looking at the data from 1900 to 2008, where there are no missing years of data, we see no trend whatsoever. When we plot the homogenized data, we see a positive artificial trend of 0.74°C from 1900 to 2007, about 0.7°C per century.”

How do they justify these adjustments? We see this time and again with raw/homogenized data, where it looks like it’s adjusted to insert a similar upward trend.

Since it looks like a duck, and quacks like a duck, they should have a VERY good explanation as to why it is anything but a duck IMO.

Worthy of McI, but are you going to change the name WUWT to “Climat Audit”?

(I have used all the aviation reports you mention; for pilots there was/is no problem there.)

Weather matches annual sunspot activity.

Climate matches sunspot cycles.

People have no idea what a drop in activity will do to annual weather and climate change. Few study it.

So why does some weather man place a weather monitor within a mile of an airplane terminal?

“Lazy” is the answer.

And I add, it is hot standing behind a prop plane in revved motion. Notice where the equipment is. It is in the revved space of every plane that preps for leaving and catches anything coming in.

From previous post:

REPLY: Thanks, all that work I put in and all people can do is point out typos 😉 fixed -A

Sorry about that, I should have used my “Dear Moderators” tag as I expect those to be deleted. The graphs hadn’t even loaded (dial-up) and I was just reading the text, thought I should slip in that note early. FWIW it is thorough, well researched, and informative, and I say that without even having really read it yet.

Thanks for all your effort, Anthony. We’ve got enough problems with these extreme-latitude airports, with their weather stations beside the black tarmac all year round, without any more added.

Orkneygal says: “If your goal is to manipulate the data in untraceable ways to suit your political agenda, why would you want to change the current reporting system?”

REPLY: I don’t think there’s active manipulation going on here, only simple incompetence, magnified over years. – Anthony

Anthony, there is a mid-way explanation: that only “unwanted” problems are ever tackled. That is to say: if ever the system has errors producing temperature readings that are too low, they “adjust” the figures to increase the reading, but clearly as they are more than happy with errors that increase the readings, there is no incentive whatsoever (negative incentive) to tackle these issues.

Who knows at what point “suspecting there may be a problem … but not having the time” becomes active fraud of: “knowing there is a helpful problem and not allocating the time”?

There is a free Windows utility at NirSoft.com called MetarWeather – METAR decoder. This is the page …

http://www.nirsoft.net/utils/mweather.html

Have not used this one myself but I can say with certainty that his utilities are excellent. And there are tons of them there, all free.

Thank you Anthony for such a detailed audit. Truly worthy of Steve McIntyre. Thank goodness there are “amateurs” around who care about accuracy and attention to detail.

Just an idle observation.

Under what circumstances will the known errors in observation and reporting lead to an unjustified apparent increase in temperature… and which will lead to a decrease?

Seems to me that it’s quite easy to make a thermometer read high, but a lot more difficult to make it read low… even if all the follow-up stuff is done entirely correctly.

METAR wasn’t much of an improvement over the old SAs. We used to have symbols for sky condition: a circle for clear, a circle with a vertical bar for scattered, two bars for broken, and a cross in the middle for overcast. Never could remember whether H stood for haze or hail. Fortunately we went to a private forecaster for my final years.

I get the impression there’s a lot of good data out there that we could recover with a more robust data conversion program, and lessen our reliance on Filnet imaginary data.

I do notice that the observers in the early years had the same trouble our stateside observers had – reading thermometer temps too high, which required downward adjustment by our present climate data crunchers. It must have been the lack of CO2 in the atmosphere back then, because the problem seems to be worldwide.

Anthony: “So, it seems clear, that at least for Nuuk, Greenland, their GISS assigned temperature trend is artificial in the scheme of things. Given that Nuuk is at an airport, and that it has gone through steady growth, the adjustment applied by GISS is in my opinion, inverted.”

Is this aspect more than just a programming error?

Where is the temperature adjustment for 1979, when this airport was built 2.5 miles away and at 283 feet elevation? Seems like a big change, since I doubt many weather observers would have hiked out to take daily readings.

PS Anthony, I found this picture while trying to find the airport history. It shows a closer view of the screen.

http://community.webshots.com/photo/fullsize/2586822000028795203gdgcKn

“Here’s February 2008, also missing Nuuk, but now with another airport added, Mittarfik:”

Did you mean “Prins Christian Sund”?

Temperatures in Greenland

http://www.dmi.dk/dmi/index/klima/klimaet_indtil_nu/temperaturen_i_groenland.htm

The missing data can be found on the homepage of DMI, the Danish Meteorological Institute, http://www.dmi.dk/dmi/index/gronland/vejrarkiv-gl.htm where “vælg by” means “choose city”. Choose Nuuk to get the monthly numbers back to January 2000.

REPLY: Thanks! – Anthony

It is interesting that the raw data sets show no temperature change, zero anomaly, yet the ‘corrected’ data sets assume that thermometers over-read early in the record, so the new version shows a rising trend. Amazing what you can do fiddling with the figures.

The position of the Stevenson screen does not help matters.

Nice work and analysis!

Now that these data never reach paper and the format is just a (very poor) interface between different software packages, the obvious question is: Why not use XML for this purpose? XML was made for this kind of thing. It will eliminate the problem of missing fields etc.

So the proper authority should revise its standard and format the METAR information using XML.

IMHO, that is.
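As a rough sketch of the XML idea, here is one way a decoded observation might be wrapped in XML with Python’s standard `xml.etree.ElementTree`. The element and attribute names (`observation`, `temperature`, `units`, `missing`) are hypothetical and are not taken from any official schema; the point is only that a missing field becomes an explicitly flagged element instead of a misplaced or silently dropped token.

```python
import xml.etree.ElementTree as ET


def observation_to_xml(station, time_utc, temp_c, dewpoint_c):
    """Build a small XML record for one observation.

    Element names are hypothetical, for illustration only.
    A value of None is written as an element with missing="true"
    instead of being dropped or replaced by a magic number.
    """
    obs = ET.Element("observation", station=station, time=time_utc)
    for name, value in (("temperature", temp_c), ("dewpoint", dewpoint_c)):
        el = ET.SubElement(obs, name, units="degC")
        if value is None:
            el.set("missing", "true")
        else:
            el.text = str(value)
    return ET.tostring(obs, encoding="unicode")


# Values from the BGGH 191950Z report quoted in this thread (M01/M04).
print(observation_to_xml("BGGH", "191950Z", -1, -4))
```

A receiving program can then validate the record against a schema and refuse it outright if a required element is absent, rather than guessing which field a stray token belongs to.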

Fascinating, absolutely fascinating!

Sherlock Holmes would have been MOST impressed with this investigation.

Thanks for all the very hard work, Anthony.

Data issues, data handling issues & data homogenisation.

Or:

“Lies, damned lies & statistics”?

Monthly:

http://www.dmi.dk/dmi/vejrarkiv-gl?region=7&year=2010&month=9

Anthony,

This article with your previous ones mentioning METAR clearly illustrate that the reporting is broken by design.

Even in the days of Baudot encoding, one could type e.g. NULL instead of 99999 or whatever “magic value” to represent an unknown number. It is absolutely ludicrous that best practice in data collection, management and processing isn’t being used for these vital functions. I use the word “ludicrous” as the least-bad adjective.
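The magic-value point can be illustrated with a short Python sketch that turns a numeric field into `None` (Python’s equivalent of NULL) when it holds a sentinel. The sentinel strings used here (`99999`, `-9999`) and the 0.1 scale factor are assumptions for illustration; each real format documents its own conventions.

```python
from typing import Optional

# Hypothetical sentinel strings; real formats each define their own.
MISSING_SENTINELS = {"99999", "-9999", ""}


def parse_field(raw: str, scale: float = 1.0) -> Optional[float]:
    """Return the field as a float, or None if it is a missing-value sentinel.

    Returning None means a missing reading cannot accidentally flow
    into an average as 99999 or -9999.
    """
    text = raw.strip()
    if text in MISSING_SENTINELS:
        return None
    return float(text) * scale


readings = ["  -12", "99999", "   35", "-9999"]
values = [parse_field(r, scale=0.1) for r in readings]
present = [v for v in values if v is not None]
print(values)                       # None marks the two missing readings
print(sum(present) / len(present))  # mean of the real readings only
```

With an explicit `None`, any code that forgets to handle the gap fails loudly (you cannot add `None` to a number) instead of quietly producing a wild average.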

Great work on the Nuuk weather data analysis.

Regarding the errors introduced by METAR and its applications, a better data format is XML; it can remove much of the ambiguity found in ad-hoc formats like METAR. XML’s weakness is its larger size, but with improved data transmission and storage capacity, the size of XML is not an issue.

There are some efforts to standardize the collection and exchange of weather information in XML. A major one is by EUROCONTROL – the ‘Weather Information Exchange Model’ (WXXM) which was released in 2007. It was followed by a model of weather data – Weather Exchange Conceptual Model (WXCM) released in 2008.

More recently the WXXM was updated as a result of the WXCM and an XML schema was created – the Weather Information Exchange Schema (WXXS).

See http://www.eurocontrol.int/aim/public/standard_page/met_wie.html

The wunderground issue with temps being cut off is something that has shown up this week. It seems like for some reason temps are automatically cut off when they go below 0 C. The same thing happens with Norwegian stations as well.

If there is no active manipulation of data, how do you explain the almost vertical, disconnected end-2010 line? Or will this disappear as more data are reported? Is this the only station where end-of-year data are recorded in the middle of the year?

Super research; surely this should prompt a peer-reviewed paper by someone with more time available than Anthony.

There have also been some worthy suggestions in the comments, which IMHO should be passed to the team reviewing the World Temperature Records.

Lucy Skywalker says:

October 3, 2010 at 1:07 am

Once again, what jumps out is the need to take just a small, even tiny, proportion of the worldwide stations available and do real quality work with each. By hand. With love. With citizen scientists on board. At a fraction of the current price. Quantify the local station issues, moves, instrumentation, etc., and the UHI, using McKitrick’s work and the Russian work shown at Heartland as preliminary standards for UHI.

It’s so obvious, with a bit of patient digging, that there really has been no serious or unnatural temperature rise. And now, thanks Anthony for this superb analysis to highlight likely causes for the recent funny figures and funny trends, that should put the professionals to shame. How do you manage it? And why are the professionals so slow to say Thank You???

As you say, there is a complete lack of quality management in the keeping of these records. Anthony alluded to the reason: the records were seen as transitory, and any error would be corrected in the next hour’s report. Nowadays, that lack of quality assurance in safety-critical data should not be acceptable, especially as there are numerous methods of ensuring accurate data entry and secure transmission and decoding of data.

The professionals will not like change, no-one does, especially if it is an ‘outsider’ pointing out that they have let things slip. It is of course always possible that there are others who find that the poor data gives a good excuse for ‘adjustment’.

Thanks Anthony.

Very interesting, educational, and perceptive.

Another example of why WUWT is #1.

Wow. Just wow.

I’m thinking that the Venn diagram of Weatherman, Pilot, Scientist, Computer Nerd and Detective must be a lonely place. You’re bringing us all along – but these little audits are simply amazing.

Thank you, THANK you for your hard work and leadership.

The two METARs from Nuuk Airport in the article are NOT valid; the equals sign (=) at the end is missing. See below:

METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989

METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988

They were probably issued manually by the observer as a telegram; an automatic, software-generated METAR will always have the equals sign (=) at the end.

It’s unfortunately a well known problem in Greenland.

Svend

Observer in Kangerlussuaq
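Svend’s point about the terminating equals sign suggests a simple completeness check a decoder could apply before trusting a report. This is a minimal Python sketch, assuming (as Svend describes) that a machine-generated METAR ends in `=`. Whether a pipeline should reject, flag, or still decode an unterminated manual report is a policy choice, so the hypothetical `check_metar` function only flags it.

```python
def check_metar(report: str) -> dict:
    """Split a raw METAR into groups and flag a missing '=' terminator.

    Assumes, per the observer's note in this thread, that a complete
    machine-generated report ends with '='. Unterminated reports are
    flagged rather than discarded.
    """
    text = report.strip()
    terminated = text.endswith("=")
    if terminated:
        text = text[:-1].rstrip()
    groups = text.split()
    return {"terminated": terminated, "groups": groups}


manual = "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989"
result = check_metar(manual)
print(result["terminated"])   # False: no trailing '='
print(result["groups"][-2:])  # ['M01/M04', 'Q0989']
```

Keeping the flag alongside the decoded groups lets later processing count how many reports from a station arrive unterminated, which is exactly the kind of quality statistic nobody seems to be tracking.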

Great Post.

Yes, XML would be the way to go. It was designed with data validation in mind. Everything I read about climate data seems to suggest the handlers and archivers of this data are struggling with partial solutions to half-century-old problems. They need some modern engineers to fix their systems so they can get decent data to actually do science with…

$3k can buy a RAID 5 terabyte server. Online backup solutions with rsync are cheap. Teenagers are building data loggers for cats with GPS-aware Arduinos. There’s no excuse for extending temperature data problems forward other than entrenched government systems and the fact that the problems and weaknesses allow exploitation.

Solomon Green @ October 3, 2010 at 4:45 am

The “ability” to record “data” from future time periods is truly awe inspiring. That demonstrated “ability” should dispel any questions about current and past data.

Whodathunkit?

Wow, Anthony! Very nice. Very thorough, very complete. While you’re very familiar with most of the nuances of climate reporting, I can’t imagine the work hours to produce this. Well done.

My question. You’ve laid out many issues with the reporting and interpretation systems in place. Now, other than the readers here at WUWT, who do you tell? Does GISS not have any auditors for their data? If they do, they should be fired. Though very detailed, the end product of GISS is what carries most significance with me and probably the rest of the readers here, the GISS graphs. In the end, the historical graph presents an all-too-familiar pattern. The further away from present, adjust downward, then adjust upward with imaginary data. Keep up the good work, one day, someone in power will see this and act upon it………..hopefully, not in the manner the 10:10 people would wish.

What an elegant piece of investigation!! Many thanks from all of us.

I notice that there is not a single comment from the warmist brigade – don’t they ever read this or are they just too afraid to show their miserable, overfunded heads above the parapet?

Geof Maskens

As a programmer, I can say that METAR is a very poor way to transmit data. That it was created by government is not a surprise.

There are many common ways to transmit data without errors. XML was suggested, and that would work fine and be human readable. If the XML file were then zipped (or tarred and gzipped), you could reduce the file size and detect transmission errors via the archive’s checksums, and still be in a widely accepted format.
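To make the compression and error-detection idea concrete, this Python sketch compresses a block of text with the standard `zlib` module and verifies a CRC-32 on receipt. ZIP and gzip both store a CRC-32 of the uncompressed data; plain tar only checksums its own headers, so it is the compression layer that catches a corrupted payload. The payload and the `pack`/`unpack` helpers are made up for illustration.

```python
import zlib
from typing import Tuple


def pack(payload: bytes) -> Tuple[bytes, int]:
    """Compress the payload and return it with a CRC-32 of the original bytes."""
    return zlib.compress(payload), zlib.crc32(payload)


def unpack(blob: bytes, expected_crc: int) -> bytes:
    """Decompress and verify; raise ValueError if the CRC does not match."""
    data = zlib.decompress(blob)
    if zlib.crc32(data) != expected_crc:
        raise ValueError("CRC mismatch: data corrupted in transit")
    return data


# Made-up, highly repetitive payload, like verbose XML or padded records.
payload = b"<temperature units='degC'>-1</temperature>\n" * 200
blob, crc = pack(payload)
print(len(payload), "->", len(blob), "bytes")
assert unpack(blob, crc) == payload
```

Repetitive markup compresses very well, which is why XML’s verbosity costs little once it is compressed for transmission.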

“I’m thinking that the Venn diagram of Weatherman, Pilot, Scientist, Computer Nerd and Detective must be a lonely place.”

Weatherman, cloud lover and ex-pilot is an awful lot lonelier.

I supported the development of the Automated Flight Service Station (AFSS) for the FAA a number of years ago, and one of the problems the contractor had was that there was no fixed format for the weather messages. As you noted, the messages were originally sent by teletype and were hand-formatted. They came up with an interface document by recording the messages and figuring out what the most likely formats might be. For example, people would abbreviate thunderstorm THND, THNDR, TSTM, etc.

One discussion I was in was why there were two line feeds at the end of a severe weather message. One older flight service guy told us it was because there was a requirement for the receiver to write his signature after the message on the teletype to acknowledge that he had seen it. Try doing that on a computer screen!

You’d have to look at the raw messages to see why they were rejected. It could very well be that it depends on who was on duty when the messages were sent and whether that person had a “unique” way of interpreting the format.

With modern data compression, repeated field values (like blanks / white space) would be compressed out anyway. I’d vote for going back to something like the simple fixed format….

FWIW, if they try to deflect this by saying “Use ASOS, it’s better anyway”, I think I’ve found an “issue” in the ASOS system (at least at San Jose Intl. and Phoenix Sky Harbor).

http://chiefio.wordpress.com/2010/10/02/asos-vs-sjc-vs-nearby-town/

http://chiefio.wordpress.com/2010/10/01/sjc-san-jose-international/

GIStemp makes up the annual value from ‘seasonal’ values, with three months in a ‘season’. BUT… It can make a season with an entire month missing (and this is after it has ‘made up’ values for missing months from “nearby” places up to 1000 km away during ‘homogenizing’ so who knows what is in the months that are ‘there’… and those months may have missing days..) AND it can make a year with an entire missing season. So you could be missing all of winter and two months on each side and still get an annual value. Not only does this hide holes from the missing data, but it hides the existence of the root problem as well…

So even for places that DO have a continuous series in the GISS graphs, you have no idea where the data really are from and how much of it is really missing.

A bunch of missing days can be covered over in making the monthly mean that goes into GHCN. Then GIStemp can ‘fill in’ missing months from somewhere else. If there are at least 2 months in any ‘season’ it makes the whole season, and if one whole season is gone, it still makes an annual number. So what data actually DID go into the annual number at the end? Nobody knows.
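The averaging rule described above can be sketched in Python. This follows the commenter’s description only (three-month seasons, a seasonal mean from at least two of its months, an annual mean from at least three of the four seasons) applied to plain monthly values for simplicity; it is not GIStemp’s actual code, and the December-of-the-previous-year winter convention is an assumption. It does show how a year can still receive an annual value with a large hole in it.

```python
from statistics import mean
from typing import Optional, Sequence

# Meteorological seasons: index 0 is the previous year's December,
# indices 1..11 are January..November.
SEASONS = {"DJF": (0, 1, 2), "MAM": (3, 4, 5), "JJA": (6, 7, 8), "SON": (9, 10, 11)}


def met_annual(months: Sequence[Optional[float]],
               min_months: int = 2, min_seasons: int = 3) -> Optional[float]:
    """Annual mean built from seasonal means, per the rule described above.

    `months` holds 12 values (previous December, then January..November),
    with None for a missing month. A season needs `min_months` of its 3
    months; the year needs `min_seasons` of its 4 seasons.
    """
    seasonal = []
    for idx in SEASONS.values():
        present = [months[i] for i in idx if months[i] is not None]
        if len(present) >= min_months:
            seasonal.append(mean(present))
    if len(seasonal) < min_seasons:
        return None
    return mean(seasonal)


# Hypothetical year: all of winter missing, plus March and November,
# yet an annual value is still produced.
year = [None, None, None, None, -2.0, 2.0, 6.0, 8.0, 7.0, 3.0, 0.0, None]
print(met_annual(year))
print(met_annual(year, min_months=3, min_seasons=4))  # None under a strict rule
```

Running the same station through both rules and comparing the results is one cheap way to see how much of an “annual” record is really there.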

Yes, I do mean “nobody”.

It’s all done by automated computer codes “homogenizing” filling in and “adjusting” and interpolating and extrapolating and, well, making stuff up. No person need apply.

Then they pronounce it God’s Own Truth to 1/100 C.

And here you show that it’s based on data that can swing from -20 C to 20 C by coding error and simply be dropped wholesale sometimes by crappy code.

Oh, I’d also look closely at those METARs for data that looks extreme. The QA process for USHCN (that I’d expect to be duplicated elsewhere) uses an average of ‘nearby’ ASOS stations as a ‘Procrustean bed’ of sorts for the daily values. Any too long or too short get replaced with an average of the ASOS votes. Also, any beyond some number of std deviations from the past history of the station get dropped. Which leads me to wonder how much history has to be missing for other reasons before you start requiring that winter extreme lows be within a few std dev of summer averages….
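The two QA steps described here, rejecting values too many standard deviations from the station’s history and replacing values that disagree with a neighbour average, might look roughly like this in Python. The thresholds (3 standard deviations, a 5 °C tolerance against neighbours) and the `qa_daily` function are made-up illustration values, not USHCN’s. The example also shows the concern raised above: with a history skewed toward summer, a genuine winter low gets thrown out.

```python
from statistics import mean, stdev
from typing import Optional, Sequence


def qa_daily(value: float, history: Sequence[float], neighbours: Sequence[float],
             max_sd: float = 3.0, tolerance: float = 5.0) -> Optional[float]:
    """Hypothetical daily QA pass modelled on the description above.

    1. Drop the value (return None) if it lies more than `max_sd` standard
       deviations from the station's own history.
    2. Otherwise, if it differs from the neighbour average by more than
       `tolerance` degrees, replace it with that average.
    """
    if len(history) >= 2:
        mu, sd = mean(history), stdev(history)
        if sd > 0 and abs(value - mu) > max_sd * sd:
            return None
    if neighbours:
        avg = mean(neighbours)
        if abs(value - avg) > tolerance:
            return avg
    return value


# Made-up history of mostly summer days: a real -25 C winter low is dropped,
# even though the neighbouring stations agree with it.
summer_heavy = [8.0, 9.5, 7.0, 10.0, 8.5, 9.0, 7.5]
print(qa_daily(-25.0, summer_heavy, neighbours=[-24.0, -26.0]))  # None
```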

As you put it, yes, the whole thing is a “mess” from start to end. They would have better results if they went back to LIG, eyeballs, paper and pen, and people thinking about what they do with the data.

(No, that’s not a ‘luddite’ view. It’s just saying they have so thoroughly screwed up the automation that they could not screw up the paper and LIG as much – it can’t make up fantastic values. They COULD have a well designed near perfect automated system, and they have tried, but even the ASOS ‘have issues’ the way they have done it. Frankly, I’m beginning to think that the amateurs and volunteer stations may make the best QA available on the “professional” system.)

the adjustment applied by GISS is in my opinion, inverted

this is what I’ve been saying for years.

for non-programmers it’s a relatively easy error to make, and a difficult error to find, to reverse a sign or counter in a loop. thus instead of applying the cooling adjustment from the initial point forward, the cooling is applied from the current year backward, resulting in more and more cooling adjustments being applied to the earliest data.

if you don’t expose your program to rigorous testing, you’ll probably never even see it, because the error isn’t apparent in the short term.
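The loop-direction mistake described here is easy to demonstrate with a toy example in Python. This is purely illustrative and is not GISS code: a linear adjustment meant to grow toward the present is applied with the index reversed, so the largest correction lands on the oldest data instead. Both versions apply the same total correction, so a check on totals alone would not catch the difference.

```python
def adjust(series, rate_per_step, reverse=False):
    """Apply a linearly growing adjustment to a series (toy example).

    Intended behaviour: the adjustment is zero at the first point and
    grows by `rate_per_step` each step toward the present. With
    reverse=True the index runs backwards, reproducing the bug.
    """
    n = len(series)
    out = []
    for i, value in enumerate(series):
        step = (n - 1 - i) if reverse else i
        out.append(value + rate_per_step * step)
    return out


flat = [0.0] * 5                          # a trendless made-up series
intended = adjust(flat, -0.1)             # cooling grows toward the present
buggy = adjust(flat, -0.1, reverse=True)  # cooling grows toward the past
print(intended)  # largest cooling on the newest point
print(buggy)     # largest cooling on the oldest point: a spurious warming trend
print(abs(sum(intended) - sum(buggy)) < 1e-9)  # same total correction
```

A unit test that checks the sign of the resulting trend on a synthetic flat series, not just the size of the correction, would catch this class of bug immediately.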

and I’ll bet that the GISS algorithm wasn’t a) coded by competent programmers b) thoroughly tested or c) documented. much like the UEA debacle.

of course, they would never open up their programming to analysis. they can never admit even that there “might” be a problem. it would blow the lid off of the entire warming pot.

Anthony, this is first-rate work. When smoothed, it’s even better!

Sure glad all those on-coming planes missed you.

RE: Method for Determination of “Weather Noise”.

For the month of June from 2001-2010, Tmean +/- AD, where AD is the classical average deviation from the mean, is 5.1 +/- 0.5 deg C. The range for Tmean is 4.3 – 6.4 deg C and the range for AD is 0.1 – 1.4 deg C.

I request comments on my proposal that the classical average deviation from the mean is a measure or metric for “weather noise”.
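For anyone wanting to try the proposed metric, here is a short Python sketch of Tmean +/- AD, where AD is the average absolute deviation from the mean as defined above. The ten June values are invented for illustration; they are not the actual station data.

```python
from statistics import mean


def mean_and_ad(values):
    """Return (mean, average absolute deviation from the mean)."""
    m = mean(values)
    ad = mean(abs(v - m) for v in values)
    return m, ad


# Invented June means (deg C) for ten years, for illustration only.
june = [4.3, 5.0, 5.2, 4.8, 6.4, 5.1, 4.9, 5.3, 4.7, 5.3]
m, ad = mean_and_ad(june)
print(f"Tmean = {m:.1f} +/- {ad:.1f} deg C")
```

Unlike the standard deviation, the AD does not square the deviations, so a single unusual year inflates it less.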

For an accurate determination of weather noise, Tmax +/- AD and Tmin +/- AD should be computed for each day of the entire record since sunlight is constant over the sample period of one day. Any variation of the mean Tmax and Tmin would then be due to various conditions or events of weather such as clouds with and without rain or snow, air pressure, humidity, dust, tides, haze, fog, etc.

Actually, for a “rough” determination of weather noise, we can use only several days of the year such as the equinoxes and solstices or one day from each month.

Using temperature data from the remote weather station at Quatsino, B.C., which is located on the northwestern coast and near the end of Vancouver Island, I found for Sept. 21 from 1900-2009:

Mean Tmax +/- AD: 290.2 +/- 1.8 K

Range for Tmax : 287.2 – 293.6 K

Range for AD : 0.5 – 3.3 K

Mean Tmin +/- AD: 282.6 +/- 1.5 K

Range for Tmin : 280.3 – 284.2 K

Range for AD : 1.2 – 2.2 K

From other and similar analyses of the data for March, June, September and December, I found grand mean AD for the Tmax and Tmin metrics is +/- 1.5 K.

Using a simple t-test, I also found that for p < 0.01 the means of two data sets usually differed by at least 1 K. For p < 0.001, the means of two data sets differed by at least 1.5 K.
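The t-test mentioned above can be reproduced with Python’s standard library alone. This sketch computes the pooled two-sample Student’s t statistic and its degrees of freedom; turning that into a p-value needs a t-distribution table or a library such as SciPy, which is left out to keep the example self-contained. Both samples are invented.

```python
from math import sqrt
from statistics import mean, variance


def student_t(a, b):
    """Pooled-variance two-sample t statistic and degrees of freedom."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


# Invented Tmax samples (K) for the same calendar day in two periods.
early = [289.0, 290.5, 288.7, 291.2, 289.9, 290.1]
late = [290.8, 291.9, 290.2, 292.4, 291.1, 291.6]
t, df = student_t(early, late)
print(f"t = {t:.2f}, df = {df}")
```

The pooled form assumes the two periods have similar variance; if the AD differs a lot between them, Welch’s version of the test is the safer choice.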

The big question is: if we analyze the temperature records of a reasonable number of remote weather stations with a long record of ca. 100 years, what would we get for the ADs? Can we also claim the AD is a measure of "natural variation" of climate for a region?


Thank you for a very clear, detailed, and informative article.

Enlightening, succinct and alarming (not new by Anthony – in addition to the enlightening, succinct & inspiring posts at WUWT!). I suggest a massive sharing of this post to afford the world’s population with another explicit example of the need for real time actual transparency and honest peer review of papers and “documentation” upon which our futures are being based.

Damn good work Anthony/WUWT!

I’ve enjoyed this one a lot, Anthony. However, please don’t let everyone think that the recent (stupid) discontinuity is the only one for Nuuk. There is a truly real one, occurring at September 1922, of about 1.1 deg C, and it happened in the space of a month or so. It endured at a constant level for several decades. In the period before the witching month another stable period had also endured for decades. You don’t believe this 😉 ! Well I could demonstrate it easily if only I knew how to post graphics (GIFs) to the thread. The step change occurs at all southern Greenland sites, and in Iceland too. If you’d like to see this, and if you wish, many other plots of related discontinuities, for example in well known indices like PDO and others, I could send them by email (what address?). Believe me, it’s not my imagination.

Robin – who’s done a large amount of work on climate data over the last 16 years.

david says:

Anthony: “So, it seems clear, that at least for Nuuk, Greenland, their GISS assigned temperature trend is artificial in the scheme of things. Given that Nuuk is at an airport, and that it has gone through steady growth, the adjustment applied by GISS is in my opinion, inverted.”

Is this aspect more than just a programming error?

I don’t know if it’s programming ‘error’ or ‘by design’ but it is a consistent part of GIStemp. I’ve benchmarked the first 2 steps of GIStemp and the data are ‘warmed’ by the process fairly consistently.

This is one of my older postings. Full of long boring tables of numbers, but it lets you see in detailed form what GIStemp did to the character of the data for some particular countries. It consistently makes changes of the form that would induce a warming trend.

http://chiefio.wordpress.com/2009/11/05/gistemp-sneak-peek-benchmarks-step1/

Step1 is the “homogenize” step and, IMHO, does most of the ‘screwing up’. It is then followed by STEP2 that does UHI correction – backwards 1/2 the time, and finishes the job. Step3 hides this mess via making the broken grid/box anomalies where it compares “apples to frogs” as the thermometer in a grid/box in the baseline is almost always NOT the one in the box today, so an anomaly comparing them is exactly what again?… BTW, with 8000 boxes (until recently) and about 1200 thermometers in GHCN in 2010, most of the boxes have NO thermometer in them. GIStemp makes its anomalies by comparing an old thermometer to a ghost thermometer in many cases. Perhaps most cases. It “makes up” a grid / box value from “nearby” thermometers up to 1200 km away. THEN it makes the grid / box anomaly. Sometimes even ‘ghost to ghost’… Such are the ways of ‘climate science’… When folks bleat that it’s done with anomalies and trot out examples of A thermometer being compared to itself: That is how it OUGHT to be done, but not how it IS done. Most of GIStemp’s breakage happens using straight temperatures and long before it makes the grid/box anomalies, and those are done ‘apples to frogs’ anyway. (Apples to ghost frogs? Just the nature of the analogy needed to describe it speaks volumes…)
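For readers wondering how a box can get a value with no thermometer in it, here is a Python sketch of distance-weighted averaging out to a 1200 km radius. The linear taper of the weight from 1 at the box centre to 0 at 1200 km is an assumption made for this illustration, chosen to match the radius mentioned above; the coordinates and anomalies are made up.

```python
from math import asin, cos, radians, sin, sqrt
from typing import Optional, Sequence, Tuple

EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points in kilometres."""
    p1, p2 = radians(lat1), radians(lat2)
    dlat, dlon = p2 - p1, radians(lon2 - lon1)
    h = sin(dlat / 2) ** 2 + cos(p1) * cos(p2) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def box_value(centre: Tuple[float, float],
              stations: Sequence[Tuple[float, float, float]],
              radius_km: float = 1200.0) -> Optional[float]:
    """Weighted mean of (lat, lon, value) stations around a box centre.

    Assumed weighting: 1 - d/radius_km, so a station at the centre counts
    fully and one at radius_km or beyond counts for nothing. Returns None
    when no station is in range (an empty box).
    """
    total = weight_sum = 0.0
    for lat, lon, value in stations:
        d = great_circle_km(centre[0], centre[1], lat, lon)
        w = max(0.0, 1.0 - d / radius_km)
        total += w * value
        weight_sum += w
    return total / weight_sum if weight_sum > 0 else None


# Made-up anomalies (deg C) at made-up coordinates; none sits at the centre.
stations = [(64.2, -51.7, 0.4), (61.2, -45.4, -0.2), (67.0, -50.7, 0.9)]
print(box_value((65.0, -48.0), stations))
```

Whatever the exact weighting, the box value is a blend of other places’ readings, so any error at one station is spread across every box within reach of it.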

And here we find out the ghost frogs have deformed and missing limbs to begin with…

Is there any graphical representation of flow of climate information, available anywhere?

For example:

Airports -> METAR -> GHCN -> CRU (and GHCN -> GISS)

This kind of incredible analysis alone should be sufficient to debunk the AGW claims.

More awesome work by Mr Watts. I am truly awed by his diligence and tenacity.

Now for my question: How do we turn all of this work into actionable items, agents for change? To whom do we write? How do we format such in-depth analysis for people (politicians I presume) who are used to one-liners and sound bites?

I had an opportunity to attend a conference last week where Robert Glennon of ASU spoke about his new book: “Unquenchable: America’s Water Crisis and What To Do About It”.

It was a very interesting presentation, little focus on climate change per se, but a lot about population growth, water use and responses to it. Haven’t read the book, but if it’s like the presentation, it’s probably a good read.

You might invite him to do a post.

Why is the station data even being used anymore for climate study?

There are so many drawbacks for reaching any valid conclusions with global averages that use station data. The number of devices that are “assumed” to be calibrated and function correctly over a range of temperatures is absurd. Each device matters because each one is used to represent such a vast area.

I strongly argue that satellite data should be the only source of data used in the era for which it is available. It is a single instrument (or a pair) measuring all points. That is an inherently more reliable system for collecting useful data.

Perhaps one of the most frustrating things in the study of the climate is the difference in the sources of temperature. A standard should be set and everyone should use that. The difficulty is as usual…. politics.

John Kehr

The Inconvenient Skeptic

Well, this Software Engineering cloud-loving pilot also thinks XML is the way to go.

Awesome analysis, Anthony. The only way I can remember METARS is Rod Machado’s:

Should Tina Walk Vera’s Rabbit Without Checking The Dog’s Appetite

Station Time Wind Visibility RVR Weather Clouds Temp Dewpoint Altimeter
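The mnemonic gives the order of the groups, and a decoder can exploit that order. Below is a rough Python sketch that labels each group of a METAR with regular expressions, tried here on the Nuuk report quoted earlier in the thread. The patterns cover only common forms and are simplified assumptions, not a complete implementation of the METAR code; groups that match nothing are labelled `unknown` rather than guessed.

```python
import re

# Simplified, assumed patterns for common METAR groups (not exhaustive).
PATTERNS = [
    ("time", r"\d{6}Z"),
    ("wind", r"(\d{3}|VRB)\d{2,3}(G\d{2,3})?(KT|MPS)"),
    ("wind_variation", r"\d{3}V\d{3}"),
    ("visibility", r"\d{4}|CAVOK"),
    ("rvr", r"R\d{2}[LCR]?/[PM]?\d{4}\w*"),
    ("weather", r"(\+|-|VC)?(MI|BC|PR|DR|BL|SH|TS|FZ)?"
                r"(DZ|RA|SN|SG|PL|GR|GS|BR|FG|FU|VA|DU|SA|HZ|SQ|FC|SS|DS)+"),
    ("clouds", r"(FEW|SCT|BKN|OVC)\d{3}(CB|TCU)?|NSC|SKC|CLR|NCD|VV\d{3}"),
    ("temp_dewpoint", r"M?\d{2}/(M?\d{2})?"),
    ("altimeter", r"[QA]\d{4}"),
]
COMPILED = [(label, re.compile(p)) for label, p in PATTERNS]


def label_groups(report):
    """Label each group of a METAR; the first two groups are type and station."""
    tokens = report.rstrip().rstrip("=").split()
    labels = [("type", tokens[0]), ("station", tokens[1])]
    for tok in tokens[2:]:
        label = next((name for name, rx in COMPILED if rx.fullmatch(tok)), "unknown")
        labels.append((label, tok))
    return labels


report = "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989"
for label, tok in label_groups(report):
    print(f"{label:14} {tok}")
```

Labelling every group, and counting the `unknown` ones, makes odd hand-typed reports visible instead of letting them silently shift fields.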

Nice to know GISS is at the forefront of technology and 2010 is the warmest ever…

GISS does not agree with anything but Hansen’s predictions.

Why is it used at all? It’s alarming, like Hansen.

What is really alarming is the false picture of temperature it generates, derived from faulty data interpretation plus bad siting issues.

What we have in GISS, Nuuk and the METAR system is data reporting that is worse than previously imagined.

Anthony et al.

GISS is not the only government climate outfit with automated quality control issues. According to Canada’s Auditor General, Environment Canada has similar problems, as outlined in the following report (http://www2.canada.com/story.html?id=3430304). This was briefly picked up by Canada’s MSM. It didn’t get legs because, I think, the Canadian public said “Ho hum, what else is new?”

For quality world climate reports, color Canada blank.

Another outstanding research effort, Anthony. Fascinating analysis, excellent detective work.

I notice that one of the pictures of the airport shows a couple of Dash 7’s parked on the tarmac, in front of the alleged Stevenson screen. The engines put out a fair amount of heat, and the props will push that back at the screen quite effectively.

REPLY: I don’t know for certain that is the weather station there; NCDC doesn’t know the exact location either. That’s why I’m asking readers for help. – Anthony

Further to the Auditor General’s report, a quote: “Human quality control of climate data ceased as of April 1, 2008. Automated quality control is essentially non-existent. There is no program in place to prevent erroneous data from entering the national climate archive”.

RSS Monthly for September is out:

http://www.remss.com/data/msu/monthly_time_series/RSS_Monthly_MSU_AMSU_Channel_TLT_Anomalies_Land_and_Ocean_v03_2.txt

More like: “Lies, damned lies, and climate science.”

I have codes to extract data from METAR reports as part of my work. Yes, they are an utter mess: human readable, but difficult to get a computer to parse (it’s hard to find EVERY case where the data comes through in a funny format).

Another GISS bites the dust….

Another GISS bites the dust….

And another one’s gone….and another one’s gone….

Excellent sleuth work, Anthony!

-Chris

Norfolk, VA, USA

Instead of adjusting the past downwards, as we see GISS do with this station, the population increase would suggest that if adjustments must be applied, they logically should cool the present.

It always burns me to see 1930s-era temperature readings “adjusted downward” to account for modern-day UHI, rather than the other way round. I am reminded of this as Los Angeles last week set a new record of 113 F that broke the thermometer. Any UHI adjustment is worse than a guess.

GISS (should I use two ZZs to end the acronym?)

I propose that one fine day when Hansen and all of his other co-conspirators there are sacked…or sacked, arrested and tried (lol), that Anthony be named its new director.

He seems to have a better understanding of proper temperature measurements than the turkeys currently running it.

Now folks, don’t take me the wrong way. I know there are plenty of extremely bright people working there, no doubt; it’s just that their leader is a former scientist who is now nothing but an activist.

And this tone, set by Hansen, from the top, shows in the GISS sloppiness at the poles.

-Chris

Norfolk, VA, USA

Thanks, Anthony and Steve, for yet another example of why it’s worse than we thought.

When do you guys sleep?

“geoff says:

October 3, 2010 at 9:38 am

I have codes to extract data from METAR reports as part of my work. Yes, they are an utter mess: human readable, but difficult to get a computer to parse (it’s hard to find EVERY case where the data comes through in a funny format).”

That the US did WX one way and the other countries did it another way was not good for international aviation. Thus came METAR, and it was something like a political compromise (including all the defects of both sides, of course).

At the time, Richard Collins of “Flying” magazine complained that it was no good because too MUCH info had to be transmitted over the then-limited connections. Remember that a transponder still only uses the digits 0 to 7 to save 1 bit.

Good catch in tracing down all the possible sources. The chief difficulty and problem lies with GHCN. GISS starts with the assumption that GHCN data is complete. They account for a few exceptions:

1. They use USHCN

2. They pull Antarctic data in.

3. They correct a couple of individual stations when they have been supplied the missing data.

They dont go checking for more data or different data sources. That’s the job of NOAA.

For their annual figure they adopt a rule that says you can calculate an annual figure with missing months. A good check would be to determine whether this rule biases the record high or low. That would be a good job for somebody willing to plow through thousands of stations for hundreds of months. Or you can download GIStemp, change the rule and see if it matters. (Since I dump any year that has fewer than 12 months of data, I’ll suggest that the result doesn’t change much.)

Rui Sousa says:

October 3, 2010 at 8:17 am

Is there any graphical representation of flow of climate information, available anywhere?

For example:

Airports -> METAR -> GHCN -> CRU (and GHCN -> GISS)

$$$$$$$$

All the info is out there if somebody wants to take the time to draw the chart.

I have a question that I haven’t seen asked…

Many stations can’t be used to measure local, let alone global temperatures. But if they’re no good for that, then what _are_ they good for? I mean, really, it either works or it doesn’t, right? Okay, so who pays for these things to not work as advertised?

Lost in admiration for your skill and tenacity. Brilliant.

Why didn’t your ‘M’ post some time ago produce a flurry of activity and corrections?

No doubt Fred Pearce will pick this up and write another article for the Sunday Mail.

Anthony, thank you for this extremely thorough investigation and analysis! So far, I have not been able to do more than an initial scan.

It caught my attention because I have had the fortune of spending quite a few days in Nuuk during the past eleven months. Moving toward more and more autonomy from Denmark, Greenland is enjoying the attention and tourist traffic that go along with the many facets of the “melting scares”. However, its increasingly self-confident and pragmatic population is keenly looking toward new resource development, from offshore oil and gas (compare with the Greenpeace action at the beginning of September…) to rare earth minerals and cyclically changing fisheries.

As an aside, there are decent temperature records from Southern Greenland, showing a steady decline in recent years. I will dig them up.

What is more obvious, based on what I see with GISS, GHCN, HADCRU and other products of the “Climate Illuminati” is that they care very much, but only when it supports their cause.

A bureau of standards for climate data, run independently of the climate science community, is badly needed. But even this must be held to rigorous, transparent standards if it is to be trusted.

Note to moderators/writers:

I’m just nit-picking here, but sometimes it can be a little hard to tell where an article has shifted from commentary to quotes, or from update back to original text. It would clarify things for your readers if, for example, the updates in this article were closed off with ‘End of Update’ or some such, where they are inserted in the middle of the article. There was a post recently concerning NASA/GISS, where it was easy to miss the switch from Anthony’s commentary to the article being commented on.

My apologies if this is something you are aware of, but consider the effort in fixing to be better spent elsewhere (or not at all!) – that’s your decision to make, not mine – but in case you’re not aware of it, if no-one says anything, you’ll never know.

Please don’t take this post as anything except the most minor constructive criticism. Peace, love, home-cooking and good weather to you all 🙂

This problem of GISS not being able to obtain data while the data are available by other means has been going on for years (Steve McIntyre has noted it in the past).

It seems to be a matter of priority. GISS’s priority seems to be getting the desired results. As long as the results they get are consistent with their stated hypothesis, they will see no reason to dig into the data, and might resist any such digging as a “waste of time and resources”, since clearly the results are “correct” and match the hypothesis.

Missing values allow them to calculate things. The more missing values they have, the more they get to calculate. These calculations tend to bias things more toward their hypothetical result and so are not really seen as a “bad thing”.

What needs to happen is for someone to go through and plug in all those missing values from all those stations from around the world and pump that through their software and see what comes of it but they know nobody has the time or resources to do that.

There needs to be an open database of climate data that all have access to. The problem is that the people who sell climate data are not going to provide it for free. I was surprised at how much it cost to obtain temperature archives. People are charging a small fortune for those data.

This is the perfect niche opportunity for some well-respected institution of higher learning who has no dog in the climate hunt one way or the other (which would rule out the UC system and most Ivy League schools). They could assemble a climate data base and make it available for other institutions to use. They would have no interest in the outcome, their only interest would be in the quality of the data in the database.

Probably never happen.

Anthony:

The coding languages you listed are not as important as whether they used regular expressions to parse the data or whether they tried to code some sort of pattern recognition themselves. Modern languages all have access to a regular expression library (sometimes with predictive pattern recognition), BUT old-time coders working from an old code base typically don’t use it. I think this is the problem you’re experiencing.

More modern languages like Perl or Python have this facility built-in. You may want to contact http://www.perlmonks.org to see if they will look at the problem for you. Alternatively, I could do it myself if you want to supply me with exemplars of all the weird output formats and the “rules”. I’ve done a few projects like this, and, it usually takes a few weeks of successive approximations to get it right. The fact that a whole team might be required for C or COBOL should not deter you.

REPLY: What we might have here is an opportunity to contribute a truly “robust” decoder that can be integrated into a wide variety of systems. Intriguing idea. – Anthony
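As a minimal sketch of the regular-expression approach, the Python below decodes the METAR temperature/dewpoint group. It assumes the standard `TT/TdTd` layout, in which an `M` prefix marks a value below zero (e.g. `M05/M10`) and the dewpoint may be missing (e.g. `03/`). The names `TEMP_GROUP` and `parse_temp_group` and the sample report are hypothetical illustrations, not code from any existing decoder.

```python
import re
from typing import Optional, Tuple

# Hypothetical pattern for the METAR temperature/dewpoint group, e.g. "12/08",
# "M05/M10", or "03/" (dewpoint missing). "M" means minus; values are whole degrees C.
# The lookarounds require the group to be a whole space-separated token, so RVR
# groups such as "R06/P1500" are not mistaken for temperatures.
TEMP_GROUP = re.compile(r"(?<!\S)(M?\d{2})/(M?\d{2})?(?!\S)")


def _to_celsius(token: Optional[str]) -> Optional[int]:
    """Convert a token such as 'M05' or '12' to an integer, treating 'M' as a minus sign."""
    if token is None:
        return None
    if token.startswith("M"):
        return -int(token[1:])
    return int(token)


def parse_temp_group(report: str) -> Optional[Tuple[int, Optional[int]]]:
    """Return (temperature, dewpoint) in whole degrees C, or None if no group is found."""
    match = TEMP_GROUP.search(report)
    if match is None:
        return None
    return _to_celsius(match.group(1)), _to_celsius(match.group(2))


if __name__ == "__main__":
    # Illustrative report string, not a real observation.
    print(parse_temp_group("BGGH 031150Z 07005KT 9999 FEW020 M05/M10 Q1012"))
```

The key design point is that the `M` is decoded explicitly rather than stripped, because a dropped minus sign turns a cold reading into a warm one.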

Why fix the code when it will only be used to disprove your theory???

Remember GISS and CRUT do a GREAT job of infilling data that is missing. It works better the more missing data they have, at least for them!!

Is it feasible to “crowd source” similar QC of data used for GISS and UCR analyses? The workflow you’ve used has proven robust – the challenge lies in correcting the data. Mosh and Chiefio are up to their elbows in analysis of the data, but is there a way to ‘cook book’ this for the rest of us à la Surfacestations? Or are all of these stations a unique mystery, a finite series of ‘one offs’?

kuhnkat says:

October 3, 2010 at 11:22 am

Why fix the code when it will only be used to disprove your theory???

Remember GISS and CRUT do a GREAT job of infilling data that is missing. It works better the more missing data they have, at least for them!!

$$$$$$$$$$$

“Infilling” has no noticeable effect on the answer. I use no infilling whatsoever in my approach, and the answer is the same. The reason for this is mathematically obvious.

Take 1, X, 3, where X is missing.

Average them: (1 + 3) / 2 = 2.

Now infill:

1, 2, 3

Average them: (1 + 2 + 3) / 3 = 2.

The problem with “infilling” and extrapolation is NOT that it gives you the wrong answer. The problem is that it doesn’t “carry forward” the uncertainty in the infilling operation. In the first example you have two data points. In the second example, you do NOT have three data points; you have two data points and a model (with error) of the third. So if you’re calculating a standard deviation, you don’t get to pretend (in the second example) that you magically have a third data point, although people do.

To recap: infilling and extrapolation (averaging) don’t (generally) bias your estimate. If you think they do, it’s very easy to test whether they do. What infilling and extrapolation do do is give you a false sense of certainty (smaller error bars) unless you account for it.
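The arithmetic can be checked with a short Python sketch using only the standard-library `statistics` module. The linearly interpolated middle value and the “naive” standard error (sample standard deviation divided by the square root of the count) are illustrative assumptions; the point is that the mean does not move, while counting the infilled value as a real observation shrinks both the spread and the apparent error bar.

```python
import statistics

# Two real observations with a gap between them ("1, X, 3" above).
observed = [1.0, 3.0]
# The same series with the gap filled by linear interpolation (a model, not a measurement).
infilled = [1.0, 2.0, 3.0]


def summarize(values):
    """Return (mean, sample standard deviation, naive standard error of the mean)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    se = sd / len(values) ** 0.5
    return mean, sd, se


for label, values in (("observed", observed), ("infilled", infilled)):
    mean, sd, se = summarize(values)
    print(f"{label}: mean={mean:.3f} sd={sd:.3f} naive_se={se:.3f}")
```

Both series average to 2, but the naive standard error drops from 1.0 to about 0.58 once the modelled point is counted as data, which is exactly the false certainty described above.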

LearDog says:

October 3, 2010 at 11:22 am

Is it feasible to “crowd source” similar QC of data used for GISS and UCR analyses? The workflow you’ve used has proven robust – the challenge lies in correcting the data. Mosh and Chiefio are up to their elbows in analysis of the data, but is there a way to ‘cook book’ this for the rest of us à la Surfacestations? Or are all of these stations a unique mystery, a finite series of ‘one offs’?

########

Ah, yeah. There are a whole host of crowd-sourced projects that would be great. I know of one guy who is working all by himself on reconciling the airport locations. He doesn’t post or spend his time commenting; he just plugs away at a known problem. As I’ve said a bunch of times, there are many known problems, and many problems that do not get attention because folks get distracted by the wrong issues. It’s getting a bit wearying stating it all over again. When it comes to problems that will have an impact on the final numbers (or at least have a chance to), the problems are:

1. the accuracy of metadata.

2. the uncertainty of adjustments.

3. reconciliation of data sources.

The problems are not:

1. using anomalies

2. dropping stations

3. UHI adjustments (it’s the input to the adjustment, not the math itself)

4. extrapolation

Which means that the real problems (let’s call them issues) are not with GISS, but rather with all the stuff they ingest. Now, GISS makes a nice target, but technically, if you want to make a difference in understanding the numbers, focus on NOAA. And from my perspective, it’s a bit annoying to have so much energy focused on the wrong area.

So, my wishlist:

1. The data flow diagram everybody has been too lazy to draw, clickable, with links to the underlying documents and files. I’ll contribute all my R code to read files and docs. (But I refuse to scrape web pages; it drives me batty.)

2. A mashup of the station inventories. Again, I’ll contribute the R code to pull metadata.

3. On its own damn server.

I’m gunna refuse to write any html because it sucks and drives me crazy.

I have a, probably naive, question regarding METAR. METAR reports an integer value for the temperature, so the actual temperature value will be rounded. Aircraft pilots need to know when the temperature is so hot that they must take special action for takeoff. For safety reasons, wouldn’t the practice be to round all METAR temperature reports up, e.g., 30.1 degrees C reported as 31 degrees C?

Regards,

Ian
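To see what Ian’s proposed convention would do to a record, here is a small Python comparison of round-half-up to the nearest degree versus always rounding up (a ceiling). The readings are hypothetical, and this sketch makes no claim about which convention METAR actually specifies; it only shows that a round-up rule would add a systematic warm bias of up to 1 °C per reading (about 0.5 °C on average if the fractional parts are evenly spread), whereas round-to-nearest errors stay within ±0.5 °C and tend to cancel.

```python
import math


def round_half_up(x: float) -> int:
    """Round to the nearest whole degree, with .5 going up (avoids Python's banker's rounding)."""
    return math.floor(x + 0.5)


def round_up(x: float) -> int:
    """Always round up to the next whole degree (the hypothetical 'safety' convention)."""
    return math.ceil(x)


# Hypothetical readings in degrees C.
readings = [30.1, 30.4, 30.6, 30.9, -2.3, -2.7]

nearest = [round_half_up(t) for t in readings]
ceiling = [round_up(t) for t in readings]

# Bias = mean of the rounded values minus mean of the true values.
mean_true = sum(readings) / len(readings)
bias_nearest = sum(nearest) / len(nearest) - mean_true
bias_ceiling = sum(ceiling) / len(ceiling) - mean_true

print("nearest:", nearest, f"bias={bias_nearest:+.2f}")
print("ceiling:", ceiling, f"bias={bias_ceiling:+.2f}")
```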

Oh, and I refuse to write any regular expressions because they too drive me batty:

pat <- "\\.([^.]+)$"

just to grab the file extension! And that took way too long to figure out.
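For comparison, the same pattern works unchanged in Python’s `re` module; the only difference from the R version is that a raw string avoids doubling the backslash. The function name `file_extension` and the sample file names are illustrative. (Python’s standard library also has `os.path.splitext`, which returns the extension with its leading dot.)

```python
import re
from typing import Optional

# Same pattern as the R snippet: a literal dot, then one or more non-dot
# characters captured up to the end of the string.
EXT_PATTERN = re.compile(r"\.([^.]+)$")


def file_extension(name: str) -> Optional[str]:
    """Return the text after the last dot, or None if the name has no extension."""
    match = EXT_PATTERN.search(name)
    return match.group(1) if match else None


if __name__ == "__main__":
    for name in ("v2.mean", "ghcnm.tavg.v3.qcu.dat", "README"):
        print(name, "->", file_extension(name))
```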

Anthony, there are a number of tools you can use to develop the regular expressions to decode the stream. In any case, I can test it.

Usually a careful audit of the data will reveal whether laziness and incompetence occurs in a biased fashion. People may tend to fix only what they want to fix.

Before the days when programmers could assign the value NaN (Not a Number) to a numerical data type, it was common to assign an unreasonable value like 999 to indicate missing data. If we could go back and just change the sign of all missing or erred data entries, making them a negative value instead of positive, I wonder if there would be a significant lowering of temperature in the record? Following the many articles at WUWT, one thing stands out for me. It seems that when errors in data occur, it always results in hotter temperatures.
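The concern is easy to illustrate: if a 999 sentinel slips through unmasked, it drags any average sharply upward, while masking it first gives the average of the real readings only. The sketch below uses hypothetical monthly values and NaN masking in plain Python; it says nothing about whether any particular dataset actually contains unmasked sentinels.

```python
import math

MISSING = 999.0  # hypothetical sentinel meaning "no data"

# Hypothetical monthly means in degrees C, with two months missing.
monthly = [-7.5, -7.8, MISSING, -3.1, 0.9, MISSING]

# Naive average: the sentinel is treated as if it were a real temperature.
naive_mean = sum(monthly) / len(monthly)

# Masked average: replace the sentinel with NaN, then skip the NaNs.
masked = [math.nan if v == MISSING else v for v in monthly]
valid = [v for v in masked if not math.isnan(v)]
masked_mean = sum(valid) / len(valid)

print(f"naive mean:  {naive_mean:.2f}")
print(f"masked mean: {masked_mean:.2f}")
```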

Are you sure it is an artifact of incomplete data?

Or an artifice of incomplete data.

Regards

“Which means that the real problems ( lets call them issues ) are not with GISS, but rather with all the stuff they ingest. ”

Exactly. Which is why I would like to see the “missing” values plugged in and the whole thing run through the mill again to see what pops out the other side. But there are some subjective pieces, such as deciding which way to adjust the values of a station for UHI.

But “liking to see” something and actually having the time and other resources needed to do it is another thing entirely.

Nuuk summer temperatures are still significantly lower than in the 1930s. Here is a graph of July average temperature: http://www.appinsys.com/GlobalWarming/climgraph.aspx?pltparms=GHCNT100AJulJulI188020090900110MR43104250000x

Do you think they are grappling with this at Exeter? Didn’t they ask for issues from outside the group? Is it too late? Maybe, Anthony, you should send them this critique. I believe, as Lucy does, that the mule work of reviewing the thousands of stations is going to have to devolve on volunteers outside the parish. Perhaps the Nuuk data problem should be sent directly to the Senate or Congressional committee that gave NCAR/NOAA their marching orders on the data quality issue, so they will be forced to deal with it. Otherwise, we will soon have the fourth whitewash of the year: “Yeah, we found a few issues, but a statistical evaluation of the data set shows that the effect on the trends is insignificant.”

When I saw this article, I thought: OK, how about September 2009, then?

In September 2009, the coldest September temperature registered was measured in Greenland: -46.1 °C at Summit, on the ice sheet:

http://www.klimadebat.dk/forum/vedhaeftninger/summit8799.jpg

http://www.klimadebat.dk/forum/vedhaeftninger/summitminus47b.jpg

http://www.dmi.dk/dmi/iskold_september_paa_toppen_af_groenland

This event was very likely accompanied by a severely cold September 2009 in Greenland.

So did GISS manage to publish September 2009 data for Nuuk?

http://wattsupwiththat.files.wordpress.com/2010/10/nuuk_giss_datatable.png

Nope. The only month missing for 2009 is … September.

Anthony congratulations on yet another outstanding piece of work.

You say “I don’t think there’s active manipulation going on here, only simple incompetence, magnified over years.”

Maybe that’s true, but you have to wonder at the fact that all the “incompetence” that gets uncovered always seems to be in the same direction…

When have we ever seen an “error” which showed LESS warming?

Very simple answer really, they took the thermometer inside so that they could have more light to read it 😉

If you check out WU’s blogs and such you may change your opinion of their agenda.

I have to agree with Anthony – simple plain incompetence at work in government, and I’ve previously opined that AGW hypothesis seems very much similar – the result of scientific incompetence, mostly due to the physical sciences being influenced by the imprecision of the social sciences and their consensus beliefs.

What prompts this view is the obvious sincerity many AGW advocates have concerning CO2 emissions. It’s the outcome, in American terms, of the Pelosis and Reids of this world doing science, and of what Feynman called Cargo Cult science: the science when you aren’t doing science.

And it’s the well meant policies of the well meaning that seem to cause most of humanity’s misery over the centuries.

Louis Hissink says:

October 3, 2010 at 3:43 pm

“And it’s the well meant policies of the well meaning that seem to cause most of humanity’s misery over the centuries.”

C.S. Lewis:

‘Of all tyrannies, a tyranny sincerely exercised for the good of its victims may be the most oppressive. It would be better to live under robber barons than under omnipotent moral busybodies. The robber baron’s cruelty may sometimes sleep, his cupidity may at some point be satiated; but those who torment us for our own good will torment us without end for they do so with the approval of their own conscience.’

So can someone tell me how we can measure global temperature change to an accuracy of 0.1 C if METAR coding drops decimals?

Final accuracy of data depends on the least accurate data in the dataset, not the most.

Well,

The entire GHCN V3 inventory is now progressing through geocoding. Damn, it takes long. If people want, I’ll see if I can provide access so that the crowd can source away.

Other points:

Missing Values: In GHCN, missing values are coded as -9999. No need to worry about dropped signs in this one; it’s been checked many, many times.
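For anyone who wants to check this themselves, here is a minimal Python sketch that reads one GHCN-M v3 monthly record and turns -9999 into an explicit missing value. It assumes the fixed-width layout described in the v3 README (station ID in columns 1-11, year in 12-15, element in 16-19, then twelve 8-character monthly blocks, each a 5-character value in hundredths of °C followed by the DMFLAG, QCFLAG and DSFLAG characters). The helper names and the synthetic record are hypothetical, not real station data.

```python
MISSING = -9999  # GHCN-M missing-value code


def parse_ghcnm_v3_line(line: str) -> dict:
    """Parse one record, assuming the v3 fixed-width layout described above.

    Values are converted from hundredths of a degree C to degrees C;
    -9999 becomes None so it can never be averaged by accident.
    """
    record = {
        "id": line[0:11],
        "year": int(line[11:15]),
        "element": line[15:19],
        "values": [],
        "qcflags": [],
    }
    for month in range(12):
        start = 19 + month * 8  # 0-based offset of this month's VALUE field
        raw = int(line[start:start + 5])
        record["values"].append(None if raw == MISSING else raw / 100.0)
        record["qcflags"].append(line[start + 6])  # QCFLAG follows the DMFLAG
    return record


def build_line(station_id: str, year: int, element: str, raw_values, qcflags) -> str:
    """Build a synthetic record in the same layout (hypothetical demo helper)."""
    # Each block: 5-char value, blank DMFLAG, QCFLAG, blank DSFLAG.
    blocks = "".join(f"{v:5d} {q} " for v, q in zip(raw_values, qcflags))
    return f"{station_id:11s}{year:4d}{element:4s}" + blocks


if __name__ == "__main__":
    raw = [-1050, -980, -9999, -320, 90, 410, 650, 610, -9999, -120, -540, -880]
    line = build_line("00000000000", 2009, "TAVG", raw, [" "] * 12)
    rec = parse_ghcnm_v3_line(line)
    missing = [i + 1 for i, v in enumerate(rec["values"]) if v is None]
    print(rec["year"], rec["element"], "missing months:", missing)
```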

crosspatch:

“Exactly. Which is why I would like to see the “missing” values plugged in and the whole thing run through the mill again to see what pops out the other side. But there are some subjective pieces, such as deciding which way to adjust the values of a station for UHI.

But “liking to see” something and actually having the time and other resources needed to do it is another thing entirely.”

What do you mean by missing values?

1. values that GHCN has thrown out because of QA?

2. Months that are dropped because of missing days in the month?

3. Data that somebody generated that GHCN did not use?

Three different issues:

1. You would have to get back to the source data.

2. This may be in the new QA flags, or you have to go to daily data.

3. You would have to source and verify that data.

Three big tasks. Any takers?

Here is how QA works in V3:

DMFLAG: data measurement flag, nine possible values:

blank = no measurement information applicable

a-i = number of days missing in calculation of monthly mean temperature (currently only applies to the 1218 USHCN V2 stations included within GHCNM)

QCFLAG: quality control flag, seven possibilities within quality controlled unadjusted (qcu) dataset, and 2 possibilities within the quality controlled adjusted (qca) dataset.

Quality Controlled Unadjusted (QCU) QC Flags:

BLANK = no failure of quality control check or could not be evaluated.

D = monthly value is part of an annual series of values that are exactly the same (e.g. duplicated) within another year in the station’s record.

K = monthly value is part of a consecutive run (e.g. streak) of values that are identical. The streak must be >= 4 months of the same value.

L = monthly value is isolated in time within the station record, and this is defined by having no immediate non-missing values 18 months on either side of the value.

O = monthly value that is >= 5 bi-weight standard deviations from the bi-weight mean. Bi-weight statistics are calculated from a series of all non-missing values in the station’s record for that particular month.

S = monthly value has failed spatial consistency check (relative to their respective climatological means to concurrent z-scores at the nearest 20 neighbors located