Above: map of mean temperature and departure by state for February 1936 in the USA, a 5 sigma event. Source: NCDC’s map generator at http://www.ncdc.noaa.gov/oa/climate/research/cag3/cag3.html

Steve Mosher writes in to tell me that he’s discovered an odd and interesting discrepancy in CRU’s global land temperature series. It seems that they are tossing out valid data that lies 5 sigma (5σ) or more from normal, in this case an anomalously cold February 1936 in the USA. As a result, CRU’s value for that month was almost 2C warmer than his analysis. That this month was an extreme event is backed up by historical accounts and US surface data. Wikipedia says about it:

The 1936 North American cold wave ranks among the most intense cold waves of the 1930s. The states of the Midwest United States were hit the hardest. February 1936 was one of the coldest months recorded in the Midwest. The states of North Dakota, South Dakota, and Minnesota saw their coldest month on record. What was so significant about this cold wave was that the 1930s had some of the mildest winters in US history. 1936 was also one of the coldest years in the 1930s. And the winter was followed by one of the warmest summers on record, which brought on the 1936 North American heat wave.

This finding of tossing out 5 sigma data is all part of an independent global temperature program he’s designed called “MOSHTEMP” which you can read about here. He’s also found that it appears to be seasonal. The difference between CRU and Moshtemp is a seasonal matter. When they toss 5 sigma events it appears that the tossing happens November through February.

His summary and graphs follow: Steve Mosher writes:

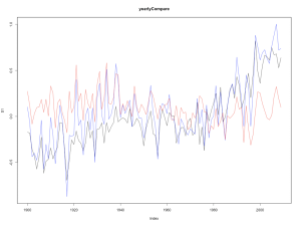

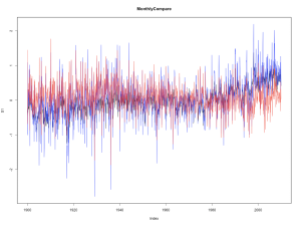

A short update. I’m in the process of integrating the Land Analysis and the SST analysis into one application. The principal task in front of me is integrating some new capability in the ‘raster’ package. As that effort proceeds I continue to check against prior work and against the accepted ‘standards’. So, I reran the Land analysis and benchmarked against CRU, using the same database, the same anomaly period, and the same CAM criteria. That produced the following:

My approach shows a lot more noise. Something not seen in the SST analysis which matched nicely. Wondering if CRU had done anything else I reread the paper.

“Each grid-box value is the mean of all available station anomaly values, except that station outliers in excess of five standard deviations are omitted.”

I don’t do that! Curious, I looked at the monthly data:

The month where CRU and I differ THE MOST is Feb, 1936.

Let’s look at the whole year of 1936.

First CRU:

    > had1936
    [1] -0.708 -0.303 -0.330 -0.168 -0.082 0.292 0.068 -0.095 0.009 0.032 0.128 -0.296

    > anom1936
    [1] "-0.328" "-2.575" "0.136" "-0.55" "0.612" "0.306" "1.088" "0.74" "0.291" "-0.252" "0.091" "0.667"

So Feb 1936 sticks out as a big issue.

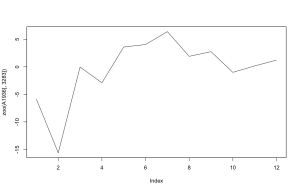

Turning to the anomaly data for 1936, here is what we see in UNWEIGHTED Anomalies for the entire year:

    > summary(lg)
          Min.    1st Qu.     Median       Mean    3rd Qu.       Max.         NA's
     -21.04000   -1.04100    0.22900    0.07023    1.57200   13.75000  31386.00000

The issue when you look at the detailed data is for example some record cold in the US. 5 sigma type weather.

Looking through the data you will find that in the US you have Feb anomalies beyond the 5 sigma mark with some regularity. And if you check Google, of course it was a bitter winter. Just an example below. Much more digging is required here and in other places where the method of tossing out 5 sigma events appears to cause differences (apparently in both directions). So, no conclusions yet, just a curious place to look. More later as time permits. If you’re interested, double-check these results.


Previous post on the issue:

CRU, it appears, trims out station data when it lies outside 5 sigma. For certain years where there was actually record cold weather, that leads to discrepancies between CRU and me. It probably happens in warm years as well. Overall this trimming of data amounts to around 0.1C (mean of all differences).

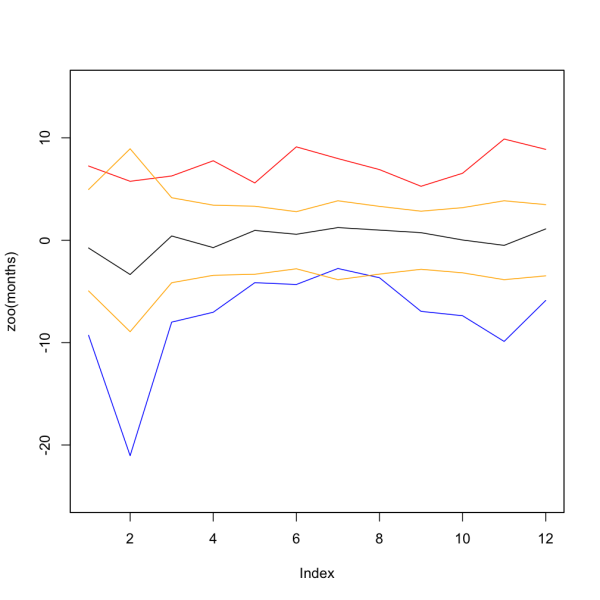

Below, see what 1936 looked like: the average for every month, max anomaly, min anomaly, and 95% CI (orange). Note that these are actual anomalies from the 1961-90 baseline, so that’s a -21C departure from the average. With a standard deviation around 2.5C, a 5 sigma trim means CRU is trimming departures greater than 13C or so. A simple look at the data showed bitterly cold weather in the US, weather that gets snipped by a 5 sigma trim.

And more interesting facts: if one throws out data because of outlier status, and the outliers are simply bad data, one can expect them to be uniformly distributed over the months. In other words, bad data has no season. So I sorted the ‘error’ between CRU and Moshtemp. Where do we differ? Uniformly over the months? Or does the dropping of 5 sigma events happen in certain seasons? First let’s look at when CRU is warmer than Moshtemp. I take the top 100 months in terms of positive error. Months here are expressed as fractions of a year, with 0 = January.

Next, we take the top 100 months in terms of negative error. Is that uniformly distributed?

If this data holds up upon further examination, it would appear that CRU processing has a seasonal bias: really cold winters and really warm winters (5 sigma events) get tossed. Hmm.


The “delta” between Moshtemp and CRU varies with the season. The worst months on average are Dec/Jan. The standard deviation of the delta for the winter months is twice that of other months. Again, if these 5 sigma events were just bad data we would not expect this. Overall Moshtemp is warmer than CRU, but when we look at TRENDS it matters where these events happen.
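The seasonal check described above can be sketched in a few lines. This is only an illustration, not Mosher’s actual R code: the function name `top_month_counts` and the toy `delta` series are hypothetical, and the series is assumed to be a flat list of monthly CRU-minus-Moshtemp differences that starts in a January.

```python
from collections import Counter

def top_month_counts(delta, n_top=100, largest=True):
    """Count how often each calendar month (0 = Jan) appears among the
    n_top most positive (largest=True) or most negative differences."""
    # Pair each value with its calendar month; skip missing values.
    indexed = [(d, i % 12) for i, d in enumerate(delta) if d is not None]
    indexed.sort(key=lambda pair: pair[0], reverse=largest)
    counts = Counter(month for _, month in indexed[:n_top])
    return [counts.get(m, 0) for m in range(12)]

# Toy series (an assumption, not real data): 10 years in which the
# Nov-Feb differences are made much larger than those of other months.
delta = []
for year in range(10):
    for month in range(12):
        winter = month in (10, 11, 0, 1)
        delta.append((1.0 if winter else 0.1) + 0.001 * year)

counts = top_month_counts(delta, n_top=40)
print(counts)
```

If the large differences were caused by bad data with no season, the counts would be roughly flat across the twelve months. A pile-up in a few months, as in the toy series, is the pattern the post describes.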

So CRU are a bunch of Tossers….?…….:-)

Am I missing something? I do not see any labels for the lines in three of Mosher’s graphs.

I wonder if there is code that does that in the Climategate software release.

I don’t know if anyone has taken much of a look at the Climategate code beyond what is in Harry’s Readme file.

Or, given the recommendations of increased openness at CRU, if the relevant source is readily available now.

Steve Mosher: Please add title blocks to your graphs that specify what the graphs represent and the color coding of the datasets. I have no idea which dataset is which.

Title blocks are time consuming but they give the viewers an idea of what you’re illustrating. The more info the better.

An old and wise rule-of-thumb in data analysis is ‘never discard data for only statistical reasons’, such as a 5-sigma cut-off. Only discard data on the basis of subject-matter expertise (e.g. declaring the values to be physically impossible, or so extraordinary as to be incredible) or specific investigation revealing measurement or other problems. Once again, the geographers and programmers behind climate alarmism have revealed a weakness in their statistical practice.

Five sigma from what, a thirty year average?

[REPLY – HadCRUt goes back to around 1850. ~ Evan]

A couple of quick thoughts:

Winter/cold temps are more volatile because air is drier. Therefore standard deviation in winter is higher.

In the same vein, for a normal distribution, a 5 sigma monthly event occurs at p = 3 x 10^-7. For 10,000 stations, this implies that one of them will see a 5 sigma event every 30 years or so. What are they talking about?
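The arithmetic behind that estimate can be checked with a short sketch. It assumes, as the comment does, that monthly station values are independent and normally distributed, and it counts only one tail (for example, cold extremes); the 10,000-station figure is the commenter’s round number.

```python
import math

def upper_tail(k):
    """One-sided probability that a standard normal value exceeds k sigma."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))

p_one_sided = upper_tail(5.0)            # roughly 3 x 10^-7
station_months_per_year = 10_000 * 12    # 10,000 stations reporting monthly
expected_per_year = p_one_sided * station_months_per_year
years_between_events = 1.0 / expected_per_year

print(f"p = {p_one_sided:.2e}")
print(f"expected events per year = {expected_per_year:.3f}")
print(f"years between events = {years_between_events:.0f}")
```

Under those assumptions the whole network would produce a single one-sided 5 sigma month only about once in three decades, which is why regular 5 sigma Februaries suggest the normal assumption does not fit.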

More BS from the CRU. The “consensus” continues to crumble.

Well clearly it is invalid, it does not fit the computer models, the CO2 induced climate change paradigm and propaganda or the political thrust behind the scam, so bin it!

Hide the decline?

And yet, “Military Games” in the APAC region exclude a very big chunk of the world in this area. Hummmm….

Seems to me, the military machine is growing in the APAC space. I’ll take wagers, there will be a war for resources (in particular water) in my lifetime in the APAC region, and, sadly, New Zealand might bear the worst brunt of that battle (well, they do have more “freely” available fresh water than Australia, or any other country in this space to be honest, does).

You know, it would be nice if we had some sort of profession where people gathered data, tried to ensure it was accurate, and recorded and disseminated such data along with trends and patterns. As opposed to fudging, deleting, massaging, and re-imagining data to fit an agenda.

I wonder what we would call such a profession?

The 1930’s in the USA has the lowest lows and the highest highs. I think there is good reason for this, in particular 1936. The PDO was about to flip into negative, but more important is perhaps the solar position. SC16 which peaked around 1928 was weak with a today count of around 75 SSN. Take off the Waldmeier/Wolfer inflation factor and this cycle would be close to a Dalton Minimum cycle. 1936 is near cycle minimum, so with the already weak preceding solar max the EUV values would be similar or less than today.

This is the pattern that looks to be occurring during low EUV, extremes at both ends of the temperature scale probably because of pressure differential changes that produce unusual pressure cell configurations that in turn form blocking patterns to jet streams. We have seen this occur in many regions over the past 2-3 years, Russia burning, South America freezing, Japan at record highs with Australia recording 30 year high snow falls and now amidst severe flooding like Pakistan. Go back a little further and we see record high temps in Australia with massive bush fires that rocked our world while the NH winter was a white out.

EUV is a big player of extremes, add to that the concurrent PDO and Arctic/Antarctic oscillations with the likelihood of stronger/more frequent La Nina episodes coupled with less ocean heat uptake, the stage is set for more extremes but with a downward trend.

You can see the code for this in the MET’s released version of CRUTEM in the station_gridder.perl

    # Round anomalies to nearest 0.1C - but skip them if too far from normal
    if (   defined( $data{normals}[$i] )
        && $data{normals}[$i] > -90
        && defined( $data{sds}[$i] )
        && $data{sds}[$i] > -90
        && abs( $data{temperatures}{$key} - $data{normals}[$i] ) <=
        ( $data{sds}[$i] * 5 ) )
    {
        $data{anomalies}{$key} = sprintf "%5.1f",
          $data{temperatures}{$key} - $data{normals}[$i];
    }
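For readers who do not use Perl, the same test can be sketched in Python. This is a paraphrase of the quoted fragment only, not a port of the rest of station_gridder.perl; the function name `screened_anomaly` and the example values are hypothetical, with the 2.5C standard deviation borrowed from the post above.

```python
MISSING = -90  # normals or sds at or below this are treated as missing

def screened_anomaly(temperature, normal, sd, n_sigma=5):
    """Return the anomaly rounded to 0.1C, or None if the value is skipped."""
    if normal is None or sd is None:
        return None
    if normal <= MISSING or sd <= MISSING:
        return None
    if abs(temperature - normal) > sd * n_sigma:
        return None  # further than n_sigma standard deviations from normal
    return round(temperature - normal, 1)

# Hypothetical station-month: normal of -8.0C and sd of 2.5C,
# so the cutoff is 12.5C either side of normal.
kept = screened_anomaly(-18.0, -8.0, 2.5)     # departure of -10C is kept
dropped = screened_anomaly(-29.0, -8.0, 2.5)  # departure of -21C is skipped
print(kept, dropped)
```

Note that the test is symmetric: a departure of +21C would be skipped in exactly the same way as one of -21C.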

It is well documented that the winter season has the highest variability.

Jones, et al, 1982, Variations in Surface Air Temperatures: Part 1. Northern Hemisphere, 1881-1980

Jones, et al, 1999, Surface Air Temperature and Its Changes Over the Past 150 Years

As to documenting the standard deviation drops …

Jones and Moberg, 1992, Hemispheric and Large-Scale Surface Air Temperature Variations: An Extensive Revision and an Update to 2001

Jones notes in an earlier paper that the corrections were prevalent for Greenland. These wx record coding errors remind me of the sort of thing that Anthony himself was pointing out here.

Does Jones inappropriately drop or adjust Feb 1936 records? Someone should go look. Most of the CRU data sets are available here:

http://www.metoffice.gov.uk/climatechange/science/monitoring/subsets.html

———–

Just a side note, Mosher’s stuff was posted prematurely with unlabeled graphs and abbreviated discussion (for instance – which paper is ‘the paper’ reread by Mosher). Given how careful he was with the response to McKitrick, I wonder if he personally approved posting these notes in draft form?

For us more ordinary people, please point out what “5 sigma” stands for. We await your elucidation.

Personally I am familiar with 6 sigma, as in being told that’s “about one in a million,” when the machine shop I was at, right after ISO 9002 certification, decided the Six Sigma quality assurance program was something the customers wanted and it would get us more business. “6 sigma” would have been the maximum defect rate allowed on parts that got shipped. You may freely guess the results, and anyway “that’s a goal to work towards.” And as with the American economy and the Stimulus Bill, if we hadn’t had that plan then the layoffs would have been much worse, of course, obviously, without a doubt, which those who recommended the Six Sigma (SS?) plan were certain of.

For the love of God, please put comprehensive labels on the axes, and include keys when there are multiple graphs in one plot. You could be reaching a MUCH larger audience with just a little more work. I’m a Ph.D. research scientist, and if I can’t discern what’s in a graph, what hope is there for the average first-time visitor?

Anthony says: “As a result, CRU data was much warmer than his analysis was, almost 2C.”

Mosher says: “Over all Moshtemp is warmer that CRU…”

So with these 5 sigma deletions, CRU is almost 2°C warmer than Moshtemp, and Moshtemp is overall warmer than CRU.

Is this one of those dangerous CAGW-inducing positive feedbacks?

What do the various colors signify on the graphs?

Detailed analysis on a regional and country basis by E.M.Smith/Chiefio have also shown consistent trends of winter records being manipulated and adjusted, but with no clear explanations of how and why. Maybe this is identifying the same thing?

Although this paper is not adequately illustrated and summarized, I get enough to see an interesting problem with tossing 5 sig for the future! If we are to have runaway global warming, soon the temp anomalies will be more frequently over 5 sig – are they going to toss these, too? You can bet your life they won’t. This is a temporary thing to ensure the trend until they don’t need it anymore to maintain the present hotter than before, then, like GISS, they will re-adjust the earlier numbers.

Trimming what seem extreme outliers (“5-sigma” events) is never a good policy, because these tend to indicate inflection-points where positive regimes reverse to negative, and vice versa. Given valid data, such selective processing is a form of arbitrary smoothing, unjustifiably excluding anomalies which may in fact be no such thing.

So-called psychic researchers such as J.B. Rhine play this game in reverse, ignoring null-results in favor of “flash-points” supportive of their subjective theses. CRU’s unacknowledged resort to such seasonal manipulation completely invalidates any and all Warmist conclusions, obviating the very nature of their pseudo-scientific enterprise. If the Hadley Center’s fancy academics do not know, much less admit to this, they stand as one with Rene Blondlot, Trofim Lysenko, Immanuel Velikovsky, and other charlatans of that same ilk.

Sorry Bob,

The plots are just made on the fly as I walk through the data. For the chart in question, red is the MAXIMUM departure from normal, blue is the minimum, orange is +/- 1.96 sd, and black is the mean. “Index” is the month, from 1 to 12. For me this is just a curiosity that others can go take a look at, as I’m working on debugging other stuff.

The process of throwing out 5 sigma events has a seasonal bias

If this is true, then it cannot be seen as anything other than scientific misconduct, unless the CRU can justify this step on physical grounds.

Noblesse Oblige says:

September 5, 2010 at 6:24 am

> Winter/cold temps are more volatile because air is dryer. Therefore standard deviation in winter is higher.

More than just that – the coldest region, the northern US, can get air masses from the polar, arctic, Pacific, and Gulf of Mexico regions during the winter. During the summer the northern air masses generally don’t make it down between mid-June and mid-August.

It shouldn’t matter – the 5 sigma range widens in the winter. Umm, 5 sigma of exactly what? If it’s 5 sigma of all monthly temperatures, then that really sucks. If it’s 5 sigma of all the Februaries, that’s another matter. If it’s 5 sigma of daily temps, then there’s a decent chance it’s throwing away bad data (e.g. missing signs, though for Feb ’36, I could believe North Dakota being either -14 or +14 °F).

When I used to acquire large quantities of data electronically I used to use Chauvenet’s criterion for outlier rejection, basically you reject data that falls outside a probability (normal) of 1/(2n). In my datasets that amounted to 3.5 sigma. In the case of CRU data where they’re picking up data from all over the world it should help to get rid of the more egregious errors (like the Finnish dropped negative sign data for example). Seems prudent.
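Chauvenet’s criterion as described in this comment can be sketched as follows. The sample values are made up for illustration, and the helper names `chauvenet_threshold` and `chauvenet_keep` are hypothetical; the rule implemented is the one stated above, rejecting a point whose two-sided normal probability is below 1/(2n).

```python
import math
from statistics import mean, stdev

def chauvenet_threshold(n):
    """Number of standard deviations beyond which a point is rejected,
    i.e. the z at which the two-sided normal probability equals 1/(2n)."""
    target = 1.0 / (2.0 * n)
    lo, hi = 0.0, 10.0
    for _ in range(60):  # bisection on a monotonically decreasing function
        mid = 0.5 * (lo + hi)
        if math.erfc(mid / math.sqrt(2.0)) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def chauvenet_keep(values):
    """Return a list of booleans: True where the value is retained."""
    mu, sigma = mean(values), stdev(values)
    limit = chauvenet_threshold(len(values))
    return [abs(v - mu) / sigma <= limit for v in values]

# Made-up readings with one suspicious value at the end.
readings = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.9, 10.1, 10.0, 15.0]
print(chauvenet_keep(readings))
print(round(chauvenet_threshold(1000), 2))
```

The cutoff depends on sample size: for a dataset of about a thousand points it comes out close to the 3.5 sigma the commenter mentions, whereas a fixed 5 sigma rule is far more permissive.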

That’s a lot like what’s happening now. Not quite as bad yet.

What was so significant about this cold wave was that the 1930s had some of the mildest winters in the US history. 1936 was also one of the coldest years in the 1930s. And the winter was followed one of the warmest summers on record which brought on the 1936 North American heat wave.

That’s classic weather perturbed by volcanoes, and there were a lot of them in the ’30s.

http://www.volcano.si.edu/world/find_eruptions.cfm

So, in line with the missing M for minus from airport data, is it to exclude real data if it exceeds the preconceived results? Or would the missing M not have mattered at CRU, because all cold winter weather will now be 5 sigma points off the expected trend? More torturing of the data until it confesses?

As Mosher points out, this is documented in the papers describing HadCRUT and CRUTEM.

http://www.cru.uea.ac.uk/cru/data/temperature/HadCRUT3_accepted.pdf

http://www.sfu.ca/~jkoch/jones_and_moberg_2003.pdf

Yup, alleged CO2 warming does NOT cause worse temperature extremes than what we have actually recorded before the period when man made CO2 is supposed to be causing problems!!

Records indicate that the Nile river has frozen over at least twice.

Those events were probably six sigma events.

But they were real, none the less.

As Mosher points out, this is documented in the papers describing HadCRUT and CRUTEM.

So it’s alright, then.

The decade of the 1930’s is home to 25 of the 50 US State all-time hottest day records of any decade since 1880. Doesn’t any of this warming data get tossed, too?

Or, is it only cold data like 1936, or Orland CA (1880 and 1900), that gets tossed?

The big agw lie rolls on.

It’s well known that below-freezing temperatures are unstable–this is because the specific heat of water is twice as high as the specific heat of ice.

“5-sigma” refers to five standard deviations outside of the average value. In experimental science, measuring such an extremely divergent data point might be an indication that something had gone temporarily wrong in the data collection process. For example, maybe a power glitch affecting an instrument, or contamination getting into a sample. In the absence of any way to verify that the specific data point was a valid one, a scientist might simply screen it out of the data set. There is a statistical argument for doing this, based on the fact that for a normally-distributed (bell curve) population of data, a “5-sigma” value should occur only one in a million times.

However – and this is a big however – if you have independent corroboration of the 5-sigma data point, you really should not throw it out of your data set. For example, the 1936 data set shows extreme cold values all over the north-central U.S., which were independently recorded. This is not simply one thermometer experiencing a glitch. Add to this the written historical records which provide a softer verification of the extreme temperatures. (I say softer, because any narrative record would have been dependent on the thermometer recordings for its objectivity.)

The irony here, of course, is that the CRU is rejecting “extreme weather events” that occurred in the past to arrive at its projections of CAGW, at the same time that extreme weather events in the present (including extreme cold snaps) are being embraced as proof positive that CAGW is happening Today! Yes indeedy, It’s the End of the World as we Know It!!

No, no, NO! That’s not the way to make the past colder and the present warmer.

They should be keeping the 5-sigma cold events for pre-1970, and tossing them for post-1970. Then, toss the 5-sigma warm events for pre-1970, and keep them for post-1970.

See? Instant global warming.

@ kadaka: 5-sigma refers to data that lies more than 5 standard deviations from the mean. Sigma is shorthand for standard deviation, taken from the Greek letter sigma. For data that follows a normal distribution, half will lie at less than the mean, and half will lie at greater than the mean. The probability of an event occurring within a given number of standard deviations is:

±1 sigma: 68.3 percent

±2 sigma: 95.4 percent

±3 sigma: 99.7 percent

There is also a slightly different formulation, based on “Six Sigma” manufacturing, which looks at defects. Here’s a link:

http://money.howstuffworks.com/six-sigma2.htm

kadaka,

Sigma is the Greek character used to denote standard deviation. Thus a ‘5 sigma’ data point is one that lies more than five standard deviations from mean. It suggests that the data point is an outlier. Or, using the real meaning of the word, it is an anomaly.

Although it might be as said, just a way to get rid of most of the winter data that is out of the norm, another explanation is the lazy explanation:

they put that into the code to get rid of bad temperature readings…with the assumption that anything outside of 5 sigma was a bad reading. This is a real bad way to do this, but shrug, if you were lazy and didn’t really care, and your research was funded regardless of how well you modeled…well it’s “good enough for government work.”

Upper Amazon in drought.

http://www.reuters.com/article/idUSTRE6825EU20100903

Left unsaid in article is that Peru and Bolivia are facing another record cold year.

A breaking story.

http://www.heraldscotland.com/news/politics/holyrood-fiasco-peer-s-40k-for-chairing-climategate-review-1.1052947

It’s interesting that your graphs are very consistent with forest fire intensity/severity for the 20th century. About midway in my career (forestry) I suggested that there appeared to be a distinct synchronicity to the fire seasons that must correlate with some Uber-climatic trend beyond what we got from the day-to-day weather predictions, and maybe even multiple trends that periodically became in-phase and led to our periodic large fire years. It was suggested that I might be better served to continue cruising timber.

If the seasonal bias is in the winter, are there an equal amount of warm 5+ sigmas and cold 5+ sigmas?

The same kind of tossing is done in the USHCN dataset creation and I would expect something similar in the creation of the GHCN. Some details on the kinds of tossing done are here:

http://chiefio.wordpress.com/2010/04/11/qa-or-tossing-data-you-decide/

with pointers to the referenced documents.

So, good catch Mosh!

Also, it is my belief that this kind of low temperature data tossing is why we get the “hair” clipped on low side excursions in the “pure self to self” anomaly graphs I made. The onset of the ‘haircut’ is the same as the onset of the “QA Procedures” in the above link…

This example simply uses a very large baseline more or less standard approach and still finds the “haircut”:

http://chiefio.wordpress.com/2010/07/31/agdataw-begins-in-1990/

Canonical world set of dT/dt graphs (a variation on “first differences”):

http://chiefio.wordpress.com/2010/04/11/the-world-in-dtdt-graphs-of-temperature-anomalies/

A more “standard” version shows the same effect:

http://chiefio.wordpress.com/2010/04/22/dmtdt-an-improved-version/

So take that 1936 case, and start having it applied to the daily data that goes into making up the monthly data that goes into making things like GHCN, and suddenly it all makes sense… And it explains why when you look in detail at the trends by MONTH some months are ‘warming’ while others are not…

It’s not the CO2 causing the warming, it’s complex and confusing data “QA” that drops too much good data and selective data listening skills…

Oh, and selectivity as to when volatile stations are in, and not in, the data set. High volatility in during cold excursion baseline, then taken out at the top of a hot run, so that the following cool does not have a chance of matching the prior cold run.

http://chiefio.wordpress.com/2010/08/04/smiths-volatility-surmise/

Basically, they sucked their own exhaust and believed their own BS instead of doing a hard headed look at just what they were doing to the data. Same human failure that has brought down programmers, tech company startups, and stock system traders for generations.

It should have been a cold winter.

The previous four sunspot cycles were cooler. This allowed for cooler years in cycle boundaries.

The Sept. 1933 to Feb. 1944 was the first Global-Warming cycle of that century.

Sept. 1933 was the first year of the cycle. 1934 marked the “Dust Bowl”. The average rain for the US, was just above 23 inches, 4 inches below average. It was a warm winter of an average 36 degrees.

1936 was about the same in precipitation,

The average winter temperature for that winter per the NOAA was 28.54, 2nd lowest from 1896 to 2008.

Sunspot activity for the previous year, mean of 36.10 and for 1936, a mean of 79.7.

Thus, I have to ask the question, how much glacier ice was melting? Did the sudden up swing in average US temperatures in 1926 and 1927; 1931 and 1932; and 1934 and 1935 cause major melting of the Polar Ice Caps and Northern hemisphere glaciers. Was the runoff cooling the oceans and the USA?

Is the cause of the US cooling as the planet began to warm up from a 54 year dormant sunspot cycle period?

In review of Glacier Bay maps, there was little lost in the glaciers from 1907 to 1929. That significantly changed with the warmer sunspot cycles. There doesn’t appear to be new measurements of the Bay until after WWII.

The milder sunspot cycles showed continued melting of the Glacier Bay Glaciers. There does appear to be a difference between 1907 to 1929 and from 1930 to 1949 in terms of ratio.

The Muir Glacier melted almost twice as fast from 1930 to 1948 as it did from 1907 to 1929. From 1930 to 1948 (20 years) it melted 5 miles up the Muir Inlet. From 1907 to 1929 (23 yrs) about 2.5 miles with a curve in the inlet.

From Wolf point to the 1948 melt point the inlet thins out a bit. Not sure what that would have done to the melt speed. Many factors.

In the Wachusett Inlet, the glacier melted roughly 3.5 miles, a mile and a half more for the years 1930 to 1949 in comparison to the 1907 to 1929 melt period covering 2 miles of melt. There is a two-year period difference in the two time periods.

Why mention that? Glaciers have been melting since that last Mini-Ice Age, but researchers were not watching the ice melt.

In today’s Alaska, glaciers are melting at about 60 feet a year at Seward and Juneau, Alaska.

1936 had 9 tropical storms, 7 hurricanes and one major hurricane. That was the largest hurricane season for the cycle. 1937 was the peak cycle year for 1933 to 1943 sunspot cycle.

There were No La Ninas and no El Ninos. Does that tend to reflect a cold year? I have no proof of that.

This repeated again in 1979 to 1980.

When glaciers and Polar Ice Caps are maxing at the end of a cool sunspot cycle, hurricanes pick up and La Ninas and El Ninos drop off.

Strange!

Paul

For us more ordinary people, please point out what “5 sigma” stands for. We await your elucidation.

5 sigma means 5 or more standard deviations from the average. Standard deviation is a measure of the spread (deviation) from the average expected in single data points given a Gaussian (normal, bell curve or whatever other term you learned) distribution.

If you have a Gaussian distribution then about 2/3rds of your measurements will be within 1 standard deviation of the average, 19/20ths will be within 2 standard deviations, only about 0.27% will not be within 3 standard deviations (3 sigma), and 0.0063% will be 4 or more standard deviations (4 sigma) from the average, while the chance of any single data point meeting the 5 sigma standard given a Gaussian distribution is about 0.000057% – often called one-in-a-million although one-in-two-million is closer to the truth.
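These figures can be reproduced from the complementary error function in Python’s standard library. The sketch below assumes a perfectly Gaussian distribution and counts both tails; the function name `fraction_outside` is just a label chosen here.

```python
import math

def fraction_outside(k):
    """Two-sided probability of lying more than k standard deviations
    from the mean of a normal distribution."""
    return math.erfc(k / math.sqrt(2.0))

for k in range(1, 6):
    outside = fraction_outside(k)
    inside_pct = 100.0 * (1.0 - outside)
    print(f"{k} sigma: {inside_pct:.5f}% inside, about 1 in {1.0 / outside:,.0f} outside")
```

For 5 sigma this gives roughly one in 1.7 million when both tails are counted, or about one in 3.5 million for the cold tail alone.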

Note that having a large number of 5 sigma data points does not mean anything’s wrong, it merely means that the data does not follow a Gaussian distribution.

this is a quickie, if not clear enough look up “probability” “Gaussian distribution” “standard deviation” for some basic stats reading, it helps a lot in trying to understand climate science.

As other commenters have noted, 5-sigma applies to normal (bell-shaped) distributions. But are these temperatures normally distributed? If they follow a different distribution then the concept of sigma, and all the associated probabilities, do not apply.

Financial markets have often run into this confusion, and (as Taleb documents in The Black Swan) this contributed to the financial crash. He says that financial markets follow a Mandelbrot distribution instead, which has fatter tails and therefore a higher likelihood of outlier events. These outliers would be preposterously unlikely under a normal distribution, which is how traders were reporting 28-sigma events and other such nonsense.

Let alone 28-sigma: five-sigma is pretty unlikely. So is a normal distribution really applicable or not?
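To give a feel for how much the answer depends on the assumed distribution, the sketch below compares the 5 sigma tail of a normal distribution with that of a Student’s t distribution with 3 degrees of freedom. The t distribution is a stand-in chosen here only because its tail has a simple closed form; it is not the Mandelbrot-type distribution Taleb discusses, and nothing in this thread establishes which distribution station temperatures actually follow.

```python
import math

def normal_two_sided(k):
    """P(|Z| > k standard deviations) for a normal distribution."""
    return math.erfc(k / math.sqrt(2.0))

def student_t3_two_sided(k):
    """P(|T| > k standard deviations) for Student's t with 3 degrees of
    freedom. Its variance is 3, so k standard deviations is t = k * sqrt(3),
    and the closed-form CDF is written in terms of x = t / sqrt(3) = k."""
    x = k
    cdf = 0.5 + (x / (1.0 + x * x) + math.atan(x)) / math.pi
    return 2.0 * (1.0 - cdf)

k = 5.0
p_normal = normal_two_sided(k)
p_fat = student_t3_two_sided(k)
ratio = p_fat / p_normal
print(f"normal: {p_normal:.2e}  t(3): {p_fat:.2e}  ratio: {ratio:.0f}")
```

With the fatter-tailed stand-in, a 5 sigma value is thousands of times more likely than under the normal assumption, so a cutoff that looks safe for Gaussian data can discard real events if the tails are heavier.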

Some examination of the 5sd filter in the UK CRUTEM code.

http://rhinohide.wordpress.com/2010/09/05/mosher-deviant-standards/

It appears that if the scientific community was today to design toilet paper, the brown side would be on your finger.

Check out Chauvenet’s criterion, I posted on here earlier but for some reason it didn’t make it.

Rob Findlay says:

September 5, 2010 at 11:31 am

“As other commenters have noted, 5-sigma applies to normal (bell-shaped) distributions. But are these temperatures normally distributed? ”

Actually, CRU must be unaware that to assume a normal distribution they are agreeing that temp has a normal distribution, ie, a natural variation, and therefore there are no significant anthropogenic caused forcings. This is one of the drawbacks of having “everyman’s statistical analysis” available in XLS – it allows researchers to dispense with real statistical analysis.

Above, I cited: Jones and Moberg, 1992, Hemispheric and Large-Scale Surface Air Temperature Variations: An Extensive Revision and an Update to 2001

This is a typo. The cite should read:

Jones and Moberg, 2003, Hemispheric and Large-Scale Surface Air Temperature Variations: An Extensive Revision and an Update to 2001

Thank you Rob Findlay!

I ground all the way through this thread with the Black Swan thought banging around in the back of my head. The quickest way to encounter a Black Swan is to ignore the outliers. That Gaussian copula “thing” that they used to evaluate risk being one of the contributors to the festivities we are experiencing now.

Rebecca C says:

September 5, 2010 at 9:59 am

“5-sigma” refers to five standard deviations outside of the average value. In experimental science, measuring such an extremely divergent data point might be an indication that something had gone temporarily wrong in the data collection process. For example, maybe a power glitch affecting an instrument, or contamination getting into a sample. In the absence of any way to verify that the specific data point was a valid one, a scientist might simply screen it out of the data set. There is a statistical argument for doing this, based on the fact that for a normally-distributed (bell curve) population of data, a “5-sigma” value should occur only one in a million times…”.

Not the correct approach with climate, where temperature is the result of deterministic chaos and linear statistical methods break down.

Once again the CRU have been spotted throwing away the data, what a big bunch of tossers they are!
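The “one in a million” figure quoted above is the right order of magnitude and can be checked directly. This short sketch computes the two-sided tail probability of a standard normal distribution; it assumes normality and says nothing about how real temperature anomalies are distributed.

```python
import math

def two_sided_tail(k):
    """P(|Z| > k) for a standard normal variable Z."""
    return math.erfc(k / math.sqrt(2))

p5 = two_sided_tail(5.0)
print(f"P(|Z| > 5) = {p5:.3e}, about 1 in {1 / p5:,.0f}")
```

Under that assumption the chance works out to roughly one in 1.7 million for a departure of five sigma in either direction.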

Rob Findlay says:

September 5, 2010 at 11:31 am

“As other commenters have noted, 5-sigma applies to normal (bell-shaped) distributions. But are these temperatures normally distributed? ”

There is a parallel in finance, where the father of the efficient market hypothesis (all three versions of which assume that price changes are distributed normally), Eugene Fama, has, as a result of observing so many five-sigma events in the history of price movements, discarded the normal distribution in favour of Mandelbrot’s stable Paretian distributions.

As someone who has spent his whole working life in an industry based on using low level statistics I have learnt that if empirical data does not fit the model one should discard the model, not the data.

This seems to be a very good example of a classic type of statistical mistake. The mechanics of statistical analysis are deceptively simple, particularly today when we have tools like Excel, SPSS, Matlab etc.

However to actually use these tools correctly you need to have a profound knowledge of the mathematical basis of statistics, of the characteristics of the data you are analyzing and preferably also of the quirks of the particular tool you are using. Unfortunately this is very often not the case.

In this particular case CRU seems to have made one of the most common (and least excusable) errors. They have assumed that their data has a normal distribution without bothering to verify that this is actually true.

Ben says:

September 5, 2010 at 10:20 am

Although it might be as said, just a way to get rid of most of the winter data that is out of the norm, another explanation is the lazy explanation:

they put that into the code to get rid of bad temperature readings…with the assumption that anything outside of 5 sigma was a bad reading. This is a real bad way to do this, but shrug, if you were lazy and didn’t really care, and your research was funded regardless of how well you modeled…well, it’s “good enough for government work.”

And to assume that this is what they did is the lazy way out rather than take the trouble to read the documentation!

What was actually done was to use the 5sigma test as a screen and then examine those data for signs of problems:

“To assess outliers we have also calculated monthly standard deviations for all stations with at least 15 years of data during the 1921–90 period. All outliers in excess of five standard deviations from the 1961–90 mean were compared with neighbors and accepted, corrected, or set to the missing code. Correction was possible in many cases because the sign of the temperature was wrong or the temperature was clearly exactly 10

Maybe it is the moderate climate of the UK that lends the UK researchers towards tossing very cold temperatures. They don’t know what to do with that; they never experienced anything that cold so subconsciously they decide to ignore it and code their programs that way.

So, i’d trust the Russians more about this.

Ok on the first graph there is a black line, a blue line, and red line…

What does the black line represent?

What does the Blue line represent?

What does the Red line represent?

I obviously have similar questions for all the other graphs.

Phil. says:

September 5, 2010 at 8:57 am

“[…]Seems prudent.”

Phil., i hope you don’t work in avionics?

I hate it when they make one blanket programming assumption to attempt to filter the data in some way without actually doing the footwork necessary to determine if what they are ignoring is actually valid or not. Just because it’s 5 sigma doesn’t automatically mean it’s bad. What the heck are they doing here? These data sets are becoming nothing but little playgrounds for the manipulators.

John A says: September 5, 2010 at 8:51 am

“If this is true, then it cannot be seen as anything other than scientific misconduct, unless the CRU can justify this step on physical grounds.”

This is just nuts. The criterion is clearly stated in their published paper, which is Mosh’s source. You can argue about whether it is a good idea, but there’s nothing underhand.

Charles S. Opalek, PE says: September 5, 2010 at 9:39 am

“Or, is it only cold data like 1936, or Orland CA (1880 and 1900), that gets tossed?

The big agw lie rolls on.”

Most arguments around here are that the 30’s were warmer than what the scientists say. Omitting cool 30’s data reduces the apparent warming trend.

Rob Findlay says: September 5, 2010 at 11:31 am

” But are these temperatures normally distributed?”

Good question (although they are talking anomalies here). A lot of the argument about whether recent warming is statistically significant etc is based on that dubious assumption.

Of course, the real question no-one is looking at much here, is whether tossing a few out-of-range months for individual station data really matters either way.

Looks like lazy programming and little effort to analyze the impact of the code. It’s probably a lot more difficult to do a good quality check for bad data and as long as you’re getting the results you expect ……….

Re is the temperature data distributed normally?

This link shows a distribution chart for monthly average temperature data for Abilene, Texas, from Hadley’s CRUT3 that was posted to the web shortly after the Climategate event of November 2009. The distribution is in one-degree C increments.

http://tinypic.com/r/n4xeo3/7

Having but simple statistical skills, this seems anything but normally distributed to me. I.e, there is no typical bell-curve shape, this has one “lobe” below the mean, and another “lobe” above the mean.

Perhaps others can comment on that chart, which was generated by me the hard way, in Excel (TM) but without using their statistics pack. Any errors or other faults are mine alone.
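One possible explanation for a two-lobed shape is the seasonal cycle: raw monthly means cluster around a winter value and a summer value, while anomalies (each month measured against its own long-term mean) do not. The sketch below uses purely synthetic data, not the Abilene record, and the climatology and noise level are assumptions chosen only for illustration.

```python
import math
import random
from statistics import mean, pstdev

random.seed(0)  # reproducible synthetic data

YEARS = 30
# Synthetic climatology: annual mean 18 C, seasonal amplitude 10 C, warmest in July.
climatology = [18 + 10 * math.cos(2 * math.pi * (m - 7) / 12) for m in range(1, 13)]

# temps[y][m]: synthetic monthly mean with Gaussian weather noise (sd 1.5 C).
temps = [[c + random.gauss(0, 1.5) for c in climatology] for _ in range(YEARS)]

# Anomaly = value minus that calendar month's own long-term mean.
month_means = [mean(temps[y][m] for y in range(YEARS)) for m in range(12)]
raw = [t for year in temps for t in year]
anoms = [temps[y][m] - month_means[m] for y in range(YEARS) for m in range(12)]

def share_near(values, centre, half_width=2.0):
    """Fraction of values within half_width of centre."""
    return sum(abs(v - centre) <= half_width for v in values) / len(values)

print("share of raw temps within 2 C of the overall mean:", round(share_near(raw, mean(raw)), 2))
print("share of anomalies within 2 C of zero:", round(share_near(anoms, 0.0), 2))
print("spread of raw temps:", round(pstdev(raw), 2), " spread of anomalies:", round(pstdev(anoms), 2))
```

In this toy case few raw values sit near the overall mean, which is what a histogram with a dip in the middle looks like, whereas most anomalies sit near zero. Whether the real Abilene anomalies are close to normal is a separate question that this sketch does not answer.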

Just to be clear. As Ron notes, these are my working notes. I’m basically working through my stuff comparing it with CRU. The SST stuff matched pretty well, and when there was a difference I wrote to Hadley and the difference was reconciled. With the Land portion I’ve never tried matching them exactly, so I figure I should give it a go. The large monthly deviations seemed odd, so I went back to Brohan 06 and sure enough, found the step documented. A quick spot check of the worst month and I know enough to move on and get back to this issue later. As for its overall impact? Well, I have to finish a bunch of other work. THEN, one can answer the question “does it make any real difference.”

Of course, they omit 5-sigma data. They have to make sure to cook the numbers to support the CAGW hypothesis.

nick:

“This is just nuts. The criterion is clearly stated in their published paper, which is Mosh’s source. You can argue about whether it is a good idea, but there’s nothing underhand.”

Ya, I’m glad they documented it. I had no clue why my numbers had so much variability in certain months and with prototype code testing the prospect that I had made a mistake was foremost in my mind.

DirkH says:

September 5, 2010 at 1:12 pm

Phil. says:

September 5, 2010 at 8:57 am

“[…]Seems prudent.”

Phil., i hope you don’t work in avionics?

Why Dirk, would you rather not eliminate bad data and spurious points?

Tenuc says:

September 5, 2010 at 12:10 pm

Once again the CRU have been spotted throwing away the data, what a big bunch of tossers they are!

Once again some posters here have been spotted jumping the gun because something supports their prejudices rather than check the facts!

Ron’s got a really good post on more of the reason behind the difference.

http://rhinohide.wordpress.com/2010/09/05/mosher-deviant-standards/#comment-967

He tracks it down to a different reason than tossing 5sigma events.

Richard M says:

September 5, 2010 at 1:20 pm

Looks like lazy programming and little effort to analyze the impact of the code. It’s probably a lot more difficult to do a good quality check for bad data and as long as you’re getting the results you expect ……….

Looks like prudent checking of the data to me, then again I’m talking about what they actually do not your fantasy of what they do.

Met Office Meets “my backside”.

“Australia – Global warming my backside with the coldest day in 100 years

12 Jul 2010

WIDESPREAD cloud and persistent rainfall has kept temperatures down right across Queensland.

Longreach, in the Central West, received persistent rain from Tuesday evening, dropping the temperatures by about four degrees. The temperature then barely moved yesterday, reaching a maximum of 11 degrees; 12 degrees below the long-term average and the coldest July day in 44 years of records. Isisford, further south, was even colder, getting to just 10 degrees. This was the town’s chilliest day since before records began in 1913, almost a century ago.”

http://www.meattradenewsdaily.co.uk/news/140710/australia___global_warming_my_backside_with_the_coldest_day_in__years.aspx

…-

“Temperature records to be made public

Climate scientists are to publish the largest ever collection of temperature records, dating back more than a hundred years, in an attempt to provide a more accurate picture of climate change.

Climate scientists have come under intense pressure following the Climategate scandal at the University of East Anglia, where researchers were criticised for withholding crucial information, meaning their research could not be independently checked.

Sceptics have also attacked climate change research over the quality of the records being used as evidence for the impact mankind has had on the world’s climate since the industrial revolution.

But the Met Office, which is hosting an international workshop with members of the World Meteorological Organisation to start work on the project, is now planning to publish hourly temperature records from land-based weather stations around the world.”

http://www.telegraph.co.uk/earth/environment/climatechange/7981883/Temperature-records-to-be-made-public.html

http://www.smalldeadanimals.com/archives/014798.html

Steve

Thanks for your exploring – Interesting results.

This suggests that there may be more cold excursions from arctic weather than warm excursions from the tropics. This may be systemic in that warm events may be moderated by ocean evaporation, while cold swings in the Arctic may have nothing comparable to dampen the swing.

Steven mosher says:

September 5, 2010 at 1:54 pm

nick:

Ya, I’m glad they documented it. I had no clue why my numbers had so much variability in certain months and with prototype code testing the prospect that I had made a mistake was foremost in my mind.

Ron suggests that it is your choice of data set that is responsible for the differences; when he disabled the 5-sigma algorithm it made no difference to the results.

http://rhinohide.wordpress.com/2010/09/05/mosher-deviant-standards/

Fred H. Haynie says: Five sigma from what, a thirty year average?

Only if below their desired average – never if above!

The central problem is one of credibility. Neither the CRU nor any other agency has credibility if they will not publicly archive the raw data, with an unbroken chain of custody.

Whether they post their adjusted data is immaterial. The original, raw data is what matters. In fact, it is all that really matters. Throwing out data, whether it changes the final result or not, is done for manipulation.

Publicly archive the raw data for the public that pays for it.

If I had presented an assignment at university with such sloppy and incomplete graphs, it would have been returned without marking and I would have received no credit.

The standard of presentation at this site is slipping.

Some of the critics of WUWT say there is no science evident here. This posting certainly supports that observation.

Here’s one example of how this was reported in Australia at the time:

The Argus Friday 14 February 1936

NORTH AMERICA FREEZES IN ANOTHER COLD WAVE

Never in living memory has the North American continent experienced such cold

weather as at present.

Rest at: http://newspapers.nla.gov.au/ndp/del/article/11882393

Benoit Mandelbrot has a lot to say about chaotic data sets. In his book “The (mis)Behaviour of Markets”, he demonstrates that it is a serious fallacy to assume that the stockmarket behaves as a normal or Gaussian distribution, with standard deviation. On that assumption, five or six-sigma events are (would be) extremely rare; reality contradicts the theoretical… er… model, and these pesky booms and busts come along more frequently than the… er… model says they ought to.

Same principle with….. ah, but you’re ahead of me, aren’t you!

It seems to me that they could be omitting this data for two possible reasons.

1. To reduce the amount of noise in the trend. This makes any “anthropogenic” fingerprint appear more obvious and greater than it actually is.

2. To reduce the appearance of unusual extreme weather anomalies in their records so that modern extreme weather events could falsely be labelled as “unprecedented”. That word that is wrongly and massively overused by the CAGW alarmists.

These are just more tricks used to bolster the very weak CAGW theory.

So-called scientists who prefer massaged data to empirical data are not worthy of the name ‘scientist’. Once again, these people have been caught red-handed. Would everyone please stop giving them grant money?

Phil. says:

September 5, 2010 at 1:55 pm

”

“DirkH says:

Phil., i hope you don’t work in avionics?”

Why Dirk, would you rather not eliminate bad data and spurious points?”

Why – because you would endanger lives.

And no, in engineering i wouldn’t eliminate “bad data” and “spurious points”. Rather, i would examine exactly these “spurious points”. Very very closely.

Think about your answer. You just said that the cold wave of 1936 was bad data; a spurious point – something that has not really happened.

If all scientists work this way, no wonder they never achieve anything.

ATTN: Anthony and Steve

In the 1930’s, what level of accuracy was used for temperature measurements?

It certainly was not to +/- 0.001 deg F. Were not temperatures measured to +/- 1 deg F? These computed numbers should be rounded back to the level of accuracy used for temperature measurements.

If temperature data are rounded off to nearest whole deg C, global warming vanishes!

DirkH says:

September 5, 2010 at 4:00 pm

Phil. says:

September 5, 2010 at 1:55 pm

”

“DirkH says:

Phil., i hope you don’t work in avionics?”

Why Dirk, would you rather not eliminate bad data and spurious points?”

Why – because you would endanger lives.

And no, in engineering i wouldn’t eliminate “bad data” and “spurious points”. Rather, i would examine exactly these “spurious points”. Very very closely.

Think about your answer. You just said that the cold wave of 1936 was bad data; a spurious point – something that has not really happened.

No I didn’t and neither did CRU, their procedure has been incorrectly described here.

As I pointed out several hours ago:

http://wattsupwiththat.com/2010/09/05/analysis-cru-tosses-valid-5-sigma-climate-data/#comment-475769

What was actually done was to use the 5-sigma test as a screen and then examine those data for signs of problems:

“To assess outliers we have also calculated monthly standard deviations for all stations with at least 15 years of data during the 1921–90 period. All outliers in excess of five standard deviations from the 1961–90 mean were compared with neighbors and accepted, corrected, or set to the missing code. Correction was possible in many cases because the sign of the temperature was wrong or the temperature was clearly exactly 10……

John A says:

September 5, 2010 at 8:51 am

If this is true, then it cannot be seen as anything other than scientific misconduct, unless the CRU can justify this step on physical grounds.

It looks more like carelessness and application of rules in algorithms that a meteorologist would say were not realistic, in the same way a botanist would say using tree rings for temperature is not realistic. But the result was ‘in line with expectations’ so a confirmation bias made it all look good.

This is the problem when guesstimation algorithms are used to ‘correct’ or ‘adjust’ data they can lead to systemic errors that are not immediately apparent.

So scientific misconduct is perhaps a little strong – but it was (is) an amateurish approach to collating data demonstrating a lack of quality control or quality management.

I started tracking weather statistics for Huntley, Montana back in 2006. At that time I downloaded the historical data from: United States Historical Climatology Network, http://cdiac.ornl.gov/epubs/ndp/ushcn/ushcn.html . This January I ran across some discrepancies so I downloaded the same file again and compared it to the original file. When I graphed the result it looked like someone had gone through the entire file with a computer program decreasing all of the temperature records prior to 1992. I still have both original files. February 1936 from the original file downloaded in 2006 was -.62 for the month and February 1936 from the file downloaded in January was -2.1 degrees F. My comments at that time were “Isn’t climate science fun! Everyday you learn new ways to commit fraud. I’d love to see the programmers’ comments on this little piece of code. I can imagine that it is something like “Hallelujah Brother, 2009 is the warmest year on record!!!!!”

Orkneygal says:

September 5, 2010 at 2:38 pm

If I had presented an assignment at university with such sloppy and incomplete graphs, it would have been returned without marking and I would have received no credit.

The standard of presentation at this site is slipping.

…—…—…

Er, uhm, no.

This was NOT a “university assignment”. It was NOT a graded paper, nor a funded paper (of any kind) nor a response to a privately funded nor commercial “assignment” to be returned to the boss for a profit and loss analysis.

It was (is!) a privately-volunteered, unfunded, spontaneous self-assigned question by a private individual. It WAS then bravely “volunteered” by that individual for public review, comment and correction – which was subsequently provided by the private author in his response above.

The supposed “science” that has created Mann-made global warming IS publicly funded and has been awarded Nobel Prizes and billions of dollars of new funding over the past 20+ years. THAT “science” is hidden, has no review outside of friends and associates, and is NOT corrected nor publicly reviewed. But that “science” is responsible for 1.3 trillion in new taxes and today’s recession.

Looks like according to GISS this is a non-issue.

Let’s look at the numbers for “Annual and five-year running mean surface air temperature in the contiguous 48 United States (1.6% of the Earth’s surface) relative to the 1951-1980 mean,” from originating page here, using the tabular data. For 1936 the annual mean anomaly was only +.13°C.

The global average temperature anomaly is of course more important as it tracks the AGW signal. By the land-only (aka meteorological stations-only) numbers, tabular data here, the 1936 annual mean anomaly was exactly 0.00°C. With the Contiguous US (CONUS) only having 1.6% of the Earth’s surface, with the seas occupying 70% thus CONUS only being 5.3% of the land area, if there was a little snip of the 1936 US winter numbers then it still wouldn’t have meaningfully changed the more important global anomaly number.
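The land-share arithmetic above can be written out as a quick check. The 1.6% and 70% figures are the ones quoted in the comment; the 1 C regional change and the simple area weighting with complete land coverage are assumptions made only for illustration, since real station coverage is uneven.

```python
conus_share_of_earth = 0.016    # figure quoted above
land_share_of_earth = 1 - 0.70  # oceans taken as 70% of the surface
conus_share_of_land = conus_share_of_earth / land_share_of_earth
print(f"CONUS share of land area: {conus_share_of_land:.1%}")

# Hypothetical: a 1 C change confined to CONUS, weighted purely by area.
regional_change = 1.0
land_mean_change = conus_share_of_land * regional_change
print(f"Implied change in an area-weighted land-only mean: {land_mean_change:.3f} C")
```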

Also, from the article:

Why?

In the winter months, around the temperate zones, there are the temporary snowfall accumulations. Snow falls, the albedo switches from dark ground to shiny snow, snow melts, the albedo is back to dark ground. With air temperatures checked so near the ground, the heating from sunlight during the day is important. So one December the meteorological conditions give CONUS little if any snow, next year there’s enough that the more northern areas have snow on the ground for the entire month. The albedo change will yield a larger temperature difference for that month between those years than a similar change in precipitation for a summer month like July, even when other factors (ratio of cloudy to clear days, etc) would yield little or no difference. Thus the albedo change gives a potential for greater temperature variances during the winter months than the rest of the year. Amazingly enough, it looks like that’s what you’ve found.

So, someone thought they had found something that looked suspicious with a temperature dataset, made up some graphs, posted here while noting he’s not making conclusions just noticing something that looks off, then certain commentators point out there is nothing wrong, it’s in the documentation, it makes no difference even if true, his analysis is faulty, etc.

Would it be wrong to say Mosher has just pulled a Goddard?

☺

——-

Side notes: GISS now says, for CONUS, 1934 had only 1.20 Celsius anomaly units, 1998 and 2006 were clearly warmer at 1.32 and 1.30 respectively. For the global numbers, the January-July Mean Surface Temperature Anomaly (°C) graph shows 2010 the warmest of the 131 year record.

2010 is gonna be da hottest evah!

Ron Broberg says:

September 5, 2010 at 7:17 am

You can see the code for this in the MET’s released version of CRUTEM in the station_gridder.perl

[From the code notes!]

# Round anomalies to nearest 0.1C – but skip them if too far from normal

if ( defined( $data{normals}[$i] )

&& $data{normals}[$i] > -90

—…—…—…—

Harold Pierce Jr says:

September 5, 2010 at 4:14 pm

ATTN: Anthony and Steve

In the 1930’s, what level of accuracy was used for temperature measurements?

It certainly was not to +/- 0.001 deg F. Were not temperatures measured to +/- 1 deg F? These computed numbers should be rounded back to the level of accuracy used for temperature measurements.

—…—…—…—…—…—…

Look above: CRU input data is “rounded off” to the nearest 1/10 of one degree BEFORE analysis begins – yet the entire CAGW theory is based on a change of less than 1/2 of one degree.

Worse “Phil” claims proudly that the subsequent “corrections” to the original data for “exactly 10 degrees differences” ??????? – plus eliminating data outside of 5 sigma is “prudent” and good science.

Thanks Anthony!

May be good:

FOX News: Hannity Special: The Green Swindle

Sunday, Sep 5, 9 PM EST

If this information is correct, the researchers at CRU are guilty of laziness and sloppiness in their research. Good statistical researchers take the time to get to know their data and understand why extreme values are present while lazy researchers write programs that toss out these extreme values. Looks like someone messed up here.

“When they toss 5 sigma events it appears that the tossing happens November through February.”

Of course it’s the low temperatures. Look at DMI’s arctic temperatures at http://ocean.dmi.dk/arctic/meant80n.uk.php, any year. It’s the same for all latitudes’ temperature readings. Maximums don’t vary as much as minimums do. I still wonder exactly why this is so, in the physics of the atmosphere I mean.

So, there’s the hike to the temperatures. We all knew it was there somewhere. And I’m sorry but that IS called manipulation of the data. Still want to better understand our atmosphere so keep the science coming Anthony, it’s thanks to you, no doubt.

Does that have to do with the MSM rarely covering cold events ?? Were they deleted too ?? ☺

Well RACookPE1978, by the nature of your response, I see that you are in complete agreement with me.

The posting would not be accepted as an assignment response at my University.

Sloppy graphs are a sign of sloppy logic.

JTinTokyo says:

September 5, 2010 at 6:47 pm

If this information is correct, the researchers at CRU are guilty of laziness and sloppiness in their research.

As I’ve pointed out above they do not do this.

Any real scientist would know that if summer temperatures have only a fraction of the winter temperatures’ standard deviation (stdev), you would have to do this “purification” on a monthly or even weekly basis. And since certain stations show a large variance relative to the normal variance in their area, such as a station in a valley where the others are mainly on the plains, it would really have to be performed on a per-station basis.
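A per-station, per-calendar-month calculation of the kind described here might look like the sketch below. The record layout, the function names and the sample numbers are hypothetical. The 15-year minimum follows the CRU description quoted earlier in the thread, although this sketch takes the mean and the standard deviation from the same set of years rather than from separate base periods.

```python
from collections import defaultdict
from statistics import mean, stdev

def monthly_stats(records, min_years=15):
    """records: iterable of (station_id, year, month, temp).

    Returns {(station_id, month): (mean, sd)} for groups with enough years.
    """
    groups = defaultdict(list)
    for station, _year, month, temp in records:
        groups[(station, month)].append(temp)
    return {key: (mean(v), stdev(v)) for key, v in groups.items() if len(v) >= min_years}

def z_score(stats, station, month, temp):
    """Departure of one reading from its own station-month mean, in sd units."""
    mu, sd = stats[(station, month)]
    return (temp - mu) / sd

# Hypothetical station "A": variable Februaries, much steadier Julys.
records = []
for i in range(20):
    records.append(("A", 1961 + i, 2, -10 + 4 * ((i % 5) - 2)))
    records.append(("A", 1961 + i, 7, 22 + ((i % 5) - 2)))

stats = monthly_stats(records)
z_feb = z_score(stats, "A", 2, -16.0)  # 6 C below the February mean
z_jul = z_score(stats, "A", 7, 16.0)   # 6 C below the July mean
print(round(z_feb, 2), round(z_jul, 2))
```

The same 6 C departure is unremarkable in February but extreme in July for this made-up station, which is why a single year-round threshold would not work.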

I don’t see why any are being tossed, even if it’s clearly a mistake, say 15 entered instead of 51, 25 instead of -25.

If it’s really cold around an area and the readings of all other stations are, let’s say, -4.3 stdev, why toss a few that are -5.1 stdev? Now if all surrounding temperatures are +3 stdev and one lone station nearby comes in at -6 stdev, that clearly should be examined on a one by one basis by human eyes and hopefully with a brain. They would be very rare and could easily be handled. If it clearly should have been 51 and not 15 by checking surrounding stations, change it, with tags and notes of the correction, don’t toss it.
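The neighbour check described in this comment can be sketched as a small decision rule. This is not CRU’s code; the function name, the 5-sigma screen and the 2-sigma agreement gap are assumptions picked for illustration.

```python
def review_outlier(station_z, neighbour_zs, threshold=5.0, max_gap=2.0):
    """Classify one station-month given z-scores for it and its neighbours.

    Returns "ok", "accept" or "manual_review".
    """
    if abs(station_z) < threshold:
        return "ok"  # inside the screen, nothing to do
    if not neighbour_zs:
        return "manual_review"  # no neighbours to compare against
    neighbour_mean = sum(neighbour_zs) / len(neighbour_zs)
    if abs(station_z - neighbour_mean) <= max_gap:
        return "accept"  # neighbours show the same extreme, so it is probably real weather
    return "manual_review"  # a lone station disagreeing with its surroundings

print(review_outlier(-5.1, [-4.3, -4.5, -4.1]))  # regional cold wave
print(review_outlier(-6.0, [3.0, 2.8, 3.1]))     # lone station against its neighbours
```

Nothing is discarded automatically in this sketch; the extreme value is either kept because the neighbours agree or handed to a person to look at.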

It would shock me if these “scientists” in the climate agencies were found to be applying such proper critique to the data they so control.

So ,

How many other arbitrary data “corrections” does CRU and GISS rely upon?

Seasonal bias is a big deal. How is this acceptable to climate scientists?

How about granting entities that have spent so much money on building models that provide great graphics, but such poor prognostication.

Doesn’t anyone care that the foundation data is based upon cooked books?

Virginia is poised to answer some of these questions.

Weren’t the late 1930s warmer than the present?

From: wayne on September 5, 2010 at 6:48 pm

It’s just physics. Systems like to shed heat, until they equalize with their surroundings. For maximums you’re filling faster a leaking bucket, for minimums you’re either filling it slower, not at all, or perhaps even removing heat (colder air moves in, water evaporating after rainfall). Thus for the same absolute values of energy rate changes, you’ll get larger changes in minimums than you will maximums (for sunlight, gaining an extra 5 watts per square meter yields a smaller temperature increase than the temperature decrease from losing 5).
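One way to put numbers on the sunlight example is a blackbody in radiative equilibrium, where temperature goes as the fourth root of flux. That model is an assumption added here, not something stated in the comment, and the 390 W/m² starting flux is a hypothetical value.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def equilibrium_temp(flux):
    """Blackbody temperature (K) that radiates the given flux (W/m^2)."""
    return (flux / SIGMA) ** 0.25

base_flux = 390.0  # hypothetical starting flux
t0 = equilibrium_temp(base_flux)
rise = equilibrium_temp(base_flux + 5) - t0
drop = t0 - equilibrium_temp(base_flux - 5)
print(f"base {t0:.2f} K, +5 W/m^2 -> +{rise:.3f} K, -5 W/m^2 -> -{drop:.3f} K")
```

In this idealised case the drop is larger than the rise, but only slightly, so the effect on its own may not account for the full difference between minimum and maximum variability.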

Ron Broberg says:

September 5, 2010 at 7:17 am

Thanks for the pointer to the code. http://www.metoffice.gov.uk/climatechange/science/monitoring/reference/station_gridder.perl

I want to puke. Looks like quick and dirty code to me. Takes me back to programming in the 70’s. Guess I’m too used to writing 100% pure code with every single boundary verified.

What happens in PERL if an “undef”ed standard deviation is then used in calculations? NaN? Zero? Exception? Nothing? That “undef” for standard deviations is set in the block above the one you supplied above?

ATTN: RACookPE1978

I downloaded some temperature data for the remote Telluride CO from the USHCN.

The monthly temperature data for Tmax and Tmin are reported to +/- 1 deg F (ca. 0.5 deg C). The computed monthly Tmean was reported to +/- 0.00001 deg F. The SD was reported to +/- 0.000001. This is nuts!

The problem is that most “climate scientists” are computer and math jocks, not experimentalists. Nowadays digital data from instruments is assumed to be 100% accurate, especially by beginning grad students.

wayne says:

September 5, 2010 at 7:26 pm

I don’t see why any are being tossed, even if it’s clearly a mistake, say 15 entered instead of 51, 25 instead of -25.

If it’s really cold around an area and the readings of all other stations are, let’s say, -4.3 stdev, why toss a few that are -5.1 stdev? Now if all surrounding temperatures are +3 stdev and one lone station nearby comes in at -6 stdev, that clearly should be examined on a one by one basis by human eyes and hopefully with a brain. They would be very rare and could easily be handled. If it clearly should have been 51 and not 15 by checking surrounding stations, change it, with tags and notes of the correction, don’t toss it.

That’s pretty much what CRU does.

It is rather rare as you surmise:

“We made changes to about 500 monthly averages, with approximately 80% corrected and 20% set to missing. In terms of the total number of monthly data values in the dataset this is a very small amount (<0.01%)."

So how would the current “5 sigma event tossing” have dealt with this event?

“…The world record for the longest sequence of days above 100° Fahrenheit (or 37.8° on the Celsius scale) is held by Marble Bar in the inland Pilbara district of Western Australia. The temperature, measured under standard exposure conditions, reached or exceeded the century mark every day from 31 October 1923 to 7 April 1924, a total of 160 days…”

Over a hundred degrees for 160 days.

In the early 20’s.

And to think, the world is much warmer now…

Phil.

Ya, it looks like the big trim comes in BEFORE CRU. If I read Ron correctly, CRU are using an adjusted version while I’m using GHCN raw. Of course this just turns the discussion to the differences between the raw and adjusted. IN THE END, the differences end up not being that great, but 2C in one month floored me, especially when I had matched the SST results to damn near 1/100th.

Phil. says:

September 5, 2010 at 9:23 pm

Assumed a quote from CRU:

“We made changes to about 500 monthly averages, with approximately 80% corrected and 20% set to missing. In terms of the total number of monthly data values in the dataset this is a very small amount (<0.01%)."

That tells me nothing. Well… no, it actually does. It tells me it takes a mere change of 0.01% of the records to make a rather large error in the trend that Steven Mosher is preliminarily showing.

You seem to know right where to go and get the core information. Do you happen to know where the 600 alterations/droppings are with the from and to and why, or those dropped, what they were and why. That wouldn’t be a very big file for them to keep and make public. Would save a lot of duplicate work. Or is it up to an investigator to try to recreate what they have done to the temperature records?

There’s a very good reason to toss 5-sigma data.

The result is data that ‘looks’ less noisy, yielding graphs which are more convincing in appearance.

You have got to be kidding me. Outliers are indicators of either some important behavior or bad data. Either way you have to investigate. Ad hoc application of a statistical cut-off is not good data analysis policy. You are just an apologist.

Wonder if NE Oregon is one of those areas where the extremes are dropped from the record. Meacham is notorious for extremes, and August saw two inches of snow fall on summer tourists up at the top of the tram at Wallowa Lake. That is nearly two months ahead of the official start of Autumn! Let alone Winter!

Geoff Sharp says:

The 1930’s in the USA has the lowest lows and the highest highs. I think there is good reason for this, in particular 1936. The PDO was about to flip into negative, but more important is perhaps the solar position. SC16 which peaked around 1928 was weak with a today count of around 75 SSN. Take off the Waldmeier/Wolfer inflation factor and this cycle would be close to a Dalton Minimum cycle. 1936 is near cycle minimum, so with the already weak preceding solar max the EUV values would be similar or less than today.

Missed this earlier, my money would be on local events for the cold winter weather. I’d look at Black Sunday 1935 and its like for a cause.

tty says:

September 5, 2010 at 12:29 pm

This seems to be a very good example of a classic type of statistical mistake. The mechanics of statistical analysis are deceptively simple, particularly today when we have tools like Excel, SPSS, Matlab etc.

However to actually use these tools correctly you need to have a profound knowledge of the mathematical basis of statistics, of the characteristics of the data you are analyzing…..

_________________________________________________

One of my major pet peeves with the readily accessible statistical programs and the “instant PhD in Statistics” seminars pushed by the “flavor of the month” salesmen/consultants.

I would not be surprised if this was done so the error bars of the data were narrowed significantly. If the error is too large the small warming signal is lost in the noise. Since we are talking about a signal of less than one degree per century they had to get the error lower somehow.

AJ Strata shows a 1969 graph: the CRU computed sampling (measurement) error in C for 1969. Graph at http://strata-sphere.com/blog/index.php/archives/11420

AJ Says

“They start at 0.5°C, which is the mark where any indication of global warming is just statistical noise and not reality. Most of the data is in the +/- 1°C range, which means any attempt to claim a global increase below this threshold is mathematically false. Imagine the noise in the 1880 data! You cannot create detail (resolution) below what your sensor system can measure. CRU has proven my point already – they do not have the temperature data to detect a 0.8°C global warming trend since 1960, let alone 1880.”

So when might we see the actual data upon which the speculation wrt global warming is based? No data= no science. All claims are opinion without the missing? data. What say you Phil Jones?

Jaye says:

September 6, 2010 at 8:37 am

Phil. says:

September 5, 2010 at 8:57 am

Seems prudent

You have got to be kidding me. Outliers are indicators of either some important behavior or bad data. Either way you have to investigate. Ad hoc application of a statistical cut-off is not good data analysis policy.

I think this is at least the fifth time that I’ve pointed out that this wasn’t what was done, not to mention Rod and Mosh in his most recent post!

You are just an apologist.

No I just read the material, perhaps you should do likewise?

Nice. Good catch. It would be interesting to take your top 100 months in terms of positive/negative error and plot them by year. Is there any change in the occurrence of outliers? More extremes as the climate warms? Oh wait it would be simpler to look at the variance by year. Never mind.
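The "variance by year" check suggested above could be sketched roughly as follows. This is only an illustration: `variance_by_year` is a hypothetical helper, and the input is synthetic noise standing in for the monthly CRU-minus-Moshtemp differences, not real output from either analysis.

```python
import random
import statistics
from collections import defaultdict

def variance_by_year(records):
    """Group (year, value) pairs by year and return {year: sample variance}."""
    by_year = defaultdict(list)
    for year, value in records:
        by_year[year].append(value)
    # Sample variance needs at least two values, so skip sparser years.
    return {y: statistics.variance(v)
            for y, v in sorted(by_year.items()) if len(v) >= 2}

# Hypothetical data: 12 synthetic monthly differences per year (assumption,
# not real CRU or Moshtemp numbers).
rng = random.Random(0)
records = [(year, rng.gauss(0.0, 0.3))
           for year in range(1930, 1940) for _ in range(12)]

yearly = variance_by_year(records)
for year, var in yearly.items():
    print(year, round(var, 3))
```

With real data, a trend in these yearly variances would be one rough way to see whether extremes are becoming more or less frequent.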

Phil.

I think a lot of people should read more carefully. Hmm. I thought I was pretty clear at a couple points that the trimming happened in both warm and cold, that IN THE END the difference was minimal and that somebody should double check the stuff. Ron pretty much explained that the CRU 5sig screen does little and that the key lies in the processing post GHCN. Since I’m trying to match CRU, that was a huge relief for me. Anyways, hopefully when I’m done there will be a “standards” based way for people to quickly run a CRU type analysis. And then people can fiddle about with the trimming of outliers and see that the difference really makes no difference, except at the margins where angels dance on the heads of pins.

I’m wondering if people will clue in.

1. Here’s a small difference, oh, makes no substantial difference

2. Here’s another small difference, oh, makes no difference

etc.

My experience in finding these is this. The more I find, the more confidence I have that the peculiarities of men and methods don’t amount to a hill of beans in this matter. The warming is there. Anybody who wants to question it should look at data integrity. That’s some serious grunt work.

Steven Mosher says:

September 6, 2010 at 11:16 pm

Phil.

I think a lot of people should read more carefully. Hmm. I thought I was pretty clear at a couple points that the trimming happened in both warm and cold, that IN THE END the difference was minimal and that somebody should double check the stuff.

I thought you were pretty clear too.

Ron pretty much explained that the CRU 5sig screen does little and that the key lies in the processing post GHCN. Since I’m trying to match CRU, that was a huge relief for me.

Right, as I understand Ron the difference in the datasets appears to be in the homogenization step (at GHCN?)

The trouble is that if the model was soundly based there should not be many outliers of 5 sigma+. It makes no difference that the outliers are equally spread between warm and cold. The fat tails indicate that the smoothed model is not a good fit for the data. Unless all or nearly all the outliers can be put down to errors in data gathering, the Gaussian distribution is not an appropriate one on which to base the model and another more appropriate one should be sought.

Solomon Green says:

September 7, 2010 at 12:52 pm

The trouble is that if the model was soundly based there should not be many outliers of 5 sigma+. It makes no difference that the outliers are equally spread between warm and cold. The fat tails indicate that the smoothed model is not a good fit for the data. Unless all or nearly all the outliers can be put down to errors in data gathering, the Gaussian distribution is not an appropriate one on which to base the model and another more appropriate one should be sought.

What model are you talking about? Five sigma is an improbable value regardless of whether it’s a Gaussian distribution or not. The actual probability isn’t invoked by CRU; it’s just used as a threshold. It seems like a good choice since about 80% of them are correctable errors.
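One way to put numbers on "improbable regardless of distribution" is Chebyshev's inequality, which bounds the chance of a value lying k or more standard deviations from the mean at 1/k² for any distribution with finite variance. The sketch below compares that distribution-free bound with the Gaussian tail. It is an illustration only and says nothing about how CRU actually applies its threshold.

```python
import math

def chebyshev_bound(k):
    """Upper bound on P(|X - mean| >= k*sigma) for any finite-variance distribution."""
    return 1.0 / (k * k)

def gaussian_two_sided_tail(k):
    """P(|Z| >= k) for a standard normal variable Z."""
    return math.erfc(k / math.sqrt(2.0))

k = 5
print(f"Chebyshev bound at {k} sigma: {chebyshev_bound(k):.4f}")
print(f"Gaussian tail at {k} sigma:  {gaussian_two_sided_tail(k):.2e}")
```

The Chebyshev figure is a worst case and is far looser than the Gaussian one, so how rare a 5 sigma month really is still depends on how fat the tails of the actual data are.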

Jim Powell says:

September 5, 2010 at 4:43 pm

The greatly different historical data you cite for Feb 1936 at Huntley MT are but the tip of the iceberg of the massive data revisions evident in USHCN Ver. 2. While some egregious spurious trends were reined in (e.g., Grand Canyon AZ), a great many station records that showed no trend in Ver. 1 suddenly acquired one.

What is unacceptable is that these revisions were made surreptitiously in the so-called “unadjusted” record, downloadable from the GISS site as the default (“from all sources”) option. Many of them simply staircase the yearly data downward from some relatively recent year. Talk about manufacturing spurious trends! Climate data crunchers who want their results to be taken seriously need to look at–and overcome–such fundamental issues of data integrity, rather than contenting themselves with the clerical task of compiling regional or global averages and blindly reporting the “trend.”

I’d urge everyone to treasure whatever downloads they made several years ago. They’re as close to the elusive “raw” data as we’ll come. BTW, if anyone has a Ver. 1 download for Gonzales TX, I’d much appreciate a copy.

Roger Sowell says:

September 5, 2010 at 1:44 pm

Obviously you present the histogram of mean temperature for all of the months of the year over the entire Abilene TX record. It consequently has a blocky, distinctly bimodal structure due to the nearly sinusoidal seasonal cycle. If you did a similar compilation for each month separately, you’d get something much closer to a bell-shaped histogram. (Interestingly, however, such treatment often produces histograms that are still bimodal, but not extremely so.)

While the goodness-of-fit to the Gaussian model needs to be established on a case-by-case basis, it is the standard deviation of the unimonthly distribution that is involved in identifying outliers.
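The gap between pooling all months and treating each calendar month separately can be sketched with synthetic data. Everything below is an assumption for illustration: the seasonal amplitude, the noise level, and the `flag_outliers` helper are made up, and nothing here is the Abilene record or CRU's actual screening code.

```python
import math
import random
import statistics

rng = random.Random(42)
AMPLITUDE = 10.0  # assumed half-range of the seasonal cycle, deg C
NOISE_SD = 2.0    # assumed year-to-year scatter within one calendar month, deg C
YEARS = 100

# monthly[m] holds one synthetic mean temperature per year for calendar month m.
monthly = {m: [] for m in range(12)}
for _ in range(YEARS):
    for m in range(12):
        seasonal = -AMPLITUDE * math.cos(2 * math.pi * m / 12)  # coldest in month 0
        monthly[m].append(seasonal + rng.gauss(0.0, NOISE_SD))

# Pooling every month mixes the seasonal cycle into the spread.
pooled = [t for temps in monthly.values() for t in temps]
pooled_sd = statistics.stdev(pooled)
month_sd = {m: statistics.stdev(temps) for m, temps in monthly.items()}

def flag_outliers(temps, n_sigma=5.0):
    """Indices of values more than n_sigma sample standard deviations from the mean."""
    mean = statistics.mean(temps)
    sd = statistics.stdev(temps)
    return [i for i, t in enumerate(temps) if abs(t - mean) > n_sigma * sd]

print(f"pooled sd: {pooled_sd:.2f}")
print(f"largest single-month sd: {max(month_sd.values()):.2f}")
```

Because the pooled standard deviation is inflated by the seasonal cycle, a screen based on it would almost never flag anything. Applying `flag_outliers` to one calendar month at a time is the version that matches the unimonthly standard deviation described above.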