Since we’ve been talking about IPCC’s “Africagate” recently, it seemed like an opportune time to point out what sort of GISS station adjustment goes on in data from its nearby neighbor island. Welcome Verity Jones’s first guest post on WUWT. FYI for those who don’t get the implied data munging title, “Munge” is sometimes backronymmed as Modify Until Not Guessed Easily. – Anthony

Guest post by Verity Jones

This started out as a discussion point following E.M. Smith’s blog post Mysterious Madagascar Muse. The gist of the original article centered around the availability of data after 1990 in the GHCN dataset and the NASA/GISS treatment of temperature on the island. Well, Madagascar has a bit of a further story to tell. I had offered to plot a ‘spaghetti’ graph of the temperatures from the ten stations used on Madagascar, and this has proven interesting as an example of how data is adjusted and filled in by GISS.

To start, the annual mean temperatures plotted on a graph (Figure 1) show clearly the differences between the stations – Antananarivo is high altitude and relatively cool, with a cooling trend; of the other stations, some have cooling trends, most are warming. Also noticeable is the very sparse data after 1990. Note the darker blue data for Maintirano, of which more later.

With such temperature differences between sites, obviously you cannot just average the temperatures. This is what it looks like if you do (Figure 2), and it clearly does not work as an average temperature for the island.

Normalizing each of the temperature series by subtracting that station’s mean temperature for the baseline period of 1951-1980 allows plotting of an anomaly-based ‘spaghetti’ graph (Figure 3). This shows what looks like warming-cooling-warming climate cycles very clearly and it is possible to fit a third order polynomial trendline through the averaged data. I’ve seen this again and again for data I’ve plotted around the world (incidentally these were for WUWT regular TonyB).
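For readers who want to try the normalization themselves, here is a minimal sketch of the idea in Python. It is not the code behind the figures; the function names and the two tiny station series are made up purely for illustration. Each station is turned into anomalies against its own 1951-1980 mean, and only then are the stations averaged.

```python
from statistics import mean

def baseline_anomalies(series, start=1951, end=1980):
    """series maps year -> annual mean temperature (deg C).
    Returns year -> anomaly relative to the station's own baseline mean."""
    base = [t for y, t in series.items() if start <= y <= end]
    if not base:
        raise ValueError("station has no data in the baseline period")
    base_mean = mean(base)
    return {y: t - base_mean for y, t in series.items()}

def average_anomaly(stations):
    """stations maps name -> {year: anomaly}.
    Averages, year by year, over whichever stations report that year."""
    years = sorted({y for s in stations.values() for y in s})
    return {y: mean(s[y] for s in stations.values() if y in s) for y in years}

# Made-up numbers, NOT real Madagascar data: one cool site, one warm site.
cool_site = {1950: 17.0, 1951: 18.0, 1980: 18.4, 1990: 18.8}
warm_site = {1951: 26.0, 1980: 26.0, 1990: 26.5}

anoms = {
    "cool_site": baseline_anomalies(cool_site),
    "warm_site": baseline_anomalies(warm_site),
}
island = average_anomaly(anoms)
```

Because each series is centered on its own baseline, a cool high-altitude site and a warm coastal site can sit on the same axis without the absolute temperature difference swamping the year-to-year changes.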

E.M.Smith finds seven other rural stations within 1000km that may contribute to homogenization. They also show cooling to about 1965-1975, then a warming trend. This is lost from the homogenized data.

One final thing. Even the patchy data stops in 2005, so after this date Madagascar too gets ‘filled in’ data from elsewhere – it seems from the rural stations up to 1000km away – again. And even the stations used to ‘fill in’ have patchy data – many have a gap then ONE DATA POINT in 2009. Note that there was no data for 10 years prior to 2009 in this station.

This is unbelievable. Rather than give a lot of plot examples, check the station hyperlinks below for yourself:

| Station | Lat | Lon | Station ID | Type | Years |
|---|---|---|---|---|---|
| Ile Juan De N | 17.1 S | 42.7 E | 111619700000 | rural area | 1973 – 2009 |
| Dzaoudzi/Pama | 12.8 S | 45.3 E | 163670050000 | rural area | 1951 – 2009 |
| Iles Glorieus | 11.6 S | 47.3 E | 111619680000 | rural area | 1956 – 2009 |
| Ouani (Anjoua | 12.1 S | 44.4 E | 111670040000 | rural area | 1963 – 1984 |
| Serge-Frolow | 15.9 S | 54.5 E | 168619760000 | rural area | 1954 – 2009 |
| Ile Europa | 22.3 S | 40.3 E | 111619720000 | rural area | 1951 – 2009 |
| Porto Amelia | 13.0 S | 40.5 E | 131672150004 | rural area | 1987 – 200 |

Fort-Dauphin is my favorite. Lots of 999’s near the end…

http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=125671970000&data_set=1&num_neighbors=1

Great post – but the title makes me feel a little ill.

Well it confirms what everybody suspected: if the US data gathering is as bad as the surface station project shows, it can only be worse from the rest of the world, with the political unrest and/or lack of technology that characterizes most of it.

So that is GHCN which seems to be patchy and erroneous?

One Ilya Goz and John Graham-Cumming have jointly discovered what may be an error in the HADCRUT3 station error data.

The apparent mistakes include:

1. “.. it appears that the normal error used as part of the calculation of the station error is being scaled by the number of stations in the grid square. This leads to an odd situation that Ilya noted: the more stations in a square the worse the error range. That’s counterintuitive, you’d expect the more observations the better estimate you’d have.

2. “The paper [Brohan et al.] says that if less than 30 years of data are available the number mi should be set to the number of years”. In some cases though the number of years is less the error appears to have been incorrectly calculated based on 30 years.”

What is even more intriguing – “..Ilya Goz.. correctly pointed out that although a subset had been released, for some years and some locations on the globe that subset was in fact the entire set of data and so the errors could be checked…”

The apparent errors Ilya and JGC have revealed are indeed interesting. But what is also most interesting is that “a subset” which only applies to “some locations on the Globe” is in fact “the entire set of data”?

Is it true then, that HADCRUT3, which is supposed to represent the temperatures for the entire Globe, only represents those of some locations on the Globe, and that too inaccurately?

The questions that arise: Which locations? What parts of the Globe are left out and not represented? Why is this? If they were represented how would that affect the temperatures?

Is this another scam of epic proportions in the making?

Like the NIWA data, this is simply fraud. Unlike NIWA, it does not even have the justification of the bogus temp adjustments.

even with no scientific education whatsoever, it’s clear what verity’s observations are telling us:

verity and/or anthony may be able to make something of the following:

Bishop Hill: Has JG-C found an error in CRUTEM?

Climate John Graham-Cumming, the very clever computer scientist who has been replicating CRUTEM thinks he and one of his commenters have found an error in CRUTEM…

http://bishophill.squarespace.com/blog/2010/2/7/has-jg-c-found-an-error-in-crutem.html

which links to:

Something odd in the CRUTEM3 station errors

http://www.jgc.org/blog/2010/02/something-odd-in-crutem3-station-errors.html

a little levity to end:

Business Standard, India: Abdullah bats for Pachauri, says IPCC chief wrongly targeted

“Recently, Pachauri is under tremendous attack. People did not spare Jesus Christ, Prophet Mohammad when they spoke about harmony and good will. Gandhi was targeted for his good work also. Today, Pachauri is being targeted… One day, they will realise,” (Union Renewable Energy Minister Farooq) Abdullah said at the Delhi Sustainable Development summit…

http://www.business-standard.com/india/news/abdullah-bats-for-pachauri-says-ipcc-chief-wrongly-targeted/85119/on

Australian: Feral camels clear in Penny Wong’s carbon count

Scientists have found camels to be the third-highest carbon-emitting animal per head on the planet, behind only cattle and buffalo. Culling the one million feral camels that currently roam the outback would be equivalent to taking 300,000 cars off the road in terms of the reduction to the country’s greenhouse gases.

But Climate Change Minister Penny Wong told The Australian there was little point doing anything about Australia’s feral camels as only the CO2 of the domesticated variety is counted under the Kyoto Protocol…

http://www.theaustralian.com.au/news/nation/feral-camels-clear-in-penny-wongs-carbon-count/story-e6frg6nf-1225827641354

finally, the determination of the ‘players’ involved is expressed neatly in the final line of the FinTimes below:

UK Financial Times: Tony Jackson: Carbon trading’s riders hold on to the handlebars

In other words, the whole climate change agenda could head off in unexpected directions. In that context, carbon trading could turn out like a bicycle: it moves forward, or falls over. If the latter, bad luck. But it would not be the end of the story.

http://www.ft.com/cms/s/0/b32a9e14-1452-11df-8847-00144feab49a.html

Just eyeballing this …

… it appears that the 10 year running average of the summed averages of the anomalies for the adjusted data is a better fit to Fig 3 than the summed averages of the anomalies for the raw data.

Maybe you could run a diff between your two series in Fig 5 and that in Fig 3.

I’m betting that the adjusted data make a better fit. It seems that your ‘Normalized Unadjusted Annual Mean Temperatures for Stations on Madagascar’, at least superficially, resembles GISS normalization! 😀

I also notice that your station list seems to be truncated on Fig 1.

I count 10 stations, 6 warming and 4 cooling (linear trend).

You name only 8 on the plot.

(missing red square and purple diamond)

A stage where I am surprised in any way at the manner of temp data handling, is already far behind.

When considering Madagascar climate data it is important to consider two key factors that may have a significant impact on that data – 1) Deforestation and 2) Political instability.

The island of Madagascar has lost up to 90% of its original forest cover – half of that in the past 50 years. Many parts of the country suffer from serious erosion and land degradation. The loss of forest cover of such an extensive area surely has climate impacts – especially concerning temperatures and precipitation.

Madagascar, like many former colonial nations, has been beset with political instability since independence in 1960. Worldwide, the loss of weather recording stations in the past half century has been dramatic – but even in those which remain, quality control is an issue that should be addressed.

I began here about half an hour ago and started on the click-here tree to follow some of the other goings-on around the world in other countries with temperature data issues. It is a comfort to know that in the USA our weather reporting stations are reporting continuously with the most technically advanced equipment, properly sited, and properly documented.

What’s that you say? — Really! — Where?

http://www.surfacestations.org/

Averaging temperatures from different sites is indeed a bad idea, but averaging each site’s temperature trend over consistent time frames can give an idea how the area fared.

I used this approach for the hadCRUT3 temperatures for the USA.

http://sowellslawblog.blogspot.com/2010/02/usa-cities-hadcrut3-temperatures.html

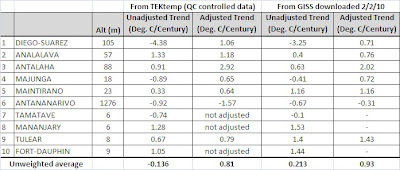

I also just noted that the simple averaged trends of GISTemp data show less warming than the simple averaged trends in VJ’s TEKtemp database. VJ’s Quality Control increases the linear warming trends.

I was wondering why Fig 3 had a greater range than Fig 5.

VJs TEKtemp, adj – raw ave trend: .949 C

GISTemp, adj – raw ave trend: .717C

VJ, what adjustments are you making? Is the TEKtemp just using GISTemp, but applying different filters to determine what is included in the final numbers? Or are you creating your own ‘adjusted’ series?

Verity,

I could follow your post easily. Good read. Although what it shows about GISStemp and NOAA/NCDC/GHCN dataset treatment of Madagascar temperatures is not good.

Question 1 – Have you seen, online or in literature, any NASA/GISS rationalization(s)/explanation(s) about why they treated the Madagascar temperatures the way that you show? Or another way to ask, have you seen anything that tells us their intent?

Question 2 – Do you know if there are GHCN stations that exist in Madagascar that are not being used in the GISStemp product? If so, again have you seen any rationalizations/explanations from NOAA/NCDC or NASA/GISS as to why some stations may not be used? Again looking for their intent.

John

I am sure by now everyone realizes there are 2 pats here. LOL. And both from the nether regions.

I have done a similar analysis of GISS data for stations near Mackay in Australia and also found:

cooling trends turned into warming trends;

UHI correction DOUBLING a warming trend;

a nearby rural station reclassified as urban so its cooling trend can be adjusted;

the GISS homogenization adjustment MORE THAN the average trend of 7 rural stations up to 500km away;

data being up to 6 years in error.

I have put this study up at http://kenskingdom.wordpress.com/

So it’s definitely not a one-off accident!

With that sort of patchy data sets, they should switch to use treemometers!!! ;<)

From watching all of the inherent data recording errors, and problems uncovered by this continuing line of inquiry, in trying to establish a real surface temp average, that is properly weighted, for the total surface area, is a crap shoot.

Compared to just taking the past data from regional areas separately, and combining them into an analog weather forecast, that can then be applied to the separate regions, to better utilize the local resources available.

Investing this much time, researching into the patterns of natural variability, that generate the local characteristics of the weather, typical to the separate regions, would give a much more profitable outcome, in the short and long run.

Might be a much better option, that generates solutions to the real local problems, rather than just trying to further define the Global problem, and still getting nothing done any one place, for the time and money spent.

Not to devalue the process we are trying to sort out here, but if real solutions are to be gotten rapidly, they will come from better prediction of short and long term weather, so local/regional land use behavioral changes can be made in a timely manner.

Not from taxing or penalizing random peoples, for the lack of a good forecast system, that serves the needs of the world, while being individually suited for each of the separate climate regions.

If the UN was the organization it claims to be (with the moral fiber it should have), they would be working toward the end game of sharing all data and methods that work, to benefit the most people, regardless of who they are or where they live.

The best form of government, is the one that best serves the interests of the common man/woman, and cares for the needs of the people, governed with the least interference in their freedom, to live as they would like, assisting the formation of robust family structure, with open communication between neighbors, that relieves stress and increases productivity.

Another question as I try to understand what you did, VJ …

When you plot “unadjusted” data for a particular station, are you plotting all the records for that station – or have you merged the multiple records into a single series? If you have merged them, how? If you have only plotted record 0, then you have left out multiple data points (records 1, 2, …) in the raw data sets that are incorporated into the homogenized data.

I note that the following stations in your linked list include multiple records:

Iles Glorieus

Ouani (Anjoua

Serge-Frolow

Ile Europa

Porto Amelia

Has someone standardised UHI as a negative effect rather than a positive one for all these corrections?

Seems to be the case pretty often that UHI is used to adjust UP…

The real scandal here is not the adjustments themselves but the fact that so many adjustments have to be made due to lack of data. I have worked with climate data in more than 30 countries worldwide (including Madagascar) and know that the lack of reliable data is widespread. The installation of, say, just 100 stations in those continents with poor data would cost a minuscule proportion of the trillion earmarked for climate change mitigation and be a marked improvement on what we have at the moment. After a year or two these new stations could also be used to add value to current patchy records.

Ron Broberg (21:58:46) :

VJ’s Quality Control increases the linear warming trends

The QC applied to the database is that it does not plot any years with a missing monthly value. This is because we found that the way the seasonal and monthly averages were being calculated seemed rather conveniently ‘filled in’, sometimes with higher temperatures. An example is here for Ile Europa:

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.111619720000.1.1/station.txt

Note the D-J-F Average for 2009 is 28.3. Dec 2008 is missing (999.9), but if you back-calculate, GISS must have ‘assumed’ a value of 27.7 for Dec 2008 to calculate this value, which seems on the high side. This will also affect the annual mean temperature; that is why we don’t use years with missing months.
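The back-calculation is just the seasonal mean turned around: if the D-J-F average is the plain mean of three months, the missing month must equal three times the average minus the two months that are known. A rough sketch follows. The 28.3 is the D-J-F figure quoted above; the January and February values are HYPOTHETICAL placeholders chosen only so the arithmetic lands on the 27.7 mentioned above, so read the real ones from the station file. Whether GISS actually fills the gap with a plain mean is also an assumption here.

```python
def implied_missing_month(seasonal_mean, known_months):
    """Value the single missing month must take for the plain mean
    of the season to equal seasonal_mean."""
    n = len(known_months) + 1          # known months plus the missing one
    return n * seasonal_mean - sum(known_months)

djf_2009 = 28.3                # D-J-F average quoted in the comment above
jan_feb_2009 = [28.5, 28.7]    # HYPOTHETICAL placeholders, not station data

implied_dec_2008 = round(implied_missing_month(djf_2009, jan_feb_2009), 1)
print(implied_dec_2008)
```

Swapping in the actual Jan and Feb 2009 values from the station file lets anyone check the implied December figure for themselves.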

When you calculate a trend, it is sensitive to the span of years. If you toss out years at either end of the series it can affect the trend, that is why the database values differ. My collaborator Kevin has quantified the years with missing data – it is worse than we thought.

I was wondering why Fig 3 had a greater range than Fig 5.

Fig 3 is Unadjusted data, Fig 5 is Adjusted.

The only ‘adjustment’ the database makes is in two QC criteria:

– years with missing months are not plotted

– the code for automated graph plotting requires a minimum of 20 years (not necessarily consecutive) to plot a trend. Kevin has now reduced this to 10 years, because we have started to look at short-term trends which show the climate cycles very clearly.
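As an illustration only (this is not Kevin’s database code, and the function names are invented), the two QC criteria can be sketched like this: a year is kept only if all twelve months are present, with 999.9 treated as the missing-value marker as in the GISS station files, and a trend is only returned when enough complete years remain.

```python
MISSING = 999.9  # missing-value marker as it appears in the station files

def annual_means(monthly_by_year):
    """monthly_by_year maps year -> list of 12 monthly means.
    Years with any missing month are dropped rather than filled in."""
    out = {}
    for year, months in monthly_by_year.items():
        if len(months) == 12 and all(m != MISSING for m in months):
            out[year] = sum(months) / 12
    return out

def linear_trend(annual, min_years=20):
    """Ordinary least-squares slope in deg C per year, or None if fewer
    than min_years complete years (they need not be consecutive)."""
    if len(annual) < min_years:
        return None
    years = list(annual)
    n = len(years)
    year_bar = sum(years) / n
    temp_bar = sum(annual.values()) / n
    sxx = sum((y - year_bar) ** 2 for y in years)
    sxy = sum((y - year_bar) * (annual[y] - temp_bar) for y in years)
    return sxy / sxx

# Made-up example: 2001 has one missing month, so it is discarded.
data = {
    2000: [25.0] * 12,
    2001: [25.0] * 11 + [MISSING],
    2002: [26.0] * 12,
}
means = annual_means(data)
slope_short = linear_trend(means, min_years=2)   # threshold lowered for the toy data
slope_default = linear_trend(means)              # too few years for the default of 20
```

Dropping a year at either end of a series changes the span the slope is fitted over, which is one reason the trends from this kind of filter can differ from those computed on filled-in data.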

I’ve had problems with Excel truncating the graph legends and cannot get all the stations to display in Fig 1. However, the missing ones are preferentially displayed in Figs 3/4 so it is possible to work them out.

Maastricht airport (Netherlands) according to GISS (USA)

http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=633063800000&data_set=1&num_neighbors=1

according to KNMI (Netherlands)

http://www.knmi.nl/klimatologie/daggegevens/datafiles3/380/etmgeg_380.zip

(daily values from 1906/01/01 until yesterday )

John Whitman (22:06:26) :

Thanks for the kind comments.

Question 1 – Have you seen, online or in literature, any NASA/GISS rationalization(s)/explanation(s) about why they treated the Madagascar temperatures the way that you show? Sorry, no I haven’t and I really can’t comment on intent.

Question 2 – Do you know if there are GHCN stations that exist in Madagascar that are not being used in the GISStemp product? In other parts of the world, yes, but I have not specifically looked at Madagascar.

Ron Broberg (23:04:25) :

Re treatment of multiple records. This was initially a problem for us. Kevin looked long and hard at it and checked everything he did against GHCN and GISS data (since we have separate databases for each). Overall he concluded that the way he combined stations was very close to that of NOAA/GHCN and NASA/GISS and the data and trends were not impacted. We have only started to see differences when looking at stations with patchy data for the reasons mentioned above.

All the graphs above use GISS’ own data – only the trends in the table use the database.

You Americans have a thing called class action lawsuits i think…

Hi VJones, thanks for answering. A couple of more questions while I try to visualize what you have done. I may be covering common ground that everyone around here knows; if so, my apologies.

GISTemp processes data in three sets. I’ll call them Datasets 0, 1, and 2.

Dataset 0 is, for foreign locations, GHCN v2.mean. This data often has multiple records for a single station id. This is what you get here. If you read the legend, you will see the multiple record ids. (Five for Porto Amelia, including one pretty bogus looking one)

Dataset 1 merges the various records for a station id into one record.

Five Porto Amelia records merged into one.

Dataset 2 applies the various UHI and homogenization.

Five Porto Amelia records merged into one.

So when you say ‘raw’, do you mean dataset 0?

If you mean dataset 0, are you using all the records or just record 0.

(If you are data scraping from http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.131672150000.0.1/station.txt, I think you are using just record 0.)

Or do you mean the merged record, dataset 1, when you say raw?

Similarly, when you say ‘adjusted’, are you using dataset 2?

Are you data scraping from here:

http://data.giss.nasa.gov/gistemp/station_data/

Or are you calculating the values from source and ghcn data?

http://www.rhinohide.cx/co2/gistemp/

Sorry for all the questions. Without your source code and somewhat bare description of data sources, I’m floundering a little trying to get clear on which data sets you are comparing and their sources. Thanks for any clarification.

Above, I said:

Dataset 2 applies the various UHI and homogenization.

Five Porto Amelia records merged into one.

I should have said:

Dataset 2 applies the various UHI and homogenization.

Porto Amelia with homogenization adjustments.

John Whitman (22:06:26) :

Question 1 – Have you seen, online or in literature, any NASA/GISS rationalization(s)/explanation(s) about why they treated the Madagascar temperatures the way that you show? Or another way to ask, have you seen anything that tells us their intent?

I know you aimed this at vjones, but I’ve been up to my eyeballs in GIStemp for a while, so I’ll share my observations too. They (Hansen et.al.) have some published papers that say why they think they can do what they do. I don’t find them particularly convincing. As to ‘intent’, my ‘take’ on that is a combination of “Because they can” mixed with “They have to”. The data are incredibly sparse around the planet. You have a bad choice: Admit the data are too full of holes and from too short a time period to tell you much about the whole place. Or. Fabricate “in-fill” data somehow. Hansen has a published paper that says you can make up data from up to 1000 km away, so that’s what they do.

Question 2 – Do you know if there are GHCN stations that exist in Madagascar that are not being used in the GISStemp product?

Nope. GIStemp sucks in the GHCN data set whole. The more interesting question is this: Are there more thermometers in Madagascar than are used by NOAA / NCDC when they make GHCN? Oh Yea:

http://www.wunderground.com/cgi-bin/findweather/getForecast?query=madagascar&wuSelect=WEATHER

Wunderground has about 14 with current reports showing (and about a half dozen listed with blanks next to the name). Plenty of stations with lots of data. NONE of it making it into the GHCN data set (all Madagascar ‘cuts off’ in 2006 – last data in 2005). But not to worry, GIStemp can make it up…

If so, again have you seen any rationalizations/explanations from NOAA/NCDC or NASA/GISS as to why some stations may not be used? Again looking for their intent.

As near as I can figure it out from what they have said, NCDC thinks actual stations don’t matter so they can chop and change at will (since the anomaly will save them…) and seem to have got the idea in their heads that a few stations at major airports is all we really need. GISS says the station changes are not their problem, look how hot it is…

But as to motivation or intent, you just can’t know. It’s inside their heads and you can’t see in there. Not that you’d want to 😉

Meh. It’s supposed to be “MUNG”, not “munge”. It stands for “MUNG Until No Good”.

Somewhat OT…

Menne et al (2010) Table 1 seems a pretty damning indictment for USHCN:

Max temps

| | Full Adj. | Raw |
|---|---|---|
| Good | 0.35 | 0.28 |
| Poor | 0.32 | 0.14 |

… so the homogenization increases the trend by at least 40%.

Verity Jones says

“This shows what looks like warming-cooling-warming climate cycles very clearly and it is possible to fit a third order polynomial trendline through the averaged data. I’ve seen this again and again for data I’ve plotted around the world (incidentally these were for WUWT regular TonyB).”

Verity did some splendid work for me in response to my interest in climate cycles. These can be clearly seen in the Historic temperature records collected here on my site;

http://climatereason.com/LittleIceAgeThermometers/

I have written several articles on these cycles that can be accessed from the site under ‘arcticles from a historical perspective.’

The clear evidence of peaks and troughs even through the Little Ice Age was quite striking. It was also very striking how Dr Hansen’s GISS records started from the depths of one of those troughs. Why anyone should think it strange that temperatures have increased since the end of the LIA, let alone try to restructure our economy on that basis, seems rather odd to me.

Another commenter here said;

“The real scandal here is not the adjustments themselves but the fact that so many adjustments have to be made due to lack of data.”

Looking at the broad sweep of the history of instrumental records since 1660 I would find it very difficult to believe any more than the generality of the trends shown, as the data is so over adjusted and there has been so much interpolation going on. With the inaccuracy of thermometers until recent years (when other factors kick in) to believe we can parse temperature changes since 1880 to fractions of a degree is, I believe, misleading. This is especially so as the station coverage in 1880 was still very poor, as can be seen in Dr Hansen’s paper on the subject (available from my site).

Verity is an extremely diligent person who thoroughly checks all her lines of evidence and rejects those that appear suspicious. I look forward to her future posts.

Tonyb

“””vjones (00:17:30) : ””’

Verity, thanks for your response to my comment “John Whitman (22:06:26) :”. I am assuming that if I can see in their own statements/documentation the justifications that NASA/GISS and NOAA/NCDC/GHCN use for their treatment of data then it would go a long way to help me understand why they do it.

”””E.M.Smith (00:42:02) : . . . They (Hansen et.al.) have some published papers that say why they think they can do what they do. “”””

Chiefo, thanks for pointing the way. Hope you don’t mind if I check back with you occasionally as I wander into the land of NASA/GISS and NOAA/NCDC/GHCN intent. To boldly go . . . where every open independent thinker (aka as skeptic) must. [apologies to Gene Roddenberry]

”””E.M.Smith (00:42:02) : Nope. GIStemp sucks in the GHCN data set whole. The more interesting question is this: Are there more thermometers in Madagascar than are used by NOAA / NCDC when they make GHCN? Oh Yea: ””

Chiefo, your question is much more precise than my original question. Thanks again.

John

Anachronda (00:51:38) : Meh. It’s supposed to be “MUNG”, not “munge”. It stands for “MUNG Until No Good”.

Strange. Wonder if this is another of those “California things”. To me, a Mung is either a tribesman from Laos or a bean used for sprouts. “Munge” is the only term I’ve seen for “fold bend spindle and mutilate until done” as in the article.

John Whitman (01:49:35) :

”””E.M.Smith (00:42:02) : Nope. GIStemp sucks in the GHCN data set whole. The more interesting question is this: Are there more thermometers in Madagascar than are used by NOAA / NCDC when they make GHCN? Oh Yea: ””

Chiefo, your question is much more precise than my original question. Thanks again.

You are most welcome. Gotta keep an eye on which shell the pea was placed under at the start of the game, dontcha know…

How NCDC can get away with saying the data are ‘unavailable’ or not on a fast enough schedule when you can just hit Wunderground is a question that some Senator or other ought to ask about… Perhaps while asking Wunderground if they would like to have a contract to provide a consistent up to date data series…

“Strange. Wonder if this is another of those “California things”. To me, a Mung is either a tribesman from Laos or a bean used for sprouts. ”

Notice that he had it capitalized. It’s an acronym used by scientist types, coined at MIT.

http://www.absoluteastronomy.com/topics/Mung

Basically, describing a scenario where you manipulate the data so much that it becomes meaningless and has no identifiable tie to the inputs.

Ron Broberg (00:37:14) :

Over the past six months or so I have delved increasingly into the various GISS and GHCN data sets, starting with scraping ‘raw’/combined and adjusted station data from the .txt files from: http://data.giss.nasa.gov/gistemp/station_data/ and ending up downloading the GHCN v2.mean file and examining the GISS combined/adjusted file (converted from binary – an intermediate file in GIStemp processing). Here the sources were kept simple:

Unadjusted data was, for each of the stations mentioned in Table 1, the merged (combined) data – Dataset 1 in your list (from http://data.giss.nasa.gov/gistemp/station_data/). Antananarivo, for example has three data series:

Antananarivo/ 18.8 S 47.5 E 125670830000 452,000 1889 – 1990

Antananarivo/ 18.8 S 47.5 E 125670830001 452,000 1961 – 1970

Antananarivo/ 18.8 S 47.5 E 125670830002 452,000 1987 – 2005

These are combined into a single (‘unadjusted’) one:

Antananarivo/ 18.8 S 47.5 E 125670830002 452,000 1889 – 2005

Adjusted data was for each of the stations mentioned in Table 1, the (combined) homogenized data – Dataset 2 in your list (from http://data.giss.nasa.gov/gistemp/station_data/)

We could have a discussion about how ‘raw’ is ‘raw’ data. That is why I used the term ‘unadjusted’.

TonyB (01:23:20) : – thanks for the endorsement

John Whitman (01:49:35) :

I am assuming that if I can see in their own statements/documentation the justifications that NASA/GISS and NOAA/NCDC/GHCN use for their treatment of data then it would go a long way to help me understand why they do it.

I can’t say that worked for me 😉

E.M.Smith (00:42:02) : – thanks for fielding the questions – I reckoned you’d have a better answer.

vjones (23:56:19) :

Fig 3 is Unadjusted data, Fig 5 is Adjusted.

in reply to Ron Broberg (21:58:46) :

I was wondering why Fig 3 had a greater range than Fig 5.

Actually, my reply was wrong. The range is different because in Fig 5, for the + values, I erroneously allowed one data point (>1.5) to be cut off; the – values are not as strongly negative because of averaging, which reduces the range.

VJ, I don’t know if you would find this useful, but Jon Peltier has a free Excel add-in that does ‘loess’ smoothing:

http://peltiertech.com/WordPress/loess-utility-awesome-update/

Say what? They fill in data for Madagascar — a large island 400KM from the African Continent — with data from stations on the African Continent? Does anybody have an issue with that?

They have discarded, as demonstrated by E.M.Smith, almost all cold places in order to “HIDE THE DECLINE” He has shown that there is also a “Southamerican Gate” where all high altitude stations were also removed (where in winter time there are inhabited places where temperatures reach minus 25 degrees celsius).

E.M.Smith (03:08:06) : How NCDC can get away with saying the data are ‘unavailable’ or not on a fast enough schedule when you can just hit Wunderground is a question that some Senator or other ought to ask about…

I’m pretty sure that the data is pushed to NOAA/NCDC by the various national weather agencies and not pulled by NOAA/NCDC.

I know you can run the GIStemp code. Have you tried adding the more recent Weather Underground data to the GHCN files to see how it changes the trends for the individual Madagascar stations? Sounds like a fun project to me. Just convert the WU data into a new record, add it to the v2.mean file, crunch the numbers, and compare. That way you can show what the actual effect is of neglecting to use it.

@VJ:

Thanks for the clarification. If I understand you correctly, you are comparing data set 1 (merged) with data set 2 (homogenized). Then you sum the averages of the anomalies for the 10 stations with respect to a 1951-1980 baseline for each station.

I did a quick look at station 125670090 (Diego-Suarez) which had the steepest cooling trend. The “UHI” adjustment (homogenization) does not add to the merged temperature data. It subtracts from it as expected. What is happening, similar to Darwin, is that data pre-1960 is being truncated. Since the 1940-1960 period has a sharp declining trend, the net effect is that the ‘trend’ is increasing, even while homogenization drives down the actual temperature record.

I don’t know yet if other records show a similar pre-1960 truncation, but I suspect so. I’ll look at it more tonight.

http://rhinohide.cx/co2/img/125670090003.png
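The truncation effect described above can be illustrated with a toy series. This is a sketch under stated assumptions: the numbers are synthetic (not the Diego-Suarez record), and the shape of the adjustment (a small subtraction growing after 1960) is invented purely for the illustration. `ols_slope` is a plain least-squares helper written here, not anything from GIStemp.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


years = list(range(1940, 2001))

# Synthetic record: a sharp decline from 1940 to 1960, then a slow rise.
raw = [27.0 - 0.05 * (y - 1940) if y <= 1960 else 26.0 + 0.01 * (y - 1960)
       for y in years]

# Hypothetical adjustment: only ever subtracts, growing after 1960.
adjusted = [t - (0.005 * (y - 1960) if y > 1960 else 0.0)
            for y, t in zip(years, raw)]

# Trend of the full raw record, the full adjusted record, and the
# adjusted record with everything before 1960 cut off.
slope_raw_full = ols_slope(years, raw)
slope_adj_full = ols_slope(years, adjusted)
keep = [i for i, y in enumerate(years) if y >= 1960]
slope_adj_truncated = ols_slope([years[i] for i in keep],
                                [adjusted[i] for i in keep])

print("raw, full record       :", round(slope_raw_full, 4), "degC/yr")
print("adjusted, full record  :", round(slope_adj_full, 4), "degC/yr")
print("adjusted, 1960 onwards :", round(slope_adj_truncated, 4), "degC/yr")
```

In this toy case every adjusted value is at or below the raw value, and the adjustment on its own makes the full-record trend more negative. Dropping the pre-1960 decline is what flips the fitted trend to positive.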

Richard Holle (23:02:26) :

“From watching all of the inherent data recording errors, and problems uncovered by this continuing line of inquiry, in trying to establish a real surface temp average, that is properly weighted, for the total surface area, is a crap shoot.”

Very true, Richard. Even if we had a perfect and complete raw data-set available, the resulting average temperature would still have no meaning in terms of what observers experience locally at any moment in time.

To make the whole exercise even more futile, any observed anomalies in the trend would be useless because climate is ultimately driven by deterministic chaos. It’s a shame so many climate scientists seem to have ‘forgotten’ the work of Edward Lorenz as they strive to prove the case for CAGW.

This problem was well summed up by the words of Robert May, one of the founders of the then new science of non-linear systems :-

“The mathematical intuition so developed ill equips the student to confront the bizarre behaviour exhibited by the simplest of discrete non-linear systems.

Not only in research, but also in the everyday world of politics and economics, we would all be better off if more people realised that simple non-linear systems do not necessarily possess simple dynamic properties.”

I have always found it worrisome that ‘the temperature data and global averages/trends’ are not the primary focus of CRU, NOAA/NCDC or GISS (they are more interested in other activities/research).

No matter what your view on AGW – just look at the lack of configuration control, quality control, software standards etc… It’s terrible.

I think we (US) need an organization whose only focus is the temperature data and global averages/trends.

If I were to analyze trends from last night’s SuperBowl, I could have extrapolated a final score of 40 – 0 (Patriots) from the first quarter or 40 – 24 from the results of the first half.

Projecting future events off trends is nothing more than a simple projection or forecast. If you’re feeling charitable, you might call it an “hypothesis.”

Also, a trend does not tell us anything about the cause.

Given the steady stream of revelations about the “folded, bent, spindled, and mutilated” temperature data from around the world, I am puzzled by many skeptics still admitting that “the climate has indeed warmed; the only question is why.”

Two questions:

(a) Is there any unequivocal temperature data that indicates the Earth as a whole (as opposed to selected locations) actually warmed over the past century or so?

(b) Does it make sense to speak of a global temperature at all?

/Mr Lynn

I noted one comment where the person was talking about the deforestation of Madagascar. Are issues like that ever factored into the GISS numbers? My understanding is that a reduction in vegetation would increase temperatures, all other factors being equal.

MrLynn:

Totally agree. Climate is a general description of weather patterns in a particular place over a particular length of time. It can be no more than regional and must be expected to change. How’s that for a definition?

MrLynn (13:04:10) :

I am puzzled by many skeptics still admitting that “the climate has indeed warmed; the only question is why.”

Until a couple of months ago I’d have wavered between being a lukewarmer and outright skepticism, and I’ve been looking at the data for a while. It is the cyclic nature of the warming that has convinced me otherwise – both the data as in Figure 3, and seeing the temperature trends plotted worldwide on maps: http://diggingintheclay.blogspot.com/2010/01/mapping-global-warming.html

More on that soon…

It is only a couple of years ago that my husband persuaded me to “look into the science instead of believing the propaganda” and WUWT became a regular read (I lurked for a while then used various names). In the beginning I did not understand how the ‘global average temperature’ was calculated (and made naive and silly comments to that effect). I actually thought they used the trend of each station.

Of course, as shown above, trends are very sensitive to the part of the cycle at which the trend is started or ended.
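A toy example shows how strong that sensitivity can be. The sketch below assumes a purely cyclic ‘temperature’ (a sine wave with a 60-year period and no underlying trend at all); the period, the years and the function names are arbitrary choices for illustration, not anything fitted to the Madagascar data.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


PERIOD = 60  # assumed cycle length in years, for illustration only


def cyclic_temp(year):
    """Trendless cycle: peaks in 1915 and 1975, trough in 1945."""
    return math.sin(2 * math.pi * (year - 1900) / PERIOD)


def window_slope(start, end):
    """Linear trend fitted to the cycle between two years (inclusive)."""
    years = list(range(start, end + 1))
    return ols_slope(years, [cyclic_temp(y) for y in years])


peak_to_trough = window_slope(1915, 1945)   # looks like steady cooling
trough_to_peak = window_slope(1945, 1975)   # looks like steady warming
peak_to_peak = window_slope(1915, 1975)     # one full cycle

print("1915-1945:", round(peak_to_trough, 4))
print("1945-1975:", round(trough_to_peak, 4))
print("1915-1975:", round(peak_to_peak, 4))
```

The same trendless series gives a cooling trend, a warming trend, or essentially no trend, depending only on where the window starts and ends.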

As far as I am concerned the current models and anomaly maps have been found wanting and have had their day. Whether or not we come up with a different method or accept that our climate is complex and always will be is another matter.

Phil Jourdan (13:17:28) :

Are issues like that [deforestation] ever factored into the GISS numbers?

It seems not, nor are land use or population changes. Also ‘once an airport, always an airport’ (airports being regarded as ‘rural’ of course, regardless of size).

vjones

Please take a look at this link:

http://www.tutiempo.net/en/Climate/Madagascar/MG.html

I found it by googling Madagascar weather station records. This site has 28 locations with tabulations of daily data. Some of the months are missing daily data but the stations I checked had records from 1973 through January 2010. You may wish to see if these reconcile with what you started with.

Since I mentioned I’d look at more graphs this eve,

here is a link to the other Madagascar stations plotting DS1 with DS2.

http://rhinohide.cx/co2/madagascar/img/index.html

Chas (06:12:39) :

That does look useful – thanks!

Mike Rankin (16:39:21) :

Good find – that is a potentially useful site, although getting data by month is slow.

Ron Broberg (20:35:16)

Great. I did not include them individually for the sake of brevity. Now I need to ask what you have done – are these from the same straight GISS records I used, or GHCN V2.mean or…? Are you able to run GIStemp?

I’ve run GIStemp, but the charts above are data scrapes from the GISS GIStemp station_data site. I’ve charted the “metANN” column. I’ll put together a bigger post later. I’d like to quantify the different effects of the data truncation and the homogenization temp adjustment. I’d also like to understand what’s causing the data truncation, since that also appears in Darwin. And, third, I’m going to want to recreate the data in DS1 and DS2 because I like the idea of adding the recent 15 years of wx data from WU to fill in for the recent patchy data. OTOH … I do have a full time+ job … so much to do … so little time 🙂

Ron Broberg (06:59:21) :

I’d also like to understand what’s causing the data truncation…

As I understand it, GIStemp homogenizes non-rural stations by comparison with rural ones. Any non-rural station that does not have three (IIRC) matching rural reference stations is not used, or the portion for which there is no match is not used.

I do have a full time+ job … so much to do … so little time 🙂

Ditto