That is a total of 365,000 high temperature records and 365,000 low temperature records. During the second year of operation, each day and each station has a 50/50 chance of breaking a high and/or low record on that date – so we would expect about 182,500 high temperature records and about 182,500 low temperature records during the year.

In the third year of the record, the odds drop to 1/3, so we would expect about 121,667 high temperature records and about 121,667 low temperature records.

In a set of 100 numbers drawn from a Gaussian distribution (representing years in this case), the odds of any given number being the highest are 1 out of 100, and the odds of that number being the lowest are also 1 out of 100. So by the 100th year of operation, the odds of breaking a record at any given station on any given day drop to 1/100. This means we would expect approximately 1000 stations × 365 days / 100 years = 3,650 high and 3,650 low temperature records to be set during the year – or about ten record highs per day and ten record lows per day.
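The arithmetic above can be sketched as a small C++ helper. This is only an illustration under the post's own assumptions (independent readings, a stable climate, no ties); the function name `expected_records` and its parameters are made up for this example and do not come from the article.

```cpp
#include <cassert>

// Expected number of new record highs (or, equally, record lows) set across
// the whole network during year `year` of operation. Assumes independent,
// identically distributed readings with no ties, so each station and calendar
// day has a 1/year chance of a new record. Names are illustrative only.
double expected_records(int stations, int days, int year)
{
    assert(stations > 0 && days > 0 && year > 0);
    return static_cast<double>(stations) * days / year;
}

// Same quantity expressed as an average number of records per day.
double expected_records_per_day(int stations, int days, int year)
{
    return expected_records(stations, days, year) / days;
}
```

For example, `expected_records(1000, 365, 100)` evaluates to 3650, and `expected_records_per_day(1000, 365, 100)` to 10, matching the figures in the text.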

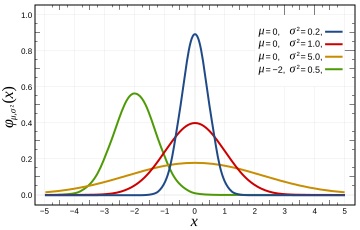

This provides the news media lots of opportunity to get hysterical about global warming every single day – even in a completely stable temperature regime. Because the temperatures are random draws from a Gaussian distribution, it won’t be exactly ten per day, but will average out to ten per day over the course of the year. In a warming climate, we would expect to see more than 10 record highs per day, and fewer than 10 record lows per day.

In a cooling climate, we would expect to see more than 10 record lows per day, and fewer than 10 record highs per day. The USHCN record consists of more than 1000 stations, so we should expect to see more than 10 record highs per day. Throw in the UHI effects that Anthony and team have documented, and we would expect to see many more than that. So no, record high temperatures are not unusual and should be expected to occur somewhere nearly every day of the year. They don’t prove global warming – rather they prove that the temperature record is inadequate.

No continent has set a record high temperature since 1974. This is not even remotely consistent with claims that current temperatures are unusually high. Quite the contrary.

| Continent | Record high (°F) | Year |
| --- | --- | --- |
| Africa | 136 | 1922 |
| North America | 134 | 1913 |
| Asia | 129 | 1942 |
| Australia | 128 | 1889 |
| Europe | 122 | 1881 |
| South America | 120 | 1905 |
| Antarctica | 59 | 1974 |

http://www.infoplease.com/ipa/A0001375.html

Here is the code discussed in comments:

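    // C++ program estimating the probability that one more value sets a new
    // record high after N years of records (e.g. N = 100)
    // Compilation : g++ -o gaussian gaussian.cc
    // Usage : ./gaussian 100

    #include <algorithm>  // std::max
    #include <cstdlib>    // std::atoi, std::rand
    #include <iostream>

    int main(int argc, char** argv)
    {
        if (argc < 2)
        {
            std::cerr << "Usage: " << argv[0] << " <years>" << std::endl;
            return 1;
        }

        int iterations = 10000;
        int winners = 0;
        int years = std::atoi(argv[1]);

        for (int j = 0; j < iterations; j++)
        {
            // Highest value in the existing record of `years` values.
            // Note: rand() is uniform, not Gaussian; only the ranking matters here.
            int maximum = 0;
            for (int i = 0; i < years; i++)
            {
                maximum = std::max(std::rand(), maximum);
            }

            // Draw one more value and check whether it beats the record.
            int value = std::rand();
            if (value > maximum)
            {
                winners++;
            }
        }

        float probability = float(winners) / float(iterations);
        std::cout << "Average probability = " << probability << std::endl;
        return 0;
    }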

It is the nature of records that they will always be broken.

Nor do these records show the supposed increase of “extreme events”. Thank you Steve, another snappy fact for our arsenal.

Global warming doesn’t act by increasing high temperatures. It works, as I understand it, by increasing LOW temperatures. What I would expect to see in a greenhouse warming climate would be a lot of record warm low temperatures.

Recently I read that Prague just broke a temperature record from the 19th century in early April. It’s cool that we have just surpassed a Little Ice Age record today 😉

Yeah, more high lows and less low lows, but certainly more high highs and less low highs!!!

Nature is the norm. The abnormality is in the “surprise” at the “unexpected” during the observation of natural events, especially when those natural events do not correspond to the outputs of computer models.

Here is a completely subjective observation based on perception…

Can you recall the intensity of the warmth of the sun on your face? I always enjoyed feeling the warmth of the sun on my face, more in the Spring when we get out of winter. However, I do remember a feeling of scorching on my face from about 2005 to 2007. I remember driving and trying to cover the left side of my face because it was not warmth but a burning feeling. Last year I did not feel this. I wonder how the summer sun will feel this year, but I certainly don’t think that it will be scorching hot.

No, it would not cause higher high temps because CO2 would also block inbound IR from the Sun during daytime. It would act to moderate daytime high temps but it would act to prevent radiative cooling. As a result, the atmosphere should heat up in the mid troposphere. It would get heated from the sun in the daytime and from surface radiation at night … but that isn’t happening according to the observations so we can just can the whole silly notion.

Given UHI – we can expect more high records than low records. I was expecting to see what we actually did get these past few years at the end of the article – not a continent summary.

surfacestations.org shows that we’re not measuring just the air temp in some random location. We’re measuring some sort of human activity mixed in with the air temp. Human activity doesn’t strike me as random – so I wonder how that affects the Gaussian distribution.

“in a cooling climate” – not really – in a colder than average climate – you’d get more low records. You can be warming up, but colder than average, and still see more record lows. That’s always the big issue with the warmist since we’re currently coming down from a high point – we probably still set more high records than low records – which doesn’t show that we’re still warming.

Thank you, Ray, for clearing that up.

Steve,

Conversely you should have added that record low temperatures have been set after 1974

http://www.infoplease.com/ipa/A0001377.html

From the anti science department

http://voices.kansascity.com/node/4387

What is a Gaussian given Hansen at the controls.

Records are made to be broken.

“WE’RE ALL GONNA DIE IN A FIREBALL IN FIVE YEARS!!!!” sounds like a broken record to me.

Very helpful description of probability for highs and lows. I like the list of continental highs; have you got one for lows, with the years?

Imagine it’s April, in California. Why, within the same month, one may set two records:

1) Earliest grrrrrrrrrreat heat everrrrrrrrr!

2) *whispered (most heating degree days ever in the month of April)

RE: crosspatch (15:55:54)

Then your thoughts on the large number of record low numbers would be?

With all due respect to the poster, things are not as simple as presented. This sort of thing hurts my brain since my last foray into statistics was a quarter of a mean lifetime ago! The problem gets compounded by the fact that we measure temperature in rounded-off degrees, be they N.1 or N.001 degrees. That means that a new record temperature must not only be higher than the old record but must exceed it by some discrete amount, 0.1 or 0.001 degrees in my example above. That lowers the odds of any new record occurring. The odds go down fast the cruder the thermometer used. The poster’s point is still correct in that in a stable and unchanging climate there will still be new records over the time scale we have been taking measurements. It would be fun for some poor math major to plot us a series of probability curves for new records calculated parametrically with varying standard deviations, sample size and bin size. Nice post. SL
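The rounding effect described in this comment can be explored with a small Monte Carlo sketch. It is an illustration only: it assumes Gaussian readings with mean 0 and standard deviation 1, rounds each reading to the nearest multiple of a `step`, and counts a record only when the new reading strictly exceeds the old maximum. The function name `record_probability`, its parameters and the fixed-seed generator are choices made for this example, not anything from the post.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <random>

// Estimate the chance that a new reading strictly exceeds the highest of
// `years` earlier readings when every reading is rounded to the nearest
// multiple of `step` (step == 0 means no rounding).
// Assumption: readings are independent Gaussian draws, mean 0, sd 1.
double record_probability(int years, double step, int trials, unsigned seed)
{
    assert(years > 0 && trials > 0 && step >= 0.0);
    std::mt19937 rng(seed);
    std::normal_distribution<double> temperature(0.0, 1.0);

    // One thermometer reading, rounded to the instrument's resolution.
    auto reading = [&]() {
        double t = temperature(rng);
        return step > 0.0 ? std::round(t / step) * step : t;
    };

    int winners = 0;
    for (int j = 0; j < trials; j++)
    {
        double maximum = -std::numeric_limits<double>::infinity();
        for (int i = 0; i < years; i++)
        {
            maximum = std::max(maximum, reading());
        }
        if (reading() > maximum)  // a tie does not count as a new record
        {
            winners++;
        }
    }
    return static_cast<double>(winners) / trials;
}
```

With `step` set to 0 the estimate should sit near 1/(years + 1); making `step` large relative to the spread of the readings should pull it lower, because ties with the old record no longer count.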

re: crosspatch (16:12:01) :

“No, it would not cause higher high temps because CO2 would also block inbound IR from the Sun during daytime.”

Not much inbound IR from the sun.

crosspatch,

Do you think warm low temperatures might have something to do with the proliferation of cities, asphalt, lawns, and irrigated fields? I keep a thermometer on my bicycle and sometimes see 3-5C difference between neighborhoods and adjacent open spaces on summer nights. Dew condensing on grass releases large amounts of heat.

Has anyone factored in all the badly sited weather stations and tried to make an adjustment for the UHI effect on those sites?

Such a map would probably be far different than those preferred by the warming alarmists.

Steven, you might have referred to this in preparing this post:

http://www.numberwatch.co.uk/record.htm

A couple of years ago I looked at the distribution of high and low temperature records in the Kansas City area. It’s not a perfect weather station, having moved at least twice in the past 120 years, if not more. Fully one third of the high temperature records (year-round) were set in the 1930s. Fully one third of the low temperature records have been set since 1980. The all-time low temperature record in KC was set in 1989; it was -23 degrees on December 22 or 23 that year.

In a perfectly even climate, that is not the right distribution.

At least we know the high temperatures aren’t getting hotter.

Also it looks like we won’t die in a fireball here in the US yet: according to the climate forecast system, it looks like a slow cooling trend, anomaly-wise, for the rest of the year for the US and most of Canada.

http://www.capecodweather.net/cfs-archive/771-cfs-outlook-april-1st-2009

I noticed that despite all the warming going on in Antarctica, its last high-temp record was set in 1974. 1978 for the south pole itself.

However, the record low was set in Vostok in 1983.

I wonder if Steig would comment.

It kinda makes you wonder doesn’t it.

Steven Goddard…

Interesting and very useful article! From your article:

So no, record high temperatures are not unusual and should be expected to occur somewhere nearly every day of the year. They don’t prove global warming – rather they prove that the temperature record is inadequate.

I have insisted since 2005 that the very small fluctuation of temperature observed in the last decade fits within the normal fluctuations of temperature which can occur during the Holocene. The bounds for the oscillation, incorrectly called “anomaly”, are from -3 °C to 3 °C, which give a total fluctuation of 6 °C.

I think these guys (AGWers) are skilled businessmen who are taking advantage of this cyclical momentum to catch money. (Sorry, Anthony… I cannot resist saying it)

“From error to error one discovers the entire truth.” – Sigmund Freud.

This concept does not apply to Algore and his greedy band of liars.

“It is not a pollutant and not causing global warming or climate change.”

The SSOTUS (Supreme Scientists Of The United States) have designated CO2 to be a pollutant, Nature be d……

“A major tenet of the environmental paradigm is that almost all change is due to human activity. Once a change is determined it triggers a search for the human cause without consideration of natural change.”

Humans have the deep pockets. Polar Bears and Penguins do not pay taxes or fees.

Given our current events, I amend Michael Crichton’s “State of Fear” to read states of fear. The latest fear is engendered by the swine flu. My first thought was that this was a viral attack on the economy and financial community resulting from contaminated pork in recent Congressional legislation.

————————————————————-

Human CO2 Hysteria:

Spending billions on a non-existent problem

By Dr. Tim Ball

http://canadafreepress.com/index.php/article/10605

The term “greenhouse gas emissions” is either deliberately misleading or indicates complete ignorance of the science, or both. What they really mean is CO2, yet it is less than 4% of greenhouse gases and the human portion a fraction of that. Why do they want it reduced? It is not a pollutant and not causing global warming or climate change. Reducing it is completely unnecessary and harmful for the plants and will cost trillions. They propose energy alternatives that are potentially more dangerous because they don’t work and can replace only a fraction of existing energy sources. This pattern of identifying the wrong agent of change, blaming humans, and proposing inadequate replacements at great cost is not new. We saw very similar events and sequences with claims that Chlorofluorocarbon (CFC) was destroying the ozone layer.

This seems like something Matt Briggs should have posted (or at least commented about)

My father was in the gold mining business. More than in any other type of mining, successfully mining gold depends on accurate sampling to define as best as possible the ore grade AND mineralogy so there will not be any unpleasant surprises after the mine is put into production. This is because in mining all of the money is spent up front (developing the mine, putting in the mill and transportation infrastructure) before production. Frequently it takes several years of operation before one knows just how well (or poorly) one will do with a given gold property.

Son Thomas went into the oil biz as a geophysicist. During my career three-dimensional seismic data cubes were invented and perfected so there was a very rich and fairly accurate data cube to use for predicting drilling results. Data collection could be expensive, but after spending a few million $ on the 3D survey and drilling a few expendable wildcats one knew if this prospect would be a good investment or not. It is just the opposite of mining, when one considers that the cost of a large deep water production facility and the pipeline needed to connect to the economic world could get into the hundreds of millions of dollars pretty quickly.

Dad and I would savor a beer or two after dinner and have great and very animated discussions about practical sampling and sampling theory because that is what it is all about. If you can successfully predict what will be found in between two boreholes, you have sampled enough. If you are badly surprised, not so.

I look at the sorts of ‘information’ being offered to support the AGW thesis, and I sure find it lacking. Specifically I find it lacking in methodology, in repeatability, in documentation and in density of data volumes. Oh, and I forgot to mention the lack of predictability that the GCM models offer.

Whatever it is, it is not the successful application of technology for economic means, unless one considers one’s continued employment as the only economic success necessary.

“Robert (16:30:16) :

re: crosspatch (16:12:01) :

“No, it would not cause higher high temps because CO2 would also block inbound IR from the Sun during daytime.”

Not much inbound IR from the sun.”

But the extra CO2 would block the extra IR trapped/coming downward from other extra CO2 molecules from above.

Something the AGW community never talks about. How does the extra IR trapped by CO2 make it back to the surface with all those IR-intercepting CO2 molecules in the way?

Basically it is just a random walk around the atmosphere with only a few IR photons making it back down. They still make it out into space within a few minutes of what they would have before the extra CO2 was there.

OT, but I’ve been thinking about this for awhile.

————————

3 record low temperatures set in my area over the past year and no record highs.

Anthony; If you want to drill down a little further there is potential for new record highs and lows for both maximum and minimum temperatures. A total of 4 ways to create a new record each day.

At my nearest major city – Melbourne – they’ve been keeping records since 1st May 1855. Each day there are 4 possible records to break; multiplied by 365 = 1460 records. Divide that by 154 years (1855 to 2009) and we should set about 9 new records every year!

Unfortunately for Melbourne, the UHI effect means we’ve been creating a lot of high minimum records and no low minimum records since 1978.

A few years ago, Phil Jones of the CRU was speaking on Robyn Williams’ Science Show (ABC Radio National). He stated (this is from memory) the record number of record high temperatures was entirely due to global warming. When I analysed Australian data most of the records dated back to the 1920s and 30s. Very few were recent.

The excellent site that jeez linked to is as good in its own way as the late John Daly’s site:

http://www.numberwatch.co.uk/record.htm

Here’s the home page for anyone who can’t figure out how to contact WordPress:

http://www.numberwatch.co.uk/number%20watch.htm

Worth bookmarking, IMHO.

A related question: What type of distribution do, for example, monthly temperatures follow? Surely not Gaussian. Is there skewness and kurtosis?

I am sure this question has been tackled but I have not found references. Maybe no parametric distribution fits.

Article is a nice way of presenting the issue. Should be clear to anyone — even me.

These kinds of records are also a good way of assessing whether the “adjustments” made to the historic temperature levels are accurate.

Very few of these records would be from the adjusted datasets but would just be from local record-keeping.

Reported record temperatures are abnormal if they aren’t real. Whenever there are record temperatures reported, I take it with a grain of salt.

Heat Waves in Southern California.

Are They Becoming More Frequent and Longer Lasting?

http://climate.jpl.nasa.gov/files/LAHeatWaves-JournalArticle.pdf

Almost every day I observe a politician, journalist or practicing “scientist” exhibit either ignorance of the difference between climate and weather (a distinction I learned in the 4th grade), or a deliberate disregard for the difference.

Shameful, at best, and possibly despicable.

The record low temp in Australia (-23C ) was set in June 1994 at Charlotte Pass (1800m asl) but only after the weather station was relocated to a new position at the bottom of the valley. It stands to reason that the night time temperatures will be colder in this location. To my knowledge the station has been moved again – higher up the slope due to problems with excessive drifting snow so it is unlikely that a record low will be set any time soon. FTR there are only 3 weather stations in Australia that have ever recorded temperatures of -20 or less and all of these are within the Alpine area of NSW above 1400m.

I did a simple minded test of your observation by taking the record high and low temperatures in the US by date and state from Wikipedia, putting the events in Excel and plotting the cumulative highs minus cumulative lows. The lows lead the highs until the 1930s, when the highs gained until the 1960s, after which the lows exceeded highs bringing 2008 back to even. A polynomial best fit, assuming each state is a weather station, says we are in a cooling trend now in the USA.

Hopeless blend of hot air and hubris

Greg Melleuish | April 28, 2009

http://www.theaustralian.news.com.au/story/0,25197,25395364-7583,00.html

There is a very important law in politics and economics known as the law of unintended consequences. When governments intervene in matters about which they have limited knowledge, and this is basically everything, they can take steps that make things worse rather than better.

The same law applies to the natural world. Plimer describes a universe so complex that it is simply not feasible that any computer model devised by a human being could capture its complexity.

State action based on such limited knowledge invariably will have unforeseen consequences that may well prove quite harmful.

Humility can be seen as the antidote to hubris. Human beings should be humble in the face of the immense forces of nature and recognise that their power to manipulate and change the world is very limited. They can do this only if they recognise that adherence to climate change is the ultimate expression of hubris. There are times when the best thing for the state to do is nothing.

The analysis assumes that the temperature measurements are spatially and temporally independent, which they are not. Increase the number of stations to 1,000,000 to see the error in the reasoning, and/or have them measure every five minutes to boot.

While I don’t think that 1917 was a record, Northern hemisphere temperature data indicate the following (from CO2 Science data sheets GHCN).

Temperature anomaly

1916 -0.282542

1917 -0.643615

1918 -0.299733

1919 -0.271429

I’m not one to make correlations but wasn’t the last flu outbreak about then? Hmm?

A slight correction. One expects more record high and low temperatures to be recorded as the precision of thermometers improves. If your 1930s thermometer was rated +/- 0.1 degrees, then the actual maximum temperature was recorded as 91.6 degrees. In 2009 the thermometer is rated +/- 0.005 and the recording is 91.64 degrees. The record WILL always be broken.

Steve,

Looking at NOAA’s anomaly map for the month of April, I noticed something odd. The map says it represents anomaly for a period of 25 days.

http://www.hprcc.unl.edu/products/maps/acis/MonthTDeptUS.png

I’m just south of the Seattle area, and the map shows my area about 2 degrees below to 2 degrees above normal for this period. Or to sum that up, normal. We’re about 2 degrees below normal today, but thru this month, we’ve run 5 to even 10 degrees below normal on most days. We’ve only had reasonable weather in the last week or so.

This suggests the anomaly doesn’t really cover the period noted, but perhaps is a snapshot.

I’ll see if I can get some more information. But I’ve checked the almanac at our local weather station, and on those days I’ve checked, we’ve been significantly under normal. (5-10 degrees) I don’t suppose someone’s got their finger on the scale here…?

http://www.king5.com/weather/

So bets are HE will be right !!!!

Next possible statistical analysis :Hysterical mass/political movements vs. Temperatures.

I bet we’ll find a time lag:

1) Maximum Temperatures PRECEDING Hysterical mass/political movements

2) Hysterical mass/political movements PRECEDING Minimum Temperatures

3) Dictatorships following 02

4) Wars following 03

Jeff of Gembrook (AU) (17:17:27) :

Have you seen this study?

http://mclean.ch/climate/Melbourne_UHI.htm

Leif, you beat me to it!

This post’s analysis is based upon independent random events, which earth atmospheric temperature certainly is not. To say that the odds of a record temperature are 1 out of 100 requires a randomness that does not exist.

Solar output (here we go again!) is anything but random, instead, it is quite constant within a narrow range. Earth’s orbital distance varies a bit, but on any given day of the year, it is approximately the same. So much for randomness.

Interesting post, but it does not fly for me.

If anyone is ever in Los Angeles with an afternoon to spare, one could go to the Museum of Science and Industry to watch the bell curve machine in operation. Hundreds of black balls are introduced, one at a time, at the top center, then the balls bounce off of small pegs as they fall into one of about a dozen adjacent slots. The slots in the center fill up the most, and the slots on the far ends receive only a few balls. That is randomness in action.

Not read all of the posts here….maybe said already…

The whole “record high” thing is exacerbated because it is easier to get a false record high than a false record low. A wx stn can be made hotter than the “true” temp, but it is all but impossible to make it colder. Yes?

It is easier to measure a record high and a record high-low than to measure a record low or a record low-high. Got that?

Clive

Southern Alberta … where it is snowing again .. this is getting real old…a winter from hell !

“and the perfect unchanging climate (which our AGW friends imagine used to exist before they were born.)”

..and immediately, you’ve lost me already. ~snip~ Name one climatologist who (even in the last century) EVER thought that there was some kind of “perfect temperature” for earth (geared to which species??) and that the climate hadn’t been changing in perpetuity. Name one.

You are erecting and knocking down views that have never existed. How intelligent.

I’ve often wondered about the math and probability for these situations, thanks for the post.

Leif, how does that affect the analysis? Would one then expect to find records in spatial and temporal clusters? Extended periods in given locations with few or no records at all? A “lumpier” distribution of records?

Ed says: “Nature is the norm…”

Now I don’t think anyone doubts that at all. The point is whether precautionary action should be taken to avoid terrible effects. Picture this: a 10km wide asteroid headed straight for Ed’s house (be that where it may). Though on geological time scales this is absolutely normal, would Ed be standing in his garden beneath the growing shadow, uttering “Nature is the norm…”? I think not. At the first hint of a possible collision, hopefully months to years prior, earth’s residents will be working on a solution to prevent the impact. This ain’t the Cretaceous any more and, maybe, we finally have a choice of whether or not to enter foreseeable extinction events.

[snip]

Reply: Seriously, thou shalt not insult other posters or our host, (although clever and extremely subtle innuendo may be acceptable) or entire posts will be deleted from now on.

I have spoken. ~ charles the moderator.

Robert (16:30:16) : Not much inbound IR from the sun.

“Everyone is entitled to his own opinion, but not to his own fact”—

Daniel Patrick “Pat” Moynihan

DR (17:49:55) :

Q: Heat Waves in Southern California.

Are They Becoming More Frequent and Longer Lasting?

A: Sure. And we know why.

http://wattsupwiththat.com/2008/08/23/how-not-to-measure-temperature-part-69/

[Let us know when you have something you would like to contribute or we’ll just delete, your choice and ours ~ charles the moderator]

[sorry db, I’m being more aggressive than you ~ ctm]

It is not the number of record highs that is really changing the average temperature, but the changes in the number of record lows and, as has been said above, the number of record high lows. I plotted out the 10 and 30 year monthly highs and lows for the Central England Temperature (CET) record. See link below. The level of the monthly lows has really risen in recent years but the highs were pretty typical of the whole record. The plot shows the highest or lowest monthly anomaly for a running 10 or 30 year span. Note this has also been adjusted to remove the long term temperature rise of 0.26°C per century.

http://gallery.me.com/wally#100002/CET%20max%20min%20residuals&bgcolor=black

Gary Hladik (18:45:11) :

Leif, how does that affect the analysis? Would one then expect to find records in spatial and temporal clusters? Extended periods in given locations with few or no records at all? A “lumpier” distribution of records?

To find out, one should find [by cross correlation] at what distance between them stations are ‘independent’ in space and similar in time. Then base the analysis on those numbers. I don’t know precisely what they are except that [certainly for time] they are such as to invalidate the analysis. People in the business of analyzing weather station [Anthony?] siting know what those numbers are. A similar analysis was done a long, long time ago for sunspot numbers taken every day. Over a cycle there are ~4000 such numbers, but the number of independent values is only ~20. So to base the ‘error bar’ or occurrence probability on 4000 values rather than 20 leads to very wrong conclusions.
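As a rough illustration of the point about independent values, one common rule of thumb (an assumption introduced here, not something stated in the comment) treats the series as first-order autoregressive with lag-one correlation $\rho$ and estimates the effective number of independent values, for the purpose of averaging, as

$$
n_{\text{eff}} \approx n \, \frac{1 - \rho}{1 + \rho}.
$$

Under that assumption, going from $n = 4000$ daily values to $n_{\text{eff}} \approx 20$ corresponds to $\rho \approx 0.99$, since $(1 - 0.99)/(1 + 0.99) \approx 0.005$ and $4000 \times 0.005 \approx 20$. The symbols $n$, $n_{\text{eff}}$ and $\rho$ are illustrative, and the correct correction for record counts in particular may differ.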

In dealing with records (snowfalls at a particular location, floods on a given river, etc.) under the assumption that they are randomly distributed, you can take, say, 200 years of data and, labelling the first as a record, there should be ln(200) records broken during the 200-year period – about 5. I explained this calculation on another post (concerning flooding on the Red River of the North), did the calculation on the data over 150 years or so, and came out with 5, which was the actual answer and demonstrated the number of records for the period to be statistically normal. One reader criticized my calculation, saying that …sure 5 may be what you would expect but you wouldn’t expect 2 records to fall within 10 years of each other… Naturally, the nature of randomness doesn’t bar two records being close together. Now I know there are factors out there that one can point to to show that such data won’t be random (various cyclic oscillations etc.) but on the right scale, these are muted. In any case, if there is a looming inexorable change occurring, then the records should greatly exceed ln N; if not, maybe we don’t have that much to worry about.
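The ln N rule of thumb in this comment can be checked against the exact expectation. If the observations are independent and identically distributed with no ties, the k-th observation is a record with probability 1/k, so the expected number of records in N observations (counting the first) is the harmonic sum 1 + 1/2 + … + 1/N, which is close to ln N plus about 0.58. The sketch below computes that sum; the function name `expected_record_count` is made up for this illustration.

```cpp
#include <cassert>

// Expected number of records among n independent, identically distributed
// observations with no ties, counting the first observation as a record.
// The k-th observation is a record with probability 1/k, so the expectation
// is the harmonic sum 1 + 1/2 + ... + 1/n. Name is illustrative only.
double expected_record_count(int n)
{
    assert(n >= 0);
    double total = 0.0;
    for (int k = 1; k <= n; k++)
    {
        total += 1.0 / k;
    }
    return total;
}
```

For 200 years this gives a little under 6, and for 150 years about 5.6, so "about 5" is the right order of magnitude for both cases.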

The discussion of record highs got me wondering if more GHGs lead to lower high temperature records.

Near sea level in my zip code in perpetually-humid Fort Lauderdale, the record high temps for July and August only have 1 day over 100 degrees. Most record high temps are in the 97-98 area. This from Weather Underground calendar view for July and August.

A few hundred miles north (and much farther west) where I grew up in much drier Dallas Texas, July and August have record highs above 100 for almost every single day.

So, do greenhouse gasses prevent warming? Places with less water vapor in the air (Dallas) get much hotter in summer time.

It’s telling to look at the temperature ranges. Calendar view shows similar average low temperatures in both places, but lower average highs in Fort Lauderdale than in Dallas.

Wasn’t CO2 supposed to have a positive feedback relationship with water vapor? Isn’t that the single most important mechanism in the AGW hypothesis? More CO2 means more water vapor means more heat?

And yet, it looks to me like more water vapor, the most important GHG, leads to lower high temperatures. What gives?

Matt Bennett (18:31:03) :

“and the perfect unchanging climate (which our AGW friends imagine used to exist before they were born.)”

..and immediately, you’ve lost me already. Erecting utterly disingenuous and ridiculous strawmen like that is par for the course here. Name one climatologist who (even in the last century) EVER thought that there was some kind of “perfect temperature” for earth (geared to which species??) and that the climate hadn’t been changing in perpetuity. Name one.

———————————

Matt,

On the assumption that you would like to take CO2 out of the atmosphere, I would further assume that the perfect climate for the AGWers is that which occurs at 285 ppm CO2 or thereabouts.

Maybe that sets the perfect temperature range. Why don’t you tell us what we’re trying to achieve? I really wouldn’t mind knowing from someone who clearly has an opinion on this.

Serious question, by the way.

The high temperature at whatever time it was taken is not the high temperature for that day. Temperature records made and broken are an artifact of the study design (said with a large grain of salt). I wouldn’t be able to get through the door with a dissertation study design similar to what is currently in place to report record temps.

Leif, Roger,

Temperatures at a location are typically thought of being represented as a Gaussian distribution. Here is an empirical example:

http://folk.uib.no/ngbnk/kurs/notes/node28.html

The odds of a record high at any given station on any given day in a hundred year record are 1 in 100. It doesn’t make any difference how many stations you have. Does the total number of craps tables in Vegas affect your odds at any particular one of them? Of course not.

Matt,

Mann’s hockey stick is based on a 1,000 year stable climate – until you purchased an SUV.

Matt Bennett (18:31:03) : “Name one climatologist who (even in the last century) EVER thought that there was some kind of “perfect temperature” for earth (geared to which species??) and that the climate hadn’t been changing in perpetuity. Name one.”

Oh, I dunno – Mann, maybe? He still insists that Earth’s climate was near as dead flat as any experimentally measured process can possibly be – flat through the historically confirmed worldwide Roman and medieval warm periods, flat through the dark age that killed a sizable proportion of humanity through cold and crop failures and flat through the little ice age when they held winter events on the frozen Thames, until it suddenly started increasing recently. And taken in conjunction with the hysterical pronouncements from his camp about tree deaths and so on due to fractions of a degree of temperature change, I’ll agree the point was put snidely, but basically fair I think. To be a straw man, the argument of one’s opponent has to be put in a deliberately weak manner. The characterisation given in the article was derisory, but given that it is only the tenth part of the hysterics from the AGW crowd, it certainly is not deliberately weak. Furthermore, the argument that followed did not hinge upon any feature of the alleged straw man. The argument works perfectly well even if the climate does vary, and by quite a lot. So the straw man allegation fails.

A more serious problem seems to be the working out of probabilities. After two random measurements, is it indeed p=1/3 that a record high will next be recorded? I’d want a statistician to comment on that. Statistical distributions are funny things and a lot of ‘obvious’ properties aren’t properties at all.
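On the p = 1/3 question, here is a short sketch of the standard symmetry argument. It assumes the yearly readings $X_1, X_2, \dots$ for a given date are independent draws from one continuous distribution (no trend, no ties); those assumptions are mine, not the article's.

$$
P\bigl(X_3 > \max(X_1, X_2)\bigr) = \frac{1}{3},
\qquad
P\bigl(X_n > \max(X_1, \dots, X_{n-1})\bigr) = \frac{1}{n}
$$

Each of the $n$ readings is equally likely to be the largest, so the newest one is the record with probability $1/n$. Under these assumptions the result does not depend on the distribution being Gaussian; a trend breaks it.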

Matt Bennett (18:31:03) : “Erecting utterly disingenuous and ridiculous strawmen like that is par for the course here. Name one climatologist who (even in the last century) EVER thought that there was some kind of “perfect temperature.”

Matt, therein lies part of the issue — they won’t tell us what the goal is. There is no target or even a desired stasis. We are, right now, in roughly similar condition as existed in the early 1980s as measured by global average temps, ice extents, cyclonic energy, and all the other metrics.

One thing is for sure, they keep telling us that it is warmer now than ever in history or that the Arctic ice cap is melting faster than ever in history. The presumption is, therefore, the perfect temperature is something cooler than now, yet here we are cooler than more than a decade ago.

In January of 2000, the US Weather Service released a report that said 1999 was the warmest year since the organization had been keeping data. The media picked up the story and announced that 1999 was the warmest on record. Then-candidate Al Gore took it a bit further by saying 1999 was the warmest year in HISTORY! Mr. Gore’s statement is true only if his notion of history goes back only to the beginning of the data set he accepts — ironically one that began roughly 33 years after the establishment of the office he sought. As one who uses historical climate in my academic work, I know for an empirical fact that it was distinctly warmer than now during at least four extended periods since the development of an agrarian culture six thousand years ago (a better notion of history than Mr. Gore’s 1817 beginning of history).

The AGWers are not happy with the present condition or the cooling trend nor do they identify what their goal is. Why? Because it has NOTHING to do with the climate. The goal is, and always has been, the carbon tax. President Obama has $800 billion of carbon tax as a key element in his budgets going forward. Without that source of revenue, his most expensive agenda items would not have a chance.

Matt Bennett (18:31:03) :

“and the perfect unchanging climate (which our AGW friends imagine used to exist before they were born.)”

..and immediately, you’ve lost me already. Erecting utterly disingenuous and ridiculous strawmen like that is par for the course here.

Well, the implicit assumption behind a complaint that we have an anomaly in our present temperature is that there is a non-anomalous benchmark (often held out as some global average of a prior period of time). That sure sounds to me like an expectation of a perfected standard of climate that does not change …

If there is no benchmark standard, then there can be no anomaly, and we can all go home since there is no anomalously high temperature and no climate change…

Name one climatologist who (even in the last century) EVER thought that there was some kind of “perfect temperature” for earth

Hansen. He makes the anomaly maps, which by definition mean he has some non-anomaly state in mind as his benchmark. He regularly rants that we are too anomalous to the high side, which means he thinks we have a perfected temperature at zero anomaly. QED.

The IPCC. They state that we need to do lots of things to get the anomaly back down to zero; ergo they think it is the perfected temperature.

Repeat for any / all AGW advocates who think that the anomaly measurement is appropriate and that we need to do something to reduce it toward zero.

You are erecting and knocking down views that have never existed.

Nope. Presenting them with a bit of emotion and maybe a smidgeon of hyperbole, but relatively accurately presenting the notion behind the AGW anomaly rants. (That the anomaly means something relative to some non anomalous ideal). If the non anomalous ideal is variable, then please inform us what the formula / function looks like so we can incorporate this rubber ruler into our expectations…

How intelligent.

I thought so too.

“A wx stn can be made hotter than the “true” temp, but it is all but impossible to make it colder. Yes? ”

I don’t know, Clive. Plant a shade tree behind it and see what happens….

Steven Goddard (19:17:02) :

Temperatures at a location are typically thought of being represented as a Gaussian distribution.

Here is an empirical example:

http://folk.uib.no/ngbnk/kurs/notes/node28.html

If you divide the data into two halves [1st and 2nd], they do not have the same mean [some refer to that as Global Warming], so they are not drawn from the same distribution.

The odds of a record high at any given station on any given day in a hundred year record are 100/1.

Not at all. The odds are not constant with time. If there is a general trend up [or down], then the odds of a record high at a later time are higher than at an earlier time.

It doesn’t make any difference how many stations you have.

But this statement of yours does:

“This mean we would expect approximately 1000 stations X 365 days / 100 years = 3,650 high and 3,650 low temperature records to be set during the year – or about ten record highs per day and ten record lows per day.”

Steve,

I did the same thing several months ago with US temperatures only. What I found was that 2/3rds of record high temperatures were recorded before 1950. If I remember correctly, record lows were about 50/50 distributed over the 1st and second half of the 20th century. I used 1950 as a good round number as it (roughly) divided the temperature record equally and, I figured, CO2 levels would be higher in the latter half of the century. All things being equal, I’m certain that there were fewer recording stations in the first half of the century, (especially in western states).

My thought was that “global warming” would create higher highs and that a greater number of high temperature records would be broken post 1950 than before……that isn’t the case.

Many variables/factors left out, but still a neat exercise.

“And yet, it looks to me like more water vapor, the most important GHG, leads to lower high temperatures. What gives?”

I have often wondered myself if water vapor reflects energy from the sun even when clouds aren’t formed. I would love to have the time to find out, but that is just not possible for me right now. Anyone else know?

Bill Illis (17:17:20) :

“Robert (16:30:16) :re: crosspatch (16:12:01) :“No, it would not cause higher high temps because CO2 would also block inbound IR from the Sun during daytime.”

Not much inbound IR from the sun.”

But the extra CO2 would block the extra IR trapped/coming downward from other extra CO2 molecules from above.

Something the AGW community never talks about. How does the extra IR trapped by CO2 make it back to the surface with all those IR intercepting CO2 molecules in the way.

Most of the IR is coming upward from the earth, not downward from the sun, and it’s radiating day and night. As I understand it from other threads, most of the CO2 trapping occurs very near the surface, holding the heat down where the thermometers are.

Anthony,

Speaking of record temps :

Is there an update on the record cold in Edmonton, Canada from some weeks ago that you were going to get confirmation on? Was the record cold temperature really broken by -12 C degrees? I had read it was broken by -14 C degrees.

The story about Edmonton from here at WUWT :

http://wattsupwiththat.com/?s=edmonton

Ohioholic (19:49:07) :

“And yet, it looks to me like more water vapor, the most important GHG, leads to lower high temperatures. What gives?”

I have often wondered myself if water vapor reflects energy from the sun even when clouds aren’t formed. I would love to have the time to find out, but that is just not possible for me right now. Anyone else know?

Water vapor, before acquiring enough density to form visible clouds, absorbs and scatters photons from any photon stream striking it. Mie theory permits the estimation of photon scattering by almost all kinds and sizes of particles.

Ohioholic (19:49:07):

To tell the truth, scattering and reflectivity of photons by clouds are not well understood processes.

Comparing infoplease’s highest and lowest record tables, we have:

| Continent | Warmest Record | Coldest Record |
| --- | --- | --- |
| Africa | 1922 | 1935 |
| N America | 1913 | 1945 |
| Asia | 1942 | 1933 |
| Australia | 1881 | 1994 |
| Europe | 1881 | unknown |
| S America | 1905 | 1907 |
| Antarctica | 1974 | 1983 |

Asia seems to be the only continent known to have a coldest record earlier than a warmest record, but realize that the coldest Asian record was in Oimekon, Russia, whereas the warmest Asian record was in Israel!

Okay, I’m game. Let’s put this thing through the wringer.

I have records for my rural area back to 1894 (skipping 1895-1912)

That makes 94 years of record.

Going back 1/3 from 2006 to 1975 (31 yrs) I have 102 Maximum Lows.

That’s 102/365 = .279, far short of an AGW trend.

Same year stretch I have 123 Maximum Highs.

That’s 123/365 = .336, just right for Gaussian but no AGW Cigar.

Hey, this is fun.

Working the other way, from 1894 to 1943

I have 200 Maximum Highs.

That’s 200/365 = .548 . Whew, was it hot back then or is it just me?

I have 183 Maximum Lows.

That’s .501 . Oh man, the nights for 1912 to 1943 were balmy, blimey.

Sorry folks, no AGW here.

Sorry, Gore, that’s the way the CO2 bubbles.

Hansens is a soda, did you know that?

Oh, before I forget, 1895 to 1912 is missing from our records. Seems thieves hit main st. and stole all the thermometers in 1895.

I really do have 94 years of record.
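As a rough benchmark for those fractions, assume no trend and 94 interchangeable years of record (an idealization, not something the station data guarantees). Then the standing record for any calendar date is equally likely to have been set in any year, and the share expected to fall in a 31-year block is:

$$
\frac{31}{94} \approx 0.330
$$

Against that, the last 31 years hold $123/365 \approx 0.337$ of the Maximum Highs and $102/365 \approx 0.279$ of the Maximum Lows.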

Thanks for that post, Steve! You are rapidly becoming my second favorite climate guide (after Anthony, of course). Sorry about that, Al and Hansen.

The North American record high temperature record will eventually be broken….

….just as soon as another rusty trash-burning barrel or BBQ is placed close enough to a Stevenson screen.

Leif,

Please read the article more carefully before commenting. The article says:

The total number of craps tables affects the total number of winners, but it does not change the odds at any particular craps table. Likewise, the more stations you have, the more records will be set.

Work through this simple exercise.

The first year of the record, there is of course a 100% probability of setting the record high. The second year, there is a 50/50 probability. the third year, there is one chance out of three. etc. This is a defining characteristic of a Gaussian, as in the temperature distribution in Bergen.

http://folk.uib.no/ngbnk/kurs/notes/node28.html

Steven Goddard wrote: ” No continents have set a record high temperature since 1974. This is not even remotely consistent with claims that current temperatures are unusually high. Quite the contrary.”

And almost all of these all-time continental temperature maxima occurred WELL before urban heat islands could be blamed. In fact, these observations were taken way back in a time when observations had a great degree of sanity and were not located next to airport runways [as Anthony argues many times on here].

Great work, Steven.

Chris

Norfolk, VA, USA

Speaking about temperatures:

US apologizes for Global Warming!

http://www.climatedepot.com/a/486/US-apologizes-for-global-warming-Obama-administration-issues-mea-culpa-on-Americas-role-in-causing-climate-change

Idiots.

Pieter F (19:26:21) : “President Obama has $800 billion of carbon tax as a key element in his budgets going forward. “

A tax pure and simple, the budget tables list the anticipated “climate revenues” at $646 billion. Senior White House staff later revised that estimate upward, to a range of $1.3 trillion to $1.7 trillion in the first eight years.

Has anyone factored in all the badly sited weather stations and tried to make an adjustment for the UHI effect on those sites?

Such a map would probably be far different than those preferred by the warming alarmists.

In all fairness, I have to (very reluctantly, kicking and screaming) concede that if my statistics are right, UHI is accounted for reasonably in USHCN1.

Perhaps not in USHCN2, which method is shrouded in mystery and doubletalk.

I have the USHCN raw trend data for USHCN. I averaged all the sites they rate as “urban”. The 100-year trend for those sites averages around 0.5C higher than non-urban. 9% of total sites are urban. They apply an adjustment of around -0.05C/century. This seems about correct.
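The arithmetic behind that, sketched under the assumption that the adjustment is spread across the whole network rather than applied only to urban stations:

$$
0.09 \times 0.5\ ^{\circ}\mathrm{C/century} = 0.045\ ^{\circ}\mathrm{C/century} \approx 0.05\ ^{\circ}\mathrm{C/century}
$$

That is, 9% of sites running 0.5C per century warm would lift the all-station average by roughly the size of the stated adjustment.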

I grant NOAA the assumption that their adjustment applies to the final total of all stations and not merely urban stations. Granting this, I must concede USHCN1 is probably more-or-less correct.

Now, there may be a problem concerning what they consider to be urban. And there is the “exurbanization” factor to contend with. That might throw a wrench in. But presuming that their parameters are sound (and they may be), their adjustment is about right.

HOWEVER, as for “badly sited” stations . . . bad in a microsite sense, that is . . . well, that is a story for another day!

(He said with an air of great mystery and portent.)

My rural site is one of the better ones on Anthony’s scale, and the first 31 yrs of my 94 of record beat the crud out of the last 31 yrs.

Totally wipes the floor.

The only thing abnormal I see is the decision to close down most of the rural stations in favor of heat island ovens.

it ain’t C02, baby, it’s your concrete & asphalt jungle fever.

Developer diphtheria.

David LM (17:53:50) David, here in western Colorado, the higher the elevation, the colder it gets. The valley floors are always the warmest temps around. I assume this is due to the thinner atmosphere allowing more heat to escape. More IR in and more IR out on a daily basis, the thinner the air is.

Dan Lee (19:10:46) A similar effect to altitude, ie. the mass of the air. Less H2O, less mass and greater range of temperature day to night. One could also argue the proximity of a large body of water stabilizes temperature. An examination of the desert temperatures surrounding Lake Powell may be able to separate the two. Lake Powell has about the same amount of coastline as California.

Steven Goddard Interesting perspective, thank you.

Steven Goddard (20:22:24) :

Work through this simple exercise.

The first year of the record, there is of course a 100% probability of setting the record high. The second year, there is a 50/50 probability. the third year, there is one chance out of three. etc. This is a defining characteristic of a Gaussian

Work through this simple exercise:

Assume that halfway through the 100 years there is a dramatic climate change [e.g. like the Younger Dryas] where the temperature jumps 15 degrees, then that year has almost a 100% chance of setting a record high, the second year after that has a 50/50 chance, etc. Now, if there were jumps halfway to the 50 years and to the 75 years, the same will be true for them, so the odds of records depends very much on the trend. So, at the end, we are not down to 1/100 chance.

Rubbing the point in as hard as I possibly can:

Double-digit record setting high years: Sleepy No. CA town pop 3000

1918 – 10

1919 – 17

1920 – 12

1926 – 10

1929 – 23

1932 – 19

1936 – 16

1939 – 11

1986 – 10

1987 – 12

1988 – 14

1991 – 15

Score is Wildcats – 8, Gores – 4

EvanmJones,

sorry, their non-urban sites include quite a few that should be rated urban. Kinda screws up exercises like yours!!

Robert and Mike McMillan,

“Not much inbound IR from the sun.”

Absolutely incorrect. Please do a simple Google search and educate yourself.

Direct sunlight has a luminous efficacy of about 93 lumens per watt of radiant flux, which includes infrared (47% share of the spectrum), visible (46%), and ultra-violet (only 6%) light.

From: http://en.wikipedia.org/wiki/Infrared

The Earth radiates as a blackbody at about 280 K, the Sun at about 5800 K. The power in the Earth’s spectrum is shifted into the infrared due to its low temperature, the Sun’s into the visible due to its much higher temperature. Now look at the bottom chart on this page:

http://marine.rutgers.edu/mrs/education/class/josh/black_body.html

Notice the NOTE below it. Yes, you read it right. The Earth’s spectrum, to be seen on the chart in comparison to the Sun, is magnified 500,000 times!!!!! You may have seen similar comparison charts with the Earth’s spectrum as high as the Sun’s and a passing mention that it has been adjusted for comparison. Did you wonder by HOW MUCH??? Now you know!!

I am not sure where this myth came from, BUT, the sun outputs PLENTY of IR!!!! The fact that most of it is absorbed in the upper atmosphere probably helps continue the myth.

This site has an interesting graphic showing what wavelengths of the sun reaches the ground. Ever see this represented in the Energy Budget Cartoons?

http://www.windows.ucar.edu/tour/link=/earth/Atmosphere/earth_atmosph_radiation_budget.html

Didn’t think so. They are all WRONG!!!!!! Maybe not by a large amount, but, enough. Note: you’ll be doing yourself a favor if you ignore the Greenhouse propaganda printed on this page. They still believed a real Greenhouse works because it “traps” IR!!!!

The real question I have, that hasn’t been answered, is how is this downwelling IR handled by the so-called reradiation physics of so-called GreenHouse gasses. Accepting that little IR from the sun directly reaches the surface, it would STILL get down by re-radiation just like the AGW crowd claims the Upwelling IR eventually makes it out to space after a delay in re-radiation!!!!! So, exactly how accurate are those cartoons that don’t even mention Sun downwelling IR and makes a big deal about all the Upwelling Earth IR that takes a LOOOOOONG time to get out????

As I said, they are CARTOONS!!!! I wonder if the Models have it???

Hey, any of you smart guys have a REAL answer??

Wow. This trivial math isn’t in the sourcebook already for local meteorologists?

Mike McMillan and Bill Illis

See my comment at 19:01:39

The solar radiation spectrum is shown here: http://physweb.bgu.ac.il/COURSES/Astronomy1/Graphics/solar_spectrum.png

Not everyone sees the same way so there might be a slight variation on the divisions, but about 45 -51% of the area under the curve is to the right of the visible bound, that is, in the IR range. The following site claims 51%.

http://home.wanadoo.nl/paulschils/03.04.html

WIKIPEDIA has a page – scroll to Composition

http://en.wikipedia.org/wiki/Sunlight

Steve,

I must be missing something – are you implying a connection between the all-time continent highs and the chances of a record at an individual station? Or were the continental records intended as an interesting coda?

Ron de Haan (20:30:44) :

Speaking about temperatures:

US apologizes for Global Warming!

http://www.climatedepot.com/a/486/US-apologizes-for-global-warming-Obama-administration-issues-mea-culpa-on-Americas-role-in-causing-climate-change

Idiots.

If you take responsibility for causing a non-problem, then do you get to pay non-penalties?

Who is Obama’s audience for this? Who is he trying to impress or win over? or is this just a general view he has that the US is a source of bad things in the world. He just doesn’t seem to be very proud of his nation.

Leif,

I made it quite clear in the article how a trend affects the probability. You aren’t saying anything which contradicts that.

I wrote a simple C++ program for calculating Gaussian probabilities.

———————-

#include

main(int argc, char** argv)

{

int iterations = 10000;

int winners = 0;

for (int j = 0; j < iterations; j++)

{

int maximum = 0;

int years = atoi(argv[1]);

for (int i = 0; i maximum)

{

winners++;

}

}

float probability = float(winners) / float(iterations);

std::cout << “Average probability = ” << probability << std::endl;

}

—————————

After 100 years, the probability is 0.01

./gaussian.exe 100

Average probability = 0.0106

“In a cooling climate, we would expect to see more than 10 record lows per day, and fewer than 10 record highs per day.”

Steve, I am afraid this is quite oversimplifying: we could be in a cooling situation and yet, because of strong high pressure centers reach very high temperatures in summer (think Australia’s latest summer…) or very cold lows in winter, absence of clouds…

Sorry, the WordPress html processor mangled the C++ program. It won’t take greater than or less than symbols.

One more try-

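    #include <algorithm>
    #include <cstdlib>
    #include <iostream>

    int main(int argc, char** argv)
    {
        if (argc < 2) return 1;  // usage: gaussian <years>
        int iterations = 10000;
        int winners = 0;
        int years = atoi(argv[1]);
        for (int j = 0; j < iterations; j++)
        {
            int maximum = 0;
            // WordPress ate everything between the less-than and greater-than
            // signs here. What follows is a reconstruction (an assumption):
            // find the highest of the earlier years, then test the final year.
            for (int i = 0; i < years - 1; i++)
            {
                maximum = std::max(maximum, rand());
            }
            if (rand() > maximum)
            {
                winners++;
            }
        }
        float probability = float(winners) / float(iterations);
        std::cout << "Average probability = " << probability << std::endl;
    }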

kuhnkat (21:09:10) “The fact that most of it is absorbed in the upper atmosphere probably helps continue the myth.”

I don’t think that is true either! The main thing seems to be that Earth’s IR peak is beyond the bound that most folks use to cut off their solar spectrum diagrams. So, out of sight, out of mind.

This one shows more information, especially the notion that Earth’s IR peaks between 8 – 10 µm while the Solar IR is at a peak near the Visible, say 0.7 and diminishes much by 2.5 to 3.0 µm.

This one has “top of Atmosphere” and “radiation at sea level” so shows at what wavelengths solar IR is taken out and where it reaches to sea level.

http://physweb.bgu.ac.il/COURSES/Astronomy1/Graphics/solar_spectrum.png

Phil,

You state: ” I would further assume that the perfect climate for the AGWers is that which occurs at 285 ppm CO2 or thereabouts.”

Since you’ve asked honestly, I’ll answer in good faith as best I can. At the same time I will attempt to address the misunderstanding that others below your post seem to have reached (Ron, Pieter, E M Smith et al)

Your statement is not true at all. There is no such thing as ‘perfect climate’ – just think how absurd it would be to assume anyone thinks there is such a thing. Besides the fact that you have hugely varying climates existing simultaneously on the planet at any one time (at various latitudes, altitudes, depths and even those between day and night), which species are we defining it be the “perfect climate” for?? That’s why people who THINK that that is what climatologists think find it so easy to “knock down” the argument – the truth is, it’s never existed in the first place. Do you see the stupidity of it? The climate changes, is changing and always has changed.

HOWEVER, the climate’s various changes happen at different times, on different scales and in response to different (sometimes multiple) forcings. Climatologists have long known this. The ‘anomaly’ your friends here are so intent on homing in on (whether it’s Mann’s graph or any other expression of change in a variable) is simply the difference between the ‘now’ and a chosen baseline or average over a given time (generally represented by where/when we have sufficient data to know that our trendline amounts to more than random noise in the system). It is utter rubbish to assert that Mann maintains there has never been change above or below average, or that the MWP didn’t exist in Europe, or that the LIA is false. He has never asserted that the climate was utterly stable, unchanging or ideal. What IS apparent after a careful look at his excellent and exhaustive reconstruction, using the best proxies available, is that right now we are way above the range within which these perturbations have been fluctuating, at least over the period he’s reconstructed. This is simply fact. It is accepted by all thinking climate researchers. Despite what you might have heard or read, the Mann graph stands firmly accepted as one of the most carefully compiled and studied products of science to date.

It does not mean that the climate has not changed much more dramatically in the past, however, and all climatologists accept this without question. Research the Vostok Ice Core data and look at the pattern driven largely by our orbital cycles with a CO2 feedback and you’ll see what I mean. It’s a case of which particular forcing(s) is dominating at any given time. The 285ppm figure that you cite is simply a rough guide to where we are ‘supposed to be’ at about now in the cycle of ice ages/interglacials. That’s all. If you look carefully at the Vostok graphs, CO2 varies cyclically between about 180ppm during the depths of an ice age up to 290ppm during the height of an interglacial. We are clearly way out of ‘normal territory’ at 390ppm today (this has not been seen for millions of years) and we have gotten to this point over decades, rather than the normal ‘thousands of years’ it would take – which is still considered rapid, geologically, by the way.

So there’s no perfect temperature, scientists are just comparing incoming data to the average of the various data sets we have and expressing how much change (pos or neg) has occurred – NOT stating that any given temp is ‘ideal’. Do you see what I mean?

Cheers,

Matt

Ohioholic (19:49:07) : “I have often wondered myself if water vapor reflects energy from the sun even when clouds aren’t formed. I would love to have the time to find out, but that is just not possible for me right now. Anyone else know?”

Between 0.75 and 3.5 microns, there are 6 absorption lines in the water vapor spectrum. They are fairly thin, but perhaps through the magic of “broadening” as happens with CO2 (according to some), they will become almost contiguous, thus blocking a significant amount of the incoming energy, maybe 30%. There’s still a lot left between 0.14 and 0.8 microns.

Steven Goddard (21:53:38) :

I made it quite clear in the article how a trend affects the probability. You aren’t saying anything which contradicts that.

I’m saying that with a trend the chance is not 1/100 after the 100th year. Did you work through the simple exercise?

And what about the cold ones?

When were they set??

Actually, what is observed is that the whole distribution is shifted to higher temperatures, i.e. the maximum of the probability density function corresponds to higher and higher temperatures, but fluctuations around it still exist

http://www.cdc.noaa.gov/csi/images/GRL2009_ClimateWarming.pdf

.

evanmjones (20:31:31) :

Mr. evanmjones,

I’m still anxious to see that co2 1940’s thread you talked about making a couple weeks ago.

[REPLY- Haven’t forgotten. Looking for data. The graph I found shows fossil fuel consumption up c. 50% from 1940 – 1944 in spite of all the war damage. But I need solid numbers. (I also need to find out how much CO2 incinerating a city releases.) ~ Evan]

Ron House: “To be a straw man, the argument of one’s opponent has to be put in a deliberately weak manner.”

Well, the imputed argument was: “…the perfect unchanging climate (which our AGW friends imagine used to exist before they were born.)”

I don’t know of any climate scientist who claims a “perfect unchanging climate”. Some climate scientists may claim that climate has been relatively stable in the recent past, but that’s not the same as a “perfect unchanging climate”. So, yes, a straw man is being beaten around.

There is no such thing as a “perfect” or “ideal” climate. The earth’s climate may be more or less hostile to human survival and comfort, but that has little to do with perfection. We may also prefer some climates over others, but preference doesn’t imply perfection.

What does matter for humans is that the climate remains not too far from the range of the past 10,000 years, the period during which human civilisation developed, since our way of life is dependent on that range.

sorry, their non-urban sites include quite a few that should be rated urban. Kinda screws up exercises like yours!!

In case you didn’t notice, I pointed out that possibility. Besides, if that were true, the trend increase would average lower and UHI would be that much lower as well. In fact, as the urban % increased and the trend dropped, the adjustment would remain correct (stipulating that it is correct in the first place, which it may be).

The fact is that sites rated as urban warmed 0.5C/century faster than those rated non-urban. 9% of sites are rated as urban. The Adjustment is 0.05C. If this is wrong someone has to tell me why. I know the difference in offset is outrageous. But we are not talking offset, we are talking trend.

Besides, raw data from suburban sites shows even less warming than rural sites. Strange but true. Sites rated as urban show a lot more warming.

FYI, the overall raw trend for the US, weighting all stations equally, is +0.14C per century.

I will discuss microsite issues at a later time, but for now I must defer.

The Australian record of 128 F (53.1 C) at Cloncurry, Queensland in 1889 is not officially recognised anymore. I believe that it was found to be inconsistent with other temperatures recorded in the region on that day, and the quality of recording equipment was dubious (improvised screen made from a beer crate; Anthony would not be impressed). The official record is now 123 F (50.7 C) at Oodnadatta in South Australia in 1960.

It’s worth noting that, over many stations over many years, the chances of an all-time record being due to faulty equipment or a misread are pretty high.

SL,

Yes, but no. If the temperature is reported to 0.1C, then 22.44C is reported as 22.4C and 22.45C is reported as 22.5C. So a new high temperature a full 0.1C higher than the previous record high is not required to get a new instrument high (replace the argument with one using 5 significant figures and you can show the same with ever smaller differentials, no matter what the actual ability of a measurement to be made is; the digital discretization of the data is pretty much irrelevant to this argument). And since instruments drift randomly and are replaced somewhat randomly, with arbitrarily small offsets (assuming they are calibrated correctly) in a random direction from the calibration of the instrument they are replacing, new record highs or lows should still be occurring statistically as frequently as modelled by the article (but we’d have to take account of the UHI, actual number of stations, reading frequencies, etc.).

David LM (17:53:50) David, here in western Colorado, the higher the elevation, the colder it gets. The valley floors are always the warmest temps around. I assume this is due to the thinner atmosphere allowing more heat to escape. More IR in and more IR out on a daily basis, the thinner the air is.

Now, would that same formula work for an outer atmosphere found by probes to have shrunken 30% ?? i.e. – is the heat escaping?

[REPLY- Haven’t forgotten. Looking for data. The graph I found shows fossil fuel consumption up c. 50% from 1940 – 1944 in spite of all the war damage. But I need solid numbers. (I also need to find out how much CO2 incinerating a city releases.)

Scorched Earth, burned war materials, exploded ordnance in addition to the consumed construction materials, much of which had accumulated over centuries. Wow, that is a tough assignment.

Matt Bennett (18:52:20) :

Ed says: “Nature is the norm…”

Now I don’t think anyone doubts that at all. The point is whether precautionary action should be taken to avoid terrible effects.

OK, if you’re so in favour of precautionary action how about this. I think most scientists would agree that there is a high probability that we are not the only intelligent civilisation in the universe, in fact there are probably many. Dr Drake of the Drake equation estimates about 10,000 for example. Assuming there are 10,000 then there is a good chance that some of these are hostile, more advanced, and could at this very moment be monitoring our radio transmissions!

Obviously the consequences of a more advanced hostile alien race attacking the earth would be far more serious than even AGW, and so I’m sure you’ll agree that we should:

a) Stop all radio transmissions immediately

b) Massively increase military spending in order to prepare ourselves for the attack (just in case).

sorry, but this math is completely bogus. the idea that 10 stations would report a maximum on a day, and 10 stations report a minimum in one country is absurd. this would actually be a sign of a massive problem with the surface station network.

beyond that, the science isn’t really based on those daily records at any station.

instead we average stations and days over a year and find a remarkable result:

http://www.metoffice.gov.uk/corporate/pressoffice/2008/images/g_r_ranked_hadCRUT3_lg.gif

the science is sound.

This may have been mentioned before (penalty for coming late to an active discussion), but:

The debate is all about anthropogenic global warming – that is, man-made global warming – due to mankind’s output of green-house gases.

Well if such a thing is true, then the total amount of additional green-house gases must be produced by the total world population. This implies that we each have an average green-house gas footprint.

Given that the world population doubles roughly every 60 years, we would need to halve the average green-house gas footprint every 60 years just to maintain the status quo. That looks like a no-win game to me.
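The arithmetic behind that claim can be sketched as follows, assuming total emissions are simply population times average footprint and that the 60-year doubling time holds (the function name is made up for illustration):

```python
def footprint_factor(years: float, doubling_time: float = 60.0) -> float:
    """Multiplier on the average footprint that keeps total emissions constant."""
    population_growth = 2.0 ** (years / doubling_time)
    return 1.0 / population_growth

for years in (30, 60, 120):
    # e.g. after 60 years the population has doubled, so the factor is 0.5
    print(years, round(footprint_factor(years), 3))
```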

Why is everybody focussed on trying to change the climate side of the equation?

Surely decreasing the size of the global population over the same time period (3 generations) would be easier, and have a more lasting effect. Or is that a taboo that we are not ready to face quite yet?

A nice picture of the station where the North American 1913 record was taken. Too bad they all can’t be of this quality.

http://docs.lib.noaa.gov/rescue/mwr/050/mwr-050-01-0010.pdf (Figure 1)

No climatologists believed that the climate was stable before the invention of the automobile – other than the IPCC.

http://en.wikipedia.org/wiki/File:Hockey_stick_chart_ipcc_large.jpg

Interesting how the AGW crew wants to have it both ways. Stable when convenient, and then deny it when convenient.

If one only looks at the warming trend of one short period and ignores the fact that this is only part of an ongoing and alternating long-term cool/warm cycle, then the so-called record global warming can be made to look abnormal or alarming. Taken in the context of a longer period, the warming is just another warming cycle of many such events.

LEAST SQUARE TREND LINES PER DECADE FOR VARIOUS PAST WARM AND COOL PERIODS

[Per HADCRUT3vgl]

1900-1926 0.048 C COOL [AMO –VE, PDO –VE &+VE]

1926-1944 0.187 C WARM [AMO & PDO POSITIVE]

1964-1976 0.108 C COOL [AMO& PDO NEGATIVE]

1994 -2008 0.187 C WARM [AMO & PDO POSITIVE]

Notice that the period 1926-1944 had the same rate of warming as 1994-2008. Periods of global warming existed well before 1976-2008.

1900 -2009 0.073 C PAST CENTURY [equivalent of 0.73 C/century]

2002 -2009 -0.195 C LATEST COOL [PDO –VE SEPT/07, AMO –VE JAN/09]
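For anyone who wants to check figures like these, here is a rough sketch of how a least-squares trend per decade is computed from annual anomalies. The anomaly series below is synthetic and made up for illustration; it is not HadCRUT3 data, and `trend_per_decade` is a hypothetical helper name.

```python
def trend_per_decade(years, anomalies):
    """Ordinary least-squares slope of anomaly vs. year, scaled to degrees C per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return 10.0 * sxy / sxx

# Synthetic series rising by exactly 0.02 C per year (assumption, not real data).
years = list(range(1994, 2009))
anomalies = [0.20 + 0.02 * (y - 1994) for y in years]

print(round(trend_per_decade(years, anomalies), 3))
```

Note that the slope is sensitive to the choice of start and end years, which is why the periods in the list matter so much.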

What made the most recent warming [1994-2007] more significant was that some of the AMO and PDO levels were higher than usual [third highest after 1878].

Another observation is that most of the recent warming was really in the period 1994-2007 and not 1976-2008. So the real warming lasted 13 years, a very short period indeed and not a climate trend or long-term trend at all. It is amazing how 13 years got blown out of all proportion by the AGW science and misrepresented as an alarming and unprecedented trend, when it was really another warm hiccup of this planet, where regularly alternating cool and warm hiccups are par for the course.

Matt Bennett (22:30:03) :

“What IS apparent after a careful look at his excellent and exhaustive reconstruction, using the best proxies available, is that right now we are way above the range within which these perturbations have been fluctuating, at least over the period he’s reconstructed. This is simply fact. ”

This “fact” depends on the choice of “the period he’s reconstructed”. But does this “fact” hold up everywhere else? I also notice the disclaimer “using the best proxies available”. A lot depends on that.

Brendan H,

Your point is correct, but I think you’re also interpreting an ambiguous word usage by Anthony in a way it need not be.

First of all, Anthony was obviously making a hyperbolic argument to reinforce his point. He doesn’t believe anyone believes that, only that, given the way the data is presented to the general public, it would be virtually impossible for the public to not draw that conclusion. Much like the “North Pole will be ice-free this year (gasp!)” statements. It doesn’t matter whether the North Pole has or hasn’t been so in the past, one virtually forces a general audience to draw the conclusion desired even though the data doesn’t support it.

Second – within the recognized flexibility of the English language, Anthony could just as easily have meant, “perfect[ly] unchanging climate.” Again, nobody actually believes that, but it would be EXTREMELY difficult for people, for instance, watching Al Gore’s movie and looking at his presentation of the Mann Hockey Stick to not draw that conclusion.

THAT is the point that Anthony, I think, was trying to make.

I neglected to add that 10 of the highest global temperature anomalies also took place in the period 1994-2008, when AMO and PDO were both warm or positive and at higher than normal levels during some years. As both are now negative and anticipated to be so for some time, it is not surprising that we are having cooler weather like we had in the 1970s. CO2 is tracking completely opposite of this cooling.

“What does matter for humans is that the climate remains not too far from the range of the past 10,000 years, the period during which human civilisation developed, since our way of life is dependent on that range.”

Our way of life would be shattered by a two degree departure from the mean???

Of course, I’ll have to agree that we certainly benefited from the 8 degree rise in temperature from 20,000 years ago. http://www.geocraft.com/WVFossils/last_50k_yrs.html

Steve Keohane (20:49:16) :

Are the valleys warmer at night?

In general higher elevations are cooler during the day, thanks to convection and the adiabatic lapse rate (about 1°F per 200 feet). At night radiational cooling cools air near the surface which flows down hill and chills the valley. The temperature inversion thus formed can be quite thin (less than 100 feet) and scours away quickly in the morning with the first breeze.

OTOH, on a bicycle tour through the searing (2003) eastern Oregon & Idaho summer there were a couple canyons where the valley suffered radiant heat stored in the basalt canyon walls during the day – we baked all night long.

Brendan H (00:32:52) :

“Ron House: “To be a straw man, the argument of one’s opponent has to be put in a deliberately weak manner.” Well, the imputed argument was: “…the perfect unchanging climate (which our AGW friends imagine used to exist before they were born.)” I don’t know of any climate scientist who claims a “perfect unchanging climate”. Some climate scientists may claim that climate has been relatively stable in the recent past, but that’s not the same as a “perfect unchanging climate”. So, yes, a straw man is being beaten around.”

You carefully didn’t quote the part of my reply where I pointed out that the argument made did not rely on any part of this “perfect unchanging climate” snipe. Yes, that remark by the OP was snide, but no, the argument made did NOT rely on the “perfect unchanging” part of it. Indeed, even with a heck of a lot of variation in the past, as long as the current variation is out of the previous range, statistically more record maxima should be getting set, and they are not. So there is NO straw man argument here, just AGW alarmists pouncing on an irrelevancy (which was obviously intended to be nothing but a facetious wisecrack, as you surely well know) and trying to misdirect people’s attention with it whilst ignoring the actual logical content of the OP’s argument. Deal with the actual argument, please – if you can.

Leif Svalgaard (20:54:24) :

Steven Goddard (20:22:24) :

Work through this simple exercise.

The first year of the record, there is of course a 100% probability of setting the record high. The second year, there is a 50/50 probability. The third year, there is one chance out of three, etc. This is a defining characteristic of a Gaussian.

Work through this simple exercise:

Assume that halfway through the 100 years there is a dramatic climate change [e.g. like the Younger Dryas] where the temperature jumps 15 degrees, then that year has almost a 100% chance of setting a record high, the second year after that has a 50/50 chance, etc. Now, if there were jumps halfway to the 50 years and to the 75 years, the same will be true for them, so the odds of records depends very much on the trend. So, at the end, we are not down to 1/100 chance.

Therefore, your point is an underlying increasing trend will raise the probability of record highs in later years, which we don’t see, yes? Seems to me this adds credibility to SG’s thesis.
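The exercise above can be tried numerically. This is only a sketch under stated assumptions: each year is drawn as independent Gaussian noise with unit standard deviation, the "climate change" is a single 15-degree step at year 50, and the function name, trial count and seed are arbitrary choices for illustration.

```python
import random

def final_year_record_rate(n_years=100, jump_year=None, jump_size=0.0,
                           trials=5000, seed=1):
    """Estimate how often the last year of a series sets a new record high."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        series = []
        for year in range(n_years):
            value = rng.gauss(0.0, 1.0)          # stable climate: i.i.d. noise
            if jump_year is not None and year >= jump_year:
                value += jump_size               # step change in the mean
            series.append(value)
        if series[-1] > max(series[:-1]):
            hits += 1
    return hits / trials

stable = final_year_record_rate()
jumped = final_year_record_rate(jump_year=50, jump_size=15.0)
print(f"stable: {stable:.4f}  (theory: 1/100 = 0.0100)")
print(f"jump at year 50: {jumped:.4f}  (theory: about 1/50 = 0.0200)")
```

After a large enough jump, only the years since the jump compete for the record, so the odds in year 100 are roughly 1/50 rather than 1/100. A gradual trend would need a different model; the step is used here because it is the case described in the exercise.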

Matt Bennett (22:30:03):

Mann’s reconstruction is far from “excellent and exhaustive” from what I’ve seen. A simple search of Climate Audit for Mann will show more than enough examples of questionable practices to cast his entire body of work into doubt.

no sod,

The math is both correct and basic statistics. This is exactly how the temperature distribution would behave in a stable climate. If you have a disagreement about the math – cite something specific. The word “bogus” is not a mathematical proof.

Those here who believe that pedantic arguments about semantics strengthen the case for AGW are deluding themselves. All it indicates is their complete lack of skill at interpreting rhetorical techniques – like sarcasm.
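For reference, the counts in the article follow from a 1/n chance of a record in year n at each station on each date. A short sketch, assuming 1000 stations, 365 dates and a stable climate with independent years (the function name is made up for illustration):

```python
def expected_records(year_n: int, stations: int = 1000, days: int = 365) -> float:
    """Expected number of new record highs (or lows) set during year n of operation."""
    return stations * days / year_n

for n in (1, 2, 3, 100):
    per_year = expected_records(n)
    print(f"year {n}: {per_year:.0f} records, {per_year / 365:.1f} per day")
```

For year 100 this gives 3,650 record highs for the year, or ten per day, matching the article's figure.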

crosspatch (16:12:01) :

No, it would not cause higher high temps because CO2 would also block inbound IR from the Sun during daytime. It would act to moderate daytime high temps but it would act to prevent radiative cooling. As a result, the atmosphere should heat up in the mid troposphere. It would get heated from the sun in the daytime and from surface radiation at night … but that isn’t happening according to the observations so we can just can the whole silly notion.

You are correct, sir — that’s what is supposed to be happening if AGW from greenhouse gas emissions is occurring. The highs don’t get higher, the lows get warmer.

Yet another inconvenient fact.

I agree with Leif. The variables used are not independent, so the author’s entire argument fails. Also, why does the article have pictures of Gaussian distribution when the type of distribution makes no difference to the author’s argument?

Scott B (06:46:43) :

Matt Bennett (22:30:03):

Mann’s reconstruction is far from “excellent and exhaustive” from what I’ve seen. A simple search of Climate Audit for Mann will show more than enough examples of questionable practices to cast his entire body of work into doubt.

Amen to that. Matt, if you have always accepted Mann’s work at face value, try starting here http://www.climateaudit.org/?p=2322 where McIntyre talks about the official reports on the subject.

Steve,

As Leif Svalgaard and one or two others have pointed out, your method is seriously overstating the likelihood of record events in a day. As Leif pointed out upthread, daily temp readings are not independent events, but your analysis assumes that they are. Temp readings on successive days aren’t properly Gaussian.