11:19 AM 08/03/2017

A new study using an expensive climate supercomputer to predict the risk of record-breaking rainfall in southeast England is no better than “using a piece of paper,” according to critics.

“The Met Office’s model-based rainfall forecasts have not stood up to empirical tests and do not seem to give better advice than observational records,” Dr. David Whitehouse argued in a video put together by the Global Warming Policy Forum.

Whitehouse, a former BBC science editor, criticized a July 2017 Met Office study that claimed a one-in-three of parts of England and Wales see record rainfall each winter, largely due to man-made climate change.

Using its $127 million supercomputer, the Met Office found in “south east England there is a 7 percent chance of exceeding the current rainfall record in at least one month in any given winter” and “a 34 percent chance of breaking a regional record somewhere each winter” when other parts of Britain were considered.

“We have used the new Met Office supercomputer to run many simulations of the climate, using a global climate model,” Met Office scientist Vikki Thompson said of the study.

The Met Office commissioned the study in response to a series of devastating floods that ravaged Britain during the 2013-2014 winter. Heavy winter rains caused $1.3 billion in damage in the Thames River Valley.

Scientists said supercomputer modeling could have predicted the flooding. Thompson said the supercomputer “simulations provided one hundred times more data than is available from observed records.”

But Whitehouse said the supercomputer’s models did “not give any better information than what could be obtained using a piece of paper.”

Using observational records, Whitehouse argued the 7 percent “chance of a month between October and March exceeding the record for that month in any year is equivalent to a new record being set every 86 months.”

“New monthly records were set twice in the 216 October-March months between 1980 and 2015,” he said. “Therefore the ‘risk’ of a new record for monthly rainfall is 5.5% per year, according to the record.”
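The arithmetic behind the 86-month and 5.5 percent figures can be checked in a few lines. This is an illustrative sketch, not Whitehouse's own calculation: it assumes six October-March months per winter and treats 216 such months as 36 winters, and the function names are hypothetical.

```python
MONTHS_PER_WINTER = 6  # October to March, as described in the article


def months_between_records(chance_per_winter):
    """Average number of winter months between records for a given per-winter chance."""
    winters_between = 1.0 / chance_per_winter
    return winters_between * MONTHS_PER_WINTER


def records_per_winter(n_records, n_months):
    """Observed records per winter, given a count over n_months winter months."""
    winters = n_months / MONTHS_PER_WINTER
    return n_records / winters


print(round(months_between_records(0.07)))          # 86
print(round(100 * records_per_winter(2, 216), 1))   # 5.6
```

Two records in 36 winters works out to roughly 5.6 percent per winter, which the quote gives as 5.5 percent. The quote does not spell out its method, so the same count applied to other periods need not reproduce the quoted percentages exactly.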

“Between 1944 and 1979, there were three new record monthly rainfalls – an 8.7 per cent chance of any month in a year exceeding the existing record,” Whitehouse continued, adding that “between 1908 and 1943, there were 4 record events – a risk of 14.5%.”

“The risk of monthly rainfall exceeding the monthly record in the Southeast of England has not risen, contrary to many claims,” he argued based on the observational data, adding the “Met Office computer models do not give any more reliable insight than the historical data.”


Can it play tic tac toe?

Just put in the answer you want, wait and wallah it will give you the answer you want via 10 billion phony calculations. Isn’t the state of science just grand!

“wallah” ?? You mean “voila”…

If you program a super-duper computer that costs 130 million USD with the politically established UNFCCC, the result will still be the politically established UNFCCC.

Shirley you jest, Grant. It’s “Viola.”

Do you want to play a game (War Games)

It’s entertaining to watch postmodern neo-Marxists, who believe nothing is truth, claim that their policy-based science (actually a part of the new Marxism) is truth.

Pong or Missile Command?

Garbage in Garbage out. 127 Millions worth. 🙂

Roger

Why people believe more computer power will give you better answers is a mystery.

“Why people believe more computer power will give you better answers is a mystery.”

No mystery. IF YOU KNOW WHAT YOU ARE DOING, there are situations where you can use computing power to overpower problems that are otherwise intractable. For example, in the early days of military satellites, the USAF used programs based on solving the equations for an ellipse to predict satellite positions. But as their mission requirements became more demanding, the equations got out of hand. They switched to step-by-step numerical integration. The latter requires more computing power. But it scales far more easily.

And the numerical integration software was easily validated. If it predicted that a particular satellite would rise above the horizon at Kodiak Island at 0534Z at an azimuth of 343 degrees, successful acquisition of the satellite at 0534Z went a long way toward validating the software. (In practice, they did run a lot of additional tests.)
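A toy sketch of the step-by-step approach described above (not the USAF software, whose details are not given here): it integrates a two-body orbit with a velocity-Verlet scheme in units where GM = 1, then validates against a case with a known answer. A circular orbit of radius 1 should return to its starting point after one period of 2π. All names, units and step sizes are illustrative assumptions.

```python
import math


def acceleration(x, y, gm=1.0):
    """Inverse-square gravitational acceleration toward the origin."""
    r3 = (x * x + y * y) ** 1.5
    return -gm * x / r3, -gm * y / r3


def integrate_orbit(x, y, vx, vy, dt, steps):
    """Advance position and velocity with velocity-Verlet steps."""
    ax, ay = acceleration(x, y)
    for _ in range(steps):
        # Half-step velocity, full-step position, then finish the velocity update.
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        ax, ay = acceleration(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
    return x, y, vx, vy


steps = 10_000
dt = 2 * math.pi / steps  # one full period of the unit circular orbit
x, y, vx, vy = integrate_orbit(1.0, 0.0, 0.0, 1.0, dt, steps)
miss = math.hypot(x - 1.0, y - 0.0)
print(f"miss distance after one orbit: {miss:.2e}")
```

The point of the check is the one the comment makes: a prediction that can be compared with an independent, known outcome is what makes the software testable.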

The problem in climate modeling is that there is no evidence whatsoever that the modelers know what they are doing. The models are basically unvalidated. It’s all faith based.

Getting an answer quicker doesn’t make it a better answer.

The leading climate models did not agree with each other 30 years ago and have not converged. This is because the modeling groups use different parameterizations for clouds and water vapour, the dominant greenhouse gas.

There is a link between solar activity, clouds and climate that the models ignore. (See Nir Shaviv’s lectures on Youtube and his published papers.) The IPCC rightly discounts TSI (solar irradiance) but ignores

So, if the models omit the Sun, we should expect them to fail to reflect reality.

Why is this so difficult for everyone?

There is no known way to uniquely solve a system of partial differential equations. It’s not a matter of computational power; the solution doesn’t exist.

You can throw all the gigaflops and teraflops at it you want; the problem can’t be solved using existing mathematics.

You could reduce the grid size, but obviously if your model is fundamentally flawed you will just get more of the same flawed output, possibly just quicker. Interesting that a ‘scientist’ doesn’t know that a model does not produce data, but then she does work for the Met Office.

I think you actually have to write a computer program to realize just how DUMB computers are. People who have never programmed a computer just don’t realize that it will do just what you tell it to do, nothing more, nothing less. It can’t think for itself, it can’t make independent judgements, it can only spew out what you told it to spew out.

No better than just following historical averages? For a climate computer model, that would be very good performance./sarc

Can it sing “Daisy, Daisy” like HAL did in the movie, “2001: A Space Odyssey?”

No. Unless you load an MP3 of the song. Garbage needs to be programmed in before it can be output.

HAL was not connected to the interweb then, so now no need for programming, just google it, it’s all there! Or ask Siri! LOL

As the Hon C Monckton might say, ‘this is just argument from authority (of a bloody great big computer)!’

The producers from CSI Miami rent it to play their “scientific” laboratory animations and therefore find its accuracy very useful.

They’re using two different definitions of data in one statement. That’s wrong to the point of dishonesty.

Measurements can provide data in the computing sense, but simulations can’t produce data in the climate science sense. That’s “cloud castling.” You can’t use assumptions as evidence for future assumptions.

Well, not honestly.

I actually think many scientists and the horde of pseudo-scientists that infest Climate Science do not understand the difference. It’s not dishonesty so much as plain ignorance and a belief in the conclusion, which means the “right” answer must mean the “right” assumptions.

The main weakness in rainfall forecasting is the parameters used in the model. In the majority of cases, these parameters rarely relate to rainfall. This holds even to date for the long-range rainfall forecasts in India, both by IMD and by other scientific institutes and private forecasters. When I was in IMD/Pune we used to prepare the forecast based on the parameters defined by the then British IMD chief. The best regression parameters were used in the regression equation. Most of the computations were made with a very cumbersome hand-driven number meter, not a calculator. At that time IMD had no computer and all the work was carried out with manual calculations [thus no data manipulation, as these were carried out by lower-rank assistants]. Now in India sophisticated computers are used in forecasting. The forecasts rarely predict rainfall above 110% or below 90% of average rainfall; the averages vary with institutions as they use different data sets.

We used to have a forecaster [daily weather] who used to give very accurate predictions based on ground-based data maps. With sophisticated computers and satellite data, forecasting has not improved much, except for the movement of cyclones at sea.

Dr. S. Jeevananda Reddy

In my book “Dry-land Agriculture in India: An Agrometeorological and Agroclimatological Perspective”, BS Publications, Hyderabad, India, 2002, 429p, on page 126:

“It appears that sometimes the low tech is more powerful over the high tech. The 1998 Hurricane George in Central America can be cited as an example, where it was proved that human experience is more vital than the sophisticated computer estimates using ultra-modern data sets. Hurricane George raged through the Gulf of Mexico and lumbered toward New Orleans/USA after ravaging the Caribbean and Florida/USA. There, local television meteorologists stood in front of computerized screens and grimly echoed the National Hurricane Center Official Forecast, that New Orleans would be the Hurricane’s next ground zero. But on WWL-TV, an 80 year old weather forecaster gripped a black marker pen, pointed to his plastic weather-board and calmly assured viewers that the Hurricane would veer off toward Biloxi, Miss. As usual, Nesh Charles Roberts Jr., an 80-year old private meteorological consultant, was right. India Meteorological Department also used to have one such person at their central forecasting centre in Pune, namely George. But, unfortunately such people never get any recognition but those who work on sophisticated computers get all the show. In-situ knowledge and thumb rules are very important in the short-term weather forecasts over the sophisticated technology, under monsoon conditions. It is vital for accurate forecasts! Yet who cares!”

When I was a scientist at ICRISAT, in the early morning scientists used to wait for my bus to arrive to learn the rainfall outlook for the day. For Hyderabad, the rule of thumb is that if there is a low pressure system around Kolkata/West Bengal, Hyderabad in AP will be dry. Based on the morning weather map, I used to tell them, and the forecast rarely failed.

Dr. S. Jeevananda Reddy

I was in New Orleans for hurricane Andrew and when hurricane Gilbert entered the Gulf of Mexico.

Bob Breck accurately forecast that Andrew would jog west, missing New Orleans, but pummel the bayous to our West, including Baton Rouge.

Bob Breck also accurately forecast that Gilbert would hammer the Yucatan.

Nor was Bob Breck shy about asking Professor Bill Gray for Gray’s opinion regarding approaching storms and conditions.

It appears you are cherry picking subjective views regarding selected events and then stating that one event as if it is universal or pervasive.

There are many meteorologists who combine experience, history and technology to develop quality forecasts; many visit this website, comment here or even run the website.

Nevertheless, when a storm with the power of Katrina, Camille, Gilbert or Andrew enters or develops in the Gulf, everyone prepares for a major storm. Only fools or, as is common around the Gulf, Yankees and West Coast visitors ignore the danger.

Hurricanes used to visit the East coast regularly; there are cities full of people who treat hurricanes as trivial events. Since the 1960s, I’ve heard experienced meteorologists trying to warn East coast residents of the danger.

Conflating your poor opinion regarding television meteorologists as applying to all professional broadcast meteorologists is improper.

ATheok — I doubt you understand what I presented!

At the time of the hurricane, I was in the USA and saw this in media reports. The TV meteorologists presented what the official report said and did not present their own opinion.

Dr. S. Jeevananda Reddy

Interesting, thanks.

Without you providing names of meteorologists, links to videos or verified transcripts, I’ll weigh your claims against what I observed while living in New Orleans.

Nor is your ad hominem regarding my understanding useful to your claim.

Using a computer to play Go and beat humans was done by creating a learning program that ran through huge numbers of games to learn what works and what doesn’t. It would be interesting to use a similar method to analyze the historical weather and climate data and see what an artificial intelligence program would predict as future weather and climate, without gaming it with BS assumptions like we do now with GCMs.

Let’s give the boys a fidget to play with next time.

“simulations provided one hundred times more data than is available from observed records.” Ponder that.

I did. Beat me to the punch.

Yes, it’s the first thing that caught my eye. Part of me wants to believe it is just a typo, but the other part is worried that it is not.

That is like saying a crystal ball or a set of tarot cards provides data.. !!

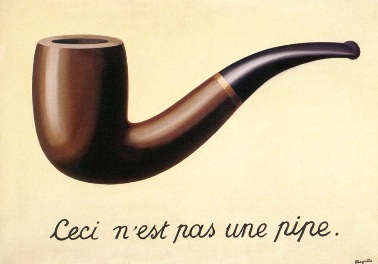

This picture should be at the entrance of any supercomputing facility:

Urederra, I doubt that they’d get it – the scientists not the programmers.

Forget using that computer for climate models. Hook it up to synthesizers and program it to produce some killer beats. It could usher in a new sub-genre of electronic music called “synthetic data”, but use the French translation to make it sound cool — données synthétiques

It’s funny how climate “scientists”, global warming enthusiasts and alarmists have all forgotten the golden rule of computer output:

Garbage in, Garbage out.

Since no one else seems to have pointed it out, if rainfall were a random process, new records will be common in the early years of record keeping and will be less common as the length of the record increases. If 5cm of rain falls on the rainiest day of the first year of record keeping (and if things are random), there’s a 50% chance that the second year will set a new record, a 33% chance of a new record on the third year … etc.

Something of the sort may well be happening here.
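The 50%, 33% pattern above follows from a standard result: for independent, identically distributed yearly values, the chance that year n holds the record so far is 1/n. A minimal sketch, assuming exactly that kind of randomness (the function names and the seeded simulation are illustrative choices, not anything from the study):

```python
import random


def record_probability(year_index):
    """Chance that year number year_index (1-based) sets a new record, for i.i.d. values."""
    return 1.0 / year_index


def simulate_record_frequency(year_index, trials=20_000, seed=0):
    """Monte Carlo estimate of how often the last of year_index random years is the largest."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        values = [rng.random() for _ in range(year_index)]
        if values[-1] == max(values):
            hits += 1
    return hits / trials


for n in (2, 3, 10):
    print(n, round(record_probability(n), 3), round(simulate_record_frequency(n), 3))
```

The simulated frequencies should sit close to 1/2, 1/3 and 1/10, so a falling rate of new records over a long series is what pure chance alone would produce.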

Touché

But wouldn’t extreme (3+ sigma) events become more probable with time? What happens early becomes part of the ‘normal’ history (they define the mean and SD) and the ‘un-normal’ events will show up with enough sampling.

Mods –

…Whitehouse, a former BBC science editor, criticized a July 2017 Met Office study that claimed a one-in-three of parts of England and Wales see record rainfall each winter, largely due to man-made climate change….

Should read

” Whitehouse, a former BBC science editor, criticized a July 2017 Met Office study that claimed a one-in-three CHANCE of parts of England and Wales seeING record rainfall each winter, largely due to man-made climate change. “?

[It is incorrect in the source Daily Caller article as well… -mod]

If these computers were that good they would be picking tattslotto numbers or horse race winners .

TABCorp in Australia, with which I still have a “gaming license” had a system called “HALO”. It was worth AU$1bil. Could not touch it. “Monitored” at that time by Microsoft MOM2000. Yes, really! No changes around Melbourne Cup Day. Betting is big business in Aus, so much so that “luxbet.com.au” (I set up the systems management for that server farm) was “hosted” out of the Northern Territories to get around regulatory rules. Not sure about today.

That is of absolutely no use unless they can tell which region will be affected.

Before the event happens, of course.

GIGO: a supercomputer merely means you can use a lot more GI and get your GO faster.

Has the MET has bought body and soul into climate ‘doom’ an action which has brought it considerable funding, what other results would you expect?

“Has the MET has bought body and soul into climate ‘doom’ ”

Sadly …YES

Their forecasting is poor, they rarely get the weather correct beyond 3 days so resort to lucrative long-term scare mongering.

Exactly a month ago the press and various forecasting agencies were predicting the UK was about to enter a six week heatwave of record temperatures as a Spanish plume was due to head north and get stuck. Since then it has largely been a wetter and less settled summer than average.

And yet they still create press releases predicting decades and centuries out.

Decades of failed climate models.

Whined excuse, MetO needs a supercomputer.

Supercomputers require brilliant, highly trained people to utilize them properly. Otherwise, one is just a big, power-hungry paperweight that can execute more commands per day, providing more calculations but results not one bit better than those of slower computers.

Sadly, MetO lacks brilliant people and highly trained technicians.

British citizens should demand that MetO only use wind and solar to run their supercomputer. It will not change the quality of their forecasts.

Here’s one. Don’t even need any paper.

(Having a semi-working brain does help tho)

It’s like a computer prog but not really. More of a Science Hypothesis.

1. CO2 is actually an atmospheric coolant

2. This cooling causes water vapour in the air to condense faster than it otherwise might. (Making clouds)

3. Clouds being clouds, especially over the UK, tend to make it rain

4. The rain disperses said cloud(s) and makes it sunnier than it was.

5. As it happens, sunshine is as equally important as CO2 is for plants.

6. Hence the extra sunshine makes the plants grow more than they did and we get Global Greening

And everyone thought CO2 made them grow faster.

(Indirectly it did.) You really must be careful to get Cause & Effect the right way round, and therein is the problem with current (digital) computers: they all have a clock controlling them.

One thing *has* to happen before any other.

Another problem with digital computers is sampling.

Don’t sample often enough and you effectively create negative time/frequencies and Lo-and-Behold – positive feedback becomes a reality.

Yes?

You *did* realise I meant that positive feedback is real only to the computer and also you spotted how I’ve perfectly described a ‘climate thermostat’?

good

Computer is fine, it is a great machine.

“Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?”

GIGO !

Met office translation:

“Garbage In, Gospel Out”

Good one!

As a Brit I have given up taking any notice of the weather forecast and just look out the window (you can see the MET office building in Exeter it has no windows) and if I do hear what the forecast is I expect the opposite, even when they’re issuing storm warnings. There’s all this shouting and arm waving and then very little happens and if anything happens the BBC etc are all over it trying to hype it up to “climate change”.

James Bull

‘you can see the MET office building in Exeter it has no windows’

Brilliant! That explains a lot 🙂

JB,

I’m afraid that I’m getting equally cynical about US weather forecasts. We now have Doppler radar, geostationary weather satellites, computers, and supposedly a better understanding of meteorology than when I was a young man. Yet, subjectively, it doesn’t seem to me that forecasts are any better than formerly, except maybe in California, which only has two seasons — sunny and rainy.

Put the computer to better use, like playing video games.

“simulations provided one hundred times more data”

Stop right there. What the heck are they saying??

Since when is a simulation capable of providing ‘data’?!

Their simulations provided 100 times more guesses than there is data. So what? The ‘model’ should have started with an analysis of historical trends which would have highlighted the downward trend in the number of new records with rising temperatures.

As always with model averages, they provide no information.

If there are 100 model runs, one of those runs is the most accurate of the 100 (and we can never know which one it is). The other 99 dilute the most accurate run.

What is produced at the end is not a set of probabilities. It’s merely net casting, trying to catch the real number in your net of forecast number range.

Any accuracy is complete luck.

What “unprecedented” rainfall in England looks like!

https://notalotofpeopleknowthat.wordpress.com/2017/07/26/met-office-unprecedented-rainfall-nonsense/

Strange how they think that polluting observational data with one hundred times more fabricated data can be considered to be an improvement.

I’ll assume October to March = 6 months with records beginning in 1910 as I understand it from http://www.bbc.co.uk/news/uk-25944823. When records began is important in calculating the probability of a record as will be clear from the following.

In 1910 the probability for a particular month to be a record for that month is 1. Assuming randomness, in 1911 the probability is 1/2, then for subsequent years 1/3, 1/4, 1/5.

By 2017 it’s 1/108. But there are 6 months for a record to occur in, so the % probability of a record month in 2017 is (1-((1-(1/108))^6))*100 = 5.43, i.e. calculate the probability of no record in the 6 months and subtract from 1 to get the probability of a record, then multiply by 100 to get the % probability.

Now 5.43% is pretty close to the calculation of 5.5 from the rainfall record. May I suggest that this is pretty consistent with the climate in SE England being random as regards rainfall. Why is the Met Office wasting money on supercomputers when a simple bit of probability calculation vs the record implies the rainfall in SE England is random? And where is the anthropogenic climate change if it’s random?
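The calculation above can be reproduced directly. A minimal sketch under the comment's own assumptions: records start in 1910, each of the 6 winter months is independent, and each has a 1-in-N chance of a record in the Nth year of the series. The function name is hypothetical.

```python
def chance_of_record(first_year, year, months=6):
    """Chance that at least one of `months` months sets a record in `year`, for a random series."""
    years_on_record = year - first_year + 1      # 1910 through 2017 inclusive is 108
    p_month = 1.0 / years_on_record              # chance for any single month
    p_no_record = (1.0 - p_month) ** months      # no record in any of the months
    return 1.0 - p_no_record


print(round(100 * chance_of_record(1910, 2017), 2))  # 5.43
```

Changing `first_year` shows how sensitive the figure is to when the records are taken to begin, which is why the start date matters to the argument.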

It’s the age old use of brute force computing when you don’t actually know enough about what is happening to codify it.

They simply don’t know enough to be able to codify it.

Same with Climate/Global Circulation Models – we simply don’t know enough to program them. Bigger computers produce junk quicker, for more money.

Sheet of paper: $0.02

Climate Supercomputer: $127 Million

Climate scientists spend $127 Million to prove they don’t know WTF they’re talking about: PRICELESS

If climate scientists really had to play with a supercomputer, one can build a machine from Raspberry PIs with 32 nodes for about $2000 (see the web for plans). It’s probably only as powerful as a standard PC. But it runs parallel programs and would probably reach the same nonsense conclusions for a much, much cheaper price.

Jim

The climate supercomputer was invented by fake scientists to fool gullible people. It’s the modern version of the charlatan’s crystal ball. Give me money and I will tell your fortune

Well, if the computer simulations did not approximately match the hand-calculated results, there would have been something wrong in the programming.

The Met Office don’t even forecast yesterday’s weather correctly. To think that Group Captain Stagg was able to predict a window of opportunity for the D-Day landings in 1944 without the aid of any supercomputers, and now they cannot even get tomorrow right. So we are supposed to believe that they are right in 83 years’ time but not next week. If they dredged the rivers and drainage channels we would not notice the heavy rain. The EU says we have to let the land flood because………they say we have to let the land flood. They save a newt by drowning every small mammal in the area or some such nonsense.

GWPF. Great at criticising other people’s work, but never make a sensible contribution themselves. I’ll take some notice of them when they finally produce the global temperature analysis they promised (with blaring trumpets and waving flags) 2 years ago.

Bobby, you said “I’ll take some notice of them when they finally . . . “, try asking questions that have some meaning. You’re asking the wrong questions so you don’t have to worry about the answers.

The thing is, this study was widely hailed in the global warming community as proof that extreme weather is more likely now.

Impressive sounding numbers, 7% chance, 34% chance, that no global warming believer would check or try to understand: just trumpet around the internet.

But it really is just the simple averages of the numbers experienced in the records and the basic simple math. I pointed this out on another board and at least one believer then checked the math but the rest just went along merrily citing more extreme weather is here.

Actually, the Met Office numbers were not even right.

First, there is really no trend in UK rainfall by region since the monthly records began in 1873 (maybe a tiny increase but nothing significant; the monthly records are sufficiently random in nature that one could conclude there is no statistically significant trend).

So, there is a one-in-144 year (0.7%) chance of breaking a monthly record in any particular region in any particular month.

In any given winter (6 months * 0.7%) = 4.2% chance that any particular region will break a record in that winter.

The Met Office says it is now a 7.0% chance that a record will be broken in a region in a month but it is certainly just 4.2%.

Then they say there is a 34% chance that 1 of the 9 regions will break a monthly record in any given winter. 9 * 4.2% = 37.8% (their number is less than the basic math).

Climate scientists have always been bad at math.

And no climate model has been accurate enough on a very small regional basis to say it is the climate model results rather than the basic probabilities.
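Multiplying a small probability by the number of months or regions is only an approximation. A hedged sketch comparing it with the "at least one" rule, assuming a 1-in-144 monthly chance as in the comment and, importantly, assuming months and regions are independent (neighbouring regions' rainfall may well be correlated, so this is an illustration rather than a correction of anyone's figures):

```python
def at_least_one(p, n):
    """Chance of at least one success in n independent trials, each with probability p."""
    return 1.0 - (1.0 - p) ** n


p_month = 1.0 / 144                        # one-in-144-year chance, from the comment

p_winter_sum = 6 * p_month                 # simple multiplication, about 4.2%
p_winter = at_least_one(p_month, 6)        # complement rule, slightly lower

p_any_region_sum = 9 * p_winter_sum        # simple multiplication over 9 regions
p_any_region = at_least_one(p_winter, 9)   # complement rule over 9 regions

print(f"one region, one winter: {100 * p_winter_sum:.1f}% vs {100 * p_winter:.1f}%")
print(f"any of 9 regions:       {100 * p_any_region_sum:.1f}% vs {100 * p_any_region:.1f}%")
```

Under these assumptions the complement rule gives roughly 4.1% for one region and about 31% for any of nine, somewhat below the figures obtained by straight multiplication, because multiplication double-counts winters in which more than one record falls.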

Climate Scientist: What number do you want?

Government Numpty: 6C degrees rise by 2100.

Climate Scientist: How much will you give me?

Government Numpty: £2,000,000

Climate Scientist: The answer is 6.062C. And it’s robust.

Who says Climate Scientists are bad at math?

Jorge

That particular Climate Leech is good at knowing which side of her/his bread is buttered!

And to 3 dp, too!

Auto

Has anyone pointed out that in order to achieve claims of ‘unprecedented’ rainfall, they have ignored about 150 years of rainfall data from the UK?

Here’s the link to the press release: http://www.metoffice.gov.uk/news/releases/2017/high-risk-of-unprecedented-rainfall

Basically they have thrown away all the data prior to 1910, ie from then back to the start of the series in 1766, and replaced it with the multiple runs of their model of the UKs rainfall, then analysed their new ‘data’. Thus somehow proving that climate change creates extreme weather or some such nonsense.

If you look at the series from 1766 you will see current rainfall in the UK is nothing out of the ordinary, indeed worse events have been recorded through the series.

From the article: ““The risk of monthly rainfall exceeding the monthly record in the Southeast of England has not risen, contrary to many claims,” he argued based on the observational data,”

There’s that pesky “observational data” messing up the CAGW narrative again!

Probably nothing wrong with the computer. It is the crap they are feeding it. Like using a Ferrari as a paper weight.

Poor UK Met Off. They should have read the Hitchhiker’s Guide to the Galaxy and paid careful attention, like taking notes, in particular to the parts about Marvin and his troubled existence.

Given the near certain unlikelihood of Forecasts, they could have saved a lot of Pounds-Loot by just as mentioned, using a pencil and piece of paper and perhaps a mobile to call up people all over UK to ask what they thought the next winter would turnout to be and figured an average with standard deviation.

Polls are no better than Super Computers with the UK Met Off’s “models”, but at least the UKers could complain, assured in knowing that the “Forecast” was produced by Them and no one and nothing else!

Ha ha 😉

PS. Not to worry though about the ill-spent 100 million pounds, cause that was no doubt paid for by the EU. It’s the loans from the EU that underwrote the 1 billion pounds in damages that the Brexiters need worry about now, cause in 2019 those loans could be called in and that will be a shock to the market.

So Super Computer equals Birds Intestines?

The fine art of Scrying the Future..

Guts of small animals, tea leaves or Special playing cards..all just as accurate as GIGO.

And so much cheaper for the taxpayer.

Could have saved a lot of money and improved accuracy with a big box containing a mechanical coin flipper.

2016. Big computer, faulty models. Result: rubbish.

2017. Bigger computer, faulty models. Result: rubbish, only more detailed now.

Yeah, but it’s the P.R. value. Computer output = must be right; supercomputer output = REALLY must be right. Thirty-some years ago when I was a math tutor, I saw the same thing with calculators. Kid types in 6 x 6, key sticks so he really types 6 x 66, gets 396, writes it down, keeps going.

Weather models fail at periods of, typically, around 2 weeks because of the chaotic nature of the atmosphere and the inability of the models to capture

They must take into account several large-scale phenomena, each of which is governed by multiple variables and factors. For example, they must consider how the sun will heat the Earth’s surface, how air pressure differences will form winds and how water-changing phases (from ice to water or water to vapor) will affect the flow of energy. They even have to try to calculate the effects of the planet’s rotation in space, which moves the Earth’s surface beneath the atmosphere. Small changes in any one variable in any one of these complex calculations can profoundly affect future weather.

In the 1960s, an MIT meteorologist by the name of Edward Lorenz came up with an apt description of this problem. He called it the butterfly effect, referring to how a butterfly flapping its wings in Asia could drastically alter the weather in New York City.
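The sensitivity Lorenz described can be seen in his own three-variable toy system, often called Lorenz-63. The sketch below is an illustration only, not a weather model: it assumes the standard textbook parameters (sigma = 10, rho = 28, beta = 8/3), a classical fourth-order Runge-Kutta step, and an arbitrary starting point, and it tracks how far apart two runs drift when they start 1e-8 apart.

```python
def lorenz_step(state, dt, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one classical RK4 step."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def max_separation(perturbation=1e-8, dt=0.01, steps=4000):
    """Largest distance reached between two runs that start `perturbation` apart."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + perturbation, 1.0, 1.0)
    worst = 0.0
    for _ in range(steps):
        a = lorenz_step(a, dt)
        b = lorenz_step(b, dt)
        dist = sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        worst = max(worst, dist)
    return worst


print(max_separation())
```

Two runs that begin almost identically end up as far apart as the system allows, which is the practical limit on forecast range that the comment is describing.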

Climate models are much less affected by this butterfly effect because their equations are different, based more on processes that relate to the radiation involved with the heating/cooling of the planet that are not subjected to as much chaos.

This would be most true if you can nail down all the long term forces involved and represent them and their changes with high confidence in your climate model. This is where we enter the realm of speculative theory and not fact and especially in the current world, not objective science.

Simplifying the case, we can keep all things equal in a climate model, then dial in X amount of greenhouse gas warming from CO2 and its feedbacks (for example additional warming from increasing H2O). When we run this through something like 100 climate models with slight variations in some of the equations (ensembles) and come up with a very similar outcome, it provides higher confidence in the solution, for temperatures let’s say.

However, every ensemble member is programmed with a similar inadequacy to represent natural forces from natural cycles. This would still be ok, in a world where all things remain constant except for the increase in CO2.

This would also be ok in a world where we know with high confidence, what the sensitivity is in the atmosphere to changes in CO2(feedbacks and so on included).

But we don’t. All we have is a speculative theory that represents our best guesses…………that have been lowering the CO2 sensitivity for 2 decades. The biggest issue regarding this is the selling of these climate model products as being nearly infallible, settled science that provide us with guidance that can be imposed with impunity.

Taking this out into a realm of even higher speculation is when we take climate model output and try to use it to predict long term weather(which is climate) in specific regions or locations.

Even if we could nail down GLOBAL warming to +1.8 deg C and use that to forecast heavier rains, more high-end flooding events globally, more melting of ice or other global effects, the ability to pinpoint specific regional “weather” effects (averaged over the longer term, which is climate) is very limited… yet this big drop in the expected skill of such projections is not properly communicated. It’s all presented as part of the “Settled Science”.

This is the opposite of using the authentic scientific method. We should present theories objectively using honest assessments of confidence…….that CHANGE as we learn more.

When the disparity between global climate model temperature projections and observations grows, as it clearly has, the scientific method dictates that we adjust the theory to decrease the amount of warming (or go in whatever direction the data takes us)… instead of waiting for the observed warming to “catch up” to our preconceived notion based on a clearly busted version of the speculative theory that best supports political actions. Egos, cognitive bias, funding and political affiliation of climate scientists, modelers and others should play no role in the outcome of scientific products… yet they actually define the field of climate change/science today… taken by most to be synonymous with “human caused” climate change.

“The biggest issue regarding this is the selling of these climate model products as being nearly infallible, settled science that provide us with guidance that can be imposed with impunity.”

Why would they need any computers at all, if this is settled? Their actions say that all that came before was junk. As we said. Today’s silly report will be obsoleted by the next bigger computer report.

Whaaaaaaaatttt?

“Scientists said supercomputer modeling could have predicted the flooding. Thompson said the supercomputer “simulations provided one hundred times more data than is available from observed records.””

So….supercomputers are taking actual REAL observations….and MAKING UP 100X more “data”???

Because this super computer is a time machine??

And yet, even with 100X more data…they STILL aren’t better than basic math.

Perfect.

‘Thompson said the supercomputer “simulations provided one hundred times more data than is available from observed records.”’

Output from simulations IS NOT DATA!

They are random numbers. The supercomputer is just a super-fast random number generator. Then it applies statistical analyses to the random numbers and outputs probability curves. That’s really what a simulation is. But “supercomputer” sounds impressive; “random number generator” is laughable.

A slower random sequence generator

http://slotsetc.com/images/pictures/slots/jennings/jennings_buckaroo.jpg

Reminds me . . . I wrote my own random number generator 35+ years ago, because I wasn’t happy with the operating system RNG, which was notoriously NOT random.

I took the floating point system time, moved it to an integer to strip off the decimal portion. Then I subtracted that integer value from the saved system time to get the decimal value. Then I multiplied the whole number by the remaining decimal number. I figured that was about as random as I could get out of a PDP/11.
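
For the curious, the steps described above can be sketched roughly as follows in Python. This is only an illustration of the described arithmetic, not the original PDP-11 code: the function name `time_based_value` and its optional `now` parameter are made up for this example, and the result is a time-derived value rather than a statistically sound random number.

```python
import time


def time_based_value(now=None):
    """Mimic the described scheme: whole seconds times the fractional part.

    `now` is a hypothetical hook so a fixed time can be passed in;
    by default the current system time is used.
    """
    t = time.time() if now is None else now
    whole = int(t)        # move the float to an integer, dropping the decimals
    frac = t - whole      # subtract the integer back out to keep only the decimals
    return whole * frac   # multiply the whole number by the remaining decimal


if __name__ == "__main__":
    print(time_based_value())
```

Because the value depends only on the clock, two calls close together give closely related results, which is one reason modern code would normally reach for a library generator instead.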

I believe that weather forecasters need to look at the sky, for several reasons: obtaining clues for changes in the near term, say the next 6 hours, to lend confidence to remotely sensed data and computer model output in order to validate what they are seeing virtually; and not least importantly, to also gain experience over the long haul to marry observational information to forecast outcomes. Meteorologists in their 30s and even 40s have not and are not being trained first as weather observers, as were those of us older folks, as automated platforms and sensors took away much of the need for that. I believe that many of today’s forecasters are sorely lacking in the ability to maximize their forecasting acumen, as they have less ability to understand what they are looking at in the sky, if they even bother to tear themselves away from the computer screen array. The old-timers had observational platforms, even cupolas, to accurately and consistently gauge the sky. I believe there IS a place for windows in meteorological offices, and forecasters need to continue to be trained in the observational practices to enhance weather forecasting. In the U.S., ASOS does not even see any clouds above 15k feet; how many times has a meteorologist been able to see cirrocumulus in the morning to foretell severe weather 12 hours later? Clues matter.

In regard to the referenced UKMO study, it appears that their “scientists,” as well as all of today’s so-called climate scientists, need to study historical weather events to a much greater degree. This should be done in the course of their degree studies, but I don’t think much of that happens nowadays. A pity.

Actually 4caster, you make a fine case for setting serial liars about the coming weather adrift in small boats sans paddle or sail.

If they are particularly arrogant, air dropped into the middle of an ocean might be necessary.

Such methods might focus their attention… but there again stupid is incurable.

John Robertson, I believe that most weather forecasters do the best job they can (although I do know a few who couldn’t care less), but the technology makes even them, their supervisors, and their administrators believe that there is nothing to be learned or gained from older methods and practices. True, there has been a quantum leap forward in the science, understanding, and technology in meteorology, but that does not mean we should throw out proven methods, even if they are considered old. Most forecasters don’t “lie,” but when your behind is in the hot seat and a forecast, or a decision on whether to issue a warning, needs to be made, sound versus flawed decision-making can rapidly separate the men from the boys (or the women from the girls, as the case may be). But experience is often a valuable resource on which to rely, and I feel that today’s forecasters do not have all the ammunition they could have, as the science has left behind some usable resources.

My opinion on many of the TV people I now see in the U.S. is that they are sorely lacking in the ability to analyze atmospheric data, and they rely solely on some private forecaster with little experience, who in turn relies solely on the NOAA/NWS, and that may or may not turn out so well. The not-so-recent trend toward employing eye-catching young women for on-camera work can backfire during rapidly evolving weather situations. They may have degrees, but their lack of experience is apparent. That’s not a knock against women, but it IS a knock against the hiring of any person for ratings purposes at the expense of the ability to cogently communicate needed and possibly vital weather information.

That depends who is answering. If I was one of the engineers I’d say this supercomputer is the best thing to happen in our lifetime.

I live in SE England. About ten years ago we had a hot dry summer and endless BBC news reports featuring hand-wringing journos and Met Office ‘experts’ filmed in front of empty reservoirs telling us this was the shape of things to come, because… Global Warming. I’m old enough to realise that people who are as consistently wrong as the BBC and the Met Office are probably just talking pish.

Assuming floods are random events, the number of records expected to be set in a period of N years would be:

Ln(N) [treating the first year in the series as a record]

From 1908 to 2015, the chain of precipitation records expected to be set would be:

Ln(108) ~5

In the article it is 4+3+2 = 9, suggesting a degree of autocorrelation, but the declining number over successive 36-year periods shows a logarithmic character. For example, carrying on with our random approach, we would have to go three times as long, 324 yrs (including the first 108 yrs), before we would expect another record flood:

Ln(324) ~6

If they have flood records before 1908 that they were using, then our Ln calculation number would be a little bit closer to theirs, although not a lot closer. Example: if they had a record flood in medieval times, say anno 1015, then by 2015 we would have expected only:

Ln (1000) ~7 increasing records.
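
A quick way to check these figures is the short Python sketch below. It assumes yearly values that are independent and identically distributed with no ties, in which case the exact expected number of records in N years is the harmonic number 1 + 1/2 + ... + 1/N, which is roughly Ln(N) + 0.58. The function name `expected_records` is just a label chosen for this example.

```python
import math


def expected_records(n_years: int) -> float:
    """Expected count of record highs in n_years of i.i.d. data.

    Year k is a record with probability 1/k, so the expectation is the
    harmonic number H_n (the first year always counts as a record).
    """
    return sum(1.0 / k for k in range(1, n_years + 1))


if __name__ == "__main__":
    for n in (108, 324, 1000):
        # Compare the Ln(N) shortcut with the exact harmonic-number sum.
        print(n, round(math.log(n), 2), round(expected_records(n), 2))
```

The exact sum runs a little above Ln(N) for each case, so the rounded counts quoted above are slightly on the low side, but the logarithmic slowdown in new records is the same either way.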

Because of autocorrelation in cyclic climate patterns (AMO, etc.), there is likely bunching up of records with long intervening periods of lesser flooding activity. My prediction for UK flooding is we could break the flood record in SE England one more time before 2050, and possibly not again for a century or two later. I fear Dr Whitehouse was wrong too in his analysis, although I agree with him that the forecast could have been done in minutes with a pencil and paper.

It seems the £27m for a computer was to compensate for poor statistical skills. And who’s to say they even included AMO, etc. in their computer calculation? Remember, doom forecaster in chief Phil Jones of UEA admitted in the climategate emails he didn’t know how to use Excel!

The price of toilet paper is scandalous these days.

I wrote this a long time ago, but it seems appropriate:

Dedicated to the UK Meteorological Office.

To the tune of ‘American Pie’ by Don McLean – https://www.youtube.com/watch?v=uAsV5-Hv-7U

A long long time ago

I’d look out the window and predict the

Weather for the day.

If I saw clouds I’d call for rain,

If not I’d say “It’s fine again.”

And folks were fairly happy

Either way.

But lately we’ve been automated,

My good intentions are frustrated.

All of my predictions

Turn out to be fictions.

Last week I said it would be dry.

It nearly made me want to cry

When floods demolished Hay-on-Wye,

The day the forecast died.

“Bunk, bunk, our programs are junk!

They’ve been written by a kitten or a twelve-year-old punk.

The modeller’s a toddler, or perhaps he was drunk.

And now our reputation is sunk.”

Now, if you don’t have any skills

And you’re hepped up on happy pills,

If you can’t make a living,

You’ll find the Met forgiving.

You don’t have to get it right,

Say hot is cold and black is white!

If snow clouds are a-forming,

Blame them on global warming!

If your critics point out flaws,

Tell them that you know the cause.

Say the machines have blundered

Because you’re underfunded!

When you have a CPU,

You can let it think for you!

Although sometimes its thoughts aren’t true..

The day the forecast died.

I started singing: “Bunk, bunk, our programs are junk!

They’ve been written by a kitten or a twelve-year-old punk.

The modeller’s a toddler, or perhaps he was drunk.

And now our reputation is sunk.

Now our reputation is sunk.”

We were happy girls and boys,

We had lots of brand new toys.

A high-tech installation

To model the whole nation.

Now taxpayers are getting mad.

Despite the money that we’ve had,

Our forecasts are appalling.

It’s really rather galling.

They just don’t seem to understand.

The finest programs in the land

Can’t make predictions work

When weather goes berserk.

So here’s the burden of my song,

Our models were right all along!

It’s the real world that got it wrong

The day the forecast died.

I started singing: “Bunk, bunk, reality’s junk!

It’s been written by a kitten or a twelve-year-old punk.

The modeller’s a toddler, or perhaps he was drunk.

But still, our reputation is sunk…

Still, our reputation is sunk.”

Nice! I bet today you would get sued for defaming them if you released that on youtube.

whither forecasting

It’s not a supercomputer, it’s a dupercomputer.

weird now everyone here believes in the observational record.

[massive generalization FAIL – “everyone”? certainly Mosh has developed amazing mind reading powers -mod]

I live in the South East of England and if the weather forecast shows a rain cloud passing over my house at 3pm then I expect rain at 3pm because their computer is brilliant for short range forecasting. If the price of good short range forecasting is politically correct long range forecasting then I can easily ignore that.

That is more to do with high resolution doppler radar and satellite imagery combined to get specific, accurate short range forecast of your local weather, showing exactly what fronts moving towards your area are actually doing and gauging the time and amounts of precip in specific areas and times.

What this “super” computer is for is to backstop and propagate the lie that humans have to stop all energy production, agriculture and manufacturing because we are hurting their Mother Gaia. Nothing to do with meteorology or science.

You will not be able to easily ignore the huge increase in power bills you will see in the near future, resulting from the actions taken that were justified with these long-term forecasts.