Part II: How the central estimate of pre-feedback warming was exaggerated

By Christopher Monckton of Brenchley

In this series I am exploring the cumulative errors, large and small, through which the climatological establishment has succeeded in greatly exaggerating climate sensitivity. Since the series concerns itself chiefly with equilibrium sensitivity, time-dependencies, including those arising from non-linear feedbacks, are irrelevant.

In Part I, I described a small error by which the climate establishment determines the official central estimate of equilibrium climate sensitivity as the inter-model mean equilibrium sensitivity rather than determining that central estimate directly from the inter-model mean value of the temperature feedback factor f. For it is the interval of values for f that dictates the interval of final or equilibrium climate sensitivity and accounts for its hitherto poorly-constrained breadth [1.5, 4.5] K. Any credible probability-density function for final sensitivity must, therefore, center on the inter-model mean value of f, and not on the inter-model mean value of ΔT, skewed as it is by the rectangular-hyperbolic (and hence non-linear) form of the official system gain equation G = 1 / (1 – f).
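The skew introduced by the rectangular-hyperbolic gain equation can be illustrated numerically. The following is a minimal sketch only: the feedback factors in `hypothetical_f` are assumed values chosen for illustration, not the outputs of any model ensemble, and the function name `system_gain` is likewise illustrative.

```python
# Illustrative sketch: the feedback factors below are hypothetical values
# chosen for illustration, not outputs of any model ensemble.

def system_gain(f):
    """System gain G = 1 / (1 - f), defined for f < 1."""
    if f >= 1.0:
        raise ValueError("feedback factor must be below 1")
    return 1.0 / (1.0 - f)

hypothetical_f = [0.3, 0.4, 0.5, 0.6, 0.7]

# Gain evaluated at the mean feedback factor
mean_f = sum(hypothetical_f) / len(hypothetical_f)
gain_at_mean_f = system_gain(mean_f)

# Mean of the gains evaluated at each feedback factor separately
mean_of_gains = sum(system_gain(f) for f in hypothetical_f) / len(hypothetical_f)

print(f"gain at mean f: {gain_at_mean_f:.3f}")
print(f"mean of gains:  {mean_of_gains:.3f}")
```

Because G is convex in f, the mean of the gains exceeds the gain at the mean feedback factor for any spread of values below 1; how large the difference is depends entirely on the spread assumed.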

I showed that the effect of that first error was to overstate the key central estimates of final sensitivity by between 12.5% and 34%.

Part II, which will necessarily be lengthy and full of equations, will examine another apparently small but actually significant error that leads to an exaggeration of reference or pre-feedback climate sensitivity ΔT0 and hence of final sensitivity ΔT.

For convenience, the official equation (1) of climate sensitivity as it now stands is here repeated. There is much wrong with this equation, but, like it or not, it is what the climate establishment uses. In Part I, it was calibrated closely and successfully against the outputs of both the CMIP3 and CMIP5 model ensembles.

Fig. 1 illuminates the interrelation between the various terms in (1). In the current understanding, the reference or pre-feedback sensitivity ΔT0 is simply the product of the official value of radiative forcing ΔF0 = 3.708 W m–2 and the official value of the reference sensitivity parameter λ0 = 3.2–1 K W–1 m2, so that ΔT0 = 1.159 K (see e.g. AR4, p. 631 fn.).

However, as George White, an electronics engineer, has pointed out (pers. comm., 2016), in using a fixed value for the crucial reference sensitivity parameter λ0 the climate establishment are erroneously treating the fourth-power Stefan-Boltzmann equation as though it were linear, when of course it is exponential.

This mistreatment in itself leads to a small exaggeration, as I shall now show, but it is indicative of a deeper and more influential error. For George White’s query has led me to re-examine how, in official climatology, λ0 came to have the value at or near 0.312 K W–1 m2 that all current models use.

Fig. 1 Illumination of the official climate-sensitivity equation (1)

The fundamental equation (2) of radiative transfer relates flux density Fn in Watts per square meter to the corresponding temperature Tn in Kelvin at some surface n of a planetary body (and usually at the emission surface n = 0):

(2) $F_n = \varepsilon_n \sigma T_n^4$ | Stefan-Boltzmann equation

where the Stefan-Boltzmann constant σ is equal to 5.6704 x 10–8 W m–2 K–4, and the emissivity εn of the relevant surface n is, by Kirchhoff’s radiation law, equal to its absorptivity. At the Earth’s reference or emission surface n = 0, a mean 5.3 km above ground level, emissivity ε0, particularly with respect to the near-infrared long-wave radiation with which we are concerned, is vanishingly different from unity.

The Earth’s mean emission flux density F0 is given by (3),

(3) $F_0 = S_0 (1 - \alpha) / 4 = 238.175$ W m–2,

where S0 = 1361 W m–2 is total solar irradiance (SORCE/TIM, 2016); α = 0.3 is the Earth’s mean albedo, and 4 is the ratio of the surface area of the rotating near-spherical Earth to that of the disk that the planet presents to incoming solar radiation. Rearranging (2) as (4) and setting n = 0 gives the Earth’s mean emission temperature T0:

(4) $T_0 = \left[ F_0 / (\varepsilon_0 \sigma) \right]^{1/4} = 254.6$ K

A similar calculation may be performed at the Earth’s hard-deck surface S. We know that global mean surface temperature TS is 288 K, and measured emissivity εS ≈ 0.96. Accordingly, (2) gives FS as 374.503 W m–2. This value is often given as 390 W m–2, for εS is frequently taken as unity, since little error arises from that assumption.
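The emission-surface and hard-deck calculations above may be checked with a few lines of Python. This is a minimal sketch using only the constants stated in the text; the function names are illustrative and emissivity at the emission surface is taken as unity.

```python
# Constants as stated in the text; function names are illustrative only.
SIGMA = 5.6704e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0         # total solar irradiance, W m^-2
ALBEDO = 0.3        # Earth's mean albedo

def emission_flux(s0, albedo):
    """Mean emission flux density: irradiance net of albedo, spread over the sphere."""
    return s0 * (1.0 - albedo) / 4.0

def flux_from_temperature(temp_k, emissivity=1.0):
    """Stefan-Boltzmann equation: F = emissivity * sigma * T^4."""
    return emissivity * SIGMA * temp_k ** 4

def temperature_from_flux(flux, emissivity=1.0):
    """Stefan-Boltzmann equation rearranged for temperature."""
    return (flux / (emissivity * SIGMA)) ** 0.25

F0 = emission_flux(S0, ALBEDO)                                # emission surface
T0 = temperature_from_flux(F0)
FS_MEASURED = flux_from_temperature(288.0, emissivity=0.96)   # hard-deck surface
FS_UNITY = flux_from_temperature(288.0)                       # emissivity taken as 1

print(f"F0 = {F0:.3f} W m^-2, T0 = {T0:.1f} K")
print(f"FS = {FS_MEASURED:.3f} W m^-2 (emissivity 0.96), {FS_UNITY:.1f} W m^-2 (unity)")
```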

The first derivative λ0 of the Stefan-Boltzmann equation relating the emission temperature T0 to emission flux density F0 before any radiative perturbation is given by (5):

(5) $\lambda_0 = \partial T_0 / \partial F_0 = T_0 / (4 F_0) = 0.267$ K W–1 m2

The surface equivalent λS = TS / (4FS) = 0.192 K W–1 m2 (or 0.185 if εS is taken as unity).
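That the first derivative of the Stefan-Boltzmann equation reduces to T / (4F) can be confirmed numerically. The sketch below compares the analytic ratio with a central finite difference at the emission surface and then evaluates the surface equivalents; the function names and the finite-difference step are assumptions made for illustration.

```python
SIGMA = 5.6704e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

def temperature_from_flux(flux, emissivity=1.0):
    """Stefan-Boltzmann equation rearranged for temperature."""
    return (flux / (emissivity * SIGMA)) ** 0.25

def sensitivity_parameter(temp_k, flux):
    """Analytic first derivative dT/dF = T / (4F) at a single surface."""
    return temp_k / (4.0 * flux)

def numerical_derivative(flux, step=1e-3):
    """Central finite-difference estimate of dT/dF (step is an arbitrary choice)."""
    upper = temperature_from_flux(flux + step)
    lower = temperature_from_flux(flux - step)
    return (upper - lower) / (2.0 * step)

# Emission surface
F0 = 1361.0 * (1.0 - 0.3) / 4.0
T0 = temperature_from_flux(F0)
lambda_0 = sensitivity_parameter(T0, F0)
lambda_0_numeric = numerical_derivative(F0)

# Hard-deck surface at 288 K, with measured and unit emissivity
TS = 288.0
lambda_s_measured = sensitivity_parameter(TS, 0.96 * SIGMA * TS ** 4)
lambda_s_unity = sensitivity_parameter(TS, SIGMA * TS ** 4)

print(f"lambda_0 analytic {lambda_0:.4f}, numeric {lambda_0_numeric:.4f} K W^-1 m^2")
print(f"lambda_S {lambda_s_measured:.3f} (emissivity 0.96), {lambda_s_unity:.3f} (unity)")
```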

The official radiative forcing in response to a doubling of atmospheric CO2 concentration is given by the approximately logarithmic relation (6) (Myhre et al., 1998; AR3, ch. 6.1). We shall see later in this series that this value is an exaggeration, but let us use it for now.

(6) $\Delta F_0 = 5.35 \ln 2 = 3.708$ W m–2

Then the direct or reference warming in response to a CO2 doubling is given by

(7) $\Delta T_0 = \lambda_0 \, \Delta F_0 = 0.991$ K

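A similar result may be obtained thus: where Fμ = F0 + ΔF0 = 238.175 + 3.708 = 241.883 W m–2, using (2) gives Tμ:

(8) $T_\mu = \left[ F_\mu / (\varepsilon_0 \sigma) \right]^{1/4}$

Then

(9) $\Delta T_0 = T_\mu - T_0 = 0.985$ K,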

a little less than the result in (7), the small difference being caused by the fact that λ0 cannot have a fixed value, because, as George White rightly points out, it is the first derivative of a fourth-power relation and hence represents the slope of the curve of the Stefan-Boltzmann equation at some particular value for radiative flux and corresponding value for temperature.

Thus, the value of λ0, and hence that of climate sensitivity, must decline by little and little as the temperature increases, as the slightly non-linear curve in Fig. 2 shows.
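The small difference between the linearized and the exact result can be reproduced directly. This is a minimal sketch under the text's own values (emissivity at the emission surface taken as unity, ΔF0 = 3.708 W m–2); the variable and function names are illustrative.

```python
SIGMA = 5.6704e-8                  # Stefan-Boltzmann constant, W m^-2 K^-4
F0 = 1361.0 * (1.0 - 0.3) / 4.0    # emission flux density, W m^-2
DELTA_F0 = 3.708                   # official forcing per CO2 doubling, W m^-2

def temperature_from_flux(flux):
    """Stefan-Boltzmann equation rearranged for temperature (unit emissivity)."""
    return (flux / SIGMA) ** 0.25

def sensitivity_parameter(flux):
    """Slope dT/dF = T / (4F) of the Stefan-Boltzmann curve at a given flux."""
    return temperature_from_flux(flux) / (4.0 * flux)

T0 = temperature_from_flux(F0)
T_MU = temperature_from_flux(F0 + DELTA_F0)

# Linearized response: fixed slope at F0 multiplied by the forcing
linear_warming = sensitivity_parameter(F0) * DELTA_F0
# Exact response: difference of the two Stefan-Boltzmann temperatures
exact_warming = T_MU - T0

# The slope itself before and after the perturbation
lambda_before = sensitivity_parameter(F0)
lambda_after = sensitivity_parameter(F0 + DELTA_F0)

print(f"linear {linear_warming:.3f} K, exact {exact_warming:.3f} K")
print(f"slope before {lambda_before:.4f}, after {lambda_after:.4f} K W^-1 m^2")
```

The exact difference comes out slightly below the linearized product because the slope falls as flux and temperature rise.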

Fig. 2 The first derivative λ0 = T0 / (4F0) of the Stefan-Boltzmann equation, which is the slope of a line tangent to the red curve above, declines by little and little as T0, F0 increase.

The value of λ0 may also be deduced from eq. (3) [here (10)] of Hansen (1984), who says [with notation altered to conform to the present work]:

“… for changes of solar irradiance,

(10) $\Delta T_0 / T_0 = \tfrac{1}{4} \, \Delta S_0 / S_0$

“Thus, if S0 increases by a small percentage δ, T0 increases by δ/4. For example, a 2% change in solar irradiance would change T0 by about 0.5%, or 1.2-1.3 K.”

Hansen’s 1984 paper equated the radiative forcing ΔF0 from a doubled CO2 concentration with a 2% increase ΔF0 = 4.764 W m–2 in emission flux density, which is where the value 1.2-1.3 K for ΔT0 = ΔF0λ0 seems first to have arisen. However, if today’s substantially smaller official value ΔF0 = 3.708 W m–2 (Myhre et al., 1998; AR3, ch. 6.1) is substituted, then by (10), which is Hansen’s equation, ΔT0 becomes 0.991 K, near-identical to the result in (7) here, providing further confirmation that the reference or pre-feedback temperature response to a CO2 doubling should be less than 1 K.
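The arithmetic in the preceding paragraph can be sketched as follows, assuming Hansen's small-change relation takes the fractional form described in the quotation (a fractional change in flux produces one quarter of that fractional change in temperature). The function name `fractional_response` is illustrative.

```python
SIGMA = 5.6704e-8                  # Stefan-Boltzmann constant, W m^-2 K^-4
F0 = 1361.0 * (1.0 - 0.3) / 4.0    # emission flux density, W m^-2
T0 = (F0 / SIGMA) ** 0.25          # emission temperature, K

def fractional_response(delta_flux, flux=F0, temp_k=T0):
    """Small-change relation: dT / T = (1/4) dF / F, solved for dT."""
    return temp_k * delta_flux / (4.0 * flux)

# A 2% increase in emission flux density, as in Hansen (1984)
hansen_forcing = 0.02 * F0
warming_hansen = fractional_response(hansen_forcing)

# Today's official forcing per CO2 doubling
warming_current = fractional_response(3.708)

print(f"2% forcing = {hansen_forcing:.3f} W m^-2 gives {warming_hansen:.2f} K")
print(f"3.708 W m^-2 gives {warming_current:.3f} K")
```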

The Charney Report of 1979 assumed that the entire sensitivity calculation should be done with surface values FS, TS, so that, for the 283 K mean surface temperature assumed therein, the corresponding surface radiative flux obtained via (2) is 363.739 W m–2, whereupon λS was found equal to a mere 0.195 K W–1 m2, near-identical to the surface value λS = 0.192 K W–1 m2 determined from (5).

Likewise, Möller (1963), presenting the first of three energy-balance models, assumed today’s global mean surface temperature 288 K, determined from (2) the corresponding surface flux 390 W m–2, and accordingly found λS = 288 / (4 x 390) = 0.185 K W–1 m2, under the assumption that surface emissivity εS was equal to unity.

Notwithstanding all these indications that λ0 is below, and perhaps well below, 0.312 K W–1 m2 and is in any event not a constant, IPCC assumes this “uniform” value, as the following footnote from AR4, p.631, demonstrates [with notation and units adjusted to conform to the present series]:

“Under these simplifying assumptions the amplification of the global warming from a feedback parameter c (in W m–2 K–1) with no other feedbacks operating is 1 / (1 – c λ0), where λ0 is the ‘uniform temperature’ radiative cooling response (of value approximately 3.2–1 K W–1 m2; Bony et al., 2006). If n independent feedbacks operate, c is replaced by (c1 + c2 +… + cn).”

How did this influential error arise? James Hansen, in his 1984 paper, had suggested that a CO2 doubling would raise global mean surface temperature by 1.2-1.3 K rather than just 1 K in the absence of feedbacks. The following year, Michael Schlesinger described the erroneous methodology that permitted Hansen’s value for ΔT0 to be preserved even as the official value for ΔF0 fell from Hansen’s 4.8 W m–2 per CO2 doubling to today’s official (but still much overstated) 3.7 W m–2.

In 1985, Schlesinger stated that the planetary radiative-energy budget was given by (11):

where N0 is the net radiation at the top of the atmosphere, F0 is the downward flux density at the emission altitude net of albedo as determined in (3), and R0 is the long-wave upward flux density at that altitude. Energy balance requires that N0 = 0, from which (3, 4) follow.

Then Schlesinger decided to express N0 in terms of the surface temperature TS rather than the emission temperature T0 by using surface temperature TS as the numerator and yet by using emission flux F0 in the denominator of the first derivative of the fundamental equation (2) of radiative transfer.

In short, he was applying the Stefan-Boltzmann equation by straddling uncomfortably across two distinct surfaces in a manner never intended either by Jozef Stefan (the only Slovene after whom an equation has been named) or his distinguished Austrian pupil Ludwig Boltzmann, who, five years later, and long before committing suicide in despair at his own failure to convince the world of the existence of atoms, had provided a firm theoretical demonstration of Stefan’s empirical result on thermodynamic grounds.

Since the Stefan-Boltzmann equation directly relates radiative flux and temperature at a single surface, the official abandonment of this restriction – which has not been explained anywhere, as far as I can discover – is, to say the least, a questionable novelty.

For we have seen that the Earth’s hard-deck emissivity εS is about 0.96, and that its emission-surface emissivity ε0, particularly with respect to long-wave radiation, is unity. Schlesinger, however, says:

“N0 can be expressed in terms of the surface temperature TS, rather than [emission temperature] T0 by introducing an effective planetary emissivity εp, in (12):

so that, in (13),

This official approach embodies a serious error arising from a misunderstanding not only of (2), which relates temperature and flux at the same surface and not at two distinct surfaces, but also of the fundamental architecture of the climate.

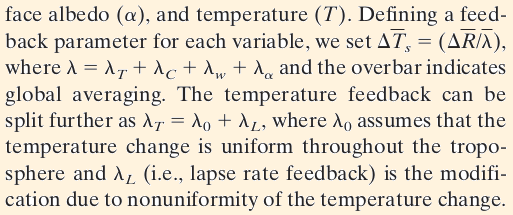

Any change in net flux density F0 at the mean emission altitude (approximately 5.3 km above ground level) will, via (2), cause a corresponding change in emission temperature T0 at that altitude. Then, by way of the temperature lapse rate, which at present is a near-uniform 6.5 K km–1 just about everywhere (Fig. 3), that change in T0 becomes an identical change ΔTS in surface temperature.

Fig. 3 Altitudinal temperature profiles for stations from 71°N to 90°S at 30 April 2011, showing little latitudinal variation in the lapse-rate of temperature with altitude. Source: Colin Davidson, pers. comm., August 2016.

But what if albedo or cloud cover or water vapor, and hence the lapse rate itself, were to change as a result of warming? Any such change would not affect the reference temperature change ΔT0: instead, it would be a temperature feedback affecting final climate sensitivity ΔT.

The official sensitivity equation thus already allows for the possibility that the lapse-rate may change. There is accordingly no excuse for tampering with the first derivative of the Stefan-Boltzmann equation (2) by using temperature at one altitude and flux at quite another and conjuring into infelicitous existence an “effective emissivity” quite unrelated to true emissivity and serving no purpose except unjustifiably to exaggerate λ0 and hence climate sensitivity.

One might just as plausibly – and just as erroneously – choose to relate emission temperature with surface flux, in which event λ0 would fall to 254.6 / [4(390.1)] = 0.163 K W–1 m2, little more than half of the models’ current and vastly-overstated value.
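The effect of mixing surfaces can be tabulated by forming the ratio T / (4F) for each pairing of temperature and flux. The sketch below uses the text's values with surface emissivity taken as unity; the dictionary labels and function name are illustrative.

```python
SIGMA = 5.6704e-8                  # Stefan-Boltzmann constant, W m^-2 K^-4
F0 = 1361.0 * (1.0 - 0.3) / 4.0    # emission flux density, W m^-2
T0 = (F0 / SIGMA) ** 0.25          # emission temperature, K
TS = 288.0                         # global mean surface temperature, K
FS = SIGMA * TS ** 4               # surface flux density, unit emissivity

def ratio_parameter(temp_k, flux):
    """The ratio T / (4F); a true first derivative only if both are at one surface."""
    return temp_k / (4.0 * flux)

combinations = {
    ("emission T0", "emission F0"): ratio_parameter(T0, F0),   # same surface
    ("surface TS", "surface FS"): ratio_parameter(TS, FS),     # same surface
    ("surface TS", "emission F0"): ratio_parameter(TS, F0),    # mixed surfaces
    ("emission T0", "surface FS"): ratio_parameter(T0, FS),    # mixed surfaces
}

for (temp_label, flux_label), value in combinations.items():
    print(f"{temp_label:12s} / 4 x {flux_label:12s} = {value:.3f} K W^-1 m^2")
```

The two mixed pairings bracket the two same-surface values: pairing surface temperature with emission flux gives the largest ratio, and pairing emission temperature with surface flux gives the smallest.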

This value 0.163 K W–1 m2 was in fact obtained by Newell & Dopplick (1979), by an approach that indeed combined elements of surface flux FS and emission temperature T0.

The same year the Charney Report, on the basis of hard-deck surface values TS and FS for temperature and corresponding radiative flux density respectively, found λS to be 0.195 K W–1 m2.

IPCC, followed by (or following) the overwhelming majority of the models, takes 3.2–1, or 0.3125, as the value of λ0. This choice thus embodies two errors, one of modest effect and one of large, in the official determination of λ0. The error of modest effect is to treat λ0 as though it were constant; the error of large effect is to misapply the fundamental equation of radiative transfer by straddling two distinct surfaces in using it to determine λ0. As an expert reviewer for AR5, I asked IPCC to provide an explanation showing how λ0 is officially derived. IPCC curtly rejected my recommendation. Perhaps some of its supporters might assist us here.

In combination, the errors identified in Parts I and II of this series have led to a significant exaggeration of the reference sensitivity ΔT0, and commensurately of the final sensitivity ΔT, even before the effect of the errors on temperature feedbacks is taken into account. The official value ΔT0 = 1.159 K determined by taking the product of IPCC’s value 0.3125 K W–1 m2 for λ0 and its value 3.708 W m–2 for ΔF0 is about 17.5% above the ΔT0 = 0.985 K determined in (9).

Part I of this series established that the CMIP5 models had given the central estimate of final climate sensitivity ΔT as 3.2 K when determination of the central estimate of final sensitivity from the inter-model mean central estimate of the feedback factor f would mandate only 2.7 K. The CMIP5 models had thus already overestimated the central estimate of equilibrium climate sensitivity ΔT by about 18.5%.

The overstatement of the CMIP5 central estimate of climate sensitivity resulting from the combined errors identified in parts I and II of this series is accordingly of order 40%.

This finding that the current official central estimate of climate sensitivity is about 40% too large does not yet take account of the effect of the official overstatement of λ0 on the magnitude of that temperature feedback factor f. We shall consider that question in Part III.

For now, the central estimate of equilibrium climate sensitivity should be 2.3 K rather than CMIP5’s 3.2 K. Though each of the errors we are finding is smallish, their combined influence is already large, and will become larger as the compounding influence of further errors comes to be taken into account as the series unfolds.
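The combined overstatement can be reproduced from the four figures quoted above. The sketch assumes, as the text implies, that the overstatement factors from Parts I and II compound multiplicatively; the variable names are illustrative.

```python
# Figures quoted in the text, all in kelvin
CMIP5_SENSITIVITY = 3.2      # CMIP5 central estimate of final sensitivity
PART_I_SENSITIVITY = 2.7     # central estimate derived from the mean feedback factor
OFFICIAL_REFERENCE = 1.159   # official reference sensitivity
CORRECTED_REFERENCE = 0.985  # reference sensitivity determined in (9)

# Overstatement factor from each part of the series
part_i_ratio = CMIP5_SENSITIVITY / PART_I_SENSITIVITY
part_ii_ratio = OFFICIAL_REFERENCE / CORRECTED_REFERENCE

# Assumption: the two factors compound multiplicatively
combined_ratio = part_i_ratio * part_ii_ratio
revised_sensitivity = CMIP5_SENSITIVITY / combined_ratio

print(f"Part I factor   {part_i_ratio:.3f}")
print(f"Part II factor  {part_ii_ratio:.3f}")
print(f"combined factor {combined_ratio:.3f}")
print(f"revised central estimate {revised_sensitivity:.1f} K")
```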

Table 1 shows various values of λ0, compared with the reference value 0.264 K W–1 m2 obtained from (8).

Table 1: Some values of the reference climate-sensitivity parameter λ0

| Source | Method | Value of λ0 (K W–1 m2) | λ0 x 3.7 = ΔT0 (K) | Ratio to value from (8) |
| --- | --- | --- | --- | --- |
| Newell & Dopplick (1979) | T0 / (4FS) | 0.163 | 0.604 | 0.613 |
| Möller (1963) | TS / (4FS) | 0.185 | 0.686 | 0.696 |
| Callendar (1938) | TS / (4FS) | 0.195 | 0.723 | 0.734 |
| From (8) here | T0 / (4F0) | 0.264 | 0.985 | 1.000 |
| Hansen (1984) | T0 / (4F0) | 0.267 | 0.990 | 1.005 |
| From (7) here | T0 / (4F0) | 0.267 | 0.991 | 1.006 |
| Schlesinger (1985) | TS / (4F0) | 0.302 | 1.121 | 1.138 |
| IPCC (AR4, p. 631 fn.) | 3.2–1 | 0.312 | 1.159 | 1.177 |

Nearly all models adopt values of λ0 that are close to or identical with IPCC’s value, which appears to have been adopted for no better reason than that it is the reciprocal of 3.2, and is thus somewhat greater even than the exaggerated value obtained by Schlesinger (1985) and much copied thereafter.

In the next instalment, we shall consider the effect of the official exaggeration of λ0 on the determination of temperature feedbacks, and we shall recommend a simple method of improving the reliability of climate sensitivity calculations by doing away with λ0 altogether.

I end by asking three questions of the Watts Up With That community.

1. Is there any legitimate scientific justification for Schlesinger’s “effective emissivity” and for the consequent determination of λ0 as the ratio of surface temperature to four times emission flux density?

2. One or two commenters have suggested that the Stefan-Boltzmann calculation should be performed entirely at the hard-deck surface when determining climate sensitivity and not at the emission surface a mean 5.3 km above us. Professor Lindzen, who knows more about the atmosphere than anyone I have met, takes the view I have taken here: that the calculation should be performed at the emission surface and the temperature change translated straight to the hard-deck surface via the lapse-rate, so that (before any lapse-rate feedback, at any rate) ΔTS ≈ ΔT0. This implies λ0 = 0.264 K W–1 m2, the value taken as normative in Table 1.

3. Does anyone here want to maintain that errors such as these are not represented in the models because they operate in a manner entirely different from what is suggested by the official climate-sensitivity equation (1)? If so, I shall be happy to conclude the series in due course with an additional article summarizing the considerable evidence that the models have been constructed precisely to embody and to perpetuate each of the errors demonstrated here, though it will not be suggested that the creators or operators of the models have any idea that what they are doing is as erroneous as it will prove to be.

Next: How temperature feedbacks came to be exaggerated in official climatology.

References

Charney J (1979) Carbon Dioxide and Climate: A Scientific Assessment: Report of an Ad-Hoc Study Group on Carbon Dioxide and Climate, Climate Research Board, Assembly of Mathematical and Physical Sciences, National Research Council, Nat. Acad. Sci., Washington DC, July, pp. 22

Hansen J, Lacis A, Rind D, Russell G, Stone P, Fung I, Ruedy R, Lerner J (1984) Climate sensitivity: analysis of feedback mechanisms. Meteorol. Monographs 29:130–163

IPCC (1990-2013) Assessment Reports AR1-5 are available from www.ipcc.ch

Möller F (1963) On the influence of changes in CO2 concentration in air on the radiative balance of the Earth’s surface and on the climate. J. Geophys. Res. 68:3877-3886

Newell RE, Dopplick TG (1979) Questions concerning the possible influence of anthropogenic CO2 on atmospheric temperature. J. Appl. Meteor. 18:822-825

Myhre G, Highwood EJ, Shine KP, Stordal F (1998) New estimates of radiative forcing due to well-mixed greenhouse gases. Geophys. Res. Lett. 25(14):2715–2718

Roe G (2009) Feedbacks, timescales, and seeing red. Ann. Rev. Earth Planet. Sci. 37:93-115

Schlesinger ME (1985) Quantitative analysis of feedbacks in climate models simulations of CO2-induced warming. In: Physically-Based Modelling and Simulation of Climate and Climatic Change – Part II (Schlesinger ME, ed.), Kluwer Acad. Pubrs. Dordrecht, Netherlands, 1988, 653-735.

SORCE/TIM latest quarterly plot of total solar irradiance, 4 June 2016 to 26 August 2016. http://lasp.colorado.edu/data/sorce/total_solar_irradiance_plots/images/tim_level3_tsi_24hour_3month_640x480.png, accessed 3 September 2016

Vial J, Dufresne J, Bony S (2013) On the interpretation of inter-model spread in CMIP5 climate sensitivity estimates. Clim Dyn 41: 3339, doi:10.1007/s00382-013-1725-9

Jo Nova’s husband thinks the same: http://www.perthnow.com.au/news/opinion/miranda-devine-perth-electrical-engineers-discovery-will-change-climate-change-debate/news-story/d1fe0f22a737e8d67e75a5014d0519c6

So this kindergarten has been draining real money down the river since 1896:

A MATHEMATICAL discovery by Perth-based electrical engineer Dr David Evans may change everything about the climate debate, on the eve of the UN climate change conference in Paris next month.

A former climate modeller for the Government’s Australian Greenhouse Office, with six degrees in applied mathematics, Dr Evans has unpacked the architecture of the basic climate model which underpins all climate science.

He has found that, while the underlying physics of the model is correct, it had been applied incorrectly.

He has fixed two errors and the new corrected model finds the climate’s sensitivity to carbon dioxide (CO2) is much lower than was thought.

“Yes, CO2 has an effect, but it’s about a fifth or tenth of what the IPCC says it is. CO2 is not driving the climate; it caused less than 20 per cent of the global warming in the last few decades”.

Dr Evans says his discovery “ought to change the world”.

“But the political obstacles are massive,” he said.

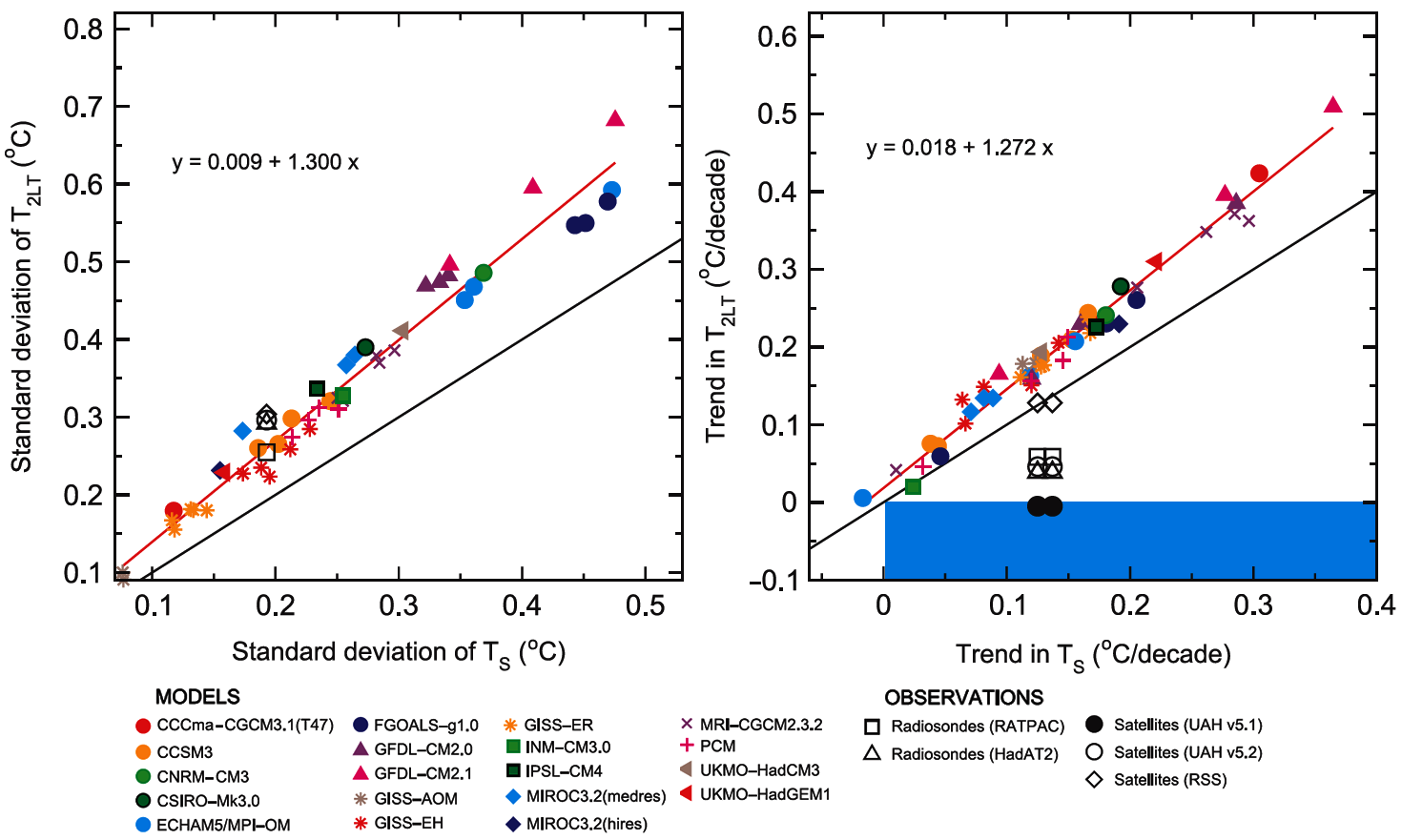

His discovery explains why none of the climate models used by the IPCC reflect the evidence of recorded temperatures. The models have failed to predict the pause in global warming which has been going on for 18 years and counting.

“The model architecture was wrong,” he says. “Carbon dioxide causes only minor warming. The climate is largely driven by factors outside our control.”

There is another problem with the original climate model, which has been around since 1896.

___________________________

Appalling. Sickening.

David Evans’ work is very interesting. He advances a new theory of climate in which separate sensitivity calculations are performed at the emission altitudes for cloud tops, CO2, water vapor, methane etc. He finds climate sensitivity to be low.

The present series, by contrast, offers no new theory. It merely points out certain influential discrepancies between mainstream climate science and mainstream science. Mainstream climate science turns out to be wrong.

“Yes, CO2 has an effect, but it’s about a fifth or tenth of what the IPCC says it is.”

IPCC says it’s 3.2 K. A fifth of that is 0.64 K. I believe Dr. Evans is correct. Lindzen and Spencer independently calculated the climate sensitivity not from models but from empirical satellite data. They got the same value of 0.6 K indicating strong negative feedback since the no feedback sensitivity is around 1 K as demonstrated here by Lord Monckton

None of this is how the Planck response was calculated. The real method fully accounts for nonuniform temperature, lapse rate and emissivity. MERRA and ERA-Interim give an observation-based Planck response of about -3.1 W m-2 K-1.

See Soden & Held (2006, http://dx.doi.org/10.1175/JCLI3799.1), Bony et al. (2006, http://dx.doi.org/10.1175/JCLI3819.1) and Dessler (2010, http://dx.doi.org/10.1175/JCLI-D-11-00640.s1).

Since the calculation is performed at the mean emission altitude, changes in lapse rate are irrelevant, since they are feedbacks and do not influence lambda-zero; emissivity at that altitude is as near unity as makes no difference; and the only significant non-uniformities in temperature are latitudinal, but they are insufficient to alter lambda-zero significantly. And Schlesinger did indeed determine lambda-zero as described, since when the models have followed, as Soden & Held’s list of values for lambda-zero makes clear.

“Since the calculation is performed at the mean emission altitude”

Your calculation does, but the one used for the IPCC result doesn’t. That calculation is done at all levels of the atmosphere as explained in the papers I cited.

Performing the calculation of lambda-zero at the mean emission altitude gives a result larger than performing it at any lesser altitude. Therefore, a calculation performed at all altitudes ought to yield a lesser value for lambda-zero than the emission-altitude value.

In any event, it is at the emission altitude that climate sensitivity should be determined. And the calculation at that altitude is uncomplicated.

_You_ calculated at an averaged mean emission altitude. The CMIP calculations are performed properly, see Section 2 in Soden & Held (2006, http://dx.doi.org/10.1175/JCLI3799.1 ). It’s less than 2 pages and it explains how the real technique is very different from what you’ve done here so anyone who’s interested in a way in which Planck feedback is commonly calculated should probably read it.

I think Soden & Held (2006) and many other papers explain very clearly why the full-physics result is different from using your simplified model and from the figures it’s pretty obvious. If you’re still struggling to grok it, I can point you to other examples.

I read Soden & Held (2006) in 2007 and have referred to it often since. It confirms the point I have been making: that the decision was made [originally in Schlesinger 1985] to model the reference sensitivity parameter incorrectly, using a mixture of top-of-atmosphere flux and surface temperature. Soden & Held’s Methodology section confirms that this is exactly what is done, and it is an error.

“It confirms the point I have been making: that the decision was made [originally in Schlesinger 1985] to model the reference sensitivity parameter incorrectly, using a mixture of top-of-atmosphere flux and surface temperature. Soden & Held’s Methodology section confirms that this is exactly what is done, and it is an error.”

From Soden & Held:

“The temperature feedback can be split further as lambda_T = lambda_0 + lambda_L, where lambda_0 assumes that the temperature change is uniform throughout the troposphere and lambda_L (i.e., lapse rate feedback) is the modification due to nonuniformity of the temperature change.”

The temperature change used in calculating the Planck feedback is “uniform [vertically] throughout the troposphere”. There is no change in lapse rate and whichever “emission altitude” you select, the temperature change there is identical to the surface. This addresses your issue and does better than your simplified calculations because it accounts for regional lapse rates and moisture profiles plus spectral variation in absorption.

I think your post needs to be corrected to state that you misinterpreted the way in which the Planck feedback was calculated and that all of your concerns are fully accounted for in the actual calculations. The correct result is close to the IPCC-reported 3.1 W m-2 K-1.

The furtively pseudonymous “Mie Scatter”, who has much to learn about climate sensitivity, and about the civilized manner of conducting an argument, for he hurls insults freely from behind that cowardly curtain, should read both the head posting and Soden & Held with rather more care.

Only a sentence or two before the sentence cited by “Mie Scatter”, Soden & Held make quite explicit the point I have made in the head posting: that the official value of lambda-zero, like that in Schlesinger (1985), is determined from emission-altitude flux and hard-deck surface temperature.

If “Mie Scatter” would only actually read Soden and Held, he would learn that the table of values of lambda-zero (listed as “Planck” among the feedbacks in the table) shows values in excess even of delta-T(surface) / [4 delta-F(emission)], when the correct use of the Stefan-Boltzmann equation is to relate temperature and flux at the same surface. It is for that reason, rather than because of supposed variations in emissivity, that the official value of lambda-zero is far too high.

in Soden and Held, they calculate lambda0 by translation of the temperature profile…

Two methods, same results.

“I read Soden & Held (2006) in 2007”

Maybe you should read it again ?

Don’t miss the last figure for your friend Willis…

Toncul is out of his depth. The value of lambda-zero in Soden and Held is said to be determined by reference to surface temperature and emission flux, and the list of values labeled “Planck” in the table of feedbacks in that paper are plainly calculated that way (albeit with small additional adjustments for other reasons). The method described in Soden & Held, which is described in detail but without any offered justification by Schlesinger (1985), is an erroneous method. Those unfamiliar with astrophysical equations such as the SB equation will naturally find it surprising that so basic an error can have been made and perpetuated, but that is the fact.

Monckton of Brenchley: you originally said that they included a change in the lapse rate when calculating lambda_0. Soden & Held explained “lambda_0 assumes that the temperature change is uniform throughout the troposphere” i.e. no change in the lapse rate.

Do you now agree that Soden & Held did not change the lapse rate in their calculation?

The correct method of determining the reference sensitivity parameter lambda-zero is to determine it as the first derivative of the fundamental equation of radiative transfer at the emission altitude, where incoming and outgoing fluxes are by definition equal, and then, having taken the product of that derivative and any forcing of interest, determine the corresponding temperature change at that altitude. Then, if the lapse-rate is to be held genuinely and undeniably constant at the pre-feedback stage, the change in temperature at the emission altitude is equal to the change in temperature at the surface.

However, doing the calculation as Schlesinger does, and as the models whose values of that parameter are listed in Soden & Held (2006) do, one is no longer retaining the uniform temperature change as between the emission altitude and the hard-deck surface, wherefore one is by implication altering the lapse-rate.

“However, doing the calculation as Schlesinger does, and as the models whose values of that parameter are listed in Soden & Held (2006) do”

Maybe Schlesinger did that in 1985. GCMs do not deal with λ₀. And while S&H call Planck sensitivity λ₀, they make no mention of “emission altitude” or any equivalent global mean concept. Again I ask you to point to any occurrence of such usage in S&H 2006. It isn’t there. They do not need it.

Monckton’s “fundamental equation” is an equation made “fundamental” by Monckton.

Mr Oldberg should check the references, whereupon he will see that the equation is the official equation. Also, it has been calibrated using CMIP3 and CMIP5 outputs. He is, as usual, out of his depth here.

“Official” is not the same as “fundamental” .

Yes, this is looking more and more like a straw man argument.

I have repeatedly requested a reference to where this is stated as being “the official equation” and I have not yet seen one.

Err, no, a power is not an exponential, it’s a power term “of course”.

The linearisation of S-B is reasonably accurate within small changes, however if you are going to question the accuracy of this you cannot do so by using “global average” temperatures since you cannot meaningfully take the average unless it’s linear, and you are maintaining that it is not. I’m sure the author has an appropriate Latin phrase for that fallacy.

The fundamental problem with climate models is that they are tuned to best fit the 1960-1990 period, which is not representative. This means that they do not fit the early 20th c. warming, do not produce the post-WWII cooling and do not reproduce the pause. This is why Karl et al decided to change the data to fit the modelled behaviour, so as to pretend all is well with the broken models.

As I pointed out in the last post there are published articles on the change in sensitivity in models as global temperature rises. This is probably a reflection of non-linearity of SB which is correctly used in the detail of the models.

While the intent of cataloguing all the errors and how they accumulate is a worthy one, I get the impression that CoB is out of his depth already and does not have the depth of knowledge of physics and maths to make sound arguments and conclusions.

In answer to Greg, References establishing that the official sensitivity equation is just that are on the slide illustrating the equation, whose elements are also sourced and described in the text.

A fourth-power relation is by definition an exponential relation.

The head posting has already pointed out that the error arising from the official assumption that lambda-zero is constant is small: indeed, a graph of the underlying equation shows a near-linear curve.

I have verified that latitudinal temperature and flux differences do not materially affect the global calculations shown in the head posting.

The extent of my knowledge of physics and math is not the issue. The issue is the physics and math shown in the head posting.

Monckton of Brenchley September 3, 2016 at 11:18 pm said, in error, as Greg points out:

“A fourth-power relation is by definition an exponential relation.”

Want to think about that? If x is a variable, e^x or any a^x is exponential. x^n is a power, a term of a polynomial. Both are non-linear (in general) – if I recall correctly!

I do not propose to quibble about semantics. Let us agree that a fourth-power relation is not a linear relation.

You were happy to quibble when you thought you were right, now it’s ‘semantics’. If you want to attack the IPCC ( which is merit worthy ) , don’t make it too easy for the warmists to shoot you down.

So why are you bitching about the IPCC “erroneously” assuming it’s linear? Yet it is fine when you do it. There seems to be a goose / gander issue here.

Greg is quibbling. I said the error arising from non-linearity was small but pointed to a larger error.

F(x) = x^n where the exponent, n, is held constant and the base, x, varies is a polynomial function.

F(x) = n^x where the exponent, x, varies, and the base n is a constant is an exponential function.

F(x, y) = x^y where both x and y vary is plotted as a surface and is called general exponentiation.

m^n where both m and n are constants is simply a constant.
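To make the distinction drawn above concrete, here is a minimal Python sketch comparing a fourth-power term with an exponential of base 4. The function names and the sample points are illustrative choices, not anything taken from the head posting.

```python
def power_law(x: float, n: int = 4) -> float:
    """Power (polynomial) term: the base x varies, the exponent n is fixed."""
    return x ** n


def exponential(x: float, base: float = 4.0) -> float:
    """Exponential term: the base is fixed, the exponent x varies."""
    return base ** x


# Both are non-linear, but they grow very differently as x increases.
for x in (1, 2, 4, 8, 16):
    print(f"x={x:2d}  x^4={power_law(x):>8}  4^x={exponential(x):>14.0f}")
```

The two happen to agree at x = 2 and x = 4, after which the exponential pulls away rapidly. That is the same gap that separates polynomial-time from exponential-time algorithms.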

Having taught mathematics and computer science since 1970, a BIG difference is taught in courses in the design & analysis of algorithms between polynomial-time algorithms and exponential-time algorithms. Obviously, for the sake of faster running algorithms, one usually prefers a polynomial-time designed algorithm over an exponential-time algorithm that does the same calculation. (Look up the P vs. NP problem which is still unsolved.)

Monckton of Brenchley: Greg is quibbling. I said the error arising from non-linearity was small but pointed to a larger error.

Nevertheless, Greg is correct. I’d be happier (fwiw) if you simply admitted a small error in nomenclature, apologized, and moved on.

What “error of nomenclature” is Mr Marler talking about? IPCC is cited in the head posting as stating that the reference sensitivity parameter is a “uniform” response when it is not in fact “uniform”. The head posting correctly states that the error makes little difference to the sensitivity calculation, but that it points to a larger error – the use in the models (see e.g. Soden & Held, 2006) of a mixture of surface temperature and emission-surface flux in determining that parameter.

From equation three, it is clear that the estimate rests on the assumed values 0.3 and 4. Nobody knows the accuracy of these assumptions. Based on such assumed values we are trying to establish a sensitivity factor. How accurate the result will be is therefore a big question mark.

Dr. S. Jeevananda Reddy

Equation 3 is not contentious. It shows the flux density at the Earth’s emission surface.

I would have thought that defining the emission flux density by using the incoming radiation is at least questionable.

The mean emission altitude is the mean altitude at which, by definition, incoming and outgoing fluxes are equal.

And if Greg considers IPCC’s use of net incoming radiation at the emission altitude as the basis for determining emission temperature to be incorrect, let him address his concern not to me but to the IPCC secretariat.

I have been working on global solar and net radiation issues since 1970. I did not question equation 3 but I questioned the constants — 0.3 and 4.

Dr. S. Jeevananda Reddy

Dr Reddy questions the appropriateness of assuming that the Earth’s albedo (or reflectance, i.e., the fraction of incoming radiation reflected harmlessly straight back into space) is 0.3. However, that is the value that most models assign to it. If Dr Reddy does not like that value, he must say why, and propose and justify a different value.

Dr Reddy also questions the fact that the ratio of the surface area of the disk that the Earth presents to solar radiation to that of the rotating sphere of identical radius is 1:4. However, the surface area of a disk is pi times the square of the radius, and the surface area of a sphere of identical radius is 4*pi times the square of the radius, from which the ratio 1:4 is self-evident.
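The two constants under discussion can be checked in a few lines. The sketch below assumes that Equation 3 has the usual form F = S(1 - albedo)/4. The value S = 1360 W/m2 is borrowed from the total solar irradiance figure quoted elsewhere in this thread and is an assumption for illustration, as are the function names.

```python
import math


def disk_to_sphere_ratio(radius_m: float) -> float:
    """Ratio of the area of the intercepting disk to the area of the sphere."""
    disk_area = math.pi * radius_m ** 2
    sphere_area = 4.0 * math.pi * radius_m ** 2
    return disk_area / sphere_area


def emission_flux(tsi_wm2: float, albedo: float = 0.3) -> float:
    """Mean absorbed solar flux density, assuming F = S * (1 - albedo) / 4."""
    return tsi_wm2 * (1.0 - albedo) / 4.0


print(disk_to_sphere_ratio(6.371e6))  # the radius cancels, so any value works
print(emission_flux(1360.0))          # assumed TSI of 1360 W/m2
```

With these assumed inputs the flux comes out at about 238 W/m2, the figure used later in the discussion of the emission altitude.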

I’m waiting on Anthony to publish a submission that directly addresses the question of why an albedo of 0.3 is too low.

I take issue here with your presentation. If you want to substitute incoming for outgoing you need to explain why. It is not the basic definition of outgoing flux. Now you have said why you are doing that, it makes more sense.

Don’t quibble.

Equation 3 uses an albedo estimate with one (1) significant figure (two at most implied from other discussions), and yet, the derived flux is given to six (6) significant figures! That is a basic error in the handling of calculations, not unlike what undergraduate students did routinely when slide rules were replaced by hand calculators. If albedo is a fundamental component of sensitivity estimation, then we really are limited in what we can say about the precision of intermediate and end calculations.
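The point about significant figures can be illustrated by asking how far the flux moves if the albedo is known only to one significant figure. This is a rough sketch: the form F = S(1 - albedo)/4, the value S = 1360 W/m2 and the half-width of 0.05 (the rounding interval implied by quoting 0.3) are all assumptions for illustration, not measured uncertainties.

```python
def emission_flux(tsi_wm2: float, albedo: float) -> float:
    """Mean absorbed solar flux density, assuming F = S * (1 - albedo) / 4."""
    return tsi_wm2 * (1.0 - albedo) / 4.0


def flux_range(tsi_wm2: float, albedo: float, albedo_err: float) -> tuple[float, float]:
    """Lowest and highest flux consistent with albedo +/- albedo_err."""
    low = emission_flux(tsi_wm2, albedo + albedo_err)   # brighter planet, less absorbed
    high = emission_flux(tsi_wm2, albedo - albedo_err)  # darker planet, more absorbed
    return low, high


low, high = flux_range(1360.0, 0.30, 0.05)
print(f"flux lies between {low:.1f} and {high:.1f} W/m2")
```

Under these assumptions the flux is bracketed only to within a few tens of W/m2, which is the commenter's point about quoting it to six figures.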

The output of the calculation in the head posting is a final sensitivity expressed to a single digit of precision. Intermediate calculations, as is usual, retain the available precisions.

But you are claiming to establish small and accumulating errors using grossly uncertain calculations.

Which calculations are “grossly uncertain”, and why does Greg consider them uncertain?

You say just above that you are only claiming single digit accuracy in key values, so any result cannot be more accurate than that. This can not provide a basis for showing “small errors”.

Don’t quibble. Small errors are those which affect sensitivity by some tenths of a degree – the precision to which the outcome is presented.

When I took Statics & Dynamics in college the use of the pocket calculator was first approved. The text and the answers in the back of the book all assumed the use of a slide rule. As a result, most of the answers that the students using pocket calculators got did not agree with the book. Since you are dealing with the difference in sin, tan or cos, cot of small differences in angles, and the calculator was taking these differences to eight or ten places, the result was a large difference in the answer, as much as an order of magnitude in some cases. Students would rework “wrong” answers several times and get frustrated. The professor then made answer sheets for each chapter for those using the calculator to solve the problems.

Excellent article.

Many thanks.

There is a paper ” Hug & Barrett vs IPCC ” , Oct 11, 2001 . In section 4, it reaches the same conclusion as you have. In that they state ” Mount Pinatubo reduced Earth’s temperature by 0.3 K and the estimated reduction in forcing of 2 w/m^2. It gives a sensitivity of 0.15 w/m^2. ”

All of the papers I’ve seen trace back to TSI being at 1368 – 1370… round up of course … Since 1368 – 1370 was shown to be in error from defective instrumentation, and the new TSI is 1360 – 1362, How did they get any of the numbers to Match?

They also estimate a lot, then mix in numbers that are significant in number, then claim they are accurate. From what I can see, the new TSI should have given them a 0.8 K rather than 1.2 K. That is a third.

A small difference in any of the incoming or outgoing estimates has a big impact on the final numbers. For example, it is only an assumption that the TSI varies no more than 0.12%, which is a reduction of 1.6 w/m^2. That is conveniently below the 2 w/m^2 that caused a reduction in temperature of 0.3 K . A mere additional 0.08 % change in TSI in either direction changes the final numbers substantially.

Based on the TSI from 1370 w/m^2 to 1360 w/m^2, would have in the math reduced the warming from co2 by 1.5 K with the sensitivity at 0.15 w/m^2. At the IPCC level at 0.67 w/m^2, it’s 4 times that.

Would the IPCC accept a mathematical reduction of 6.0 K ?

In a previous post, you already “demonstrated” (at least, this is what you thought…) that equilibrium climate sensitivity cannot be larger than 1.6 K. So why are you discussing such details now ? …

Your present post is stupid for two reasons.

– First, the reference warming is not used in climate sensitivity calculations, whatever from what. So whatever you find, your conclusion is wrong.

– Second, your calculation is wrong… For such a simple calculation using global means, a simple derivative shows that lambda_0 = T0/(4xF0). Your equation 9. Except that in this equation, you should have used the surface temperature TS (and in that case the effective emissivity is not 1 and equal to F0/sigma/TS^4) rather than T0. So you should have written : lambda_0 = TS/(4xF0). Think about it : the relationship you want to use necessarily relates a flux at TOP OF THE ATMOSPHERE (where the forcing is defined) and SURFACE temperature.

I shall not be drawn on what final sensitivity will be until the present series is complete.
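The two competing expressions in this exchange, T0/(4 F0) and TS/(4 F0), can be compared numerically. The sketch below uses round values quoted elsewhere in the thread (255 K, 288 K, 240 W/m2) and the forcing of 3.708 W/m2 from the head posting. The function name is hypothetical, and the sketch takes no side on which temperature is the right one to use.

```python
def planck_parameter(temperature_k: float, flux_wm2: float) -> float:
    """First-order sensitivity parameter dT/dF = T / (4 F), in K per W/m2.

    Follows from differentiating F = eps * sigma * T**4 at fixed eps.
    """
    return temperature_k / (4.0 * flux_wm2)


FORCING_WM2 = 3.708  # doubled-CO2 forcing quoted in the head posting

for label, temp_k in (("emission temperature", 255.0), ("surface temperature", 288.0)):
    lam = planck_parameter(temp_k, 240.0)
    print(f"{label}: lambda_0 = {lam:.4f} K/(W/m2), dT0 = {lam * FORCING_WM2:.2f} K")
```

With these inputs the emission-temperature form gives roughly 0.27 K per W/m2 and the surface-temperature form 0.30, so the choice of temperature shifts the reference warming by a little over a tenth of a kelvin.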

The reference sensitivity is of course used in climate-sensitivity calculations. See e.g. AR3, ch. 6.1, for a discussion. Feedbacks are quantified as forcings denominated in Watts per square meter of the reference warming.

And, as explained in the head posting, the SB equation must be applied to a single surface only. It is an erroneous use of the equation to do as Schlesinger did and attempt to relate surface temperature and emission-altitude flux via the SB equation.

The correct procedure is to determine the temperature change from the flux change at the emission altitude. Subject only to lapse-rate feedback, the emission-altitude temperature change and the surface temperature change will be approximately equal.

Your reference to IPCC looks wrong, but because you speak about AR3 : just tell me on which page of the AR3 report deltaT0 is used to get ECS… In fact, I think it is done somewhere in an IPCC report, BUT it doesn’t mean that you necessarily need deltaT0 to get the sensitivity. The “reference sensitivity” (deltaT0, or related parameter lambda0) is used for a feedback decomposition. Then, if you can decompose the response you can also recompose the response, and get the sensitivity. But you can also DIRECTLY get the sensitivity without doing such decomposition.

As an example, let’s take … your OWN calculation! Here :

https://wattsupwiththat.com/2016/08/03/ipcc-has-at-least-doubled-true-climate-sensitivity-a-demonstration/

You did NOT use lambda0 or deltaT0 to get the final 1.6 K estimate (which was wrong for other reasons : first, it was not an ECS calculation but a TCR). And I already detailed the calculation to show you that this is the case. Do I show it again ?

About the second point :

The correct equation is not an exact application of SB, and relates a flux at top of the atmosphere and a surface temperature change, so at different levels. Of course, you have no problem with that, because the equation that appears in all your posts and that you have used to get the 1.6 K value (not correctly) relates a flux at top of the atmosphere (deltaF0) and a surface temperature change (deltaTeq), so at different levels. Note that you now remove the “eq” of “deltaTeq” to hide the fact that this temperature change is necessarily an equilibrium one (see previous discussions).

In addition, applying the equation with the emission temperature is stupid (if done correctly) : I explain why.

In equilibrium F0 is 240 Wm-2 and the emission temperature is 255 K (or about 33 K smaller than the mean surface temperature).

If CO2 is added, albedo unchanged, and equilibrium reached, then F0 is .. 240 Wm-2 and the emission temperature is … UNCHANGED (with no lapse rate change, temperature change at a given altitude is the surface change, yes, BUT the emission altitude increases).

Toncul misunderstands atmospheric dynamics. At the start of a sensitivity calculation, emission flux is 238 W/m2 and emission temperature is 255 K. After a forcing and before feedbacks, the flux and temperature have increased to 241 and 256, at that altitude. And after feedbacks the flux and temperature at that altitude have increased again. Via the broadly invariant lapse rate, and subject only to the lapse-rate feedback, the surface temperature will rise by about the same amount as the temperature at the altitude that was, at the start of the calculation, the emission altitude, and the new emission altitude will be higher.

And it does not matter by what methods the models reach their exaggerations of climate sensitivity. Eq. 1, when informed with the forcing and feedback values officially deduced by the models, reproduces the climate sensitivities reported by or deduced from the models. But once corrections are made to allow for the errors in the official position, far lower sensitivities emerge, demonstrating that in some fashion the models indeed embody the errors.

Indeed. At any equilibrium state of the climate, overall thermal energy is constant so energy-in equals energy-out. Consider two equilibrium states. If albedo is unchanged between the two states then energy-in will be the same. So energy-out must also be the same. But the temperature of the emission altitude is pretty much defined by energy-out via Stefan-Boltzmann. Hence this must also be the same.

First, there is nothing about atmospheric dynamics, here…

Then, whatever you can say : the emission temperature in equilibrium will still be 255 K if albedo is unchanged. Because the emission temperature doesn’t care about what Mr Monckton of Brenchley thinks. The emission temperature cares about the energy emitted, which has to be 240 Wm-2 in equilibrium whatever you do to the climate system, if albedo is unchanged.

From your reasoning, the emission temperature change is the same as the surface change.

So if we follow your reasoning the surface temperature change in equilibrium should be 0, whatever the forcing…

deltaT0 is a value obtained from simple calculations, or from climate models (all calculations agree with roughly 1 degree of warming for CO2 doubling). Whatever the method you use to get deltaT0, even by using the wrong method of Monckton of Brenchley, it would not change the response of a climate model to CO2. And it would also not change your stupid 1.6 K calculation in your first post. This is so easy to understand. So easy.

(my comment above is for MR Monckton of Brenchley).

Toncul persists in not understanding the dynamics of the atmosphere under perturbation by a forcing. Before the forcing, in a presumed pre-existing steady state, the mean emission altitude is, say, 5.3 km. At that altitude, where by definition incoming and outgoing fluxes of radiation are equal, the net incoming solar radiation is known to be about 238 W/m2, and the measured emissivity is at or very close to unity. From the SB equation, temperature at that altitude is 254.5 K or thereby.

Now, add a forcing of 3.7 W/m2. The flux at the emission altitude at 5.3 km increases from a little over 238 to a little under 242 W/m2, and temperature at that altitude rises by about 1 K to around 255.5 K.
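The arithmetic in the two paragraphs above can be reproduced with the Stefan-Boltzmann equation. This is a minimal sketch assuming unit emissivity, as stated in the comment, and the CODATA value of the Stefan-Boltzmann constant. The function name is illustrative.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def sb_temperature(flux_wm2: float, emissivity: float = 1.0) -> float:
    """Temperature (K) of a surface emitting flux_wm2 under F = eps * sigma * T**4."""
    return (flux_wm2 / (emissivity * SIGMA)) ** 0.25


t_before = sb_temperature(238.0)        # pre-forcing flux at the emission altitude
t_after = sb_temperature(238.0 + 3.7)   # same altitude after a 3.7 W/m2 forcing

print(f"before: {t_before:.1f} K, after: {t_after:.1f} K, rise: {t_after - t_before:.2f} K")
```

The sketch gives about 254.5 K before the forcing and a rise of just under 1 K, matching the figures quoted in the comment.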

Since this temperature is greater by about 1 K than the emission surface, the old emission surface is no longer the emission surface. Instead, the new emission surface is around 150 m higher than before.

In the other direction, assuming no variation in the lapse-rate (for, if there were one, it would count as a feedback), the Earth’s surface and all altitudes in between warm by about the same amount as the old emission surface – i.e., 1 K.

Of course, the IPCC assumes – contrary to a growing body of evidence – that the increase in water vapor in the tropical mid-troposphere will reduce the mean lapse-rate somewhat. But the story of the glaringly missing tropical mid-troposphere hot-spot is another story, and not for today.

Finally, it does not matter by what method the models incorporate any or all of the errors that I am describing in this series. For I began by carefully and successfully calibrating the official climate-sensitivity equation against the models’ output, and I showed that it does fairly represent the climate sensitivity interval that they predict.

Since correction of the errors modifies the form of the equation and alters the values of its independent variables, the consequence of the corrections is that climate sensitivity will fall. In that event, a discrepancy will have arisen between the results of the modified official equation and the results from the models. If readers become convinced that most or all of the errors I shall be identifying in this series are indeed errors, then honest modelers would want to modify their models to make the necessary corrections. If, on the other hand, I am wrong, no corrections will be necessary.

Meanwhile, the world continues to warm at a rate considerably below what IPCC had predicted in 1990, forcing IPCC itself almost to halve its original projections of medium-term global warming (while keeping its job by leaving the longer-term predictions unadjusted).

“the surface temperature will rise by about the same amount as the temperature at the altitude that was”

Really? If the surface is absorbing and radiating an extra 3.7W/m2 its temperature would rise from 288K to 288.68K.
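The 288.68 K figure can be checked directly. The sketch assumes, as the comment implicitly does, that the surface radiates as a blackbody (emissivity 1) and that the whole 3.7 W/m2 is added to the surface emission. The names are illustrative.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def flux_from_temperature(temp_k: float) -> float:
    """Blackbody flux density (W/m2) at temperature temp_k."""
    return SIGMA * temp_k ** 4


def temperature_from_flux(flux_wm2: float) -> float:
    """Blackbody temperature (K) for a given emitted flux density."""
    return (flux_wm2 / SIGMA) ** 0.25


surface_flux = flux_from_temperature(288.0)          # about 390 W/m2
warmed = temperature_from_flux(surface_flux + 3.7)   # add the forcing at the surface

print(f"surface flux: {surface_flux:.1f} W/m2, new temperature: {warmed:.2f} K")
```

Because the surface emits about 390 W/m2 rather than 238 W/m2, the same 3.7 W/m2 produces a smaller temperature change there (about 0.68 K) than at the emission altitude (about 1 K).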

Mr Monckton of Brenchley,

Writing long (unclear) comments and partly changing the subject doesn’t make you right…

What I said above was clear. And what Ian H said was clear too.

In equilibrium and with no albedo change, it is self evident that the emission temperature is unchanged.

The effective emission temperature is (Flw/sigma)^0.25 where Flw is outgoing terrestrial radiation at top of the atmosphere (roughly 240 Wm-2) and sigma is the SB constant.

If you add a forcing (let’s say 4 Wm-2) then the emission temperature is reduced to (Flw/sigma)^0.25 with Flw = 240 - 4 = 236 Wm-2, which is self-evident too.

Because the system emits less than it gets, it warms up until the emission temperature is back to 255 K (if albedo is unchanged), by roughly 1 K everywhere (if there are no feedbacks). And you agree with that :

you correctly say that the new altitude of the emission temperature is about 150 m higher once the system has warmed by 1 K.

For a temperature gradient of 6.5 K / km, and if temperature increases by 1 K everywhere, you need to go 1000/6.5 ≈ 150 m higher to find the altitude at which temperature is equal to 255 K.
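The sequence described in this comment (emission temperature dips when the forcing is applied, then recovers as the column warms and the emission level rises) can be put in numbers. The sketch assumes the commenter's round values of 240 W/m2, a 4 W/m2 forcing and a 6.5 K/km lapse rate. The function names are illustrative.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emission_temperature(olr_wm2: float) -> float:
    """Effective emission temperature (K) from outgoing longwave flux."""
    return (olr_wm2 / SIGMA) ** 0.25


def altitude_rise_m(warming_k: float, lapse_rate_k_per_km: float = 6.5) -> float:
    """Height gain (m) needed to recover the old temperature after uniform warming."""
    return 1000.0 * warming_k / lapse_rate_k_per_km


t_equilibrium = emission_temperature(240.0)      # before forcing, and again at the new equilibrium
t_forced = emission_temperature(240.0 - 4.0)     # immediately after the forcing is applied

print(f"equilibrium: {t_equilibrium:.1f} K, just after forcing: {t_forced:.1f} K")
print(f"emission level rises by about {altitude_rise_m(1.0):.0f} m per kelvin of warming")
```

The result is consistent with both sides of the exchange: the emission temperature at equilibrium is unchanged, while the altitude at which it is found moves up by roughly 150 m for 1 K of warming.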

So you agree that the emission temperature is unchanged in equilibrium (if albedo is unchanged). And you are right to agree with me. Because saying the opposite would be deeply stupid.

Toncul, who is grievously out of his depth both in science and in the manner of conducting a discussion, continues to assert the obvious, that the emission temperature remains the same unless insolation or albedo changes. However, the altitude at which the emission temperature occurs rises as the atmosphere warms, as carefully explained in a previous comment by me. Toncul appears unfamiliar with the concept of an emission altitude that is not fixed. If so, let him address his concerns to the IPCC secretariat, not to me.

In equilibrium, which is self-evident. I also said that I agree with you that the emission level rises by 150 m, and you tell me that I don’t get this particular point… Do you have schizophrenia ?

Don’t be childish. Now that you have conceded that the altitude of the emission surface rises with warming, you have also conceded that at the former emission surface the temperature will have risen, whereas previously you were trying to dismiss my argument in the head posting on the ground that there would be no such rise. You are out of your depth here, and would do well to leave the discussion to others better qualified to participate and more willing to debate intelligently and politely.

I conceded nothing, given that I never said the opposite…

And, as I already explained (do you read my comments before answering?), this is in agreement with the fact that the emission temperature remains 255 K in equilibrium, if albedo is unchanged (which is self-evident per definition of the emission temperature…).

Toncul now claims that he did not in fact suggest that since emission temperature always remains the same I had been in effect suggesting that the change in surface temperature must be zero. Since he now understands that, though the emission temperature always remains the same, the altitude at which that temperature obtains rises with warming, he should now understand that I was not in effect suggesting that the change in surface temperature must be zero.

Why use algebra and mind boggling math when a crystal ball and random shots at a dart board seem to suffice ?

Do I need the sarc tag .

Robert from Oz displays a formidable knowledge of the methods by which the modelers actually make their predictions. Expelliarmus!

Thank you my lord .

“It took me years to figure this out, but finally there is a potential resolution between the insistence of the climate scientists that CO2 is a big problem, and the empirical evidence that it doesn’t have nearly as much effect as they say.”

Dr Evans is an expert in Fourier analysis

– and leaves taxpayers with trillions of $$ to spend for math high priests controverting about non existing ‘Catastrophic Anthropogenic Global Warming’.

Dr. S. Jeevananda Reddy: “From the equation three, it is clear that the estimate…”

Are you referring to Monckton’s estimate, or the IPCC’s? Monckton’s blog post ignores how the IPCC calculations were done and his claimed “inaccuracies” are all accounted for in those calculations. Bony et al. (2006) Appendix B gives a summary.

The simple equations in the blog post are mainly used to demonstrate a principle or for teaching in introductory textbooks, see for example Ambaum’s Thermal Physics of the Atmosphere. Atmospheric physicists understand that these are simplified expressions from which you can’t directly calculate the true global Planck response.

The IPCC nowhere explains how lambda-zero is calculated, and it refused to do so when requested. In fact, it lifts its estimate of lambda-zero from the models. And the models incorporate the error made by Schlesinger.

You really think that lambda_0 is used in climate models ? Are you such stupid ?

As I explain above, your calculation makes no sense at all.

Values of lambda-zero in the models are listed in e.g. Soden and Held, 2006, and are discussed extensively in the literature, as indicated by the references in the head posting.

If the models did not represent the fundamental equation of radiative transfer, they would not work at all.

If Your Posterior does not like this he should address his concerns to the modellers.

Yes, models represent the fundamental equation of radiative transfer and we can get the value of lambda_0 from that. Of course they do not use lambda_0, because they are much more complicated than the simple equation shown in your Figure 1…

Calculation from climate models agree with simple calculations from global means, such as the calculations you say are wrong but are correct, but they disagree with your calculation, that you say is correct but is wrong :).

The models embody various errors, including the error by which they inflate the value of lambda-zero by determining it from flux at one altitude and temperature at another.

Are you completely dumb ???

First there is no error.

Second, even if there were an error in the lambda_0 calculation, it would not be an error embodied by climate models, but just an error in the lambda_0 calculation… given that this value is not used in climate models. It is just calculated by using climate models.

Your Posterior (for that, I think, is what “ton cul” means in French) continues to be discourteous in this thread. Whether Your Rearness likes it or not, the official equation was calibrated against the models’ output and duly reproduced their stated climate-sensitivity intervals with some precision, both for CMIP3 and for CMIP5.

The models are naturally constructed so as to be able to take account of forcings and then of the additional forcings that are temperature feedbacks. They take account of feedbacks by starting with the direct or reference temperature change in response to the initial forcing and then making appropriate adjustments throughout the atmospheric column at all latitudes to represent the additional forcing, denominated in Watts per square meter per Kelvin of the original forcing, that is the temperature feedback.

The values of the individual temperature feedbacks, model by model, and of the reference sensitivity parameter, model by model, are published. See e.g. Soden & Held (2006); Vial et al. (2013).

It matters not whether the values that are listed are derived ex post facto: what matters is that these are the official values derived from the models, and, if these values are wrong, then either the official derivation is wrong or the models are wrong. Either way, it becomes quite impossible to sustain the case for a high climate sensitivity, as will become all too apparent once this series is concluded.

Don’t you just love the classic alarmist activist ‘normal co-worker/peer friendly’ conversational mode?

Frequent direct yet obviously and absurdly incorrect ad hominems.

Complicated obtuse meandering sentences.

Stuck on willful reading miscomprehensions.

Circular logic confusing readers.

…

On and on; yapping junk yard dogs throw in willy nilly any quibble, nit pick, observations or definition denial possible, as if their attempts at effecting small perturbations of the Questions/Answers have any effect on reality.

One would think that they would take up the challenge and produce an equally illustrative and descriptive article of their own. Clearly describing both formula applications and history while providing citations and scientific extracts regarding their insistence of calculations and feedbacks.

Only that would mean taking and publishing a definitive stand on their use of relative formulas; open for explicitly detailed discussions.

Great Article, Lord Monckton!

Monckton of Brenchley: Vial et al. (2013) point to Soden & Held (2006). If you want to know how the CMIP5 values were calculated then read the Soden & Held (2006) methods section. They explain how lambda_0 is calculated using proper radiative kernels.

Yes, this is more complex than your blog post, but it’s necessary to account for how Earth’s atmosphere is not completely uniform and how infrared absorption depends on wavelength. Accounting for everything, the Planck response works out at about 3.1 W m-2 K-1 as reported by the IPCC.

The method by which lambda-zero is represented in the models is well explained in Soden & Held (2006), and it is an erroneous method. It embodies the error made by Schlesinger (1985) in pretending that one could satisfactorily obtain reference sensitivity by using emission-surface flux and surface temperature. One cannot do that and obtain a correct result. Instead, one should determine the temperature change from the flux change at the emission altitude and then, via the lapse rate, adjust surface temperature commensurately. Changes to the lapse rate are feedbacks and not part of the forcings.

Monckton of Brenchley: that’s not how they did it, I think you need to read Soden & Held’s methods section.

To check whether you understood the first part: let’s say that for one wavelength band we have a well-defined emission altitude. For a change in surface temperature of 1 K in Soden & Held’s method, what is the change in temperature at this emission altitude in their calculation?

My point in the head posting is a simple one, and, as Soden and Held demonstrate, a correct one. The official methodology determines lambda-zero as – to first order – the ratio of surface temperature to four times emission-surface flux. That is an unacceptable abuse of the fundamental equation of radiative transfer.

” The official methodology determines lambda-zero as – to first order “

That is completely misleading. “To first order”?? They calculate the derivative of λ₀ with respect to T₀. But then they multiply by the derivative of T₀ wrt T_S. That is just proper chain rule, with full accuracy. Nothing “first order” about it. And it has nothing to do with “the fundamental equation of radiative transfer”.

Monckton of Brenchley: “My point in the head posting is a simple one, and, as Soden and Held demonstrate, a correct one. The official methodology determines lambda-zero as – to first order – the ratio of surface temperature to four times emission-surface flux. That is an unacceptable abuse of the fundamental equation of radiative transfer.”

It’s now clear that you’ve completely misunderstood what Soden & Held did. Could you explain, for a single atmospheric column, how you _think_ they calculated the change in top-of-atmosphere flux? This should make it easier to work out precisely where you’re going wrong.

MieScatter appears so shocked at the very notion that the modelers could have made a basic error that he persists in misunderstanding not only what they are saying but also what I am saying. The point is very simple. Lambda-zero is determined in Soden and Held on the basis of surface temperature and not (as it should be, if surface temperature is used) surface flux, but on the basis of emission-altitude flux, 5 km above ground level. The basis on which it is determined in the kernels is thus defective a priori, and leads to a considerable and unwarrantable overstatement of climate sensitivity.

“but on the basis of emission-altitude flux, 5 km above ground level”

This is bizarre. I have been posting excerpts from Soden and Held, which explain just how it is done, and it is nothing like this. Lord M offers nothing to support his claims. But the matter is easily tested. Nowhere in S&H is there any mention of emission altitude. I invite Lord M to find a single instance.

Monckton of Brenchley: Soden & Held calculate the change in top-of-atmosphere flux given a 1 C global average change in temperature at 5 km altitude (and every other altitude within the troposphere). Don’t you agree?

MieScatter — I refer to those values given along with equation 3 only — 0.3 & 4.

Dr. S. Jeevananda Reddy

Remember to multiply it by the margin of error of the equipment used too. Most of it is like 0.15°C up or down as drag and +- 0.05°C as spec. Nobody runs around verifying the calibration of these sensors either.

Climate sensitivities are emergent properties of models found by analysing model behaviour. Where do you get this idea that models “adopt” a value of λ0 from anywhere?

The IPCC value is a summary of the way models behave; it is not a value that the IPCC provides for modellers to use.

Models are built up from basic physics ( plus some gross and likely wrong “parameters” for the key processes of climate for which they do not have “basic physics” ). The CO2 forcing is an input, the sensitivity is an “output”.

I say output in quotes because it is in fact largely determined by tweaking the poorly constrained input parameters, which gives the modellers a large margin to produce whatever sensitivity they wish.

This is clearly stated in Hansen 2005.

The values of lambda-zero adopted in the models are listed, CMIP3 model by model, in Soden & Held (2006).

The purpose of that paper, and of Vial et al. (2013) for the CMIP5 ensemble, is to present the various forcings and feedbacks that “emerge” from the models.

It will be evident by the time this series is concluded that the models are embodying substantial errors, or their output values for climate sensitivity would not be as high as they are.

“The values of lambda-zero adopted in the models are listed, CMIP3 model by model, in Soden & Held (2006).”

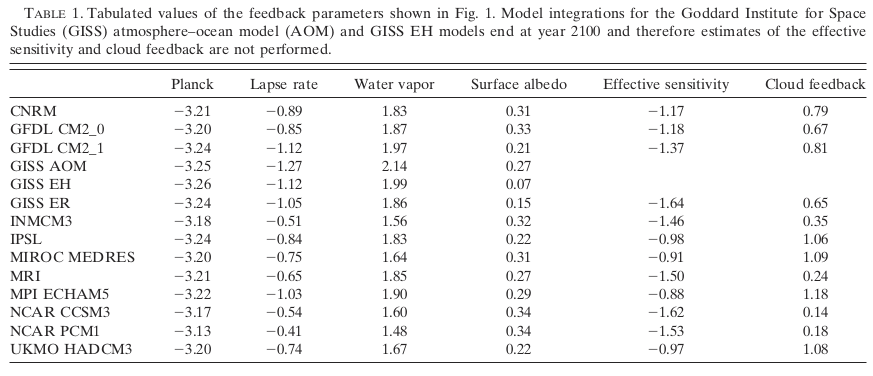

Greg is right. I have shown S&H Table 1 below. The attached text (et seq) describes how they are derived from the models. They are not “adopted in the models”. They are emergent properties, as Greg says.

It matters not whether the values of lambda-zero are determined ex-post-facto or applied ab initio. If the values of lambda-zero that “emerge” from the models are excessive, then either the official method by which they were extracted is wrong or the models are wrong, or perhaps both.

Monckton of Brenchley: It matters not whether the values of lambda-zero are determined ex-post-facto or applied ab initio. If the values of lambda-zero that “emerge” from the models are excessive, then either the official method by which they were extracted is wrong or the models are wrong, or perhaps both.

That may be true (I don’t disagree), but your use of the word “adopted” has been misleading. Possibly the modelers “tune” the parameters so that the value of sensitivity they want “emerges” from the model, but I don’t think that can be convincingly shown (I am always alert to the possibility of being corrected after writing something like that.)

I am disinclined to quibble about semantics. Non notatio, sed notio.

https://judithcurry.com/2015/02/06/on-determination-of-tropical-feedbacks/

If you want to know how the mainstream modelling community rig the parameters to get high CO2 sensitivity, read my article detailing how they downgraded the parametrised volcanic forcing (thus increasing the sensitivity) in order to reconcile model output with the 1960-1990 climate record.

This is one of an infinite choice of values and leads to a similarly high CO2 sensitivity.

The GISS team under Hansen had deliberately abandoned physics-based empirical values in favour of reconciling model output (without downgrading CO2 sensitivity).

The article is fully referenced and shows how Lacis et al. probably were a lot closer in 1990 than the ‘convenient’ values later adopted.

Again read Hansen et al 2005. It is quite open about the scope for producing whatever results you like and explains a lot of detail. This is a clear and rigorous paper explaining how it all works.

If you read Roe (2009) you will see that lambda_0 is by definition a constant, since it is the constant of proportionality for an ideal system (which can be whatever you choose) between a change in the input (here the flux) and the output (here the temperature) before any feedback occurs. If you change the reference temperature of your system without feedback then lambda_0 will change, but it will still be a constant for the rest of the calculation. Furthermore, as Roe stated in 2009, the equation is wrong since it assumes a linear system, when clearly the climate is nonlinear since at the very least the Stefan-Boltzmann equation is nonlinear. Roe goes on to present the next-order equation that takes into account nonlinearities.

Fundamentally this discussion is thus flawed, since we are putting numbers into an equation that we know is wrong. It is, however, a good starting point to talk about feedbacks and their effects.

Furthermore, computer climate models do not work by calculating feedbacks and then working out the temperature. Rather, they simulate a model climate and then derive the temperature and feedbacks from that. As far as I can tell, using this “official equation” is a simple way to compare models, since you can derive the feedbacks and then see what changes in each model.

Geronimo is broadly correct. However, as the head posting points out, the non-linearity arising from the fourth-power SB equation is small.
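For readers who want to check the size of the Stefan-Boltzmann non-linearity for themselves, here is a minimal sketch. It compares the first-order (linear) response with the exact fourth-power response for the forcing ΔF0 = 3.708 W m–2 quoted in the head posting. The reference temperature of 255 K and the function names are assumptions chosen purely for illustration, and the blackbody value of lambda-zero that results is not the official 3.2–1 K W–1 m2.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def linear_response(t_ref, delta_f):
    """First-order warming: delta_f times lambda_0 = 1 / (4 * sigma * T^3)."""
    lambda_0 = 1.0 / (4.0 * SIGMA * t_ref ** 3)
    return lambda_0 * delta_f


def exact_response(t_ref, delta_f):
    """Warming from inverting the full fourth-power law at the new flux."""
    flux_ref = SIGMA * t_ref ** 4
    return ((flux_ref + delta_f) / SIGMA) ** 0.25 - t_ref


T_REF = 255.0    # K, ASSUMED illustrative blackbody emission temperature
DELTA_F = 3.708  # W m^-2, forcing value quoted in the head posting

linear = linear_response(T_REF, DELTA_F)
exact = exact_response(T_REF, DELTA_F)
relative_gap = (linear - exact) / exact

print(f"linear: {linear:.4f} K, exact: {exact:.4f} K, gap: {relative_gap:.2%}")
```

Under these assumptions the linear estimate is slightly larger than the exact one, and the gap is well under one per cent of the response.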

Though the current generation of models do not use feedback values as inputs, feedback values are deducible from their outputs, which is how I was able to calibrate the official equation successfully against the models.
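As a rough illustration of how a feedback factor can be backed out of an output, the sketch below inverts the system gain relation G = (1 – f)–1 from the head posting, taking final sensitivity as reference sensitivity times G. It uses ΔT0 = 1.159 K and the bounds of the [1.5, 4.5] K interval, both from the head posting. The function names are hypothetical, and this is not claimed to be the calibration procedure actually used in Part I.

```python
def feedback_factor(delta_t_final, delta_t_ref):
    """Invert delta_t_final = delta_t_ref / (1 - f) to recover f."""
    return 1.0 - delta_t_ref / delta_t_final


def system_gain(f):
    """System gain G = 1 / (1 - f), valid for f < 1."""
    return 1.0 / (1.0 - f)


DELTA_T_REF = 1.159  # K, reference sensitivity from the head posting

# Bounds of the [1.5, 4.5] K final-sensitivity interval from the head posting.
f_low = feedback_factor(1.5, DELTA_T_REF)
f_high = feedback_factor(4.5, DELTA_T_REF)

print(f"f for 1.5 K: {f_low:.3f}, gain {system_gain(f_low):.3f}")
print(f"f for 4.5 K: {f_high:.3f}, gain {system_gain(f_high):.3f}")
```

Because G is a rectangular hyperbola in f, equal steps in f near the upper bound move the final sensitivity far more than equal steps near the lower bound.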

What I am demonstrating in this series is that the processes inbuilt into the models, reflected in the official sensitivity equation, are leading to outputs inconsistent with the underlying physics.

Based on this standard diagram

http://www.ipcc-wg1.unibe.ch/publications/wg1-ar4/faq/fig/FAQ-1.1_Fig-1.png

The absorptivity of IR radiation by the atmosphere is 350/390 = 0.90, which I think is significantly different than 1.0.

Radiation toward the surface is 324 W/m2 indicating a sky temperature of 9.13°C (48.43°F).

Radiation from the atmosphere away from the planet is (235-40) W/m2 indicating an effective sky temperature of -24.54°C (-12.17°F).

Radiation from the planet system is 235 W/m2 due to an albedo of 31.3%, as shown in the diagram. Assuming an emissivity of 1.0 that radiation suggests an effective blackbody temperature of -19.42°C (-2.96°F).
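The three temperatures above can be reproduced, to within rounding, by inverting the Stefan-Boltzmann equation. The sketch below is only a check on the arithmetic. It assumes that the two sky temperatures were computed with the rounded emissivity of 0.90 and the planetary value with an emissivity of 1.0, as stated; the function name and the use of the CODATA value for the constant are my own choices.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN_OFFSET = 273.15


def emission_temperature_c(flux, emissivity=1.0):
    """Temperature in deg C of a grey body emitting `flux` W/m^2."""
    return (flux / (emissivity * SIGMA)) ** 0.25 - KELVIN_OFFSET


absorptivity = 350.0 / 390.0  # about 0.897, rounded to 0.90 in the comment
ASSUMED_EMISSIVITY = 0.90     # ASSUMPTION: the rounded value was the one used

sky_down_c = emission_temperature_c(324.0, ASSUMED_EMISSIVITY)
sky_up_c = emission_temperature_c(235.0 - 40.0, ASSUMED_EMISSIVITY)
planet_c = emission_temperature_c(235.0)  # emissivity 1.0, as stated

print(f"absorptivity: {absorptivity:.3f}")
print(f"downward sky temperature: {sky_down_c:.2f} C")
print(f"upward sky temperature:   {sky_up_c:.2f} C")
print(f"planetary blackbody:      {planet_c:.2f} C")
```

The results are sensitive to the emissivity chosen: with an emissivity of 1.0 the 324 W/m2 downward flux corresponds to a temperature several degrees lower.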

Basically, for any of the equations to make any sense, the 40 W/m2 from the surface, thru the atmospheric window, must be accounted for separately. That gives the non-unity emissivity that must be divided into the atmosphere’s radiation numbers to obtain a more accurate effective emission temperature. Because of the lower temperature and pressure at increased altitude, the atmospheric emissivity will be significantly lower than the value computed above.

Also, since the upward and downward effective emission temperatures are significantly different (9°C vs -24°C) that completely invalidates some of the arguments made above.

Unfortunately, the IPCC has decided not to provide the derivations for any of these “magic” equations and, therefore, has proven beyond all doubt that … (I don’t want to get too negative).

It is evident from the diagram that, for a surface temperature of 288 K, the corresponding radiative flux density is 390 watts per square meter, implying emissivity at the surface is 1.

It is also evident from the diagram that at the emission altitude the flux density is much as given in the head posting at eq. (3), from which it follows that at that altitude emissivity is also at or close to 1.

The reason why one should not mix two distinct surfaces when applying the SB equation, as Mr Clemenzi does here, is given in the head posting.

Talking of atmospheric energy flowcharts….. There was a discussion here recently, as to whether downwelling longwave radiation (DLR) could warm the oceans (because LWR can only penetrate the top ten microns). So I thought I would create an energy flowchart that did not include DLR warming of the oceans. And contrary to the suggestion that oceans would freeze if DLR was not absorbed by them, the flowchart is balanced. (Not sure if it is entirely logical and feasible, of course…)

The major difference in this flow diagram is the double-headed red arrow, which represents DLR from the troposphere hitting the ocean, not being absorbed, and being reradiated back up again. So this is an energy flux that is bouncing around in the atmosphere and not doing very much at all, in terms of surface heating (although it can heat the atmosphere, the land-surface, and provide the latent heat of vaporisation for the top micron of the water surface). Which would mean that oceanic warming is dependent on incident SW insolation.

The left purple upflow is ULR resulting from SW absorption by sea, and DLR-SW absorption by land.

The right blue downflow is DLR from the atmosphere being absorbed by land.

The small green-turquoise arrows represent thermic and latent heat radiation. I have split them into two, to represent flows from direct SW oceanic heating (green) and from SW-DLR heating of land surfaces (turquoise).

“The major difference in this flow diagram is the double-headed red arrow, which represents DLR from the troposphere hitting the ocean, not being absorbed, and being reradiated back up again.”

It isn’t actually a major difference, which is why your plot still balances. The issue about DLR not being absorbed is a red herring. Sea surface at, say, 15°C, must radiate a whole lot of heat. To stay steady temperature, that flux must be balanced. The solar absorbed by sea comes to the surface, but is not nearly enough. DLR makes up the balance. It does not need to be absorbed.

Your accounting says DLR is re-radiated. That is a strained description of the physics, but the effect is the same. The upflux is the sum of solar and DLR, for surface heat balance.

What if DLR increases – will that warm the sea? Yes! If the sea doesn’t warm, there is now too much flux being supplied for the surface to radiate. Some absorbed solar can’t escape, and that will heat the surface (and below) until balance is restored.

Good analysis, but it misses the point. The question is not “Can DLR heat the ocean?”, the correct question is “Why doesn’t DLR heat the ocean?”. The answer is rather simple.

On land, the surface temperature drops below the atmospheric temperature almost as soon as the Sun sets. By morning, the temperature drops about 20°F. If it was not for the DLR, that change would be over 100°F.

However, the ocean temperature drops by less than 2°F and the DLR and ULR are about the same.

So, what’s the difference? When water cools, its density increases and the cold layer sinks bringing warmer water to the surface. As a result, several feet of water must lose heat for the temperature to drop. However, with solids, the outer surface continues to cool because rock and soil are pretty good thermal insulators. As a result, the lower atmosphere is cooled as its stored energy is used to limit the change in temperature (known as the greenhouse effect). Looking at radiosonde soundings will make this obvious. I have provided a few samples here

(works with Windows XP and Vista, not Windows 10).

The concept of downwelling long wave radiation from the atmosphere “heating a surface” shows a misunderstanding of radiation heat transfer. There is energy going both up and down, and this is generally true for radiation between two sources, but the net effect of the back radiation is to decrease net radiation heat transfer up. There is no radiation heat transfer down unless the atmosphere is warmer than the surface. The decrease in heat transfer up caused by this back radiation then requires compensation by other heat transfer means (conduction, convection, and evapotranspiration) to remove the required excess energy deposited at the surface by sunlight (there is no other significant source of this surface energy). The mechanism of the atmosphere absorbing radiation from the surface causing a temperature rise (the so called atmospheric greenhouse effect) is due only to the increase in average altitude in radiation to space (from the radiating atmosphere rather than the surface), and no other reason (assuming constant albedo and lapse rate). It is not from the back radiation heating the surface.
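The net-transfer bookkeeping described here can be put in numbers using the fluxes quoted elsewhere in this thread from the standard diagram (390 W/m2 up from the surface, 324 W/m2 down from the atmosphere, 168 W/m2 of sunlight absorbed by the surface). This is a minimal sketch of the arithmetic only; the variable names are hypothetical and nothing here models the physics.

```python
SURFACE_UP_LW = 390.0   # W/m^2, longwave emitted by the surface (diagram value)
BACK_RADIATION = 324.0  # W/m^2, longwave from atmosphere to surface (diagram value)
ABSORBED_SOLAR = 168.0  # W/m^2, sunlight absorbed by the surface (diagram value)

# Net radiative heat transfer is the difference of the two longwave streams.
net_lw_up = SURFACE_UP_LW - BACK_RADIATION

# Whatever sunlight is not removed radiatively must leave by other means
# (conduction, convection, evapotranspiration) for the surface to balance.
non_radiative = ABSORBED_SOLAR - net_lw_up

print(f"net longwave transfer upward: {net_lw_up:.0f} W/m^2")
print(f"left for non-radiative transfer: {non_radiative:.0f} W/m^2")
```

On these figures the net radiative transfer is upward, and the larger share of the absorbed sunlight has to leave the surface by non-radiative means.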

Mr Weinstein is broadly correct, though perhaps the clearest metaphor for the effect of CO2 is provided by Professor Christopher Essex at the University of Western Ontario. He says the interaction of photons of near-infrared radiation with CO2 molecules is akin to turning on billions of tiny radiators throughout the atmosphere. The additional heat that thus arises is then transported both upward and downward in the atmospheric column by various processes.

RC, you said, “Radiation toward the surface is 324 W/m2 indicating a sky temperature of 9.13°C (48.43°F).”

Does this “sky temperature” take into account the fact that blue light is scattered out of the dominantly green light coming from the sun?

No, the 324 W/m2 is longwave IR emitted by the atmosphere. Light from the Sun is included in the albedo and the 168 W/m2 and 67 W/m2 absorbed by the surface and atmosphere. About 70% of the absorbed energy is in the invisible IR. The blue light helps us to see (albedo), but has little effect on the temperature.

RC and others,

This “standard diagram” shows a surface reflectance of 30 W/m2 (~9%). I have submitted an article that I hope Anthony will publish, wherein I argue that this value is too low.

Clyde Spencer: “RC and others, This “standard diagram” shows a surface reflectance of 30 W/m2 (~9%). I have submitted an article that I hope Anthony will publish, wherein I argue that this value is too low.”

In that diagram, 198 W m-2 reaches the surface and 30 W m-2 is reflected. That’s about 15% reflection.

If you think you’ve found errors in the albedo measured by the MODIS and CERES satellite instruments, that would be fascinating and there are many journals who would be happy to publish your work.

If we are concerned about albedo or total reflectance, what is germane is the total light reflected out of the total incoming. Of 342 Watts, 30 is supposedly reflected by the surface (8.8%). The question is NOT how much of the light that doesn’t get absorbed by the atmosphere or reflected by clouds and aerosols is reflected by the surface! Maybe we can quibble about the 67 watts that get absorbed by the atmosphere because the atmosphere re-radiates it. However, that still gives about 11% reflected by the surface out of the total incoming.
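The disagreement over 9%, 11% and 15% comes down to which denominator is used. A short sketch of the three ratios, using only the diagram values quoted in this exchange (variable names are hypothetical):

```python
INCOMING_SOLAR = 342.0         # W/m^2, total incoming at top of atmosphere
REACHING_SURFACE = 198.0       # W/m^2, sunlight arriving at the surface
REFLECTED_BY_SURFACE = 30.0    # W/m^2, reflected by the surface
ABSORBED_BY_ATMOSPHERE = 67.0  # W/m^2, sunlight absorbed by the atmosphere

# Fraction of the sunlight that reaches the surface.
of_surface_light = REFLECTED_BY_SURFACE / REACHING_SURFACE

# Fraction of the total incoming sunlight.
of_total_incoming = REFLECTED_BY_SURFACE / INCOMING_SOLAR

# Fraction of the incoming sunlight excluding atmospheric absorption.
of_incoming_less_absorbed = REFLECTED_BY_SURFACE / (
    INCOMING_SOLAR - ABSORBED_BY_ATMOSPHERE
)

print(f"of light reaching the surface: {of_surface_light:.1%}")
print(f"of total incoming:             {of_total_incoming:.1%}")
print(f"of incoming less absorbed:     {of_incoming_less_absorbed:.1%}")
```

All three figures describe the same 30 W/m2; they differ only in what it is compared against.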

The alternate diagram provided by ralfellis shows 23 watts, lowering the total reflectance even more.

Even if I wanted to go to the trouble of providing a manuscript for publication, I doubt if any journal would touch it. My credentials are not in climatology, and I’m no longer in academia. However, if my argument is sound, then people at the public trough can run with it. The concept isn’t that difficult. It just seems that the people working in the field don’t have the right background and have missed it.