Guest Post By Werner Brozek, Edited By Just The Facts

(Note: If you read my report with the January data and just wish to know what is new with the February data, you will find the most important new things from lines 7 to the end of the table.)

In order to answer the question in the title, we need to know what time period is a reasonable period to take into consideration. As well, we need to know what the slope should be for the time period in question. For example, do we mean that the slope of the temperature-time graph must be 0 or do we mean that there has to be a lack of “significant” warming over a given period? With regard to what a suitable time period is, NOAA says the following:

“The simulations rule out (at the 95% level) zero trends for intervals of 15 yr or more, suggesting that an observed absence of warming of this duration is needed to create a discrepancy with the expected present-day warming rate.”

To verify this for yourself, see page 23 here.

Below, we will present you with just the facts and then you can decide whether or not the climate models are still valid. The information will be presented in three sections and an appendix. The first section will show for how long there has been no warming on several data sets. The second section will show for how long there has been no “significant” warming on several data sets. The third section will show how 2013 to date compares with 2012 and the warmest years and months on record so far. The appendix will illustrate sections 1 and 2 in a different way. Graphs and a table will be used to illustrate the data.

Section 1

This analysis uses the latest month for which data is available on WoodForTrees.com (WFT). However, WFT is not updated for GISS, Hadcrut3 and WTI past November, so I would like to thank Walter Dnes for the GISS and Hadcrut3 numbers. All of the data on WFT is also available at the specific sources as outlined below. We start with the present date and go to the furthest month in the past where the slope is at least slightly negative. So if the slope from September is 4 x 10^-4 but it is -4 x 10^-4 from October, we give the time from October so no one can accuse us of being less than honest if we say the slope is flat from a certain month.
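The search described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the calculation WFT performs: the `series` values are made up, the `min_months` cutoff is a hypothetical guard against very short windows, and the slope is an ordinary least-squares fit against the month index.

```python
from typing import List, Optional, Tuple


def ols_slope(values: List[float]) -> float:
    """Least-squares slope of values against their index (units per month)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = (n - 1) / 2.0
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx


def earliest_flat_start(
    anomalies: List[float], min_months: int = 24
) -> Optional[Tuple[int, float]]:
    """Return (start_index, slope) for the earliest start month whose trend
    to the final month is negative, or None if there is no such month."""
    last_start = len(anomalies) - min_months
    for start in range(0, last_start + 1):
        slope = ols_slope(anomalies[start:])
        if slope < 0:
            return start, slope
    return None


# Hypothetical monthly anomalies: 60 months of warming, then 120 months
# of very slight cooling. These are NOT real temperature data.
series = [0.01 * m for m in range(60)] + [0.6 - 0.0005 * m for m in range(120)]
result = earliest_flat_start(series)
print(result)
```

Scanning from the oldest month forward and stopping at the first negative slope mirrors the September/October example: the month just before the reported start still has a slope of zero or more.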

On all data sets below, the periods with a slope that is at least very slightly negative range from 4 years and 9 months to 16 years and 4 months.

1. For GISS, the slope is flat since January 2001 or 12 years, 2 months. (goes to February)

2. For Hadcrut3, the slope is flat since April 1997 or 15 years, 11 months. (goes to February)

3. For a combination of GISS, Hadcrut3, UAH and RSS, the slope is flat since December 2000 or an even 12 years. (goes to November)

4. For Hadcrut4, the slope is flat since November 2000 or 12 years, 4 months. (goes to February)

5. For Hadsst2, the slope is flat from March 1, 1997 to March 31, 2013, or 16 years, 1 month. Hadsst2 has not been updated since December. The slope from March 1997 to December 2012 is -0.00015 per year and the flat line is at 0.33. The average for January and February 2013 is 0.299, so at least two months can be added to the period with a slope of less than 0. Furthermore, Dr. Spencer said that “Later I will post the microwave sea surface temperature update, but it is also unchanged from February.“ So since we can rule out a huge upward spike in Hadsst2 for March, I believe I can conclude that if Hadsst2 were updated to March, then there would be no warming for 16 years and 1 month.

6. For UAH, the slope is flat since July 2008 or 4 years, 9 months. (goes to March)

7. For RSS, the slope is flat since December 1996 or 16 years and 4 months. (goes to March) RSS is 196/204 or 96% of the way to Ben Santer’s 17 years.
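The durations in the list above can be checked with a short Python sketch. It assumes that both the starting month and the final month are counted, which is my reading of how the periods are stated; the helper names are hypothetical.

```python
from typing import Dict, Tuple

YearMonth = Tuple[int, int]  # (year, month number)


def span_months(start: YearMonth, end: YearMonth) -> int:
    """Number of months from start through end, counting both endpoints."""
    return (end[0] - start[0]) * 12 + (end[1] - start[1]) + 1


def as_years_months(months: int) -> str:
    """Format a month count in the years/months style used in the table."""
    return f"{months // 12}/{months % 12}"


# (first month of flat slope, last month of data), taken from the list above
periods: Dict[str, Tuple[YearMonth, YearMonth]] = {
    "GISS": ((2001, 1), (2013, 2)),
    "Hadcrut3": ((1997, 4), (2013, 2)),
    "Combined": ((2000, 12), (2012, 11)),
    "Hadcrut4": ((2000, 11), (2013, 2)),
    "Hadsst2": ((1997, 3), (2013, 3)),
    "UAH": ((2008, 7), (2013, 3)),
    "RSS": ((1996, 12), (2013, 3)),
}

spans = {name: span_months(s, e) for name, (s, e) in periods.items()}
for name, months in spans.items():
    print(f"{name:<10}{as_years_months(months):>6}")

santer_months = 17 * 12                         # 204 months
rss_fraction = spans["RSS"] / santer_months     # 196 / 204
print(f"RSS: {spans['RSS']}/{santer_months} = {rss_fraction:.0%}")
```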

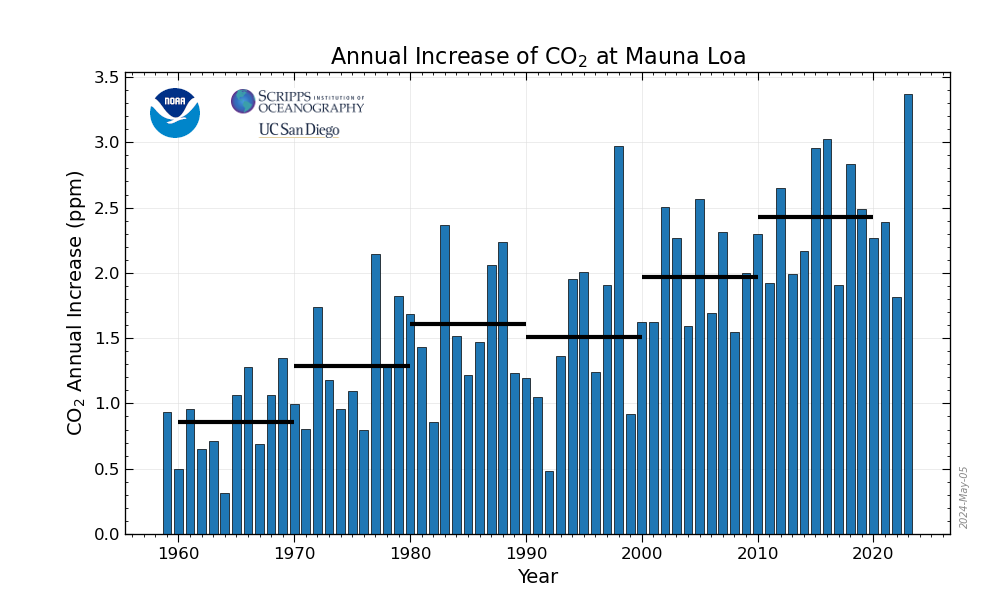

The next graph, also used at the head of this article, shows just the lines to illustrate the above. Think of it as a sideways bar graph where the lengths of the lines indicate the relative times where the slope is 0. In addition, the sloped wiggly line shows how CO2 has increased over this period.

When two items are plotted as I have done, the left only shows a temperature anomaly. It goes from 0.1 C to 0.6 C. A change of 0.5 C over 16 years is about 3.0 C over 100 years. And 3.0 C is about the average of what the IPCC says may be the temperature increase by 2100.

So for this to be the case, the slope for all of the data sets would have to be as steep as the CO2 slope. Hopefully the graph illustrates that this is untenable.
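The arithmetic behind the 3.0 C figure is a simple rate conversion, sketched below in Python. The function name is hypothetical and the inputs are the axis limits and period quoted above.

```python
def rate_per_century(delta_c: float, years: float) -> float:
    """Convert a temperature change over a number of years to C per century."""
    return delta_c / years * 100.0


axis_span = 0.6 - 0.1          # left axis runs from 0.1 C to 0.6 C
implied = rate_per_century(axis_span, 16)
print(f"{implied:.3f} C per century")   # about 3.1 C per century
```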

The next graph shows the above, but this time, the actual plotted points are shown along with the slope lines and the CO2 is omitted.

Section 2

For this analysis, data was retrieved from SkepticalScience.com. This analysis indicates for how long there has not been significant warming according to their criteria. The numbers below start from January of the year indicated. Data have now been updated either to the end of December 2012 or January 2013. In every case, note that the magnitude of the second number is larger than the first number so a slope of 0 cannot be ruled out. (To the best of my knowledge, SkS uses the same criteria that Phil Jones uses to determine significance.)

For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.134 C/decade at the two sigma level from 1990

For UAH the warming is not significant for over 19 years.

For UAH: 0.146 +/- 0.170 C/decade at the two sigma level from 1994

For Hadcrut3 the warming is not significant for over 19 years.

For Hadcrut3: 0.095 +/- 0.115 C/decade at the two sigma level from 1994

For Hadcrut4 the warming is not significant for over 18 years.

For Hadcrut4: 0.095 +/- 0.110 C/decade at the two sigma level from 1995

For GISS the warming is not significant for over 17 years.

For GISS: 0.111 +/- 0.122 C/decade at the two sigma level from 1996
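The comparison made above (the second number being larger than the first) can be written out as a quick check. This Python sketch only tests whether each two sigma interval contains zero, using the numbers quoted in this section; it does not reproduce how SkS derives the uncertainties.

```python
from typing import Dict, Tuple

# data set -> (trend in C/decade, two sigma uncertainty, start year), as quoted above
trends: Dict[str, Tuple[float, float, int]] = {
    "RSS": (0.127, 0.134, 1990),
    "UAH": (0.146, 0.170, 1994),
    "Hadcrut3": (0.095, 0.115, 1994),
    "Hadcrut4": (0.095, 0.110, 1995),
    "GISS": (0.111, 0.122, 1996),
}


def interval(trend: float, two_sigma: float) -> Tuple[float, float]:
    """Lower and upper bounds of the two sigma range."""
    return trend - two_sigma, trend + two_sigma


def includes_zero(trend: float, two_sigma: float) -> bool:
    """True when a slope of 0 lies inside the two sigma range."""
    low, high = interval(trend, two_sigma)
    return low <= 0.0 <= high


for name, (trend, two_sigma, start) in trends.items():
    low, high = interval(trend, two_sigma)
    print(f"{name:<9} from {start}: {low:+.3f} to {high:+.3f} "
          f"zero inside: {includes_zero(trend, two_sigma)}")
```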

If you want to know the times to the nearest month that the warming is not significant for each set to their latest update, they are as follows:

RSS since September 1989;

UAH since June 1993;

Hadcrut3 since August 1993;

Hadcrut4 since July 1994;

GISS since August 1995 and

NOAA since June 1994.

Section 3

This section shows data about 2013 and other information in the form of a table. The table shows the six data sources along the top and bottom, namely UAH, RSS, Hadcrut4, Hadcrut3, Hadsst2, and GISS. Down the first column are the following:

1. 12ra: This is the final ranking for 2012 on each data set.

2. 12an: Here I give the average anomaly for 2012.

3. year: This indicates the warmest year on record so far for that particular data set. Note that two of the data sets have 2010 as the warmest year and four have 1998 as the warmest year.

4. anom: This is the average of the monthly anomalies of the warmest year just above.

5. month: This is the month where that particular data set showed the highest anomaly. The months are identified by the first two letters of the month and the last two numbers of the year.

6. anom: This is the anomaly of the month just above.

7. y/m: This is the longest period of time where the slope is not positive given in years/months. So 15/11 means that for 15 years and 11 months the slope is slightly negative.

8. Ja.an: This is the January, 2013, anomaly for that particular data set.

9. Fe.an: This is the February, 2013, anomaly for that particular data set.

10. M.an: This is the March, 2013, anomaly for that particular data set.

20. avg: This is the average anomaly of all months to date taken by adding all numbers and dividing by the number of months. Only the satellite data includes March.

21. rank: This is the rank that each particular data set would have if the anomaly above were to remain that way for the rest of the year. Of course it won’t, but think of it as an update 10 or 15 minutes into a game. Expect wild swings from month to month at the start of the year. As well, expect huge variations between data sets at the start.

| Source | UAH | RSS | Had4 | Had3 | Sst2 | GISS |
|---|---|---|---|---|---|---|
| 1. 12ra | 9th | 11th | 10th | 10th | 8th | 9th |
| 2. 12an | 0.161 | 0.192 | 0.433 | 0.406 | 0.342 | 0.56 |
| 3. year | 1998 | 1998 | 2010 | 1998 | 1998 | 2010 |
| 4. anom | 0.419 | 0.55 | 0.540 | 0.548 | 0.451 | 0.66 |
| 5. month | Ap98 | Ap98 | Ja07 | Fe98 | Au98 | Ja07 |
| 6. anom | 0.66 | 0.857 | 0.818 | 0.756 | 0.555 | 0.93 |
| 7. y/m | 4/9 | 16/4 | 12/4 | 15/11 | 16/1 | 12/2 |
| 8. Ja.an | 0.504 | 0.441 | 0.432 | 0.390 | 0.283 | 0.60 |
| 9. Fe.an | 0.175 | 0.194 | 0.482 | 0.431 | 0.314 | 0.49 |
| 10. M.an | 0.184 | 0.204 | | | | |
| 20. avg | 0.288 | 0.280 | 0.457 | 0.411 | 0.299 | 0.55 |
| 21. rank | 3rd | 6th | 9th | 9th | 12th | 10th |
| Source | UAH | RSS | Had4 | Had3 | Sst2 | GISS |
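Rows 20 and 21 can be reproduced with a few lines of Python. The monthly values are copied from rows 8 to 10 of the table; the `rank_if_held` helper is a hypothetical illustration of the ranking rule, and the annual means passed to it in the example are made up, since the full annual records are not listed in this post.

```python
from typing import Dict, List

# 2013 monthly anomalies to date, from rows 8 to 10 of the table
monthly: Dict[str, List[float]] = {
    "UAH": [0.504, 0.175, 0.184],
    "RSS": [0.441, 0.194, 0.204],
    "Had4": [0.432, 0.482],
    "Had3": [0.390, 0.431],
    "Sst2": [0.283, 0.314],
    "GISS": [0.60, 0.49],
}

# Row 20 of the table, for comparison
table_avg: Dict[str, float] = {
    "UAH": 0.288, "RSS": 0.280, "Had4": 0.457,
    "Had3": 0.411, "Sst2": 0.299, "GISS": 0.55,
}

# Add all months to date and divide by the number of months
averages = {name: sum(v) / len(v) for name, v in monthly.items()}


def rank_if_held(value: float, annual_means: List[float]) -> int:
    """Rank a year would take if its average stayed at value: one more
    than the number of past years with a higher annual mean."""
    return 1 + sum(1 for m in annual_means if m > value)


for name, avg in averages.items():
    print(f"{name:<5} computed {avg:.4f}  table {table_avg[name]}")

# Hypothetical annual means, only to show how the rank is counted
print(rank_if_held(0.25, [0.5, 0.3, 0.2]))
```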

If you wish to verify all 2012 rankings, go to the following:

For UAH, see here, for RSS see here and for Hadcrut4, see here. For Hadcrut4, note the number opposite the 2012 at the bottom. Then going up to 1998, you will find that there are 9 numbers above this number. That confirms that 2012 is in 10th place.

For Hadcrut3, see here. Here you have to do something similar to Hadcrut4, but look at the numbers at the far right. One has to go back to the 1940s to find the previous time that a Hadcrut3 record was not beaten in 10 years or less.

For Hadsst2, see here. View as for Hadcrut3. It came in 8th place with an average anomaly of 0.342, narrowly beating 2006 by 2/1000 of a degree as that came in at 0.340. In my ranking, I did not consider error bars, however 2006 and 2012 would statistically be a tie for all intents and purposes.

For GISS, see here. Check the J-D (January to December) average and then check to see how often that number is exceeded back to 1998.

To see all points since January 2012 in the form of a graph, see the WFT graph below:

Appendix

In this part, we are summarizing data for each set separately.

RSS

The slope is flat since December 1996 or 16 years and 4 months. (goes to March) RSS is 196/204 or 96% of the way to Ben Santer’s 17 years.

For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.134 C/decade at the two sigma level from 1990.

The RSS average anomaly so far for 2013 is 0.280. This would rank 6th if it stayed this way. 1998 was the warmest at 0.55. The highest ever monthly anomaly was in April of 1998 when it reached 0.857. The anomaly in 2012 was 0.192 and it came in 11th.

Following are two graphs via WFT. Both show all plotted points for RSS since 1990. Then two lines are shown on the first graph. The first upward sloping line is the line from where warming is not significant according to the SkS site criteria. The second straight line shows the point from where the slope is flat.

The second graph shows the above, but in addition, there are two extra lines. These show the upper and lower lines using the SkS site criteria. Note that the lower line is almost horizontal but slopes slightly downward. This indicates that there is a slight chance that cooling has occurred since 1990 according to RSS per graph 1 and graph 2.

UAH

The slope is flat since July 2008 or 4 years, 9 months. (goes to March)

For UAH, the warming is not significant for over 19 years.

For UAH: 0.146 +/- 0.170 C/decade at the two sigma level from 1994

The UAH average anomaly so far for 2013 is 0.288. This would rank 3rd if it stayed this way. 1998 was the warmest at 0.419. The highest ever monthly anomaly was in April of 1998 when it reached 0.66. The anomaly in 2012 was 0.161 and it came in 9th.

Following are two graphs via WFT. Everything is identical as with RSS except the lines apply to UAH.

Hadcrut4

The slope is flat since November 2000 or 12 years, 4 months. (goes to February)

For Hadcrut4, the warming is not significant for over 18 years.

For Hadcrut4: 0.095 +/- 0.110 C/decade at the two sigma level from 1995

The Hadcrut4 average anomaly so far for 2013 is 0.457. This would rank 9th if it stayed this way. 2010 was the warmest at 0.540. The highest ever monthly anomaly was in January of 2007 when it reached 0.818. The anomaly in 2012 was 0.433 and it came in 10th.

Following are two graphs via WFT. Everything is identical as with RSS except the lines apply to Hadcrut4. Graph 1 and graph 2.

Hadcrut3

The slope is flat since April 1997 or 15 years, 11 months. (goes to February)

For Hadcrut3, the warming is not significant for over 19 years.

For Hadcrut3: 0.095 +/- 0.115 C/decade at the two sigma level from 1994

The Hadcrut3 average anomaly so far for 2013 is 0.411. This would rank 9th if it stayed this way. 1998 was the warmest at 0.548. The highest ever monthly anomaly was in February of 1998 when it reached 0.756. One has to go back to the 1940s to find the previous time that a Hadcrut3 record was not beaten in 10 years or less. The anomaly in 2012 was 0.406 and it came in 10th.

Following are two graphs via WFT. Everything is identical as with RSS except the lines apply to Hadcrut3. Graph 1 and graph 2.

Hadsst2

For Hadsst2, the slope is flat since March 1, 1997 or 16 years, 1 month. (goes to March 31, 2013). Hadsst2 however has not been updated since December on WFT. The slope from March 1997 to December 2012 is -0.00015 per year and the flat line is at 0.33. The average for January and February 2013 is 0.299, so at least two months can be added to the period with a slope of 0. Furthermore, Dr. Spencer said that “Later I will post the microwave sea surface temperature update, but it is also unchanged from February.“ So since we can rule out a huge upward spike in Hadsst2 for March, I believe I can conclude that if Hadsst2 were updated to March, then there would be no warming for 16 years and 1 month.

As mentioned above, the Hadsst2 average anomaly for the first two months for 2013 is 0.299. This would rank 12th if it stayed this way. 1998 was the warmest at 0.451. The highest ever monthly anomaly was in August of 1998 when it reached 0.555. The anomaly in 2012 was 0.342 and it came in 8th.

Sorry! The only graph available for Hadsst2 is this.

GISS

The slope is flat since January 2001 or 12 years, 2 months. (goes to February)

For GISS, the warming is not significant for over 17 years.

For GISS: 0.111 +/- 0.122 C/decade at the two sigma level from 1996

The GISS average anomaly so far for 2013 is 0.55. This would rank 10th if it stayed this way. 2010 was the warmest at 0.66. The highest ever monthly anomaly was in January of 2007 when it reached 0.93. The anomaly in 2012 was 0.56 and it came in 9th.

Following are two graphs via WFT. Everything is identical as with RSS except the lines apply to GISS. Graph 1 and graph 2.

Conclusion

Above, various facts have been presented along with sources from where all facts were obtained. Keep in mind that no one is entitled to their own facts. It is only in the interpretation of the facts for which legitimate discussions can take place. After looking at the above facts, do you feel that we should spend billions to prevent catastrophic warming? Or do you feel we should take a “wait and see” attitude for a few years to be sure that future warming will be as catastrophic as some claim it will be? Keep in mind that even the MET office felt the need to revise its forecasts. Look at the following and keep in mind that the MET office believes that the 1998 mark will be beaten by 2017. Do you agree?

By the way, here is an earlier prediction by the MET office:

“(H)alf of the years after 2009 are predicted to be hotter than the current record hot year, 1998.”

When this prediction was made, they had Hadcrut3 and so far, the 1998 mark has not been broken on Hadcrut3. 2013 is not starting well if they want a new record in 2013.

Here are some relevant facts today: The sun is extremely quiet; ENSO has been between 0 and -0.5 since the start of the year; it takes at least 3 months for ENSO effects to kick in and the Hadcrut3 average anomaly after February was 0.411 which would rank it in 9th place. Granted, it is only 2 months, but you are not going to set any records starting the race in 9th place after two months. So even if a 1998 type El Nino started to set in tomorrow, it would be at least 4 or 5 months for the maximum ENSO reading to be reached. Then it would take at least 3 more months for the high ENSO to be reflected in Earth’s temperature. How hot would November and December then have to be to set a new record? In my opinion, the odds of setting a new record in 2013 are extremely remote.

JTF, It looks great! Thanks!

Please, don’t do this every month.

Cees de Valk says: April 8, 2013 at 8:41 am

Please, don’t do this every month.

Eventually these articles will likely go quarterly, but until the message sinks in far and wide, I am inclined to keep beating the drum monthly. This article pokes at the weakest point in the Catastrophic Anthropogenic Global Warming narrative, i.e. it’s not getting warmer…

“So for this to be the case, the slope for all of the data sets would have to be as steep as the CO2 slope. Hopefully the graph illustrates that this is untenable.”

Both statements are untrue. The slope of the CO2 curve can’t be meaningfully “illustrated” on that graph, since the ordinate doesn’t start at zero. Also, temperature doesn’t follow concentration; it follows a logarithmic value of the concentration ratio relative to Time = zero. This is a very different thing from what the graph actually illustrates.

The post is mostly valid, but it’s way too long for the amount of actual content.

Spin that SKS!

Thanks, Werner, for your excellent post reminding us how constant temperatures really are – almost as if regulated by a global thermostat.

If we got rid of the silly notion of using centigrade and ‘temperature anomaly’ and started using actual temperature in Kelvin, it would quickly become apparent to all that GMT has changed very little during the whole history of the temperature record. On an up-tick of the 60y weather cycle ‘scientists’ try to panic us with warming, and on the down-tick of the cycle they threaten the coming of an Ice age.

Can’t blame the media for this one, it’s firmly at the door of foolish climatologists who are using a conjecture which is clearly false.

OK, I’ll bite:

The U.S., as of 2012,

has decreased CO2 production to meet Kyoto number

(per the WUWT on Friday).

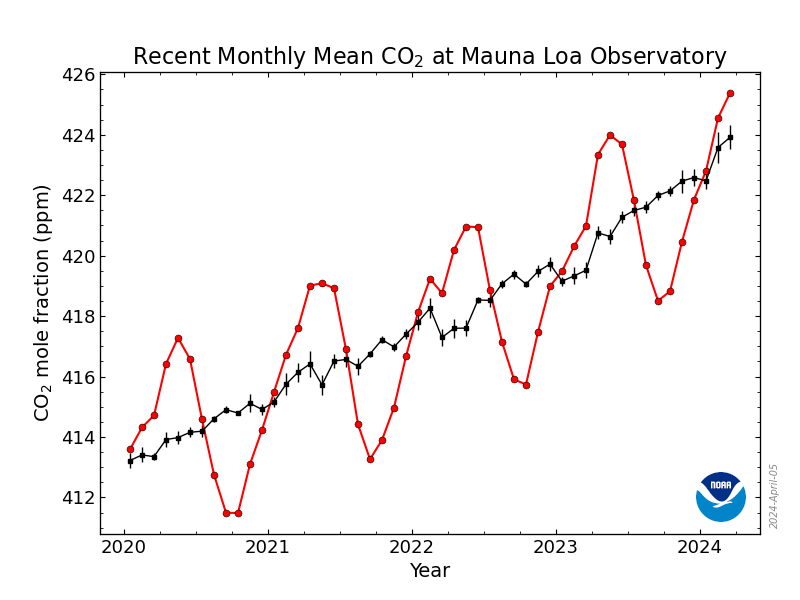

But, Mauna Loa, in this article, shows a very monotonic increase:

just as if it had no relationship to the U.S. at all

and mostly decoupled from the world economy

The Mauna Loa numbers look more like a 3 month lag of TSI over the southern oceans….

With the most influential source of heat being the sun, I would guess a greater probability is cooler for awhile. Also, there is a big focus on temperature data. The cloud cover and snow cover duration are big factors as well as oceans, volcanic activity, meteor strikes etc. The list of variables to forecast is too substantial to justify billions in CO2 reductions. Invest in hardening our electrical power infrastructure and “weathering” thru the droughts and severe winters, as seen in Europe and Asia the last few years.

Models are nice tools, but reality is what it is.

This is excellent. It makes it very clear that current panic over future extremes in temperature simply has no place in the world today. Mitigation was never the solution anyway.

Time for humankind to stop feeling guilty like naughty children just because a tiny percentage of us think the rest of us are bad. Time for humankind to grow up. Time to stop wasting taxpayers’ money and, most importantly, time to stop wrecking the environment in the name of saving the environment.

Thanks, JTF. I’m pleased to read that you plan to keep beating this drum on a monthly basis. This is the message that needs to reach home.

Global mean temperature must decline in a statistically significant trend for a period of from thirty to fifty years, doing so under steadily rising CO2 concentrations, before the climate science community ever begins to question its central dogma.

In the meantime, everyone else will just have to decide what it is they do, or do not, want to believe about AGW, hopefully based upon their own evaluation of the various arguments and counter-arguments about it.

Beta Blocker: I have to disagree with “Global mean temperature must decline in a statistically significant trend for a period of from thirty to fifty years, doing so under steadily rising CO2 concentrations, before the climate science community ever begins to question its central dogma.”

They’ll drop it as soon as it isn’t viable with the general public – either going to ocean acidification or going into the “New Ice AGE!!! OMG we’re all going to DIE!” mode. It never was about science.

Very nice job. I’m an IT/Finance numbers guy (education: Ga Tech Physics), and a devout data bigot – the more we see this type of analysis, the easier and quicker the response to alarmist data manipulation (AKA Marcotting).

Question: what is the base period used to generate the temperature data anomaly for title chart: slopes vs calendar years (ie: warmer than what base period)?

I think I’m looking at “temperature anomaly” spreads in some of these allegedly “best practice” trend tracking data sets (uti/from: 2000.9/trend @ about 0.19 to gistemp/from: 2001.33/trend @ about 0.58 = 0.39 degree C) that appear to exceed 12 years of the predicted 3 degrees/century IPCC warming.

It’s laughable that educated people (?) take this stuff seriously, let alone propose to spend trillions “fixing” it…however, now that I think about it, the “fix” might be as simple as better basic science education.

“Global mean temperature must decline in a statistically significant trend for a period of from thirty to fifty years, doing so under steadily rising CO2 concentrations, before the climate science community ever begins to question its central dogma.”

Pretty pessimistic and ultimately no more than a shot from the hip. The MS media in Europe is already beginning to roll over. I’m sure there are some hard core team members who will go to their graves unwilling to admit their mistake, but in science as in all other human endeavors, self-interest always wins out. There are scientists who are already sniffing an opportunity to be iconoclasts. 5 more years of no warming will in my opinion be enough to bring this down for all practical purposes…

Susan says: April 8, 2013 at 9:23 am

But, Mauna Loa, in this article, shows a very monotonic increase:

just as if it had no relationship to the U.S. at all

and mostly decoupled from the world economy

Humans account for some 3-4% of the CO2 released into the atmosphere annually, so changing a fraction of a percent of that 3-4% doesn’t do much. More CO2 information here: http://www.co2science.org/index.php

“Global mean temperature must decline in a statistically significant trend for a period of from thirty to fifty years, doing so under steadily rising CO2 concentrations, before the climate science community ever begins to question its central dogma.”

Really? I suspect we’ll start hearing about the need to give control of our economies to unaccountable international bureaucracies in order to stave off the coming ice age about five minutes after all the current in-production scare pieces about global warming have aired. They know it’s a lie, so why should they be careful about it?

Steve Keohane says: April 8, 2013 at 10:08 am

Humans account for some 3-4% of the CO2 released into the

atmosphere annually, so changing a fraction of a percent

of that 3-4% doesn’t do much.

OK,

so where does the CO2 indicated by Mauna Loa actually come from on the cycle measured?

Do we have any empirical measurements showing the source?

Pokerguy,

“5 more years of no warming will in my opinion be enough to bring this down for all practical purposes…”

Let’s say 4 more years – that will end it when Obama’s reign ends. Rather a fitting end, I think.

jorgekafkazar says:

April 8, 2013 at 9:11 am

Also, temperature doesn’t follow concentration; it follows a logarithmic value of the concentration ratio relative to Time = zero. This is a very different thing from what the graph actually illustrates.

On three data sets, the graphs show no relation between CO2 and temperature for 16 years. Is it possible that the effect of CO2 is saturated so that even the supposed logarithmic relationship is no longer valid?

Consider making your work attractive for use by middle school students and teachers. They need it. Also, if it is banned by our educational bureaucracy you might become stars.

The difference between skeptic and warming sites is that on the skeptic sites readers are asked to make their own opinion while on the warming sites readers are expected to run with what the “experts” and their admirers say.

Irony: in the climate debate, only the Republican-claimed view is obtained democratically.

Tenuk says:

April 8, 2013 at 9:14 am

If we got rid of the silly notion of using centigrade and ‘temperature anomaly’ and started using actual temperature in Kelvin, it would quickly become apparent to all that GMT has changed very little during the whole history of the temperature record.

That is true. Another way of looking at this whole thing is how Richard Courtney stated on another post that the yearly temperature changes by 3.8 C over the course of the year anyway. And then some are concerned about the 0.8 C that we have gone up since 1750 and are worried that we may reach the dreaded 2 C mark soon. To read about the normal yearly variation, see:

http://theinconvenientskeptic.com/2013/03/misunderstanding-of-the-global-temperature-anomaly/

So when you plot a graph with no units on one axis, presumably it doesn’t relate to anything physically real. But one thing you can guess from whatever that sawtooth graph is supposed to be, is that it changes much faster than it could possibly change if it had a 40 to 70 year decay time constant. It almost looks like it has a regular near annual cyclic variation going on. I couldn’t figure out which of the listed graphs it is supposed to be; it sure looks different from all the others in that first figure.

Susan says:

April 8, 2013 at 9:23 am

“OK, I’ll bite:

The U.S., as of 2012,

has decreased CO2 production to meet Kyoto number

(per the WUWT on Friday).

But, Mauna Loa, in this article, shows a very monotonic increase:

just as if it had no relationship to the U.S. at all

and mostly decoupled from the world economy

The Mauna Loa numbers look more like a 3 month lag of TSI over the southern oceans….”

Go to woodfortrees. Take the Mauna Loa curve. Average it over 12 months to remove the seasonal signal. Now take the derivative to see the YoY change.

Look at around 2008.

See the Big Financial Crisis, when all the industrial production stalled around the globe?

No?

Me neither. It looks to me like CO2 does what it does, independent of human activity.
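The smoothing and year-over-year steps described in this comment can be sketched in Python as follows. This is a stand-in under stated assumptions, not the WFT implementation: the CO2 series is synthetic (steady 2 ppm per year growth plus an annual cycle), and the year-over-year change is taken as a simple 12-month difference of the smoothed series.

```python
import math
from typing import List


def running_mean_12(values: List[float]) -> List[float]:
    """Trailing 12-month mean; the first 11 months produce no value."""
    return [sum(values[i - 11:i + 1]) / 12 for i in range(11, len(values))]


def year_over_year(values: List[float]) -> List[float]:
    """Change relative to the same month one year earlier."""
    return [values[i] - values[i - 12] for i in range(12, len(values))]


# Hypothetical monthly CO2 values in ppm, NOT Mauna Loa data:
# 2 ppm per year growth plus a seasonal cycle of amplitude 3 ppm.
co2 = [370 + 2.0 * m / 12 + 3.0 * math.sin(2 * math.pi * m / 12)
       for m in range(120)]

smoothed = running_mean_12(co2)     # seasonal cycle averages out
growth = year_over_year(smoothed)   # ppm per year
print(min(growth), max(growth))
```

With real data, a sustained drop in emissions large enough to matter would show up as a dip in `growth`; on the synthetic series the growth rate stays at 2 ppm per year throughout.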

Rhoda R says:

April 8, 2013 at 10:01 am

“They’ll drop it as soon as it isn’t viable with the general public – either going to ocean acidification or going into the “New Ice AGE!!! OMG we’re all going to DIE!” mode. It never was about science.”

Exactly…It’s about control and redistribution of your wealth!!!

Werner Brozek says:

April 8, 2013 at 10:34 am “On three data sets,the graphs show no relation between CO2 and temperature for 16 years. Is it possible that the effect of CO2 is saturated so that even the supposed logarithmic relationship is no longer valid?”

Historically, we see no relationship between global temperatures and CO2. The surface temperature is more influenced by gas density than composition.

Hal Javert says:

April 8, 2013 at 10:03 am

Question: what is the base period used to generate the temperature data anomaly for title chart: slopes vs calendar years (ie: warmer than what base period)?

Ideally it would be the most recent 30 year period. However different sources use different times. So the higher the anomaly, the cooler was the base period. I have gotten around this problem by giving the rank if the present anomaly were to continue the rest of the year. So on the table, you will see that GISS has the highest anomaly at 0.55, however the more important number is that this would rank as 10th warmest at this point two months into the year.

Susan says: April 8, 2013 at 10:24 am

OK, so where does the CO2 indicated by Mauna Loa actually come from on the cycle measured?

Do we have any empirical measurements showing the source?

In terms of the anthropogenic component, here are Anthropogenic CO2 Emissions from Fossil-Fuels;

[Image; source: Carbon Dioxide Information Analysis Center]

here’s Cumulative Anthropogenic CO2 Emissions from Fossil-Fuels;

[Image; source: Carbon Dioxide Information Analysis Center]

and here’s the associated data:

http://cdiac.esd.ornl.gov/trends/emis/glo.html

There is also an argument that Land Use Changes have impacted CO2 concentration via Carbon Flux to the Atmosphere;

[Image; source: Carbon Dioxide Information Analysis Center]

also shown cumulatively here:

[Image; source: Carbon Dioxide Information Analysis Center]

However, Land Use data based on Houghton et al. is highly suspect, i.e.:

http://cdiac.ornl.gov/trends/landuse/houghton/houghton.html

which even the IPCC had to acknowledge in AR4:

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch7s7-3-1-3.html

In Houghton & Hackler 2001;

http://cdiac.ornl.gov/ftp/ndp050/ndp050.pdf

they found that:

However, by Houghton, 2008;

http://cdiac.esd.ornl.gov/trends/landuse/houghton/houghton.html

he found that;

Given that Houghton, 2008 inflated the starting point to 500.6 Tg, versus the still questionable starting point of 397 Tg in Houghton & Hackler 2001, the entire Land Use CO2 contribution argument should be looked at with a jaundiced eye.

Susan, the Mauna Loa observatory measures global atmospheric concentration, possibly with a slight NH lead. The squiggle just proves that there is more temperate land in the NH, and that about half of biological carbon sequestration is terrestrial plants that take it up in summertime.

China, not the US, is the biggest CO2 emitter, and they are growing like crazy despite the US recession. The US ‘meeting’ Kyoto goals actually means only about a 5% reduction, which works out to about 1% of global anthropogenic CO2, which is of course rounding error invisible in the chart.

The fact that there has been no statistically significant change in temp for more than 15 years (or 17 in a different paper) falsifies present climate models. By itself it does not yet falsify the thesis that there could be some AGW. But for sure the models are now proven too sensitive, so existing (AR4) predictions for both rate and level are too alarmist and the urgency for immediate action is gone.

justthefactswuwt says: April 8, 2013 at 11:06 am

In terms of the anthropogenic component, here are Anthropogenic

CO2 Emissions from Fossil-Fuels;

Nice set of calculations and estimates,

but they don’t seem to match the real data of Mauna Loa and

the empirical evidence of a worldwide economic slowdown.

If the hypothesis is correct, Mauna Loa should have shown something significant

in 2008-2009.

Since it didn’t where did the CO2 come from and what are the characteristics of

that source?

Excellent work from Werner.

I’ve noticed his previous posts here and elsewhere and am very pleased to see it given greater prominence here.

Werner Brozek asks:

“Is it possible that the effect of CO2 is saturated so that even the supposed logarithmic relationship is no longer valid?”

It is beginning to look like that.

The only real evidence we have shows that CO2 follows temperature, not vice-versa. The major effect of CO2 occurred in the first 20 ppm. Now that CO2 is approaching 400 ppmv, its effect is simply too small to measure.

The reality is that at current concentrations, additional CO2 just doesn’t matter. For all practical purposes, its warming effect is non-existent.

D b stealey

I see greater warmth and greater cold than today stretching back as far as my records currently go, around 1000AD. I therefore have reached the same conclusion as you, that anything above the ‘pre industrial’ level of 280ppm appears to make little or no difference.

Tonyb

Susan says: April 8, 2013 at 11:28 am

Nice set of calculations and estimates,

but they don’t seem to match the real data of Mauna Loa and

the empirical evidence of a worldwide economic slowdown.

If the hypothesis is correct, Mauna Loa should have shown something significant

in 2008-2009.

Since it didn’t where did the CO2 come from and what are the characteristics of

that source?

Here’s the Full Mauna Loa CO2 record;

[Figure: full Mauna Loa CO2 record. Source: National Oceanic and Atmospheric Administration (NOAA) – Earth System Research Laboratory (ESRL)]

here’s the 5 year;

[Figure: Mauna Loa CO2, most recent 5 years. Source: NOAA ESRL]

and here’s the Annual Mean Growth Rate:

[Figure: Mauna Loa CO2 annual mean growth rate. Source: NOAA ESRL]

In terms of data, here’s Monthly Mean data;

ftp://ftp.cmdl.noaa.gov/ccg/co2/trends/co2_mm_mlo.txt

here’s Annual Mean data;

ftp://ftp.cmdl.noaa.gov/ccg/co2/trends/co2_annmean_mlo.txt

and here’s Annual Mean Growth Rate data:

ftp://ftp.cmdl.noaa.gov/ccg/co2/trends/co2_gr_mlo.txt

If you look at the Annual Mean Growth Rate data for the last 15 years;

# year ann inc

1998 2.93

1999 0.93

2000 1.62

2001 1.58

2002 2.53

2003 2.29

2004 1.56

2005 2.52

2006 1.76

2007 2.22

2008 1.60

2009 1.88

2010 2.43

2011 1.83

2012 2.67

there does appear to have been a slowdown in the growth rate during 2008/2009; however, it is also readily apparent that there are other factors in play, e.g. it seems unlikely that the 0.93 annual increase in 1999 is attributable solely to the Dot Com crash.
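As a rough check on that reading, the fifteen values listed above can be summarised in a few lines. This is only a sketch: the numbers are copied from the list in this comment rather than fetched from the NOAA file, and the variable names are illustrative.

```python
# Annual mean CO2 growth rate at Mauna Loa (ppm/year), copied from the
# list above; not re-downloaded from the NOAA source file.
growth = {
    1998: 2.93, 1999: 0.93, 2000: 1.62, 2001: 1.58, 2002: 2.53,
    2003: 2.29, 2004: 1.56, 2005: 2.52, 2006: 1.76, 2007: 2.22,
    2008: 1.60, 2009: 1.88, 2010: 2.43, 2011: 1.83, 2012: 2.67,
}

# Mean over the whole 15-year window.
mean_all = sum(growth.values()) / len(growth)

# Mean over the two recession years under discussion.
mean_0809 = (growth[2008] + growth[2009]) / 2

# Year with the smallest annual increase.
lowest_year = min(growth, key=growth.get)

print(f"15-year mean:   {mean_all:.2f} ppm/yr")
print(f"2008-2009 mean: {mean_0809:.2f} ppm/yr")
print(f"lowest year:    {lowest_year} ({growth[lowest_year]:.2f} ppm/yr)")
```

The 2008-2009 mean comes out below the 15-year mean, but the lowest single year in the window falls well before the recession, which is the point about other factors being in play.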

However, I am not sure that it is fruitful to expend too much energy challenging the anthropogenic contribution to CO2 concentrations. As Werner and dbstealey point out, the key question is not whether anthropogenic CO2 emissions are increasing global CO2 concentrations, but rather why increasing global CO2 concentrations do not appear to be causing any measurable warming.

justthefactswuwt:

At April 8, 2013 at 11:06 am you answer the question of Susan at April 8, 2013 at 10:24 am which was

However, your answer only addresses the sources of CO2 from human activities.

Nature emits 34 molecules of CO2 for each molecule of CO2 emitted from all human activities.

Hence, I write to answer the major part of Susan’s question. And I provide information which can be found in one of our 2005 papers

(ref. Rorsch A, Courtney RS & Thoenes D, ‘The Interaction of Climate Change and the Carbon Dioxide Cycle’ E&E v16no2 (2005) )

I do this to provide information and do NOT want this answer to Susan’s question to deflect from its important subject which is “Are Climate Models Realistic?” in terms of their global temperature projections.

MECHANISMS OF THE CARBON CYCLE

Short-term processes

1. Consumption of CO2 by photosynthesis that takes place in green plants on land. CO2 from the air and water from the soil are coupled to form carbohydrates. Oxygen is liberated. This process takes place mostly in spring and summer. A rough distinction can be made:

1a. The formation of leaves that are short lived (less than a year).

1b. The formation of tree branches and trunks, that are long lived (decades).

2. Production of CO2 by the metabolism of animals, and by the decomposition of vegetable matter by micro-organisms including those in the intestines of animals, whereby oxygen is consumed and water and CO2 (and some carbon monoxide and methane that will eventually be oxidised to CO2) are liberated. Again distinctions can be made:

2a. The decomposition of leaves, that takes place in autumn and continues well into the next winter, spring and summer.

2b. The decomposition of branches, trunks, etc. that typically has a delay of some decades after their formation.

2c. The metabolism of animals that goes on throughout the year.

3. Consumption of CO2 by absorption in cold ocean waters. Part of this is consumed by marine vegetation through photosynthesis.

4. Production of CO2 by desorption from warm ocean waters. Part of this may be the result of decomposition of organic debris.

5. Circulation of ocean waters from warm to cold zones, and vice versa, thus promoting processes 3 and 4.

Longer-term processes

6. Formation of peat from dead leaves and branches (eventually leading to lignite and coal).

7. Erosion of silicate rocks, whereby carbonates are formed and silica is liberated.

8. Precipitation of calcium carbonate in the ocean, that sinks to the bottom, together with formation of corals and shells.

Natural processes that add CO2 to the system

9. Production of CO2 from volcanoes (by eruption and gas leakage).

10. Natural forest fires, coal seam fires and peat fires.

Anthropogenic processes that add CO2 to the system

11. Production of CO2 by burning of vegetation (“biomass”).

12. Production of CO2 by burning of fossil fuels (and by lime kilns).

Several of these processes are rate dependent and several of them interact.

At higher air temperatures, the rates of processes 1, 2, 4 and 5 will increase and the rate of process 3 will decrease. Process 1 is strongly dependent on temperature, so its rate will vary strongly (maybe by a factor of 10) throughout the changing seasons.

The rates of processes 1, 3 and 4 are dependent on the CO2 concentration in the atmosphere. The rates of processes 1 and 3 will increase with higher CO2 concentration, but the rate of process 4 will decrease.

The rate of process 1 has a complicated dependence on the atmospheric CO2 concentration. At higher concentrations at first there will be an increase that will probably be less than linear (with an “order” <1). But after some time, when more vegetation (more biomass) has been formed, the capacity for photosynthesis will have increased, resulting in a progressive increase of the consumption rate.

Processes 1 to 5 are obviously coupled by mass balances. Our paper assessed the steady-state situation to be an oversimplification because there are two factors that will never be “steady”:

I. The removal of CO2 from the system, or its addition to the system.

II. External factors that are not constant and may influence the process rates, such as varying solar activity.

Modeling this system is difficult because so little is known concerning the rate equations. However, some things can be stated from the empirical data.

At present the yearly increase of the anthropogenic emissions is approximately 0.1 GtC/year. The natural fluctuation of the excess consumption (i.e. consumption processes 1 and 3 minus production processes 2 and 4) is at least 6 ppmv (which corresponds to 12 GtC) in 4 months. This is more than 100 times the yearly increase of human production, which strongly suggests that the dynamics of the natural processes here listed 1-5 can cope easily with the human production of CO2. A serious disruption of the system may be expected when the rate of increase of the anthropogenic emissions becomes larger than the natural variations of CO2. But the above data indicates this is not possible.
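The "more than 100 times" comparison in the paragraph above is simple arithmetic and can be reproduced as follows. This is a sketch using only the figures quoted in the comment; the conversion of 2 GtC per ppmv is the comment's own round number (implied by "6 ppmv ... 12 GtC"), and the variable names are illustrative.

```python
# Figures quoted in the paragraph above; nothing here is independently sourced.
GTC_PER_PPMV = 12 / 6            # conversion implied by "6 ppmv ... 12 GtC"
seasonal_swing_ppmv = 6          # natural fluctuation over about 4 months
emission_increase_gtc = 0.1      # yearly increase of anthropogenic emissions, GtC/year

# Convert the seasonal swing to GtC and compare it with the yearly increase.
seasonal_swing_gtc = seasonal_swing_ppmv * GTC_PER_PPMV
ratio = seasonal_swing_gtc / emission_increase_gtc

print(f"seasonal swing: {seasonal_swing_gtc:.0f} GtC")
print(f"ratio to yearly emission increase: {ratio:.0f}x")
```

Note that the comparison is between a seasonal swing and the year-on-year change in emissions, not the total annual emission.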

The accumulation rate of CO2 in the atmosphere (1.5 ppmv/year which corresponds to 3 GtC/year) is equal to almost half the human emission (6.5 GtC/year). However, this does not mean that half the human emission accumulates in the atmosphere, as is often stated. There are several other and much larger CO2 flows in and out of the atmosphere. The total CO2 flow into the atmosphere is at least 156.5 GtC/year with 150 GtC/year of this being from natural origin and 6.5 GtC/year from human origin. So, on the average, 3/156.5 = 2% of all emissions accumulate.

This is not surprising because few things in nature are so stable that they vary by as little as 2% p.a.
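The two ratios being contrasted here can be worked through explicitly. The sketch below only reproduces the comment's arithmetic from the flux figures it quotes; it does not test those figures, and the variable names are illustrative.

```python
# Flux figures as quoted in the comment above (GtC/year).
natural_gtc = 150.0       # natural flow into the atmosphere
human_gtc = 6.5           # anthropogenic emission
accumulation_gtc = 3.0    # atmospheric accumulation (1.5 ppmv/year)

total_gtc = natural_gtc + human_gtc

# Accumulation expressed against human emission only ("almost half") ...
frac_of_human = accumulation_gtc / human_gtc

# ... and against all emissions, natural plus human ("2%").
frac_of_total = accumulation_gtc / total_gtc

print(f"total inflow: {total_gtc:.1f} GtC/yr")
print(f"accumulation / human emission: {frac_of_human:.1%}")
print(f"accumulation / total inflow:   {frac_of_total:.1%}")
```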

I stress that I hope this answer to Susan’s question will not lead to deflection of the thread onto the carbon cycle. The subject of this thread is “Are Climate Models Realistic?” in terms of their global temperature projections.

Richard

highflight56433 says:

April 8, 2013 at 10:55 am

Historically, we see no relationship between global temperatures and CO2. The surface temperature is more influenced by gas density than composition.

As it turns out, when considering the period from 1850 to 2013, it was only for a period of about 20 years from 1978 to 1998 that the CO2 and temperatures went up at the same time. In hindsight, it seemed to have been a coincidence.

As for your second statement, we cannot go there on this thread. Speaking for myself, until you can convince Dr. Spencer and Dr. Lindzen that CO2 does not even have an extremely small influence, I will not even pay attention. But to put this in a positive way, we both agree that extra CO2 will not have catastrophic effects on our climate, now or in the future.

richardscourtney says: April 8, 2013 at 12:02 pm

That’s good stuff. Would you be interested in writing an article for WUWT on the carbon cycle? Essentially your comment, with the Mauna Loa graphs/links as an intro, the anthropogenic CO2 emission/flux graphs/links included in place within your comment and graphs/links to empirical data supporting changes in the non-anthropogenic variables added wherever possible?

justthefactswuwt:

At April 8, 2013 at 12:46 pm you write to me saying.

Firstly, I was sincere in saying that I did not want to deflect this thread onto the carbon cycle. This thread has an important subject: Werner has put much – and quality – work into providing it, and it deserves to be treated properly.

Having said that, I would only agree to writing an article for publication on Anthony’s blog if he were to ask me for it. It is his blog and I would not presume his wanting something from me unless he said he wanted it.

Importantly, I am not sure the suggested article would be useful. I have often posted about the carbon cycle on WUWT and anybody wanting my views can search the WUWT archives. Also, discussions of the carbon cycle always devolve to a debate of the opposing views of Ferdinand Engelbeen (whom I respect) and myself.

However, if Anthony asks for it then I would provide such an article.

Richard

Richard

We can always spice up proceedings by laying bets as to how long it would take before Ferdinand turned up once the words ‘Richard Courtney’ and ‘carbon cycle’ and ‘wuwt’ hit the interweb.

Tonyb

I, for one, would find an article written on the carbon cycle to be very useful, especially if it included links for all the data on natural CO2 sources. Yes, we have had articles that addressed that topic, but not quite so succinctly. I wonder if Susan has not been misled into thinking that all or most CO2 in the atmosphere is man-made in origin, because in reading the mass media and anything written by so-called climate scientists, one could come to that conclusion. The most important take-away is that man-made sources of CO2 are a very small part of total atmospheric emissions, and this, if for no other reason, is why reductions in emissions will be ineffective as any sort of temperature control mechanism.

But if you use ‘one of the world’s best climate models…’ you can make this sort of claim:

http://www.bbc.co.uk/news/science-environment-22063340 (Plane flights ‘to get more turbulent’)

Never let the facts stand in the way of a good story.

Even a small fall of say 0.5 C would greatly increase the number of years without warming to 50 or more. The warmists would need to extend their duration of no warming before the models are invalidated: “A pause of 50 years isn’t enough. It takes at least 100 years to say that warming has stopped”.

The only reasonable reaction to the models and the alarmism is laughter. Bill McKibben is flying from the snow covered US (50% has snow in the middle of spring) to Australia to tell us it’s immoral to fly around the world because the world is too hot.

If indeed the global temperature changes are in the long run chiefly driven by the changes of the solar activity…

(- as it very much looks from this graph:

http://www.woodfortrees.org/plot/sidc-ssn/from:1855.95/to:1996.41/mean:514/normalise/plot/hadcrut4gl/from:1855.95/to:1996.41/mean:514/normalise

– a normalised plot of SIDC-SSN [which correlates well with TSI] and HADCRUT4GL data for the period from the beginning of the solar cycle 10 [1855-1867] to the end of the solar cycle 22 [1986-1996], smoothed with a quite long running average spanning 4 average solar cycles [42.8 years] (the average length of the solar cycle during SC10-22 is 10.7 years). The graph prima facie shows quite a consistent, close correlation of the two during the last ~one and a half centuries of the instrumental measurements)

…if indeed the global temperature changes are in the long run chiefly driven by the changes of the solar activity, then during at the very least the rest of the current solar cycle 24…

(which according to the smoothed SSN and Ap data [http://solen.info/solar/] most probably already peaked last year and most probably will have a very low average SSN [the average SSN is a much better indicator of the total solar energy received by Earth in the long run than the short-lasting values of the solar cycle peaks – and the average reveals, for instance, that the average solar activity in the solar cycles 21-22 – average SSN 80.9 – was almost as high as in the solar cycles 18-19 – average SSN 82.9] AROUND ~25 [-even now, just a ~year past the supposed peak, its average SSN is 34.36! and we can now already with sufficient certainty expect the current solar cycle 24 to be, at its end, comparable with the solar cycle 5 at the beginning of the 19th century when the Dalton minimum occurred] and most likely will have a quite significantly lower average SSN than the solar cycle 14 at the beginning of the 20th century when the last centennial warming trend began)

…then during at the very least the rest of the current solar cycle 24 and during the beginning of the solar cycle 25 – in other words: IN THE NEXT 7-10 YEARS – we quite likely can’t expect any significant upward trend of the global average surface temperatures.

In my opinion this conditional and very conservative (omitting completely the prediction for the next solar cycle 25 – for which it is too early to state it with sufficient certainty), although by now already quite likely, prediction based on the flat instrumental temperature composites trend from the multiple sources in the last more than a decade, latest SSN and Ap data as well as the study of the past solar activity and its close (however not unlagged [-the temperature change lag behind the solar activity change in the long run is ~one to two solar cycle lengths – given the partial cancelling effect of the periodical rising and then descending of the solar flux activity during the whole Hale magnetic polarity solar cycle ~22 years – and given the correlation of SSN with TSI periodical rising and then descending within the range of ~1W/m2 TSI]) correlation to the instrumental temperature composites – if indeed true – can imply the gravest political consequences for the CAGW agenda and its proponents in the next decade.

Dr. Jan Zeman, Prague, Czech Republic

Good one! Marcotting suggests Marketing (the message).

If only that were so. The way G. Lean, alarmist environmental columnist for the Telegraph spins it, this pause is a reprieve that gives us a second chance to keep the temperature rise below two degrees.

Thanks, Werner. The data is what it is, then we talk about it, theorize.

The last decade and a half does not seem to point to higher global temperatures in the near future, in spite of CO2 at Mauna Loa ever increasing; a saturation of some sort is suggested, I think.

I can’t help it but this looks to great extent like a pointless cherry-picking exercise to me.

“this record is flat for 15 years, that record is flat for 7 years…”… come on, what is that supposed to mean? “97% of temperature records agree that temperatures are flat for 13 years”?

Personally I don’t think the 1998 El Nino counts as part of the current flat trend, for instance. It sure helps keeping the past end of many of your trend lines up, but at the time it was a huge positive anomaly, definitely not “business as usual”.

And don’t forget that most of GCMs involved in the mentioned statement can’t actually simulate ENSO so you should remove the ENSO influence first, and only then start looking for flat trends you want to compare with them.

You may want to check Dr. Spencer’s site, he recently mentioned a decent method how ENSO influence can be subtracted from a temperature record.

tumetuestumefaisdubien1 says:

April 8, 2013 at 3:11 pm

IN THE NEXT 7-10 YEARS – we quite likely can’t expect any significant upward trend of the global average surface temperatures.

Thank you! Have you seen the article:

http://wattsupwiththat.com/2012/02/13/german-skeptics-luning-and-vahrenholt-respond-to-criticism/

They would certainly agree with you. And in addition, Germany has now had five winters in a row that were below the long term average. With the sun in a slump and with cold winters, it must be hard to convince people of the dangers of global warming.

Thanks for the link. It’s interesting how hysterical the reactions to Lüning and Vahrenholt were, while they only state the obvious. I think we will see more of this hysteria on the side of the CAGW alarmists as their prediction models are falsified in the coming years. For fanatics it is hard to swallow the truth.

I think the reality is quite simple: the descent of the solar activity since the SC22 is at least as steep as at the end of the 18th century before the Dalton minimum, and it is already on the order of SSN -50 in averages (from SSN 80.6! average of the SC22 to SSN <30 – which will most likely be the average of the SC24) – which means a decline of average TSI on the order of 0.5W/m2 just in the visible spectrum, not speaking about the UV, microwaves etc. Such a steep decline during just 2 solar cycles can hardly be offset by anthropogenic CO2 even if the most alarmist estimates of its forcing were true. In my opinion, when looking at the latest SSN data, there is no way there can be any significant rise of the temperature trend in the next decade (and if the SC25 has an activity similar to the current SC24 – which I strongly suspect – there will be a significant decline). We know enough about the solar activity and its forcing that this prediction can be made with almost absolute certainty. I wrote my post to support the ideas from the article from the side of what causes the almost flat temperature trends since the end of the SC22 and the 1997-98 El Nino respectively (which the sharp change of the solar activity between SC22/SC23 in my opinion indirectly caused). I even used a similar graph in my recent article (http://janzeman.blog.idnes.cz/clanok.asp?cl=326337), which was syndicated by several other internet outlets – and the Czech CAGW lunatics were quite furious in the discussions 🙂 – although I know that the trends still aren't too significant. They will be in the coming decade, and I think it will be the bitter end of the CAGW craze.

Here in Prague we had our first spring day yesterday – I mean one could go out without a winter jacket – before that it was quite exceptionally cold, with snow and freezing almost all the time. I see this on my March gas/heating bill too – I pay even a little more than in February! I don't remember such a long winter here since my childhood in the 70s. It is indeed difficult to convince people who live through this about the dangers of a global warming which the EU still absurdly pushes.

Dr. Jan Zeman, Prague, Czech Republic

Kasuha:

I am quoting your entire post at April 8, 2013 at 3:26 pm so there is no possibility that I am quoting you out of context.

You need new spectacles if you think consideration of what is happening now “looks like … cherry picking”. What period would not look like cherry picking to you?

The data is the data. It is not “supposed to mean” anything. It is what it is. That is where warmunists go wrong. Data can be interpreted to mean things according to the understandings of those who analyse it. But it is NOT “supposed to mean” what you – or anyone else – wants it to mean. However, an understanding why data is not supposed to mean anything requires an understanding of the scientific method, so there is no possibility of being able to explain it to a warmunist.

Similarly, different data sets provide a range of indications. Of course, warmunists select only the data which best provides the indication of what they think the data is supposed to mean. Hence, it is not surprising that you question a presentation of all the available data. Scientists consider all the available data.

You continue in like manner with your comments about ENSO. Whether or not you think ENSO should be included is not relevant to anything. The data is of reality, and ENSO is part of reality.

You say most GCMs don’t emulate ENSO. In fact, none of them do. And that alone is sufficient to demonstrate the models don’t emulate reality because ENSO is part of reality.

Your solution to this failure of the models to emulate reality is a suggestion that the measurements of reality should be adjusted to agree with the failure of the models. Clearly, Kasuha, you don’t understand this thing called science.

And, no, I am not going to discuss what you have misunderstood about Spencer’s work.

In conclusion, and as a kindness, I suggest you buy a clue before posting in future.

Richard

Kasuha says:

April 8, 2013 at 3:26 pm

Personally I don’t think the 1998 El Nino counts as part of the current flat trend, for instance.

I beg to differ for the following reasons. Please take a look at the two slope lines. One is for just over 16 years and the other is for just over 13 years. Both are flat. One starts before the 1998 El Nino and the other starts afterwards. The reason both are flat is that the La Ninas on either side of the El Nino cancel out the effect of the El Nino.

http://www.woodfortrees.org/plot/rss/from:1996.9/plot/rss/from:1996.9/trend/plot/rss/from:2000.08/trend

A while back, we were challenged to prove global warming had stopped 15 years ago. I did that and was promptly accused of cherry picking a start prior to the 1998 El Nino. I could not help it that 15 years ago took us on the other side of the El Nino. Another person started after the El Nino and was accused of not going 15 years! On RSS and several other data sets, 1998 has not been beaten yet. When would you consider it time to conclude the climate models are “not robust”?

Tume etc too long name LOL re solar activity v global temps. I have noted that of late L Svalgaard has not been hopping in….. again LOL

@eliza

Somehow I haven’t heard about the alarmist “tipping point” for long time. Now it looks there was indeed a tipping point – the end of the solar cycle 22 :)))

jorgekafkazar said: “…Also, temperature doesn’t follow concentration; it follows a logarithmic value of the concentration ratio relative to Time = zero. This is a very different thing from what the graph actually illustrates.”

Werner Brozek responds: “On three data sets,the graphs show no relation between CO2 and temperature for 16 years. Is it possible that the effect of CO2 is saturated so that even the supposed logarithmic relationship is no longer valid?”

Quite true, none shown. And yes, very possible it’s significantly saturated. Have you ever estimated the average distance that radiation travels to space from a spherical mass of gas at ground level? [Hint: photons are very, very stupid.]

jorgekafkazar says:

April 8, 2013 at 6:27 pm

Have you ever estimated the average distance that radiation travels to space from a spherical mass of gas at ground level?

Try the following:

http://wattsupwiththat.com/2011/03/29/visualizing-the-greenhouse-effect-molecules-and-photons/

“In this posting, we consider the interaction between air molecules, including Nitrogen (N2), Oxygen (O2), Water Vapor (H2O) and Carbon Dioxide (CO2), with Photons of various wavelengths. This may help us visualize how energy, in the form of Photons radiated by the Sun and the Surface of the Earth, is absorbed and re-emited by Atmospheric molecules.”

I don’t understand why the uncertainties are degrees per decade. In a hundred years, if the earth’s temps were 1.23 degrees C warmer than today (consistently), would one say “No warming has occurred?”

Ed Barbar says:

April 8, 2013 at 9:26 pm

I don’t understand why the uncertainties are degrees per decade. In a hundred years, if the earth’s temps were 1.23 degrees C warmer than today (consistently), would one say “No warming has occurred?”

As far as SkS is concerned, they just happen to give the numbers per decade and WFT uses per year. That is no big deal. If the warming is 1.0/decade or 0.10/year, it would amount to the same thing.

As for what an additional 1.23 C would mean, of course it would be a warming, however I seem to be missing the context that you are talking about. Are you talking about a slope of 0.00 with an uncertainty of +/- 0.123/decade? In that case, we would say there is a 50/50 chance that warming or cooling of up to 1.23 C can occur in 100 years. And furthermore, we are 95% certain that 1.23 would not be exceeded in either direction. But if it had actually happened, then there would no longer be a probability of it happening.
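The unit conversion and the 100-year range mentioned above can be sketched as follows. The function names are illustrative, and the calculation simply extrapolates a constant linear rate, which is the same simplification the paragraph makes.

```python
def per_decade_to_per_year(rate_per_decade):
    """Convert a trend in C/decade (as SkS reports) to C/year (as WFT reports)."""
    return rate_per_decade / 10.0


def change_over(rate_per_decade, years):
    """Total temperature change if a constant linear trend held for `years`."""
    return rate_per_decade * years / 10.0


# The hypothetical case discussed above: slope 0.00 +/- 0.123 C/decade.
slope, uncertainty = 0.0, 0.123

low = change_over(slope - uncertainty, 100)
high = change_over(slope + uncertainty, 100)

print(f"1.0 C/decade = {per_decade_to_per_year(1.0):.2f} C/year")
print(f"range after 100 years: {low:+.2f} C to {high:+.2f} C")
```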

But so what if it went from the present 0.80 to 2.03; would it be catastrophic and should billions be spent to prevent that from happening? We have survived the added 0.80 C very well and I just do not see that an additional 1.23 C would be all that bad. However that is a different topic.

Susan says:

April 8, 2013 at 11:28 am

If the hypothesis is correct, Mauna Loa should have shown something significant

in 2008-2009.

Since it didn’t where did the CO2 come from and what are the characteristics of

that source?

+++++++

The world slowed down, but China and emerging growth countries are GROWING and putting out more CO2 than other countries have cut. Even Germany is building a dozen new Coal Burning plants now while they are not growing.

Ed Barbar:

re your question at April 8, 2013 at 9:26 pm.

Warming is an increase in temperature.

So, if in a hundred years the temperature had not risen for 50 years then there would have been no warming for 50 years, and this would be true whatever the temperature then was.

Richard

> Personally I don’t think the 1998 El Nino counts as part of the

> current flat trend, for instance. It sure helps keeping the past

> end of many of your trend lines up, but at the time it was a

> huge positive anomaly, definitely not “business as usual”.

Ever heard of “Cherry Picking”? BTW, at that time, the warm-mongers were screaming about how it was going to be “the new normal”.

> And don’t forget that most of GCMs involved in the

> mentioned statement can’t actually simulate ENSO so you

> should remove the ENSO influence first, and only then

> start looking for flat trends you want to compare with them.

Let me get this straight… because your all-knowing/singing/dancing GCMs can’t model some parts of the climate record, you want that record thrown out? What’s next? Does all indication of cooling, which is contrary to the models, get thrown out, because it’s “inconvenient” for the carbon-tax money-grab? The science I was taught in high school was that if a theoretical model failed to conform with the data, the model was either thrown out (e.g. 19th century “ether” theory) or fixed until it did conform with the data. The science I was taught did not consist of throwing out data that did not conform with a theoretical model.

jorgekafkazar says

If I read Werner Brozek’s graph at the top of this post correctly; the sawtooth line is the plot of CO2 concentration in ppm(v) over time. It has nothing to do with the temperature. It does not need to have a zero point. What I think is causing the confusion is that there is no concentration scale on the graph. It would have been better to have put a concentration scale on the right side of the graph and making note that the trend lines and the concentration are not related.

Re rogerknights says: April 8, 2013 at 3:16 pm

With respect to Mr Lean’s perspective in the Telegraph;

He concedes that the “pause” in warming has occurred but sees it as a chance to avoid worse, more dangerous, warming that will supposedly follow unless certain policies are undertaken.

It may be useful to think about how long the pause needs to be before the policies become redundant.

If, for example, infrastructure has a thirty year life-cycle and the pause lasts thirty years then no policy would need to be implemented. Natural wear and tear would lead to society compensating at no extra cost. And anything less than thirty years is arguably weather, not climate.

If infrastructure had a fifteen year life-cycle and the pause also lasts 15 years then any policies implemented in 1998 would have been wasteful and so harmful.

So how long is infrastructure expected to last?

That varies from system to system. Power plants, transport systems and flood defences will all vary depending on location, use and obsolescence from new technology. It is open to discussion. Don’t take my word as a Holy Oracle.

But the current pause is getting near the lower end of infrastructure lifetimes. For example EUROPEAN STANDARD EN 61400-1 “The design lifetime for wind turbine classes I to III shall be at least 20 years.”

And so the current pause is getting near the point where climate change is not a justification for that infrastructure.

We are experiencing exactly such a peak as in the mid-forties; 30-year warming (1910-1945) followed by 30 years of cooling. Climate easily changes without help of CO2.

http://www.woodfortrees.org/plot/hadcrut3vgl/from:1910/to:1945

To

justthefactswuwt,

richardscourtney,

Mario Lento, and anyone else who responded.

While I suspect that due to the clueless WordPress, non-discussion technology, you likely won’t see this. Sigh.

Thanks for responding with all the measurements and beliefs that have been evangelized.

Unfortunately, as you mention, apart from a few bits of real data, most of what was espoused was conjecture, calculation (based on a multiplicity of assumptions), and wishful thinking.

We have real data and a real world event:

the U.S., supposedly 30% of the CO2 production,

has been flat for 8 years.

Mauna Loa doesn’t even come close to showing this:

it just keeps on trucking with no changes.

So, just where is it coming from?

As far as I can tell,

we don’t know

and

we have no clue.

So, no, the climate models are just plastic models for belly bucking little boys.

For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.134 C/decade at the two sigma level from 1990

For UAH the warming is not significant for over 19 years.

For UAH: 0.146 +/- 0.170 C/decade at the two sigma level from 1994

For Hadcrut3 the warming is not significant for over 19 years.

For Hadcrut3: 0.095 +/- 0.115 C/decade at the two sigma level from 1994

For Hadcrut4 the warming is not significant for over 18 years.

For Hadcrut4: 0.095 +/- 0.110 C/decade at the two sigma level from 1995

For GISS the warming is not significant for over 17 years.

For GISS: 0.111 +/- 0.122 C/decade at the two sigma level from 1996
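The figures quoted above can be checked with a short sketch. It only restates the listed trends and two sigma ranges and tests whether each range reaches zero; the function names are made up for illustration and are not from the article.

```python
# Trend and two-sigma half-width in C/decade, copied from the list above.
trends = {
    "RSS": (0.127, 0.134),
    "UAH": (0.146, 0.170),
    "Hadcrut3": (0.095, 0.115),
    "Hadcrut4": (0.095, 0.110),
    "GISS": (0.111, 0.122),
}


def interval(trend, half_width):
    """Return the (low, high) bounds of trend +/- half_width."""
    return (trend - half_width, trend + half_width)


def includes_zero(trend, half_width):
    """True when the two-sigma range reaches down to a zero trend."""
    low, high = interval(trend, half_width)
    return low <= 0.0 <= high


for name, (trend, half_width) in trends.items():
    low, high = interval(trend, half_width)
    print(f"{name}: {low:+.3f} to {high:+.3f} C/decade, "
          f"includes zero: {includes_zero(trend, half_width)}")
```

In each case the lower bound sits only slightly below zero, which is the sense in which the warming is described as "not significant" at the two sigma level.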

If you’re claiming that the warming is not significant, for it to be a meaningful statement you should state the level of the significance. In this case it’s at the 0.025 level, that is there’s somewhere between a 2.5% and 5% chance that it’s not warming, or there’s a greater than 95% chance that it’s actually warming! In horse race terms that’s 30 or 40 to one odds, looks like you’re backing an outsider!

greymouser70 says:

April 9, 2013 at 12:01 am

jorgekafkazar says

If I read Werner Brozek’s graph at the top of this post correctly, the sawtooth line is the plot of CO2 concentration in ppm(v) over time. It has nothing to do with the temperature. It does not need to have a zero point. What I think is causing the confusion is that there is no concentration scale on the graph. It would have been better to have put a concentration scale on the right side of the graph and to have noted that the trend lines and the concentration are not related.

The confusion is deliberate, only the CO2 data is normalized and the scale adjusted to fill the scale and plotted vs trends to give the impression that there’s a huge mismatch between CO2 and T. An honest way to present the data would be to normalize it all and plot the values (not the trends for T) on the same scale or plot ln(CO2) instead. If you realize that it’s the T trends that are plotted then what the graph is showing is that T is increasing linearly with a fairly constant trend (the CO2 is increasing with a fairly linear trend too, of ~0.5%/year).

Susan:

I am saddened by your reply at April 9, 2013 at 9:27 am .

It says

That is so wrong that it is risible.

Clearly, you did not read a word of the answer I took the trouble to provide.

OK. I have learned: you don’t ask questions wanting answers so I will not bother to provide an answer if you ask another question.

Richard

Phil.:

Your post at April 9, 2013 at 10:03 am displays complete ignorance of what confidence limits indicate.

To avoid others being misled by your post I copy a post I provided on another WUWT thread to here. Please note its part which concludes saying,

“And that is all the confidence limits say; nothing more.”

Richard

In the thread at

http://wattsupwiththat.com/2013/04/03/proxy-spikes-the-missed-message-in-marcott-et-al/

richardscourtney says:

April 6, 2013 at 6:44 am

Werner Brozek:

Thank you for your post addressed to me at April 5, 2013 at 5:47 pm.

Following several attempts to answer your specific questions in different ways – and cognisant of the need to use language comprehensible to onlookers – I have decided to provide this general answer in hope that it adequately covers all the issues you raise.

A full and proper consideration of these issues requires reference to text books concerning the use of statistical procedures as part of the philosophy of science. This very brief answer is my attempt at an overall view of confidence limits.

Nothing is known with certainty, but some things can be inferred from a data set to determine probabilities of being ‘right’ and ‘wrong’. These probabilities are the ‘confidence’ which can be stated.

As illustration, consider a beach covered in pebbles.

There are millions of the pebbles. A random sample of, say, 100 pebbles is collected and each pebble is weighed. This provides 100 measurements each of the weight of an individual pebble. From this an average weight of a pebble can be calculated. One such average is the ‘mean’ and it is obtained by dividing the total weight of all the pebbles by the number of pebbles (in this case, dividing by 100).

The pebbles on the beach can then be said to have the deduced mean weight.

However, none of the pebbles in the sample may have a weight equal to the obtained average. In fact, none of the millions of pebbles on the beach may have a weight equal to that average. Any average – including a mean – is a statistical construct and is not reality.

(This difference of an average from reality is demonstrated by the average – i.e. mean – number of legs on people. On average people have less than two legs because nobody has more than two and a few people have less than two.)
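The point about legs can be illustrated with a small sketch. The population of 1000 people below is entirely hypothetical, chosen only so that nobody has more than two legs and a few have fewer.

```python
from statistics import mean

# Hypothetical population (an assumption for illustration only):
# 998 people with two legs, one with one leg, one with none.
legs = [2] * 998 + [1] + [0]

average_legs = mean(legs)

print(f"mean number of legs: {average_legs}")
print(f"mean is below two: {average_legs < 2}")
print(f"anyone has exactly the mean number of legs: {average_legs in legs}")
```

Under these assumed numbers the mean comes out just under two, and no individual in the list has that number of legs.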

In addition to considering the mean weight of pebbles in the sample, one can also determine the ‘distribution’ of weights in the sample. Every pebble may be within 1 gram of the mean weight. In this case, there is high probability that any pebble collected from the beach will have a weight within 1 gram of the obtained average. But that does NOT indicate there are no pebbles on the beach which are 10 grams heavier than the obtained average. (This leads to the wider discussions of sampling and randomness which I am ignoring.)

Importantly, there is likely to be a distribution of weights such that most pebbles in the sample each have a weight near the mean weight, and a few pebbles have weights much lower and much higher than that average. This may provide a uniform distribution of weights within the sample. However, the sample may not have a uniform distribution because no pebble can weigh less than nothing, but a few pebbles may be much, much heavier than the mean weight: in this case, the sample is said to be ‘skewed’.

Assuming the sample is uniform then it is equally likely that a pebble will be within a range of weights heavier or lighter than the mean weight. (If the sample is skewed in the suggested manner then the likely range of weights heavier than mean weight is greater than the likely range of weights lighter than that average.) These ranges are the + and – ‘errors’ of the average and have determined probabilities.

The probable error range of +/-X at 99% confidence says that 99 out of 100 pebbles will probably be within X of the mean.

The probable error range of +/-X at 95% confidence says that 95 out of 100 (i.e. 19 out of 20) pebbles will probably be within X of the mean.

The probable error range of +/-X at 90% confidence says that 90 out of 100 (i.e. 9 out of 10) pebbles will probably be within X of the mean.

etc.

And that is all the confidence limits say; nothing more.

Therefore, if the weights of two pebbles are within the probable error range then at the stated confidence they cannot be distinguished as being different from the sample mean. And if a ‘heavy’ pebble has a weight within the probable error then that pebble’s weight says nothing about the sample mean.

In the above example, the sample mean is a statistical construct obtained from individual measurements of pebbles. And it is meaningless in the absence of a probable error with stated confidence: such an absence removes any indication of what the weight of a pebble from the beach is likely to be. And every pebble has equal chance of being within the +/- range of the mean.

The linear trend of a time series is also a statistical construct obtained from individual measurements. It has confidence limits with stated probability and it, too, is meaningless without such confidence limits.

At stated confidence a trend is equally likely to have any value within its limits of probable error.

(This is the same as any pebble is equally likely to have any weight within its limits of probable error from the mean weight.)

Therefore, if the trend is 0.003 ±0.223 °C/decade at 95% confidence then there is a 19:1 probability that the trend is somewhere between

(0.003-0.223) = -0.220 °C/decade and (0.003+0.223) = 0.226 °C/decade.

And, in this example, -0.220 °C/decade is not discernibly different from 0.226 °C/decade or from any value between them. There is a range of values from -0.220 °C/decade to 0.226 °C/decade which are not discernibly different. And similar is true for all probable error ranges.

Importantly, this lack of discernible difference is not affected by whether or not the range straddles 0.000 °C/decade.

I hope this is adequately clear.

Richard

Phil. says:

April 9, 2013 at 10:03 am

In this case it’s at the 0.025 level, that is there’s somewhere between a 2.5% and 5% chance that it’s not warming, or there’s a greater than 95% chance that it’s actually warming!

In addition to what Richard has already said on this matter, I would like to add the following. As you can see from NOAA’s statement at the start of my article, the climate community regards 95% as the appropriate level by which to measure whether warming is “significant” or not.

Generally speaking, I agree with you. When it comes to the 2.5%, that would be more true when I give the nearest month for which there is no significant warming. So for RSS, there would be about a 2.5% chance that there is cooling from September 1989. However from 1990, the numbers are 0.127 +/- 0.134. So that gives a 0.007/0.268 = 0.026 or a 2.6% chance of being below 0 based on the 95%. Then when we add the other 2.5%, we get 5.1%. In other words, there is a 94.9% chance of warming since 1990 based on RSS since 1990. I agree this is a large number! But the more important number for me is that since December, 1996, or for 16 years and 4 months, there is a less than 50% chance of warming since the slope is very slightly negative at -2.3524e-05 per year.
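The RSS arithmetic in the paragraph above can be reproduced as follows. This is only a sketch of that calculation: it follows the same approach of spreading the 95% evenly across the range and then adding the 2.5% lower tail, which is an assumption of this approach rather than a general rule, and the variable names are made up for illustration.

```python
# RSS trend since 1990 and its two-sigma half-width, in C/decade.
trend = 0.127
half_width = 0.134

lower_bound = trend - half_width   # about -0.007
range_width = 2 * half_width       # 0.268

# Share of the range lying below zero, treated as evenly spread
# (the approach used in the paragraph above).
share_below_zero = -lower_bound / range_width

# Add the 2.5% that lies below the lower end of the range.
lower_tail = 0.025
chance_not_warming = share_below_zero + lower_tail
chance_warming = 1.0 - chance_not_warming

print(f"share of range below zero: {share_below_zero:.1%}")
print(f"chance of no warming:      {chance_not_warming:.1%}")
print(f"chance of warming:         {chance_warming:.1%}")
```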

Susan says: April 8, 2013 at 10:24 am

Steve Keohane says: April 8, 2013 at 10:08 am

Humans account for some 3-4% of the CO2 released into the

atmosphere annually, so changing a fraction of a percent

of that 3-4% doesn’t do much.

OK,

so where does the CO2 indicated by Mauna Loa actually come from on the cycle measured?

Do we have any empirical measurements showing the source?

Here is a video of the AIRS satellite showing where in the world it comes from.

http://airs.jpl.nasa.gov/news_archive/2010-03-30-CO2-Movie/

Under Images and then CO2 on the menu bar to the left of this video, there are many options to look at CO2 over time.

Phil. says:

April 9, 2013 at 10:22 am

The confusion is deliberate, only the CO2 data is normalized and the scale adjusted to fill the scale and plotted vs trends to give the impression that there’s a huge mismatch between CO2 and T.

See:

http://newsbusters.org/blogs/noel-sheppard/2012/12/04/climate-realist-marc-morano-debates-bill-nye-science-guy-global-warmi#ixzz2E8w1uK5F

Here is part of the exchange between Nye and Morano:

“MORANO: Sure. Carbon dioxide is rising. What’s your point?

NYE: OK.

MORANO: No.

NYE: So here’s the point, is it’s rising extraordinarily fast. That’s the difference between the bad old days and now is it’s –”

Note that it is Nye, one of “your” people, who says that CO2 is “ rising extraordinarily fast”. And you accuse me of misrepresenting things? Would Nye not fully endorse what I showed?

However, if you wish, we could look at it another way. Let us compare the change with reference to 0 K and 0 CO2 since 1750. It is generally agreed that since 1750, temperatures have gone up by 0.8 C and that CO2 has gone up from 280 ppm to 395 ppm. If we convert temperatures to K, the percent increase is (287.8 – 287)/287 x 100 = 0.28%. However the CO2 increase is (395 – 280)/280 x 100 = 41%!
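For anyone who wants to check the two percentages, here is a minimal sketch using the values given above (287 K and 287.8 K for temperature, 280 ppm and 395 ppm for CO2). The helper name percent_increase is made up for illustration.

```python
def percent_increase(old, new):
    """Percent change from old to new, relative to old."""
    return (new - old) / old * 100


# Temperature in kelvin and CO2 in ppm, 1750 versus now, as quoted above.
temperature_change = percent_increase(287.0, 287.8)
co2_change = percent_increase(280.0, 395.0)

print(f"temperature: {temperature_change:.2f}%")
print(f"CO2:         {co2_change:.0f}%")
```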

richardscourtney @April 9, 2013 at 11:43 am

1. 30% risible..

2. didn’t read…

3. don’t want answers

a) according to one source, the U.S. in 2011 was 8,876 mt out of 33,992 world mt.

(everyone’s numbers can and do vary)

=> somewhere between 25 and 30% of anthropogenic CO2 is allegedly the U.S.

b) Yes, I read most of the diatribes.

While interesting, they repeat what has been articulated

many times.

c) so, just how do Mauna Loa measurements tie to reality?

I’m still waiting for an “out of the box”, thinking answer

rather than a regurgitation of the mundane, party line,

which is known to be an oversimplification of an ill-understood

set of phenomena.

So, any good ideas on just what Mauna Loa actually measures from a source perspective?