Guest Post By Werner Brozek, Edited By Just The Facts

In order to answer the question in the title, we need to know what length of time is reasonable to take into consideration. We also need to know exactly what we mean by “stalled”. For example, do we mean that the slope of the temperature-time graph must be 0 in order to be able to claim that global warming has stalled? Or do we mean that we have to be at least 95% certain that there indeed has been warming over a given period?

With regards to what a suitable time period is, NOAA says the following:

“The simulations rule out (at the 95% level) zero trends for intervals of 15 yr or more, suggesting that an observed absence of warming of this duration is needed to create a discrepancy with the expected present-day warming rate.”

To verify this for yourself, see page 23 of this NOAA Climate Assessment.

Below we present you with just the facts and then you can assess whether or not global warming has stalled in a significant manner. The information will be presented in three sections and an appendix. The first section will show for how long there has been no warming on several data sets. The second section will show for how long there has been no significant warming on several data sets. The third section will show how 2012 ended up in comparison to other years. The appendix will illustrate sections 1 and 2 in a different way. Graphs and tables will be used to illustrate the data.

Section 1

This analysis uses the latest month for which data is available on WoodForTrees.org (WFT). (If any data is updated after this report is sent off, I will note it in the comments for this post.) All of the data on WFT is also available at the specific sources as outlined below. We start with the present date and go back to the furthest month in the past from which the slope is at least slightly negative. So if the slope from September is 4 x 10^-4 but it is -4 x 10^-4 from October, we give the time from October, so that no one can accuse us of being less than honest if we say the slope is flat from a certain month.
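The search described above can be sketched in a few lines of Python. This is a minimal sketch, not necessarily how WFT computes its trends: it assumes the monthly anomalies are already in a plain list ordered from oldest to newest, fits an ordinary least-squares line from each candidate start month to the latest month, and returns the earliest start whose slope is below zero. The helper names `ols_slope` and `earliest_flat_start`, and the `min_months` floor, are hypothetical choices made for this illustration.

```python
def ols_slope(values):
    """Ordinary least-squares slope of values against their index (units per month)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx


def earliest_flat_start(anomalies, min_months=24):
    """Index of the furthest-back month from which the trend to the end is negative.

    Candidate starts are scanned from the oldest month forward, so the first
    start with a slope below zero gives the longest "flat" period. Starts that
    leave fewer than `min_months` points are skipped (an arbitrary floor chosen
    for this sketch). Returns None if no start qualifies.
    """
    for start in range(0, len(anomalies) - min_months + 1):
        if ols_slope(anomalies[start:]) < 0:
            return start
    return None
```

The returned index can then be turned into a calendar month by counting forward from the first month in the list.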

On all data sets below, the times for which the slope is at least very slightly negative range from 8 years and 3 months to 16 years and 1 month:

1. For GISS, the slope is flat since May 2001 or 11 years, 7 months. (goes to November)

2. For Hadcrut3, the slope is flat since May 1997 or 15 years, 7 months. (goes to November)

3. For a combination of GISS, Hadcrut3, UAH and RSS, the slope is flat since December 2000 or an even 12 years. (goes to November)

4. For Hadcrut4, the slope is flat since November 2000 or 12 years, 2 months. (goes to December)

5. For Hadsst2, the slope is flat since March 1997 or 15 years, 10 months. (goes to December)

6. For UAH, the slope is flat since October 2004 or 8 years, 3 months. (goes to December)

7. For RSS, the slope is flat since January 1997 or 16 years and 1 month. (goes to January) RSS is 193/204 or 94.6% of the way to Ben Santer’s 17 years.
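The durations in this list count both the starting month and the final month of data: May 1997 through November 2012, for example, is 187 months, or 15 years and 7 months. A short sketch of that arithmetic, assuming inclusive counting (the helper names are hypothetical), reproduces the RSS figure and the 193/204 fraction:

```python
def months_inclusive(start_year, start_month, end_year, end_month):
    """Number of months from start to end, counting both endpoints."""
    return (end_year - start_year) * 12 + (end_month - start_month) + 1


def as_years_months(months):
    """Split a month count into (years, months)."""
    return divmod(months, 12)


# RSS: January 1997 through January 2013
rss = months_inclusive(1997, 1, 2013, 1)
print(rss, as_years_months(rss))             # 193 (16, 1)
print(f"{rss / (17 * 12):.1%} of 17 years")  # 94.6% of 17 years
```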

The following graph, also used as the header for this article, shows just the lines to illustrate the above. Think of it as a sideways bar graph where the lengths of the lines indicate the relative times where the slope is 0. In addition, the sloped wiggly line shows how CO2 has increased over this period:

The next graph shows the above, but this time, the actual plotted points are shown along with the slope lines and the CO2 is omitted:

Section 2

For this analysis, data was retrieved from WoodForTrees.org and the ironically named SkepticalScience.com. This analysis indicates for how long there has been no statistically significant warming at the 95% level on various data sets. The first number in each case was sourced from WFT; the second, +/- number was taken from SkepticalScience.com.

For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.136 C/decade at the two sigma level from 1990

For UAH, the warming is not significant for over 19 years.

For UAH: 0.143 +/- 0.173 C/decade at the two sigma level from 1994

For Hadcrut3, the warming is not significant for over 19 years.

For Hadcrut3: 0.098 +/- 0.113 C/decade at the two sigma level from 1994

For Hadcrut4, the warming is not significant for over 18 years.

For Hadcrut4: 0.095 +/- 0.111 C/decade at the two sigma level from 1995

For GISS, the warming is not significant for over 17 years.

For GISS: 0.116 +/- 0.122 C/decade at the two sigma level from 1996

If you want to know the times to the nearest month that the warming is not significant for each set, they are as follows: RSS since September 1989; UAH since April 1993; Hadcrut3 since September 1993; Hadcrut4 since August 1994; GISS since October 1995 and NOAA since June 1994.
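The rule applied in this section can be written down directly: a trend counts as significant warming only when the whole interval from trend - 2 sigma to trend + 2 sigma lies above zero. For RSS, for example, 0.127 - 0.136 gives a lower bound of about -0.009 C/decade, so zero is inside the interval. The sketch below simply restates the five quoted trends and applies that rule; it takes the SkepticalScience +/- values as given and does not recompute them.

```python
# Trends in C/decade with their two-sigma half-widths, as quoted above.
trends = {
    "RSS (from 1990)": (0.127, 0.136),
    "UAH (from 1994)": (0.143, 0.173),
    "Hadcrut3 (from 1994)": (0.098, 0.113),
    "Hadcrut4 (from 1995)": (0.095, 0.111),
    "GISS (from 1996)": (0.116, 0.122),
}


def significant_warming(trend, two_sigma):
    """True if the whole trend +/- two_sigma interval lies above zero."""
    return trend - two_sigma > 0


for name, (trend, two_sigma) in trends.items():
    low, high = trend - two_sigma, trend + two_sigma
    print(f"{name}: {low:+.3f} to {high:+.3f} C/decade, "
          f"significant: {significant_warming(trend, two_sigma)}")
```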

Section 3

This section shows data about 2012 in the form of tables. Each table lists the six data sources down the left side, namely UAH, RSS, Hadcrut4, Hadcrut3, Hadsst2, and GISS. Along the top are the following columns:

1. 2012. Below this, I indicate the present rank for 2012 on each data set.

2. Anom 1. Here I give the average anomaly for 2012.

3. Warm. This indicates the warmest year on record so far for that particular data set. Note that two of the data sets have 2010 as the warmest year and four have 1998 as the warmest year.

4. Anom 2. This is the average anomaly of the warmest year just to its left.

5. Month. This is the month in which that particular data set showed its highest anomaly. Months are identified by the first two letters of the month and the last two digits of the year.

6. Anom 3. This is the anomaly of the month immediately to the left.

7. 11ano. This is the average anomaly for the year 2011. (GISS and UAH were 10th warmest in 2011. All others were 13th warmest for 2011.)

Anomalies for different years:

| Source | 2012 | Anom 1 | Warm | Anom 2 | Month | Anom 3 | 11ano |
|---|---|---|---|---|---|---|---|
| UAH | 9th | 0.161 | 1998 | 0.419 | Ap98 | 0.66 | 0.130 |
| RSS | 11th | 0.192 | 1998 | 0.55 | Ap98 | 0.857 | 0.147 |
| Had4 | 10th | 0.436 | 2010 | 0.54 | Ja07 | 0.818 | 0.399 |
| Had3 | 10th | 0.403 | 1998 | 0.548 | Fe98 | 0.756 | 0.340 |
| sst2 | 8th | 0.342 | 1998 | 0.451 | Au98 | 0.555 | 0.273 |
| GISS | 9th | 0.56 | 2010 | 0.66 | Ja07 | 0.93 | 0.54 |

If you wish to verify all rankings, go to the following:

For UAH, see here, for RSS see here and for Hadcrut4, see here. Note the number opposite 2012 at the bottom. Then, going up to 1998, you will find that there are 9 numbers larger than this one. That confirms that 2012 is in 10th place. (By the way, 2001 came in at 0.433, only 0.001 less than 0.434 for 2012, so statistically you could say these two years are tied.)

For Hadcrut3, see here. You have to do something similar to Hadcrut4, but look at the numbers at the far right. One has to go back to the 1940s to find the previous time that a Hadcrut3 record was not beaten in 10 years or less.

For Hadsst2, see here. View it as for Hadcrut3. It came in 8th place with an average anomaly of 0.342, narrowly beating 2006 by 2/1000 of a degree, as that year came in at 0.340. In my ranking I did not consider error bars; however, 2006 and 2012 would statistically be a tie for all intents and purposes.

For GISS, see here. Check the J-D (January to December) average and then count how often that number is exceeded from 1998 onward.
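Each of these checks amounts to counting how many years have a higher annual anomaly than 2012 and adding one. A minimal sketch, assuming the annual values have been copied by hand into a `{year: anomaly}` dictionary (`rank_of` is a hypothetical helper, and no data is bundled with it):

```python
def rank_of(year, annual):
    """Rank of `year` (1 = warmest) in a {year: anomaly} mapping.

    The rank is one more than the number of years with a strictly higher
    anomaly, so exact ties share the better rank.
    """
    target = annual[year]
    return 1 + sum(1 for value in annual.values() if value > target)
```

Ranks computed this way ignore error bars, so a gap of 0.001, like the one between 2012 and 2001 in Hadcrut4, separates two places even though the years are statistically tied.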

For the next two tables, we again have the same six data sets, but this time the anomaly for each month is shown. [The table is split in half to fit; if you know how to fit the whole year into one table, please let us know in the comments.] The last column has the average of all twelve monthly values.

| Source | Jan | Feb | Mar | Apr | May | Jun |
|---|---|---|---|---|---|---|
| UAH | -0.134 | -0.135 | 0.051 | 0.232 | 0.179 | 0.235 |
| RSS | -0.060 | -0.123 | 0.071 | 0.330 | 0.231 | 0.337 |
| Had4 | 0.288 | 0.208 | 0.339 | 0.525 | 0.531 | 0.506 |
| Had3 | 0.206 | 0.186 | 0.290 | 0.499 | 0.483 | 0.482 |
| sst2 | 0.203 | 0.230 | 0.241 | 0.292 | 0.339 | 0.352 |
| GISS | 0.36 | 0.39 | 0.49 | 0.60 | 0.70 | 0.59 |

| Source | Jul | Aug | Sep | Oct | Nov | Dec | Avg |
|---|---|---|---|---|---|---|---|
| UAH | 0.130 | 0.208 | 0.339 | 0.333 | 0.282 | 0.202 | 0.161 |
| RSS | 0.290 | 0.254 | 0.383 | 0.294 | 0.195 | 0.101 | 0.192 |
| Had4 | 0.470 | 0.532 | 0.515 | 0.527 | 0.518 | 0.269 | 0.434 |
| Had3 | 0.445 | 0.513 | 0.514 | 0.499 | 0.482 | 0.233 | 0.403 |
| sst2 | 0.385 | 0.440 | 0.449 | 0.432 | 0.399 | 0.342 | 0.342 |
| GISS | 0.51 | 0.57 | 0.66 | 0.70 | 0.68 | 0.44 | 0.56 |
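The Avg column is the mean of the twelve monthly values. A minimal sketch of that calculation for two rows copied from the tables above; other rows can be added the same way. Small differences in the last digit are possible where a data set's own annual figure is computed from unrounded values (an assumption, not something checked for each source).

```python
from statistics import mean

# Hadsst2 and GISS monthly anomalies for 2012, copied from the tables above.
monthly_2012 = {
    "sst2": [0.203, 0.230, 0.241, 0.292, 0.339, 0.352,
             0.385, 0.440, 0.449, 0.432, 0.399, 0.342],
    "GISS": [0.36, 0.39, 0.49, 0.60, 0.70, 0.59,
             0.51, 0.57, 0.66, 0.70, 0.68, 0.44],
}

for source, values in monthly_2012.items():
    assert len(values) == 12, "expected one value per month"
    print(f"{source}: {mean(values):.3f}")
```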

To see the above in the form of a graph, see the WFT graph below:

Appendix

In this part, we summarize the data for each set separately.

RSS

The slope is flat since January 1997 or 16 years and 1 month. (goes to January) RSS is 193/204 or 94.6% of the way to Ben Santer’s 17 years.

For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.136 C/decade at the two sigma level from 1990.

For RSS, the average anomaly for 2012 is 0.192. This would rank 11th. 1998 was the warmest at 0.55. The highest ever monthly anomaly was in April of 1998 when it reached 0.857. The anomaly in 2011 was 0.147 and it will come in 13th.

Following are two graphs via WFT. Both show all plotted points for RSS since 1990. Two lines are shown on the first graph: the upward sloping line starts from the point since which warming is not significant at the 95% confidence level, and the straight horizontal line starts from the point since which the slope is flat.

The second graph shows the above, but in addition there are two extra lines. These show the upper and lower 95% confidence limits. Note that the lower line is almost horizontal but slopes slightly downward. This indicates that, at the 95% confidence level, a slight cooling since 1990 cannot be ruled out according to RSS. See graph 1 and graph 2.

UAH

The slope is flat since October 2004 or 8 years, 3 months. (goes to December)

For UAH, the warming is not significant for over 19 years.

For UAH: 0.143 +/- 0.173 C/decade at the two sigma level from 1994

For UAH the average anomaly for 2012 is 0.161. This would rank 9th. 1998 was the warmest at 0.419. The highest ever monthly anomaly was in April of 1998 when it reached 0.66. The anomaly in 2011 was 0.130 and it will come in 10th.

Following are two graphs via WFT. Everything is identical to RSS except that the lines apply to UAH. Graph 1 and graph 2.

Hadcrut4

The slope is flat since November 2000 or 12 years, 2 months. (goes to December)

For Hadcrut4, the warming is not significant for over 18 years.

For Hadcrut4: 0.095 +/- 0.111 C/decade at the two sigma level from 1995

With Hadcrut4, the anomaly for 2012 is 0.436. This would rank 10th. 2010 was the warmest at 0.54. The highest ever monthly anomaly was in January of 2007 when it reached 0.818. The anomaly in 2011 was 0.399 and it will come in 13th.

Following are two graphs via WFT. Everything is identical to RSS except that the lines apply to Hadcrut4. Graph 1 and graph 2.

Hadcrut3

The slope is flat since May 1997 or 15 years, 7 months (goes to November)

For Hadcrut3, the warming is not significant for over 19 years.

For Hadcrut3: 0.098 +/- 0.113 C/decade at the two sigma level from 1994

With Hadcrut3, the anomaly for 2012 is 0.403. This would rank 10th. 1998 was the warmest at 0.548. The highest ever monthly anomaly was in February of 1998 when it reached 0.756. One has to go back to the 1940s to find the previous time that a Hadcrut3 record was not beaten in 10 years or less. The anomaly in 2011 was 0.340 and it will come in 13th.

Following are two graphs via WFT. Everything is identical to RSS except that the lines apply to Hadcrut3. Graph 1 and graph 2.

Hadsst2

The slope is flat since March 1997 or 15 years, 10 months. (goes to December)

The Hadsst2 anomaly for 2012 is 0.342. This would rank 8th. 1998 was the warmest at 0.451. The highest ever monthly anomaly was in August of 1998 when it reached 0.555. The anomaly in 2011 was 0.273 and it will come in 13th.

Sorry! The only graph available for Hadsst2 is this.

GISS

The slope is flat since May 2001 or 11 years, 7 months. (goes to November)

For GISS, the warming is not significant for over 17 years.

For GISS: 0.116 +/- 0.122 C/decade at the two sigma level from 1996

The GISS anomaly for 2012 is 0.56. This would rank 9th. 2010 was the warmest at 0.66. The highest ever monthly anomaly was in January of 2007 when it reached 0.93. The anomaly in 2011 was 0.54 and it will come in 10th.

Following are two graphs via WFT. Everything is identical to RSS except that the lines apply to GISS. Graph 1 and graph 2.

Conclusion

Above, various facts have been presented along with the sources from which they were obtained. Keep in mind that no one is entitled to their own facts; it is only in the interpretation of the facts that legitimate discussion can take place. After looking at the above facts, do you think that we should spend billions to prevent catastrophic warming? Or do you think that we should take a “wait and see” attitude for a few years to be sure that future warming will be as catastrophic as some claim it will be? Keep in mind that even the Met Office felt the need to revise its forecasts. Look at the following, and keep in mind that the Met Office believes that the 1998 mark will be beaten by 2017. Do you agree?

An error seems to have been made with all three tables. The new ones should have been put in with all December numbers up to date and with Hadcrut3 and 4 in 10th place.

Fitting a straight line to time series data assumes that the data generating process is linear.

We are quite sure that the underlying process is not linear. The imposition of a linear model is an analyst choice. This choice has an associated uncertainty such that you must either demonstrate that the physical process is linear or add uncertainty due to your model selection.

Next, you need to look at autocorrelation before you make confidence intervals.
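One common way to act on this, shown only as a sketch and not necessarily what WFT or SkepticalScience do, is to estimate the lag-1 autocorrelation r1 of the regression residuals and replace the sample size n with an effective sample size n_eff = n(1 - r1)/(1 + r1) when computing the standard error of the slope. The function name `trend_with_ar1_ci` is hypothetical, and clipping a negative r1 to zero is a design choice made here so that the adjustment can only widen the interval.

```python
import math


def trend_with_ar1_ci(values, sigmas=2.0):
    """OLS trend per time step with a half-width widened for AR(1) residuals.

    Uses the effective sample size n_eff = n * (1 - r1) / (1 + r1), where r1 is
    the lag-1 autocorrelation of the residuals. Returns (slope, half_width, r1, n_eff).
    """
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values)) / sxx
    intercept = mean_y - slope * mean_x
    resid = [y - (intercept + slope * x) for x, y in enumerate(values)]

    # Lag-1 autocorrelation of the residuals (OLS residuals already have mean ~0).
    ss = sum(e * e for e in resid)
    r1 = sum(a * b for a, b in zip(resid, resid[1:])) / ss if ss > 0 else 0.0
    r1 = max(r1, 0.0)  # design choice: only ever widen the interval
    n_eff = n * (1 - r1) / (1 + r1)
    if n_eff <= 2:
        raise ValueError("too few effective samples for a trend interval")

    std_err = math.sqrt(ss / (n_eff - 2) / sxx)
    return slope, sigmas * std_err, r1, n_eff
```

For monthly data the slope and half-width come out in degrees per month; multiplying both by 120 gives C/decade, the units used in Section 2.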

Well, I’m going to start with the premise that maybe global warming hasn’t stalled. It has stalled, but let’s just say that it hasn’t.

So what? The point is that we are recovering from the Little Ice Age, and the warming has been the product of nature, not of a theorized CO2 GHE. All they have actually is a theoretical model to back their conception of CO2’s GHE. No evidence. And other seemingly equally valid theoretical models do not lead CO2 to raise climate temps; for example, one model maintains that the GHE of CO2 is effectively nil after 200ppm.

The IPCC actually maintained that they had evidence of a causal correlation between CO2 & temperature until around 2004, when they were forced to acknowledge that there was no evidence. None. Nevertheless, Al Gore went ahead in his 2005 movie deceptively suggesting there was evidence. Because by and large the people don’t actually know about Al Gore & the IPCC’s deception on CO2, I ask that if possible you share and help spread the word about this key M4GW produced 3 minute video that shows algor’s blatant deception on CO2: http://www.youtube.com/watch?v=WK_WyvfcJyg&info=GGWarmingSwindle_CO2Lag

Werner/JTF, you also need to place a breakpoint somewhere near the start of the article. This takes up a lot of the WUWT front page.

Werner and JTFW, thank you.

Just above the Appendix should be this URL:

To see the above in the form of a graph, see the WFT graph below.

http://www.woodfortrees.org/plot/wti/from:2012/plot/gistemp/from:2012/plot/uah/from:2012/plot/rss/from:2012/plot/hadsst2gl/from:2012/plot/hadcrut4gl/from:2012/plot/hadcrut3gl/from:2012

Appendix

As well, the very last one at the end of the conclusion should have been:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16

Climate is always warming or cooling. It does tend to vary within some range for a time, though, unless something happens where the entire variation jumps to a new regime. The temperatures of the late 1990’s and early 2000’s for example topped out at about where they were in the 1930’s and 1940’s. There was really no “warming” in the second half of the 20th century. Temperatures cooled until the late 1970’s and then warmed back to where they were in the 1930’s.

Since the Dalton Minimum we have had the longest stretch of strong solar cycles since the Medieval Warm Period without a grand minimum. We also see the warmest temperatures since the Medieval Warm Period. If we are currently experiencing a period of several weak cycles, we might be in for a regime change as far as climate goes but on top of that regime we may still see a cycle of variation both warmer and cooler as we did in the LIA. Not all periods during the LIA were as cold as other periods.

What bothers me most about all of this “global warming” hype is that a brief portion of the temperature record was compared to CO2 change and causation was declared while at the same time ignoring previous recorded temperature changes where CO2 was not a factor (say 1912 to 1935).

Werner Brozek says: February 10, 2013 at 2:06 pm

An error seems to have been made with all three tables. The new ones should have been put in with all December numbers up to date and with Hadcrut3 and 4 in 10th place.

Corrected.

Dear Supporter

It seems that the temperature graphs are capable of misinterpretation by non-believers. This has led to unfortunate and counter-productive questions being raised by those who wish harm to The Cause. The tentacles of the Big Oil Funded Denier Conspiracy run deep.

We would like to assure all our supporters that we will leave no stone unturned in our quest to eliminate such disbelief.

As a first step, we have appointed a high-powered Adjustment Task Force. Their remit will be to understand in depth the root causes of such misinterpretation and make such data adjustments as are necessary to prevent their recurrence. They are taking guidance in this matter from the Modelling Secretariat to ensure that correct interpretations will then be made.

Secondly they will issue new guidelines on acceptable data for publication. ‘Observers’ please note…data falling outside of the expected ranges (as determined by the Modelling Secretariat) will be rejected.

Please delete this e-mail on receipt. Our colleagues in Norwich England had some difficulties by not doing so, so we urge you to double check.

William McClenney says: February 10, 2013 at 2:22 pm

Werner/JTF, you also need to place a breakpoint somewhere near the start of the article. This takes up a lot of the WUWT front page.

Added, thank you.

Werner Brozek says: February 10, 2013 at 2:29 pm

Just above the Appendix should be this URL:

To see the above in the form of a graph, see the WFT graph below.

http://www.woodfortrees.org/plot/wti/from:2012/plot/gistemp/from:2012/plot/uah/from:2012/plot/rss/from:2012/plot/hadsst2gl/from:2012/plot/hadcrut4gl/from:2012/plot/hadcrut3gl/from:2012

Appendix

As well, the very last one at the end of the conclusion should have been:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16

Corrected.

What’s the slope since Jan 1 1000 CE? 1 CE?

OOPs!

Appendix

As well, the very last one at the end of the conclusion should have been:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16

Corrected.

The very last one is the above going from 1990.

Climate Control Central Command (‘Data’ and ‘Observations’ Secretariat)

February 10, 2013 at 2:39 pm

###

Do you write for a living? If not, you should. Your post was brilliant.

Steven Mosher says:

February 10, 2013 at 2:16 pm

We are quite sure that the underlying process is not linear.

I agree with you. It should be a sine wave. But how would NOAA even make a statement based on a sine wave? As well, WFT works best with straight lines. So while this is not perfect, we have to use the tools we have.

Then we have some more for next year if global mean temps fail to rise.

After which the non-deniers have a choice. Deny or accept observations? No?

Steven Mosher says:

February 10, 2013 at 2:16 pm

Next, you need to look at autocorrelation before you make confidence intervals.

True, but he’s just established that there is no trend over a given section. How could there be autocorrelation?

It is easy to say that the temperatures are still increasing. Take zero at 1996, say that ’98 and a little bit after was the “warm” part of a natural cycle, and the current bit, the “cool” part of the same cycle. Split the difference, in other words: a clear warming trend from 1996 to today.

But …

The assumption here is that we had a warm-cool cycle from 1998 to today. In order to get back to “business-as-usual” CO2 warming, we must start warming fairly soon, and get a double digit warming as the “cooling” trend stops (more so if another natural warming cycle starts). So we need to have the temps going up by (in my view) 2015, or two years away.

Now the caveat and CAGW resolution: NOAA said a 15-year stall was not happening at a 95% level. Okay, it is a 25-year event, predicted only at a 75% level. Remember, a thing before it happens can have a 1% certainty of happening, but once it happens, it had a 100% chance of occurring. On a 1000-year level, a 25-year stall is unexpected, but does happen every so often.

That is what is happening right now, or so the argument could go.

We only give up our fight for the future when 1) the future is upon us, and 2) we don’t care any more. If 1) happens but 2) doesn’t, we’ll just change the date.

Jimbo says: @ February 10, 2013 at 3:39 pm

…..After which the non-deniers have a choice. Deny or accept observations? No?

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

Nah, they just change the goal posts. The monster in the closet has already morphed from “An Ice Age is Coming” to “Global Warming” to “Climate Change” to “Weather Weirding”

The only thing that remains the same is the need for a crisis.

“The whole aim of practical politics is to keep the populace alarmed (and hence clamorous to be led to safety) by menacing it with an endless series of hobgoblins, all of them imaginary…. Civilization, at bottom, is nothing but a colossal swindle.” ~ H.L. Mencken

Mencken really hit the nail on the head with this one:

“As democracy is perfected, the office of president represents, more and more closely, the inner soul of the people. On some great and glorious day the plain folks of the land will reach their heart’s desire at last, and the White House will be adorned by a downright moron.”

As usual, the purported skeptics here fail to look at the interesting question: what is the longest period for each data set such that all trends shorter than that period differ from a trend of 0.21 C per decade (the IPCC predicted value) by a statistically significant amount?

If you only look at the period in which the data does not differ statistically from zero, you are only using the dominance of noise in short term trends to evade the data, not to analyze it.
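One literal reading of this proposal can be sketched as follows, under assumptions that are ours rather than the commenter's: monthly anomalies in a plain list ending at the latest month, trends from ordinary least squares with a white-noise two-sigma interval (no autocorrelation adjustment, so the intervals are too narrow for real temperature data), and a floor of `min_months` because very short windows are dominated by noise. The sketch scans window lengths from the shortest upward and returns the longest length for which every window so far differs significantly from 0.21 C per decade. All names are hypothetical.

```python
import math

REFERENCE_PER_DECADE = 0.21  # the IPCC-predicted rate quoted in the comment


def trend_and_two_sigma(values):
    """OLS slope per step and its two-sigma half-width, assuming white noise."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values)) / sxx
    resid = [y - mean_y - slope * (x - mean_x) for x, y in enumerate(values)]
    std_err = math.sqrt(sum(e * e for e in resid) / (n - 2) / sxx)
    return slope, 2 * std_err


def longest_window_differing(monthly, reference_per_decade=REFERENCE_PER_DECADE,
                             min_months=60):
    """Longest L such that every window ending at the last month, with length
    from min_months up to L, has a trend significantly different from the
    reference. Trends are converted from C/month to C/decade (x 120).
    Returns None if even the shortest window is consistent with the reference.
    """
    longest = None
    for length in range(min_months, len(monthly) + 1):
        slope, half = trend_and_two_sigma(monthly[-length:])
        if abs(slope * 120 - reference_per_decade) <= half * 120:
            break  # this window is consistent with the reference; stop here
        longest = length
    return longest
```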

I’ve got a bit of a problem with the representation of CO2 (compared to linear trends) in the first graph. If you’d plotted the expected temperature response to the CO2 increase that might be more acceptable. Between 1998 and 2012 the annual average CO2 concentration has risen from ~367 ppm to ~394 ppm, which according to the Myhre et al formula should produce a forcing of about 0.4 watts/m2. If you’re a CAGW advocate this equates to a warming of 0.25-0.3 deg C. For a lukewarmer it’s more like 0.1 deg C. I doubt there is a statistically significant difference between the ‘lukewarmer’ projections and (any of) the actual data. This is possibly not the case for the CAGW projections, but this ignores other factors such as reduced solar activity over the past decade (~0.1 deg C ??).

In a nutshell: the lukewarmer projections look well on track while the lower end CAGW projections are still possible.
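The forcing figure can be checked with the widely used simplified expression ΔF = 5.35 ln(C/C0) W/m² (Myhre et al., 1998). The sketch below reproduces the roughly 0.4 W/m² quoted in this comment and divides the quoted warming figures by that forcing to show the sensitivity parameters they imply; those implied parameters are our own arithmetic, not numbers the commenter gives, and the calculation ignores lags and other forcings.

```python
import math


def co2_forcing(c_new_ppm, c_old_ppm):
    """Simplified CO2 radiative forcing in W/m^2: 5.35 * ln(C / C0)."""
    return 5.35 * math.log(c_new_ppm / c_old_ppm)


forcing = co2_forcing(394, 367)
print(f"forcing: {forcing:.2f} W/m^2")  # about 0.38, i.e. the ~0.4 quoted above

# Sensitivity parameters (deg C per W/m^2) implied by the warming figures in the comment.
for label, warming in [("CAGW, low", 0.25), ("CAGW, high", 0.30), ("lukewarmer", 0.10)]:
    print(f"{label}: {warming / forcing:.2f} C per W/m^2")
```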

My take – for what it’s worth.

Here is a WfT graph showing HadCrut4 temperature from 1850 – present day.

It is instructive to compare the periods ~1910-1940 (previous to the commencement of anthropogenic influence on climate) and ~1970-2000, the trends for which are effectively identical in duration and gradient.

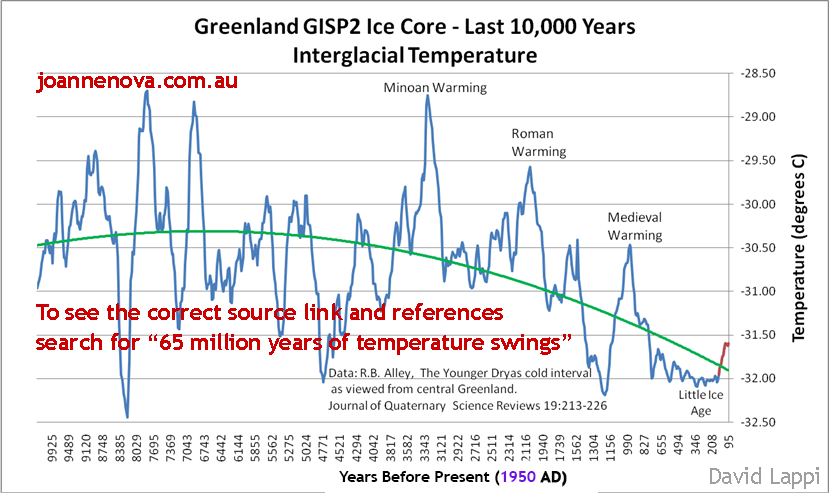

Clearly visible is the ~0.5 deg per century background warming, probably a section of the positive phase of the ~1000 year cycle responsible for the well-documented Minoan, Roman and Little Ice Ages and the respective cold periods separating them, and thus likely to change sign at some time in the future.

This is overlaid by a ~60 year harmonic that correlates quite well with the product of the Atlantic and Pacific ocean oscillations, correlating also with the cooling observed since ~2000.

Particularly noticeable are the statistically almost identical positive phases ~1910 – 1940 and ~1970 – 2000, for which Warmists for some unfathomable reason insist on different causes, presumably being unfamiliar with Occam’s Razor.

It appears from this graph that the cooling that has occurred since ~2000 will reverse ~2030.

This would suggest that – unless the solar effects overcome the current influences – the climate will continue to cool for the remainder of the current negative phase, until ~2030, when warming will recommence for a further ~30 year positive cycle.

http://www.woodfortrees.org/plot/hadcrut4gl/from:1850/trend/plot/hadcrut4gl/from:1910/to:1940/trend/plot/hadcrut4gl/from:2001/trend/offset:-0.1/plot/hadcrut4gl/from:1860/to:1880/trend/plot/hadcrut4gl/from:1970/to:2000/trend/plot/hadcrut4gl/from:1880/to:1910/trend/plot/hadcrut4gl/from:1940/to:1970/trend/plot/hadcrut4gl/from:1850/to:1860/trend/plot/hadcrut4gl/mean:120
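The decomposition described in this comment (a linear background trend plus a roughly 60-year oscillation) can be fitted by ordinary least squares once the period is fixed, because the model a + b·t + c·sin(2πt/P) + d·cos(2πt/P) is linear in its four coefficients. The sketch below assumes annual values and a fixed P = 60 years, solves the 4×4 normal equations with a small Gaussian-elimination helper rather than any particular library, and says nothing about whether a fitted cycle will actually continue. The function names are hypothetical.

```python
import math


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def fit_trend_plus_cycle(years, temps, period=60.0):
    """Least-squares fit of temp = a + b*t + c*sin(w t) + d*cos(w t), w = 2*pi/period.

    t is measured in years from the first year. Returns (a, b, amplitude, phase),
    where c*sin(w t) + d*cos(w t) = amplitude * sin(w t + phase).
    """
    w = 2 * math.pi / period
    t0 = years[0]
    rows = []
    for yr in years:
        t = yr - t0
        rows.append([1.0, t, math.sin(w * t), math.cos(w * t)])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    xty = [sum(r[i] * temp for r, temp in zip(rows, temps)) for i in range(4)]
    a, b, c, d = solve(xtx, xty)
    return a, b, math.hypot(c, d), math.atan2(d, c)
```

Refitting with a few different values of `period` and comparing the residuals is one way to see how sensitive the suggested ~2030 turning point is to the assumed cycle length.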

Further to Climate Control Central Command (‘Data’ and ‘Observations’ Secretariat) says:

February 10, 2013 at 2:39 pm.

Also for our comrades in East Anglia – if questioned by children as to why more white fluffy stuff is coming out of the sky they should be reminded that this is just another very rare and exciting event and in no way should be compared with the last event in January which cancelled trains to Norwich and closed over 2000 schools – which was extremely rare and exciting.

(Anglia News of about 2 hours ago:

More snow is falling in many parts of the Anglia region and it’s settling in some places. Up to 10 cm (4 inches) could accumulate in places. All the region’s councils have gritters out as temperatures are expected to fall close to freezing)

Your concise analysis is appreciated. Woodfortrees is a handy tool. Here is another graph with a longer baseline using only BEST, UAH and RSS.

http://www.woodfortrees.org/plot/best/to:1980/plot/rss-land/plot/uah-land

The real issue is the underlying data. There are people who say that the historical LAND surface temps no longer represent reality due to uncheckable computer modifications. The US/NOAA/GISS data are based on data from weather stations that were never intended to maintain scientific integrity. Hadcru has similar problems. In fact, very few surface temperature stations were ever established with any intent of long-term scientific value. The surfacestations project shows the major problem, i.e. “urban” contamination. Another major problem is the Time of Observation Bias (TOBS), which is essentially insoluble, but GHCN, NOAA and Hadcru have big computers, so they must be OK.

Here is an example of a selective analysis of good rural stations.

http://hidethedecline.eu/pages/ruti/europe/western-europe-rural-temperature-trend.php

Rural weather stations show zero warming.

Another example is Antarctica. Note these four stations established in 1957: Amundsen-Scott, Vostok, Halley and Davis.

All are maintained with the intent of long-term scientific integrity, and all are located in a place where, the warmers claim, the first signs of CO2-induced global warming will show. All four stations show zero warming since 1957. These data alone refute the entire IPCC argument.

In reality, no global network of surface temperature stations exists even today. And certainly not over the global ocean which is 70 percent of the planet.

What DO we have??

Well, 30 years of satellites that don’t really measure surface temps. The USCRN is not global and it’s only 10 years old. Proxy temps have huge error bars, and they may not be global proxies. Ocean thermometers are pretty much non-existent.

As for IPCC being an “authority” on the issue, I’ll never base my understanding on the claims of any “authority”. For this issue, see Delingpole or a few other good sources such as this older summary — http://www.john-daly.com/forcing/moderr.htm

Doug Proctor:

At February 10, 2013 at 4:05 pm you say

Sorry, but that is NOT what NOAA said.

The NOAA falsification criterion is on page S23 of its 2008 report titled ‘The State Of The Climate’ and can be read at

http://www1.ncdc.noaa.gov/pub/data/cmb/bams-sotc/climate-assessment-2008-lo-rez.pdf

It says

So, the climate models show “Near-zero and even negative trends are common for intervals of a decade or less in the simulations”.

But, the climate models RULE OUT “(at the 95% level) zero trends for intervals of 15 yr or more”.

We now see that reality has had (at the 95% level) zero trends for more than 17 years whether or not one interpolates across or extrapolates back across the 1998 ENSO peak.

The facts are clear.

According to the falsification criterion set by NOAA in 2008, the climate models are falsified by the recent period of 16+ years of (at 95% confidence) zero global temperature trend. This is because NOAA says the climate models simulations often show periods of 10 years when global temperature trends are zero or negative but the simulations rule out near zero trends in global temperature for periods of 15 years. What the models “rule out” nature has done.

We need to clearly and repeatedly remind people of what NOAA said in 2008. Otherwise the goal posts will be moved and moved again until they are off the planet.

Richard

On Feb 10 at 2:16 pm, Steven Mosher says:

“We are quite sure that the underlying process is not linear. The imposition of a linear model is an analyst choice.”

I am quite sure that nobody has any idea what the underlying process is. It has been implied frequently that the underlying process is linear, but I have never seen anybody step up to the plate and say that the underlying process is one thing or another. If someone was willing to do that, one could at least devise an experiment to confirm or falsify that hypothesis. It seems pretty intuitive that the process is not linear, but the notion that it is up to the experimentalist to prove that, boggles my mind.

The business of taking the average of an ensemble of simulations is not even vaguely convincing. The best case is that one is correct and the others are not, but we don’t know which one is correct, do we? Another possibility is that none is correct. Given that all of the GCM’s use the basic notion that GHG concentration is the prime driver, and that CO2 is very, very important, they could all have the same fallacy built in. Maybe that’s not true, you know.

For us (or maybe just me) statistics dummies, could you briefly explain what “2 sigma” means, and how it relates to the term “statistically significant”. I went to the SkS website and played with their trend calculator, and read some of the definitions. For example if a linear trend is 0.003 +/- 0.003, then the slope of the trend is between 0.000 and 0.006. But what does the 2 sigma mean, and how do you determine statistical significance? And what does that +/- range mean?

Thanks

[Reply: Sigma is shorthand for ‘standard deviation’ (that probably doesn’t explain much if you are not familiar with statistics already). The more standard deviations you have, the further you are from average. The -/+ means how much the value is likely to vary. So saying I have an 8 ounce cup of tea +/- 1 means I might have anywhere from a 7 ounce to a 9 ounce cup of tea. Statistical significance takes more than a note to explain, but mostly says how much you can trust a conclusion. If I have a sample of 6 cups of tea and they are average 8 ounces, it might be that the next one is 9 or 10. If I have a sample of 1200 cups, and they are 8 ounces +/- 0.1 ounce, it is highly likely (very statistically significant) that my next cup will be between 7.9 and 8.1 ounces. I can’t say that about my first sample as it is too small to be ‘statistically significant’. Back on that trend and +/-: When a trend has a value the same as the error range, it means you don’t know much about the trend. Having $2 +/- $2 says I might have money, or not, in equal probability… -ModE]

Not too happy about the way you put CO2 levels on the combined graph. What is the vertical axis in this picture? A temperature anomaly? Where is the axis for the CO2 levels? It clearly doesn’t start at zero. A wiggly line going up? I guess CO2 increased. But without numbers attached this is pretty meaningless. With no axis to pin this down you could scale it all over the page. So what does that wiggly line actually mean? Without an axis to give it context, not much.

Tom Jones says:

February 10, 2013 at 6:39 pm

The business of taking the average of an ensemble of simulations is not even vaguely convincing.

========

It is a nonsense. The earth has a near infinite number of possible futures. This is clearly shown by quantum mechanics. Some of those futures are more probable than others, but it is beyond our ability to calculate the probabilities.

Taking the average of 10 wrong answers does not improve the quality of the answer. If you ask 10 idiots the square root of 100, will the average be closer to the correct answer than any one of the answers? No, it is 10 times more likely that one of the idiots will be closer to the true answer than is the average.

The reason for this is simple. The idiots have 10 chances to get closer to the correct answer. The average only has 1. Thus you would expect that if you repeated the exercise 11 times, only once would the average be closer. The other 10 times one of the idiots would beat the average.

Can you imagine the panic and pandemonium if temperatures start trending downward?

For a variety of reasons, nothing would please me more.

SkepticGoneWild says:

February 10, 2013 at 6:42 pm

[Reply: Sigma is shorthand for ‘standard deviation’

=========

To complicate things further, most calculations of ‘standard deviation’ rely on the assumption that the underlying data is a “normal distribution”. Most of our statistical theory relies on this assumption.

However, it has been found that most time-series data (such as stock markets and climate) does not follow a normal distribution. This means that our confidence levels are often incorrect about how “likely” something is to happen. This leads people to bet the farm on a “sure thing” in the stock market, and is very similar to the current situation where politicians, scientists and countries are betting their economic future on climate.

We assume that the future is fairly certain because we expect the future to behave like the past. However, this is an entirely naive belief because we already know the past while we do not know the future. This leads us to significantly underestimate the size of the unknown when we try and consider all the things that might happen going forward.

Thus, the plans of mice and men aft go awry.

John Finn says:

February 10, 2013 at 4:43 pm

I’ve got a bit of a problem with the representation of CO2 (compared to linear trends) in the first graph.

Ian H says:

February 10, 2013 at 6:51 pm

What is the vertical axis in this picture?

I would like to thank you both for raising an excellent point. The way WFT works, you can plot several different things at the same time. Naturally the y axis is different for each case. If I were to just plot CO2 alone, it would look as follows and the y axis would go from about 360 ppm to about 397 ppm CO2. See:

http://www.woodfortrees.org/plot/esrl-co2/from:1997/to:2013

When two things are plotted as I have done, the left axis only shows a temperature anomaly. It happens to go from -0.2 to +0.8 C. I did not plan it this way, but as it turns out, a change of 1.0 C over 16 years is about 6.0 C over 100 years. And 6.0 C is what some say may be the temperature increase by 2100. See:

http://www.independent.co.uk/environment/climate-change/temperatures-may-rise-6c-by-2100-says-study-8281272.html

“The world is destined for dangerous climate change this century – with global temperatures possibly rising by as much as 6C – because of the failure of governments to find alternatives to fossil fuels, a report by a group of economists has concluded.“

So for this to be the case, the slope for three of the data sets would have to be as steep as the CO2 slope. Hopefully the graphs show that this is totally untenable.
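The axis arithmetic behind the "about 6.0 C over 100 years" remark can be checked with a tiny helper. `per_century` is a hypothetical name, and the 16-year span is assumed to be the 1997 to 2013 window of the plotted WFT graph.

```python
def per_century(change_c, years):
    """Scale a temperature change over `years` years to a rate per 100 years."""
    return change_c / years * 100.0

# Axis range from the post: -0.2 C to +0.8 C, i.e. 1.0 C, over 16 years.
rate = per_century(0.8 - (-0.2), 16)
print(f"{rate:.2f} C per century")
```

The exact figure is 6.25 C per century, which the post rounds to "about 6.0".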

Interesting that even with all the obvious fudging of numbers still going on, the alarmies can’t do any better than to say the temperature curve is “flat.” Any statement by them that it is flat infallibly means it is trending downward, and that cooling, not merely a pause in warming, has been occurring for the last 15 years.

A correction to one poster’s comment: There have never been any years anywhere near as warm as the 1934-1938 period since that time, NOAA and IPCC lies to the contrary notwithstanding – not even 1953, a relatively hot one, and certainly not 1997-1998. There has in fact been an overall cooling trend, as demonstrated by the negative slope of the regression line for the past 80 years. Cyclical variations in the meantime have not affected the overall downward trend over the longer term. And interestingly again, this long-term cooling has taken place while CO2 in the atmosphere increased by nearly 40 percent. Therefore, no CO2-caused, hence no man-caused, global warming. Q.E.D.

Second proof: Inter alia, animal respiration alone pours at least 30, maybe as much as 75 times as much CO2 into the air as human activity. And then, there is ordinarily 30 to 140 times as much water vapor as CO2 in the atmosphere at any given time, and water vapor is a substantially more effective heat-trapping substance. And these are only a couple of many examples that can be cited on both sides of the CO2 equation. Man is an infinitesimal of an infinitesimal – man’s role in climate change is therefore, mathematically speaking, one over infinity squared. Q.E.D. again.

The hubris and effrontery of people who think they can control climate – especially by controlling only one tiny fraction of one tiny factor in climate change – is truly breathtaking. Back off, God/Mother Nature (take your pick of which), we’re in charge now, they’re saying.

Ok. Thanks moderator and people. Things are a little bit clearer. Say for example the RSS data you posted:

“For RSS the warming is not significant for over 23 years.

For RSS: +0.127 +/-0.136 C/decade at the two sigma level from 1990″.

Could you run through how you determined it was 23 years for the above? Is it because the minus value of 0.136 gives one a negative trend of -0.009 C/decade (0.127 – 0.136)? So you are going back as far as you can to obtain a zero or slightly negative slope?

ferd berple says:

February 10, 2013 at 7:59 pm

“Thus, the plans of mice and men aft go awry.”

I think I prefer the original

“The best laid schemes of mice and men gang aft agley

An’ lea’e us nought but grief an’ pain,

For promised joy.”

It encapsulates the entire CAGW proposition from “scheme” with its connotation of dishonesty, to the obfuscation of “gang aft agley” which needs an expert to interpret, to the promise of an idealised future for a little inconvenience now.

Perhaps Mr. Burns was the true visionary.

ModE, you misunderstand statistical significance. When you say you have $2 +/- $2 it does not mean what you claim. If you’re using 1 sigma bands then you’re saying there’s a 68% chance that you have between 0 and $4 and a 16% chance that there’s less than 0. If you’re using the more likely 2 sigma limits then you’re saying there’s a 95% chance of 0 to $4 and a 2.5% chance of less than 0.

[Reply: OK, I mis-spoke. I was picturing a $2 bill that I either have, or do not. A discrete event. Ought to have said “Have $4 or No $ in equal probability”. Not so much a lack of understanding of statistics as trying to make a simple example for a newbie to have a vague idea what’s going on. So I said “have money” when I ought to have said “have $4”. Please, feel free to actually answer the poster’s question instead of just nit-picking. Oh, and remember to keep it at a level that requires no prior understanding of statistics. Oh, and make NO simplifying statements for illustration that might in any way be at variance with hard core statistical truth… But make sure it is absolutely clear. And do it in less than 3 sentences. -ModE]

Reply 2: No need to be snippy ModE. Phil. was making a valid point. ~uberMod
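For reference, the standard coverage figures for sigma bands can be computed directly, assuming the errors follow a normal distribution (an assumption questioned elsewhere in this thread). This is a minimal sketch using `math.erf`; the helper name `coverage` is hypothetical.

```python
import math

def coverage(k):
    """Probability that a normally distributed value lies within +/- k sigma of its mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 1.96, 2):
    inside = coverage(k)
    below = (1 - inside) / 2   # one tail: chance of falling below the lower bound
    print(f"+/-{k} sigma: {inside:.1%} inside, {below:.1%} below the lower bound")
```

Strictly, the familiar "95%" corresponds to about 1.96 sigma; a range of exactly 2 sigma covers about 95.4%.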

Wow, this may be the final nail. The U.N. conspiracy has stalled temperature rise to really throw off those who don’t see the conspiracy.

SkepticGoneWild says:

February 10, 2013 at 6:42 pm

could you briefly explain what “2 sigma” means

To add to what the moderator said, I would like to apply it to the numbers for RSS since 1990, namely: For RSS: +0.127 +/-0.136 C/decade at the two sigma level from 1990. See this illustrated on this graphic from the post:

http://www.woodfortrees.org/plot/rss/from:1990/plot/rss/from:1990/trend/plot/rss/from:1997/plot/rss/from:1990/plot/rss/from:1990/trend/plot/rss/from:1990/detrend:0.3128/trend/plot/rss/from:1990/detrend:-0.3128/trend/plot/rss/from:1997/trend

Now 0.127 – 0.136 is -0.009; and 0.127 + 0.136 is 0.263. What this is saying is that since 1990, on RSS, we can be 95% certain that the rate of change of temperature is between -0.009 and +0.263 C/decade. So while we may be 93% certain that it has warmed since 1990, we cannot be 95% certain. For some reason, in climate circles, 95% certainty is required in order for something to be considered statistically significant.
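The interval arithmetic in the paragraph above is easy to reproduce. The sketch below takes a trend quoted as "trend +/- 2 sigma" (as the SkS calculator reports it) and treats warming as significant only when the whole interval lies above zero; the function names are hypothetical, and the figures are the RSS-from-1990 numbers quoted in this thread.

```python
def two_sigma_interval(trend, two_sigma):
    """Return (low, high) bounds of a trend quoted as trend +/- two_sigma (C/decade)."""
    return trend - two_sigma, trend + two_sigma

def warming_significant(trend, two_sigma):
    """True if the whole 2-sigma interval lies above zero."""
    low, _ = two_sigma_interval(trend, two_sigma)
    return low > 0

low, high = two_sigma_interval(0.127, 0.136)   # RSS from 1990
print(f"{low:+.3f} to {high:+.3f} C/decade, "
      f"significant: {warming_significant(0.127, 0.136)}")
```

This is the two-sided reading; a one-tailed test asks a slightly different question and gives a different answer, as discussed further down the thread.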

Now having said all this, RSS shows no change for 16 years. This means there is a 50% chance that it cooled over the last 16 years and a 50% chance that it warmed over the last 16 years. Since this exceeds NOAA’s 15 years, their climate models are in big trouble.

So you are going back as far as you can to obtain a zero or slightly negative slope?

Yes, but that was only to the nearest whole year. If you go to:

http://www.skepticalscience.com/trend.php

And if you then start from 1989.67, you would find:

“0.131 ±0.132 °C/decade (2σ)”

In other words, the warming is not significant at 95% since September 1989. However, this is just barely true, and it only goes until October. If it went to January with the huge jump, it could change.
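The SkS trend calculator takes decimal years, so "1989.67" has to be mapped back to a month. A minimal sketch, assuming month m starts at year + (m - 1)/12 (the calculator's exact convention is an assumption here; a mid-month convention also gives September for this input). Note also that 0.131 - 0.132 = -0.001, which is why the interval just barely includes zero.

```python
def decimal_year_to_month(decimal_year):
    """Map a decimal year such as 1989.67 to (year, month).

    Assumes month m begins at year + (m - 1) / 12; a tiny epsilon absorbs
    floating-point error so that exact month starts are not rounded down.
    """
    year = int(decimal_year)
    month = int((decimal_year - year) * 12 + 1e-9) + 1
    return year, month

print(decimal_year_to_month(1989.67))   # (1989, 9): September 1989
```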

@Ferd Berple:

I touch on that here:

http://chiefio.wordpress.com/2012/12/10/do-temperatures-have-a-mean/

Oh, and as temperature is an intensive (intrinsic) property, it is not legitimate to average temperatures anyway (you need to convert to heat using mass and specific heat / enthalpy terms).

http://chiefio.wordpress.com/2011/07/01/intrinsic-extrinsic-intensive-extensive/

So the whole “Global Average Temperature” idea is broken in two very different ways, either one of which makes the whole idea bogus… But nobody wants to hear that, warmers or skeptics, as then they have to admit all their arguments over average temperature are vacuous…
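The "convert to heat using mass and specific heat" point can be illustrated with the textbook mixing formula: the equilibrium temperature is the sum of m·c·T over parcels divided by the sum of m·c. This is a minimal sketch, assuming constant specific heats, no phase changes and no heat lost to the surroundings (so it ignores the enthalpy terms mentioned above). The two parcels are hypothetical, using approximate specific heats for liquid water (about 4186 J/kg/K) and dry air (c_p about 1005 J/kg/K).

```python
def heat_weighted_temperature(parcels):
    """Equilibrium temperature of mixed parcels given (mass_kg, specific_heat_J_per_kgK, temp_C).

    Assumes constant specific heats, no phase changes and no heat exchange
    with anything outside the parcels.
    """
    heat_capacity = sum(m * c for m, c, _ in parcels)
    return sum(m * c * t for m, c, t in parcels) / heat_capacity

parcels = [
    (1.0, 4186.0, 20.0),  # hypothetical: 1 kg of liquid water at 20 C
    (1.0, 1005.0, 30.0),  # hypothetical: 1 kg of dry air at 30 C
]
naive = sum(t for _, _, t in parcels) / len(parcels)
print(f"naive mean {naive:.2f} C, heat-weighted {heat_weighted_temperature(parcels):.2f} C")
```

The naive average of the two readings is 25 C, but the mixture would settle near 21.9 C because the water holds far more heat per degree.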

Werner Brozek says:

February 10, 2013 at 9:12 pm

Now 0.127 – 0.136 is -0.009; and 0.127 + 0.136 is 0.263. What this is saying is that since 1990, on RSS, we can be 95% certain that the rate of change of temperature is between -0.009 and +0.263 C/decade. So while we may be 93% certain that it has warmed since 1990, we cannot be 95% certain.

Werner, thanks for your patient explanation. I understood most of what you stated, but I am somewhat confused by the statement quoted above. So according to the above data, the warming is not significant at 95%. But say the RSS data happened to be 0.127 +/- 0.127 C/decade at 2 sigma, you could then state that you are 95% certain that it warmed? But since the above real data had a negative value (-0.009), you could not be 95% certain it warmed?

Dear Supporter

The General Secretary of the CCCC has asked me to write directly to you to inform you of an important change in the way in which our operations will henceforth be conducted.

Following on from the poor publicity and consequent Denier opportunities presented by the mishandling of the recent temperature data and trends by our ‘colleagues’ in the D&O Secretariat, they have all accepted compulsory early retirement.

We have taken this opportunity to remove D&O from our strategic portfolio of work and so the Modelling Secretariat will now have complete control of all ‘data’ released for any form of publication. To boost our capabilities in this area we hope soon to acquire the services (on secondment) of a valued team of experienced climatalarmists currently located in Norwich UK.

Please welcome them to our happy bunch of crusaders.

I urge you all to redouble your efforts to ensure that only Modelling Secretariat approved data is ever released. There is only one True Message and we must ensure that there are no further misunderstandings because of a lack of zeal and enthusiasm for the cause.

Though regrettable, we also need to introduce severe disciplinary sanctions for any deviations from the path. To emphasise this point the previous leader of D&O will be making a public confession of his errors live on webcam tomorrow at noon. The memorial service will begin at 14:00.

The General Secretary also wishes to convey his best wishes to you all. His well-earned retirement begins forthwith – at an undisclosed location. Rest assured GS, that we will be able to find you so that your past ‘achievements’ can be put in their proper context and the judgement of history applied!

Of course, our honoured Emeritus Professor – who has recently added a further honour to his distinguished record – will continue to use Twitter to remind you of the scientific facts you need to use in your day-to-day work

Best Wishes to You All

LA

[/sarcasm ? Mod]

@mod

See the earlier message from the Climate Control Central Command (‘Data’ and ‘Observations’ Secretariat)

http://wattsupwiththat.com/2013/02/10/has-global-warming-stalled/#comment-1221832

All should become clear.

LA

Did anyone calculate the correlation between CO2 levels and temperature? It would be interesting to see.
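For anyone who wants to try it, the Pearson correlation coefficient is simple to compute once CO2 and temperature values are lined up month by month. This is a minimal sketch; the function name is hypothetical, and the sample numbers below are made-up placeholders, not real Mauna Loa or temperature data. Keep in mind that two series that both trend over time can show an inflated correlation, so a high r on its own says little about cause.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Placeholder values only: substitute real, time-aligned series.
co2_ppm = [370.0, 372.0, 374.0, 376.0, 378.0]
anomaly_c = [0.3, 0.5, 0.2, 0.4, 0.3]
print(f"r = {pearson_r(co2_ppm, anomaly_c):.3f}")
```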

SkepticGoneWild says:

February 10, 2013 at 10:07 pm

But say the RSS data happened to be 0.127 +/- 0.127 C/decade at 2 sigma, you could then state that you are 95% certain that it warmed?

You are 95% certain the real slope is between 0.000 and 0.254. So I guess there would be a 2.5% chance the slope is above 0.254 and a 2.5% chance the slope is less than 0. So you would be 97.5% certain there was warming in this case.

But since the above real data had a negative value (-0.009), you could not be 95% certain it warmed?

I was tempted to say yes that “you could not be 95% certain it warmed”, but I think I may have to ask for help here. In view of the 97.5% that I mentioned above, I am starting to wonder if there is a very small negative value that would allow you to say that you can be sure it warmed at a 95% confidence level. Can Phil. or someone else help me out? Thanks!

It’s enough to make a grown man cry. Here we have a set of facts that no one is disputing, and the catastrophists are spinning it as a success, the lukewarmers are claiming it proves them right, and the sceptics (including me) are agog that anyone can doubt they were right all along.

More cherry picking.

Let’s just concentrate on one of the data sets shown – the claim that Hadcrut4 temperature data is flat since November 2000.

Look also at the data from 1999. Or compare the entire Mauna Loa data set from 1958 with temperature.

And remember, “statistical significance” here is at the 95% level. That is, even a 94% probability that the data is not a chance result fails at this level.

http://tinyurl.com/atsx4os

The final chart with the question “the MET office believes that the 1998 mark will be beaten by 2017. Do you agree?” looks rather different if you put a simple linear trend line through the data presented.

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16/trend

Steven Mosher says:

Fitting a straight line to time series data assumes that the data generating process is linear.

We are quite sure that the underlying process is not linear. The imposition of a linear model is an analyst choice. This choice has an associated uncertainty such that you must either demonstrate that the physical process is linear or add uncertainty due to your model selection.

Next, you need to look at autocorrelation before you make confidence intervals.

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/mean:9/mean:7/derivative/plot/sine:10/scale:0,00001/from:1990/to:2015
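To make the autocorrelation point concrete, here is a minimal sketch of an ordinary least-squares trend whose standard error is widened using the lag-1 autocorrelation of the residuals. It uses one common AR(1) adjustment, an effective sample size n_eff = n(1 - r1)/(1 + r1), and is not claimed to be what any particular trend calculator does. It also does nothing about the separate point that a straight line may be the wrong model. The function name is hypothetical.

```python
import math

def ols_trend_ar1(y):
    """Least-squares slope per time step, with naive and AR(1)-adjusted standard errors.

    Adjustment: n_eff = n (1 - r1) / (1 + r1), where r1 is the lag-1 autocorrelation
    of the residuals; the standard error is inflated by sqrt((n - 2) / (n_eff - 2)).
    """
    n = len(y)
    t = list(range(n))
    tm, ym = sum(t) / n, sum(y) / n
    sxx = sum((ti - tm) ** 2 for ti in t)
    slope = sum((ti - tm) * (yi - ym) for ti, yi in zip(t, y)) / sxx
    intercept = ym - slope * tm
    resid = [yi - (intercept + slope * ti) for ti, yi in zip(t, y)]
    ss = sum(e * e for e in resid)
    se_naive = math.sqrt(ss / (n - 2) / sxx)
    # Lag-1 autocorrelation of the residuals (0 if the fit is perfect).
    r1 = sum(a * b for a, b in zip(resid, resid[1:])) / ss if ss > 0 else 0.0
    n_eff = n * (1 - r1) / (1 + r1)
    if n_eff <= 2:
        return slope, se_naive, math.inf   # too few effective points for an interval
    return slope, se_naive, se_naive * math.sqrt((n - 2) / (n_eff - 2))
```

For monthly anomalies, multiplying the slope and standard errors by 120 gives C/decade, and twice the adjusted standard error gives a 2-sigma range. With positively autocorrelated residuals (typical for temperature series) the adjusted range is wider than the naive one.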

=====

This is a very good point. Sadly, this is what most of climate science seems to have been feeding us for the last 30 years, especially the IPCC version of climate history. Show a trend over, say, the last 50 years (innocently chosen round number NOT). Then say “if present trends continue”, with the unstated yet implied assumption that this is obviously what will happen if we don’t change our ways.

Now we are all agreed that this is bad and totally unscientific. So can a layman do any better messing around with the limited facilities offered by WFT.org? [sic]

Firstly, if we are interested in whether temps are rising faster or slower, why the hell aren’t we looking at rate of change rather than trying to guess it by looking at the time series and squinting a bit? Take the DERIVATIVE.

If rate of change is above zero it’s warming, below zero it’s cooling. You don’t need a PhD to view that.

dT/dt, or the time derivative, is available on WFT.org, so let’s use it.

While we are there, let’s stop distorting the data with these bloody running means that even top climate scientists of all persuasions can’t seem to get beyond. Running means let through a lot of the stuff we imagine we have filtered out and, worse still, actually INVERT some frequencies, i.e. turn a negative cycle into a positive one. That is why you can often see the running mean show a trough when the data is showing a peak.

Again, we can do better even with limited tools like the ones WFT offers.

Without going into detail on the maths, if you do a running mean of 12, then 9, then 7 months, this makes a half-decent filter that does not invert stuff and gives you a way smoother result because it really does filter out the stuff you intended.
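The 12/9/7 cascade described above, followed by a month-to-month derivative, can be sketched in a few lines. This is an illustration of the idea only: it keeps just the points where each full window fits and takes simple first differences, and it is not known to match how WFT aligns or trims its running means. The function names are hypothetical; passing `windows=(24, 18, 14)` gives the longer variant used in the next graph.

```python
def running_mean(xs, window):
    """Simple moving average; keeps only positions where the full window fits."""
    return [sum(xs[i:i + window]) / window for i in range(len(xs) - window + 1)]

def cascaded_derivative(xs, windows=(12, 9, 7)):
    """Apply running means in sequence, then take month-to-month differences (dT/dt)."""
    smoothed = xs
    for w in windows:
        smoothed = running_mean(smoothed, w)
    return [b - a for a, b in zip(smoothed, smoothed[1:])]
```

Applied to monthly anomalies, values above zero indicate warming and values below zero indicate cooling; each pass shortens the series by (window - 1) points and the differencing by one more.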

So I’ll just take one data series used above at random ( without suggesting it is more or less relevant than the others).

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/mean:9/mean:7/derivative/plot/sine:10/scale:0,00001/from:1990/to:2015

BTW, if anyone knows how to get a grid or at least the x-axis: I just hacked a sine wave with minute amplitude, but it won’t go all the way across. WFT.org is a bit of a mess but easy to use for those who don’t know how to get and plot data themselves.

Just The Facts may like to add this sort of graph for all the datasets reported here.

Here’s another view of this, upping the filter to remove variations of 2 years and shorter.

http://www.woodfortrees.org/plot/hadcrut4gl/from:1970/mean:24/mean:18/mean:14/derivative/plot/sine:10/scale:0,00001/from:1990/to:2015

Here we see the 80s and 90s each had two warming periods and one cooling each. Since 1997 it is swinging about evenly each side of zero.

I’d call that ‘stalled’ if we want to draw a simplistic conclusion.

Switch the columns for rows on the last table.

(Data sets across the top row, months down the left-hand column.)
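Switching columns for rows is a transpose. A minimal sketch with a hypothetical helper and placeholder cell labels (not the post's actual table values):

```python
def transpose(rows):
    """Swap rows and columns of a rectangular table given as a list of rows."""
    return [list(col) for col in zip(*rows)]

# Placeholder layout: one row per month, one column per data set.
table = [
    ["Month", "Set A", "Set B"],
    ["Jan", "a1", "b1"],
    ["Feb", "a2", "b2"],
]
for row in transpose(table):
    print(row)
```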

http://www.woodfortrees.org/plot/hadcrut4gl/from:1970/mean:24/mean:18/mean:14/derivative/plot/sine:10/scale:0,00001/from:1990/to:2015/plot/gistemp/mean:24/mean:18/mean:14/derivative/from:1970/plot/rss/from/mean:24/mean:18/mean:14/derivative/plot/hadsst2gl/from:1970/mean:24/mean:18/mean:14/derivative

Including more data sets. Interestingly it is only the RSS satellite data that shows a noticeably larger positive swing than negative swing in the more recent cycle.

Last Wednesday, David Attenborough said on the BBC’s much acclaimed nature programme ‘Africa’ that the world had warmed by 3.5 deg. C over the last 2 decades. So much for temperatures being flat!

But it seems that the BBC has now backed down on this claim.

http://www.telegraph.co.uk/earth/environment/climatechange/9861908/BBC-backs-down-on-David-Attenboroughs-climate-change-statistics.html

The comment, first broadcast in the final episode of the Africa series last Wednesday, was removed from Sunday night’s repeat of the show.

So the extra heat must have gone into the oceans, where it fed Irene, Sandy and Nemo and is melting sea- and shelf ice everywhere.

E.M.Smith:

At February 10, 2013 at 9:25 pm you say

Not true.

For many years several of us have been pointing out those and other fundamental problems with the global temperature data sets. Please read Appendix B and the list of signatories to that Appendix in the item at

http://www.publications.parliament.uk/pa/cm200910/cmselect/cmsctech/memo/climatedata/uc0102.htm

Richard

MikeB says:

February 11, 2013 at 2:20 am

Last Wednesday, David Attenborough said on the BBC’s much acclaimed nature programme ‘Africa’ that the world had warmed by 3.5 deg. C over the last 2 decades. So much for temperatures being flat!

He probably misread the script and missed the point in front. Don’t forget he is getting old and his eyesight is probably not good.

The Central England Temperature maximum trend for March has gone up 0.5 degrees C since 1994 whereas the minimum has fallen 1 degree C. If you look at the maximums and minimums for each month they are all doing different things. Some months are warming and some months are cooling.

If you plot all the 1sts of January since 1878, you can see an 88-year (approx.) sine wave.

Philip Shehan:

Your entire post at February 11, 2013 at 1:27 am says

I know it is a big ask for you, but please try not to be idiotic.

The question being addressed is “Has global warming stalled?”.

That question is about the here and now: it is not about some other time.

There is only one period to choose when considering what is happening NOW: i.e. back in time from the present (we can’t go forward because we don’t know the future). Any other period is a ‘cherry pick’: indeed, it is inappropriate.

So, the real issue is determination of how far back in time is needed to discern global warming.

And an important consideration in this determination is whether or not (at the 95% level) a zero trend has existed for 15 or more years. This is because in 2008 NOAA reported that the climate models show “Near-zero and even negative trends are common for intervals of a decade or less in the simulations”.

But, the climate models RULE OUT “(at the 95% level) zero trends for intervals of 15 yr or more”.

I explain this with reference, quote, page number and link to the NOAA 2008 report in my post at February 10, 2013 at 5:48 pm.

Furthermore, the appropriate comparison to MLO CO2 records is over the determined period(s) of (at the 95% level) a zero trend(s). It is not “since 1958” because the global temperature has had (at the 95% level) a positive trend since then and, therefore, it would be an ‘apples to umbrellas’ comparison.

I understand your difficulty. What the models “rule out” nature has done, and this falsifies your cherished models. You need to come to terms with it.

Richard

I have but one question: if the rather dubious corrections to the older temperatures (people in the old days did not read the thermometers correctly) were removed, might the trend be down rather than zero?

This is more of a worry than warming, the last long cold spell some time ago was not conducive to comfort nor the growing of food.

It bothers me that I have young grand children and fools are squandering our future capacity to adapt quickly to a fate worse than a degree or two of warming.

Yes, it must have done mustn’t it? We can’t find it there but that’s no problem, where else can it have gone? ( This begs the question of why it should suddenly decide to store itself in the ocean instead of warming the air as it did before)

On the other hand, if the extra heat is not hiding somewhere then there is something wrong with the theory – and we can’t have that.

Sherlock Holmes said to Holmes…

For the accurate info about what Attenborough said, see:

http://blogs.telegraph.co.uk/news/jamesdelingpole/100202234/no-david-attenborough-africa-hasnt-warmed-by-3-5-degrees-c-in-two-decades/

None of the analysis presented here actually answers the question asked at the top of the page “Has global warming stalled?”. To answer that question, you need to start with the null hypothesis (to be disproved) that global warming has not stalled, but continues unabated.

Take Hadcrut4 for example. You give two facts: the trend from Nov 2000 is flat, and the trend since 1995 does not show significant warming (i.e. it fails to reject a null hypothesis of no warming at the 95% level). However, if you also look at what was happening before these dates, you can compare before and after.

For Hadcrut4, after Nov 2000, the trend is -0.008 +/- 0.171 C/decade. Between 1900 and Nov 2000 the trend was +0.064 +/- 0.010. The rising trend of 0.064 is well within the 2-sigma bounds of the trend since Nov 2000. It is also possible that the trend has doubled to 0.128 per decade, as that is also comfortably within the 95% limits of the trend since Nov 2000. So there is no statistical evidence presented here that suggests that temperature is not continuing to rise at the rate that it was rising prior to Nov 2000, or even at a significantly faster rate.
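The check in the paragraph above (does a reference trend fall inside another trend's 2-sigma range?) can be written as a one-line test. A minimal sketch with a hypothetical helper, using the HadCRUT4 figures quoted in the comment:

```python
def within_two_sigma(reference, trend, two_sigma):
    """True if a reference trend lies inside trend +/- two_sigma (all in C/decade)."""
    return trend - two_sigma <= reference <= trend + two_sigma

# HadCRUT4 after Nov 2000: -0.008 +/- 0.171 C/decade, i.e. about -0.179 to +0.163.
for ref in (0.064, 0.128):
    print(ref, within_two_sigma(ref, -0.008, 0.171))
```

Both the pre-2000 rate (0.064) and double that rate (0.128) fall inside the range, which is the comment's point: the post-2000 data cannot distinguish them from zero.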

It is indeed within the bounds of 95% confidence limits that global warming has stalled. It is also possible that it has reversed and is now heading downwards. But it is also statistically quite possible that it has continued or even increased in trend.

As for the fact that trend since 1995 does not show significant warming; at 0.095 +/- 0.111 it may not be statistically distinguishable from a zero trend, but the trend estimated from this data is actually HIGHER than the long-run trend since 1900.

The same failure to answer the basic question is true of every fact presented here. Just because a cherry-picked period (and the analysis in this post is the very definition of cherry-picking!) has a trend that is statistically indistinguishable from zero doesn’t mean that global warming has stalled. You need to show that the trend is statistically distinguishable from continued (or even accelerated) warming.

Werner, when we apply significance tests to this data as to whether the trend is warming, we ought to be applying a ‘one-tailed’ test, not a ‘two-tailed’ test. The latter says that there’s a 95% chance of being in the range, whereas the former says there’s a 2.5% chance of being below the lower bound. In the case of the RSS data there’s an ~3% chance of being below 0, therefore you’d say that warming was significant at the 95% level. A better way of testing would be to use a test like the Pearson test and work with the correlation coefficient, but you’d still use the ‘one-tailed’ version. The 95% cut-off is commonly used in science and engineering, often thought of as 20:1 odds of being right.
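The one-tailed figure can be computed from the quoted "trend +/- 2 sigma" numbers. This is a minimal sketch, assuming normally distributed errors and that the quoted range is exactly 2 standard deviations (the SkS calculator labels it 2σ); the helper name is hypothetical.

```python
import math

def prob_below_zero(trend, two_sigma):
    """One-tailed chance that the true trend is below 0, assuming normal errors
    and that the quoted +/- range is exactly 2 standard deviations."""
    sigma = two_sigma / 2
    z = trend / sigma
    return 0.5 * math.erfc(z / math.sqrt(2))   # equals 1 - Phi(z)

for trend, two_sigma in ((0.127, 0.136), (0.127, 0.127)):
    p = prob_below_zero(trend, two_sigma)
    print(f"{trend} +/- {two_sigma}: {p:.1%} chance below zero")
```

For RSS from 1990 this gives about 3.1%, matching the "~3%" above; for the hypothetical 0.127 +/- 0.127 case it gives about 2.3% (closer to 2.5% if the range were 1.96 sigma).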

…the extra heat….

Begging the question.

Tom Curtis says:

February 10, 2013 at 4:24 pm

“As usual, the purported skeptics here fail to look at the interesting question, what is the longest period for each data set such that all trends shorter than that period are differ[ent] from a trend of 0.21 C per decade (the IPCC predicted value) by a statistically significant amount.”

A nonsensical question really, since if you pick any specific time period within any temperature data set and then look at trends, within that period, of a shorter length of time – all these shorter length trends will automatically have a higher level of uncertainty attached to them (the shorter the time period the greater the uncertainty in the data) and therefore the chances of them being statistically distinguishable, at the 95% level, from a trend of 0.21 C per decade will of course be lower and lower the shorter the trends get.

However, there’s plenty of long periods of time where the trend is different from a trend of 0.21 C per decade by a statistically significant amount, for instance, using this tool:

http://www.skepticalscience.com/trend.php

And looking at the HADCRUT 4 data from 1863 – 2013, you get a trend of 0.051 +/- 0.007 C/decade. So that’s a 150 year period where the trend only has a 5% chance of being higher than 0.058 C/decade, nowhere near as high as 0.21 C per decade.

It’s the same story with every other trend data set you can look at with the trend calculator – apart from the two satellite data sets since of course the data doesn’t go back as far. Can’t get a 150 year period with that. Interestingly though, with those satellite datasets, the trend since satellite records began, to present, is still statistically distinguishable from a trend of 0.21 C per decade, with 95% confidence in one case. The two results are:

RSS: 0.133 +/- 0.073 C/decade (so a maximum trend, at the 95% confidence level, of 0.2060 C per decade… still not as high as the IPCC predicted value of 0.21 C per decade unless you’re going to round up to 2 decimal places… and it could also be as low as 0.06 C/decade with the same level of confidence).

UAH: 0.138 +/- 0.074 C/decade (so a maximum trend, at the 95% confidence level, of 0.2120 C per decade… so JUST within the bounds of their prediction. It could also be as low as 0.064 C/decade).

Philip Shehan says:

February 11, 2013 at 1:27 am

“More cherry picking.

Let’s just concentrate on one of the data sets shown – the claim that Hadcrut4 temperature data is flat since November 2000.

Look also at the data from 1999. Or compare the entire Mauna Loa data set from 1958 with temperature.”

See Richard Courtney’s comment above. It is NOAA’s falsification criterion. Complain to NOAA. Stop confusing the Null Hypothesis with the weird CO2AGW theory’s predictions. CO2AGW is falsified NOW; this is enough. Make a new theory. (But I hope you fund yourselves next time)

cRR Kampen says:

February 11, 2013 at 2:35 am

“So the extra heat must have gone into the oceans, where it fed Irene, Sandy and Nemo and is melting sea- and shelf ice everywhere.”

Do you have any evidence for a radiative imbalance that has not been fudged with a junkyard climate model?

cRR Kampen says:

February 11, 2013 at 2:35 am

So the extra heat must have gone into the oceans, where it fed Irene, Sandy and Nemo and is melting sea- and shelf ice everywhere.

Antidotes for your ignorance are just a click away: http://wattsupwiththat.com/2013/02/08/bob-tisdale-shows-how-forecast-the-facts-brad-johnson-is-fecklessly-factless-about-ocean-warming/

Kelvin Vaughan says: ……”he probably misread the script and missed the point in front. Don’t forget he is getting old and his eyesight is probably not good.”

Oh no he didn’t misread the script. Don’t expect the BBC to miss a trick and not slip a bit of global warming propaganda in if they think they can get away with it. The ‘source’ of the warming figures were ‘unbiased’ ‘reliable’ ‘scientific’ / sarc, green campaign organisations such as Greenpeace and the WWF.

http://blogs.telegraph.co.uk/news/jamesdelingpole/100202234/no-david-attenborough-africa-hasnt-warmed-by-3-5-degrees-c-in-two-decades/

And yet a February 7 post on RealClimate, “2012 Updates on Model-Observation Results” concludes that all is well with the models. Would appreciate comments on their main tricks.

It’s chaotic. Why bother?

Climate Control Central Command (Modelling Secretariat) says: …….

Re: [/sarcasm ? Mod]

Mod, I think it was more parody / satire personally.

Boblo, did you really believe the good folks at RealClimate would say anything different? Truly they are the “deniers”. They are denying the proof of the failure of their climate models… a standard of proof they themselves established.

Nigel Harris says:

February 11, 2013 at 5:03 am

None of the analysis presented here actually answers the question asked at the top of the page “Has global warming stalled?”.

Thank you for your excellent points! I know there is a difference of opinion as to exactly what the 95% refers to in NOAA’s statement. I am not going to get into semantics here. For me, the most important facts are that RSS, Hadsst2 and Hadcrut3 show a slope of 0 for over 15 years. I realize there are error bars, but they have a 50% chance of going up or down from the point of 0 slope. So at the minimum, these three sets would be proof that global warming has stalled for a significant period of time. As for the other three sets, there could be room for debate here.

P.S. Thank you for a good idea!

Max™ says:

February 11, 2013 at 2:18 am

Phil. says:

February 11, 2013 at 5:09 am

Thank you! So I want to be sure I said the correct thing. Earlier, I said the following:

“If you go to:

http://www.skepticalscience.com/trend.php

And if you then start from 1989.67, you would find:

“0.131 ±0.132 °C/decade (2σ)”

In other words, the warming is not significant at 95% since September 1989.”

Is this last statement completely correct or should it be modified in some way? If so, how? Thanks!

sceptical says:

February 10, 2013 at 9:04 pm

Wow, this may be the final nail. The U.N. conspiracy has stalled temperature rise to really throw off those who don’t see the conspiracy.

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

No it is not.

As long as the Mass Media papers over the cracks and no reporter will touch it, the Propaganda Machine will keep deflecting attention to ‘Weather Weirding’.

The banks, financiers, and large corporations (advertisers) have much too much riding on this scam. (See my comment here on the MSM – banker connections.)

For those like _Jim who think people are in control of corporation stock: Start with A Brief History Of The Mutual Fund “…Shady dealings at major fund companies demonstrated that mutual funds aren’t always benign investments managed by folks who have their shareholders’ best interests in mind….” Mutual funds separate the ‘owner’ of the stock from the ‘voter of the stock’ and therefore shift control of the company into the hands of the mutual funds.

Then there are our ‘benign’ bankers: Top Senate Democrat: bankers “own” the U.S. Congress and the last fleecing of the sheep: How AIG Bailout Drivings More Foreclosures and this article “How Wall Street Insiders Are Using the Bailout to Stage a Revolution” and this interview Heist of the century

Finally this article:

On Robberies committed with paper:

“Of all the contrivances for cheating the laboring classes of mankind, none has been more effectual than that which deludes them with paper money. This is one of the most effectual of inventions to fertilize the rich man’s field by the sweat of the poor man’s brow. Ordinary tyranny, oppression, excessive taxation: These bear lightly on the happiness of the mass of the community, compared with fraudulent currencies and robberies committed with depreciated paper.” ~ Daniel Webster 1832

The ‘Carbon Economy’ is just the newest twist on the old game of “cheating the laboring classes of mankind” because every single one of those dollars comes from the pockets of the laboring classes and finds its way into the pockets of the financiers with nothing given in return except the false promise that we are ‘Controlling the Climate’.

Waking Activists up to these facts is where the true fight is. People may not understand Physics and Chemistry and Statistics, but they do understand scams and frauds. In our favor, the trust in bankers/financiers is at an all-time low. It does not matter if you are far right, far left, or in the middle; we should all be on the same side when preventing a massive rip-off and the ‘collateral damage’ of the deaths of thousands if not millions.

The people behind the fraud know this is where the fight is. They have been very proactive against us in several different ways. These include:

1. Funding activists through foundations. They even muddy the funding trail by setting up go-betweens such as the Tides Foundation.

2. Controlling the activists. This activity started long ago with the ‘Innocents’ Clubs’ and has moved to the modern NGOs.

3. Repeating ad nauseam their claim that ‘deniers’ are funded by ‘Big Oil’.

4. Declaring that ‘deniers’ are mentally deficient. To aid this latest tactic Dr. Lewandowsky is trying to publish two peer-reviewed papers designed to make skeptics look like flat-earth mouth breathers unfit for polite society.

Nigel Harris says:

February 11, 2013 at 1:51 am

looks rather different if you put a simple linear trend line through the data presented.

http://www.woodfortrees.org/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16/plot/hadcrut4gl/from:1990/mean:12/offset:-0.16/trend

Take a closer look at this. 2012 ended at 0.274. The slope of the line is 0.015. So if we multiply this by 5 to get us to 2017, we get 0.075, and adding to 0.274 gives 0.35 which is less than 0.40. So it would have to rise at a faster slope than is shown to reach the 1998 mark.
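The extrapolation in the previous paragraph can be checked in a few lines. This sketch simply plugs in the numbers quoted in the comment; it assumes that the WoodForTrees trend slope of 0.015 is in °C per year and that 0.40 is the 1998 value on the same offset series.

```python
# Numbers as quoted in the comment (offset HadCRUT4 series on WoodForTrees).
# Assumption: the trend slope of 0.015 is in deg C per year.
end_2012 = 0.274        # value at the end of 2012
slope_per_year = 0.015  # fitted linear trend
target_1998 = 0.40      # the 1998 mark
years_ahead = 5         # end of 2012 -> 2017

projected_2017 = end_2012 + slope_per_year * years_ahead
# Slope that would be needed to reach the 1998 mark in the same time
required_slope = (target_1998 - end_2012) / years_ahead

print(f"projected 2017 value: {projected_2017:.3f}")
print(f"reaches 1998 mark: {projected_2017 >= target_1998}")
print(f"required slope: {required_slope:.4f} deg C/year")
```

Under these assumptions, reaching the 1998 mark by 2017 would need a slope of about 0.025 °C per year, roughly 1.7 times the fitted one.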

Sherlock Holmes says

It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of making a theory to fit the facts.

Werner Brozek starts

In order to answer the question in the title, we need to know what time period is a reasonable period to take into consideration.

Henry says

A reasonable time period is one normal solar cycle, i.e. at least 11 years.

http://www.woodfortrees.org/plot/hadcrut4gl/from:2002/to:2014/plot/hadcrut4gl/from:2002/to:2014/trend/plot/hadcrut3vgl/from:2002/to:2014/plot/hadcrut3vgl/from:2002/to:2014/trend/plot/rss/from:2002/to:2014/plot/rss/from:2002/to:2014/trend/plot/gistemp/from:2002/to:2014/plot/gistemp/from:2002/to:2014/trend/plot/hadsst2gl/from:2002/to:2014/plot/hadsst2gl/from:2002/to:2014/trend/plot/uah/from:2002/to:2014/trend

It is clear the odd one out here is UAH because it must have some calibration errors.

Obviously I did what Sherlock did, making my own dataset ensuring strict requirements for each weather station to be included.

My own data set for means shows a downward slope of -0.02 degrees C per annum since 2000.

For the maxima I was able to do a best fit sine wave. We will be cooling until about 2038.
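Henry does not say which period or data he used, so the following is only a sketch of one common way to get a "best fit sine wave". If the period is fixed in advance, the model offset + a·sin(ωt) + b·cos(ωt) is linear in its coefficients, and ordinary least squares gives the amplitude and phase directly. The 60-year period and the synthetic series are hypothetical, chosen only for illustration, and `fit_sine_fixed_period`, `solve3` and `next_trough` are illustrative names, not any library's API.

```python
import math


def solve3(m, v):
    """Solve the 3x3 system m x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [vi] for row, vi in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for k in range(col, 4):
                a[r][k] -= f * a[col][k]
    x = [0.0] * 3
    for r in reversed(range(3)):
        x[r] = (a[r][3] - sum(a[r][k] * x[k] for k in range(r + 1, 3))) / a[r][r]
    return x


def fit_sine_fixed_period(t, y, period):
    """Least-squares fit of y ~ offset + amplitude * sin(w*t + phase), w = 2*pi/period.

    With the period held fixed, y ~ c + a*sin(w*t) + b*cos(w*t) is linear in
    (c, a, b), so solving the normal equations (X^T X) beta = X^T y is enough.
    """
    w = 2 * math.pi / period
    rows = [(1.0, math.sin(w * ti), math.cos(w * ti)) for ti in t]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    c, a, b = solve3(xtx, xty)
    # a*sin(x) + b*cos(x) == R*sin(x + phase) with R = hypot(a, b), phase = atan2(b, a)
    return c, math.hypot(a, b), math.atan2(b, a)


def next_trough(t_now, period, phase):
    """First time >= t_now at which sin(w*t + phase) reaches its minimum of -1."""
    w = 2 * math.pi / period
    # The minimum occurs where w*t + phase = 3*pi/2 + 2*pi*k for integer k
    k = math.ceil((w * t_now + phase - 1.5 * math.pi) / (2 * math.pi))
    return (1.5 * math.pi + 2 * math.pi * k - phase) / w


# Hypothetical example: synthetic yearly values built from a known 60-year sine
years = list(range(1900, 2013))
values = [0.1 + 0.3 * math.sin(2 * math.pi * t / 60 + 0.5) for t in years]
offset, amp, phase = fit_sine_fixed_period(years, values, 60)
trough = next_trough(2013, 60, phase)
print(round(offset, 3), round(amp, 3), round(phase, 3))
print(round(trough, 1))
```

Fitting the period as well makes the problem nonlinear; a simple approach is to repeat this fit over a grid of candidate periods and keep the one with the smallest residual sum of squares.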

richardscourtney says:

February 11, 2013 at 3:24 am

And an important consideration in this determination is whether or not (at the 95% level) a zero trend has existed for 15 or more years. This is because in 2008 NOAA reported that the climate models show “Near-zero and even negative trends are common for intervals of a decade or less in the simulations”.

But, the climate models RULE OUT “(at the 95% level) zero trends for intervals of 15 yr or more”.

I explain this with reference, quote, page number and link to the NOAA 2008 report in my post at February 10, 2013 at 5:48 pm.

But you neglect to mention that those models did not include ENSO (clearly stated in the report).

I understand your difficulty. What the models “rule out” nature has done, and this falsifies your cherished models. You need to come to terms with it.

Since nature has not eschewed ENSO, nature has not in fact ‘done it’, in fact when the data is corrected for the presence of ENSO no such 15 year period is observed. You need to come to terms with that. Pick a period starting with an El Niño and ending with a La Niña and you’d expect flattening.

Philip Shehan says:

More cherry picking.

Let’s just concentrate on one of the data sets shown – the claim that Hadcrut4 temperature data is flat since November 2000.

Look also at the data from 1999. Or compare the entire Mauna Loa data set from 1958 with temperature.

And remember, “statistical significance” here is at the 95% level. That is, even a 94% probability that the data is not a chance result fails at this level.

http://tinyurl.com/atsx4os

==========

Yes, I would agree with your introductory phrase: what you are doing is “more cherry picking”. The usual IPCC deception. Choose a period where both are going in the same direction and pretend this shows causation.

Why did you choose 1968? Let’s see. What does 1928 look like?

http://www.woodfortrees.org/plot/hadcrut4gl/from:1928/mean:12/plot/esrl-co2/from:1928/normalise/scale:0.75/offset:0.2/plot/hadcrut4gl/from:1999/to:2013/trend/plot/hadcrut4gl/from:2000.9/to:2013/trend

Now, the Mauna Loa record does not go that far back, but no one pretends CO2 was dropping from 1930 to 1960. So what YOU were doing is cherry picking.

What the author was doing was testing the data against a specific claim that was intended to be a falsifiable statement … which turned out to be falsified. There is no “cherry-picking” involved in testing whether a hypothesis fits the claims of its authors.

That is simple, honest application of scientific method.

What part of “simple” and “honest” is causing you problems Professor Cherry-picker?

Werner Brozek says:

February 11, 2013 at 9:13 am

Phil. says:

February 11, 2013 at 5:09 am

Thank you! So I want to be sure I said the correct thing. Earlier, I said the following:

“If you go to:

http://www.skepticalscience.com/trend.php

And if you then start from 1989.67, you would find:

“0.131 ±0.132 °C/decade (2σ)”

In other words, the warming is not significant at 95% since September 1989.”

Is this last statement completely correct or should it be modified in some way? If so, how? Thanks!

You’re welcome. In your last statement I’d say that the null hypothesis is that no warming took place, i.e. that the real trend is less than or equal to zero. My alternative hypothesis would be that warming did take place, so I need to reject the null hypothesis on statistical grounds, and I choose to do so at the 95% level. Using the data we have and doing a ‘one-tailed’ test, I would need to show that there is less than a 5% chance of the trend actually being zero or less. In the case you presented, the data shows a ~2.5% chance of zero or below, so the null hypothesis is rejected and the warming is significant at the 95% level. I’d prefer to work with the original data and do a Pearson-type test, but I’d expect a similar result.

To fail to reject the null at the 95% level on this basis, the threshold would be at 1.65 sigma, so roughly 0.131±0.216°C/decade based on my back-of-the-envelope calc.
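The one-tailed reasoning in this exchange can be reproduced under a normal approximation, treating the quoted ±0.132 °C/decade as 2σ (so σ = 0.066). This sketch computes the z-score, the one- and two-tailed p-values, and the largest 2σ half-width for which a one-tailed 5% test would still reject "no warming". The helper `normal_sf` is just the standard normal upper tail written with `math.erfc`, and the rounded inputs are taken from the comment.

```python
import math


def normal_sf(z):
    """Upper-tail probability P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


trend = 0.131      # deg C/decade, as quoted from the SkS trend calculator
two_sigma = 0.132  # quoted 2-sigma uncertainty, deg C/decade
sigma = two_sigma / 2

z = trend / sigma                  # about 1.98 standard errors above zero
p_one_tailed = normal_sf(z)        # H0: true trend <= 0
p_two_tailed = 2 * p_one_tailed    # H0: true trend == 0

# Werner's reading: the 2-sigma interval just reaches below zero
lower_2sigma = trend - two_sigma

# Largest 2-sigma half-width at which a one-tailed 5% test still rejects H0
z_crit = 1.645
max_two_sigma = 2 * trend / z_crit

print(f"z = {z:.3f}, one-tailed p = {p_one_tailed:.4f}, two-tailed p = {p_two_tailed:.4f}")
print(f"2-sigma lower bound = {lower_2sigma:+.3f} deg C/decade")
print(f"threshold 2-sigma half-width = {max_two_sigma:.3f} deg C/decade")
```

Under these assumptions the one-tailed p-value comes out near 0.024, consistent with the ~2.5% figure, and the threshold half-width is about 0.159 °C/decade (2 × 0.131 / 1.645), which readers may want to compare with the back-of-the-envelope figure above. The two-tailed p-value also lands just under 0.05, so the "not significant" reading depends on using a 2σ interval rather than 1.96σ; with inputs rounded to three decimals, the case is borderline either way.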

Phil says: Since nature has not eschewed ENSO, nature has not in fact ‘done it’, in fact when the data is corrected for the presence of ENSO no such 15 year period is observed. You need to come to terms with that.

===

Oh, you gotta love it.

When the data is “corrected” ….. Hey bud, the DATA is correct, that’s why it’s called the data (singular datum: point of reference). That’s where science starts. Now how about correcting the frigging models?

No one was “correcting” for the lack of ENSO when they were winding up the world at the end of the century. Then the “uncorrected” warming was a “wake up call” etc. etc. Now we have to take it into account.

How about we “correct” for the 60y cycle and the 10y and the 9y and then come back and look at what climate is really doing?

When all the cycles peaked at once it was all fine and dandy to claim the world was about to turn into Venus. Now the wind is blowing in the other direction, it suddenly has to be corrected…. until next time it starts going up again and we can forget the “corrections”.

The sad thing is you guys are probably serious and honestly believe this garbage.

Phil. says:

February 11, 2013 at 10:00 am

Since nature has not eschewed ENSO, nature has not in fact ‘done it’, in fact when the data is corrected for the presence of ENSO no such 15 year period is observed. You need to come to terms with that. Pick a period starting with an El Niño and ending with a La Niña and you’d expect flattening.

I would be more inclined to agree with you if there had been a strong El Nino and then neutral conditions afterwards. However, as it turns out, the La Ninas that followed the 1998 El Nino effectively cancelled it out. So the slope since 1997, or 16 years and 1 month, is 0; however, the slope from March 2000, or 12 years and 11 months, is also 0. So in my opinion, nature has corrected for ENSO. However, even if it didn’t, there comes a point in time where one has to stop blaming an ENSO from 14 years back for a lack of catastrophic warming. It would be like a new president blaming the old president for things 7 years after taking office. Note that the graph below actually starts in the La Nina region.
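The rule from Section 1 of the post (go back to the furthest month from which the trend to the latest month is zero or negative) can be sketched as a scan over start months. This is an assumed reconstruction, not the WoodForTrees code: it fits an ordinary least-squares slope against the month index, and the short series are made-up values chosen so the answers are easy to check by hand.

```python
def ols_slope(y):
    """Ordinary least-squares slope of y against its index (units per month)."""
    n = len(y)
    x_mean = (n - 1) / 2
    # The centred x-weights sum to zero, so y need not be centred as well.
    num = sum((i - x_mean) * yi for i, yi in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def earliest_flat_start(series, min_len=2):
    """Earliest start index whose trend to the last value is <= 0, or None."""
    # Scan from the oldest month forward so the first hit is the furthest back.
    for start in range(len(series) - min_len + 1):
        if ols_slope(series[start:]) <= 0:
            return start
    return None


# Made-up monthly anomalies (deg C), for illustration only
plateau = [0.0, 0.25, 0.5, 0.5, 0.5, 0.5]
spiked = [1.0, 0.0, 0.25, 0.5, 0.25, 0.5, 0.5]

print(earliest_flat_start(plateau))      # 2
print(earliest_flat_start(spiked))       # 0: the spike alone makes the trend negative
print(earliest_flat_start(spiked[1:]))   # 4: without it, only the last two months are flat
```

The second example starts on a one-month spike, and that spike alone is enough to make the whole-period trend negative. This is the sensitivity to El Niño start points raised in the exchange; scanning every start month makes the choice mechanical without removing that sensitivity.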

http://www.woodfortrees.org/plot/rss/from:1997/plot/rss/from:1997/trend/plot/rss/from:2000.16/trend

P.S. Thank you for

Phil. says:

February 11, 2013 at 10:29 am