From the Air Vent, reposted by invitation

Posted by Jeff Id on February 15, 2009

Guest post by Jeff C

Jeff C has done an interesting and impressive recalculation of the automatic weather station (AWS) reconstruction from Steig et al. 2009, the paper currently on the cover of Nature:

Warming of the Antarctic ice-sheet surface since the 1957 International Geophysical Year

Jeff C is an engineer who realized that the data was not weighted according to location in the original paper. He has taken the time to come up with a reasonable regridding method which more appropriately weights individual temperature stations across the Antarctic. It’s amazing that a simple, reasonable gridding of temperature stations can make so much difference to the final result.

———————-

Jeff Id’s AWS reconstructions using his implementation of RegEM are reasonably close to the Steig reconstructions. The latest difference plot between his reconstruction and Steig’s is quite impressive. Removing two sites from his reconstruction that were erroneously included in initial attempts (Gough and Marion) gives us this chart:

It is clear Jeff is very close: the plot above has virtually zero slope, and the “noise level” is typically within +/- 0.3 deg C except for a few outliers (the Racer Rock anomaly at the far right, as we are using the original data). Although not quite fully there, it is clear Jeff has the fundamentals right as to how Steig used the occupied station and AWS data with RegEM.

I duplicated Jeff’s results using his code and began to experiment with RegEM. As I became more familiar with it, it dawned on me that RegEM had no way of knowing the physical location of the temperature measurements. RegEM does not know or use the latitude and longitude of the stations when infilling, as that information is never provided to it. There is no “distance weighting” as it is typically understood, since RegEM has no idea how close or how far the occupied stations (the predictors) are from each other, or from the AWS sites (the predictands). Steig alludes to this in the paper on page 2:

“Unlike simple distance-weighting or similar calculations, application of RegEM takes into account temporal changes in the spatial covariance pattern, which depend on the relative importance of differing influences on Antarctic temperature at a given time.”

I’m an engineer, not a statistician so I’m not sure exactly what that means, but it sounds like hand-waving and a subtle admission there is no distance weighting. He might be saying that RegEM can draw conclusions based on the similarity in the temperature trend patterns from site to site, but that is about it. If I’ve got that wrong, I would welcome an explanation.
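To make the point about inputs concrete, here is a heavily simplified, EM-style regression infill in Python. It is *not* Schneider's RegEM (there is no truncated total least squares, no automatic choice of the regularization parameter, and no covariance correction term), and the function name and the small fixed ridge value are hypothetical choices for illustration. What it does share with RegEM is the interface: the only input is a months-by-stations matrix with NaN for missing values, so station coordinates never enter the calculation, and any "distance" information can only come in indirectly through the covariances.

```python
import numpy as np


def iterative_regression_infill(X, n_iter=50, ridge=1e-3):
    """Simplified EM-style infill of a (months x stations) matrix.

    Hypothetical helper for illustration only; this is NOT RegEM.
    Note the signature: there is no latitude/longitude argument.
    """
    X = np.asarray(X, dtype=float)
    missing = np.isnan(X)
    # Start by filling gaps with each station's mean over its available months.
    filled = np.where(missing, np.nanmean(X, axis=0), X)
    for _ in range(n_iter):
        mu = filled.mean(axis=0)
        C = np.cov(filled, rowvar=False)  # station-by-station covariance
        for i in range(X.shape[0]):
            m = missing[i]
            if not m.any():
                continue
            a = ~m
            if not a.any():
                # Nothing observed this month: fall back to the station means.
                filled[i, m] = mu[m]
                continue
            # Regress the missing stations on the available ones using
            # covariance blocks; the ridge term keeps the solve well conditioned.
            Caa = C[np.ix_(a, a)] + ridge * np.eye(a.sum())
            Cma = C[np.ix_(m, a)]
            B = np.linalg.solve(Caa, Cma.T)
            filled[i, m] = mu[m] + (filled[i, a] - mu[a]) @ B
    return filled
```

Since the only structure a routine like this sees is how the columns co-vary, the number of columns drawn from a region is the only way that region's geographic extent (or lack of it) can show up in the result.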

I plotted out the locations of the 42 occupied stations used in the reconstruction below. Note the clustering of stations on the Antarctic Peninsula. This is important because the peninsula is known to be warming, yet only constitutes a small percentage of the overall land mass (less than 5%). Despite this, 15 of the 42 occupied stations used in the reconstruction are on the peninsula.

Location of 42 occupied stations that form the READER temperature dataset (per Steig 2009 Supplemental Information). Note clustering of locations at northern extremes of the Antarctic Peninsula.

I decided to see what would happen if I applied some distance weighting to the data prior to running it through RegEM.

DISCLAIMER: I am not stating or implying that my reconstruction is the “correct” way to do it. I’m not claiming my results are any more accurate than that done by Steig. The point of this exercise is to show that RegEM does, in fact, care about the sparseness, location and weighting of the occupied station data.

I decided to carve up Antarctica into a series of grid cells. I used a triangular lattice and experimented with various cell diameters and lattice rotations. The goal was to maximize the number of cells containing occupied stations while also keeping the percentage of cells with at least one occupied station as high as possible. I ended up with a cell diameter of about 550 miles, with the layout below.
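A triangular lattice like the one described can be sketched in a few lines of Python. This sketch assumes station positions have already been projected to planar x/y coordinates in km (for example with a polar stereographic projection, not shown), treats the lattice spacing as the "cell diameter", and assigns each station to its nearest lattice node, which makes every cell a hexagon. The function names and the ~885 km spacing (roughly 550 miles) used below are illustrative assumptions, not Jeff C's actual code.

```python
import math
from collections import defaultdict

SQRT3_2 = math.sqrt(3) / 2


def lattice_cell(x, y, spacing, theta=0.0):
    """Integer (i, j) index of the nearest node of a rotated triangular lattice.

    Hypothetical helper. Nodes sit at spacing * (i + j/2, j * sqrt(3)/2),
    rotated by theta radians; x, y are assumed to be projected km.
    """
    # Rotate the point by -theta so the lattice is axis-aligned.
    c, s = math.cos(theta), math.sin(theta)
    xr, yr = c * x + s * y, -s * x + c * y
    # Fractional lattice coordinates of the point.
    v = yr / (spacing * SQRT3_2)
    u = xr / spacing - v / 2
    i0, j0 = math.floor(u), math.floor(v)
    best = None
    # Check a small neighbourhood of candidate nodes and keep the nearest.
    for i in range(i0 - 1, i0 + 3):
        for j in range(j0 - 1, j0 + 3):
            nx = spacing * (i + j / 2)
            ny = spacing * SQRT3_2 * j
            d2 = (xr - nx) ** 2 + (yr - ny) ** 2
            if best is None or d2 < best[0]:
                best = (d2, (i, j))
    return best[1]


def group_by_cell(stations, spacing, theta=0.0):
    """Map each lattice cell to the list of station names that fall in it."""
    cells = defaultdict(list)
    for name, (x, y) in stations.items():
        cells[lattice_cell(x, y, spacing, theta)].append(name)
    return dict(cells)
```

Changing `spacing` or `theta` moves stations between cells, which is the kind of trial and error described above for choosing a layout.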

Gridcells used for averaging and weighting. Cell diameter is approximately 550 miles. Value in parentheses is the number of occupied stations in the cell. Note that cell C (northern peninsula extreme) contains 11 occupied stations, far more than other cells. Cells without letters have no occupied stations.

I sorted the occupied station data (converted to anomalies by Jeff Id’s code) into groups that corresponded to each gridcell location. If a gridcell had more than one station, I averaged the results into a single series and assigned it to the gridcell. Unfortunately, 14 of the 36 gridcells had no occupied station within them. Most of these gridcells were in the interior of the continent and covered a large percentage of the land mass. Since manufacturing data is all the rage these days, I decided to assign a temperature series to these grid cells based on the average of neighboring grid cells. The goal was to use the available temperature data to spread observed temperature trends across equal areas. For example, 17 stations on the peninsula in three grid cells would have three inputs to RegEM. Likewise, two stations in the interior over three grid cells would have three inputs to RegEM. The plot below shows my methodology.
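The per-cell averaging step might look like the following, assuming each station's anomalies are stored as equal-length monthly arrays with NaN for missing months. Using a plain unweighted mean of whichever stations report in a given month is an assumption; the post does not say how months with partial coverage were handled, and `cell_series` is a hypothetical name.

```python
import numpy as np


def cell_series(anomalies, cells):
    """Average the station anomaly series inside each grid cell.

    Hypothetical helper (assumes an unweighted mean of reporting stations).
    anomalies: dict station name -> 1-D array of monthly anomalies (NaN = missing)
    cells:     dict cell id -> list of station names in that cell
    Months with no data from any station in the cell stay NaN.
    """
    out = {}
    for cell, names in cells.items():
        stack = np.vstack([np.asarray(anomalies[n], dtype=float) for n in names])
        count = np.sum(~np.isnan(stack), axis=0)  # stations reporting each month
        total = np.nansum(stack, axis=0)
        with np.errstate(invalid="ignore", divide="ignore"):
            out[cell] = np.where(count > 0, total / count, np.nan)
    return out
```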

Shaded cells with a single letter contain occupied stations. Cells with two or more letters have no occupied stations but have temperature records derived from the average of adjacent cells (cell letters describe the cells used for derivation). Cells with derived records must have three adjacent or two non-contiguous adjacent cells with occupied stations, or they are left unfilled.
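The infilling rule in the caption can be written out explicitly. This sketch uses (i, j) indices on a triangular lattice, where each cell has six neighbours in ring order, and reads the rule as: fill an empty cell with the mean of its occupied neighbours if at least three neighbours are occupied, or if exactly two are occupied and they do not touch each other. That reading of the rule, the `RING` ordering, and the function name are assumptions made for illustration.

```python
import numpy as np

# Six neighbours of cell (i, j) on a triangular lattice, in order around the ring
# (assumed indexing: node = i * a1 + j * a2 with a 60-degree basis).
RING = [(1, 0), (0, 1), (-1, 1), (-1, 0), (0, -1), (1, -1)]


def derive_empty_cell(cell, occupied):
    """Return (series, sources) for an empty cell, or None if the rule fails.

    Hypothetical helper. occupied: dict cell -> 1-D array for cells with stations.
    Only occupied neighbours are used as sources, never other derived cells.
    """
    i, j = cell
    hits = [k for k, (di, dj) in enumerate(RING) if (i + di, j + dj) in occupied]
    if len(hits) >= 3:
        ok = True
    elif len(hits) == 2:
        # Two positions on a 6-ring touch if they differ by 1 or 5.
        ok = (hits[1] - hits[0]) % 6 not in (1, 5)
    else:
        ok = False
    if not ok:
        return None
    sources = [(i + RING[k][0], j + RING[k][1]) for k in hits]
    stack = np.vstack([occupied[c] for c in sources])
    return np.nanmean(stack, axis=0), sources
```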

I ended up with 34 gridcell temperature series. Two of the grid cells I left unfilled as I did not think there was adequate information from the adjacent gridcells to justify infilling. Once complete, I ran the 34 occupied station gridcell series through RegEM along with the 63 AWS series. The same methodology was used as in Jeff Id’s AWS reconstruction except the 42 station series were replaced by the 34 gridcell series.

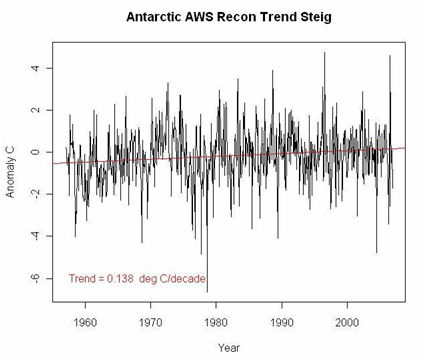

For comparison, here is Steig’s AWS reconstruction:

Calculated monthly means of 63 AWS reconstructions using aws_recon.txt from Steig website. Trend is +0.138 deg C per decade using the full 1957-2006 reconstruction record. Steig 2009 states the continent-wide trend is +0.12 deg C per decade for the satellite reconstruction. The AWS reconstruction trend is said to be similar.

And here is my gridcell reconstruction using Jeff Id’s implementation of RegEM:

Calculated monthly means of 63 AWS reconstructions using Jeff Id RegEM implementation and averaged grid cell approach. Trend is +0.069 deg C per decade using the full 1957-2006 reconstruction record.

Although the plots are similar, the gridcell reconstruction trend is about half of that seen in the Steig reconstruction. Note that most warming occurred prior to 1972.
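For readers who want to compute figures like these themselves, a common approach is an ordinary least-squares line through the monthly means, with the slope converted from per-year to per-decade. The sketch assumes a monthly series starting January 1957 with NaN for missing months; whether the trends above were computed exactly this way is an assumption, and `trend_per_decade` is a hypothetical name.

```python
import numpy as np


def trend_per_decade(monthly, start_year=1957):
    """OLS trend of a monthly series, in units per decade (hypothetical helper)."""
    y = np.asarray(monthly, dtype=float)
    # Decimal-year time axis, placing each value at mid-month.
    t = start_year + (np.arange(y.size) + 0.5) / 12.0
    ok = ~np.isnan(y)  # skip missing months
    slope_per_year = np.polyfit(t[ok], y[ok], 1)[0]
    return 10.0 * slope_per_year
```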

Again, I’m not trying to say this is the correct reconstruction or that this is any more valid than that done by Steig. In fact, beyond the peninsula and coast data is so sparse that I doubt any reconstruction is accurate. This is simply to demonstrate that RegEM doesn’t realize that 40% of the occupied station data came from less than 5% of the land mass when it does its infilling. Because of this, the results can be affected by changing the spatial distribution of the predictor data (i.e. occupied stations).
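A toy calculation shows why station count matters when every series gets an equal say. The trend values below are made up purely for illustration (they are not from either reconstruction); only the 15-of-42 station split and the roughly 5% land-area share come from the post.

```python
def weighted_mean(values, weights):
    """Weighted average; weights need not sum to one."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical trends (deg C/decade), for illustration only:
# peninsula warming, rest of the continent flat.
peninsula_trend, interior_trend = 0.5, 0.0

# Equal weight per station: 15 peninsula stations, 27 elsewhere.
by_station = weighted_mean([peninsula_trend, interior_trend], [15, 27])

# Equal weight per unit area: peninsula is ~5% of the land mass.
by_area = weighted_mean([peninsula_trend, interior_trend], [0.05, 0.95])

print(f"station-weighted: {by_station:.3f}, area-weighted: {by_area:.3f}")
```

RegEM does not compute a simple weighted mean, so this is only an analogy for the weighting question, not a model of the infilling itself.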

Fantastic work, congratulations! IMHO it would appear more reasonable to equalise temperature data on an equal area basis, as is done here, rather than apparently allowing the 5% tail (of the Antarctic Peninsula) to wag the (Continental Antarctic) dog, à la Steig et al.

Why not try and get this accepted as a counter publication?

I’m just a layman here, but it would seem that trying to deal with the fact that there is a concentration of measurements in a small area in Antarctica that is warming would be important.

Is that just not so?

Would it be too much work to see what the plot looks like once peninsula grid cells ( A / B / C / D / E ) are removed?

If we suspect that vulcanism has played a part in peninsular “warming” , then if we remove those (affected) cells, this should better reflect the rest of the land mass.

Essentially the (my, anyway) question is to ask how much influence is the peninsula itself having, not just station weighting.

Thanks…

Excellent job! Just another example of how the Man-made Global Warming Hypothesis is truly a phenomenon of number & data manipulation. All in the name of reaching a pre-determined result!

Pure GIGO.

I’m sorry and don’t mean to be grumpy, but sparse data measured with error have no inferential power. It doesn’t matter if you weight them, massage them, put them in a sack and beat them with a stick, calculate the principal component eigenvectors, tickle them, squeeze them, or put them through the dishwasher; poor data are worthless and meaningless.

The confidence limits exceed the range of the data. The temp trend could be plus or minus 5 degrees. We have no way of knowing from these data. IMHO.

PS — please don’t use RegEM PCA when you engineer bridges, or chips, or cars, or anything else that might inflict tremendous tragedy and suffering should it fail.

15 of 42 stations are in one area and that happens to be the warmest area? That doesn’t seem to me like it would give the right result.

And if we chart the main Antarctic mass separately from the peninsula, my guess is that the main mass has cooled far more than the peninsula has warmed. And we are right back to that embarrassing jpeg of Antarctica.

Which is why it had to be remodeled just recently.

SEPP Science Editorial #6-09 (2/7/09)

Returning to the Antarctic:

You may recall our skepticism about reported Antarctic warming [Science Editorial #4-09 (1/247/09)]:

Recall that Professor Eric Steig et al last month announced in Nature that they had spotted a warming in West Antarctica that previous researchers had missed through slackness – a warming so strong that it more than made up for the cooling in East Antarctica. Finally, Global Warming really was global.

The paper was immediately greeted with suspicion, not least because one of the authors was Michael Mann, ‘inventor’ of the infamous hockey stick, now discredited, and the data was reconstructed from very sketchy weather-station records. But also, because the Steig result was contradicted by the much superior MSU data from satellites.

As reported by Australia’s Herald Sun (Feb 4), the warming trend ‘arises entirely from the impact of splicing two data sets together’. Read this link and this to see Steve McIntyre’s superb forensic work. Why wasn’t this error picked up earlier? Perhaps because the researchers got the results they’d hoped for, and no alarm bell went off that made them check. Now, wait for the papers to report the error with the zeal with which they reported Steig’s warming.

http://blogs.news.com.au/heraldsun/andrewbolt/index.php/heraldsun/comments/going_cold_on_antarctic_warming#48360

———————————————–

University of Toronto geophysicists have shown that should the West Antarctic Ice Sheet (WAIS) collapse and melt in a warming world, as many scientists are concerned it will, it is the coastlines of North America and of nations in the southern Indian Ocean that will face the greatest threats from rising sea levels. The research is published in the February 6 issue of Science magazine.

“This concern was reinforced further in a recent study led by Eric Steig of the University of Washington that showed that the entire region is indeed warming.”

Well now, not only is there no indication of a collapse of the WAIS – but it’s not even warming. The researchers end their news release with: “The most important lesson is that scientists and policy makers should focus on projections that avoid simplistic assumptions.” I agree fully.

http://www.sepp.org/

“Unlike simple distance-weighting or similar calculations, application of RegEM takes into account temporal changes in the spatial covariance pattern, which depend on the relative importance of differing influences on Antarctic temperature at a given time.”

I’m an engineer, not a statistician so I’m not sure exactly what that means, but it sounds like hand-waving and a subtle admission there is no distance weighting.

Well, I’m pretty good at deconstructing science Babblespeak… but this is a bit on the dense side. My cut at it ends up where yours does:

~”We don’t do simple distance weighting or similar things (implied air of superiority), RegEM uses time changing things in the pattern of how space is covered (wave of obfuscation over exact thing done) which depends on how time based changes move Antarctic temperature data”

Or my paraphrase: We figure that seasons might have an impact, so we try to match change patterns over time and ignore distance. You know, summer may warm up faster near the coasts but very slowly in the middle; winter comes and the pole drops fastest first; so we just vary the impact with time relationships and figure that drags some distance stuff in with it, maybe.

Richard Sharpe @ (10:26:48)

Richard, using the suspect readings from a very small area, taken on a volcanically-active peninsula, to make a case for an entire continent’s “multi-decadal warming trend” would, essentially, be akin to taking temperature readings from a few stations in South Florida to extrapolate the temperature trends (within a few hundredths of a degree Celsius) on Hudson Bay.

One can do it, but one shouldn’t expect to have one’s results taken seriously by others with even a modicum of rational thinking processes going on inside their craniums.

If I’ve misunderstood the point of your comment and question, I apologize in advance.

Jeff, this is yet another incredible piece of work that us lowly non-statisticians can only sit back and marvel over. Great job.

Now pardon me while I sit back and watch those evil capitalists burn all of that [snip] racing fuel and increase their carbon footprints! Woot!

[snip]

REPLY: B.C. Please stop making up slogans on trademarks, it puts this blog at risk. I’ve had to snip several of your posts in the past, and I don’t need the extra work. Final warning. – Anthony

Mike D. (11:06:32) :

Pure GIGO.

I’m sorry and don’t mean to be grumpy, but sparse data measured with error have no inferential power. It doesn’t matter if you weight them, massage them, […]; poor data are worthless and meaningless.

The confidence limits exceed the range of the data.

Strange. This is exactly my evaluation of what GISS does in GIStemp!

In fact, my first response to the original issue (Steig paper) was: “Wait a minute, they are making the same ‘coastal’ error that Hansen does in GIStemp, only inverting the direction of action!” In a Very Cold Place, projecting into a sparse interior will imply bogus interior warming, whereas in warm places, with most sites on the coast being ‘adjusted’ via interior stations, it will imply bogus warming of the coasts!

And that is why I keep saying that the GIS data are ‘cooked’, and why I think the original Steig approach is in error.

Basically, the “Reference Station Method” does not work well for projecting into non-homogeneous zones, is especially bad with coastal vs non-coastal over long distances, and is even worse when linear offsets are used rather than a coefficient of correlation formula with (perhaps variable) slope (and perhaps variance over time… summer vs winter).
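The difference between the two adjustment styles described here can be illustrated with a synthetic pair of stations. A "target" station is estimated from a "reference" station, calibrated over an overlap period, either with a constant offset (slope fixed at 1) or with a fitted slope and intercept. The data and the function name are hypothetical, and this is not GIStemp's actual code.

```python
import numpy as np


def estimate_from_reference(ref, target, overlap):
    """Estimate `target` everywhere from `ref`, calibrated on `overlap` (bool mask).

    Hypothetical helper. Returns (offset_estimate, regression_estimate).
    """
    ref = np.asarray(ref, dtype=float)
    target = np.asarray(target, dtype=float)
    # Method 1: constant offset, i.e. the slope is assumed to be exactly 1.
    offset = np.mean(target[overlap] - ref[overlap])
    by_offset = ref + offset
    # Method 2: least-squares slope and intercept fitted on the overlap.
    slope, intercept = np.polyfit(ref[overlap], target[overlap], 1)
    by_regression = intercept + slope * ref
    return by_offset, by_regression
```

When the true relationship has a slope other than 1, the offset estimate drifts further off the further the reference moves outside the range it covered during calibration.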

This induces error that exceeds the accuracy of the data; that then forces your precision down to the single digit range. And we know to “Never let your precision exceed your accuracy!” -Mr. McGuire.

So we again end up dancing in the error bands of the simulation (or extrapolation, or interpolation, or projection, or whatever fancy word you want to use for “made up ‘data’ set”).

We fret over 1/10ths or 1/100ths in “made up data” that are only useful in the 1/1 ths…

With that said: Jeff C.’s experiment demonstrates some of the sensitivities of this method to minor changes of assumption. It shows that the outcome is more related to the method than to the reality: And THAT is a very important lesson!

Anthony, will do and apologies for any problems.

The two Jeffs have demonstrated what fakery the temperature reconstructions employ.

Ig Nobel Prize for Mann & Steig, anyone?

EM,

I believe that you should be the official translator. Whenever you see a bit of pedantic folderol (BS), you can let each of us know what it means.

Maybe sometime in the future we could even have a dictionary here of words that are commonly used to prevaricate (lie).

Jeff,

Thanks for this exercise. I can hardly wait to see the responses and big words that will come soon.

Mike Bryant

Let us take these very small temperature fluctuations in perspective.

Antarctica today has ice that averages over 7000 feet thick and currently holds about 90% of all ice on Earth. The average temperature of this ice is -37˚C. Antarctica is so cold today that the vast majority of it never gets above freezing, which means its ice is safe from melting. From 1986 to 2000 both satellite data and ground stations show that Antarctica cooled and actually gained ice, by a staggering 26.8 million tons per year.

The greater part of Antarctica experiences a sea ice season lasting 21 days longer than it did in 1979. While the overall amount of Antarctic sea ice has increased since 1979, some ice shelf is melting. That ice shelf was already floating on the ocean as a solid, so very little sea level rise occurs because of this melt. This melting and freezing is part of a natural cycle that has gone on for over 30 million years, long before any human influence.

Can someone enlighten me as I get confused with all the acronyms for everything.

I thought that Steig did the following:

1) Took ground data that have a long history but are geographically limited, compared them with satellite data station by station, and claimed reasonable agreement over the common time.

2) Made a grid of satellite data that covers the whole Antarctic.

3) Using this grid, extrapolated from the old data to the interior and generated interior data for the times before satellites existed.

4) Took the average and made a temperature versus time plot which he claims shows warming, though very slight.

It seems to me that the analysis above addresses the ground stations and uses them for extrapolating to the interior, which is something entirely different.

What am I misunderstanding?

Ed Scott says: “As reported by Australia’s Herald Sun (Feb 4), the warming trend ‘arises entirely from the impact of splicing two data sets together’”

Actually, Ed-san, if you re-read the article more closely, the warming trend referred to is strictly for Harry, just one station, which I believe Steig even denies was part of the final data set. It seems to have been a major player in some way, however, given that it had the largest trend and was well away from the peninsula. More work remains to be done on this.

As stated on Jeff’s blog, simply figure out what kind of grouping you need to maximize the trend, and there you go….

anna v

Steig’s paper did two entirely separate reconstructions. The AWS reconstruction was used as verification of the satellite reconstruction which showed a ‘strong’ warming trend. Steig has seen fit not to share the satellite data or even one line of the code his team wrote for creation of this paper, however he did point to RegEM claiming this was all of the code.

We have been able to come close to the AWS reconstruction but not the satellite. So since this is what we have, this is what we’re using.

What Jeff C showed is that RegEM results are dramatically affected by the spatial distribution of the surface data. The implication is that this effect on the reconstruction is not properly accounted for. Actually, it wasn’t discussed at all as far as I know. Since a simple and reasonable regridding of the data creates so much difference in trend, the question becomes:

How can this rebuilt and heavily imputed data be used for verification of sat trend?

and of course

If the AWS data which verifies sat trend is flawed, what does that say for sat trend?

BTW: The satellite data was an IR surface measurement, not a lower troposphere measurement as in UAH or RSS.

“Note that most warming occurred prior to 1972”.

And most (if not all) warming occurred on 5% of Antarctica, where most of the recently active volcanoes are.

jorgekafkazar (12:53:21)

You say, “…which I believe Steig even denies was part of the final data set.”

——————————————-

I cannot find the denial by Dr. Steig in either the posted article or the referenced blog.

—————————–

“Previous researchers hadn’t overlooked the data. What they’d done was to ignore data from four West Antarctic automatic weather stations in particular that didn’t meet their quality control. As you can see above, one shows no warming, two show insignificant warming and a fourth – from a station dubbed “Harry” – shows a sharp jump in temperature that helped Steig and his team discover their warming Antarctic.”

“Harry in fact is a problematic site that was buried in snow for years and then re-sited in 2005. But, worse, the data that Steig used in his modelling which he claimed came from Harry was actually old data from another station on the Ross Ice Shelf known as Gill with new data from Harry added to it, producing the abrupt warming. The data is worthless.”

As a failed engineer (couldn’t hack the math) may I congratulate Jeff C on his superbly presented thought experiment. A tribute to engineers everywhere.

And dontcha love that throwaway line buried there in the middle:

“since manufacturing data is all the rage these days”

As Glenn Reynolds says: Heh.

“This is simply to demonstrate that RegEM doesn’t realize that 40% of the occupied station data came from less than 5% of the land mass when it does its infilling. Because of this, the results can be affected by changing the spatial distribution of the predictor data (i.e. occupied stations).”

Let me see if I’m interpreting this correctly. Let me use fuel mileage as an example:

On two consecutive fillups, I record 22 and 26 mpg, and determine that my average fuel mileage is 24 mpg. But I only used 5 gallons from the first fillup and 12 gallons from the second, so it’s not as simple as averaging 22 and 26 together.

This is similar to 40% of the stations accounting for 40% of the signal even though they cover only 5% of the landmass.

Am I thinking about that right?

I am just a dummy trying to understand all the statistical mumbo jumbo, but it seems to me that a lot of people are just making up numbers using computer programs and a limited amount of real data. I see that playing around with the numbers gives one different results (which I think is your point here), but exactly why does anyone take all of this manipulation seriously? It reminds me of what my company does with sales data…then they feed us a line of bs about the metric being exact to 0.00…none of us believe THAT, either.

To moderator: I am trying to both have some fun here and explain what I have come to understand as the upshot of the two Jeffs’ work.

Reading the comments about this post at Climate Audit, I get the feeling that the Mann & Steig discussion went something like this:

“Well, we got rid of the medieval warm period, Eric, so let’s deal with the Antarctic cold period”

“Hmmm, how do we go about that?”

“Well, we know most of the key measurements are from the Antarctic peninsula, which we know is warming due to the volcanoes underneath”

“OK … where are you going with this Mike??”

“So, we do a statistical analysis which does not weigh the measurements for geographical distribution. Therefore, the statistical analysis will have built into it the assumption that all stations have equal weighting and biases.”

“Oh, right Mike. They will all have equal weights, meaning they are assumed to be equally distributed, at equal latitudes and altitudes, in equal environments.”

“You got it … and we don’t need to state the assumptions as they aren’t assumptions … merely the product of our analytical method”.

“Now, how about that beer?”

The Jeffs are on to something here and will be needed when stations.org gets them ratings done! Anthony, do you see it too?

This is my play on this… let’s say you had 100 temp. stations in Florida and 10 in Canada and then said how hot North America was!!!!!!

The death of GW AGW CGW CC is coming to a place near you.

REPLY: yes I see it. – Anthony

Thanks all for the comments. I had not realized that Anthony had picked this up or I would have commented earlier.

Regarding the GIGO comment, I agree. That is why I was careful to state in the write-up that I was not saying this was the “correct” way to do it. I was simply trying to demonstrate that if the series input to RegEM were weighted in proportion to the area they represent, the results change by a fairly significant amount. The fact that the warming decreased may or may not be significant; the important takeaway is that the answer changed.

Anna – I think Jeff Id answered your question, but just to reiterate, Steig did two reconstructions (satellite and AWS – Automated Weather Station). Dr Steig has provided both reconstructions to the public but has not provided the input data. Since the AWS data is available through other means (unlike the satellite data) most of the forensic work has focused on AWS reconstruction. Everything above is regarding the AWS recon but should be relevant to the satellite recon as Dr. Steig says the two used the same methodology and reached comparable results.

I had posted this comment below earlier on Climate Audit, but I’ll repeat it here as I think it might help clarify what could be happening.

My take on this is that RegEM will do a reasonable job of infilling data missing on some dates if the temperature series used are well correlated. Take California as an example. If I had ten locations from the central valley (Bakersfield – Fresno area) that had data missing from scattered dates, RegEM would probably do a decent job infilling, as all the sites have similar climatic trends.

Now add in two more California series: Eureka (NW coast) and Palm Springs (SE desert). Although both are in California, their climates aren’t anything like the central valley’s. RegEM doesn’t know that these two sites are distant, as it doesn’t have the lat/long coordinates. As Roman mentions in #11, the algorithm can recognize that the two new sites aren’t well correlated to the original ten and make implicit assumptions regarding distance. However, if lots of points in the time series are missing (as we know is the case), any trend difference may not be readily apparent. In addition, since there are only two outliers, their impact on the overall reconstruction is minimized. RegEM would still do a reasonable job on the central valley sites, but would probably be off considerably for the other two.

We might have an analogous geographic imbalance in the Steig reconstruction due to the prevalence of peninsula and coastal stations.
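The only thing a covariance-based infill "sees" about those twelve sites is how their series co-vary over the months where both report. The sketch below computes that pairwise correlation on overlapping months only, together with the overlap count, which shrinks quickly when many values are missing. The function name and the minimum-overlap threshold are hypothetical, and no coordinates are involved anywhere.

```python
import numpy as np


def overlap_correlation(X, min_overlap=3):
    """Pairwise Pearson correlation of columns, using only months both columns report.

    Hypothetical helper. Returns (R, n), where n[a, b] is the number of
    overlapping months; pairs with fewer than `min_overlap` get NaN in R.
    """
    X = np.asarray(X, dtype=float)
    k = X.shape[1]
    R = np.full((k, k), np.nan)
    n = np.zeros((k, k), dtype=int)
    for a in range(k):
        for b in range(k):
            ok = ~np.isnan(X[:, a]) & ~np.isnan(X[:, b])
            n[a, b] = ok.sum()
            if n[a, b] >= min_overlap:
                R[a, b] = np.corrcoef(X[ok, a], X[ok, b])[0, 1]
    return R, n
```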

This is analogous to calculating ore grade in, say, a porphyry copper deposit. Drill holes scattered around the area, and the copper content varies foot by foot throughout each drill length (in this case, the measured value T varies with time). Someone, please, find a talented geostatistician to take a look at these data and see if the principles of geostatistics can be brought to bear on the problem.

Otherwise, geologically, we can separate the areas on several bases, one being the active volcanic terrane that is western Antarctica, another being the seemingly geologically inert eastern Antarctica. There are undoubtedly other factors to consider – winds, currents, how much of the Antarctic is bedrock versus how much of the ice mass is underlain by submerged bedrock, etc.

Steig apparently is a geologist. He seems to have an amazingly simplistic view of the Antarctic and little knowledge of the application of statistics in geology. His work is not convincing.

Let’s try some scenarios for “Data Infilling” and compare the results.

SCENARIO 1: The Accountant.

“Well, all I did was infill the profits between JAN 08 and Nov 08, which then showed that the Company really did make a large profit as declared to the shareholders. I know that the CEO and CFO sold all their shares directly after the company report was made public and before the current issues became evident, however….”

SCENARIO 2: The Detective.

“Well, all I did was infill the evidence at the crime scene; after all, we all knew that he’d done it – it had to be him. Anyone claiming this to be a frame-up just doesn’t know what they’re talking about….”

SCENARIO 3: The Climate Scientist.

“Well, obviously there are no instruments in the target area, but this is simply solved by the use of validated ‘data infilling for the missing instruments’ to provide a confident measure of the area in question. We all do this, it’s a widely accepted practice these days….”

The first two are criminals, what about the third?

Robert Wood (12:00:59) suggests awarding the “Ig-noble Prize” to Steig and Mann. Wonderful idea!

It’s high time that excellence in 1) data manipulation; 2) process obfuscation; and 3) data/code archival avoidance be given the notoriety they deserve.

Tom Karl should be in the running for NCDC smoke-and-mirrors recordkeeping

Wahl and Ammann set a high standard for paleotemp reconstructions with “selected” tree-ring series

How about those stalactite O-isotope records that have to be inverted to give the “right” signals?

Candidates abound!

“And the winner is . . . . . . . .”

I suggest the prize be $10,000 for every hundredth of a degree the RSS/UAH global temp satellite metric deviates from the mean IPCC 4 projection.

Negative differences will require payments from the award winners!

Forecasts should have consequences.

Hi,

am I wrong, or did Steig et al. actually use satellite data to fill the “gaps” for empty places? Isn’t that more accurate, or relevant, than taking the average of neighboring cells?

MattN (15:16:51)

Some real life advice: you are doing it wrong. You are correct that you need to weight your numbers by miles driven; however, you need to average gallons used per mile, not mpg. Assume you drove

1) 100 miles at 20mpg, i.e. you used 5 gallons at 0.05 gallons per mile

2) 100 miles at 25mpg, i.e. you used 4 gallons at 0.04 gallons per mile

That’s 9 gallons for 200 miles or 22.22 mpg. Averaging gallons per mile yields 0.045 gallons per mile or 22.22 mpg. Simply averaging mpg gives you 22.5 mpg, which is 1.25% too high.

It gets more extreme if you average mileage across fleets where cars have strongly varying mileage. Let us look at the mini “fleet” of a two-car household with an SUV and a fuel-efficient car, both driven the same distance (e.g. taking the cars in daily turns). Assuming 20 mpg for the former and 40 mpg for the latter, they average 26.7 mpg, not 30 mpg.
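This arithmetic can be checked in a few lines: the fleet figure is total miles over total gallons, which is the distance-weighted harmonic mean of the individual mpg values. The function names are illustrative.

```python
def fleet_mpg(legs):
    """legs: iterable of (miles, mpg). Returns total miles / total gallons."""
    legs = list(legs)
    miles = sum(m for m, _ in legs)
    gallons = sum(m / mpg for m, mpg in legs)  # gallons burned on each leg
    return miles / gallons


def naive_mpg(legs):
    """Plain average of the mpg figures, ignoring how far each leg was driven."""
    legs = list(legs)
    return sum(mpg for _, mpg in legs) / len(legs)


print(round(fleet_mpg([(100, 20), (100, 25)]), 2), naive_mpg([(100, 20), (100, 25)]))  # 22.22 22.5
print(round(fleet_mpg([(100, 20), (100, 40)]), 2), naive_mpg([(100, 20), (100, 40)]))  # 26.67 30.0
```

For MattN's fillups (5 gallons at 22 mpg and 12 gallons at 26 mpg, i.e. 110 and 312 miles), the same function gives about 24.8 mpg rather than 24.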

Jeff ID and Jeff C

Thanks for the clarification.

I do not see anything wrong in the idea of making a map from detailed satellite data and checking the predictions/agreement in situ for each station (not for the projection of the stations) during the time overlap. If the agreement is statistically good (1 sigma), then fitting the “mask” found to the individual station data, within statistical errors, for the years not common with the satellite should be a good enough estimator of the temperatures in the inner region.

You are claiming with this analysis that the comparison set from the ground data is biased, i.e. Steig et al. did not use fits to individual station data but collective fits to the data from them, in order to normalize post-satellite-era data with ground station data.

OK, found the paper. Yes, they are playing with reconstructed stuff.

We use the RegEM algorithm [9–11] to combine the data from occupied weather stations with the TIR and AWS data in separate reconstructions of the near-surface Antarctic temperature field. Split calibration/verification tests are performed by withholding pre- and post-1995 TIR and AWS data in separate RegEM calculations
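To show what a split calibration/verification test checks, here is a minimal Python sketch. It swaps RegEM for a plain least-squares straight line (an assumption made only to keep the example short), calibrates on the years from 1995 onward and scores the fit on the earlier, withheld years; the reverse split works the same way. The data are synthetic and the function name is hypothetical.

```python
import numpy as np


def split_verification(predictor, target, years, split_year=1995):
    """Calibrate a straight-line fit on one period, then score it on the other.

    Calibration uses years >= split_year; verification uses the earlier years.
    Returns (correlation, RMSE) over the verification period.
    """
    calib = years >= split_year
    verif = ~calib
    slope, intercept = np.polyfit(predictor[calib], target[calib], 1)
    predicted = slope * predictor[verif] + intercept
    r = np.corrcoef(predicted, target[verif])[0, 1]
    rmse = np.sqrt(np.mean((predicted - target[verif]) ** 2))
    return r, rmse


# Synthetic example: a made-up "station" series and a noisy series tracking it.
rng = np.random.default_rng(0)
years = np.arange(1980, 2007)
station = rng.normal(size=years.size)
aws = 0.8 * station + rng.normal(scale=0.3, size=years.size)
r, rmse = split_verification(station, aws, years)
print(f"verification r = {r:.2f}, RMSE = {rmse:.2f}")
```

The point of withholding is that the verification scores come from data the fit never saw, so they say something about skill rather than about how well the calibration was tuned.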

http://holocene.meteo.psu.edu/shared/articles/SteigetalNature09.pdf

Your criticisms are valid.

I don’t understand this post. From what I understand, the weather station data was used to compare with satellite data for the same general location. After checking the satellite data for areas where it could be checked, and showing the satellite data correlated well with the weather stations, then the satellite data was used all over the continent. The results from Steig et al. all came from the satellite data.

The author of this post didn’t even look at the satellite data, according to his comments here. This is a hack job of an analysis… he didn’t even analyze the basis for the paper’s conclusions. Do I understand this correctly?

MattN (13:11:41) :

As stated on Jeff’s blog, simply figure out what kind of grouping you need to maximize the trend, and there you go….

I suspect you might be right, Matt. I have seen a lot of “correlation mining” going on with {self snip}. It would be interesting if the Jeffs could tweak RegEM parameters to see where the maximum trend is found, or where the strongest conclusion could be reached. Is that maximum trend the one that was used and reported, and if so, do the values for such parameters make sense, or are they the result of goal-seeking the maximum result? I suspect all parameters are selected to provide the highest result, whether this is sensible or not. Nobody wants a flimsy hockey stick, you know…

bluegrue (22:58:51) :

MattN (15:16:51)

Careful: both of you are over-analyzing (correctly, as it turns out!) your example(s) of gas mileage calculations to make your point.

BUT – both of you are really illustrating EXACTLY what Mann, Hansen, Steig and their AGW disciples are NOT doing. You are discussing gas usage, miles driven, speeds, refills, driving habits and numbers of cars driven under different circumstances. You ARE discussing specific calculations and you ARE giving good examples of each of your points.

BUT – Hansen and Mann are (deliberately?) NOT doing any of that. They are making exaggerated claims while screaming at politicians without giving ANYONE else a chance to review their methods, mathematics, or raw data.

From the BBC today

“At least 235 marine species are living in both polar regions, despite being 12,000km apart, a census has found.”………..

“He also added that the temperature differences in the oceans did not vary enough to act as a thermal barrier.

“The deep ocean at the poles falls as low as -1C (30F), but the deep ocean at the equator might not get above 4C (39F).”

http://news.bbc.co.uk/2/hi/science/nature/7888558.stm

So if deep-sea animals can survive the trip between poles, then I guess a small change in surface temperature would not bother them much.

Queen1 (15:22:06) :

I am just a dummy trying to understand all the statistical mumbo jumbo, but it seems to me that a lot of people are just making up numbers using computer programs and a limited amount of real data. I see that playing around with the numbers gives one different results (which I think is your point here), but exactly why does anyone take all of this manipulation seriously? It reminds me of what my company does with sales data…then they feed us a line of bs about the metric being exact to 0.00…none of us believe THAT, either.

Rarely has so much been said so clearly. You are no dummy. Your understanding is far better than many folks with PhDs.

I do like the idea of reconstructing satellite data back to more than 20 years before the satellites launched.

We can use these techniques for other things as well.

I wonder what Webvan or Pets.com stock prices would have been in 1957?

Paul K (00:13:00) :

I don’t understand this post. From what I understand, the weather station data was used to compare with satellite data for the same general location.

Right, that is the problem: “same general location”. This present analysis shows that if you change the way you define “same general location”, a significant difference appears in the two results, so the Steig calibration is not solid.

After checking the satellite data for areas where it could be checked, and showing the satellite data correlated well with the weather stations,

The present analysis shows that it could not be shown to correlate well, since a change in how the ground data are treated changes the results significantly.

then the satellite data was used all over the continent. The results from Steig et al. all came from the satellite data.

No, the results came from ground and satellite data where satellite data existed, and, for the empty interior, from ground data extrapolated using the mask of the satellite data. Since the mask was extracted based on shaky ground/satellite agreement, the whole construct is shaky.

Imagine a student who attends a community college in nearby Tacoma has the following grades: Astrology I (B), Rap Music Theory (A), Yoga (A), Dance (B), Remedial Algebra (C-). I wonder if Professor Steig would allow RegEM to infill the grade for this student in his Climatology and Matlab seminars based on these grades.

For those like me who had never heard of RegEM, here is a paper defining it:

“The Regularized EM Algorithm”

LINK: http://www.cs.ucr.edu/~hli/paper/hli05rem.pdf

Paul K

The author of this post didn’t even look at the satellite data, according to his comments here. This is a hack job of an analysis…

Perhaps you missed the little war going on with Steig over the release of the satellite data and the code he used to process it. If you look at the RC threads on the Antarctic, they read like a 50-car pileup with people demanding data and code. (More wouldn’t hurt.)

—

I should also mention that RomanM discovered on CA that the data from the satellite was 100% replaced in its reconstruction with RegEM data before comparison. The entire continent was replicated from 3 trends with different PC’s (curves). These PC’s are weighted at each of thousands of grid points and assumed to be temperatures. One hundred percent of the ‘real’ data was replaced…

I’m not sure how the correlation analysis was done yet, but the RegEM used in this post also uses 3 PCs to infill the huge amounts of missing data from each station. Don’t blame Jeff C for infilling; he’s just following the Steig/Mann recommended procedure. If Steig then used the infilled results to check the quality of the data, you are comparing very, very heavily processed data sets against each other as proof that the analysis is good.
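To make the “3 PCs to infill” idea concrete, here is a toy Python sketch of iterative low-rank infilling: start the gaps at column means, fit a rank-3 SVD approximation, overwrite only the gaps with it, and repeat. This is a simpler relative of RegEM (there is no regularized regression or covariance update), so treat it as an illustration of truncation-based infilling, not as Steig’s or Jeff Id’s code. The function name and parameters are hypothetical, and it assumes every column has at least one observed value.

```python
import numpy as np


def lowrank_infill(data, rank=3, n_iter=500, tol=1e-10):
    """Fill NaN gaps in a (time x station) array using a rank-`rank` SVD fit.

    Observed values are never altered; only the missing entries are updated.
    """
    x = np.array(data, dtype=float)
    missing = np.isnan(x)
    if not missing.any():
        return x
    # Initial guess: each gap gets the mean of its column's observed values.
    col_means = np.nanmean(x, axis=0)
    x[missing] = col_means[np.nonzero(missing)[1]]
    for _ in range(n_iter):
        mu = x.mean(axis=0)
        u, s, vt = np.linalg.svd(x - mu, full_matrices=False)
        # Keep only the leading `rank` components (the "3 PCs").
        approx = (u[:, :rank] * s[:rank]) @ vt[:rank] + mu
        change = np.max(np.abs(approx[missing] - x[missing]))
        x[missing] = approx[missing]
        if change < tol:
            break
    return x


# Synthetic example: 8 "stations" driven by 3 shared signals, with ~10% gaps.
rng = np.random.default_rng(1)
truth = rng.normal(size=(120, 3)) @ rng.normal(size=(3, 8))
gappy = truth.copy()
gappy[rng.random(truth.shape) < 0.1] = np.nan
filled = lowrank_infill(gappy)
print("largest infill error:", np.abs(filled - truth)[np.isnan(gappy)].max())
```

Every infilled value is, by construction, a combination of the same few components, which is why the choice of truncation matters so much for what comes out.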

Therefore when you say—

The results from Steig et al. all came from the satellite data.

It depends on what you mean by results. If the AWS recon verifies the result and the AWS reconstruction has serious problems, what meaning does the result have?

—–

What Jeff shows here is that the AWS reconstruction from the paper on the cover of Nature did not adequately account for the spatial distribution of the temperature stations, which resulted in overweighting the warming trend on the peninsula.

This is what we thought might happen once we realized RegEM didn’t take into account the location of individual stations. The paper fails to discuss the issue entirely and the effect is so severe that even two non-climatologist engineers can figure it out in a short time frame.
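The weighting problem can be illustrated with a toy Python sketch that compares a plain average over stations with a gridded average: stations are binned into latitude/longitude boxes, averaged within each box, and the boxes are then weighted by their area on the sphere. The box sizes, station coordinates and trend values are invented for illustration, and this is a generic gridding scheme, not necessarily the one Jeff C used.

```python
import math
from collections import defaultdict


def simple_mean(stations):
    """Every station gets one vote, however clustered the stations are."""
    return sum(value for _, _, value in stations) / len(stations)


def gridded_mean(stations, dlat=10.0, dlon=30.0):
    """Average stations within each lat/lon box, then area-weight the boxes."""
    boxes = defaultdict(list)
    for lat, lon, value in stations:
        key = (math.floor(lat / dlat), math.floor(lon / dlon))
        boxes[key].append(value)
    total = weight_sum = 0.0
    for (ilat, _), values in boxes.items():
        lat0, lat1 = ilat * dlat, (ilat + 1) * dlat
        # For a fixed longitude width, box area on a sphere is proportional
        # to the change in sin(latitude) across the box.
        w = abs(math.sin(math.radians(lat1)) - math.sin(math.radians(lat0)))
        total += w * sum(values) / len(values)
        weight_sum += w
    return total / weight_sum


# Hypothetical (lat, lon, trend in deg C/decade): four clustered "peninsula"
# stations that warm, and three spread-out stations that stay flat.
stations = [
    (-65.0, -64.0, 0.5), (-64.0, -63.0, 0.5), (-67.0, -68.0, 0.5), (-66.0, -65.0, 0.5),
    (-85.0, 0.0, 0.0), (-75.0, 100.0, 0.0), (-68.0, 110.0, 0.0),
]
print(f"simple mean:  {simple_mean(stations):.3f}")
print(f"gridded mean: {gridded_mean(stations):.3f}")
```

With these made-up numbers the gridded figure comes out lower, because the four clustered stations share one box and count once instead of four times.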

The entire continent was replicated from 3 trends with different PC’s (curves). These PC’s are weighted at each of thousands of grid points and assumed to be temperatures.

should say:

The entire continent was replicated with 3 trends or curves derived from principal component (PC) analysis. These 3 PC’s are weighted (multiplied by 3 coefficients per grid cell) and added together at each of thousands of grid points and assumed to be temperatures.
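The corrected description can be sketched in Python: take a (time × grid cell) field, keep its three leading principal components, and rebuild every cell as its own weighted sum of those same three curves. The SVD-based PCA, the array sizes and the function name are assumptions of this sketch; it shows the structure of such a reconstruction, not the exact processing in the paper.

```python
import numpy as np


def pc_reconstruction(field, n_pcs=3):
    """Rebuild a (time x grid cell) field from its leading principal components.

    Returns (reconstruction, pcs, weights): pcs has shape (time, n_pcs) and
    holds the shared curves; weights has shape (n_pcs, cells) and holds the
    per-cell coefficients that multiply them.
    """
    mean = field.mean(axis=0)
    u, s, vt = np.linalg.svd(field - mean, full_matrices=False)
    pcs = u[:, :n_pcs] * s[:n_pcs]   # the 3 curves shared by every cell
    weights = vt[:n_pcs]             # 3 coefficients per grid cell
    return pcs @ weights + mean, pcs, weights


# Synthetic field: 300 time steps x 2000 grid cells of random numbers.
rng = np.random.default_rng(2)
field = rng.normal(size=(300, 2000))
recon, pcs, weights = pc_reconstruction(field)
print(pcs.shape, weights.shape)  # (300, 3) (3, 2000)
```

Whatever the input, each reconstructed cell can only be a combination of the three shared curves plus its own mean.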

@ Robert A Cook PE (02:29:56)

Actually, I just wanted to prevent MattN from relying on a faulty calculation in daily life, unrelated to climate and this thread.

However, I do not see what this has to do with Mann whatsoever. My calculation was just illustrative and not the focus of my answer. It would have sufficed to assert “You need to do a weighted average of the inverse mpg to calculate the inverse of the average mpg.” or “Your average is wrong for the same reason that makes averaging over speeds wrong.”, as neither these assertions nor my calculation are proof of anything. On the other hand, the numerical result in Mann et al. and the way they reached it were the central result of their paper. It was not a polished, perfect diamond, but a good snapshot of ongoing work, worth publishing. It turns out that their result is robust, i.e. other groups have by now come up with reconstructions of their own that replicate the hockey stick.

I know my take on Mann is unpopular here; I just wanted to clearly state my position, as my post was used to bash Mann et al. I have read the arguments by McIntyre & McKitrick, the take by van Storch, some other literature, and have peeked into CA and RC. I am not aware of any new arguments cropping up recently, so I propose that we not pursue the hockey stick further on this thread, where I feel it is off topic.

Typo: Of course it is “von Storch” in the preceding post of mine, sorry.

Anthony & Co.,

Writing as a Geographer, I can tell you there are umpteen ways to do spatial interpolation/extrapolation, and the stats are well understood. There’s Inverse Distance, Splines, Geographically Weighted Regression, and several flavors of Kriging, to name just a few. There are packages designed specifically for climate applications: PRISM and MTCLIM for example. That Steig et al did not use any standard, well understood method, not even as a control, but “rolled their own”, indicates to me that their work was sloppy from the git-go.
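As an example of one of the standard methods named above, here is a minimal Python sketch of inverse distance weighting (IDW) on the sphere. The haversine distance, the power of 2 and the function names are common choices assumed here, not taken from PRISM, MTCLIM or any other package mentioned in the comment; the station values are hypothetical.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


def idw_estimate(target, stations, power=2.0):
    """Inverse distance weighted estimate at target=(lat, lon).

    stations is a list of (lat, lon, value). Nearby stations dominate;
    if the target coincides with a station, that value is returned directly.
    """
    num = den = 0.0
    for lat, lon, value in stations:
        d = haversine_km(target[0], target[1], lat, lon)
        if d == 0.0:
            return value
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


# Hypothetical stations (lat, lon, anomaly in deg C).
stations = [(-65.0, -64.0, 1.2), (-78.0, 106.0, -0.3), (-90.0, 0.0, 0.1)]
print(round(idw_estimate((-80.0, 60.0), stations), 3))
```

Unlike a method that never sees coordinates, IDW makes each station’s influence an explicit function of its distance from the point being estimated.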

What’s happening with the North Pole sea ice graph from NSIDC?

The graph has made a sharp drop over the last few days, and it seems ice is melting in Hudson Bay and some other places???

If I look at other images (from Cryosphere, the IJIS website, …) this loss in sea ice isn’t there…

Is this an error at NSIDC?

bluegrue,

This thread isn’t the place to discuss hockey sticks but this comment really caught me.

It was not a polished, perfect diamond, but a good snapshot of ongoing work, worth publishing. It turns out that their result is robust, i.e. other groups have by now come up with reconstructions of their own that replicate the hockey stick.

Part of the reason the result is “robust” is due to the fact that they use the same flawed data sets. The second part of the reason is that they use the same methods.

Here’s a link where I used the CPS method with Mann08 data to make any shape I wanted from it: positive hockey sticks, negative ones, sine waves, absolutely anything.

http://noconsensus.wordpress.com/2008/10/11/will-the-real-hockey-stick-please-stand-up/
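For readers unfamiliar with CPS (“composite plus scale”), here is a minimal Python sketch of the general idea, paired with a correlation screening step: standardize each proxy, keep those that correlate with the target over the calibration period, average them into a composite, and rescale the composite to the target’s calibration-period mean and spread. The proxies are synthetic random walks with no built-in signal; the screening threshold and function names are assumptions, and this is not the code from the linked post.

```python
import numpy as np


def screen(proxies, target, calib, min_r=0.3):
    """Keep proxy columns whose calibration-period correlation exceeds min_r."""
    keep = [j for j in range(proxies.shape[1])
            if np.corrcoef(proxies[calib, j], target[calib])[0, 1] > min_r]
    return proxies[:, keep]


def composite_plus_scale(proxies, target, calib):
    """Standardize, average, then match the target's calibration mean and std."""
    z = (proxies - proxies.mean(axis=0)) / proxies.std(axis=0)
    composite = z.mean(axis=1)
    c, t = composite[calib], target[calib]
    return (composite - c.mean()) / c.std() * t.std() + t.mean()


# Synthetic demo: 200 random-walk "proxies" over 600 years, and a target that
# is flat except for a steady rise over the final 100 (calibration) years.
rng = np.random.default_rng(42)
n_years, n_proxies = 600, 200
years = np.arange(n_years)
calib = years >= n_years - 100
target = np.where(calib, (years - (n_years - 100)) / 100.0, 0.0)
proxies = rng.normal(size=(n_years, n_proxies)).cumsum(axis=0)
picked = screen(proxies, target, calib)
recon = composite_plus_scale(picked, target, calib)
print(picked.shape[1], "of", n_proxies, "noise proxies passed screening")
```

The scale step forces the reconstruction’s calibration-period mean and standard deviation to equal the target’s; since these proxies are pure noise, the agreement during calibration comes from which series were selected, not from any signal in them.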

You have quoted the right works, have you checked the math?

At least, we know it’s quite hot down there (relatively of course) for the moment

http://nsidc.org/data/seaice_index/images//daily_images/S_timeseries.png

the Arctic, on its side, is evaporating

http://nsidc.org/data/seaice_index/images/daily_images/N_timeseries.png

unless there’s a serious problem with NSIDC.

REPLY: “quite hot”? “Flanagan” that’s the most untrue and misleading comment ever made on this blog. Rephrase it please. -And be careful citing NSIDC, there’s some problems- Anthony Watts

Jeff Id (08:58:24)

So far the findings in Surface Temperature Reconstructions for the Last 2,000 Years by the National Research Council has been good enough for me. I have not looked into Mann08 or the ‘Composite and Scale’ method. I have bookmarked your post, but that is all I can promise; not sure whether I will find the time to look into it. (Yeah, I know, lame retreat 😉 )

One obvious question pops up right away: does your linked entry on CPS entail separate training and verification periods?

Jeff Id:

As a mere layperson statistician, I read this just after I was developing the sneaking suspicion that Steig was manipulating/”adjusting” the Satellite data, too, as well as having misled RegEM concerning the occupied and AWS data – the adjusted Satellite results and recon methods which he hasn’t released! Which means to me that there is very likely something wrong with his adjusted results and conclusions, or else that they don’t really show what he says and wants them to show.

Thanks for your and Jeff C’s work and explanations, which have really helped me battle through just exactly what is going on in “Climate Science” reconstructions. It’s stunning.

When I wrote this up, it was for Jeff Id’s site where most of the readers and commenters have closely followed the deconstruction of the paper’s methodology. Because of this, I didn’t provide a top-level overview to set the stage for my analysis. Now that Anthony has picked it up, let me clarify a few things to put this post in context. It might help answer some of the criticisms.

Steig provided two reconstructions, the satellite recon and the AWS recon (more on the meaning of AWS in a moment). He claims both use comparable methodology and provide similar results. In the paper, he shows the smoothed trends for both recons and they do look very similar.

The satellite recon uses two data sets, the temperature data from occupied stations (measurements from manned scientific colonies in Antarctica) and the measured temperature data from the AVHRR satellite. Since the AVHRR satellite uses IR imaging, cloud cover over Antarctica corrupts large portions of the measured satellite data. These data points are removed using a technique known as cloud masking. Both data sets are then processed using the RegEM algorithm to infill missing data (both before the satellite launch and during cloud masking intervals). RegEM outputs the satellite reconstruction with the missing data infilled.

The AWS recon uses a similar methodology but using temperature data from the occupied stations and from automated weather stations (AWS). The AWS data series are from 63 unmanned stations that are deployed throughout Antarctica. The stations are left in remote portions of the continent and upload the temperature measurements through a satellite link. Since the AWS are in a hostile environment with no maintenance for prolonged periods, they are prone to breakdowns (being covered with snow, electronic malfunctions, etc.). The breakdowns cause large gaps in the AWS records (analogous to the cloud-masking gaps in the satellite records). Also, there is no AWS data prior to 1980. RegEM is used with both the occupied station data and the AWS data to infill the gaps and create a reconstruction prior to 1980. This is a separate and different reconstruction from the satellite reconstruction.

The problem with the reconstruction forensics is that Steig did not release the input data (occupied station temperatures, AWS temperatures, and satellite temperatures) to the public. The occupied station and AWS data are available to the public from Steig’s source, the British Antarctic Survey (BAS). The cloud-masked satellite data is not available. Because of this, most of the forensic work has focused on the AWS reconstruction, which does not use *any* satellite data. I would love to work with the satellite reconstruction, but Dr. Steig has not seen fit to make the RegEM satellite input data available.

The point of the above post was to demonstrate that distance weighting the occupied station data fed into the RegEM algorithm significantly changes the result of the AWS reconstruction. Since the satellite reconstruction is said to use similar methodology (by Steig himself in the paper and SI), its reconstruction would presumably also change significantly.

I’ll be happy to answer any substantive questions, but charges of “hack job analysis” without understanding the fundamentals of Steig’s work will be ignored.

Jeff: excellent work! I’m an engineer involved in resource extraction who works with geological models. If any publicly traded company tried to list reserves using the Steig methodology of averaging everything in the area without distance weighting, it would at the least have a stop-trade order issued on its stock, and at most might be looking at jail time for fraud.

Matt N: let’s flesh out your example a little more. Let’s say you want to determine your two-week average fuel use, and you’re a little paranoid, so you fill up every day to make sure you never run out of gas. Monday to Friday your 30-mile one-way commute takes a couple of hours because of gridlock, and you buy 6 gallons a day (10 miles/gallon). On each of the two weekends you travel to visit your parents 300 miles away, traveling there on Saturday and back on Sunday, and again use 6 gallons each way on the wide-open freeways (50 miles/gallon). What is your mileage? If you average on a daily basis, then you’d assume your vehicle is terrible. You have to determine what the appropriate use is and make sure you’re comparing with other vehicles that are used the same way.

Richard Sharpe (10:26:48) :

I’m just a layman here, but it would seem that trying to deal with the fact that there is a concentration of measurements in a small area in Antarctica that is warming would be important.

Is that just not so?

Mann made science seems to be a very corrupting influence in climate science.

I see Mann as the equivalent of one of those eugenicists who carefully compiled stats of how facial eye placement was a direct indicator of racial intelligence.

Congratulations on the quality of (most) of the scientific discussion presented above – it is very heartening to read.

I would like to pick up on a suggestion made much earlier in the postings by BC, Bruce, Robert Wood and Jim F, that the Antarctic Peninsula data are somehow suspect because this is a “volcanically active area”.

In asserting this they are clearly implying that the surface temperature observations from the Peninsula have ( or may have) been influenced by volcanic activity.

Folks, be careful here!

While there are well-documented examples of the impact of volcanic eruptions (e.g. Pinatubo) on short/medium-term local and global temperatures, there is no convincing evidence of the impact of “regional volcanism” on local surface temperatures (let alone on temperature trends).

In a region of active volcanism there will certainly be local areas of high geothermal gradient/heat flow. Such heat flow may or may not increase or decrease over short time periods. This might possibly influence the surface temperature readings made AT individual observation sites (you might expect some obvious signs of volcanic/geothermal activity at the observation site if that were the case). But it is drawing a fairly long bow.

Remember, we are looking for trends in surface temperature. We would need not just an association between any individual site and volcanism, but also an increase in that volcanic activity, to render the observations unreliable.

I’ve not seen any credible evidence for increased volcanic activity in the Antarctic Peninsula over the period in question.

In a broader sense, the hypothesis that local/regional activity may have influenced surface temperatures at any given site is worth considering, but only if examined along with all other possible sources of variability and unreliability in the observations (e.g. measurement errors, biases, clustering, external factors, local variability, etc.) which may affect the intended end use of the data.

Bad reporting of a paper from Nature Geoscience last year may be partly responsible for giving this idea credence. The original article postulates that sub-ice volcanic activity may have an impact on ice flow dynamics, and influence ice sheet stability. The authors certainly do not propose that there has been an increase of volcanic activity during the period of supposed temperature increase.

(See Hugh F. J. Corr & David G. Vaughan, “A recent volcanic eruption beneath the West Antarctic ice sheet,” Nature Geoscience 1, 122–125, 2008.)

A good example of the woolly reportage about this article can be found at:

http://www.tgdaily.com/content/view/41171/117

BC, Bruce, Robert Wood and Jim F – don’t be guilty of making unsupported assertions and be cautious of inference. You can’t argue against dodgy science by using dodgy science.

Here’s a blink of the two temperature reconstructions –

http://i39.tinypic.com/2cx6rdi.jpg

Looks like a classic GISS homogenization with the older temps dropping.

The Jeffs’ work is interesting to me, but I doubt it will reveal anything particularly exciting. Since the error range of the AWS reconstruction is over 50%, it will be kinda hard to make a point one way or the other. The 5509 data points for the satellite data should be a better example of when RegEM can be employed, and of its limitations.

It might be interesting to select an area where surface temperature records have gaps and apply RegEM: Canada, for example, which lost so many sites.

@ Mike Stewart (05:36:39) :

I certainly did not intend to suggest that volcanism is contributing to local surface temperatures in Antarctica. My main point was how one might use geostatistical techniques with temperature readings in widely separated locations to “infer” temperatures over the entirety of the area under concern.

Additionally, I noted in an abbreviated way that East and West Antarctica are two different beasts in terms of geology, oceanography, topography, temperature regimes and who knows what else. In geostatistics, that probably negates, or else tremendously complicates, using measured temperatures from the two areas to estimate temperatures across the combined area.

Ultimately (but unstated earlier) my point is that the two areas have so many differences – especially the one key to these discussions – recorded temperatures/temperature trends – that the two areas should be considered separately. A geologist ought to consider and speak to this (multiple working hypotheses, at a minimum) because they may fundamentally change the analysis. Steig, disappointingly, does not do so.

Thanks for your comments.

What is obvious is . . . figures don’t lie, but liars do figure.